Research on Lightweight Rice False Smut Disease Identification Method Based on Improved YOLOv8n Model

Abstract

1. Introduction

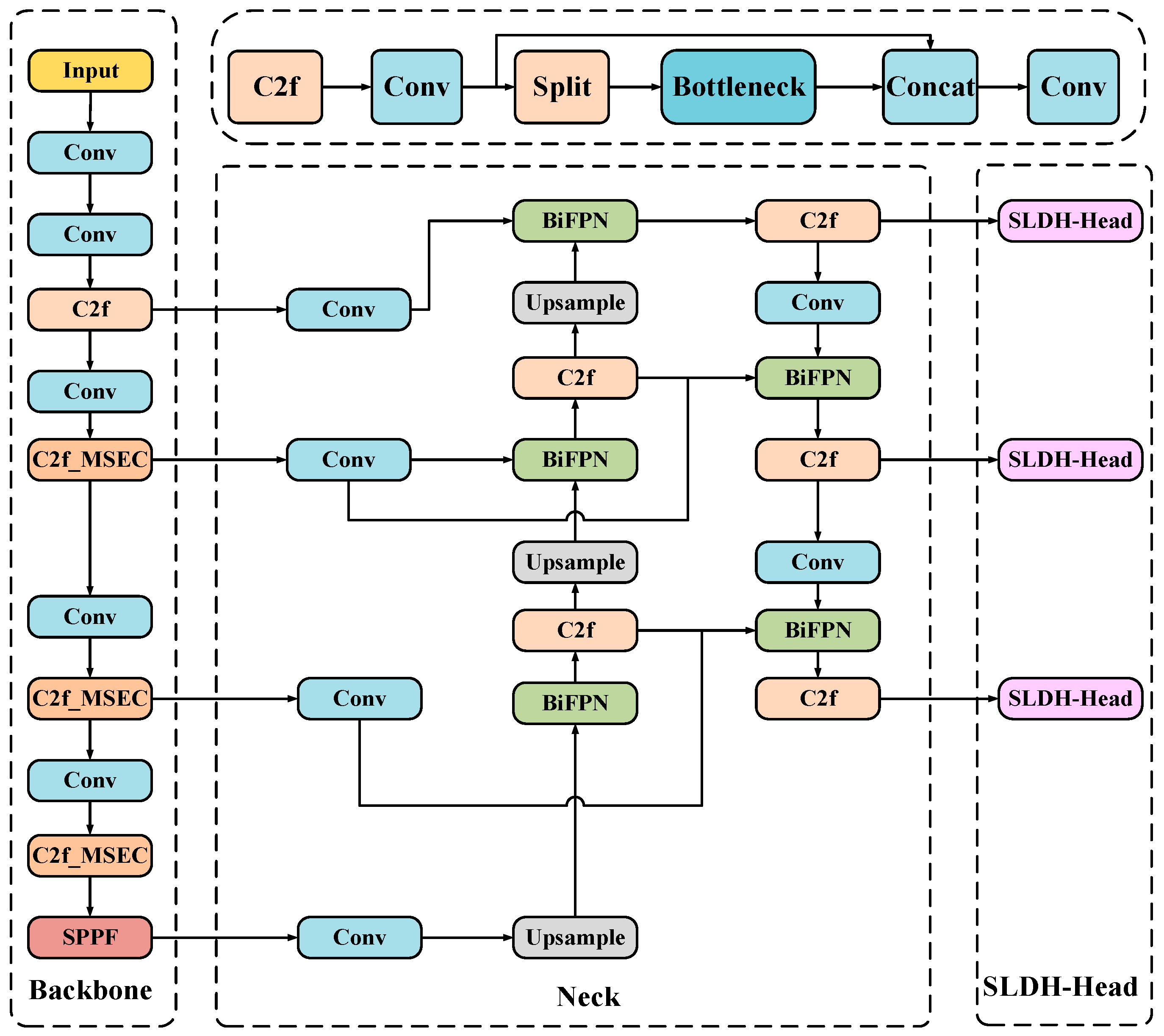

- (1)

- Backbone: design of Multiscale Lightweight Convolutional Module C2f_MSEC, changing some of the C2f modules of the backbone network for better extraction of key features of false smut.

- (2)

- Neck: integrate the BiFPN module and add a new small-size detection layer, removing the large-size detection layer while reducing the model parameters to enhance the feature fusion ability for different false smut sizes.

- (3)

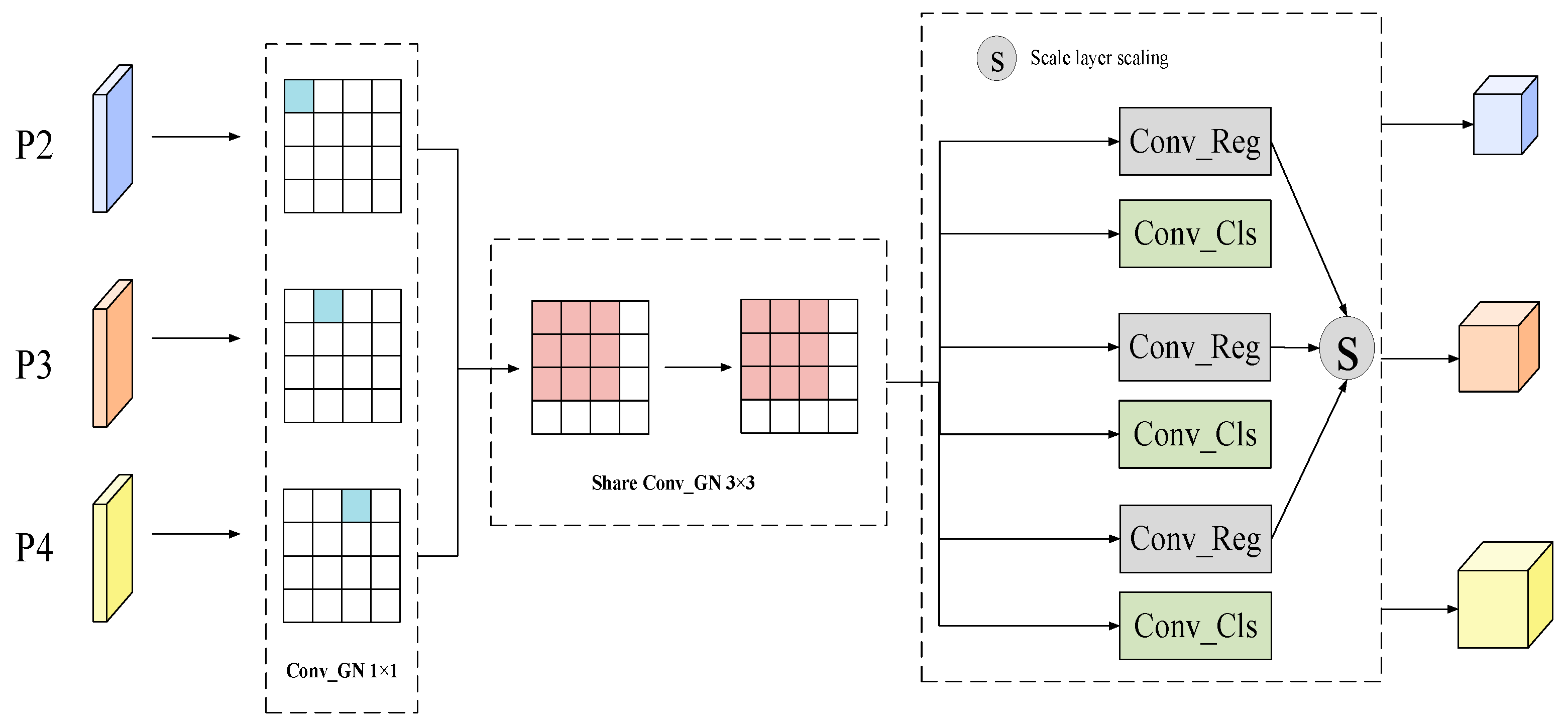

- Detection head: a new shared convolution lightweight detection head is designed to process the results of the convolution operation using a group normalization layer, which further achieves lightweight while ensuring the model training effect.

2. Materials and Methods

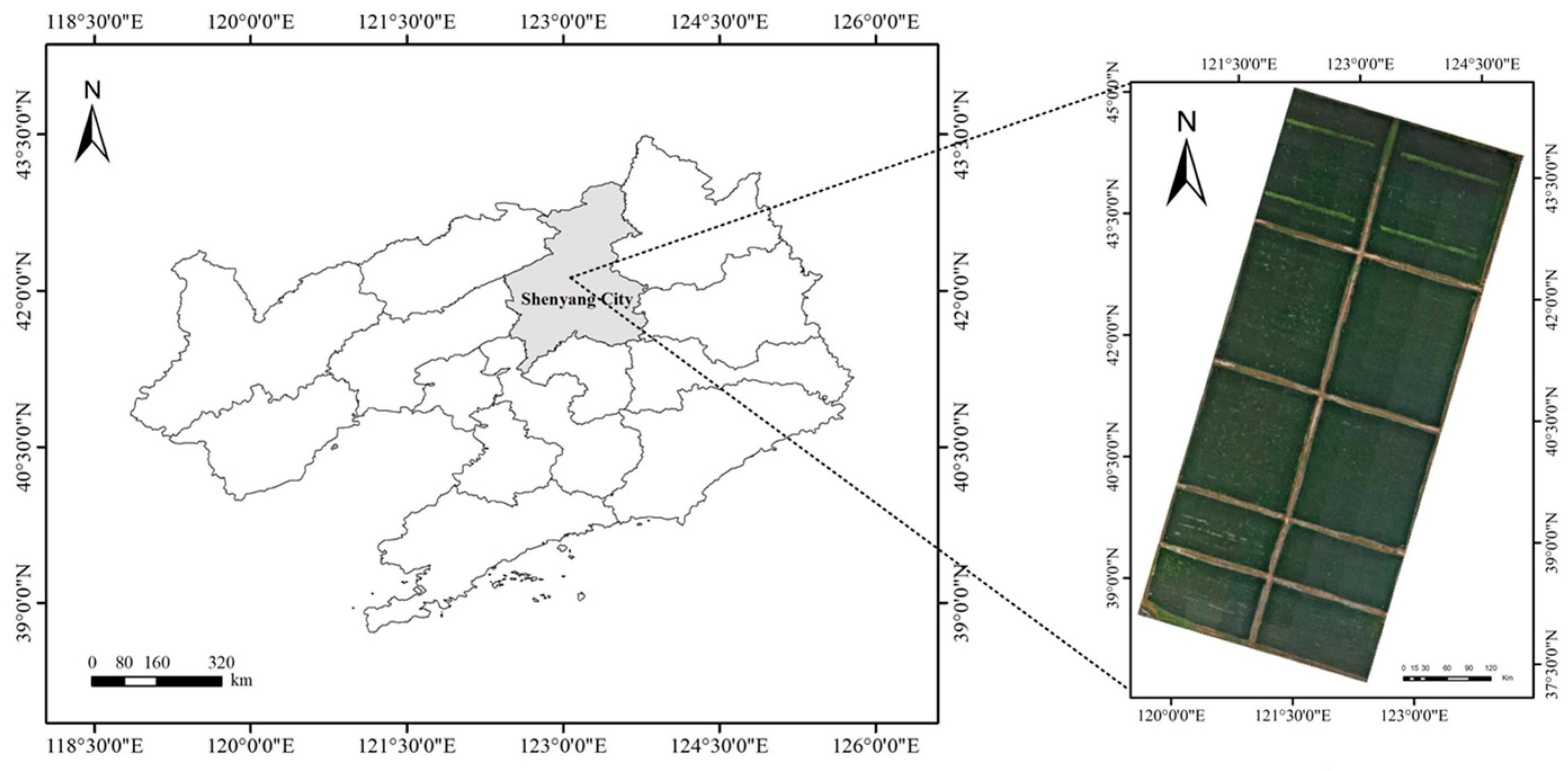

2.1. Digital Image Data Acquisition and Processing

2.1.1. Experimental Design and Digital Image Acquisition of Rice False Smut

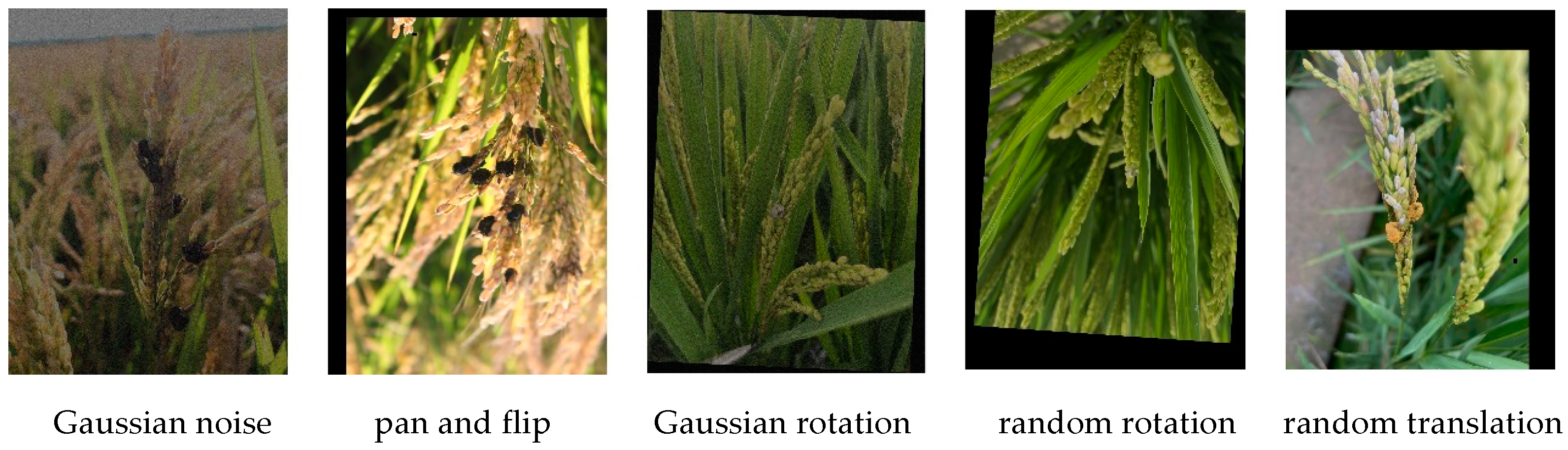

2.1.2. Data Labeling and Dataset Construction

2.2. Design of the Improved Lightweight YOLOv8n Model for Rice False Smut

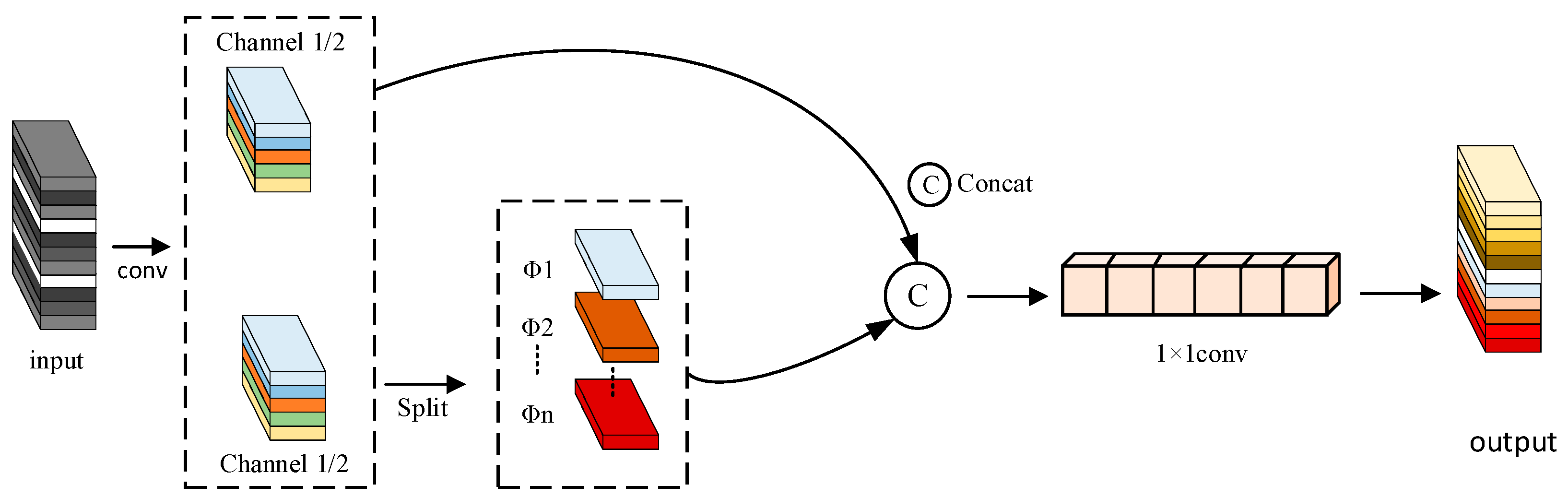

2.2.1. Lightweight Multiscale Convolutional Module Design

2.2.2. Integrating the Weighted Bidirectional Feature Pyramid Network
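
Item (2) of the Introduction integrates BiFPN into the neck. In BiFPN as proposed for EfficientDet (Tan, Pang and Le), each fusion node combines feature maps that have already been resampled to a common resolution using "fast normalized fusion": O = Σ_i w_i · I_i / (ε + Σ_j w_j), where every learnable weight w_i is passed through a ReLU so that it stays non-negative. The following is a minimal sketch of that fusion rule on flat Python lists, assuming equal-shaped inputs; the function name and the ε = 1e-4 default are illustrative choices and are not taken from this paper's implementation.

```python
def fast_normalized_fusion(inputs, weights, eps=1e-4):
    """Fuse equal-shaped feature maps with learnable, non-negative weights.

    inputs:  list of feature maps, each flattened to a list of floats
             (assumed already resampled to the same resolution)
    weights: one learnable scalar per input; may be negative before the ReLU
    """
    if len(inputs) != len(weights):
        raise ValueError("need exactly one weight per input")
    length = len(inputs[0])
    if any(len(x) != length for x in inputs):
        raise ValueError("all inputs must have the same shape")
    # ReLU keeps every fusion weight non-negative
    w = [max(0.0, wi) for wi in weights]
    denom = sum(w) + eps
    # Normalized weights sum to just under one, keeping the output on the inputs' scale
    norm = [wi / denom for wi in w]
    # Element-wise weighted sum across the input feature maps
    return [sum(nw * x[j] for nw, x in zip(norm, inputs)) for j in range(length)]


if __name__ == "__main__":
    # Equal weights: the two maps are (almost exactly) averaged
    print(fast_normalized_fusion([[1.0, 2.0], [3.0, 4.0]], [1.0, 1.0]))
```

With equal weights the inputs are averaged up to the small ε, while a weight driven negative is clipped to zero by the ReLU and effectively switches that input off.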

2.2.3. Shared Convolutional Lightweight Detection Head Design
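
Item (3) of the Introduction states that the new shared-convolution head processes its convolution outputs with group normalization. Group normalization (Wu and He, ECCV 2018) splits the C channels of one sample into G groups, normalizes each group with its own mean and variance, and then applies a per-channel affine transform, so its statistics do not depend on the batch size. The sketch below shows that computation for a single sample stored as nested Python lists; the list layout, the function name and ε = 1e-5 are illustrative assumptions, and this is not the paper's detection-head code (in PyTorch the same operation is provided by `torch.nn.GroupNorm`).

```python
import math


def group_norm(x, num_groups, gamma=None, beta=None, eps=1e-5):
    """Group normalization for one sample.

    x: list of C channels, each a flat list of H*W activations.
    Channels are split into num_groups contiguous groups; each group is
    normalized with its own mean and variance, then an optional per-channel
    affine transform (gamma, beta) is applied.
    """
    c = len(x)
    if c % num_groups != 0:
        raise ValueError("number of channels must be divisible by num_groups")
    gamma = gamma if gamma is not None else [1.0] * c
    beta = beta if beta is not None else [0.0] * c
    per_group = c // num_groups
    out = []
    for g in range(num_groups):
        channels = x[g * per_group:(g + 1) * per_group]
        # Statistics are pooled over all channels and positions in the group
        values = [v for ch in channels for v in ch]
        mean = sum(values) / len(values)
        var = sum((v - mean) ** 2 for v in values) / len(values)  # biased variance
        inv_std = 1.0 / math.sqrt(var + eps)
        for k, ch in enumerate(channels):
            idx = g * per_group + k
            out.append([gamma[idx] * (v - mean) * inv_std + beta[idx] for v in ch])
    return out


if __name__ == "__main__":
    # 4 channels with 2 positions each, normalized in 2 groups of 2 channels
    sample = [[1.0, 2.0], [3.0, 4.0], [10.0, 10.0], [20.0, 20.0]]
    print(group_norm(sample, num_groups=2))
```

Because no batch statistics are involved, the output for a sample is the same whether it is processed alone or inside a large batch.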

2.3. Experimental Environment Configuration and Parameter Settings

2.4. Evaluation Metrics
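
The result tables report recall (R) together with mAP at an IoU threshold of 0.5 and mAP averaged over IoU thresholds from 0.50 to 0.95 in steps of 0.05. Under the standard definitions, precision P = TP/(TP+FP), recall R = TP/(TP+FN), AP is the area under the precision-recall curve for one class at one IoU threshold, and mAP is the mean of AP over classes (and, for mAP@0.5:0.95, also over the ten IoU thresholds). The following is a minimal sketch of these definitions; it assumes all-point interpolation of the precision-recall curve, as in the PASCAL VOC protocol from 2010 onward, because the interpolation behind the reported numbers is not stated in this section, and the function names are illustrative.

```python
def precision_recall(tp, fp, fn):
    """Precision = TP/(TP+FP), recall = TP/(TP+FN); 0.0 when undefined."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(is_tp_sorted, num_gt):
    """All-point interpolated AP for one class at one IoU threshold.

    is_tp_sorted: detections sorted by descending confidence; each entry is
                  True if the detection matched a not-yet-matched ground-truth
                  box at the chosen IoU threshold, else False.
    num_gt:       number of ground-truth boxes of the class.
    """
    if num_gt == 0:
        return 0.0
    tp = fp = 0
    recalls, precisions = [], []
    for hit in is_tp_sorted:
        if hit:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Sentinel points at recall 0 and recall 1
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    # Precision envelope: make precision non-increasing as recall grows
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas wherever recall increases
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))


if __name__ == "__main__":
    print(precision_recall(8, 2, 2))
    print(average_precision([True, False, True], num_gt=2))
```

For example, three ranked detections of which the first and third are correct, against two ground-truth boxes, give AP = 5/6; averaging such AP values over the ten IoU thresholds yields the mAP@0.5:0.95 figure.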

3. Results

3.1. Model Training Results

3.1.1. YOLOv8 Series Model Analysis

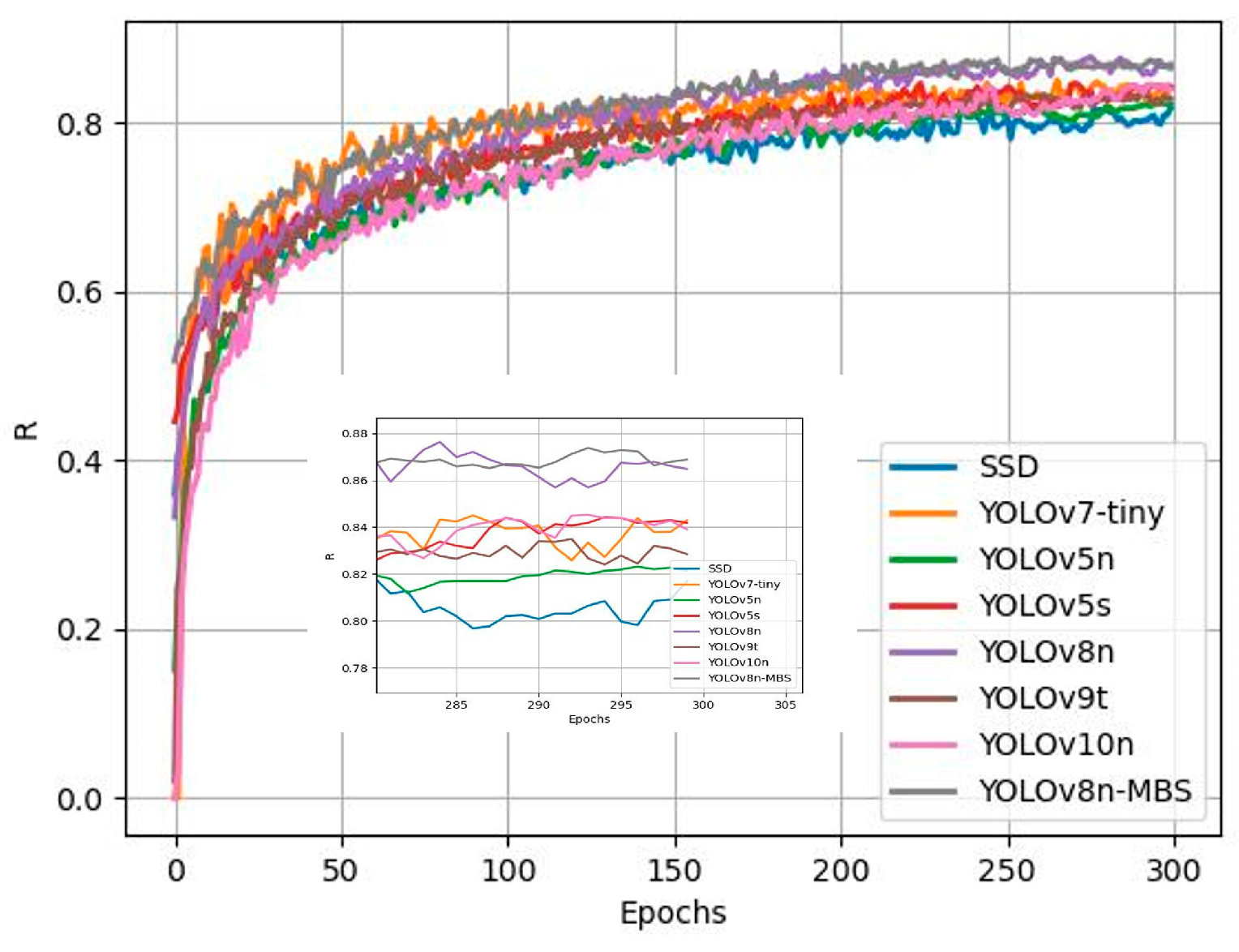

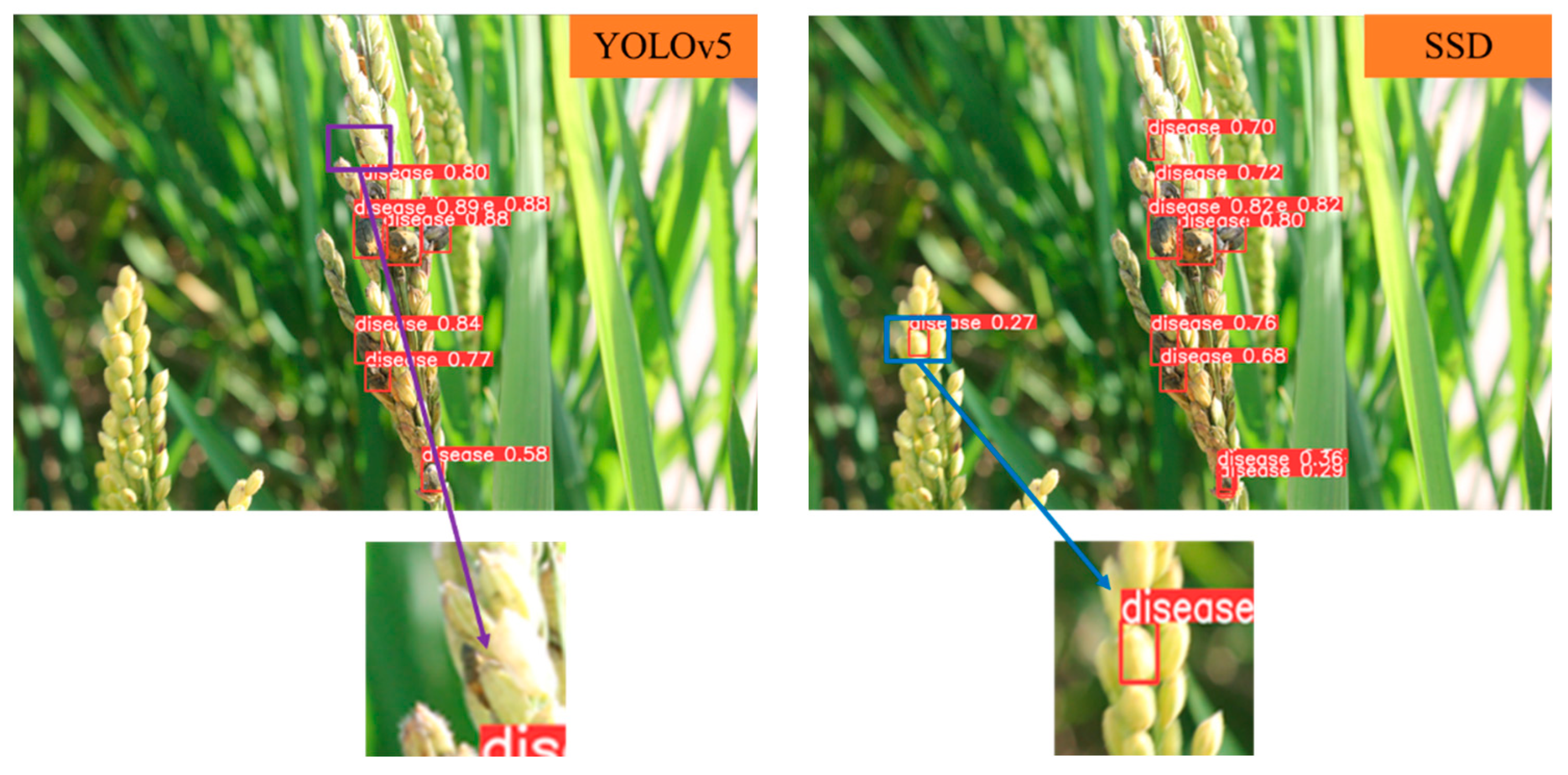

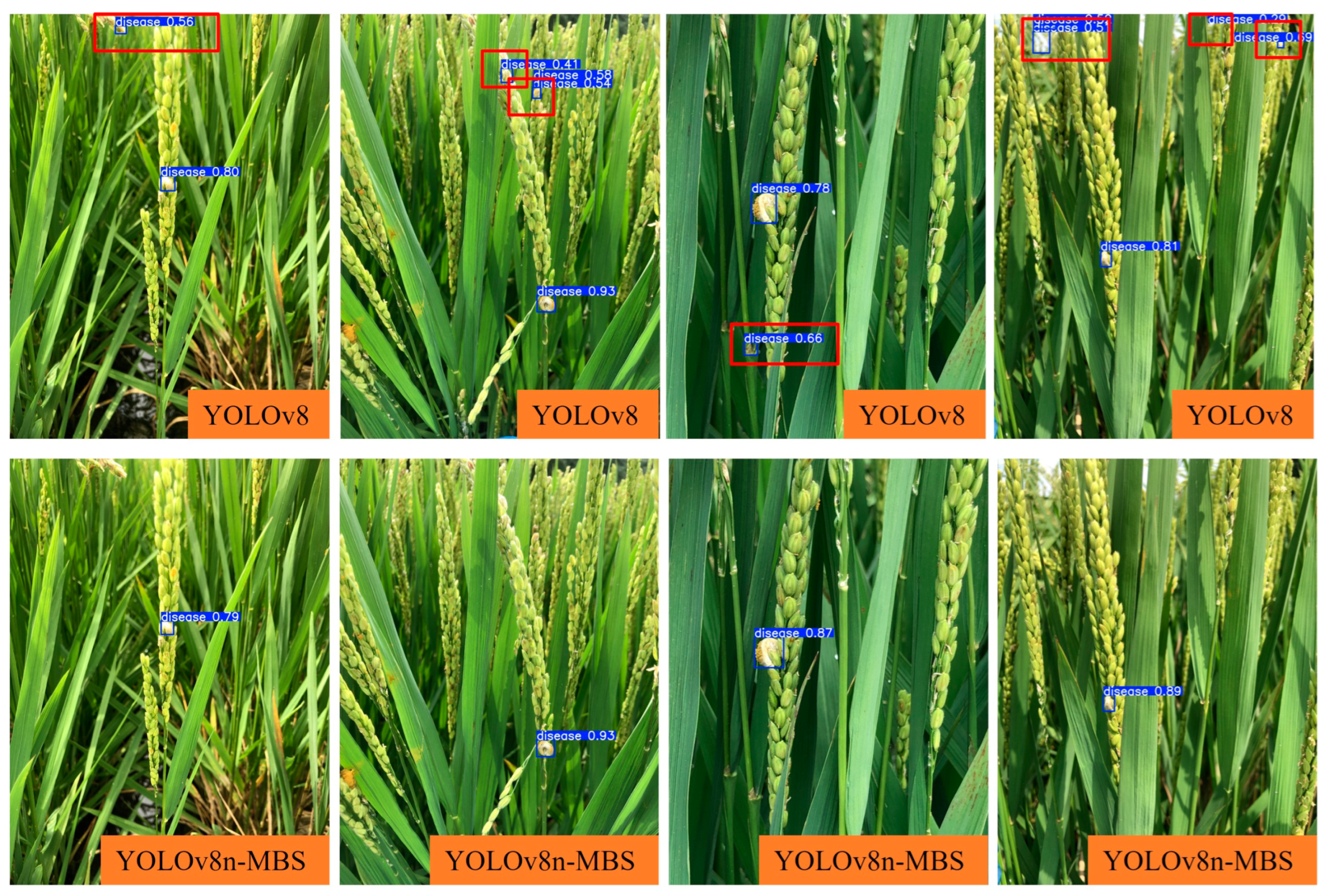

3.1.2. Comparison of Detection Performance of Different Models

4. Discussion

4.1. Ablation Study

4.2. Analysis of Model Detection Performance for Different Shooting Distances

4.3. Visualization of Network Features

4.4. Model Performance Analysis Based on New Data

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Model | mAP@0.5/% | mAP@0.5:0.95/% | R/% | Params/M | Model Size/MB |
|---|---|---|---|---|---|
| YOLOv8n | 93.5 | 66.6 | 86.6 | 3.0 | 6.3 |
| YOLOv8s | 94.3 | 69.2 | 88.6 | 11.1 | 22.5 |
| YOLOv8m | 95.7 | 77.7 | 91.3 | 25.9 | 52.1 |
| YOLOv8l | 95.5 | 79.9 | 91.2 | 43.6 | 87.7 |
| YOLOv8x | 95.9 | 82.3 | 90.9 | 68.1 | 136.7 |

| Model | mAP@0.5/% | mAP@0.5:0.95/% | R/% | Params/M | Model Size/MB |
|---|---|---|---|---|---|
| SSD | 89.9 | 59.2 | 81.7 | 13.7 | 25.2 |
| YOLOv7-tiny | 90.7 | 61.1 | 84.3 | 6.0 | 14.6 |
| YOLOv5n | 90.1 | 61.7 | 81.7 | 2.5 | 5.3 |
| YOLOv5s | 91.6 | 61.8 | 82.5 | 2.7 | 5.3 |
| YOLOv8n | 93.5 | 66.6 | 86.6 | 3.0 | 6.3 |
| YOLOv9t | 91.1 | 63.9 | 84.0 | 2.0 | 4.7 |
| YOLOv10n | 91.7 | 62.5 | 83.8 | 2.3 | 5.8 |
| YOLOv8n-MBS | 93.9 | 68.5 | 86.7 | 1.4 | 3.3 |

| MSEC | BiFPN | LSCD | mAP@0.5/% | R/% | Params/M | Model Size/MB |
|---|---|---|---|---|---|---|
| × | × | × | 93.5 | 86.6 | 3.0 | 6.3 |
| √ | × | × | 93.5 | 86.7 | 2.8 | 6.0 |
| √ | √ | × | 93.9 | 86.1 | 1.8 | 4.0 |
| √ | √ | √ | 93.9 | 86.7 | 1.4 | 3.3 |
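
Using only the values in the ablation table, the cumulative savings relative to the YOLOv8n baseline (3.0 M parameters, 6.3 MB) can be computed directly. The short script below does this for parameter count and model size after each added module; the row labels are shorthand for the table rows, and the helper name is illustrative.

```python
# (label, params in millions, model size in MB), copied from the ablation table
ROWS = [
    ("YOLOv8n baseline", 3.0, 6.3),
    ("+ MSEC", 2.8, 6.0),
    ("+ MSEC + BiFPN", 1.8, 4.0),
    ("+ MSEC + BiFPN + LSCD", 1.4, 3.3),
]


def relative_reduction(baseline, value):
    """Percentage reduction of value relative to baseline."""
    return 100.0 * (baseline - value) / baseline


if __name__ == "__main__":
    base_params, base_size = ROWS[0][1], ROWS[0][2]
    for label, params, size in ROWS:
        print(f"{label:<24} params -{relative_reduction(base_params, params):5.1f}%  "
              f"size -{relative_reduction(base_size, size):5.1f}%")
```

With all three modules the parameter count falls by about 53.3% (3.0 M to 1.4 M) and the model size by about 47.6% (6.3 MB to 3.3 MB), while mAP@0.5 rises from 93.5% to 93.9%.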

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yang, L.; Guo, F.; Zhang, H.; Cao, Y.; Feng, S. Research on Lightweight Rice False Smut Disease Identification Method Based on Improved YOLOv8n Model. Agronomy 2024, 14, 1934. https://doi.org/10.3390/agronomy14091934
