YOLOv8-CBSE: An Enhanced Computer Vision Model for Detecting the Maturity of Chili Pepper in the Natural Environment

Abstract

1. Introduction

2. Materials and Methods

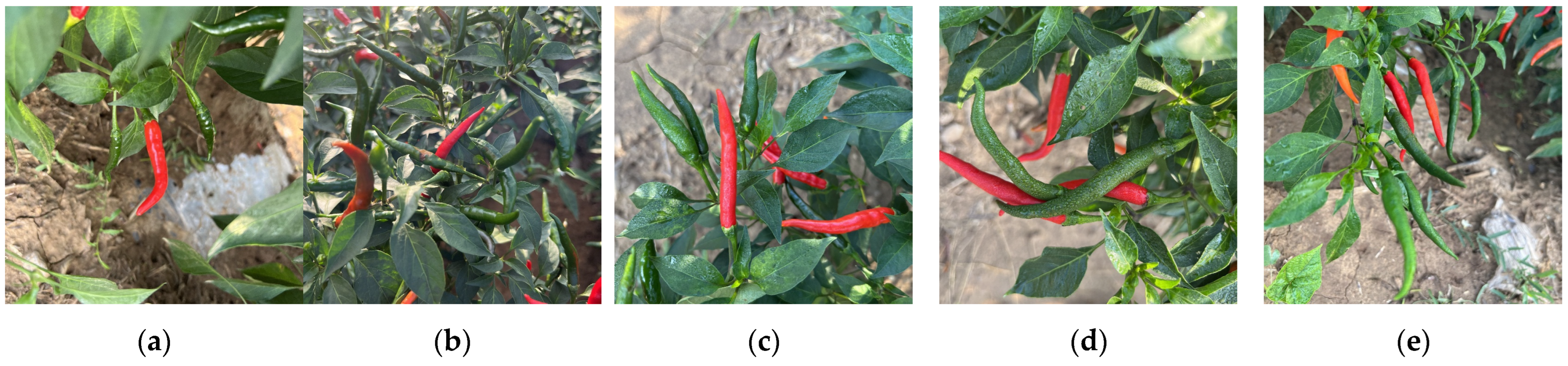

2.1. Construction of Dataset

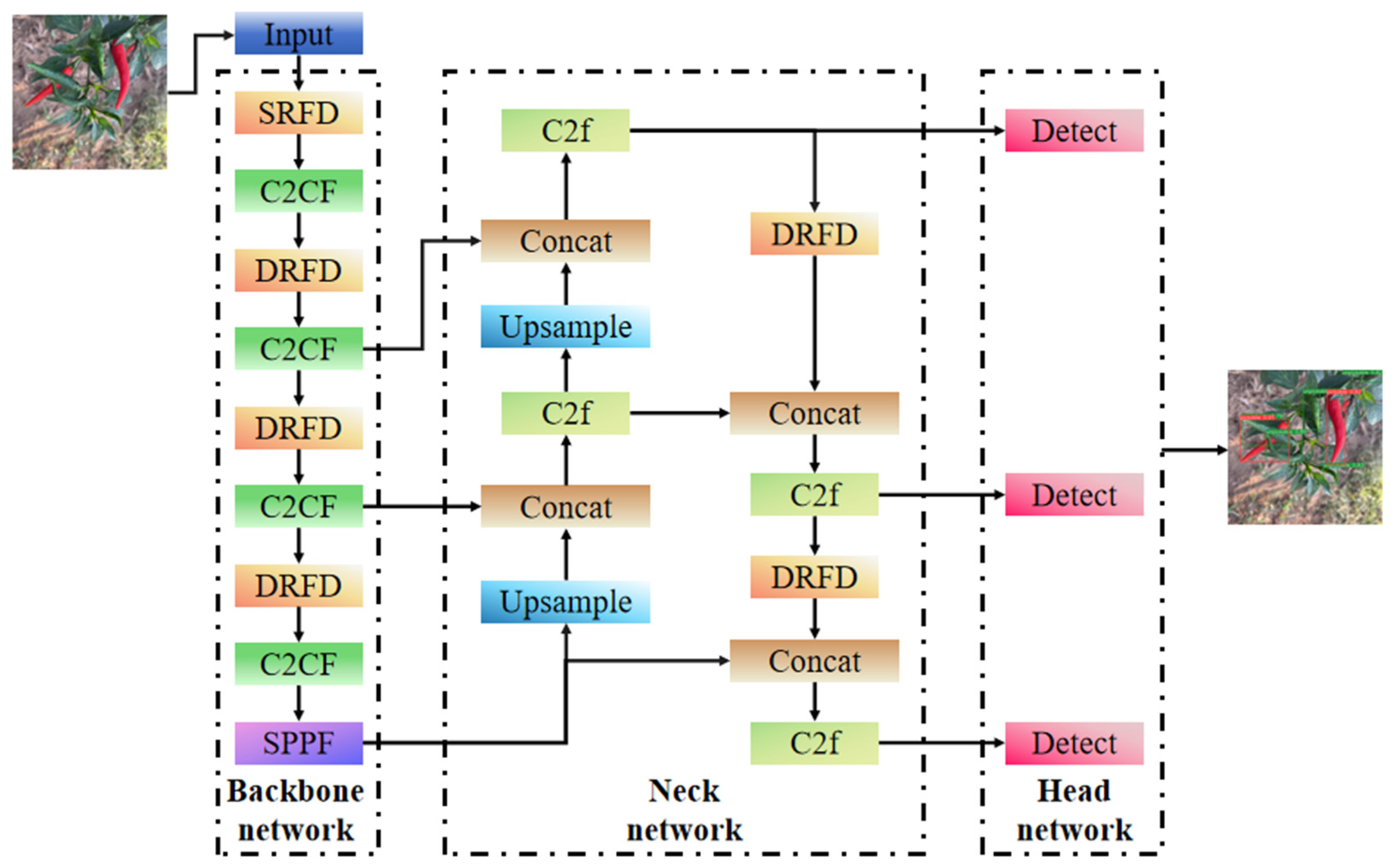

2.2. YOLOv8-CBSE Model Structure

2.2.1. C2CF Module

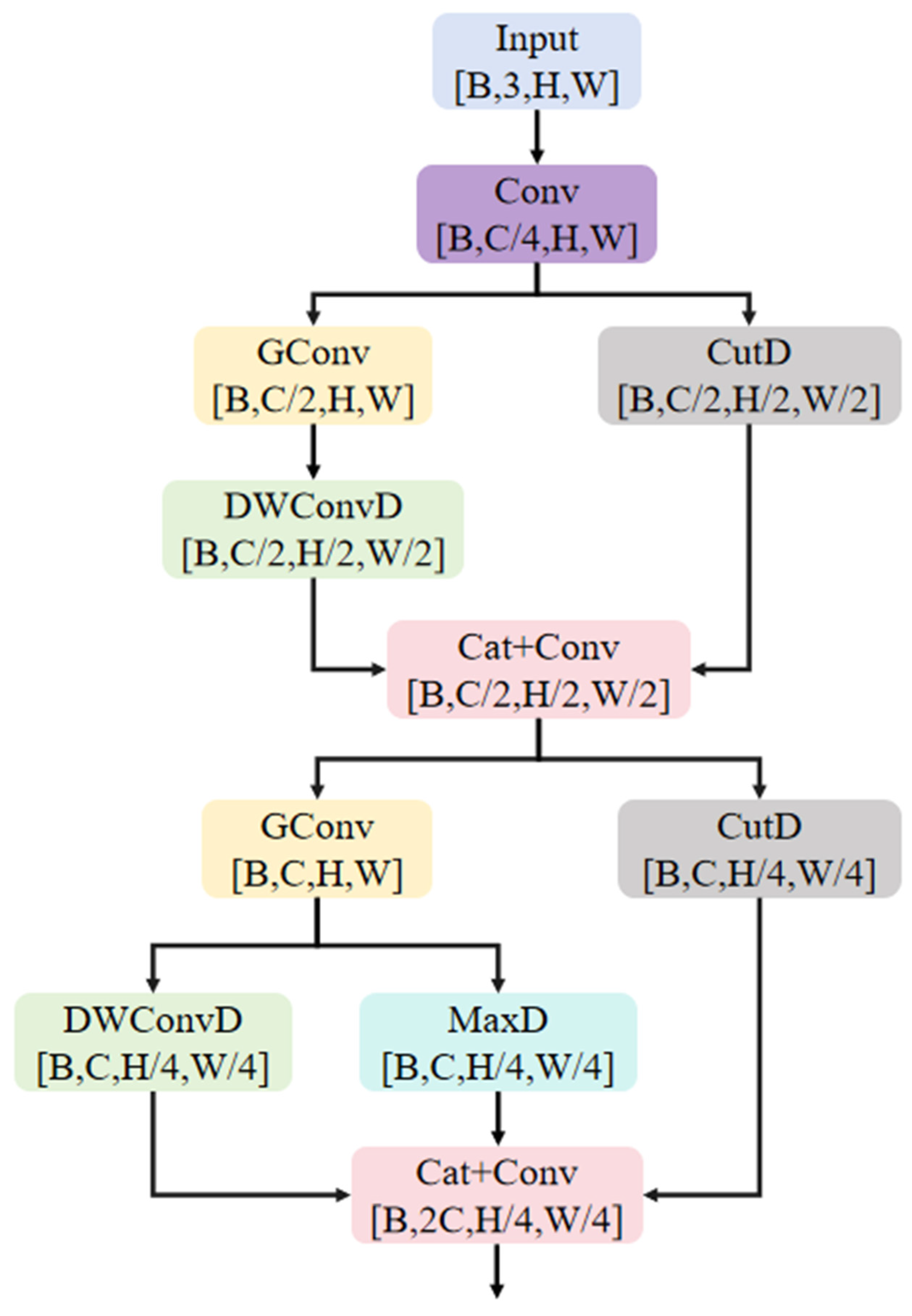

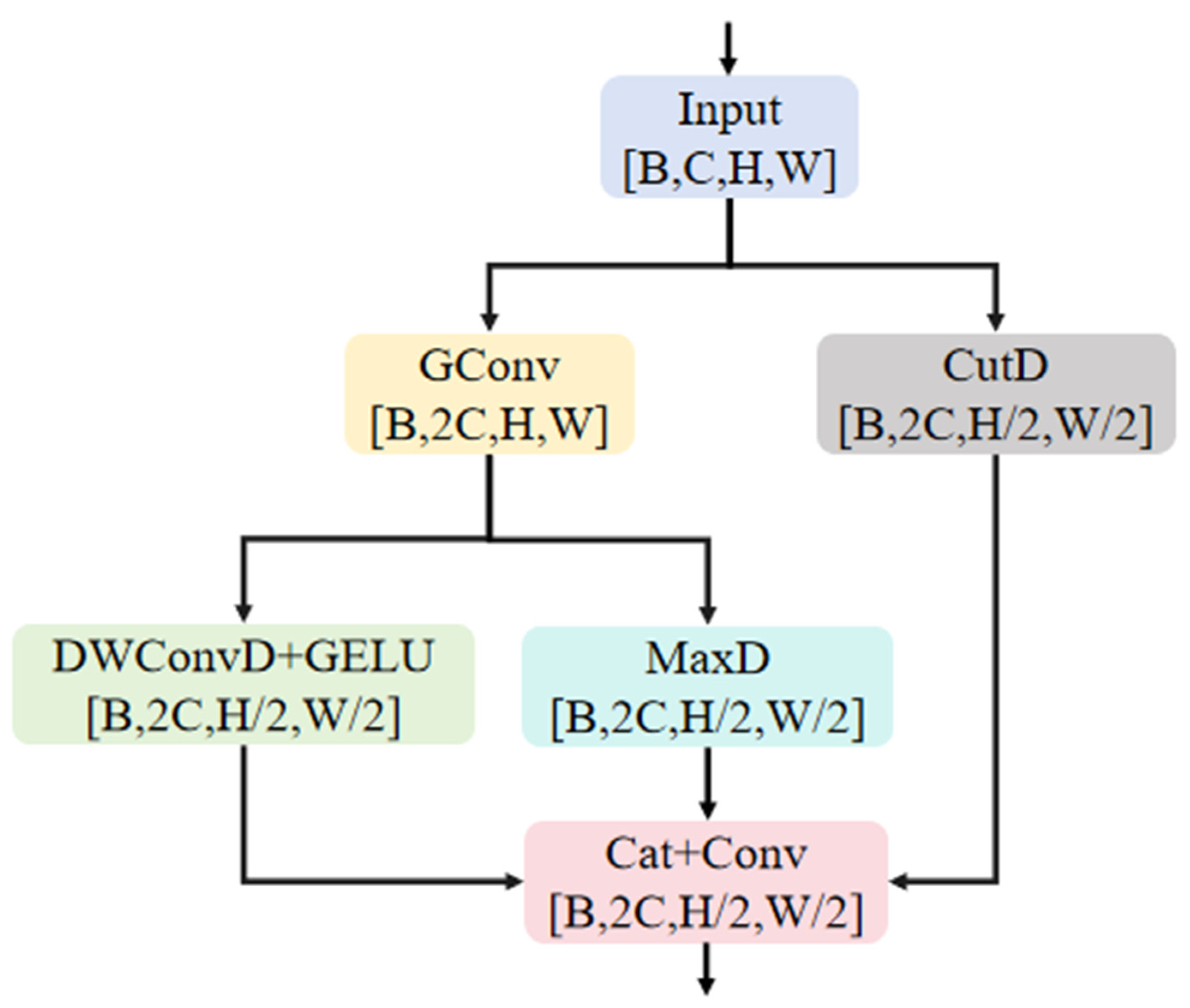

2.2.2. SRFD Module and DRFD Module

2.2.3. EIoU Loss Function
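
For orientation, the EIoU loss as it is commonly defined in the literature adds three penalties to the IoU term: the normalized distance between box centers, and the normalized differences in width and height, each measured against the smallest box enclosing both the prediction and the ground truth. The following is a minimal single-box sketch under that general definition; the function name `eiou_loss`, the `(x1, y1, x2, y2)` box format, and the `eps` stabilizer are illustrative assumptions, not the authors' implementation.

```python
def eiou_loss(box, gt, eps=1e-9):
    """EIoU loss for one predicted box and one ground-truth box.

    Boxes are (x1, y1, x2, y2) with x2 > x1 and y2 > y1 (assumed format).
    """
    x1, y1, x2, y2 = box
    gx1, gy1, gx2, gy2 = gt

    # Widths and heights of both boxes
    w, h = x2 - x1, y2 - y1
    gw, gh = gx2 - gx1, gy2 - gy1

    # Intersection over union
    inter_w = max(0.0, min(x2, gx2) - max(x1, gx1))
    inter_h = max(0.0, min(y2, gy2) - max(y1, gy1))
    inter = inter_w * inter_h
    union = w * h + gw * gh - inter
    iou = inter / (union + eps)

    # Smallest enclosing box: width and height
    cw = max(x2, gx2) - min(x1, gx1)
    ch = max(y2, gy2) - min(y1, gy1)

    # Squared distance between the two box centers
    dx = (x1 + x2) / 2 - (gx1 + gx2) / 2
    dy = (y1 + y2) / 2 - (gy1 + gy2) / 2

    center_term = (dx * dx + dy * dy) / (cw * cw + ch * ch + eps)
    width_term = (w - gw) ** 2 / (cw * cw + eps)
    height_term = (h - gh) ** 2 / (ch * ch + eps)

    return 1.0 - iou + center_term + width_term + height_term


if __name__ == "__main__":
    print(eiou_loss((0, 0, 10, 10), (0, 0, 10, 10)))  # identical boxes, close to 0
    print(eiou_loss((0, 0, 1, 1), (2, 2, 3, 3)))      # disjoint boxes, above 1
```

Compared with CIoU, which penalizes the aspect ratio, this formulation penalizes width and height separately, so the loss still provides a gradient when the two boxes share an aspect ratio but differ in size.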

2.3. Test Environment

2.4. Evaluation Indices

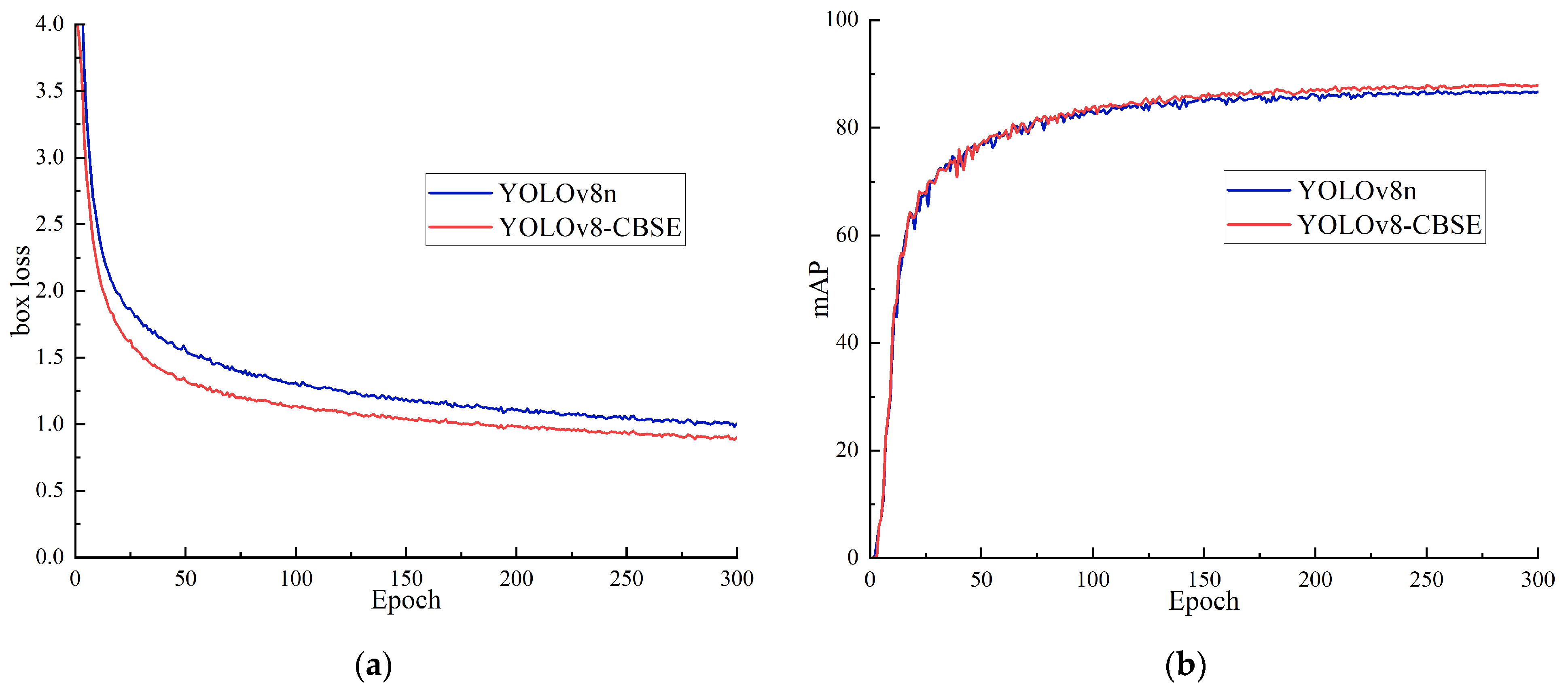

3. Results and Discussion

3.1. C2CF Module Replacement of C2f Module in Different Positions Test

3.2. Loss Function Comparison Test

3.3. Ablation Test

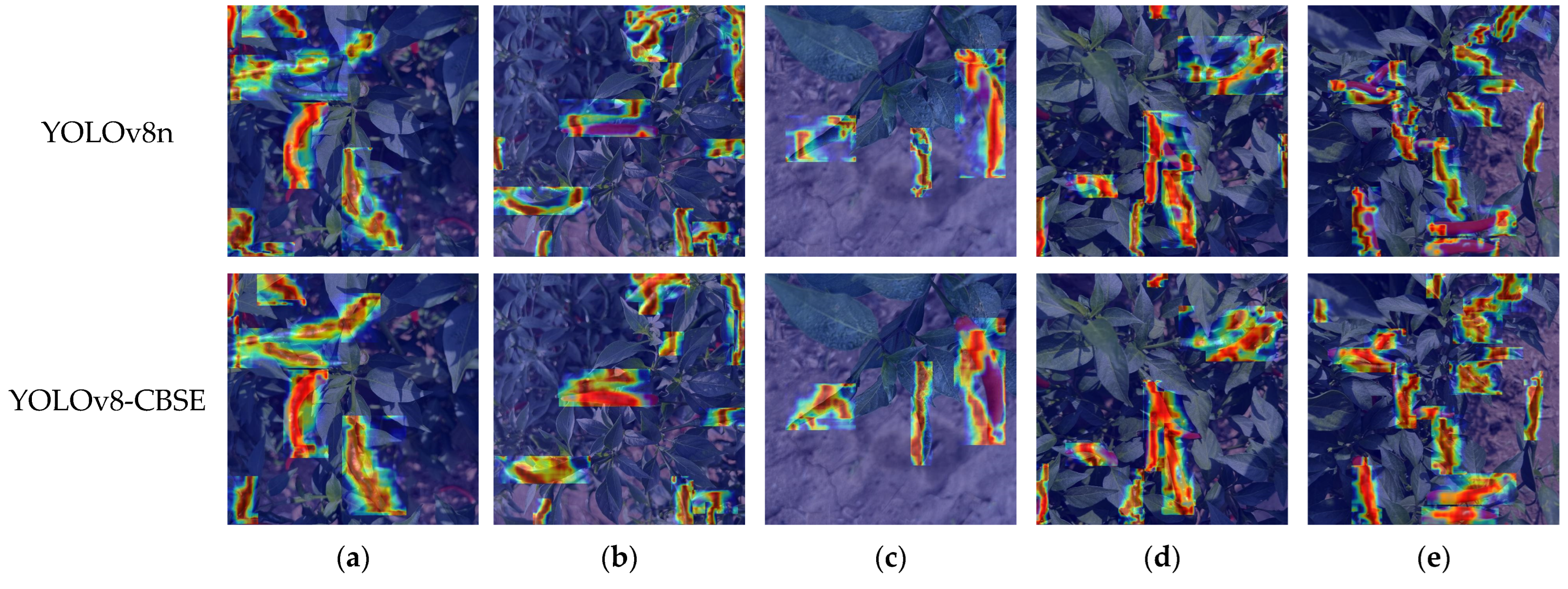

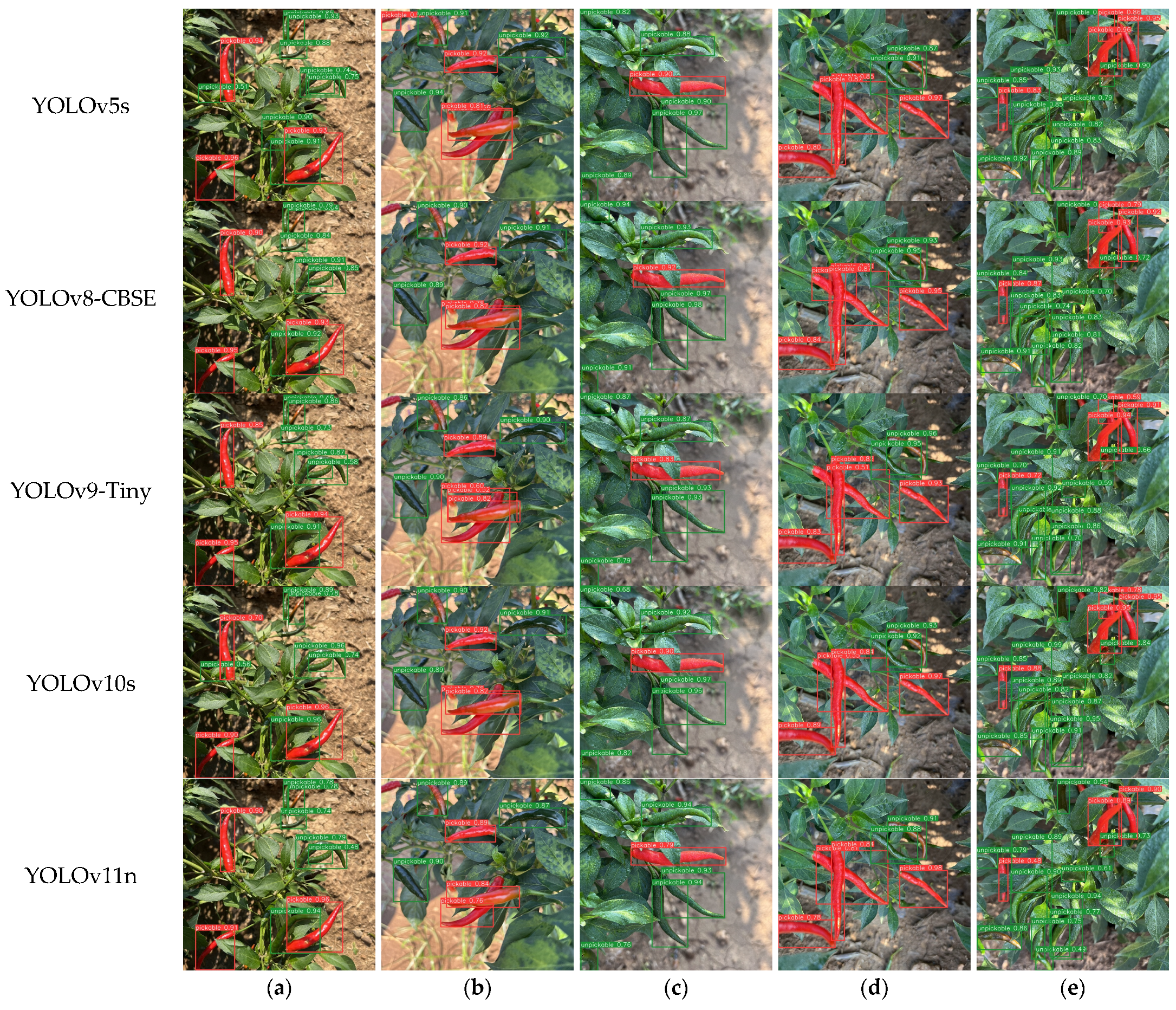

3.4. Comparison Test of Different Models

3.5. Discussion

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Model | Precision/% | Recall/% | AP (Mature)/% | AP (Immature)/% | mAP/% | Model Size/MB | FLOPs/G |
|---|---|---|---|---|---|---|---|
| YOLOv8n | 84.62 | 78.10 | 89.91 | 83.93 | 86.92 | 5.97 | 8.2 |
| YOLOv8-CB | 82.55 | 79.54 | 89.67 | 84.80 | 87.23 | 5.74 | 7.9 |
| YOLOv8-CN | 85.47 | 75.87 | 89.43 | 84.17 | 86.80 | 5.74 | 8.0 |
| YOLOv8-CBN | 85.50 | 76.99 | 89.97 | 84.53 | 87.25 | 5.50 | 7.7 |

| Loss Function | Precision/% | Recall/% | F1/% | mAP/% |
|---|---|---|---|---|
| CIoU | 84.62 | 78.10 | 81.23 | 86.92 |
| EIoU | 83.55 | 80.32 | 81.90 | 87.18 |
| DIoU | 83.18 | 78.74 | 80.90 | 86.72 |
| ShapeIoU | 84.04 | 78.60 | 81.23 | 87.02 |
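
The F1 column of the loss-function table above is consistent with the usual definition of F1 as the harmonic mean of precision and recall. The short sketch below recomputes it from the tabulated precision and recall values; the helper name `f1_score` and the dictionary layout are illustrative choices, and the inputs are the rounded percentages from the table rather than raw detection counts.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision %, recall %) pairs copied from the loss-function table
rows = {
    "CIoU": (84.62, 78.10),
    "EIoU": (83.55, 80.32),
    "DIoU": (83.18, 78.74),
    "ShapeIoU": (84.04, 78.60),
}

# Recompute F1 for every loss function, rounded like the table
f1_by_loss = {name: round(f1_score(p, r), 2) for name, (p, r) in rows.items()}

if __name__ == "__main__":
    for name, f1 in f1_by_loss.items():
        print(f"{name}: F1 = {f1:.2f}%")
```

Rounded to two decimals, the recomputed values agree with the table, which illustrates why EIoU's higher recall outweighs its slightly lower precision in the F1 comparison.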

| Model | F1 (Mature)/% | F1 (Immature)/% | AP (Mature)/% | AP (Immature)/% | F1/% | mAP/% | Model Size/MB |
|---|---|---|---|---|---|---|---|
| YOLOv8n | 83.70 | 78.76 | 89.91 | 83.93 | 81.23 | 86.92 | 5.97 |
| YOLOv8-CB | 83.58 | 78.46 | 89.67 | 84.80 | 81.02 | 87.23 | 5.74 |
| YOLOv8-S | 83.64 | 80.04 | 89.66 | 85.31 | 81.84 | 87.49 | 6.06 |
| YOLOv8-E | 84.63 | 79.17 | 89.88 | 84.48 | 81.90 | 87.18 | 5.97 |
| YOLOv8-CBS | 84.34 | 79.62 | 90.16 | 85.52 | 81.98 | 87.84 | 5.82 |
| YOLOv8-SE | 84.11 | 79.16 | 90.76 | 85.06 | 81.64 | 87.91 | 6.06 |
| YOLOv8-CBSE | 84.01 | 79.37 | 90.75 | 85.41 | 81.69 | 88.08 | 5.82 |
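
In the ablation table above, the overall F1 and mAP columns appear to be the unweighted means of the per-class (mature and immature) values. The sketch below checks this for three representative rows; the helper `macro_average` and the tuple layout are illustrative, and because the inputs are already rounded to two decimals the recomputed means can differ from the tabulated ones by 0.01.

```python
def macro_average(per_class_values):
    """Unweighted mean over classes (here: mature and immature)."""
    return sum(per_class_values) / len(per_class_values)


# model: (F1 mature, F1 immature, AP mature, AP immature, reported F1, reported mAP)
ablation_rows = {
    "YOLOv8n": (83.70, 78.76, 89.91, 83.93, 81.23, 86.92),
    "YOLOv8-CBS": (84.34, 79.62, 90.16, 85.52, 81.98, 87.84),
    "YOLOv8-CBSE": (84.01, 79.37, 90.75, 85.41, 81.69, 88.08),
}

# Recompute the aggregate columns from the per-class columns
recomputed = {
    model: (macro_average(row[0:2]), macro_average(row[2:4]))
    for model, row in ablation_rows.items()
}

if __name__ == "__main__":
    for model, (f1, m_ap) in recomputed.items():
        reported_f1, reported_map = ablation_rows[model][4:6]
        print(f"{model}: F1 {f1:.2f} (reported {reported_f1}), "
              f"mAP {m_ap:.2f} (reported {reported_map})")
```

Read this way, the 1.16-point mAP gain of YOLOv8-CBSE over YOLOv8n comes from improvements in both classes, with the larger share contributed by the immature class (83.93 to 85.41).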

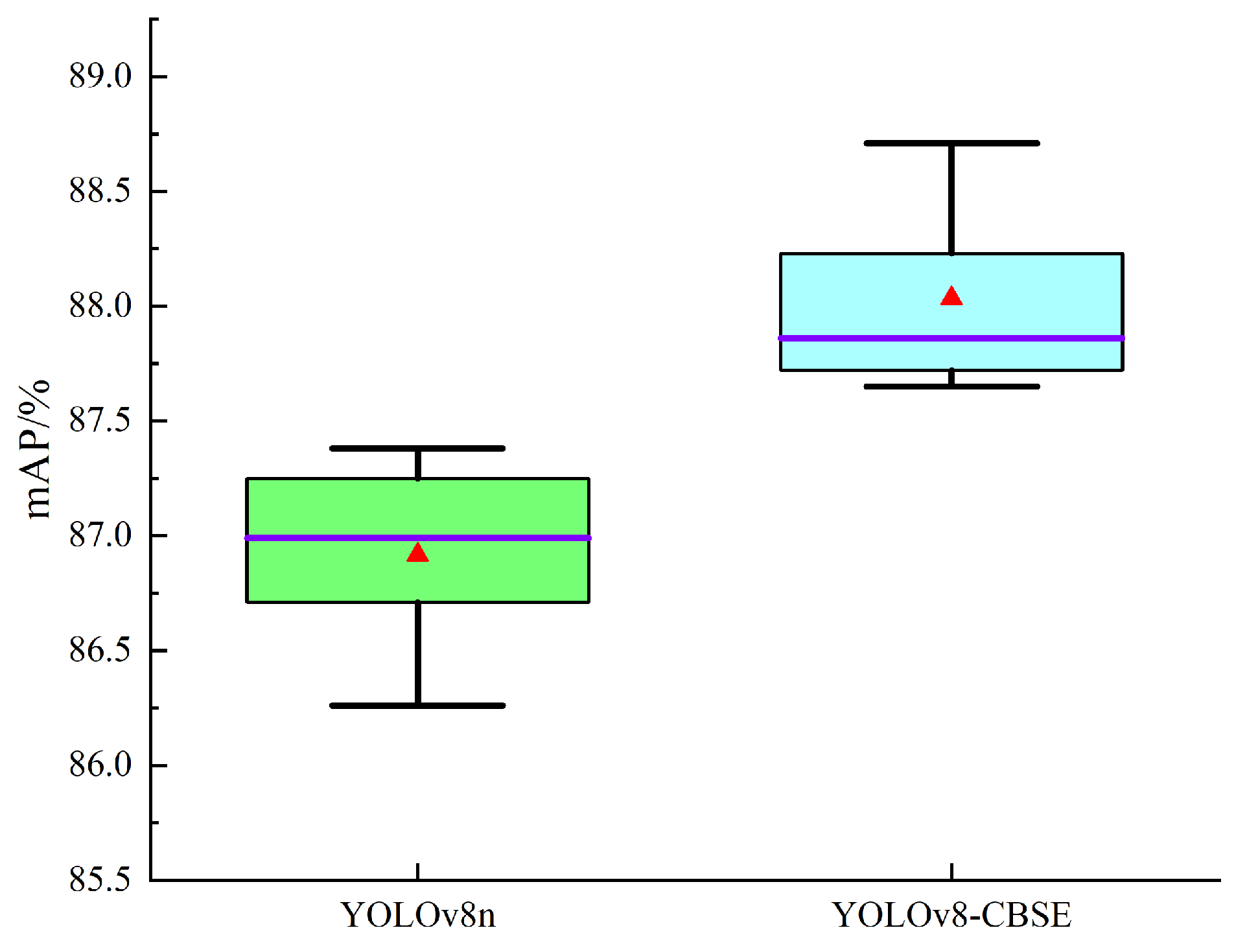

| Model | Fold 1 mAP/% | Fold 2 mAP/% | Fold 3 mAP/% | Fold 4 mAP/% | Fold 5 mAP/% | Mean mAP/% | Standard Deviation |
|---|---|---|---|---|---|---|---|
| YOLOv8n | 86.71 | 86.26 | 86.99 | 87.25 | 87.38 | 86.92 | 0.46 |
| YOLOv8-CBSE | 87.72 | 87.65 | 87.86 | 88.23 | 88.71 | 88.03 | 0.43 |
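
The summary columns of the five-fold table above can be recomputed from the per-fold mAP values. The sketch below uses Python's standard `statistics` module; the variable names are illustrative. The estimator behind the tabulated standard deviation is not stated here, so the sample standard deviation is used as an assumption, and since the fold values are already rounded it may differ from the table in the last digit.

```python
import statistics

# Per-fold mAP (%) copied from the five-fold cross-validation table
fold_map = {
    "YOLOv8n": [86.71, 86.26, 86.99, 87.25, 87.38],
    "YOLOv8-CBSE": [87.72, 87.65, 87.86, 88.23, 88.71],
}

# (mean, sample standard deviation) per model
summary = {
    model: (statistics.mean(values), statistics.stdev(values))
    for model, values in fold_map.items()
}

if __name__ == "__main__":
    for model, (mean_map, sd) in summary.items():
        print(f"{model}: mean mAP = {mean_map:.2f}%, sample SD = {sd:.2f}")
```

The recomputed means agree with the tabulated 86.92% and 88.03%. YOLOv8-CBSE scores higher than YOLOv8n in every individual fold, so the improvement does not depend on one favorable data split.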

| Model | AP (Mature)/% | AP (Immature)/% | F1/% | mAP/% | Model Size/MB |
|---|---|---|---|---|---|
| YOLOv5s | 89.36 | 84.31 | 82.20 | 86.84 | 13.77 |
| YOLOv8-CBSE | 90.75 | 85.41 | 81.69 | 88.08 | 5.82 |
| YOLOv9-Tiny | 90.42 | 82.57 | 80.47 | 86.49 | 4.40 |
| YOLOv10s | 89.32 | 85.67 | 81.28 | 87.50 | 15.77 |
| YOLOv11n | 88.73 | 83.71 | 80.28 | 86.22 | 5.23 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ma, Y.; Zhang, S. YOLOv8-CBSE: An Enhanced Computer Vision Model for Detecting the Maturity of Chili Pepper in the Natural Environment. Agronomy 2025, 15, 537. https://doi.org/10.3390/agronomy15030537
