Three-Dimensional Morphological Measurement Method for a Fruit Tree Canopy Based on Kinect Sensor Self-Calibration

Abstract

1. Introduction

2. Materials and Methods

2.1. Structure and Principle of the Measurement System

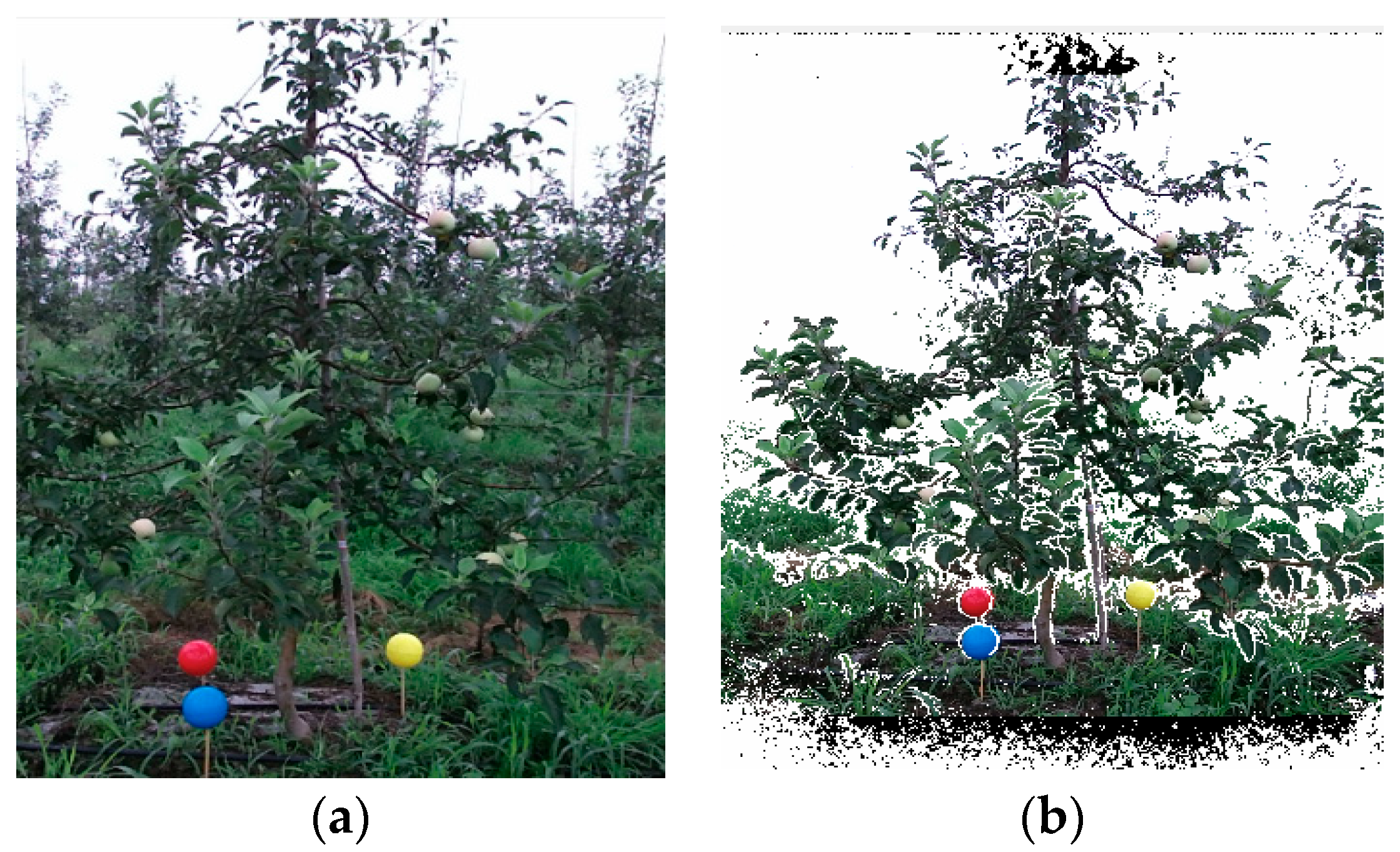

2.2. Procedure of 3D Reconstruction

2.3. Calculation Method for the 3D Morphology of the Fruit Tree Canopy

3. Results

3.1. Data Analysis of 3D Morphological Measurements for Fruit Tree Canopy

3.2. Error Analysis of 3D Morphological Measurements for Fruit Tree Canopy

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Jayathunga, S.; Owari, T.; Tsuyuki, S. Digital Aerial Photogrammetry for Uneven-Aged Forest Management: Assessing the Potential to Reconstruct Canopy Structure and Estimate Living Biomass. Remote Sens. 2019, 11, 338.

- Zhou, J.; Francois, T.; Tony, P.; John, D.; Daniel, R.; Neil, H.; Simon, G.; Cheng, T.; Zhu, Y.; Wang, X.; et al. Plant phenomics: History, present status and challenges. J. Nanjing Agric. Univ. 2018, 41, 580–588, (In Chinese with English abstract).

- Hu, C.; Pan, Z.; Li, P. A 3D Point Cloud Filtering Method for Leaves Based on Manifold Distance and Normal Estimation. Remote Sens. 2019, 11, 198.

- Zhang, D. Research on Plant’s Three-Dimensional Information Detection and Visual Servo Controlling Technology. Ph.D. Thesis, China Agricultural University, Beijing, China, 2014.

- De Castro, A.; Jiménez-Brenes, F.; Torres-Sánchez, J.; Peña, J.; Borra-Serrano, I.; López-Granados, F. 3-D Characterization of Vineyards Using a Novel UAV Imagery-Based OBIA Procedure for Precision Viticulture Applications. Remote Sens. 2018, 10, 584.

- Bremer, M.; Wichmann, V.; Rutzinger, M. Calibration and Validation of a Detailed Architectural Canopy Model Reconstruction for the Simulation of Synthetic Hemispherical Images and Airborne LiDAR Data. Remote Sens. 2017, 9, 220.

- Sanz, R.; Rosell, J.; Llorens, J.; Gil, E.; Planas, S. Relationship between tree row LIDAR-volume and leaf area density for fruit orchards and vineyards obtained with a LIDAR 3D Dynamic Measurement System. Agric. For. Meteorol. 2013, 171–172, 153–162.

- Ricardo, S.; Jordi, L.; Alexandre, E.; Jaume, A.; Santiago, P.; Carla, R.; Joan, R. LIDAR and non-LIDAR-based canopy parameters to estimate the leaf area in fruit trees and vineyard. Agric. For. Meteorol. 2018, 260–261, 229–239.

- Nguyen, T.; Vandevoorde, K.; Wouters, N.; Kayacan, E.; Baerdemaeker, J.; Saeys, W. Detection of red and bicoloured apples on tree with an RGB-D camera. Biosyst. Eng. 2016, 146, 33–44.

- Narváez, F.; Pedregal, J.; Prieto, P.; Torres-Torriti, M.; Cheein, F. LiDAR and thermal images fusion for ground-based 3D characterisation of fruit trees. Biosyst. Eng. 2016, 151, 479–494.

- Ana, C.; Jorge, T.; Jose, P.; Francisco, J.; Ovidiu, C.; Francisca, L. An Automatic Random Forest-OBIA Algorithm for Early Weed Mapping between and within Crop Rows Using UAV Imagery. Remote Sens. 2018, 10, 285.

- Hu, Z. Research on Automatic Spraying Method of Fruit Trees. Master’s Thesis, Yantai University, Yantai, China, 2017.

- Sun, Z.; Lu, S.; Guo, X.; Wen, W. Surfaces reconstruction of plant leaves based on point cloud data. Trans. Chin. Soc. Agric. Mach. 2012, 28, 184–190, (In Chinese with English abstract).

- Sun, G.; Wang, X. Three-Dimensional Point Cloud Reconstruction and Morphology Measurement Method for Greenhouse Plants Based on the Kinect Sensor Self-Calibration. Agronomy 2019, 9, 596.

- Chen, C.; Chen, P.; Hsu, C. Three-Dimensional Object Recognition and Registration for Robotic Grasping Systems Using a Modified Viewpoint Feature Histogram. Sensors 2016, 16, 1969.

- Jayathunga, S.; Owari, T.; Tsuyuki, S. Evaluating the Performance of Photogrammetric Products Using Fixed-Wing UAV Imagery over a Mixed Conifer–Broadleaf Forest: Comparison with Airborne Laser Scanning. Remote Sens. 2018, 10, 187.

- Ding, W.; Zhao, S.; Zhao, S.; Gu, J.; Qiu, W.; Guo, B. Measurement Methods of Fruit Tree Canopy Volume Based on Machine Vision. Trans. Chin. Soc. Agric. Mach. 2016, 47, (In Chinese with English abstract).

- Sun, C.; Qiu, W.; Ding, W.; Gu, J.; Zhao, S. Design and Experiment for Crops Geometrical Feature Measuring System. Trans. Chin. Soc. Agric. Mach. 2015, 46, 1–10, (In Chinese with English abstract).

- Esther, E.; Micha, S.; Alon, E. On the performance of the ICP algorithm. Comput. Geom. 2008, 41, 1–2.

| Parameter | Visual Angle | R² | RMSE | ARE |
|---|---|---|---|---|
| Height (H) | V1 | 0.91 | 12.28 | 3.8% |
| | V2 | 0.84 | 13.79 | 3.3% |
| | V1 and V2 | 0.96 | 8.72 | 2.5% |
| Width (W) | V1 | 0.86 | 26.09 | 12.7% |
| | V2 | 0.91 | 20.27 | 9.5% |
| | V1 and V2 | 0.97 | 9.71 | 3.6% |
| Thickness (D) | V1 | 0.73 | 13.38 | 5.0% |
| | V2 | 0.67 | 14.34 | 4.9% |
| | V1 and V2 | 0.82 | 9.12 | 3.2% |
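The table reports agreement between the system's measurements and reference values as R², RMSE and ARE, but these statistics are not defined here. The following minimal Python sketch shows one conventional way to compute them, under stated assumptions: R² is taken as the coefficient of determination of a least-squares linear fit (equivalently, the squared Pearson correlation), RMSE is expressed in the same units as the inputs, and ARE is read as the average relative error, mean(|m - r| / r), given as a percentage. The function name `agreement_stats` and the sample values are hypothetical and are not data from this study.

```python
import math
from typing import Dict, Sequence


def agreement_stats(measured: Sequence[float], reference: Sequence[float]) -> Dict[str, float]:
    """Compare system measurements with manual reference values.

    Assumed definitions (not stated in the source table):
      R2   - squared Pearson correlation, i.e. R^2 of the least-squares line
      RMSE - root-mean-square error, in the units of the inputs
      ARE  - average relative error, mean(|m - r| / r), as a percentage
    """
    n = len(measured)
    if n != len(reference) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 values")

    mean_m = sum(measured) / n
    mean_r = sum(reference) / n

    # Centered cross- and auto-products used by the correlation coefficient.
    sxy = sum((m - mean_m) * (r - mean_r) for m, r in zip(measured, reference))
    sxx = sum((m - mean_m) ** 2 for m in measured)
    syy = sum((r - mean_r) ** 2 for r in reference)
    if sxx == 0 or syy == 0:
        raise ValueError("R2 is undefined when either sequence is constant")
    r2 = sxy * sxy / (sxx * syy)

    # RMSE of the raw differences between measured and reference values.
    rmse = math.sqrt(sum((m - r) ** 2 for m, r in zip(measured, reference)) / n)

    # Relative error of each sample against its reference value, averaged.
    are = 100.0 * sum(abs(m - r) / r for m, r in zip(measured, reference)) / n

    return {"R2": r2, "RMSE": rmse, "ARE": are}
```

For example, `agreement_stats([110, 190, 310], [100, 200, 300])` gives an RMSE of 10, an ARE of about 6.1% and an R² of about 0.987. Some studies instead compute R² as 1 - SS_res/SS_tot against the 1:1 line; that value is never higher than the fitted-line R² and is lower whenever the measurements are biased.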

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Yang, H.; Wang, X.; Sun, G. Three-Dimensional Morphological Measurement Method for a Fruit Tree Canopy Based on Kinect Sensor Self-Calibration. Agronomy 2019, 9, 741. https://doi.org/10.3390/agronomy9110741
