AgROS: A Robot Operating System Based Emulation Tool for Agricultural Robotics

Abstract

1. Introduction

- connected equipment and farmers’ interaction with legacy technology;

- automation in agricultural operations; and

- scientific assessment methods for real-time monitoring of input requirements and farming outputs.

- Research Question #1: What challenges do current decision support tools face in the predictive performance analysis and ex-ante evaluation of digital applications in farming operations?

- Research Question #2: Which characteristics should a farm management emulation-based tool include to enable farmers to effectively introduce advanced robotic technologies in real-world field operations?

2. Materials and Methods

2.1. Critical Taxonomy

2.2. AgROS Development

3. Robotic Technology-Enabled Agricultural Systems: Background

3.1. Cyber Space: Simulation and Emulation Modelling

3.2. Physical Space: Real-World Implementation

3.3. Cyber–Physical Interface: Joint Simulation, Emulation and Real-World Implementations

4. AgROS: An Emulation Tool for Agriculture

4.1. System Architecture

- the ROS platform;

- the Gazebo 3D scenery environment;

- the OpenStreetMap tool;

- several 3D models to represent objects in the agricultural scenery; and

- the business logic layer that enables a vehicle’s autonomous movement in the virtual world.
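The business logic layer is described here only by its purpose. To illustrate what such a layer has to compute, the Python sketch below drives a simulated vehicle through a list of waypoints. It uses a proportional controller over unicycle kinematics and returns (linear, angular) velocity pairs analogous to the `geometry_msgs/Twist` messages that a ROS navigation node typically publishes on a `cmd_vel` topic. This is a sketch under stated assumptions, not the AgROS implementation. The function names, gains and tolerances are hypothetical, and a plain integration loop stands in for ROS so the example runs without a ROS installation.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float      # metres
    y: float      # metres
    theta: float  # heading in radians


def wrap_angle(a: float) -> float:
    """Wrap an angle into [-pi, pi)."""
    return (a + math.pi) % (2 * math.pi) - math.pi


def velocity_command(pose, goal, k_lin=0.5, k_ang=1.5, v_max=1.0, w_max=1.0):
    """Proportional go-to-goal law returning (linear m/s, angular rad/s)."""
    dx, dy = goal[0] - pose.x, goal[1] - pose.y
    distance = math.hypot(dx, dy)
    heading_error = wrap_angle(math.atan2(dy, dx) - pose.theta)
    # Forward speed shrinks with heading error and is zero when the goal is behind,
    # so the vehicle turns in place before driving off.
    v = min(v_max, k_lin * distance) * max(0.0, math.cos(heading_error))
    w = max(-w_max, min(w_max, k_ang * heading_error))
    return v, w


def follow_waypoints(pose, waypoints, dt=0.1, tol=0.2, max_steps=10_000):
    """Integrate unicycle kinematics until all waypoints are reached or steps run out."""
    reached = 0
    for _ in range(max_steps):
        if reached == len(waypoints):
            break
        goal = waypoints[reached]
        if math.hypot(goal[0] - pose.x, goal[1] - pose.y) < tol:
            reached += 1  # waypoint accepted; move on to the next one
            continue
        v, w = velocity_command(pose, goal)
        pose = Pose(pose.x + v * math.cos(pose.theta) * dt,
                    pose.y + v * math.sin(pose.theta) * dt,
                    wrap_angle(pose.theta + w * dt))
    return pose, reached


if __name__ == "__main__":
    final_pose, n_reached = follow_waypoints(Pose(0.0, 0.0, 0.0),
                                             [(5.0, 0.0), (5.0, 5.0), (0.0, 5.0)])
    print(n_reached, final_pose)
```

The `cos(heading_error)` factor makes the vehicle turn in place when the next waypoint lies behind it. This suits differential or skid-steer platforms. A car-like platform cannot turn in place and would need a curvature-constrained planner instead.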

4.2. Workflow

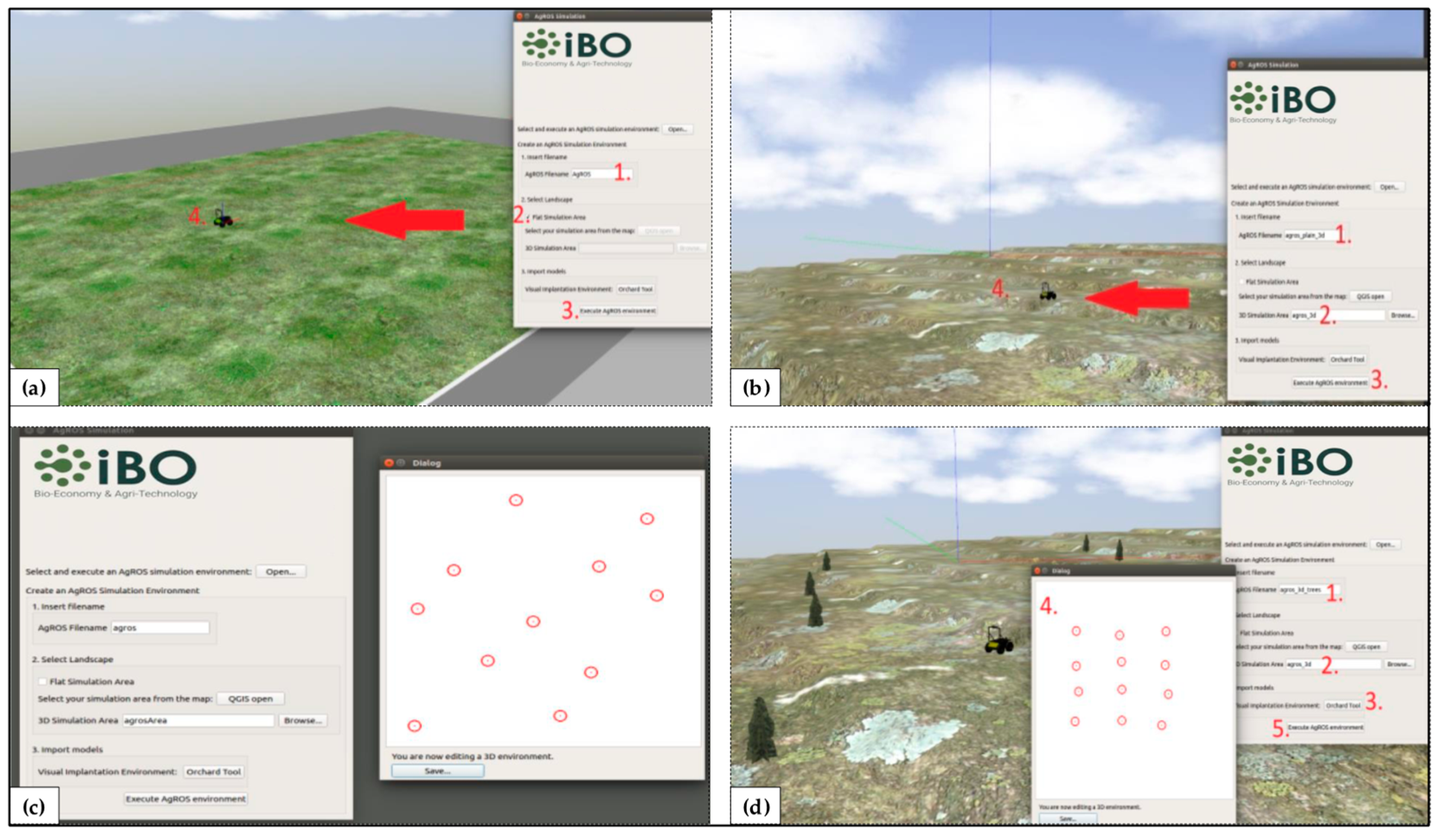

4.3. Graphical User Interface

- Step 1 – create a ROS compatible executable file (or edit an existing one);

- Step 2 – create a 2D or 3D simulation environment of the field landscape using QGIS, an open source geographic information system (GIS) tool;

- Step 3 – enrich the field by virtually planting trees and placing unmanned ground vehicles (UGVs);

- Step 4 – explore the field in Gazebo’s simulation environment using routing and object recognition algorithms; and

- Step 5 – execute the final simulation based on the ROS backend.
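In practice, Step 3 amounts to writing model instances into the Gazebo world description. The sketch below shows one way to do this, assuming a regular orchard grid and Gazebo's SDF world format with `<include>` elements. `model://sun` and `model://ground_plane` are standard Gazebo models. `model://tree_placeholder` and `model://ugv_placeholder` are hypothetical URIs to be replaced with the actual tree and vehicle models. How AgROS itself serialises the planted field is not specified here.

```python
import xml.etree.ElementTree as ET


def add_include(world, uri, name=None, pose=None):
    """Append an SDF <include> of a model URI, optionally with a name and an x y z roll pitch yaw pose."""
    inc = ET.SubElement(world, "include")
    ET.SubElement(inc, "uri").text = uri
    if name is not None:
        ET.SubElement(inc, "name").text = name
    if pose is not None:
        ET.SubElement(inc, "pose").text = " ".join(f"{v:g}" for v in pose)
    return inc


def orchard_world(rows, trees_per_row, row_spacing, tree_spacing,
                  tree_uri="model://tree_placeholder",   # hypothetical model URI
                  ugv_uri="model://ugv_placeholder"):    # hypothetical model URI
    """Return an SDF world string with trees on a rows x trees_per_row grid and one UGV."""
    sdf = ET.Element("sdf", version="1.6")
    world = ET.SubElement(sdf, "world", name="orchard")
    add_include(world, "model://sun")
    add_include(world, "model://ground_plane")
    for r in range(rows):            # each tree row runs along x; rows are spaced along y
        for t in range(trees_per_row):
            add_include(world, tree_uri, name=f"tree_{r}_{t}",
                        pose=(t * tree_spacing, r * row_spacing, 0, 0, 0, 0))
    # Park the UGV at the entrance of the first inter-row alley, one tree spacing before the rows
    add_include(world, ugv_uri, name="ugv_0",
                pose=(-tree_spacing, row_spacing / 2, 0, 0, 0, 0))
    return ET.tostring(sdf, encoding="unicode")


if __name__ == "__main__":
    print(orchard_world(rows=3, trees_per_row=4, row_spacing=4.5, tree_spacing=3.0))
```

Once the output is saved as a `.world` file, it can be opened in Gazebo (for example, `gazebo orchard.world` in Gazebo classic).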

4.3.1. Field Layout Environment
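A field layout drawn in a GIS tool such as QGIS (Step 2) is expressed in geographic coordinates (latitude, longitude), whereas Gazebo places models in a local Cartesian frame measured in metres. The sketch below performs this conversion, assuming a field small enough for a local equirectangular approximation around a reference corner to be adequate. It also assumes the ENU convention (x east, y north). The function names and the reference point are hypothetical. For larger areas, a proper map projection such as UTM would be the safer choice.

```python
import math

EARTH_RADIUS_M = 6_371_008.8  # IUGG mean Earth radius in metres


def to_local_xy(lat_deg, lon_deg, lat0_deg, lon0_deg):
    """Project (lat, lon) to (east, north) metres relative to the reference point (lat0, lon0).

    Equirectangular approximation: adequate for field-scale distances only.
    """
    d_lat = math.radians(lat_deg - lat0_deg)
    d_lon = math.radians(lon_deg - lon0_deg)
    east = EARTH_RADIUS_M * d_lon * math.cos(math.radians(lat0_deg))
    north = EARTH_RADIUS_M * d_lat
    return east, north


def boundary_to_local(polygon, origin):
    """Convert a field boundary [(lat, lon), ...] into local (x, y) pairs for the simulator."""
    lat0, lon0 = origin
    return [to_local_xy(lat, lon, lat0, lon0) for lat, lon in polygon]


if __name__ == "__main__":
    field = [(40.0, 23.0), (40.0, 23.001), (40.001, 23.001), (40.001, 23.0)]
    print(boundary_to_local(field, origin=field[0]))
```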

4.3.2. Static and Dynamic Algorithms
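Step 4 relies on routing algorithms, but the text does not state which ones AgROS employs. As a generic illustration only, the sketch below runs A* search on a hypothetical 4-connected occupancy grid of the field, in which cells occupied by tree rows are blocked. This is a textbook planner rather than the paper's algorithm, and the grid encoding and function name are assumptions.

```python
import heapq


def astar(grid, start, goal):
    """Shortest 4-connected path on an occupancy grid (0 = free, 1 = blocked), or None."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible and consistent for unit-cost 4-connected moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {start: None}
    best_g = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:  # walk parent links back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > best_g[cell]:
            continue  # stale entry superseded by a cheaper one
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_g = g + 1
                if new_g < best_g.get(nxt, float("inf")):
                    best_g[nxt] = new_g
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_g + h(nxt), new_g, nxt))
    return None


if __name__ == "__main__":
    field = [
        [0, 0, 0, 0, 0],
        [1, 1, 1, 1, 0],  # tree row with a gap at the east end
        [0, 0, 0, 0, 0],
        [0, 1, 1, 1, 1],  # tree row with a gap at the west end
        [0, 0, 0, 0, 0],
    ]
    route = astar(field, (0, 0), (4, 4))
    print(len(route) - 1, "moves:", route)
```

Calling the same function again whenever the grid changes (for example, when a newly detected obstacle marks a cell as blocked) is one simple way to move from a precomputed route to on-line replanning.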

4.4. Real-World Implementation

5. Conclusions

5.1. Scientific Implications

5.2. Practical Implications

5.3. Limitations

5.4. Future Research

Author Contributions

Funding

Conflicts of Interest

References

- Tzounis, A.; Katsoulas, N.; Bartzanas, T.; Kittas, C. Internet of Things in agriculture, recent advances and future challenges. Biosyst. Eng. 2017, 164, 31–48.

- Garnett, T.; Appleby, M.C.; Balmford, A.; Bateman, I.J.; Benton, T.G.; Bloomer, P.; Burlingame, B.; Dawkins, M.; Dolan, L.; Fraser, D.; et al. Sustainable intensification in agriculture: Premises and policies. Science 2013, 341, 33–34.

- Sundmaeker, H.; Verdouw, C.; Wolfert, S.; Pérez Freire, L. Internet of food and farm 2020. In Digitising the Industry—Internet of Things Connecting Physical, Digital and Virtual Worlds, 1st ed.; Vermesan, O., Friess, P., Eds.; River Publishers: Gistrup, Denmark; Delft, The Netherlands, 2016; pp. 129–151.

- Vasconez, J.P.; Kantor, G.A.; Auat Cheein, F.A. Human–robot interaction in agriculture: A survey and current challenges. Biosyst. Eng. 2019, 179, 35–48.

- Kunz, C.; Weber, J.F.; Gerhards, R. Benefits of precision farming technologies for mechanical weed control in soybean and sugar beet—Comparison of precision hoeing with conventional mechanical weed control. Agronomy 2015, 5, 130–142.

- Bonneau, V.; Copigneaux, B. Industry 4.0 in Agriculture: Focus on IoT Aspects; Directorate-General Internal Market, Industry, Entrepreneurship and SMEs; Directorate F: Innovation and Advanced Manufacturing; Unit F/3 KETs, Digital Manufacturing and Interoperability by the consortium; European Commission: Brussels, Belgium, 2017.

- Lampridi, M.G.; Kateris, D.; Vasileiadis, G.; Marinoudi, V.; Pearson, S.; Sørensen, C.G.; Balafoutis, A.; Bochtis, D. A case-based economic assessment of robotics employment in precision arable farming. Agronomy 2019, 9, 175.

- Bechar, A.; Vigneault, C. Agricultural robots for field operations: Concepts and components. Biosyst. Eng. 2016, 149, 94–111.

- Miranda, J.; Ponce, P.; Molina, A.; Wright, P. Sensing, smart and sustainable technologies for Agri-Food 4.0. Comput. Ind. 2019, 108, 21–36.

- Rose, D.C.; Morris, C.; Lobley, M.; Winter, M.; Sutherland, W.J.; Dicks, L.V. Exploring the spatialities of technological and user re-scripting: The case of decision support tools in UK agriculture. Geoforum 2018, 89, 11–18.

- El Bilali, H.; Allahyari, M.S. Transition towards sustainability in agriculture and food systems: Role of information and communication technologies. Inf. Process. Agric. 2018, 5, 456–464.

- Tsolakis, N.; Bechtsis, D.; Srai, J.S. Intelligent autonomous vehicles in digital supply chains: From conceptualisation, to simulation modelling, to real-world operations. Bus. Process Manag. J. 2019, 25, 414–437.

- Rose, D.C.; Sutherland, W.J.; Parker, C.; Lobley, M.; Winter, M.; Morris, C.; Twining, S.; Ffoulkes, C.; Amano, T.; Dicks, L.V. Decision support tools for agriculture: Towards effective design and delivery. Agric. Syst. 2016, 149, 165–174.

- Tao, F.; Zhang, M.; Nee, A.Y.C. Chapter 12—Digital Twin, Cyber–Physical System, and Internet of Things. In Digital Twin Driven Smart Manufacturing, 1st ed.; Tao, F., Zhang, M., Nee, A.Y.C., Eds.; Academic Press: New York, NY, USA, 2019; pp. 243–256.

- Rosen, R.; von Wichert, G.; Lo, G.; Bettenhausen, K.D. About the importance of autonomy and digital twins for the future of manufacturing. IFAC-PapersOnLine 2015, 48, 567–572.

- Leeuwis, C. Communication for Rural Innovation. Rethinking Agricultural Extension; Blackwell Science: Oxford, UK, 2004.

- Ayani, M.; Ganebäck, M.; Ng, A.H.C. Digital Twin: Applying emulation for machine reconditioning. Procedia CIRP 2018, 72, 243–248.

- McGregor, I. The relationship between simulation and emulation. In Proceedings of the 2002 Winter Simulation Conference, San Diego, CA, USA, 8–11 December 2002; Volume 2, pp. 1683–1688.

- Zambon, I.; Cecchini, M.; Egidi, G.; Saporito, M.G.; Colantoni, A. Revolution 4.0: Industry vs. agriculture in a future development for SMEs. Processes 2019, 7, 36.

- Espinasse, B.; Ferrarini, A.; Lapeyre, R. A multi-agent system for modelisation and simulation-emulation of supply-chains. IFAC Proc. Vol. 2000, 33, 413–418.

- Mongeon, P.; Paul-Hus, A. The journal coverage of Web of Science and Scopus: A comparative analysis. Scientometrics 2016, 106, 213–228.

- Bechtsis, D.; Moisiadis, V.; Tsolakis, N.; Vlachos, D.; Bochtis, D. Unmanned Ground Vehicles in precision farming services: An integrated emulation modelling approach. In Information and Communication Technologies in Modern Agricultural Development; HAICTA 2017, Communications in Computer and Information Science; Salampasis, M., Bournaris, T., Eds.; Springer: Cham, Switzerland, 2019; Volume 953, pp. 177–190.

- Astolfi, P.; Gabrielli, A.; Bascetta, L.; Matteucci, M. Vineyard autonomous navigation in the Echord++ GRAPE experiment. IFAC-PapersOnLine 2018, 51, 704–709.

- Ayadi, N.; Maalej, B.; Derbel, N. Optimal path planning of mobile robots: A comparison study. In Proceedings of the 15th International Multi-Conference on Systems, Signals & Devices (SSD), Hammamet, Tunisia, 19–22 March 2018; pp. 988–994.

- Backman, J.; Kaivosoja, J.; Oksanen, T.; Visala, A. Simulation environment for testing guidance algorithms with realistic GPS noise model. IFAC-PapersOnLine 2010, 3 Pt 1, 139–144.

- Bayar, G.; Bergerman, M.; Koku, A.B.; Konukseven, E.I. Localization and control of an autonomous orchard vehicle. Comput. Electron. Agric. 2015, 115, 118–128.

- Blok, P.M.; van Boheemen, K.; van Evert, F.K.; Kim, G.-H.; IJsselmuiden, J. Robot navigation in orchards with localization based on Particle filter and Kalman filter. Comput. Electron. Agric. 2019, 157, 261–269.

- Bochtis, D.; Griepentrog, H.W.; Vougioukas, S.; Busato, P.; Berruto, R.; Zhou, K. Route planning for orchard operations. Comput. Electron. Agric. 2015, 113, 51–60.

- Cariou, C.; Gobor, Z. Trajectory planning for robotic maintenance of pasture based on approximation algorithms. Biosyst. Eng. 2018, 174, 219–230.

- Christiansen, M.P.; Laursen, M.S.; Jørgensen, R.N.; Skovsen, S.; Gislum, R. Designing and testing a UAV mapping system for agricultural field surveying. Sensors 2017, 17, 2703.

- De Preter, A.; Anthonis, J.; De Baerdemaeker, J. Development of a robot for harvesting strawberries. IFAC-PapersOnLine 2018, 51, 14–19.

- De Sousa, R.V.; Tabile, R.A.; Inamasu, R.Y.; Porto, A.J.V. A row crop following behavior based on primitive fuzzy behaviors for navigation system of agricultural robots. IFAC Proc. Vol. 2013, 46, 91–96.

- Demim, F.; Nemra, A.; Louadj, K. Robust SVSF-SLAM for unmanned vehicle in unknown environment. IFAC-PapersOnLine 2016, 49, 386–394.

- Durmus, H.; Gunes, E.O.; Kirci, M. Data acquisition from greenhouses by using autonomous mobile robot. In Proceedings of the 5th International Conference on Agro-Geoinformatics, Tianjin, China, 18–20 July 2016; pp. 5–9.

- Emmi, L.; Paredes-Madrid, L.; Ribeiro, A.; Pajares, G.; Gonzalez-De-Santos, P. Fleets of robots for precision agriculture: A simulation environment. Ind. Robot 2013, 40, 41–58.

- Ericson, S.K.; Åstrand, B.S. Analysis of two visual odometry systems for use in an agricultural field environment. Biosyst. Eng. 2018, 166, 116–125.

- Gan, H.; Lee, W.S. Development of a navigation system for a smart farm. IFAC-PapersOnLine 2018, 51, 1–4.

- Habibie, N.; Nugraha, A.M.; Anshori, A.Z.; Masum, M.A.; Jatmiko, W. Fruit mapping mobile robot on simulated agricultural area in Gazebo simulator using simultaneous localization and mapping (SLAM). In Proceedings of the 2017 International Symposium on Micro-NanoMechatronics and Human Science (MHS), Nagoya, Japan, 3–6 December 2018; pp. 1–7.

- Hameed, I.A. Coverage path planning software for autonomous robotic lawn mower using Dubins’ curve. In Proceedings of the 2017 IEEE International Conference on Real-time Computing and Robotics (RCAR), Okinawa, Japan, 14–18 July 2017; pp. 517–522.

- Hameed, I.A.; Bochtis, D.D.; Sørensen, C.G.; Jensen, A.L.; Larsen, R. Optimized driving direction based on a three-dimensional field representation. Comput. Electron. Agric. 2013, 91, 145–153.

- Hameed, I.A.; La Cour-Harbo, A.; Osen, O.L. Side-to-side 3D coverage path planning approach for agricultural robots to minimize skip/overlap areas between swaths. Robot. Auton. Syst. 2016, 76, 36–45.

- Han, X.Z.; Kim, H.J.; Kim, J.Y.; Yi, S.Y.; Moon, H.C.; Kim, J.H.; Kim, Y.J. Path-tracking simulation and field tests for an auto-guidance tillage tractor for a paddy field. Comput. Electron. Agric. 2015, 112, 161–171.

- Hansen, K.D.; Garcia-Ruiz, F.; Kazmi, W.; Bisgaard, M.; la Cour-Harbo, A.; Rasmussen, J.; Andersen, H.J. An autonomous robotic system for mapping weeds in fields. IFAC Proc. Vol. 2013, 46, 217–224.

- Hansen, S.; Blanke, M.; Andersen, J.C. Autonomous tractor navigation in orchard—Diagnosis and supervision for enhanced availability. IFAC Proc. Vol. 2009, 42, 360–365.

- Harik, E.H.; Korsaeth, A. Combining hector SLAM and artificial potential field for autonomous navigation inside a greenhouse. Robotics 2018, 7, 22.

- Jensen, K.; Larsen, M.; Jørgensen, R.; Olsen, K.; Larsen, L.; Nielsen, S. Towards an open software platform for field robots in precision agriculture. Robotics 2014, 3, 207–234.

- Khan, N.; Medlock, G.; Graves, S.; Anwar, S. GPS guided autonomous navigation of a small agricultural robot with automated fertilizing system. SAE Tech. Pap. Ser. 2018, 1, 1–7.

- Kim, D.H.; Choi, C.H.; Kim, Y.J. Analysis of driving performance evaluation for an unmanned tractor. IFAC-PapersOnLine 2018, 51, 227–231.

- Kurashiki, K.; Fukao, T.; Nagata, J.; Ishiyama, K.; Kamiya, T.; Murakami, N. Laser-based vehicle control in orchard. IFAC Proc. Vol. 2010, 43, 127–132.

- Liu, C.; Zhao, X.; Du, Y.; Cao, C.; Zhu, Z.; Mao, E. Research on static path planning method of small obstacles for automatic navigation of agricultural machinery. IFAC-PapersOnLine 2018, 51, 673–677.

- Mancini, A.; Frontoni, E.; Zingaretti, P. Improving variable rate treatments by integrating aerial and ground remotely sensed data. In Proceedings of the 2018 International Conference on Unmanned Aircraft Systems (ICUAS), Dallas, TX, USA, 12–15 June 2018; pp. 856–863.

- Pérez-Ruiz, M.; Gonzalez-de-Santos, P.; Ribeiro, A.; Fernandez-Quintanilla, C.; Peruzzi, A.; Vieri, M.; Tomic, S.; Agüera, J. Highlights and preliminary results for autonomous crop protection. Comput. Electron. Agric. 2015, 110, 150–161.

- Pierzchała, M.; Giguère, P.; Astrup, R. Mapping forests using an unmanned ground vehicle with 3D LiDAR and graph-SLAM. Comput. Electron. Agric. 2018, 145, 217–225.

- Radcliffe, J.; Cox, J.; Bulanon, D.M. Machine vision for orchard navigation. Comput. Ind. 2018, 98, 165–171.

- Reina, G.; Milella, A.; Galati, R. Terrain assessment for precision agriculture using vehicle dynamic modelling. Biosyst. Eng. 2017, 162, 124–139.

- Santoro, E.; Soler, E.M.; Cherri, A.C. Route optimization in mechanized sugarcane harvesting. Comput. Electron. Agric. 2017, 141, 140–146.

- Shamshiri, R.R.; Hameed, I.A.; Pitonakova, L.; Weltzien, C.; Balasundram, S.K.; Yule, I.J.; Grift, T.E.; Chowdhary, G. Simulation software and virtual environments for acceleration of agricultural robotics: Features highlights and performance comparison. Int. J. Agric. Biol. Eng. 2018, 11, 15–31.

- Thanpattranon, P.; Ahamed, T.; Takigawa, T. Navigation of autonomous tractor for orchards and plantations using a laser range finder: Automatic control of trailer position with tractor. Biosyst. Eng. 2016, 147, 90–103.

- Vaeljaots, E.; Lehiste, H.; Kiik, M.; Leemet, T. Soil sampling automation case-study using unmanned ground vehicle. Eng. Rural Dev. 2018, 17, 982–987.

- Vasudevan, A.; Kumar, D.A.; Bhuvaneswari, N.S. Precision farming using unmanned aerial and ground vehicles. In Proceedings of the International Conference on Technological Innovations in ICT for Agriculture and Rural Development (TIAR), Chennai, India, 15–16 July 2016; pp. 146–150.

- Wang, Z.; Underwood, J.; Walsh, K.B. Machine vision assessment of mango orchard flowering. Comput. Electron. Agric. 2018, 151, 501–511.

- Wang, B.; Li, S.; Guo, J.; Chen, Q. Car-like mobile robot path planning in rough terrain using multi-objective particle swarm optimization algorithm. Neurocomputing 2018, 282, 42–51.

- Yakoubi, M.A.; Laskri, M.T. The path planning of cleaner robot for coverage region using genetic algorithms. J. Innov. Digit. Ecosyst. 2016, 3, 37–43.

- Kostavelis, I.; Gasteratos, A. Semantic mapping for mobile robotics tasks: A survey. Robot. Auton. Syst. 2015, 66, 86–103.

- D’Urso, G.; Smith, S.L.; Mettu, R.; Oksanen, T.; Fitch, R. Multi-vehicle refill scheduling with queueing. Comput. Electron. Agric. 2018, 144, 44–57.

- Bechtsis, D.; Tsolakis, N.; Vlachos, D.; Iakovou, E. Sustainable supply chain management in the digitalisation era: The impact of Automated Guided Vehicles. J. Clean. Prod. 2017, 142 Pt 4, 3970–3984.

- Rotz, S.; Gravely, E.; Mosby, I.; Duncan, E.; Finnis, E.; Horgan, M.; LeBlanc, J.; Martin, R.; Neufeld, H.T.; Nixon, A.; et al. Automated pastures and the digital divide: How agricultural technologies are shaping labour and rural communities. J. Rural Stud. 2019, 112–122.

| Reference | Analysis Approach 1 | Farming Operation 2 | Farming Type 3 | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 |

| [23] | ν | ν | ν | ν | ||||||||||

| [24] | ν | |||||||||||||

| [25] | ν | |||||||||||||

| [26] | ν | ν | ν | |||||||||||

| [27] | ν | ν | ||||||||||||

| [28] | ν | ν | ν | ν | ν | |||||||||

| [29] | ν | ν | ν | ν | ν | |||||||||

| [30] | ν | ν | ν | |||||||||||

| [31] | ν | ν | ν | ν | ||||||||||

| [32] | ν | ν | ||||||||||||

| [33] | ν | ν | ||||||||||||

| [34] | ν | ν | ν | |||||||||||

| [35] | ν | ν | ν | ν | ν | ν | ν | ν | ν | ν | ||||

| [36] | ν | ν | ν | ν | ν | ν | ν | ν | ν | ν | ||||

| [37] | ν | ν | ν | ν | ||||||||||

| [38] | ν | ν | ν | ν | ||||||||||

| [39] | ν | ν | ν | ν | ||||||||||

| [40] | ν | ν | ν | ν | ν | |||||||||

| [41] | ν | ν | ν | ν | ||||||||||

| [42] | ν | ν | ν | ν | ν | ν | ν | ν | ν | ν | ν | |||

| [43] | ν | ν | ν | ν | ||||||||||

| [44] | ν | ν | ν | ν | ν | |||||||||

| [45] | ν | ν | ν | ν | ν | |||||||||

| [46] | ν | ν | ν | ν | ν | ν | ν | |||||||

| [47] | ν | ν | ν | ν | ν | ν | ν | |||||||

| [48] | ν | ν | ν | ν | ν | |||||||||

| [49] | ν | ν | ν | ν | ν | ν | ||||||||

| [50] | ν | |||||||||||||

| [51] | ν | ν | ν | ν | ν | ν | ν | |||||||

| [52] | ν | ν | ν | ν | ||||||||||

| [53] | ν | ν | ν | |||||||||||

| [54] | ν | ν | ν | |||||||||||

| [55] | ν | ν | ν | |||||||||||

| [56] | ν | ν | ν | ν | ||||||||||

| [57] | ν | ν | ν | ν | ν | |||||||||

| [58] | ν | ν | ν | ν | ||||||||||

| [59] | ν | ν | ν | ν | ||||||||||

| [60] | ν | ν | ν | ν | ||||||||||

| [61] | ν | ν | ν | |||||||||||

| [62] | ν | ν | ν | |||||||||||

| [63] | ν | |||||||||||||

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Tsolakis, N.; Bechtsis, D.; Bochtis, D. AgROS: A Robot Operating System Based Emulation Tool for Agricultural Robotics. Agronomy 2019, 9, 403. https://doi.org/10.3390/agronomy9070403
