Impact of AERI Temperature and Moisture Retrievals on the Simulation of a Central Plains Severe Convective Weather Event

Abstract

1. Introduction

2. Experiments

2.1. Model and Data Assimilation System

2.2. AERI Temperature and Water Vapor Retrievals

2.3. Forecast Period and Case Selection

2.4. Experiment Design and Evaluation Metrics

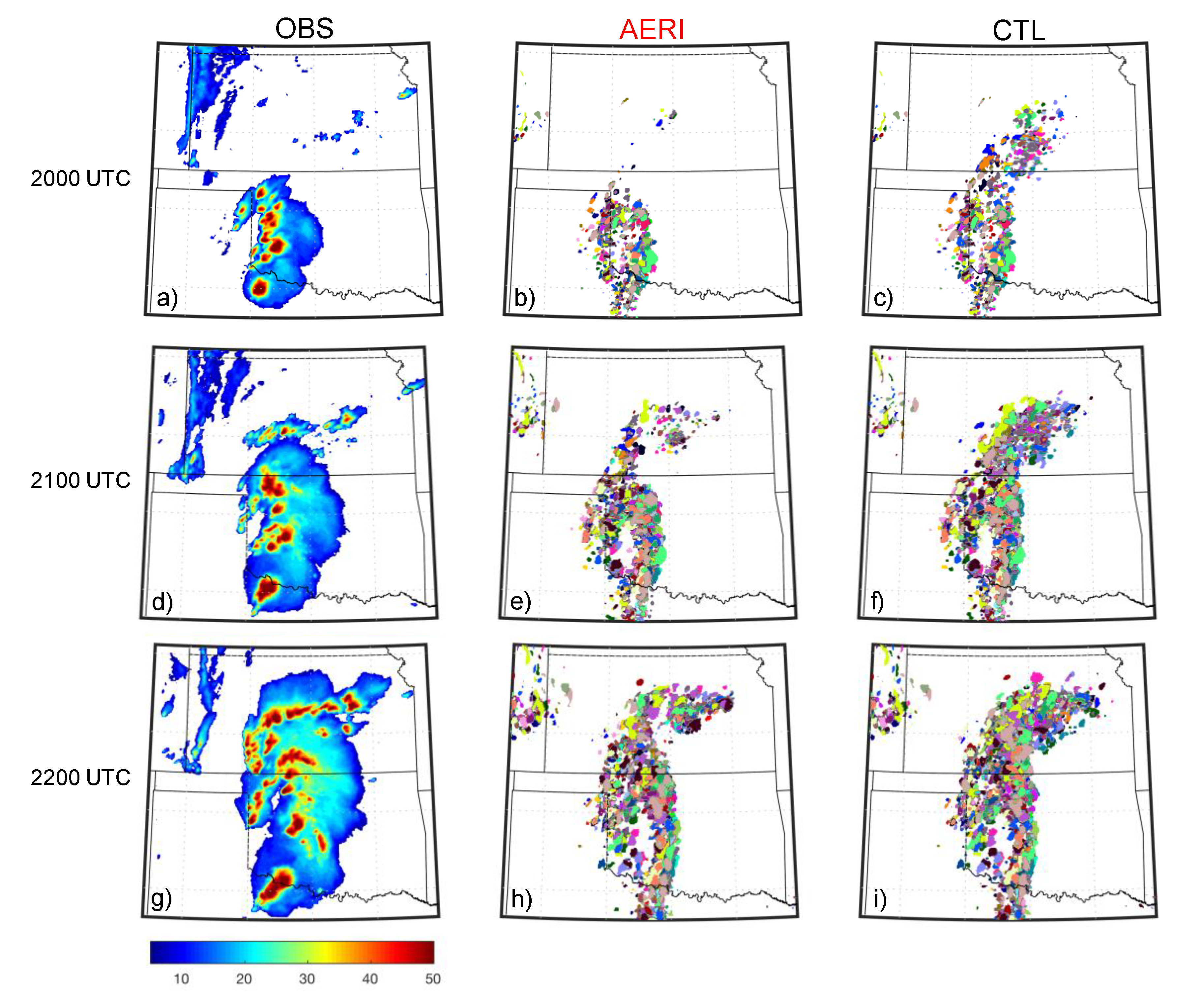

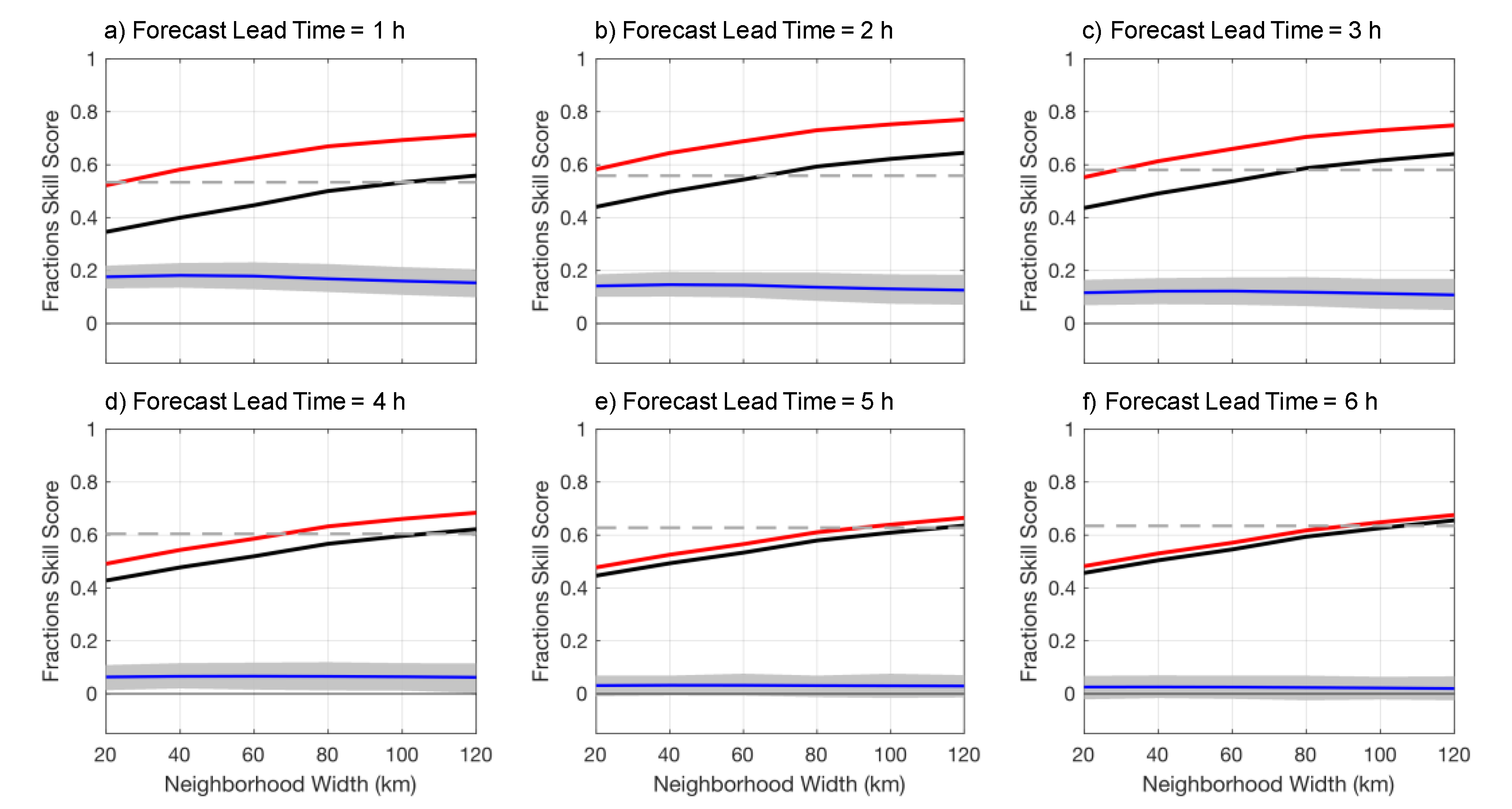

3. Results

4. Summary and Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Wolff, J.K.; Ferrier, B.S.; Mass, C.F. Establishing Closer Collaboration to Improve Model Physics for Short-Range Forecasts. Bull. Am. Meteorol. Soc. 2012, 93, 51.

- Kwon, I.-H.; English, S.; Bell, W.; Potthast, R.; Collard, A.; Ruston, B. Assessment of Progress and Status of Data Assimilation in Numerical Weather Prediction. Bull. Am. Meteorol. Soc. 2018, 99, ES75–ES79.

- Rotunno, R.; Snyder, C. A Generalization of Lorenz’s Model for the Predictability of Flows with Many Scales of Motion. J. Atmos. Sci. 2008, 65, 1063–1076.

- Durran, D.; Gingrich, M. Atmospheric Predictability: Why Butterflies Are Not of Practical Importance. J. Atmos. Sci. 2014, 71, 2476–2488.

- Nielsen, E.R.; Schumacher, R.S. Using Convection-Allowing Ensembles to Understand the Predictability of an Extreme Rainfall Event. Mon. Weather Rev. 2016, 144, 3651–3676.

- Stensrud, D.J.; Wicker, L.J.; Kelleher, K.E.; Xue, M.; Foster, M.P.; Schaefer, J.T.; Schneider, R.S.; Benjamin, S.G.; Weygandt, S.S.; Ferree, J.T.; et al. Convective-Scale Warn-on-Forecast System. Bull. Am. Meteorol. Soc. 2009, 90, 1487–1500.

- Stensrud, D.J.; Wicker, L.J.; Xue, M.; Dawson, D.T.; Yussouf, N.; Wheatley, D.M.; Thompson, T.E.; Snook, N.A.; Smith, T.M.; Schenkman, A.D.; et al. Progress and challenges with Warn-on-Forecast. Atmos. Res. 2013, 123, 2–16.

- Wheatley, D.M.; Knopfmeier, K.H.; Jones, T.A.; Creager, G.J. Storm-Scale Data Assimilation and Ensemble Forecasting with the NSSL Experimental Warn-on-Forecast System. Part I: Radar Data Experiments. Weather Forecast. 2015, 30, 1795–1817.

- Jones, T.A.; Knopfmeier, K.; Wheatley, D.; Creager, G.; Minnis, P.; Palikonda, R. Storm-Scale Data Assimilation and Ensemble Forecasting with the NSSL Experimental Warn-on-Forecast System. Part II: Combined Radar and Satellite Data Experiments. Weather Forecast. 2016, 31, 297–327.

- Skamarock, W.C.; et al. A Description of the Advanced Research WRF Version 3. NCAR Tech. Note NCAR/TN-475+STR, 2008; p. 113.

- Anderson, J.; Hoar, T.; Raeder, K.; Liu, H.; Collins, N.; Torn, R.; Avellano, A. The Data Assimilation Research Testbed: A Community Facility. Bull. Am. Meteorol. Soc. 2009, 90, 1283–1296.

- Knuteson, R.; Revercomb, H.E.; Best, F.A.; Ciganovich, N.C.; Dedecker, R.G.; Dirkx, T.P.; Ellington, S.C.; Feltz, W.F.; Garcia, R.K.; Howell, H.B.; et al. Atmospheric Emitted Radiance Interferometer. Part I: Instrument Design. J. Atmos. Ocean. Technol. 2004, 21, 1763–1776.

- Knuteson, R.; Revercomb, H.E.; Best, F.A.; Ciganovich, N.C.; Dedecker, R.G.; Dirkx, T.P.; Ellington, S.C.; Feltz, W.F.; Garcia, R.K.; Howell, H.B.; et al. Atmospheric Emitted Radiance Interferometer. Part II: Instrument Performance. J. Atmos. Ocean. Technol. 2004, 21, 1777–1789.

- Rodgers, C. Inverse Methods for Atmospheric Sounding: Theory and Practice; World Scientific: Singapore, 2000; p. 238.

- Blumberg, W.G.; Wagner, T.J.; Turner, D.D.; Correia, J. Quantifying the Accuracy and Uncertainty of Diurnal Thermodynamic Profiles and Convection Indices Derived from the Atmospheric Emitted Radiance Interferometer. J. Appl. Meteorol. Clim. 2017, 56, 2747–2766.

- Wagner, T.J.; Feltz, W.F.; Ackerman, S. The Temporal Evolution of Convective Indices in Storm-Producing Environments. Weather Forecast. 2008, 23, 786–794.

- Loveless, D.M.; Wagner, T.J.; Turner, D.D.; Ackerman, S.A.; Feltz, W.F. A Composite Perspective on Bore Passages during the PECAN Campaign. Mon. Weather Rev. 2019, 147, 1395–1413.

- Otkin, J.; Hartung, D.C.; Turner, D.D.; Petersen, R.A.; Feltz, W.F.; Janzon, E. Assimilation of Surface-Based Boundary Layer Profiler Observations during a Cool-Season Weather Event Using an Observing System Simulation Experiment. Part I: Analysis Impact. Mon. Weather Rev. 2011, 139, 2309–2326.

- Hartung, D.C.; Otkin, J.; Petersen, R.A.; Turner, D.D.; Feltz, W.F. Assimilation of Surface-Based Boundary Layer Profiler Observations during a Cool-Season Weather Event Using an Observing System Simulation Experiment. Part II: Forecast Assessment. Mon. Weather Rev. 2011, 139, 2327–2346.

- Coniglio, M.C.; Romine, G.S.; Turner, D.D.; Torn, R.D. Impacts of Targeted AERI and Doppler Lidar Wind Retrievals on Short-Term Forecasts of the Initiation and Early Evolution of Thunderstorms. Mon. Weather Rev. 2019, 147, 1149–1170.

- Wagner, T.J.; Klein, P.M.; Turner, D.D. A New Generation of Ground-Based Mobile Platforms for Active and Passive Profiling of the Boundary Layer. Bull. Am. Meteorol. Soc. 2019, 100, 137–153.

- Hu, J.; Yussouf, N.; Turner, D.D.; Jones, T.A.; Wang, X. Impact of Ground-Based Remote Sensing Boundary Layer Observations on Short-Term Probabilistic Forecasts of a Tornadic Supercell Event. Weather Forecast. 2019, 34, 1453–1476.

- Geerts, B.; Parsons, D.; Ziegler, C.L.; Weckwerth, T.M.; Biggerstaff, M.; Clark, R.D.; Coniglio, M.C.; Demoz, B.B.; Ferrare, R.; Gallus, W.A.; et al. The 2015 Plains Elevated Convection at Night Field Project. Bull. Am. Meteorol. Soc. 2017, 98, 767–786.

- Degelia, S.K.; Wang, X.; Stensrud, D.J. An Evaluation of the Impact of Assimilating AERI Retrievals, Kinematic Profilers, Rawinsondes, and Surface Observations on a Forecast of a Nocturnal Convection Initiation Event during the PECAN Field Campaign. Mon. Weather Rev. 2019, 147, 2739–2764.

- Stokes, G.M.; Schwartz, S.E. The Atmospheric Radiation Measurement (ARM) program: Programmatic background and design of the Cloud and Radiation Test Bed. Bull. Am. Meteorol. Soc. 1994, 75, 1201–1221.

- National Research Council. Observing Weather and Climate from the Ground Up: A Nationwide Network of Networks; National Academies Press: Washington, DC, USA, 2009; p. 250. Available online: www.nap.edu/catalog/12540 (accessed on 8 July 2020).

- Anderson, J.L.; Collins, N. Scalable Implementations of Ensemble Filter Algorithms for Data Assimilation. J. Atmos. Ocean. Technol. 2007, 24, 1452–1463.

- Anderson, J.L. An Ensemble Adjustment Kalman Filter for Data Assimilation. Mon. Weather Rev. 2001, 129, 2884–2903.

- Boukabara, S.-A.; Zhu, T.; Tolman, H.L.; Lord, S.; Goodman, S.; Atlas, R.; Goldberg, M.; Auligne, T.; Pierce, B.; Cucurull, L.; et al. S4: An O2R/R2O Infrastructure for Optimizing Satellite Data Utilization in NOAA Numerical Modeling Systems: A Step Toward Bridging the Gap between Research and Operations. Bull. Am. Meteorol. Soc. 2016, 97, 2359–2378.

- Stensrud, D.J.; Bao, J.W.; Warner, T.T. Using initial condition and model physics perturbations in short-range ensemble simulations of mesoscale convective systems. Mon. Weather Rev. 2000, 128, 2077–2107.

- Fujita, T.; Stensrud, D.J.; Dowell, D.C. Surface Data Assimilation Using an Ensemble Kalman Filter Approach with Initial Condition and Model Physics Uncertainties. Mon. Weather Rev. 2007, 135, 1846–1868.

- Ek, M.B.; Mitchell, K.E.; Lin, Y.; Rogers, E.; Grunmann, P.; Koren, V.; Gayno, G.; Tarpley, J.D. Implementation of Noah land surface model advances in the National Centers for Environmental Prediction operational mesoscale Eta model. J. Geophys. Res. 2003, 108, 8851.

- Gaspari, G.; Cohn, S.E. Construction of correlation functions in two and three dimensions. Q. J. R. Meteorol. Soc. 1999, 125, 723–757.

- Anderson, J.L. Spatially and temporally varying adaptive covariance inflation for ensemble filters. Tellus A: Dyn. Meteorol. Oceanogr. 2009, 61, 72–83.

- Turner, D.D.; Löhnert, U. Information Content and Uncertainties in Thermodynamic Profiles and Liquid Cloud Properties Retrieved from the Ground-Based Atmospheric Emitted Radiance Interferometer (AERI). J. Appl. Meteorol. Clim. 2014, 53, 752–771.

- Clough, S.; Shephard, M.; Mlawer, E.; Delamere, J.; Iacono, M.; Cady-Pereira, K.; Boukabara, S.-A.; Brown, P. Atmospheric radiative transfer modeling: A summary of the AER codes. J. Quant. Spectrosc. Radiat. Transf. 2005, 91, 233–244.

- Turner, D.D.; Knuteson, R.O.; Revercomb, H.E.; Lo, C.; Dedecker, R.G. Noise Reduction of Atmospheric Emitted Radiance Interferometer (AERI) Observations Using Principal Component Analysis. J. Atmos. Ocean. Technol. 2006, 23, 1223–1238.

- Wulfmeyer, V.; Turner, D.D.; Baker, B.; Banta, R.; Behrendt, A.; Bonin, T.; Brewer, W.; Buban, M.; Choukulkar, A.; Dumas, E.; et al. A New Research Approach for Observing and Characterizing Land-Atmosphere Feedback. Bull. Am. Meteorol. Soc. 2018, 99, 1639–1667.

- Schwartz, C.S.; Sobash, R.A. Generating Probabilistic Forecasts from Convection-Allowing Ensembles Using Neighborhood Approaches: A Review and Recommendations. Mon. Weather Rev. 2017, 145, 3397–3418.

- Roberts, N.M. Assessing the spatial and temporal variation in the skill of precipitation forecasts from an NWP model. Meteorol. Appl. 2008, 15, 163–169.

- Roberts, N.M.; Lean, H.W. Scale-Selective Verification of Rainfall Accumulations from High-Resolution Forecasts of Convective Events. Mon. Weather Rev. 2008, 136, 78–97.

- Smith, T.M.; Lakshmanan, V.; Stumpf, G.J.; Ortega, K.; Hondl, K.; Cooper, K.; Calhoun, K.M.; Kingfield, D.; Manross, K.L.; Toomey, R.; et al. Multi-Radar Multi-Sensor (MRMS) Severe Weather and Aviation Products: Initial Operating Capabilities. Bull. Am. Meteorol. Soc. 2016, 97, 1617–1630.

- Davison, A.C.; Hinkley, D.V. Bootstrap Methods and their Application. Cambridge Series in Statistical and Probabilistic Mathematics; Cambridge University Press: Cambridge, UK, 1997; p. 592.

| EnKF Members | GEFS Members (IC/BC) | Radiation Scheme (LW/SW) | Surface Layer Scheme | PBL Scheme |
|---|---|---|---|---|
| 1–9 | 1–9 | RRTM LW / Dudhia SW | MM5 | YSU |
| 10–18 | 10–18 | RRTM LW / Dudhia SW | MYNN | MYNN 2.5 |
| 19–27 | 18–10 | RRTM LW / RRTM SW | MM5 | YSU |
| 28–36 | 9–1 | RRTM LW / RRTM SW | MYNN | MYNN 2.5 |
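The member-to-configuration mapping in this table can be expressed as a small lookup. The Python sketch below is illustrative only: `member_config` and `MemberConfig` are hypothetical names, and it assumes the reversed GEFS ranges are read in order (EnKF member 19 takes GEFS member 18 and member 27 takes GEFS member 10; member 28 takes GEFS member 9 and member 36 takes GEFS member 1).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MemberConfig:
    gefs_member: int    # GEFS member supplying initial/boundary conditions
    radiation: str      # longwave / shortwave radiation schemes
    surface_layer: str
    pbl: str


# One entry per table row; each row covers a block of nine EnKF members.
_PHYSICS_BLOCKS = [
    ("RRTM LW / Dudhia SW", "MM5", "YSU"),        # members 1-9
    ("RRTM LW / Dudhia SW", "MYNN", "MYNN 2.5"),  # members 10-18
    ("RRTM LW / RRTM SW", "MM5", "YSU"),          # members 19-27
    ("RRTM LW / RRTM SW", "MYNN", "MYNN 2.5"),    # members 28-36
]


def member_config(member: int) -> MemberConfig:
    """Hypothetical helper: configuration of EnKF member 1-36 per the table."""
    if not 1 <= member <= 36:
        raise ValueError("EnKF members are numbered 1-36")
    block = (member - 1) // 9   # which table row (0-3)
    offset = (member - 1) % 9   # position inside that row's block (0-8)
    radiation, surface_layer, pbl = _PHYSICS_BLOCKS[block]
    if block == 0:
        gefs = 1 + offset       # members 1-9   -> GEFS 1-9
    elif block == 1:
        gefs = 10 + offset      # members 10-18 -> GEFS 10-18
    elif block == 2:
        gefs = 18 - offset      # members 19-27 -> GEFS 18-10 (assumed reversed order)
    else:
        gefs = 9 - offset       # members 28-36 -> GEFS 9-1 (assumed reversed order)
    return MemberConfig(gefs, radiation, surface_layer, pbl)
```

Read this way, each GEFS member 1–18 supplies initial and boundary conditions to exactly two EnKF members, and the two members in each pair differ in their radiation, surface-layer, and PBL choices.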

| Parameter | Value |
|---|---|
| Filter Type | Ensemble adjustment Kalman filter (EAKF) |
| Adaptive Inflation Parameters | 1.0 (initial mean), 0.6 (spread) |
| Inflation Damping Parameter | 0.9 |
| Outlier Threshold | 3.0 |
| Covariance Localization Type | Gaspari–Cohn |
| RAOB horizontal/vertical localization half-width (km) | 230/4 |
| ACARS horizontal/vertical localization half-width (km) | 230/4 |
| METAR horizontal/vertical localization half-width (km) | 60/4 |
| AERI horizontal/vertical localization half-width (km) | 200/4 |
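The filter settings in this table can be made concrete with a minimal Python sketch. It is not the DART code used in the study, and all function names are hypothetical. The Gaspari–Cohn expression is the standard fifth-order form from Gaspari and Cohn (1999); the sketch assumes, following DART's "cutoff" convention, that each listed half-width c is the function's half-width so that the weight reaches zero at 2c. Three further choices are assumptions in the spirit of DART's documented behavior rather than details stated here: combining the horizontal and vertical half-widths by rescaling the vertical distance and applying one function, damping inflation linearly toward 1, and rejecting an observation when |innovation| > threshold × sqrt(prior variance + observation-error variance).

```python
import math
from typing import List, Sequence


def gaspari_cohn(dist: float, half_width: float) -> float:
    """Fifth-order Gaspari-Cohn (1999) weight: 1 at zero distance, 0 beyond 2 * half_width."""
    r = abs(dist) / half_width
    if r <= 1.0:
        return -0.25 * r**5 + 0.5 * r**4 + 0.625 * r**3 - (5.0 / 3.0) * r**2 + 1.0
    if r <= 2.0:
        return (r**5 / 12.0 - 0.5 * r**4 + 0.625 * r**3 + (5.0 / 3.0) * r**2
                - 5.0 * r + 4.0 - 2.0 / (3.0 * r))
    return 0.0


def localization_weight(dh_km: float, dz_km: float,
                        h_half_width_km: float, v_half_width_km: float) -> float:
    """ASSUMPTION: rescale the vertical separation so the vertical half-width maps onto
    the horizontal one, then apply a single Gaspari-Cohn function to the 3-D distance."""
    dz_scaled = dz_km * (h_half_width_km / v_half_width_km)
    return gaspari_cohn(math.hypot(dh_km, dz_scaled), h_half_width_km)


def damp_inflation(lam: float, damping: float = 0.9) -> float:
    """Relax an inflation factor toward 1 between cycles (damping 0.9 per the table)."""
    return 1.0 + damping * (lam - 1.0)


def inflate(members: Sequence[float], lam: float) -> List[float]:
    """Prior inflation: scale deviations from the ensemble mean by sqrt(lam),
    which multiplies the ensemble variance by lam."""
    mean = sum(members) / len(members)
    scale = math.sqrt(lam)
    return [mean + scale * (x - mean) for x in members]


def passes_outlier_check(obs: float, prior_members: Sequence[float],
                         obs_error_var: float, threshold: float = 3.0) -> bool:
    """Keep an observation only if |obs - prior mean| <= threshold * expected
    innovation standard deviation (sample prior variance plus obs-error variance)."""
    n = len(prior_members)
    mean = sum(prior_members) / n
    prior_var = sum((x - mean) ** 2 for x in prior_members) / (n - 1)
    return abs(obs - mean) <= threshold * math.sqrt(prior_var + obs_error_var)
```

Under this reading, a METAR observation (60 km half-width) has no influence beyond 120 km horizontally, while an AERI retrieval (200 km) can update the state out to 400 km. An inflation value of 1.5 would be damped to 1.45 before the next cycle.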

| Simulation | 2 m Temperature (K) | 2 m Dewpoint Temperature (K) |
|---|---|---|
| CTL | 2.21 (0.69) | 1.39 (0.45) |
| AERI | 2.09 (0.61) | 1.38 (0.23) |
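This table does not name the reported statistic or say what the parenthesized values represent, so the short Python sketch here only performs the arithmetic comparison between the two rows, using the values outside the parentheses, without judging which direction is better. The helper `relative_change_percent` is a hypothetical name.

```python
def relative_change_percent(ctl: float, exp: float) -> float:
    """Percent change of the experiment value relative to the control value."""
    return 100.0 * (exp - ctl) / ctl


# (CTL, AERI) values outside the parentheses, transcribed from the table.
TABLE_VALUES = {
    "2 m temperature": (2.21, 2.09),
    "2 m dewpoint temperature": (1.39, 1.38),
}

for name, (ctl, aeri) in TABLE_VALUES.items():
    diff = aeri - ctl
    print(f"{name}: AERI - CTL = {diff:+.2f} K "
          f"({relative_change_percent(ctl, aeri):+.1f}%)")
```

The AERI value is 0.12 K (about 5.4%) lower than CTL for 2 m temperature and 0.01 K (about 0.7%) lower for 2 m dewpoint temperature.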

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Lewis, W.E.; Wagner, T.J.; Otkin, J.A.; Jones, T.A. Impact of AERI Temperature and Moisture Retrievals on the Simulation of a Central Plains Severe Convective Weather Event. Atmosphere 2020, 11, 729. https://doi.org/10.3390/atmos11070729
