Machine Learning Dynamic Ensemble Methods for Solar Irradiance and Wind Speed Predictions

Abstract

1. Introduction

2. Location and Data

2.1. Wind Speed Data

2.2. Irradiance Data

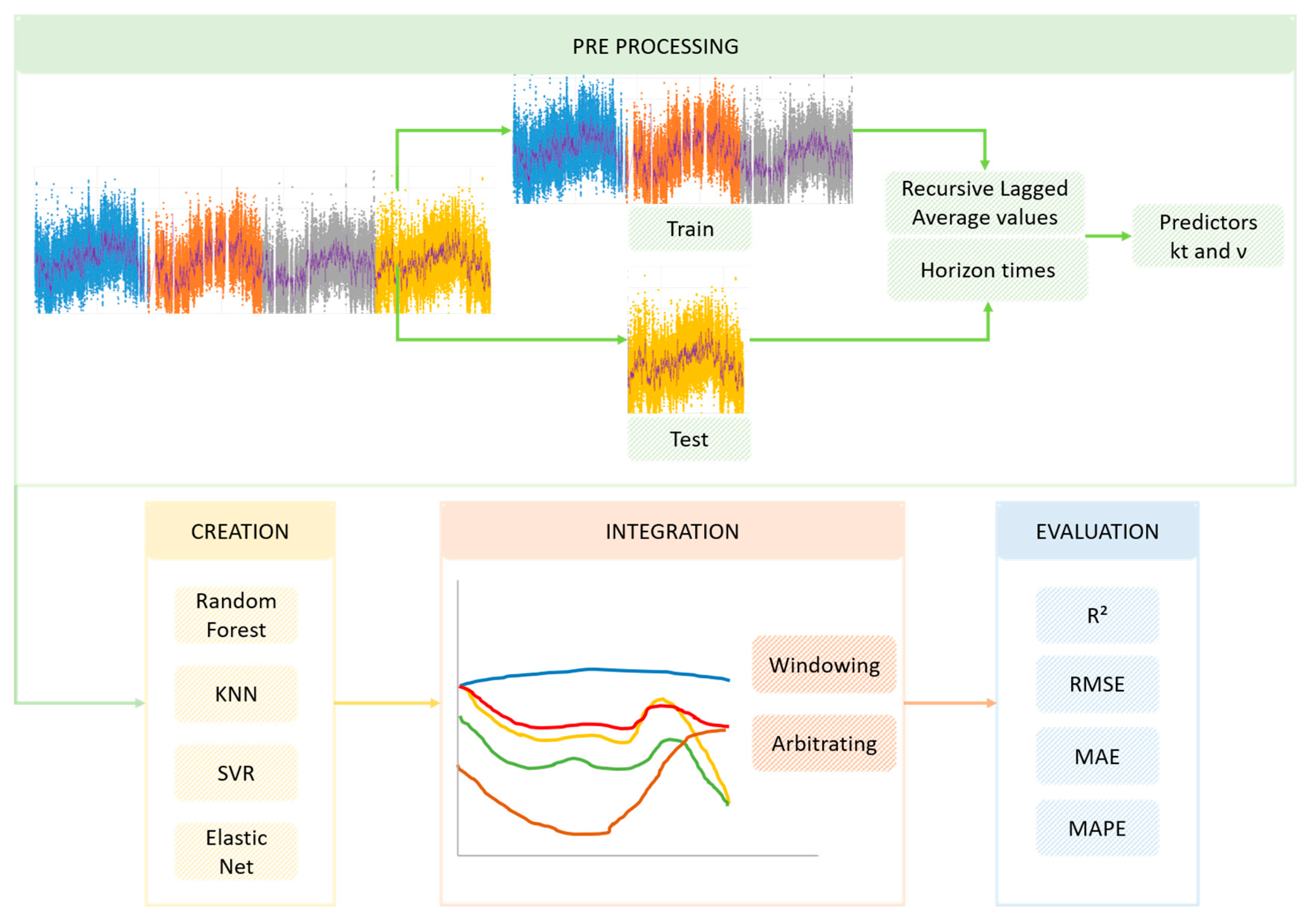

3. Methodology

3.1. Windowing Method

3.2. Arbitrating Method

3.3. Machine Learning Prediction Models and Dynamic Ensemble Method Parameters

3.4. Performance Metrics Comparison Criteria

- Coefficient of determination (R²)

- Root mean squared error (RMSE)

- Mean absolute error (MAE)

- Mean absolute percentage error (MAPE)
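The four criteria listed above can be sketched in plain Python. This is a minimal illustration using the standard textbook definitions, not the authors' implementation; the function names are hypothetical, and MAPE is returned as a fraction (not a percentage) on the assumption that this matches the scale of the values reported in the results tables.

```python
import math


def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def rmse(y_true, y_pred):
    """Root mean squared error, in the units of the target."""
    n = len(y_true)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n)


def mae(y_true, y_pred):
    """Mean absolute error, in the units of the target."""
    n = len(y_true)
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / n


def mape(y_true, y_pred):
    """Mean absolute percentage error as a fraction (assumed convention).

    Undefined when any observed value is zero.
    """
    n = len(y_true)
    return sum(abs(t - p) / abs(t) for t, p in zip(y_true, y_pred)) / n


# Illustrative values only, not data from the study.
observed = [2.0, 4.0, 6.0, 8.0]
predicted = [2.5, 3.5, 6.5, 7.5]
scores = {
    "R2": r2(observed, predicted),
    "RMSE": rmse(observed, predicted),
    "MAE": mae(observed, predicted),
    "MAPE": mape(observed, predicted),
}
```

Higher is better for R², while lower is better for RMSE, MAE and MAPE. RMSE penalises large errors more heavily than MAE, which is why the two are usually reported together.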

4. Results and Discussion

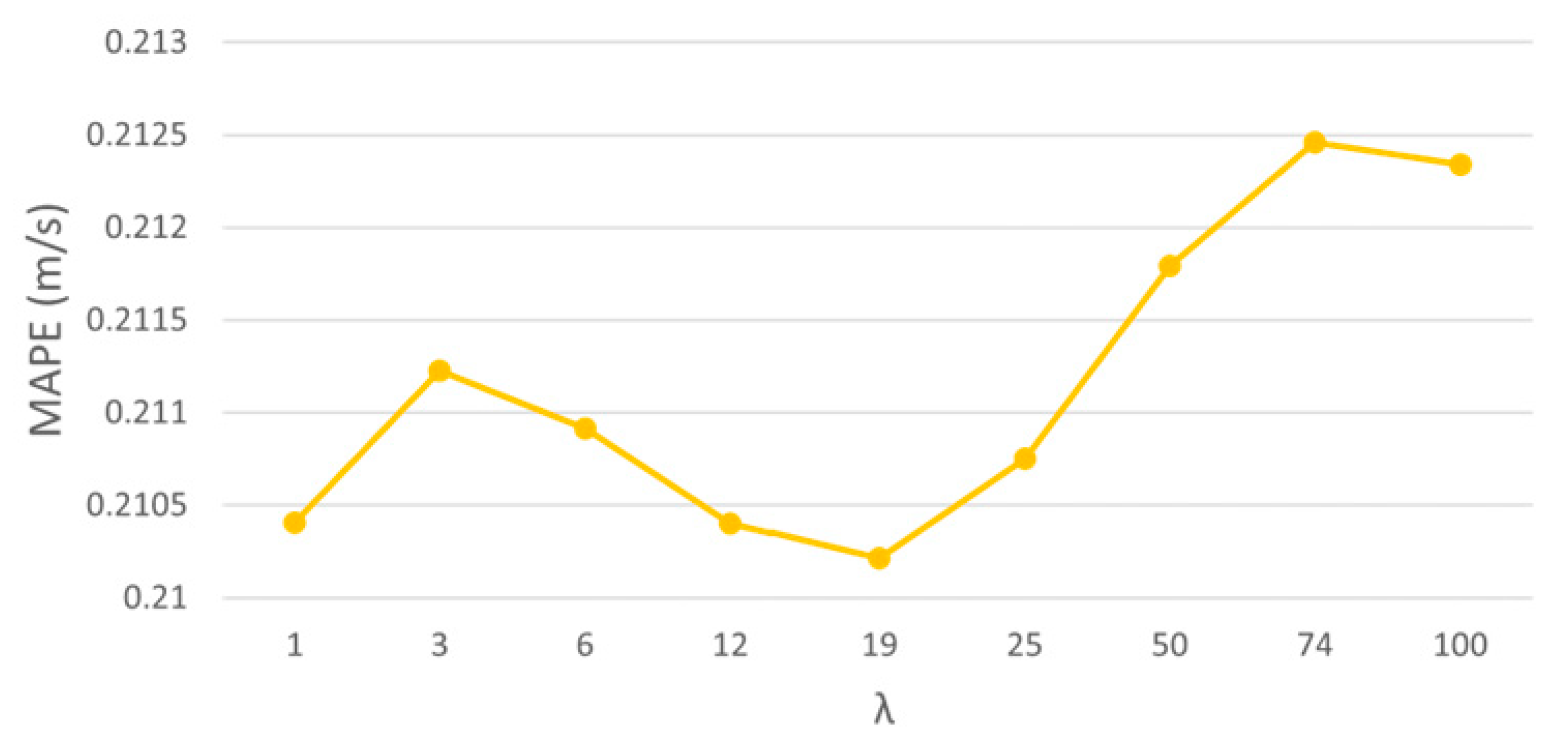

4.1. Wind Speed Predictions

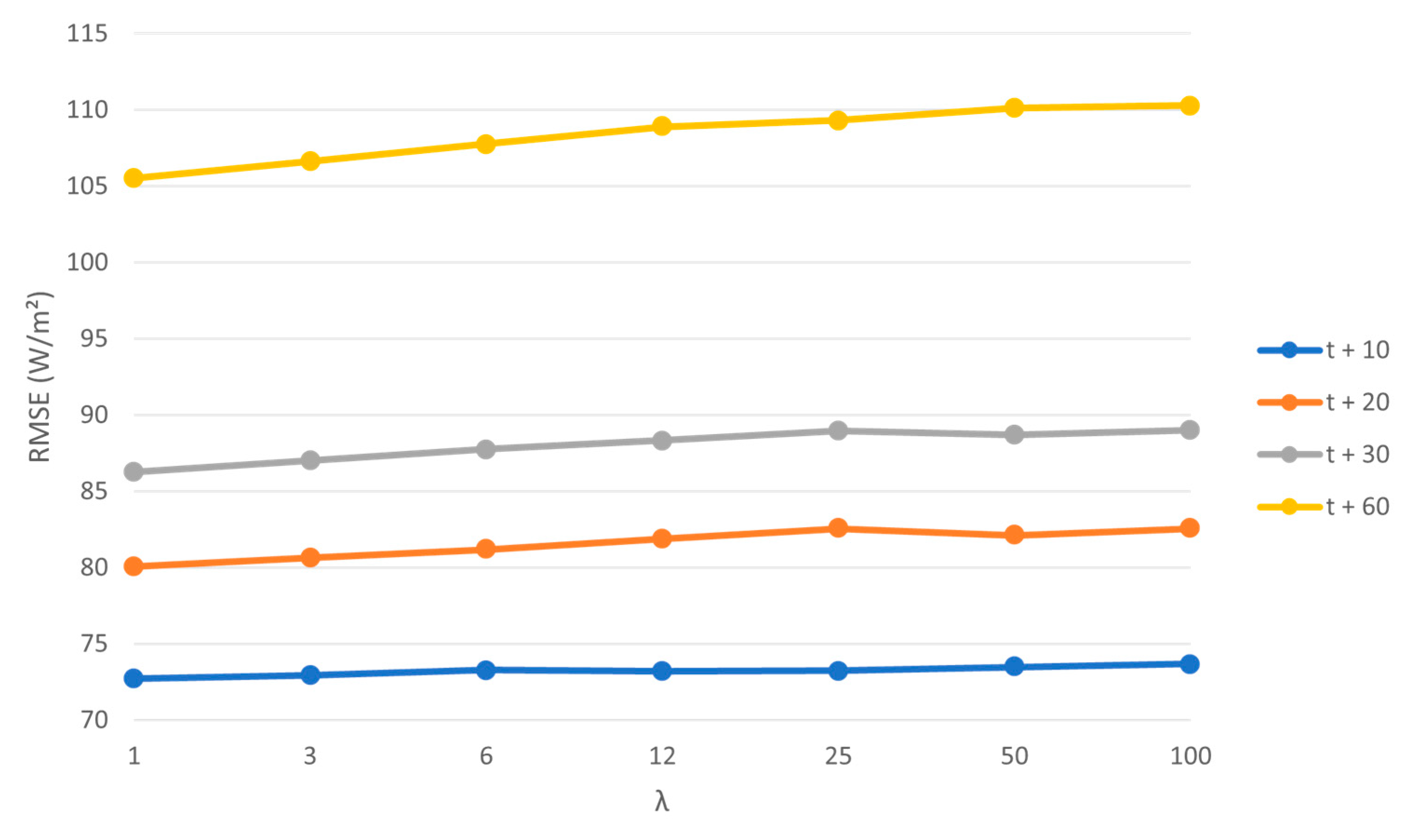

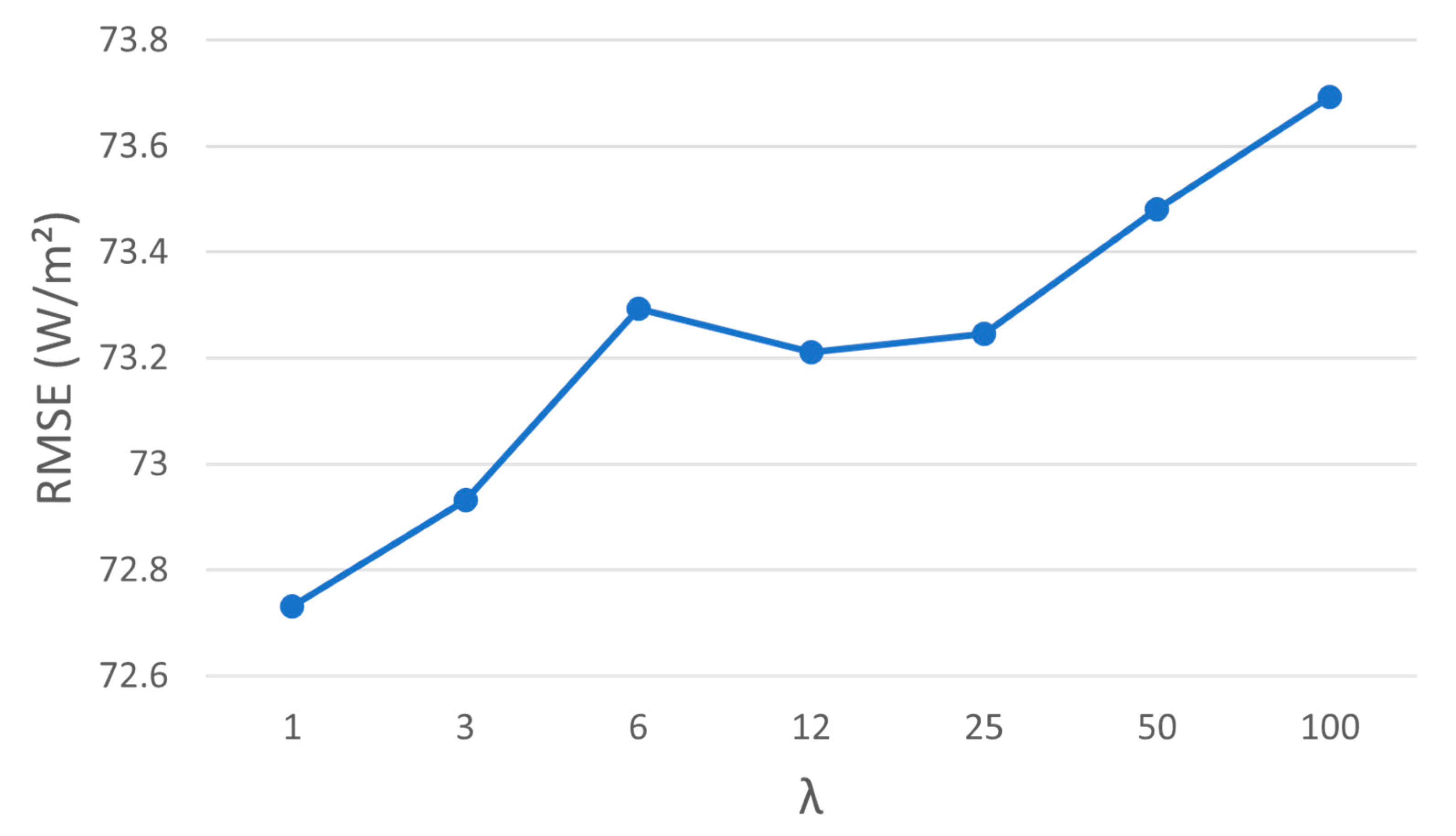

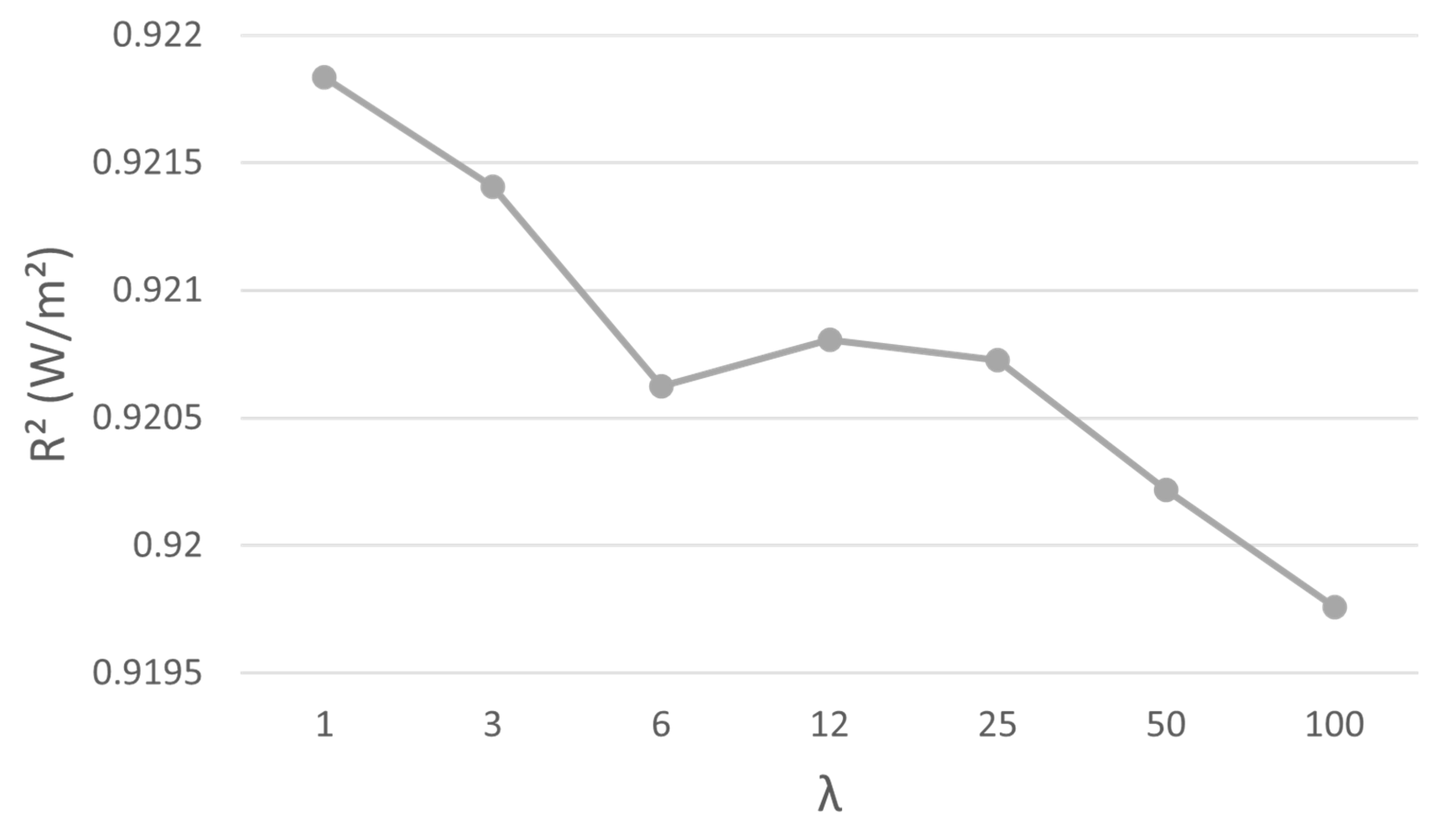

4.2. Irradiance Predictions

4.3. Comparison with Results from the Literature

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Osman, A.I.; Chen, L.; Yang, M.; Msigwa, G.; Farghali, M.; Fawzy, S.; Rooney, D.W.; Yap, P.S. Cost, environmental impact, and resilience of renewable energy under a changing climate: A review. Environ. Chem. Lett. 2022, 21, 741–764.

2. Calif, R.; Schmitt, F.G.; Duran Medina, O. −5/3 Kolmogorov turbulent behavior and Intermittent Sustainable Energies. In Sustainable Energy-Technological Issues, Applications and Case Studies; Zobaa, A., Abdel Aleem, S., Affi, S.N., Eds.; Intech: London, UK, 2016.

3. Carneiro, T.C.; de Carvalho, P.C.M.; dos Santos, H.A.; Lima, M.A.F.B.; de Souza Braga, A.P. Review on Photovoltaic Power and Solar Resource Forecasting: Current Status and Trends. J. Sol. Energy Eng. Trans. ASME 2022, 144, 010801.

4. Shikhovtsev, A.Y.; Kovadlo, P.G.; Kiselev, A.V.; Eselevich, M.V.; Lukin, V.P. Application of Neural Networks to Estimation and Prediction of Seeing at the Large Solar Telescope Site. Publ. Astron. Soc. Pac. 2023, 135, 014503.

5. Yuval, J.; O’Gorman, P.A. Neural-Network Parameterization of Subgrid Momentum Transport in the Atmosphere. J. Adv. Model. Earth Syst. 2023, 15, e2023MS003606.

6. Meenal, R.; Binu, D.; Ramya, K.C.; Michael, P.A.; Vinoth Kumar, K.; Rajasekaran, E.; Sangeetha, B. Weather Forecasting for Renewable Energy System: A Review. Arch. Comput. Methods Eng. 2022, 29, 2875–2891.

7. Mesa-Jiménez, J.J.; Tzianoumis, A.L.; Stokes, L.; Yang, Q.; Livina, V.N. Long-term wind and solar energy generation forecasts, and optimisation of Power Purchase Agreements. Energy Rep. 2023, 9, 292–302.

8. Rocha, P.A.C.; Fernandes, J.L.; Modolo, A.B.; Lima, R.J.P.; da Silva, M.E.V.; Bezerra, C.A.D. Estimation of daily, weekly and monthly global solar radiation using ANNs and a long data set: A case study of Fortaleza, in Brazilian Northeast region. Int. J. Energy Environ. Eng. 2019, 10, 319–334.

9. Du, L.; Gao, R.; Suganthan, P.N.; Wang, D.Z.W. Bayesian optimization based dynamic ensemble for time series forecasting. Inf. Sci. 2022, 591, 155–175.

10. Vapnik, V.N. Adaptive and Learning Systems for Signal Processing, Communications and Control. In The Nature of Statistical Learning Theory; Springer: Berlin/Heidelberg, Germany, 1995.

11. Smola, A. Regression Estimation with Support Vector Learning Machines. Master’s Thesis, Technische Universität München, Munich, Germany, 1996.

12. Mahesh, P.V.; Meyyappan, S.; Alia, R.K.R. Support Vector Regression Machine Learning based Maximum Power Point Tracking for Solar Photovoltaic systems. Int. J. Electr. Comput. Eng. Syst. 2023, 14, 100–108.

13. Demir, V.; Citakoglu, H. Forecasting of solar radiation using different machine learning approaches. Neural Comput. Appl. 2023, 35, 887–906.

14. Schwegmann, S.; Faulhaber, J.; Pfaffel, S.; Yu, Z.; Dörenkämper, M.; Kersting, K.; Gottschall, J. Enabling Virtual Met Masts for wind energy applications through machine learning-methods. Energy AI 2023, 11, 100209.

15. Che, J.; Yuan, F.; Deng, D.; Jiang, Z. Ultra-short-term probabilistic wind power forecasting with spatial-temporal multi-scale features and K-FSDW based weight. Appl. Energy 2023, 331, 120479.

16. Nikodinoska, D.; Käso, M.; Müsgens, F. Solar and wind power generation forecasts using elastic net in time-varying forecast combinations. Appl. Energy 2022, 306, 117983.

17. Cerqueira, V.; Torgo, L.; Pinto, F.; Soares, C. Arbitrage of forecasting experts. Mach. Learn. 2019, 108, 913–944.

18. Lakku, N.K.G.; Behera, M.R. Skill and Intercomparison of Global Climate Models in Simulating Wind Speed, and Future Changes in Wind Speed over South Asian Domain. Atmosphere 2022, 13, 864.

19. Ji, L.; Fu, C.; Ju, Z.; Shi, Y.; Wu, S.; Tao, L. Short-Term Canyon Wind Speed Prediction Based on CNN-GRU Transfer Learning. Atmosphere 2022, 13, 813.

20. Su, X.; Li, T.; An, C.; Wang, G. Prediction of short-time cloud motion using a deep-learning model. Atmosphere 2020, 11, 1151.

21. Carneiro, T.C.; Rocha, P.A.C.; Carvalho, P.C.M.; Fernández-Ramírez, L.M. Ridge regression ensemble of machine learning models applied to solar and wind forecasting in Brazil and Spain. Appl. Energy 2022, 314, 118936.

22. Santos, V.O.; Rocha, P.A.C.; Scott, J.; Thé, J.V.G.; Gharabaghi, B. Spatiotemporal analysis of bidimensional wind speed forecasting: Development and thorough assessment of LSTM and ensemble graph neural networks on the Dutch database. Energy 2023, 278, 127852.

23. Marinho, F.P.; Rocha, P.A.C.; Neto, A.R.; Bezerra, F.D.V. Short-Term Solar Irradiance Forecasting Using CNN-1D, LSTM, and CNN-LSTM Deep Neural Networks: A Case Study with the Folsom (USA) Dataset. J. Sol. Energy Eng. Trans. ASME 2023, 145, 041002.

24. Wu, Q.; Zheng, H.; Guo, X.; Liu, G. Promoting wind energy for sustainable development by precise wind speed prediction based on graph neural networks. Renew. Energy 2022, 199, 977–992.

25. Oliveira Santos, V.; Costa Rocha, P.A.; Thé, J.V.G.; Gharabaghi, B. Graph-Based Deep Learning Model for Forecasting Chloride Concentration in Urban Streams to Protect Salt-Vulnerable Areas. Environments 2023, 10, 157.

26. Tabrizi, S.E.; Xiao, K.; van Griensven Thé, J.; Saad, M.; Farghaly, H.; Yang, S.X.; Gharabaghi, B. Hourly road pavement surface temperature forecasting using deep learning models. J. Hydrol. 2021, 603, 126877.

27. Zhang, Y.; Gu, Z.; Thé, J.V.G.; Yang, S.X.; Gharabaghi, B. The Discharge Forecasting of Multiple Monitoring Station for Humber River by Hybrid LSTM Models. Water 2022, 14, 1794.

28. INPE. SONDA - Sistema de Organização Nacional de Dados Ambientais. 2012. Available online: http://sonda.ccst.inpe.br/ (accessed on 26 September 2023).

29. GOOGLE. Google Earth Website. Available online: http://earth.google.com/ (accessed on 12 July 2023).

30. Peel, M.C.; Finlayson, B.L.; McMahon, T.A. Updated world map of the Köppen-Geiger climate classification. Hydrol. Earth Syst. Sci. 2007, 11, 1633–1644.

31. Landberg, L.; Myllerup, L.; Rathmann, O.; Petersen, E.L.; Jørgensen, B.H.; Badger, J.; Mortensen, N.G. Wind resource estimation - An overview. Wind Energy 2003, 6, 261–271.

32. Kasten, F.; Czeplak, G. Solar and terrestrial radiation dependent on the amount and type of cloud. Sol. Energy 1980, 24, 177–189.

33. Ineichen, P.; Perez, R. A new airmass independent formulation for the Linke turbidity coefficient. Sol. Energy 2002, 73, 151–157.

34. Marquez, R.; Coimbra, C.F.M. Proposed metric for evaluation of solar forecasting models. J. Sol. Energy Eng. Trans. ASME 2013, 135, 011016.

35. Rocha, P.A.C.; Santos, V.O. Global horizontal and direct normal solar irradiance modeling by the machine learning methods XGBoost and deep neural networks with CNN-LSTM layers: A case study using the GOES-16 satellite imagery. Int. J. Energy Environ. Eng. 2022, 13, 1271–1286.

36. Cerqueira, V.; Torgo, L.; Soares, C. Arbitrated ensemble for solar radiation forecasting. In Advances in Computational Intelligence; Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2017.

37. Neshat, M.; Nezhad, M.M.; Abbasnejad, E.; Mirjalili, S.; Tjernberg, L.B.; Astiaso Garcia, D.; Alexander, B.; Wagner, M. A deep learning-based evolutionary model for short-term wind speed forecasting: A case study of the Lillgrund offshore wind farm. Energy Convers. Manag. 2021, 236, 114002.

38. Dowell, J.; Weiss, S.; Infield, D. Spatio-temporal prediction of wind speed and direction by continuous directional regime. In Proceedings of the 2014 International Conference on Probabilistic Methods Applied to Power Systems, PMAPS 2014, Durham, UK, 7–10 July 2014.

39. Liu, Z.; Hara, R.; Kita, H. Hybrid forecasting system based on data area division and deep learning neural network for short-term wind speed forecasting. Energy Convers. Manag. 2021, 238, 114136.

40. Zhu, Q.; Chen, J.; Shi, D.; Zhu, L.; Bai, X.; Duan, X.; Liu, Y. Learning Temporal and Spatial Correlations Jointly: A Unified Framework for Wind Speed Prediction. IEEE Trans. Sustain. Energy 2020, 11, 509–523.

41. Gupta, P.; Singh, R. Combining a deep learning model with multivariate empirical mode decomposition for hourly global horizontal irradiance forecasting. Renew. Energy 2023, 206, 908–927.

42. Yang, L.; Gao, X.; Hua, J.; Wang, L. Intra-day global horizontal irradiance forecast using FY-4A clear sky index. Sustain. Energy Technol. Assess. 2022, 50, 101816.

43. Kallio-Myers, V.; Riihelä, A.; Lahtinen, P.; Lindfors, A. Global horizontal irradiance forecast for Finland based on geostationary weather satellite data. Sol. Energy 2020, 198, 68–80.

44. Liu, J.; Zang, H.; Cheng, L.; Ding, T.; Wei, Z.; Sun, G. A Transformer-based multimodal-learning framework using sky images for ultra-short-term solar irradiance forecasting. Appl. Energy 2023, 342, 121160.

45. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

46. Peng, Z.; Peng, S.; Fu, L.; Lu, B.; Tang, J.; Wang, K.; Li, W. A novel deep learning ensemble model with data denoising for short-term wind speed forecasting. Energy Convers. Manag. 2020, 207, 112524.

47. Abdellatif, A.; Mubarak, H.; Ahmad, S.; Ahmed, T.; Shafiullah, G.M.; Hammoudeh, A.; Abdellatef, H.; Rahman, M.M.; Gheni, H.M. Forecasting Photovoltaic Power Generation with a Stacking Ensemble Model. Sustainability 2022, 14, 11083.

48. Wu, H.; Levinson, D. The ensemble approach to forecasting: A review and synthesis. Transp. Res. Part C Emerg. Technol. 2021, 132, 103357.

49. Ghojogh, B.; Crowley, M. The Theory behind Overfitting, Cross Validation, Regularization, Bagging and Boosting: Tutorial. arXiv 2023, arXiv:1905.12787.

50. Chen, T.; Guestrin, C. XGBoost: A scalable tree boosting system. In Proceedings of the ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 14–18 August 2016.

51. Oliveira Santos, V.; Costa Rocha, P.A.; Scott, J.; Van Griensven Thé, J.; Gharabaghi, B. Spatiotemporal Air Pollution Forecasting in Houston-TX: A Case Study for Ozone Using Deep Graph Neural Networks. Atmosphere 2023, 14, 308.

52. Oliveira Santos, V.; Costa Rocha, P.A.; Scott, J.; Thé, J.V.G.; Gharabaghi, B. A New Graph-Based Deep Learning Model to Predict Flooding with Validation on a Case Study on the Humber River. Water 2023, 15, 1827.

| Type | Lat. (°) | Long. (°) | Alt. (m) | MI (min) | MP |
|---|---|---|---|---|---|
| Anemometric | 09°04′08″ S | 40°19′11″ W | 387 | 10 | 1 January 2007 to 12 December 2010 |
| Solarimetric | | | | | 1 January 2010 to 12 December 2010 |

| Method | Search Parameter | Grid Values |

|---|---|---|

| Random forest | maxdepth | [2, 5, 7, 9, 11, 13, 15, 21, 35] |

| KNN | nearest neighbours k | 1 ≤ k ≤ 50, k integer |

| SVR | penalty term C | [0.1, 1, 10, 100, 1000] |

| coefficient λ | [1, 0.1. 0.01, 0.001, 0.0001] | |

| Elastic net | regularization term λ | [1, 0.1. 0.01, 0.001, 0.0001] |

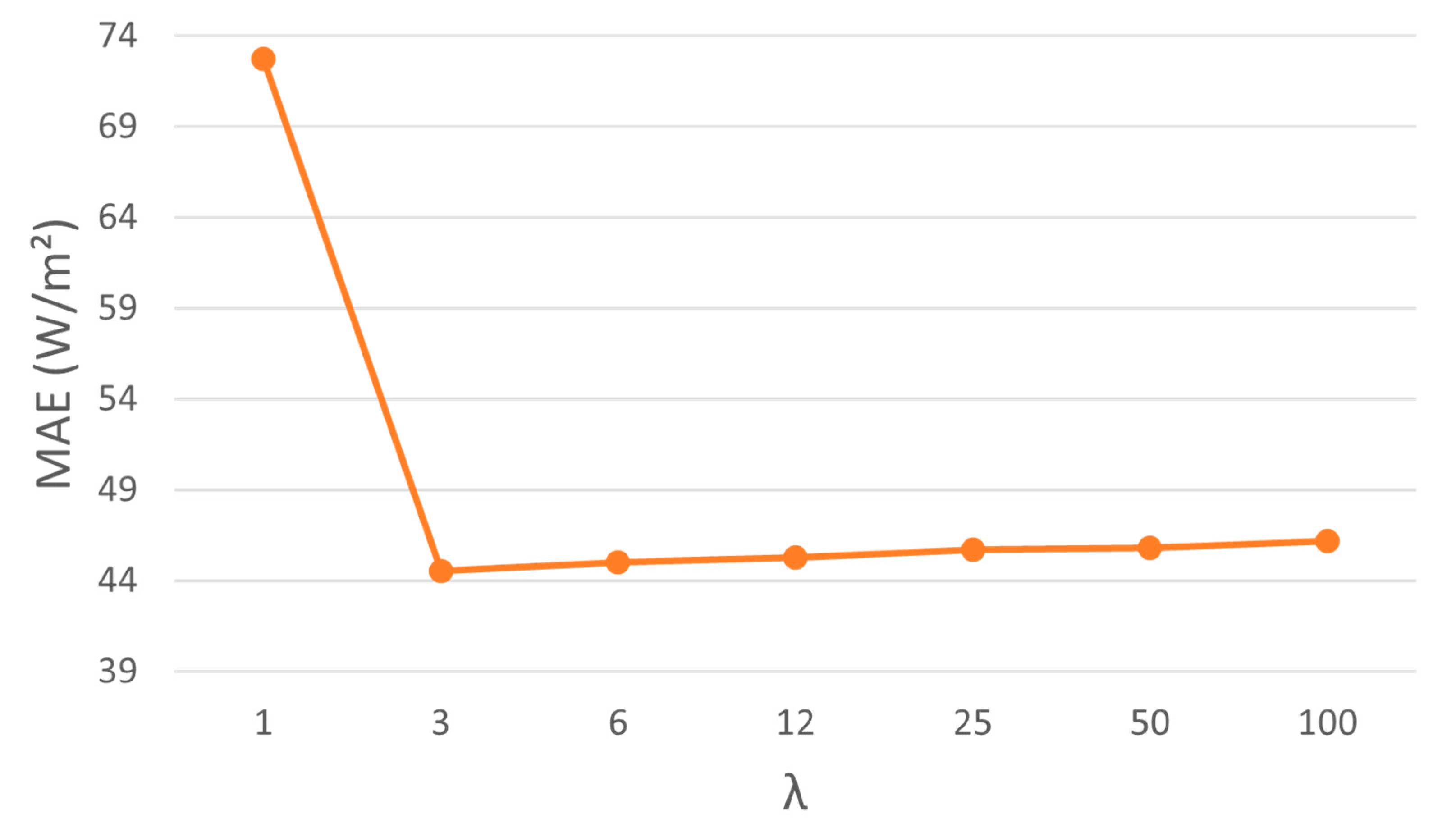

| Windowing | Λ | [1, 3, 6, 12, 25, 50, 100] |

| Arbitrating | * | |
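To make the role of the windowing parameter concrete, the following minimal sketch combines base-model forecasts using weights computed from each model's errors over the most recent observations. It rests on assumptions not confirmed by the text here: the window size `lam` is taken to be the number of past observations considered (the Λ grid above), and the weights are taken to be proportional to the inverse mean squared error in that window. The function name, the weighting rule, and the sample values are hypothetical; the study's own weighting scheme may differ.

```python
def windowing_forecast(history_true, history_preds, next_preds, lam, eps=1e-12):
    """Combine base-model forecasts with weights from the last `lam` errors.

    history_true  : list of past observed values
    history_preds : dict mapping model name -> list of past predictions,
                    aligned with history_true
    next_preds    : dict mapping model name -> prediction for the next step
    lam           : window size (assumed: number of most recent observations)
    """
    window_true = history_true[-lam:]
    inverse_mse = {}
    for name, preds in history_preds.items():
        window_pred = preds[-lam:]
        mse = sum((t - p) ** 2 for t, p in zip(window_true, window_pred)) / len(window_true)
        # Assumed rule: weight proportional to 1 / MSE; eps avoids division by zero.
        inverse_mse[name] = 1.0 / (mse + eps)
    total = sum(inverse_mse.values())
    weights = {name: value / total for name, value in inverse_mse.items()}
    combined = sum(weights[name] * next_preds[name] for name in next_preds)
    return combined, weights


# Illustrative values only, not data from the study.
observed = [1.0, 2.0, 3.0]
past = {"model_a": [1.0, 2.0, 3.5], "model_b": [2.0, 3.0, 4.0]}
upcoming = {"model_a": 4.0, "model_b": 5.0}
forecast, weights = windowing_forecast(observed, past, upcoming, lam=1)
```

Under this assumed rule, a small window reacts quickly to recent changes in model accuracy, while a large window gives smoother weights that approach a static combination.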

| Method | Parameter | t + 10 | t + 20 | t + 30 | t + 60 |
|---|---|---|---|---|---|
| Random forest | best_max_depth | 7 | 7 | 7 | 7 |
| | best_n_estimators | 20 | 20 | 20 | 20 |
| KNN | best_n_neighbors | 49 | 49 | 49 | 49 |
| SVR | best_C | 1 | 1 | 1 | 1 |
| | best_epsilon | 0.1 | 0.1 | 1 | 0.1 |
| Elastic net | best_l1_ratio | 1 | 1 | 1 | 1 |

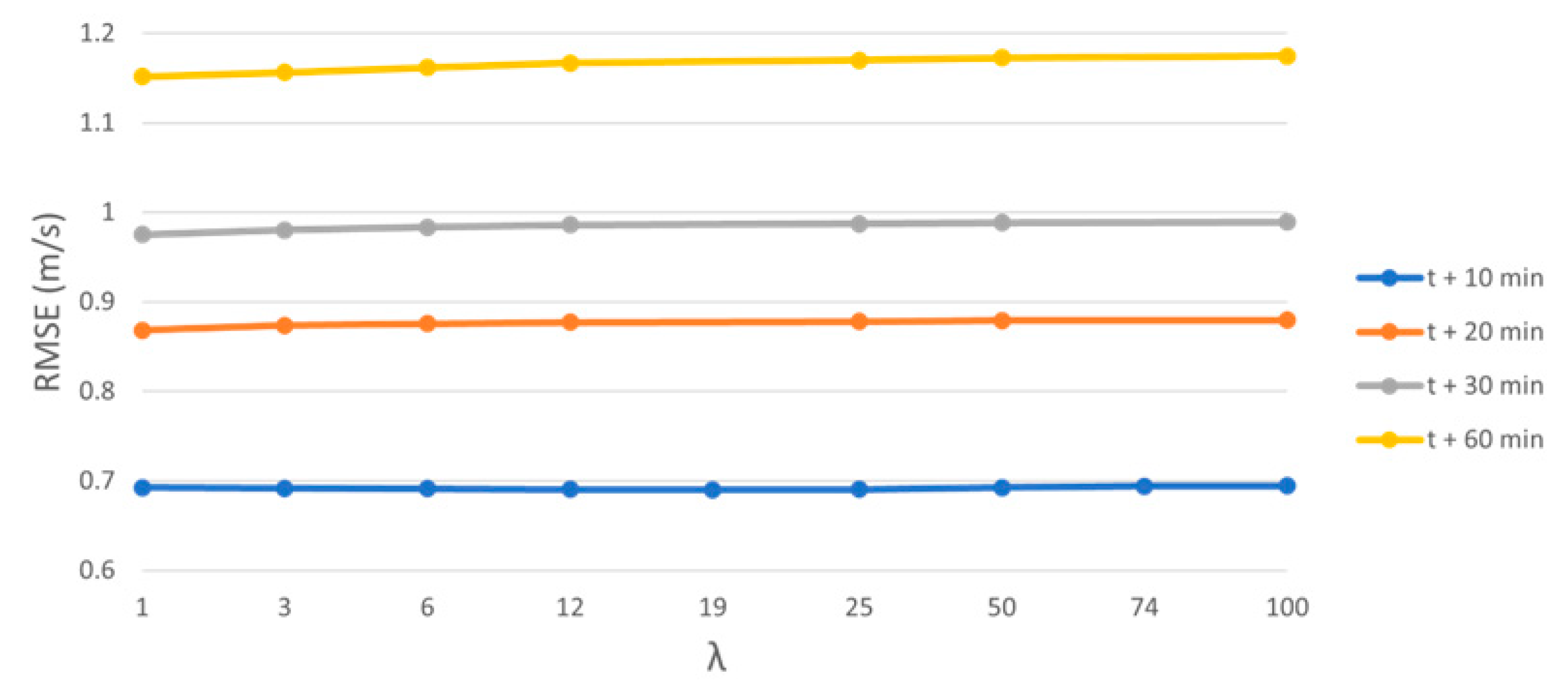

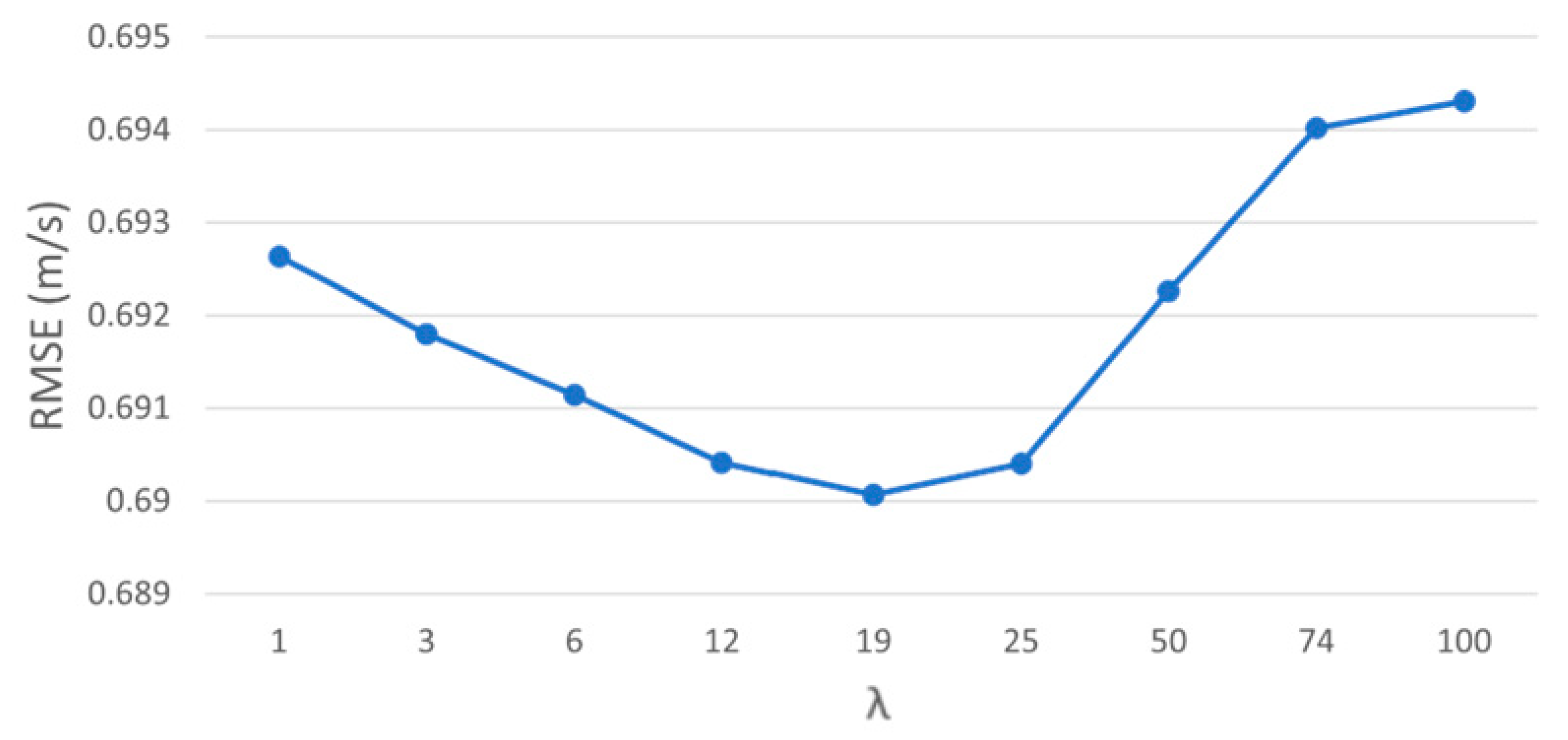

| Time Horizon | λ | RF | KNN | SVR | Elastic Net | Windowing | Arbitrating |
|---|---|---|---|---|---|---|---|
| t + 10 min | 1 | 0.69458 | 0.71040 | 0.69396 | 0.69828 | 0.69263 | 0.69447 |
| | 3 | | | | | 0.69180 | |
| | 6 | | | | | 0.69114 | |
| | 12 | | | | | 0.69041 | |
| | 19 | | | | | 0.69007 | |
| | 25 | | | | | 0.69040 | |
| | 50 | | | | | 0.69226 | |
| | 74 | | | | | 0.69402 | |
| | 100 | | | | | 0.69431 | |
| t + 20 min | 1 | 0.88310 | 0.89332 | 0.88372 | 0.88554 | 0.86817 | 0.88315 |
| | 3 | | | | | 0.87353 | |
| | 6 | | | | | 0.87563 | |
| | 12 | | | | | 0.87699 | |
| | 25 | | | | | 0.87803 | |
| | 50 | | | | | 0.87889 | |
| | 100 | | | | | 0.87960 | |
| t + 30 min | 1 | 0.99469 | 0.99859 | 0.99130 | 0.99660 | 0.97497 | 0.99091 |
| | 3 | | | | | 0.98017 | |
| | 6 | | | | | 0.98333 | |
| | 12 | | | | | 0.98583 | |
| | 25 | | | | | 0.98702 | |
| | 50 | | | | | 0.98832 | |
| | 100 | | | | | 0.98902 | |
| t + 60 min | 1 | 1.18092 | 1.19527 | 1.17764 | 1.18281 | 1.15150 | 1.18156 |
| | 3 | | | | | 1.15647 | |
| | 6 | | | | | 1.16170 | |
| | 12 | | | | | 1.16685 | |
| | 25 | | | | | 1.16987 | |
| | 50 | | | | | 1.17254 | |
| | 100 | | | | | 1.17455 | |

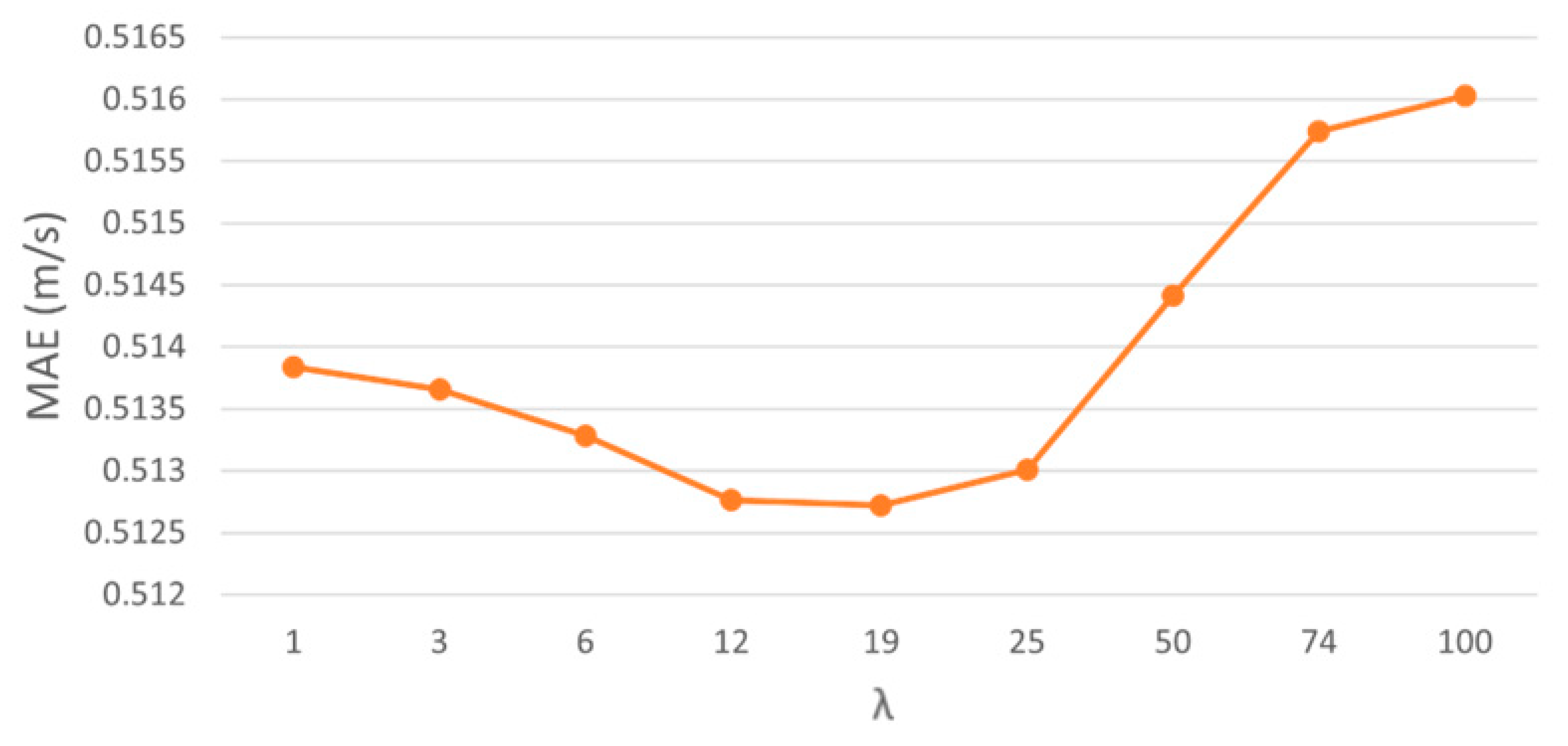

| Time Horizon | λ | RF | KNN | SVR | Elastic Net | Windowing | Arbitrating |
|---|---|---|---|---|---|---|---|
| t + 10 min | 1 | 0.51592 | 0.53216 | 0.51438 | 0.51853 | 0.51384 | 0.51711 |
| | 3 | | | | | 0.51366 | |
| | 6 | | | | | 0.51328 | |
| | 12 | | | | | 0.51276 | |
| | 19 | | | | | 0.51272 | |
| | 25 | | | | | 0.51301 | |
| | 50 | | | | | 0.51441 | |
| | 74 | | | | | 0.51574 | |
| | 100 | | | | | 0.51603 | |
| t + 20 min | 1 | 0.65845 | 0.66882 | 0.66040 | 0.65990 | 0.64663 | 0.65936 |
| | 3 | | | | | 0.65140 | |
| | 6 | | | | | 0.65332 | |
| | 12 | | | | | 0.65435 | |
| | 25 | | | | | 0.65554 | |
| | 50 | | | | | 0.65637 | |
| | 100 | | | | | 0.65695 | |
| t + 30 min | 1 | 0.74250 | 0.74735 | 0.74125 | 0.74347 | 0.72594 | 0.74097 |
| | 3 | | | | | 0.73105 | |
| | 6 | | | | | 0.73402 | |
| | 12 | | | | | 0.73625 | |
| | 25 | | | | | 0.73732 | |
| | 50 | | | | | 0.73846 | |
| | 100 | | | | | 0.73902 | |
| t + 60 min | 1 | 0.89496 | 0.90753 | 0.89179 | 0.89589 | 0.86784 | 0.89570 |
| | 3 | | | | | 0.87277 | |
| | 6 | | | | | 0.87826 | |
| | 12 | | | | | 0.88307 | |
| | 25 | | | | | 0.88580 | |
| | 50 | | | | | 0.88813 | |
| | 100 | | | | | 0.88963 | |

| Time Horizon | λ | RF | KNN | SVR | Elastic Net | Windowing | Arbitrating |
|---|---|---|---|---|---|---|---|
| t + 10 min | 1 | 0.84248 | 0.83522 | 0.84275 | 0.84079 | 0.84336 | 0.84252 |
| | 3 | | | | | 0.84373 | |
| | 6 | | | | | 0.84403 | |
| | 12 | | | | | 0.84436 | |
| | 19 | | | | | 0.84451 | |
| | 25 | | | | | 0.84436 | |
| | 50 | | | | | 0.84353 | |
| | 74 | | | | | 0.84273 | |
| | 100 | | | | | 0.84260 | |
| t + 20 min | 1 | 0.74534 | 0.73941 | 0.74498 | 0.74393 | 0.75388 | 0.74531 |
| | 3 | | | | | 0.75083 | |
| | 6 | | | | | 0.74963 | |
| | 12 | | | | | 0.74885 | |
| | 25 | | | | | 0.74825 | |
| | 50 | | | | | 0.74776 | |
| | 100 | | | | | 0.74736 | |
| t + 30 min | 1 | 0.67690 | 0.67436 | 0.67909 | 0.67566 | 0.68958 | 0.67935 |
| | 3 | | | | | 0.68626 | |
| | 6 | | | | | 0.68423 | |
| | 12 | | | | | 0.68262 | |
| | 25 | | | | | 0.68186 | |
| | 50 | | | | | 0.68102 | |
| | 100 | | | | | 0.68057 | |
| t + 60 min | 1 | 0.54443 | 0.53329 | 0.54695 | 0.54297 | 0.56685 | 0.54393 |
| | 3 | | | | | 0.56310 | |
| | 6 | | | | | 0.55914 | |
| | 12 | | | | | 0.55522 | |
| | 25 | | | | | 0.55291 | |
| | 50 | | | | | 0.55087 | |
| | 100 | | | | | 0.54933 | |

| Time Horizon | λ | RF | KNN | SVR | Elastic Net | Windowing | Arbitrating |
|---|---|---|---|---|---|---|---|
| t + 10 min | 1 | 0.21277 | 0.25360 | 0.20257 | 0.21848 | 0.21040 | 0.21634 |
| | 3 | | | | | 0.21122 | |
| | 6 | | | | | 0.21092 | |
| | 12 | | | | | 0.21040 | |
| | 19 | | | | | 0.21022 | |
| | 25 | | | | | 0.21075 | |
| | 50 | | | | | 0.21179 | |
| | 74 | | | | | 0.21246 | |
| | 100 | | | | | 0.21234 | |
| t + 20 min | 1 | 0.31534 | 0.33823 | 0.34178 | 0.31206 | 0.31280 | 0.32577 |
| | 3 | | | | | 0.31558 | |
| | 6 | | | | | 0.31658 | |
| | 12 | | | | | 0.31745 | |
| | 25 | | | | | 0.31906 | |
| | 50 | | | | | 0.31990 | |
| | 100 | | | | | 0.32101 | |
| t + 30 min | 1 | 0.38089 | 0.39786 | 0.37520 | 0.37064 | 0.36711 | 0.38499 |
| | 3 | | | | | 0.36968 | |
| | 6 | | | | | 0.37245 | |
| | 12 | | | | | 0.37227 | |
| | 25 | | | | | 0.37367 | |
| | 50 | | | | | 0.37352 | |
| | 100 | | | | | 0.37538 | |
| t + 60 min | 1 | 0.52320 | 0.53567 | 0.51731 | 0.51284 | 0.50552 | 0.52440 |
| | 3 | | | | | 0.50730 | |
| | 6 | | | | | 0.51189 | |
| | 12 | | | | | 0.51289 | |
| | 25 | | | | | 0.51480 | |
| | 50 | | | | | 0.51571 | |
| | 100 | | | | | 0.51872 | |

| Method | Parameter | t + 10 | t + 20 | t + 30 | t + 60 |
|---|---|---|---|---|---|
| Random forest | best_max_depth | 5 | 5 | 5 | 5 |
| | best_n_estimators | 20 | 20 | 20 | 20 |
| KNN | best_n_neighbors | 37 | 37 | 49 | 48 |
| SVR | best_C | 0.1 | 0.1 | 0.1 | 0.1 |
| | best_epsilon | 0.1 | 0.1 | 0.1 | 0.1 |
| Elastic net | best_l1_ratio | 1 | 1 | 1 | 1 |

| Time Horizon | λ | RF | KNN | SVR | Elastic Net | Windowing | Arbitrating |
|---|---|---|---|---|---|---|---|
| t + 10 min | 1 | 75.02000 | 75.26000 | 74.19000 | 74.98000 | 72.73186 | 74.01000 |
| | 3 | | | | | 72.93221 | |
| | 6 | | | | | 73.29363 | |
| | 12 | | | | | 73.21035 | |
| | 25 | | | | | 73.24620 | |
| | 50 | | | | | 73.48055 | |
| | 100 | | | | | 73.69330 | |
| t + 20 min | 1 | 90.94000 | 83.50000 | 84.45000 | 84.53000 | 80.07000 | 83.19000 |
| | 3 | | | | | 80.63000 | |
| | 6 | | | | | 81.19000 | |
| | 12 | | | | | 81.87000 | |
| | 25 | | | | | 82.56000 | |
| | 50 | | | | | 82.11000 | |
| | 100 | | | | | 82.57000 | |
| t + 30 min | 1 | 90.15000 | 90.50000 | 91.49000 | 93.49000 | 86.25000 | 89.70000 |
| | 3 | | | | | 87.00000 | |
| | 6 | | | | | 87.75000 | |
| | 12 | | | | | 88.33000 | |
| | 25 | | | | | 88.95000 | |
| | 50 | | | | | 88.70000 | |
| | 100 | | | | | 89.01000 | |
| t + 60 min | 1 | 112.05000 | 112.13000 | 112.76000 | 118.08000 | 105.51000 | 111.13000 |
| | 3 | | | | | 106.62000 | |
| | 6 | | | | | 107.76000 | |
| | 12 | | | | | 108.89000 | |
| | 25 | | | | | 109.32000 | |
| | 50 | | | | | 110.12000 | |
| | 100 | | | | | 110.30000 | |

| Time Horizon | λ | RF | KNN | SVR | Elastic Net | Windowing | Arbitrating |
|---|---|---|---|---|---|---|---|
| t + 10 min | 1 | 0.92000 | 0.92000 | 0.92000 | 0.92000 | 0.92184 | 0.92000 |
| | 3 | | | | | 0.92141 | |
| | 6 | | | | | 0.92062 | |
| | 12 | | | | | 0.92080 | |
| | 25 | | | | | 0.92073 | |
| | 50 | | | | | 0.92022 | |
| | 100 | | | | | 0.91976 | |
| t + 20 min | 1 | 0.88000 | 0.90000 | 0.90000 | 0.90000 | 0.91000 | 0.90000 |
| | 3 | | | | | 0.91000 | |
| | 6 | | | | | 0.90000 | |
| | 12 | | | | | 0.90000 | |
| | 25 | | | | | 0.90000 | |
| | 50 | | | | | 0.90000 | |
| | 100 | | | | | 0.90000 | |
| t + 30 min | 1 | 0.88000 | 0.88000 | 0.88000 | 0.87000 | 0.89000 | 0.88000 |
| | 3 | | | | | 0.89000 | |
| | 6 | | | | | 0.89000 | |
| | 12 | | | | | 0.89000 | |
| | 25 | | | | | 0.89000 | |
| | 50 | | | | | 0.88000 | |
| | 100 | | | | | 0.89000 | |
| t + 60 min | 1 | 0.83000 | 0.83000 | 0.82000 | 0.51223 | 0.85000 | 0.83000 |
| | 3 | | | | | 0.84000 | |
| | 6 | | | | | 0.84000 | |
| | 12 | | | | | 0.84000 | |
| | 25 | | | | | 0.83000 | |
| | 50 | | | | | 0.83000 | |
| | 100 | | | | | 0.83000 | |

| Time Horizon | λ | RF | KNN | SVR | Elastic Net | Windowing | Arbitrating |
|---|---|---|---|---|---|---|---|
| t + 10 min | 1 | 48.29000 | 48.47000 | 44.16000 | 49.31000 | 72.73186 | 46.24000 |
| | 3 | | | | | 44.52301 | |
| | 6 | | | | | 45.00717 | |
| | 12 | | | | | 45.27759 | |
| | 25 | | | | | 45.67924 | |
| | 50 | | | | | 45.79140 | |
| | 100 | | | | | 46.16632 | |
| t + 20 min | 1 | 65.19000 | 55.63000 | 59.67000 | 58.86000 | 52.53000 | 56.20000 |
| | 3 | | | | | 53.31000 | |
| | 6 | | | | | 54.12000 | |
| | 12 | | | | | 55.27000 | |
| | 25 | | | | | 56.88000 | |
| | 50 | | | | | 55.59000 | |
| | 100 | | | | | 56.79000 | |
| t + 30 min | 1 | 62.09000 | 61.58000 | 64.77000 | 67.13000 | 58.14000 | 60.91000 |
| | 3 | | | | | 59.02000 | |
| | 6 | | | | | 59.91000 | |
| | 12 | | | | | 60.85000 | |
| | 25 | | | | | 61.34000 | |
| | 50 | | | | | 61.84000 | |
| | 100 | | | | | 61.51000 | |
| t + 60 min | 1 | 81.28000 | 79.84000 | 81.44000 | 89.07000 | 74.59000 | 79.80000 |
| | 3 | | | | | 75.92000 | |
| | 6 | | | | | 77.11000 | |
| | 12 | | | | | 78.47000 | |
| | 25 | | | | | 79.08000 | |
| | 50 | | | | | 79.48000 | |
| | 100 | | | | | 79.63000 | |

| Time Horizon | λ | RF | KNN | SVR | Elastic Net | Windowing | Arbitrating |
|---|---|---|---|---|---|---|---|
| t + 10 min | 1 | 0.22000 | 0.24000 | 0.21000 | 0.23000 | 0.20701 | 0.22000 |
| | 3 | | | | | 0.21027 | |
| | 6 | | | | | 0.21254 | |
| | 12 | | | | | 0.21364 | |
| | 25 | | | | | 0.21444 | |
| | 50 | | | | | 0.21541 | |
| | 100 | | | | | 0.21684 | |
| t + 20 min | 1 | 0.32000 | 0.28000 | 0.28000 | 0.27000 | 0.25000 | 0.27000 |
| | 3 | | | | | 0.25000 | |
| | 6 | | | | | 0.26000 | |
| | 12 | | | | | 0.26000 | |
| | 25 | | | | | 0.27000 | |
| | 50 | | | | | 0.26000 | |
| | 100 | | | | | 0.27000 | |
| t + 30 min | 1 | 0.29000 | 0.30000 | 0.29000 | 0.33000 | 0.27000 | 0.29000 |
| | 3 | | | | | 0.28000 | |
| | 6 | | | | | 0.28000 | |
| | 12 | | | | | 0.28000 | |
| | 25 | | | | | 0.29000 | |
| | 50 | | | | | 0.29000 | |
| | 100 | | | | | 0.29000 | |
| t + 60 min | 1 | 0.34000 | 0.35000 | 0.34000 | 0.54747 | 0.32000 | 0.34000 |
| | 3 | | | | | 0.32000 | |
| | 6 | | | | | 0.33000 | |
| | 12 | | | | | 0.33000 | |
| | 25 | | | | | 0.34000 | |
| | 50 | | | | | 0.34000 | |
| | 100 | | | | | 0.34000 | |

| Metric | Time Horizon | Wind Speed | GHI |
|---|---|---|---|
| RMSE | t + 10 | 0.69007 m/s | 72.73186 W/m² |
| | t + 20 | 0.86817 m/s | 80.07 W/m² |
| | t + 30 | 0.97497 m/s | 86.25 W/m² |
| | t + 60 | 1.1515 m/s | 105.51 W/m² |
| R² | t + 10 | 0.84451 | 0.92184 |
| | t + 20 | 0.75388 | 0.91 |
| | t + 30 | 0.68958 | 0.89 |
| | t + 60 | 0.56685 | 0.85 |
| MAE | t + 10 | 0.51272 m/s | 44.52301 W/m² |
| | t + 20 | 0.64663 m/s | 52.53 W/m² |
| | t + 30 | 0.72594 m/s | 58.14 W/m² |
| | t + 60 | 0.86784 m/s | 74.59 W/m² |
| MAPE | t + 10 | 0.21022 | 0.20701 |
| | t + 20 | 0.3128 | 0.25 |
| | t + 30 | 0.36711 | 0.27 |
| | t + 60 | 0.50552 | 0.32 |

| Model | Metric Value | Author |
|---|---|---|
| GNN SAGE GAT | RMSE: 0.638 (t + 60); MAE: 0.458 (t + 60) | Oliveira Santos et al. [22] |
| ED-HGNDO-BiLSTM | RMSE: 0.696 (t + 10, average), 1.445 (t + 60, average); MAE: 0.717 (t + 10, average), 0.953 (t + 60, average); MAPE: 0.590 (t + 10, average), 9.769 (t + 60, average) | Neshat et al. [37] |
| Statistical model for wind speed forecasting | RMSE: 1.090 (t + 60) | Dowell et al. [38] |
| Hybrid wind speed forecasting model using the data area division (DAD) method and a deep learning neural network | RMSE: 0.291 (t + 10, average), 0.355 (t + 30, average), 0.426 (t + 60, average); MAE: 0.221 (t + 10, average), 0.293 (t + 30, average), 0.364 (t + 60, average) | Liu et al. [39] |
| Hybrid model CNN-LSTM | RMSE: 0.547 (t + 10), 0.802 (t + 20), 0.895 (t + 30), 1.114 (t + 60); MAPE: 4.385 (t + 10), 6.023 (t + 20), 7.510 (t + 30), 11.127 (t + 60) | Zhu et al. [40] |

| Model | Metric Value | Author |
|---|---|---|
| CNN-1D | RMSE (R²): 36.24 (0.98) for t + 10, 39.00 (0.98) for t + 20, 38.46 (0.98) for t + 30 | Marinho et al. [23] |
| MEMD-PCA-GRU | RMSE (R²): 31.92 (0.99) for t + 60 | Gupta and Singh [41] |
| Physical-based forecasting model | RMSE: 75.91 (t + 30), 89.81 (t + 60); MAE: 48.85 (t + 30), 57.01 (t + 60) | Yang et al. [42] |
| Physical-based forecasting model | RMSE: 114.06 (t + 60) | Kallio-Myers et al. [43] |
| Deep learning transformer-based forecasting model | MAE: 34.21 (t + 10), 43.64 (t + 20), 49.53 (t + 30) | Liu et al. [44] |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Vidal Bezerra, F.D.; Pinto Marinho, F.; Costa Rocha, P.A.; Oliveira Santos, V.; Van Griensven Thé, J.; Gharabaghi, B. Machine Learning Dynamic Ensemble Methods for Solar Irradiance and Wind Speed Predictions. Atmosphere 2023, 14, 1635. https://doi.org/10.3390/atmos14111635