Short-Term Wind Speed Forecasting Based on the EEMD-GS-GRU Model

Abstract

1. Introduction

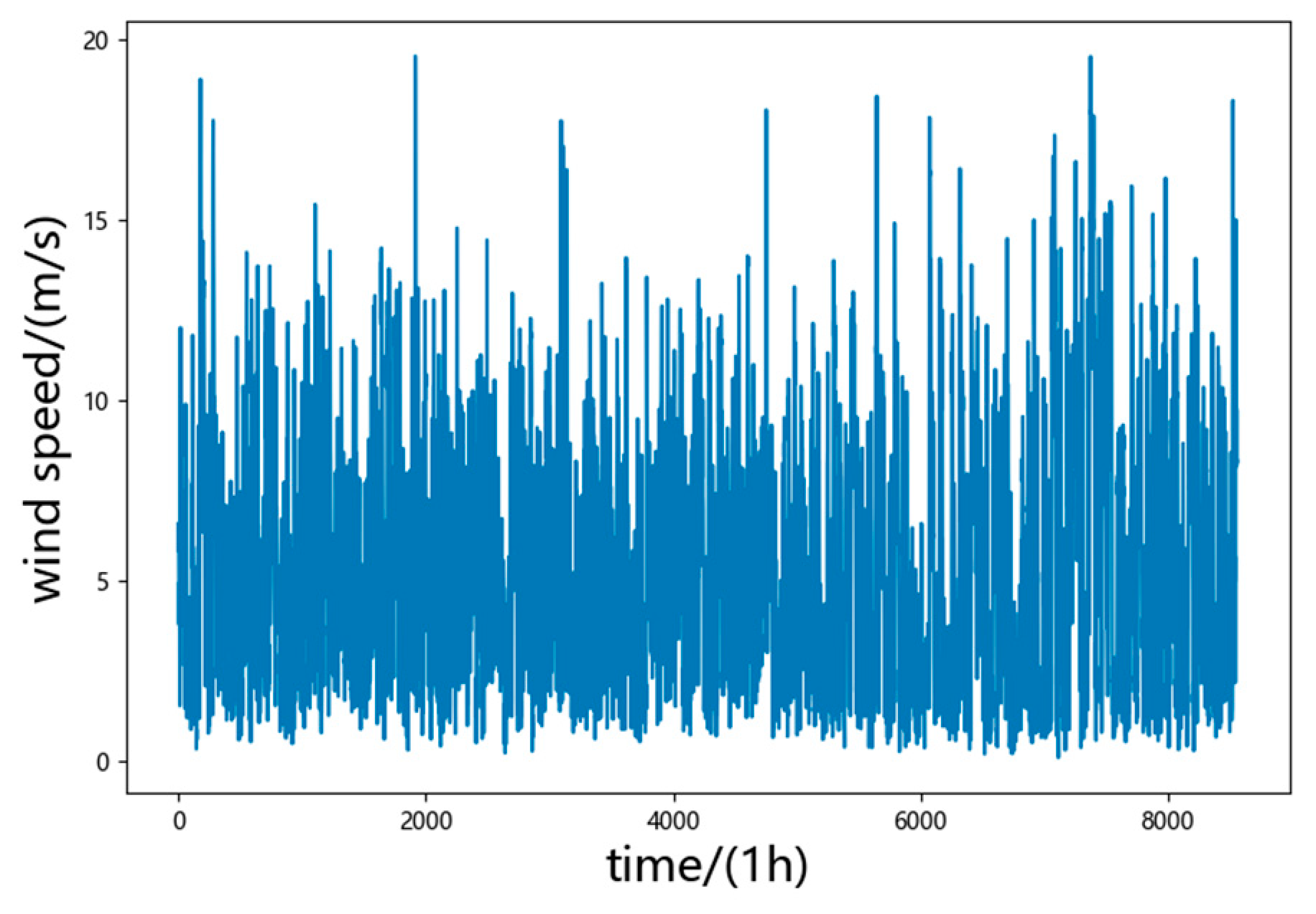

2. Research Methods

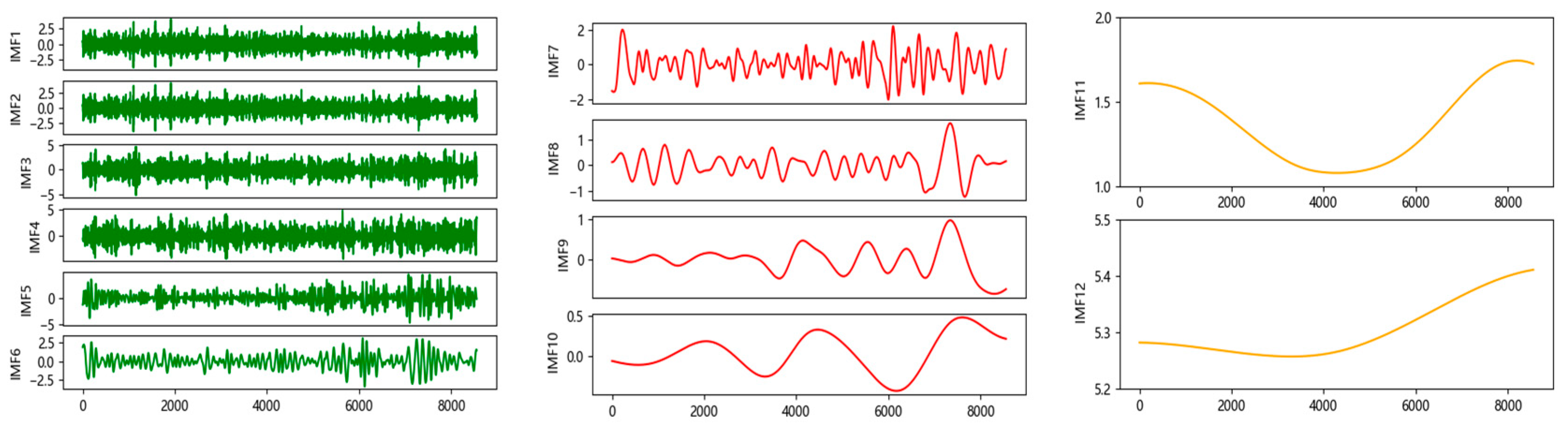

2.1. Ensemble Empirical Mode Decomposition Method

- Set the number of ensemble averaging trials, $M$.

- Add white noise, $n_i(t)$, with a normal distribution to the original signal, $x(t)$, to generate a new signal, as follows:

  $$x_i(t) = x(t) + n_i(t)$$

  where $n_i(t)$ represents the white noise sequence added in the $i$-th trial and $x_i(t)$ represents the noise-added signal of the $i$-th trial, $i = 1, 2, \ldots, M$.

- EMD is performed on each noise-added signal, $x_i(t)$, which is expressed as the sum of its IMFs plus a residual, as follows:

  $$x_i(t) = \sum_{j=1}^{J} c_{i,j}(t) + r_{i,J}(t)$$

  where $c_{i,j}(t)$ is the $j$-th IMF decomposed after adding white noise for the $i$-th time; $r_{i,J}(t)$ is the residual function, which represents the average trend of the signal; and $J$ is the number of IMFs.

- Repeat steps 2 and 3 $M$ times, adding white noise signals with different amplitudes for each decomposition, to obtain the set of IMFs, as follows: $c_{1,j}(t), c_{2,j}(t), \ldots, c_{M,j}(t)$, $j = 1, 2, \ldots, J$.

- Using the principle that the statistical average of uncorrelated sequences is zero, the corresponding IMFs are averaged over the ensemble to obtain the final IMFs after EEMD decomposition, as follows:

  $$c_j(t) = \frac{1}{M} \sum_{i=1}^{M} c_{i,j}(t)$$

  where $c_j(t)$ is the $j$-th IMF decomposed by EEMD, $i = 1, 2, \ldots, M$, $j = 1, 2, \ldots, J$.
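The noise-assisted averaging in the steps above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the implementation used in the study: `toy_decompose` is a hypothetical stand-in that splits a signal into a fast part and a moving-average trend (it is not real EMD sifting), and the names `ensemble_decompose`, `trials`, and `noise_std` are chosen here for illustration. Unlike this stand-in, real EMD may return a different number of IMFs in each trial, which a full implementation has to handle.

```python
import random
from typing import Callable, List

Signal = List[float]


def toy_decompose(signal: Signal, window: int = 5) -> List[Signal]:
    """Hypothetical stand-in for EMD (NOT real sifting): split a signal
    into a fast 'detail' part and a slow moving-average 'trend' part."""
    n = len(signal)
    half = window // 2
    trend = []
    for t in range(n):
        lo, hi = max(0, t - half), min(n, t + half + 1)
        trend.append(sum(signal[lo:hi]) / (hi - lo))
    detail = [s - m for s, m in zip(signal, trend)]
    return [detail, trend]  # detail + trend reproduces the input exactly


def ensemble_decompose(
    signal: Signal,
    decompose: Callable[[Signal], List[Signal]],
    trials: int = 100,       # M, the number of ensemble trials (step 1)
    noise_std: float = 0.2,  # assumed standard deviation of the added noise
    seed: int = 0,
) -> List[Signal]:
    if trials < 1:
        raise ValueError("trials must be at least 1")
    rng = random.Random(seed)
    sums: List[Signal] = []
    for _ in range(trials):
        # Step 2: x_i(t) = x(t) + n_i(t)
        noisy = [s + rng.gauss(0.0, noise_std) for s in signal]
        # Step 3: decompose the noisy signal into components c_{i,j}(t)
        comps = decompose(noisy)
        if not sums:
            sums = [[0.0] * len(signal) for _ in comps]
        # Step 4: accumulate the j-th component over all trials
        for j, comp in enumerate(comps):
            for t, value in enumerate(comp):
                sums[j][t] += value
    # Step 5: c_j(t) = (1/M) * sum_i c_{i,j}(t)
    return [[value / trials for value in row] for row in sums]
```

Because the added noise has zero mean, the averaged components sum back to approximately the original signal as the number of trials grows.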

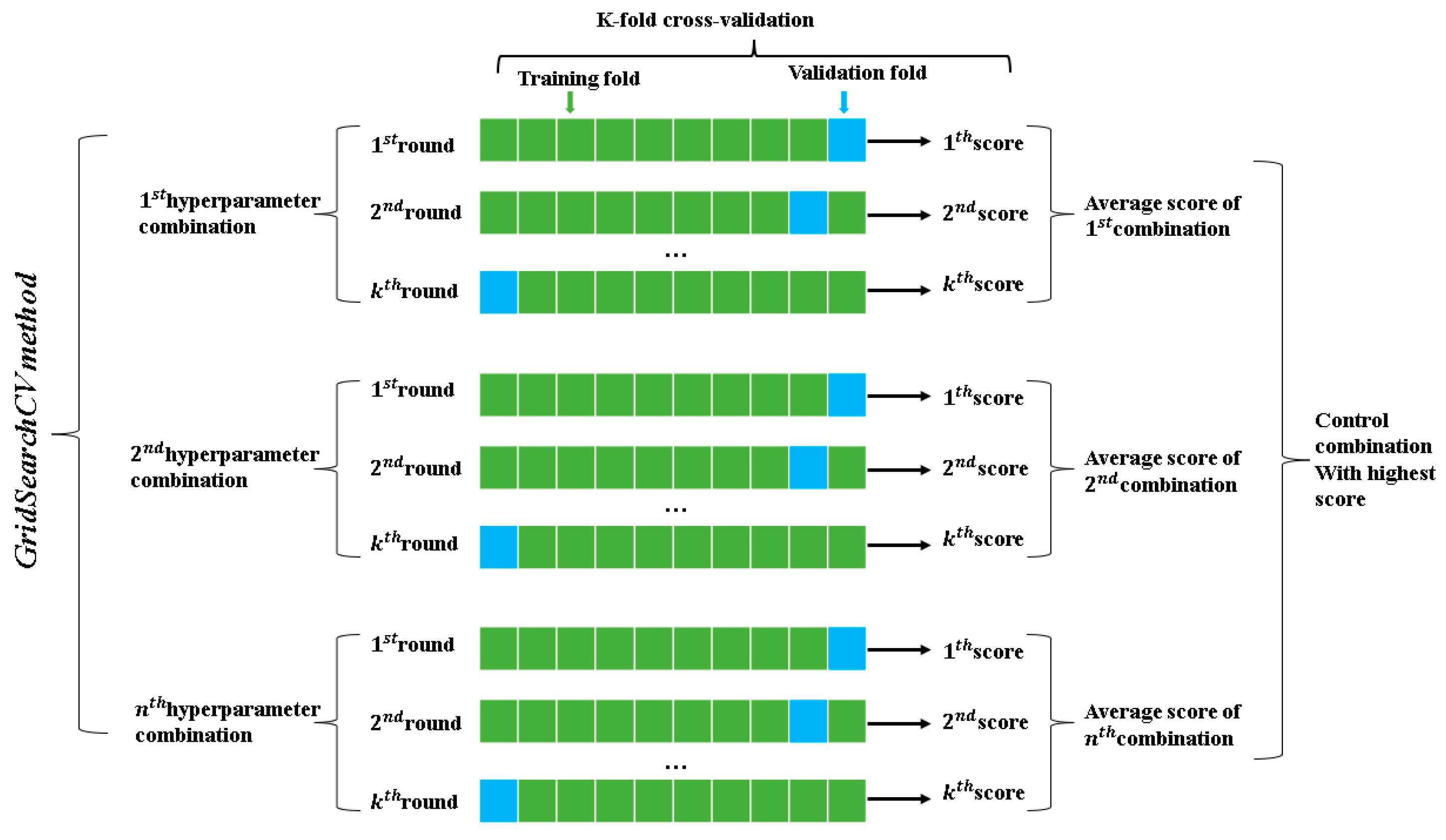

2.2. Grid Search Cross Validation Parameter Optimization Method

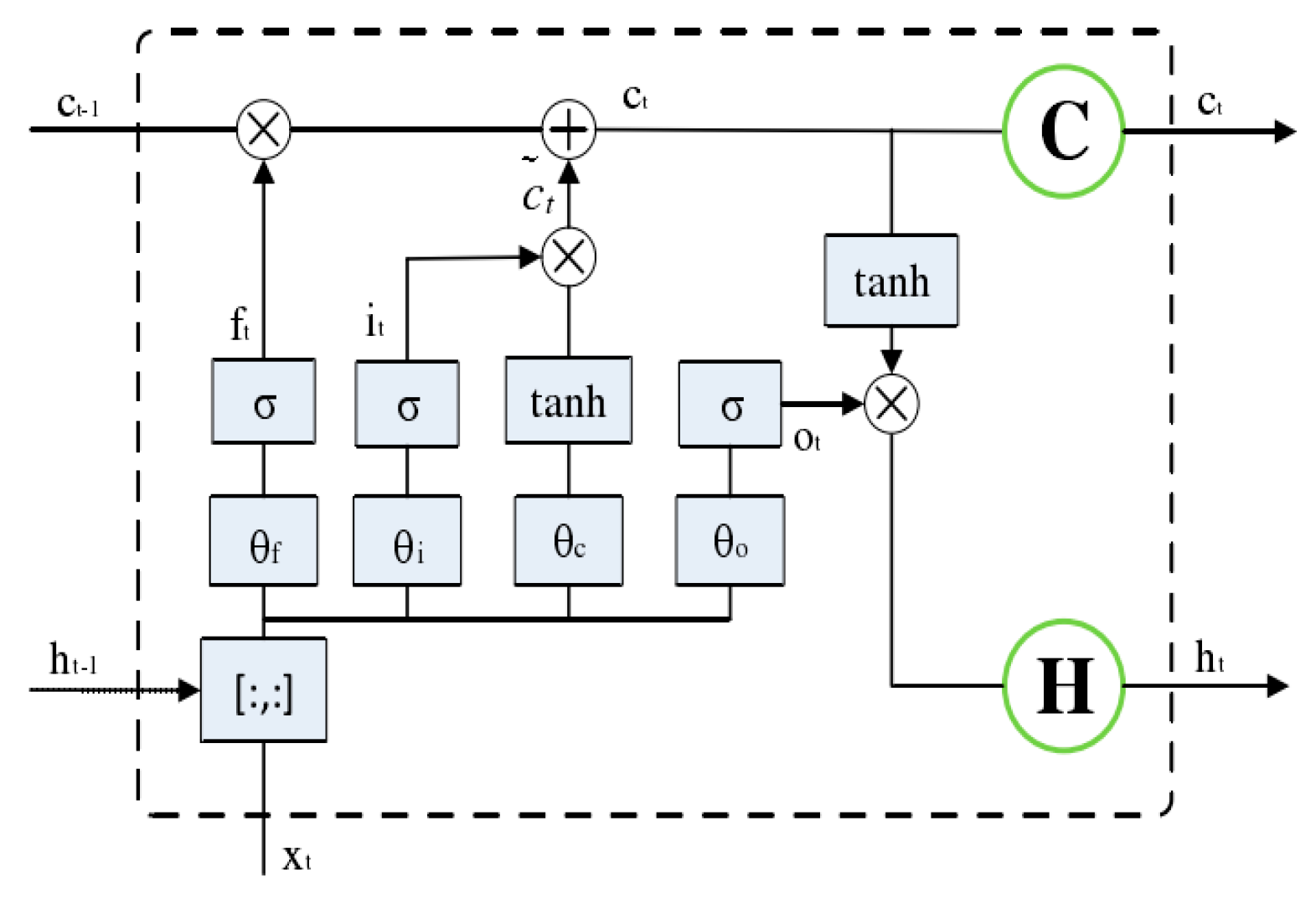

2.3. Long Short-Term Memory Network

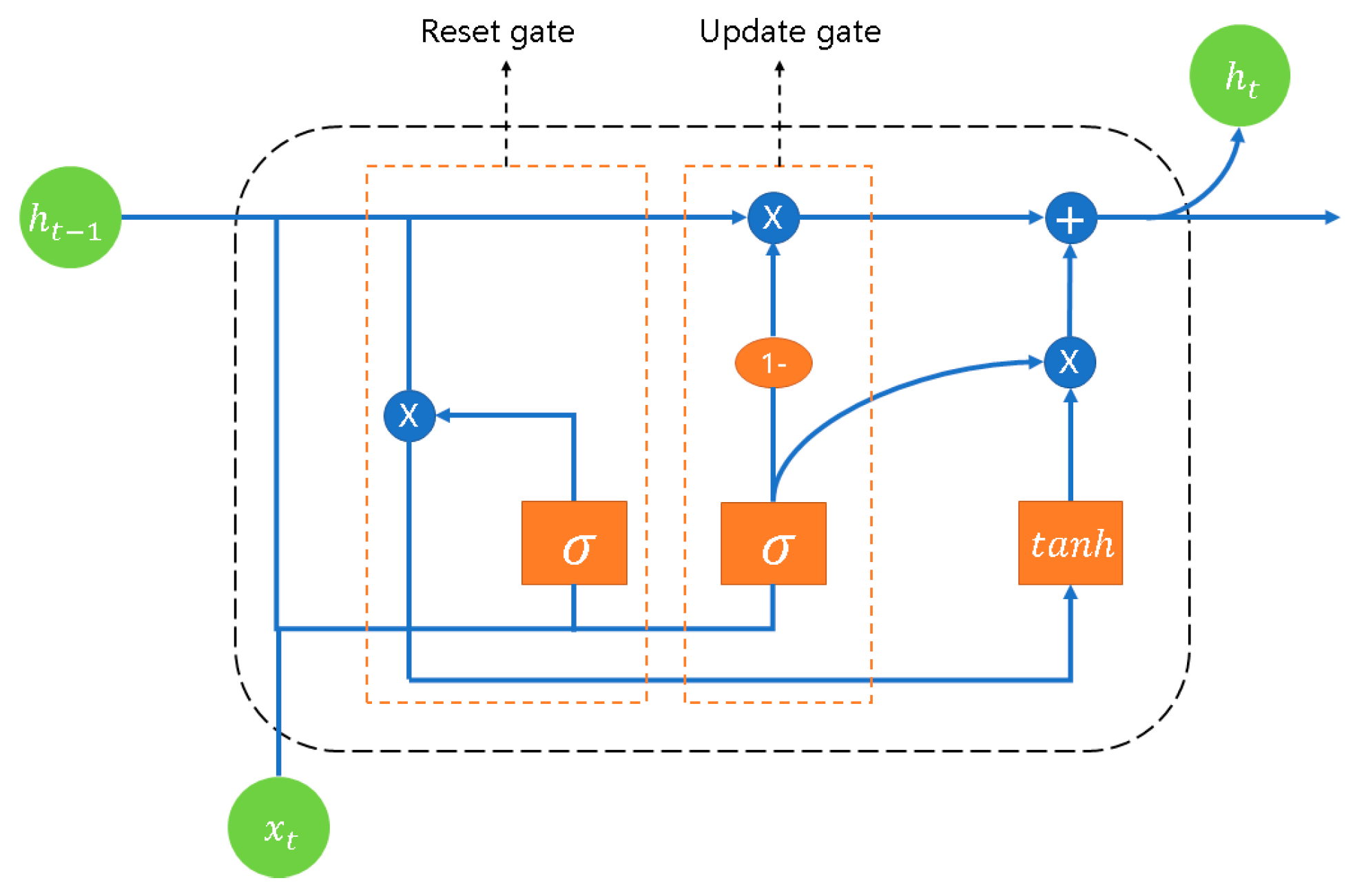

2.4. Gated Recurrent Unit

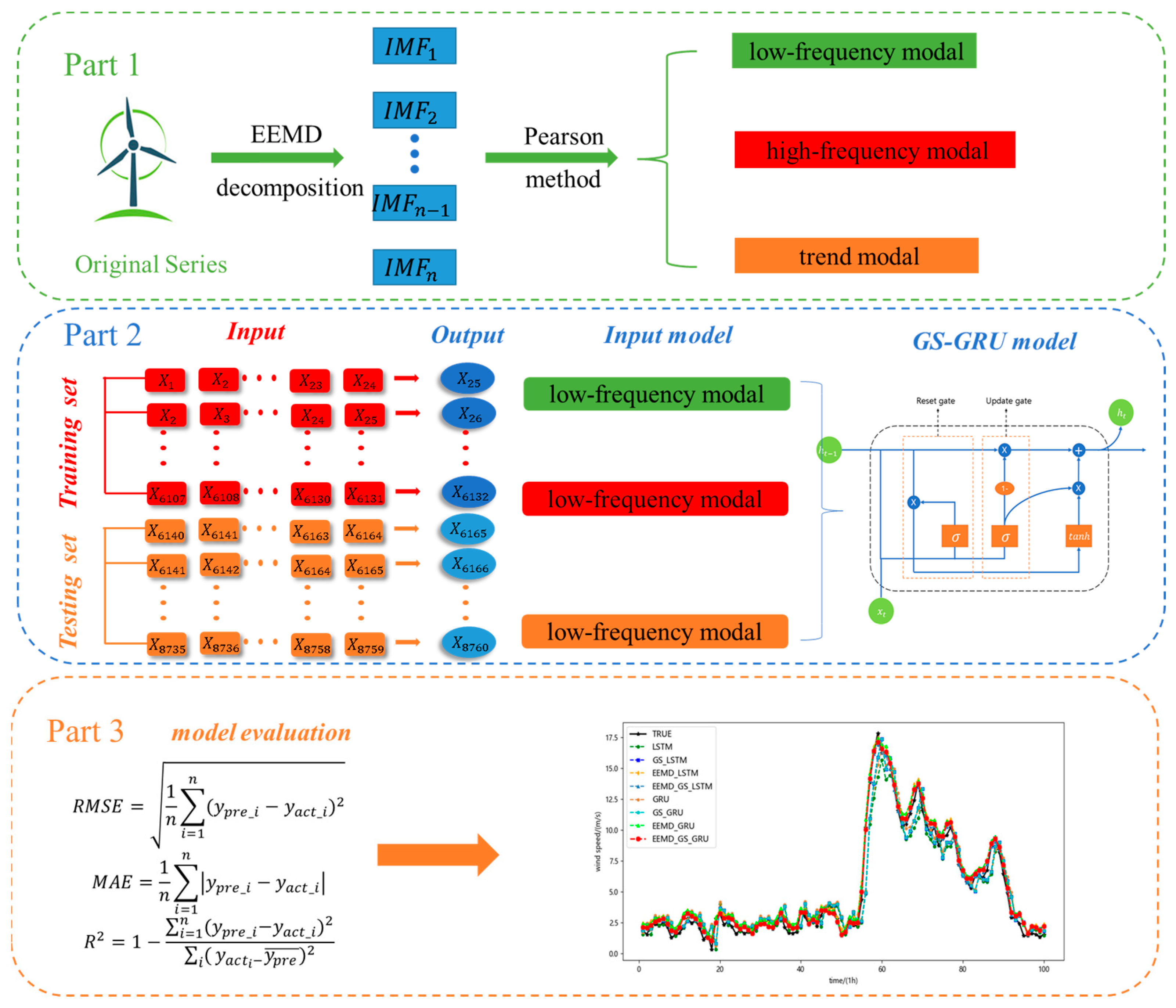
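As a rough illustration of the gated recurrent unit, the sketch below computes one forward step of a GRU cell in plain Python. It assumes the commonly used formulation with an update gate `z`, a reset gate `r`, and a candidate state; the parameter names (`Wz`, `Uz`, `bz`, and so on) are illustrative, and the convention for which state the update gate weights differs between references, so this may not match the exact form used in the study.

```python
import math
from typing import Dict, List

Vector = List[float]
Matrix = List[List[float]]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def affine(w: Matrix, x: Vector, u: Matrix, h: Vector, b: Vector) -> Vector:
    """Return W x + U h + b, computed row by row."""
    return [
        sum(wi * xi for wi, xi in zip(w_row, x))
        + sum(ui * hi for ui, hi in zip(u_row, h))
        + bi
        for w_row, u_row, bi in zip(w, u, b)
    ]


def gru_step(x: Vector, h_prev: Vector, p: Dict[str, list]) -> Vector:
    """One GRU time step: returns the new hidden state h_t."""
    # Update gate: how much of the candidate state enters h_t.
    z = [sigmoid(a) for a in affine(p["Wz"], x, p["Uz"], h_prev, p["bz"])]
    # Reset gate: how much of h_{t-1} feeds the candidate state.
    r = [sigmoid(a) for a in affine(p["Wr"], x, p["Ur"], h_prev, p["br"])]
    gated = [ri * hi for ri, hi in zip(r, h_prev)]
    # Candidate state built from the input and the reset-gated history.
    h_cand = [math.tanh(a) for a in affine(p["Wh"], x, p["Uh"], gated, p["bh"])]
    # Interpolate between the previous state and the candidate state
    # (assumed convention: z weights the candidate).
    return [(1.0 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h_prev, h_cand)]
```

The cell has two gates and no separate memory cell, so it carries fewer parameters than an LSTM cell of the same hidden size.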

3. Wind Speed Forecasting Model

3.1. EEMD-GS-GRU Modeling Process

- EEMD was used to decompose the original wind speed time series into subsequences.

- The modes decomposed in step (1) were classified as low-frequency, high-frequency, or trend components, and forecasting models were established for them.

- The forecasts of the subsequences from step (2) were superimposed to obtain the final wind speed forecast.
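The decompose, forecast, and superimpose structure of this process can be sketched as follows. This is a minimal sketch under stated assumptions: the component series are taken as given, and `persistence` and `mean_of_last` are hypothetical stand-in forecasters used only to keep the example self-contained; in the study, each component is forecast by a trained network model.

```python
from typing import Callable, List, Sequence

Forecaster = Callable[[Sequence[float]], float]


def persistence(series: Sequence[float]) -> float:
    """Stand-in forecaster: predict the last observed value."""
    return series[-1]


def mean_of_last(k: int) -> Forecaster:
    """Stand-in forecaster: predict the mean of the last k values."""
    def forecast(series: Sequence[float]) -> float:
        tail = series[-k:]
        return sum(tail) / len(tail)
    return forecast


def forecast_by_components(
    components: List[Sequence[float]],
    forecasters: List[Forecaster],
) -> float:
    """Forecast each subsequence separately, then sum the forecasts."""
    if len(components) != len(forecasters):
        raise ValueError("need exactly one forecaster per component")
    per_component = [f(c) for f, c in zip(forecasters, components)]
    return sum(per_component)


# Two hypothetical components: a fast fluctuation and a slow trend.
components = [[0.1, -0.2, 0.3], [5.0, 5.5, 6.0]]
next_speed = forecast_by_components(components, [persistence, mean_of_last(2)])
```

Summing the per-component forecasts is valid because the decomposition is additive: the components add up to the original series.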

3.2. Predictive Evaluation Index
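The models in this study are compared using R², RMSE, and MAE. The sketch below assumes the standard definitions of these indices (root mean squared error, mean absolute error, and the coefficient of determination computed as one minus the ratio of residual to total sum of squares); the function names are chosen here for illustration.

```python
import math
from typing import Sequence


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean squared error, in the units of the data (m/s here)."""
    n = len(y_true)
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / n)


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute error, in the units of the data (m/s here)."""
    n = len(y_true)
    return sum(abs(a - b) for a, b in zip(y_true, y_pred)) / n


def r2(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((a - b) ** 2 for a, b in zip(y_true, y_pred))
    ss_tot = sum((a - mean_true) ** 2 for a in y_true)
    return 1.0 - ss_res / ss_tot
```

Lower RMSE and MAE and an R² closer to 1 indicate a better forecast.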

3.3. Case Analysis

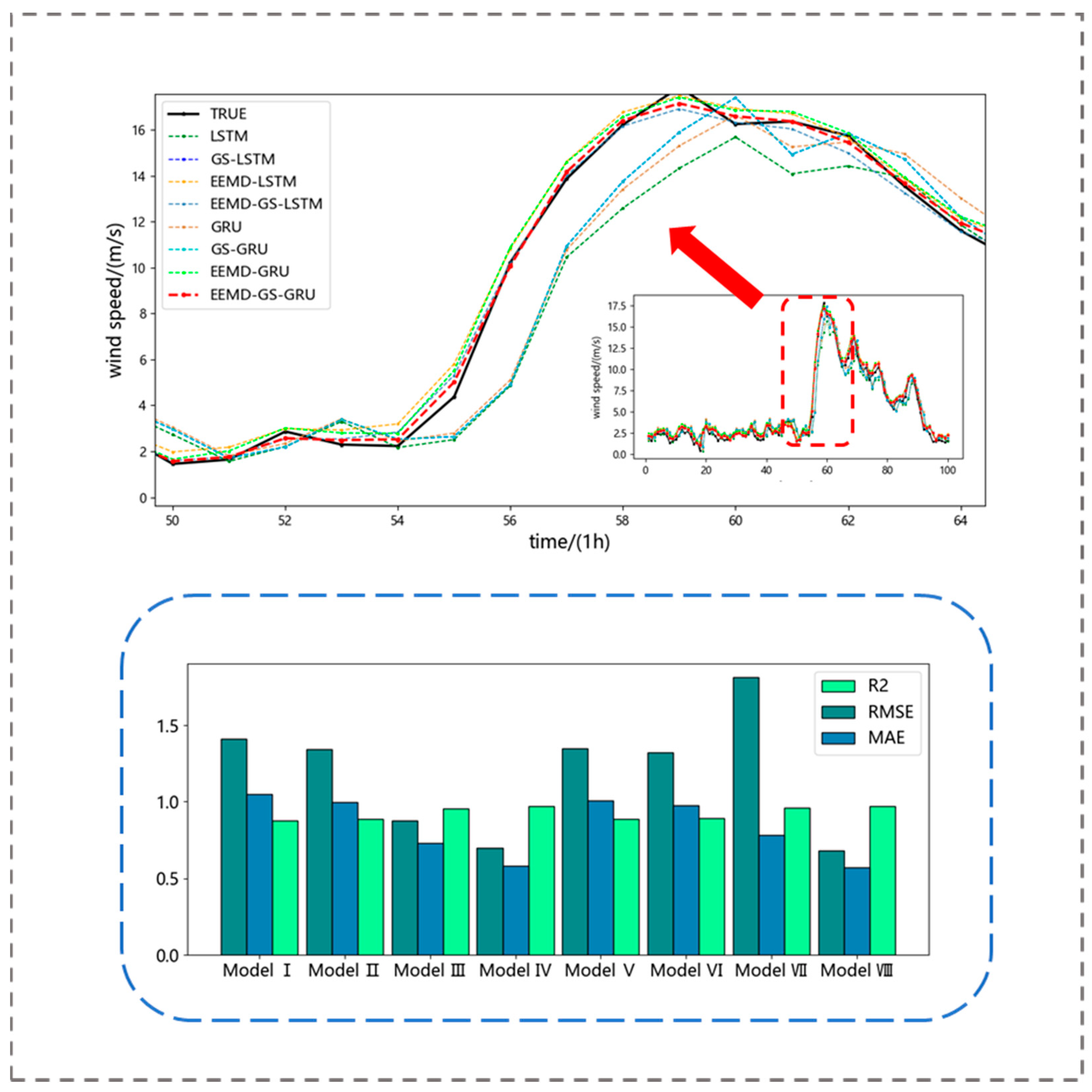

- (a) Comparing the basic Model I (LSTM) and Model V (GRU) showed that the forecasting performance of the GRU model used in this paper was slightly better than that of the LSTM model.

- (b) Comparing the error metrics of Model V (GRU) and Model VII (EEMD-GRU) on the test set showed that the RMSE and MAE of EEMD-GRU were reduced by 39.51% and 22.54%, respectively, and R² was improved by 7.99%. This indicated that introducing ensemble empirical mode decomposition (EEMD) into the forecasting of wind speed time series could significantly improve the forecasting accuracy of the model.

- (c) In wind speed forecasting, the algorithm-optimized Model II (GS-LSTM) and Model VI (GS-GRU) performed better than the corresponding single models. Taking the forecasting statistics of the wind farm for the LSTM and GRU models as an example, the RMSE of GS-LSTM was reduced by 4.96%, the MAE was reduced by 5.04%, and R² was increased by 1.37%. The RMSE of GS-GRU was reduced by 1.85%, the MAE was reduced by 2.15%, and R² was increased by 0.5%. This showed that adding grid search optimization could improve the forecast performance of the model, because the search was better able to find network hyperparameters that achieve better forecasting results.

- (d) In terms of forecast error statistics, compared with Model V (GRU), the RMSE and MAE of Model VIII (EEMD-GS-GRU) were reduced by 48.26% and 43.29%, respectively, and R² was increased by 9.34%. This showed that the composite model combining modal decomposition and the optimization search algorithm was more suitable for wind speed forecasting.

- (e) Comparing the indicators of Model IV (EEMD-GS-LSTM) and Model VIII (EEMD-GS-GRU) showed that the three error indicator values of the hybrid model using GRU were slightly better than those of the hybrid model using LSTM. This suggested that the GRU method was more suitable for wind speed forecasting and could track the wind speed time series more effectively. As a result, the hybrid model proposed in this study was suitable for the forecasting of wind speed.
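The percentage changes quoted in these comparisons are relative changes with respect to the baseline model. As a worked example, the sketch below recomputes the Model V (GRU) to Model VII (EEMD-GRU) comparison from the values in the evaluation index table; the helper names `reduction_pct` and `increase_pct` are chosen here for illustration.

```python
def reduction_pct(baseline: float, improved: float) -> float:
    """Relative reduction of an error metric, in percent of the baseline."""
    return (baseline - improved) / baseline * 100.0


def increase_pct(baseline: float, improved: float) -> float:
    """Relative increase of a score such as R2, in percent of the baseline."""
    return (improved - baseline) / baseline * 100.0


# Values taken from the evaluation index table (RMSE and MAE in m/s).
gru = {"R2": 0.888, "RMSE": 1.349, "MAE": 1.007}       # Model V
eemd_gru = {"R2": 0.959, "RMSE": 0.816, "MAE": 0.78}   # Model VII

rmse_drop = reduction_pct(gru["RMSE"], eemd_gru["RMSE"])
mae_drop = reduction_pct(gru["MAE"], eemd_gru["MAE"])
r2_gain = increase_pct(gru["R2"], eemd_gru["R2"])

print(f"RMSE reduced by {rmse_drop:.2f}%")  # RMSE reduced by 39.51%
print(f"MAE reduced by {mae_drop:.2f}%")    # MAE reduced by 22.54%
print(f"R2 increased by about {r2_gain:.1f}%")
```

The same two helpers apply to any other pair of models in the table.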

4. Conclusions

- (1) Combining the grid search parameter optimization algorithm with the GRU model to predict the wind speed slightly improved the forecasting accuracy.

- (2) Decomposing the original wind speed time series with EEMD effectively reduced the influence of the nonlinearity, intermittency, and instability of wind speed on the forecast; therefore, the accuracy of the wind speed forecasting was improved.

- (3) We decomposed the original wind speed time series into high-frequency components, low-frequency components, and a trend component through EEMD, and we performed GS-LSTM and GS-GRU modeling and forecasting on them, respectively. This further improved the forecasting accuracy to some extent. Therefore, the model presented in this study can more clearly reflect the characteristics of the wind speed time series.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| IMF Name | Correlation Coefficient |
|---|---|
| IMF1 | 0.223 *** |
| IMF2 | 0.364 *** |
| IMF3 | 0.579 *** |
| IMF4 | 0.630 *** |
| IMF5 | 0.531 *** |
| IMF6 | 0.418 *** |
| IMF7 | 0.290 *** |
| IMF8 | 0.234 *** |
| IMF9 | 0.136 *** |
| IMF10 | 0.123 *** |
| IMF11 | 0.090 *** |
| IMF12 | 0.064 *** |

| Hyperparameter | Grid Search Range |
|---|---|
| Batch size | [8, 16, 24, 32, 64] |
| Epochs | [10, 15, 20, 25, 30] |
| Optimizer | [Adam, Adadelta, SGD] |
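The search ranges above define 5 × 5 × 3 = 75 candidate combinations. A minimal sketch of grid search with cross-validation over that space is shown below. It rests on assumptions: `score_fn` is a hypothetical callback that would train a model with the given hyperparameters on the training indices and return a validation error (lower is better), the number of folds `k` is not taken from the study, and the contiguous folds are only one possible splitting scheme for time series.

```python
from itertools import product
from typing import Callable, Dict, Iterator, List, Tuple

Params = Dict[str, object]

SEARCH_SPACE: Dict[str, list] = {
    "batch_size": [8, 16, 24, 32, 64],
    "epochs": [10, 15, 20, 25, 30],
    "optimizer": ["adam", "adadelta", "sgd"],
}


def expand_grid(space: Dict[str, list]) -> List[Params]:
    """Enumerate every combination of the listed hyperparameter values."""
    keys = list(space)
    return [dict(zip(keys, values)) for values in product(*(space[k] for k in keys))]


def k_fold_indices(n_samples: int, k: int) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train, validation) index lists for k contiguous folds."""
    fold = n_samples // k
    for i in range(k):
        start = i * fold
        stop = n_samples if i == k - 1 else start + fold
        val = list(range(start, stop))
        train = [j for j in range(n_samples) if j < start or j >= stop]
        yield train, val


def grid_search(
    space: Dict[str, list],
    score_fn: Callable[[Params, List[int], List[int]], float],
    n_samples: int,
    k: int = 3,  # assumed number of folds
) -> Tuple[Params, float]:
    """Return the combination with the lowest mean validation error."""
    best_params, best_score = None, float("inf")
    for params in expand_grid(space):
        scores = [score_fn(params, tr, va) for tr, va in k_fold_indices(n_samples, k)]
        mean_score = sum(scores) / len(scores)
        if mean_score < best_score:
            best_params, best_score = params, mean_score
    return best_params, best_score
```

Each candidate is trained once per fold, so the cost grows with both the grid size and the number of folds, which is consistent with the longer running times of the grid search models.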

| Model Name | Running Time |
|---|---|
| LSTM | 3′16″ |
| GS-LSTM | 8′20″ |
| EEMD-LSTM | 25′46″ |
| EEMD-GS-LSTM | 115′25″ |
| GRU | 3′28″ |
| GS-GRU | 8′36″ |
| EEMD-GRU | 26′34″ |
| EEMD-GS-GRU | 117′23″ |

| Model | Batch Size | Dropout | Epochs | Optimizer |
|---|---|---|---|---|
| Model I | 24 | 0.2 | 25 | Adam |
| Model II | 16 | 0.2 | 30 | Adam |
| Model III | 24 | 0.2 | 25 | Adam |
| Model IV | 16 | 0.2 | 30 | Adam |
| Model V | 24 | 0.2 | 20 | Adam |
| Model VI | 16 | 0.2 | 30 | Adam |
| Model VII | 24 | 0.2 | 25 | Adam |
| Model VIII | 16 | 0.2 | 30 | Adam |

| Model | R² | RMSE (m/s) | MAE (m/s) |
|---|---|---|---|
| Model I | 0.877 | 1.411 | 1.051 |
| Model II | 0.889 | 1.341 | 0.998 |
| Model III | 0.953 | 0.876 | 0.729 |
| Model IV | 0.970 | 0.696 | 0.581 |
| Model V | 0.888 | 1.349 | 1.007 |
| Model VI | 0.892 | 1.324 | 0.978 |
| Model VII | 0.959 | 0.816 | 0.78 |
| Model VIII | 0.971 | 0.685 | 0.571 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yao, H.; Tan, Y.; Hou, J.; Liu, Y.; Zhao, X.; Wang, X. Short-Term Wind Speed Forecasting Based on the EEMD-GS-GRU Model. Atmosphere 2023, 14, 697. https://doi.org/10.3390/atmos14040697
