Fog Density Evaluation by Combining Image Grayscale Entropy and Directional Entropy

Abstract

1. Introduction

2. Related Work

2.1. Image Entropy

2.2. Fog Density Estimation

3. Proposed Method

- Step 1. Convert the original image to grayscale and perform pseudo-edge detection;

- Step 2. Calculate two-dimensional grayscale entropy and directional entropy using the pseudo-edge image;

- Step 3. Define a piecewise function to construct the combined entropy based on the fog density discrimination capability of the two entropies;

- Step 4. Conduct experiments on both synthetic and real fog image datasets to evaluate the fog density level recognition performance of the combined entropy.
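Steps 1 and 2 above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the finite-difference gradient stands in for the pseudo-edge detector, the two-dimensional grayscale entropy uses the common joint histogram of a pixel level and its 3×3 neighbourhood mean, and the directional entropy is taken as the entropy of a histogram of gradient directions. All function names, the bin count, and the magnitude cutoff are hypothetical. The piecewise combination of Step 3 is not reproduced because its form and thresholds are not given here.

```python
import numpy as np


def to_gray(rgb):
    """Convert an H x W x 3 RGB array to grayscale with ITU-R BT.601 luma weights."""
    rgb = np.asarray(rgb, dtype=np.float64)
    return rgb @ np.array([0.299, 0.587, 0.114])


def gradient_edges(gray):
    """Assumed stand-in for pseudo-edge detection: gradient magnitude and direction."""
    gy, gx = np.gradient(np.asarray(gray, dtype=np.float64))
    magnitude = np.hypot(gx, gy)
    direction = np.arctan2(gy, gx)  # radians in [-pi, pi]
    return magnitude, direction


def shannon_entropy(counts):
    """Shannon entropy in bits of a histogram given as non-negative counts."""
    counts = np.asarray(counts, dtype=np.float64).ravel()
    total = counts.sum()
    if total == 0:
        return 0.0
    p = counts[counts > 0] / total
    return float(-(p * np.log2(p)).sum())


def grayscale_entropy_2d(image, levels=256):
    """Entropy of the joint histogram of (pixel level, 3x3 neighbourhood mean level)."""
    img = np.clip(np.asarray(image, dtype=np.float64), 0.0, None)
    peak = img.max()
    if peak > 0:
        img = img / peak * (levels - 1)  # rescale to the range [0, levels - 1]
    h, w = img.shape
    padded = np.pad(img, 1, mode="edge")
    # Sum the nine shifted copies of the image to get the 3x3 neighbourhood mean.
    mean = sum(padded[i:i + h, j:j + w] for i in range(3) for j in range(3)) / 9.0
    q_pix = np.rint(img).astype(np.intp)
    q_mean = np.rint(mean).astype(np.intp)
    joint = np.zeros((levels, levels))
    np.add.at(joint, (q_pix.ravel(), q_mean.ravel()), 1)
    return shannon_entropy(joint)


def directional_entropy(magnitude, direction, bins=36, min_magnitude=1e-6):
    """Entropy of the gradient-direction histogram over pixels with a non-trivial gradient."""
    mask = magnitude > min_magnitude
    counts, _ = np.histogram(direction[mask], bins=bins, range=(-np.pi, np.pi))
    return shannon_entropy(counts)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    image = rng.random((64, 64, 3))  # synthetic stand-in for a foggy photograph
    magnitude, direction = gradient_edges(to_gray(image))
    print("2D grayscale entropy:", grayscale_entropy_2d(magnitude))
    print("directional entropy:", directional_entropy(magnitude, direction))
```

In this sketch a perfectly uniform image has no gradients, so both entropies are zero, while textured images spread mass across the histograms and give larger values.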

3.1. Two-Dimensional Grayscale Entropy

3.2. Two-Dimensional Directional Entropy

3.3. The Combined Entropy

3.4. Algorithm Evaluation Indexes

4. Experiments and Results

4.1. Datasets and Preprocessing

4.1.1. Color Hazy Image Database

4.1.2. Haze Groups Training Set

4.1.3. Haze Groups Test Set

4.1.4. Preprocessing

4.2. Experimental Results

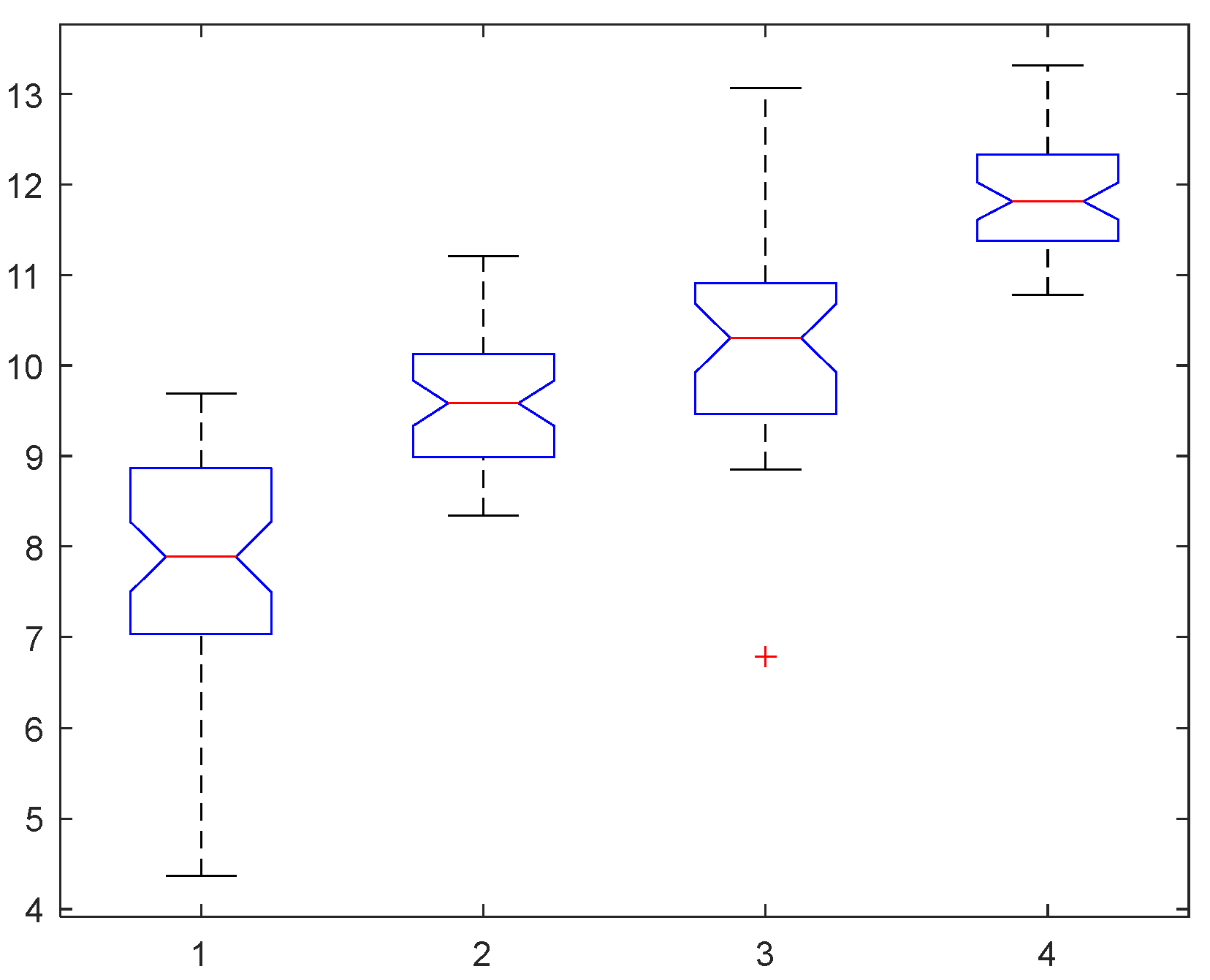

4.2.1. The Threshold of the Combined Entropy

4.2.2. Training and Analysis

4.3. Evaluation

4.4. Experimental Results on Test Set

4.5. Discussion

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Set | Heavy Fog | Moderate Fog | Light Fog | Fog-Free | Total N |
|---|---|---|---|---|---|
| Training set | 55 | 51 | 36 | 52 | 194 |
| Test set | 38 | 34 | 24 | 35 | 131 |

| Index | | | | Training Accuracy | Testing Accuracy |
|---|---|---|---|---|---|
| 1 | 9.106 | 9.952 | 11.2 | 0.8000 | 0.7692 |
| 2 | 9.02 | 9.777 | 11.21 | 0.7736 | 0.6154 |
| 3 | 9.036 | 9.913 | 11.31 | 0.7642 | 0.8077 |
| 4 | 9.06 | 9.829 | 11.3 | 0.7238 | 0.8519 |
| 5 | 9.178 | 9.998 | 11.26 | 0.8396 | 0.6538 |

| Label | SFDE Precision | SFDE Recall | SFDE F1 | FADE Precision | FADE Recall | FADE F1 | Our Method Precision | Our Method Recall | Our Method F1 |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 0.5854 | 0.4364 | 0.5000 | 0.7556 | 0.6182 | 0.6800 | 0.8250 | 0.8919 | 0.8571 |
| 2 | 0.4412 | 0.3000 | 0.3571 | 0.3968 | 0.5000 | 0.4425 | 0.5758 | 0.5588 | 0.5672 |
| 3 | 0.2540 | 0.4324 | 0.3200 | 0.2917 | 0.3784 | 0.3294 | 0.6800 | 0.6071 | 0.6415 |
| 4 | 0.6429 | 0.6923 | 0.6667 | 0.8684 | 0.6346 | 0.7333 | 0.9706 | 1.0000 | 0.9851 |
| weighted avg | 0.5004 | 0.4091 | 0.4735 | 0.6049 | 0.5464 | 0.5662 | 0.7764 | 0.7727 | 0.7787 |
| Accuracy | 0.4691 | | | 0.5464 | | | 0.7727 | | |

| Label | SFDE Precision | SFDE Recall | SFDE F1 | FADE Precision | FADE Recall | FADE F1 | Our Method Precision | Our Method Recall | Our Method F1 |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 0.6415 | 0.8947 | 0.7473 | 0.6271 | 0.9737 | 0.7629 | 0.8571 | 0.9474 | 0.9000 |
| 2 | 0.5652 | 0.3824 | 0.4561 | 0.5714 | 0.3529 | 0.4364 | 0.8077 | 0.6176 | 0.7000 |
| 3 | 0.4074 | 0.4583 | 0.4314 | 0.6000 | 0.5000 | 0.5455 | 0.5862 | 0.7083 | 0.6415 |
| 4 | 0.9286 | 0.7426 | 0.8254 | 0.9355 | 0.8286 | 0.8788 | 0.8824 | 0.8571 | 0.8696 |
| weighted avg | 0.6555 | 0.6412 | 0.6347 | 0.6901 | 0.6870 | 0.6693 | 0.8014 | 0.7939 | 0.7926 |
| Accuracy | 0.6412 | | | 0.6870 | | | 0.7939 | | |
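The F1 and weighted-average entries in the table directly above can be checked against the standard definitions. The sketch below recomputes them for the "Our Method" columns. It assumes that the per-class supports are the test-set class sizes listed earlier (38, 34, 24, 35), which the table itself does not state. The helper names are illustrative.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def weighted_average(values, supports):
    """Average of per-class values weighted by the number of samples per class."""
    total = sum(supports)
    return sum(v * s for v, s in zip(values, supports)) / total


# "Our Method" columns of the table above, labels 1 to 4.
precision = [0.8571, 0.8077, 0.5862, 0.8824]
recall = [0.9474, 0.6176, 0.7083, 0.8571]
# Assumption: supports are the test-set class sizes (heavy, moderate, light, fog-free).
supports = [38, 34, 24, 35]

f1 = [f1_score(p, r) for p, r in zip(precision, recall)]

if __name__ == "__main__":
    print("per-class F1:", [round(x, 4) for x in f1])
    print("weighted precision:", round(weighted_average(precision, supports), 4))
    print("weighted recall:", round(weighted_average(recall, supports), 4))
    print("weighted F1:", round(weighted_average(f1, supports), 4))
```

Under that assumption the recomputed values agree with the tabulated ones to within rounding. The support-weighted recall also coincides with the overall accuracy, as expected when every sample belongs to exactly one class.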

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cao, R.; Wang, X.; Li, H. Fog Density Evaluation by Combining Image Grayscale Entropy and Directional Entropy. Atmosphere 2023, 14, 1125. https://doi.org/10.3390/atmos14071125