Evaluation of Near-Taiwan Strait Sea Surface Wind Forecast Based on PanGu Weather Prediction Model

Abstract

1. Introduction

2. Data, Experiments, and Methods

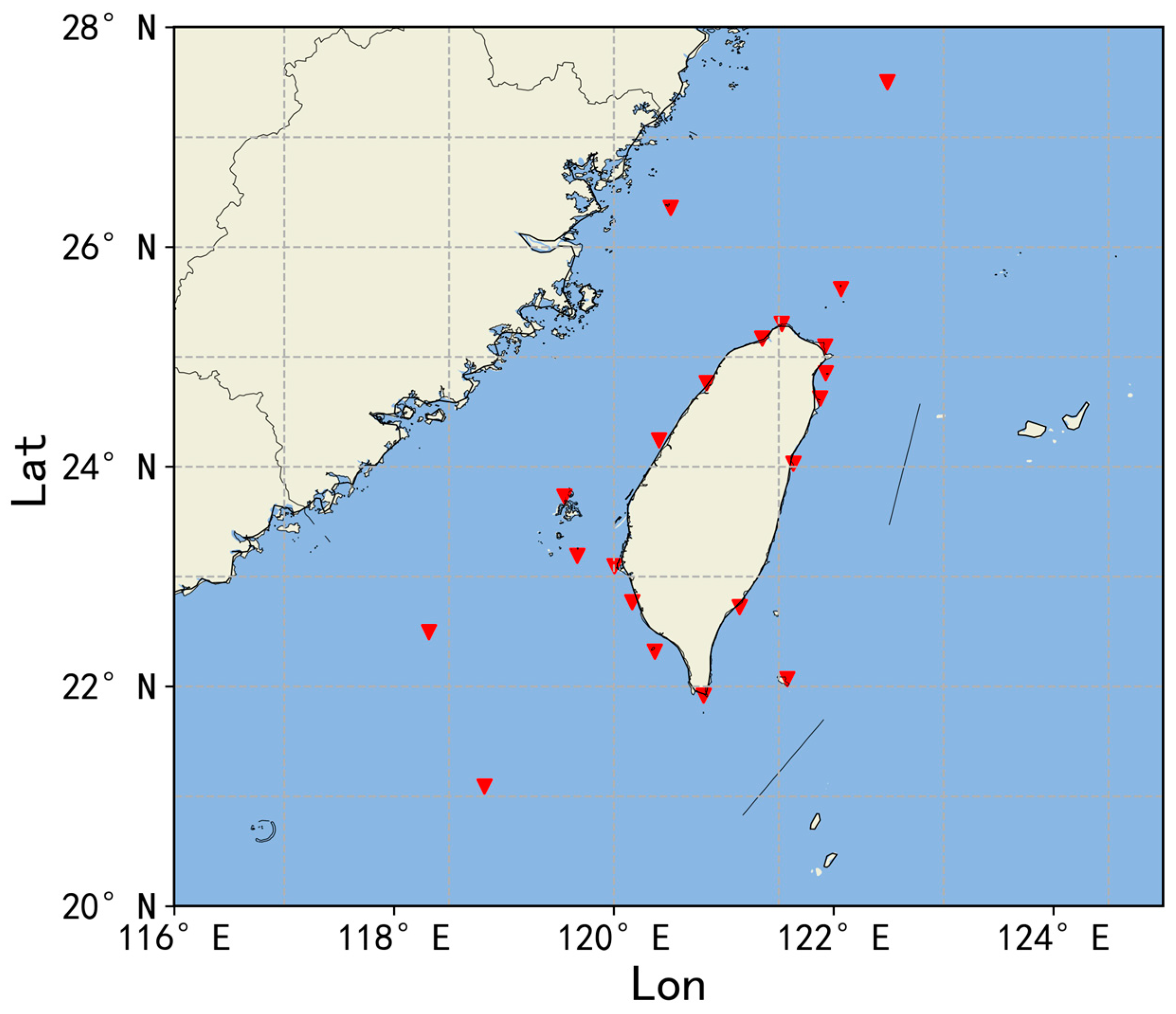

2.1. Data and Experiments

- ERA5 (PanGu): Pre-test with ERA5 reanalysis data as the initial field. This experiment represents the PanGu model pre-test using the optimal initial field.

- GRAPES_GFS (PanGu): Pre-test with GRAPES_GFS analysis data as the initial field. This experiment represents the PanGu model pre-test driven by data from an operational forecasting system.

2.2. Evaluation Methods and Preprocessing

3. Results Analysis

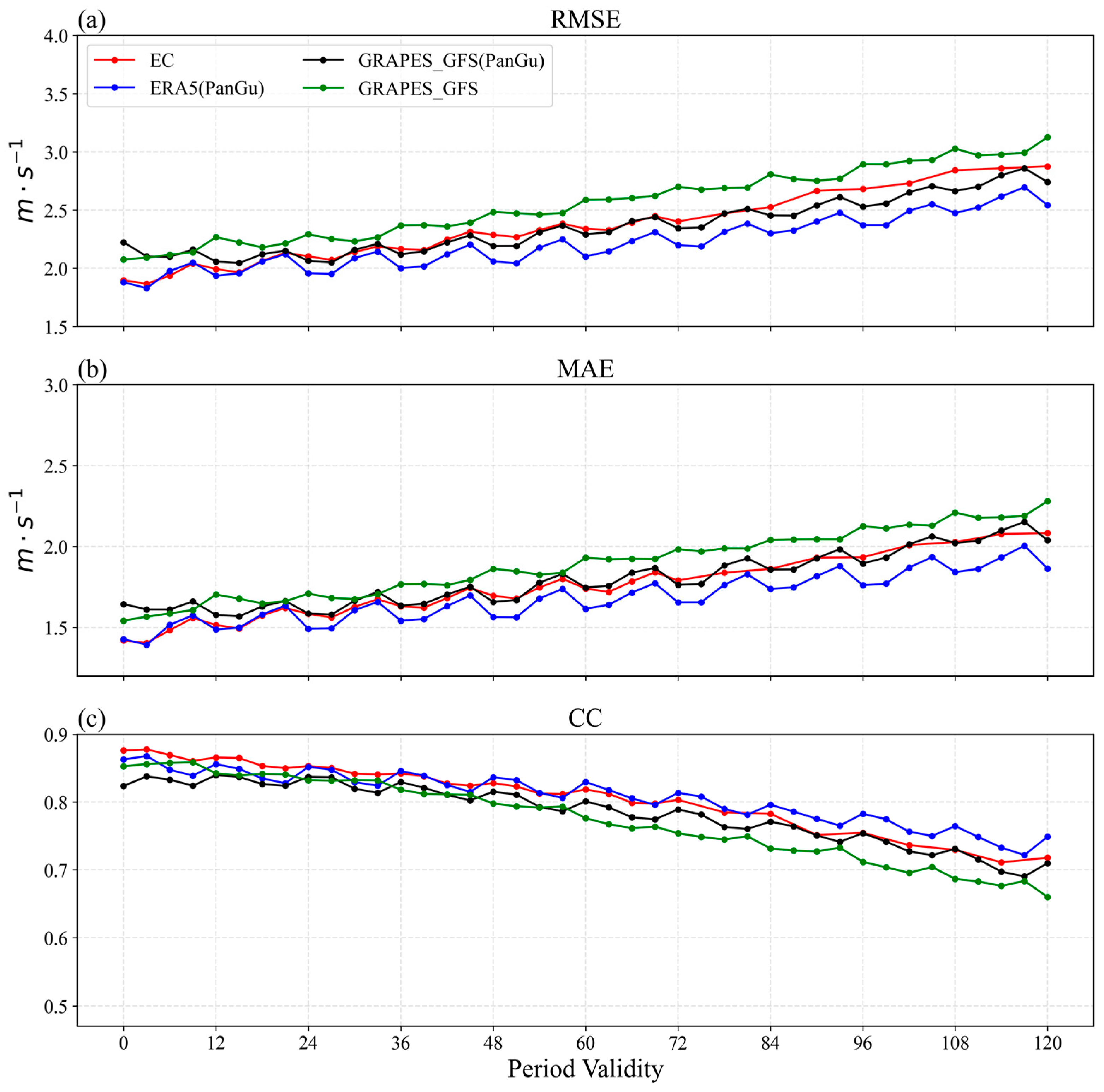

3.1. Wind Speed Comparison and Evaluation

- First, a comparison of the initial fields of the GRAPES_GFS (green line) and GRAPES_GFS (PanGu) (black line) experiments shows that their wind speed errors differ, although both are based on the same GRAPES_GFS analysis. The discrepancy arises because the GRAPES_GFS data are on a 0.125° × 0.125° grid and must be interpolated to a 0.25° × 0.25° grid before being used in the PanGu experiments, which introduces interpolation errors. However, the error of the black line decreases from 0 to 3 h, and at 3, 6, and 9 h the green and black lines gradually converge. This suggests that the PanGu model can partly correct errors in its initial field and adapts well to input from a different analysis system.

- Second, comparing the GRAPES_GFS (green line) and GRAPES_GFS (PanGu) (black line) experiments, as well as the EC (red line) and ERA5 (PanGu) (blue line) experiments, shows that GRAPES_GFS (PanGu) has a lower forecast error than GRAPES_GFS, and ERA5 (PanGu) has a lower forecast error than EC. This indicates that the PanGu model, as an advanced intelligent forecasting model, not only produces forecasts faster than traditional models but also yields smaller errors than the conventional NWP forecast it is compared with in each pair.

- Third, comparing the GRAPES_GFS (green line) and ERA5 (PanGu) (blue line) experiments shows that the latter benefits from a superior initial field and, as a result, has smaller wind speed forecast errors and better forecast performance throughout the 0–120 h forecast period. This comparison underscores that a better initial field improves the PanGu model’s forecast accuracy. Improving data assimilation techniques to construct initial fields with smaller errors could therefore further enhance the forecasting performance of the PanGu model.
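
The first point attributes part of the 0 h difference to interpolating the 0.125° GRAPES_GFS data onto PanGu’s 0.25° grid, but the interpolation scheme is not stated. As a hedged illustration only, the sketch below assumes plain bilinear interpolation on a regular latitude/longitude grid; the function name `bilinear_regrid`, the domain bounds, and the synthetic wind field are hypothetical. It shows one way such errors arise: a wave two 0.125° grid lengths long cannot be represented at 0.25° spacing, and when the coarse points coincide with every other fine point it aliases into a spurious uniform offset.

```python
import numpy as np


def bilinear_regrid(field, src_lat, src_lon, dst_lat, dst_lon):
    """Bilinearly interpolate `field` (shape [len(src_lat), len(src_lon)])
    from one regular latitude/longitude grid onto another.

    Hypothetical helper. Assumes strictly increasing coordinates and a
    destination grid inside the source grid (no extrapolation).
    """
    # Fractional position of every destination coordinate along each source axis.
    fi = np.interp(dst_lat, src_lat, np.arange(len(src_lat)))
    fj = np.interp(dst_lon, src_lon, np.arange(len(src_lon)))
    # Index of the south-west corner of the enclosing source cell.
    i0 = np.clip(np.floor(fi).astype(int), 0, len(src_lat) - 2)
    j0 = np.clip(np.floor(fj).astype(int), 0, len(src_lon) - 2)
    wi = (fi - i0)[:, None]  # weight of the northern neighbor row
    wj = (fj - j0)[None, :]  # weight of the eastern neighbor column
    I0, J0 = np.meshgrid(i0, j0, indexing="ij")
    return ((1 - wi) * (1 - wj) * field[I0, J0]
            + wi * (1 - wj) * field[I0 + 1, J0]
            + (1 - wi) * wj * field[I0, J0 + 1]
            + wi * wj * field[I0 + 1, J0 + 1])


# Hypothetical domain around the Taiwan Strait, for illustration only.
src_lat = np.linspace(22.0, 26.0, 33)    # 0.125° spacing
src_lon = np.linspace(117.0, 122.0, 41)  # 0.125° spacing
dst_lat = np.linspace(22.0, 26.0, 17)    # 0.25° spacing
dst_lon = np.linspace(117.0, 122.0, 21)  # 0.25° spacing

LAT, LON = np.meshgrid(src_lat, src_lon, indexing="ij")
smooth = 8.0 + 0.5 * (LAT - 22.0)                 # synthetic large-scale wind speed (m/s)
ii, jj = np.indices(smooth.shape)
speed_fine = smooth + 0.3 * (-1.0) ** (ii + jj)   # plus a 2-grid-length (0.25°) wave

speed_coarse = bilinear_regrid(speed_fine, src_lat, src_lon, dst_lat, dst_lon)
# The 0.25° grid cannot resolve the 0.25° wave: at the retained points it
# aliases into a spurious, spatially uniform +0.3 m/s offset.
print(np.abs(speed_coarse - smooth[::2, ::2]).max())
```

Bilinear interpolation reproduces any field that varies linearly in latitude and longitude exactly, so the error in this toy comes entirely from the small-scale component that the coarser grid cannot carry. Operational regridding may instead use conservative or higher-order schemes with different error characteristics.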

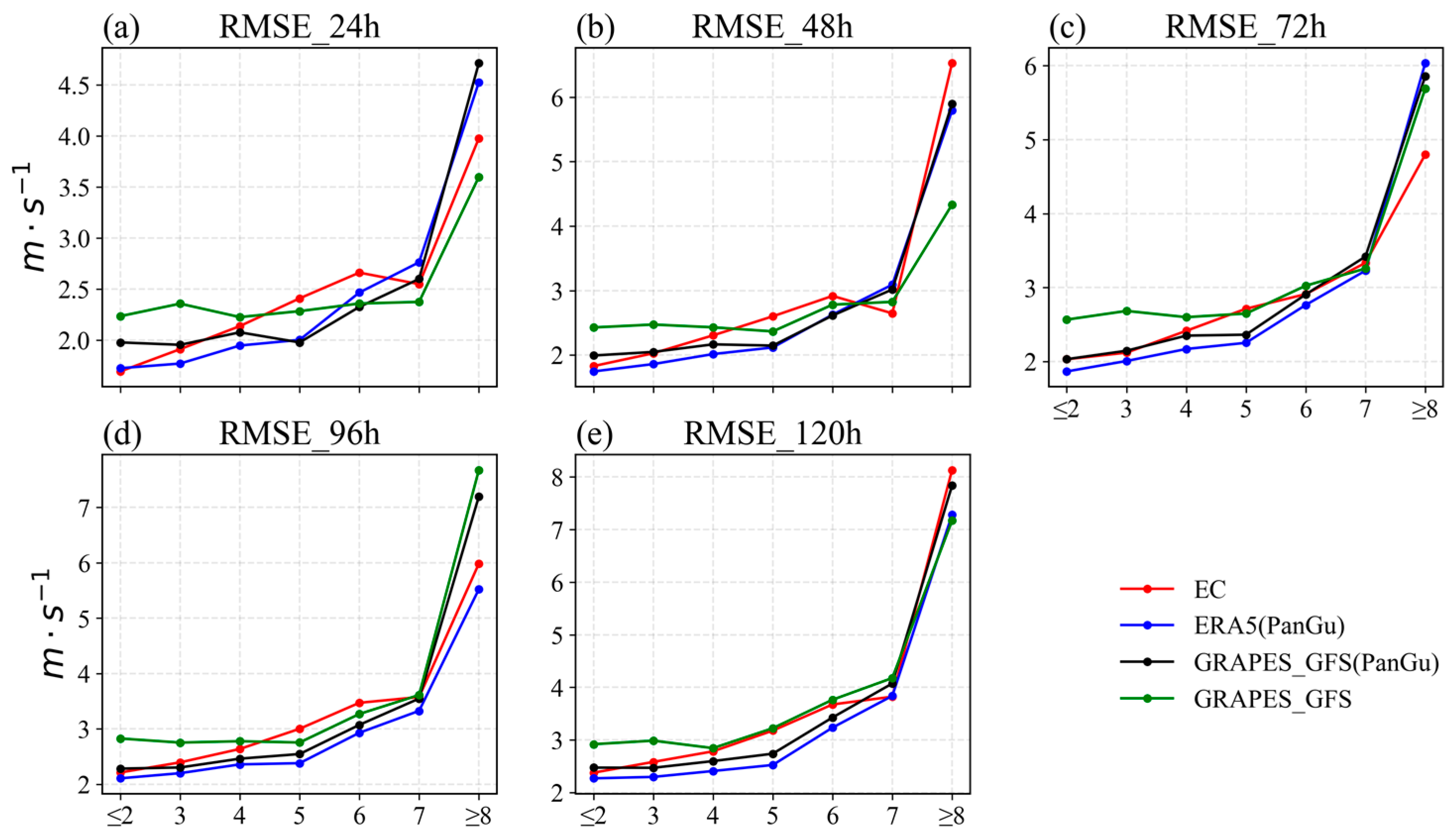

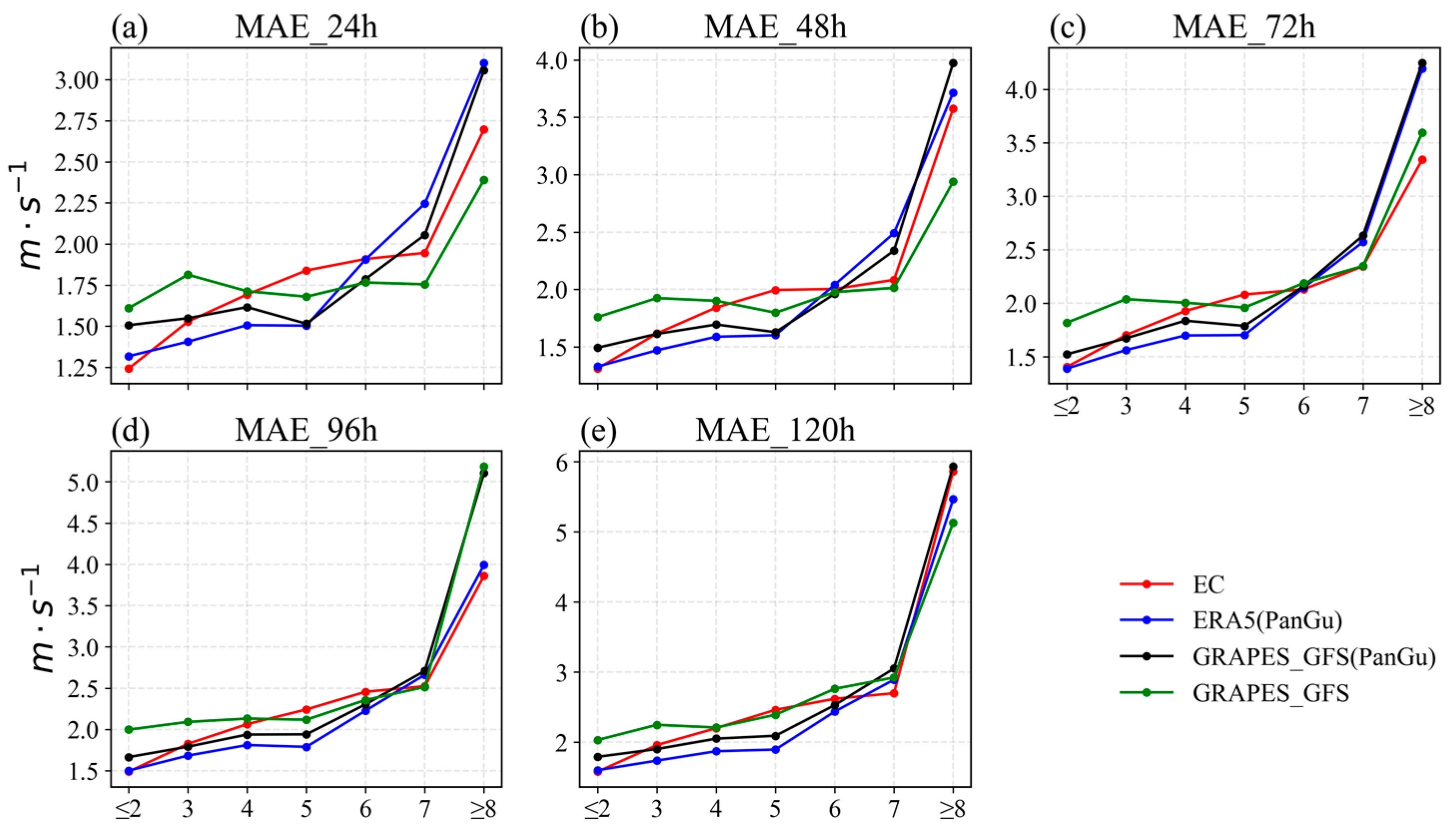

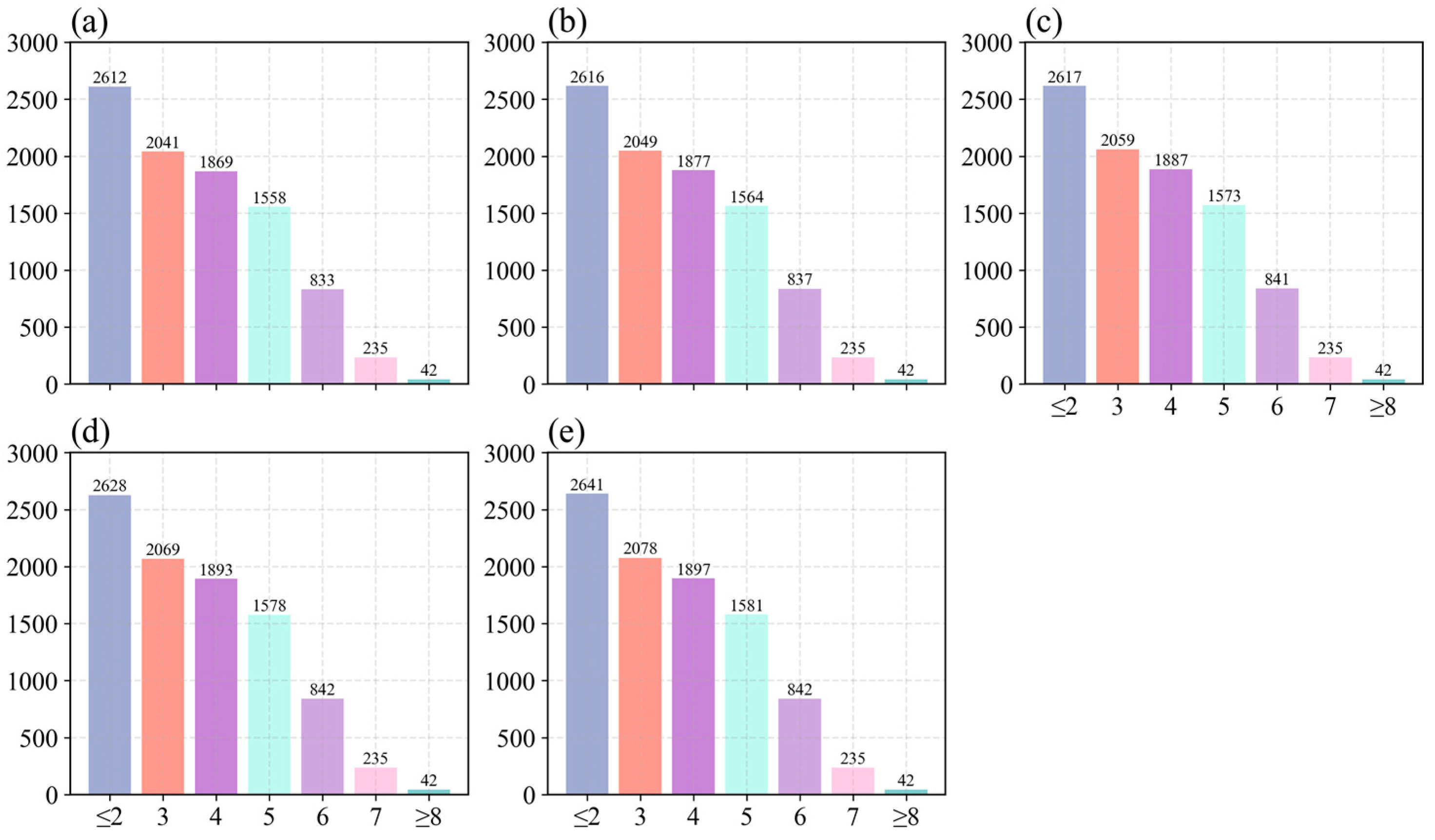

3.2. Wind Speed Level Comparison Evaluation

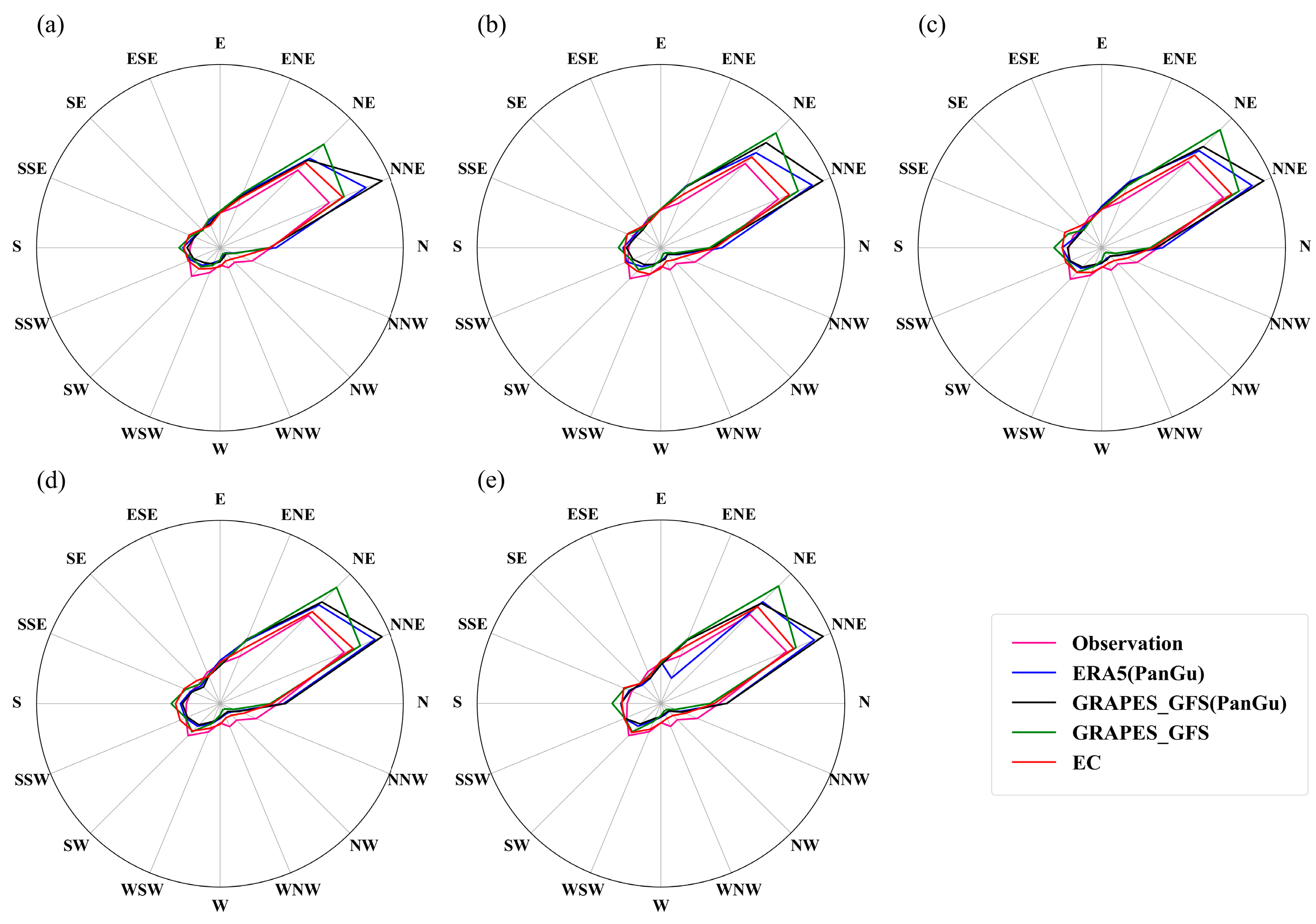

3.3. Wind Direction Comparative Evaluation

4. Conclusions and Discussion

- Performance Comparison:

- Initialization Comparison:

- Although deep learning lacks physical interpretability when applied to weather forecasting [14,37], it was recognized from its inception for its powerful capability to fit nonlinear functions given ample data [38]. Numerical weather prediction fundamentally involves solving a set of partial differential equations (e.g., the thermodynamic and Navier–Stokes equations) from initial atmospheric conditions to simulate various physical processes [12,39]. It is therefore plausible that the PanGu model, trained on 39 years of ERA5 data, learns the nonlinear relationships among atmospheric variables that these equations describe, and it has demonstrated transferability when driven by GRAPES_GFS data.

- Bi et al. [27] identified two main reasons why earlier intelligent weather forecasting models were less accurate than traditional numerical models. (i) Weather forecasting is inherently high-dimensional: atmospheric relationships change rapidly from one pressure level to another, and atmospheric states are distributed non-uniformly across pressure levels. Earlier two-dimensional intelligent forecasting models [40,41,42] struggle to capture these rapid changes between pressure levels. Moreover, many weather processes (e.g., radiation and convection) can only be fully described in three-dimensional space, which two-dimensional models cannot exploit effectively. (ii) Errors accumulate when a model is invoked iteratively. Because intelligent forecasting models lack the constraints imposed by partial differential equations, their iterative errors grow super-linearly with lead time.
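
The compounding in point (ii) can be made concrete with a deliberately simple toy, which is an assumption of this sketch and not part of Bi et al. [27] or the PanGu model: a one-step model whose every call carries a small multiplicative error that the next call inherits. The relative error after n calls is then (1 + ε)^n − 1, which grows faster than linearly in n. The sketch illustrates only this compounding mechanism; it does not model the absence of PDE constraints. The function `compounded_error` and its parameters are hypothetical.

```python
def compounded_error(step_error: float, n_steps: int, growth: float = 1.05) -> float:
    """Relative error after `n_steps` calls of a toy one-step forecast model.

    Toy assumption (not PanGu itself): the true state evolves as
    x_{k+1} = growth * x_k, while the learned model applies
    growth * (1 + step_error), so each call adds a small multiplicative
    error on top of the error already present in its input.
    """
    truth, forecast = 1.0, 1.0
    for _ in range(n_steps):
        truth = growth * truth
        forecast = growth * (1.0 + step_error) * forecast  # erroneous output is fed back in
    return forecast / truth - 1.0


# A 1% error per call, applied 6, 12 and 24 times.
for n in (6, 12, 24):
    print(n, round(compounded_error(0.01, n), 4))
# prints "6 0.0615", "12 0.1268", "24 0.2697"
```

Each doubling of the number of calls more than doubles the accumulated error, which is the super-linear behavior described above; fewer, longer model steps to reach a given lead time would reduce it.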

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

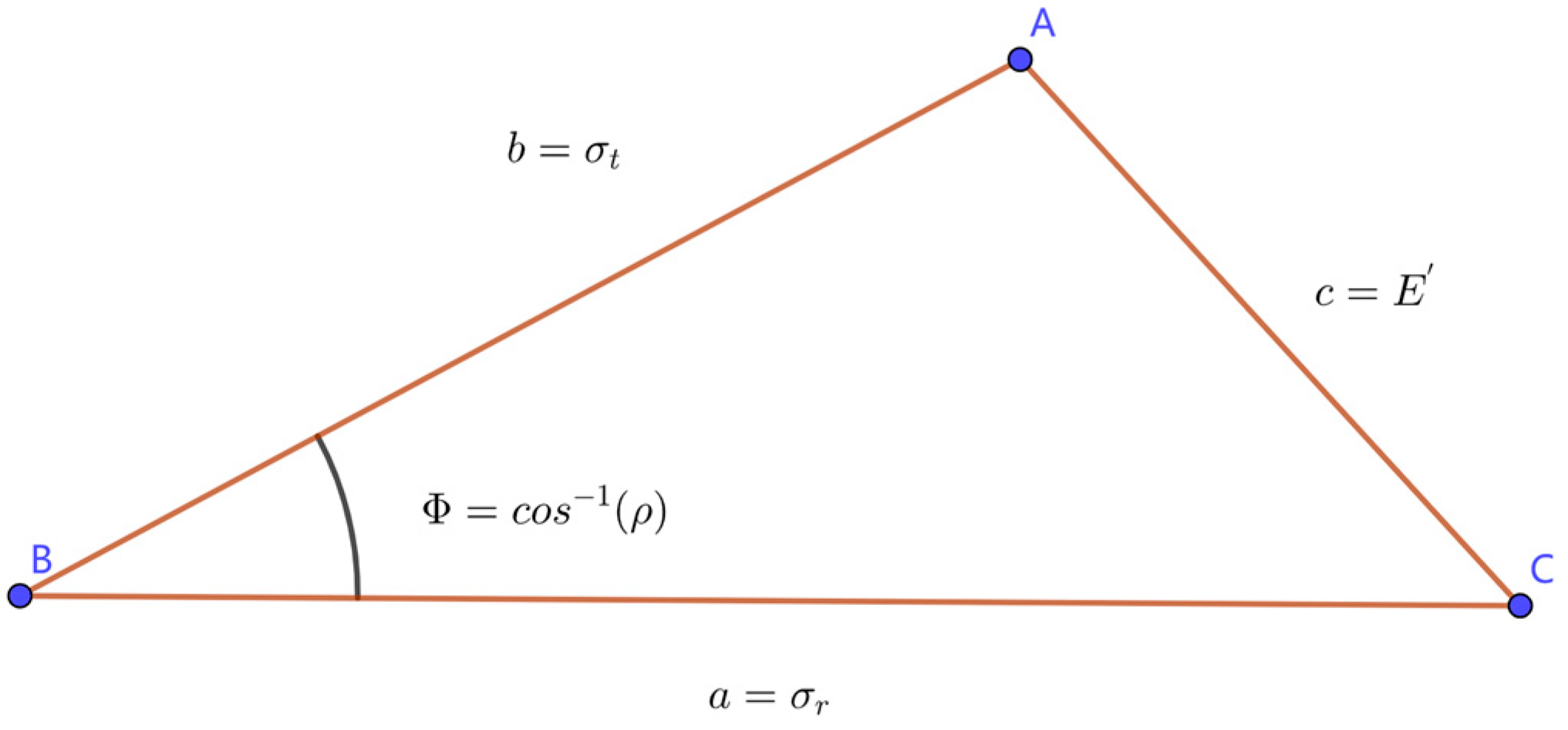

Appendix A. Introduction to Taylor Diagram

References

- Qu, H.; Huang, B.; Zhao, W.; Song, J.; Guo, Y.; Hu, H.; Cao, Y. Comparison and evaluation of HRCLDAS-V1.0 and ERA5 sea-surface wind fields. J. Trop. Meteorol. 2022, 38, 569–579.

- Fan, K.; Xu, Q.; Xu, D.; Xie, R.; Ning, J.; Huang, J. Review of remote sensing of sea surface wind field by space-borne SAR. Prog. Geophys. 2022, 37, 1807–1817.

- Stammer, D.; Wunsch, C.; Giering, R.; Eckert, C.; Heimbach, P.; Marotzke, J.; Adcroft, A.; Hill, C.N.; Marshall, J. Global Ocean Circulation during 1992–1997, Estimated from Ocean Observations and a General Circulation Model. J. Geophys. Res. Ocean 2002, 107, 1-1–1-27.

- Kim, E.; Manuel, L.; Curcic, M.; Chen, S.S.; Phillips, C.; Veers, P. On the Use of Coupled Wind, Wave, and Current Fields in the Simulation of Loads on Bottom-Supported Offshore Wind Turbines during Hurricanes: March 2012–September 2015; NREL/TP--5000-65283; NREL/TP: Golden, CO, USA, 2016.

- Veers, P.; Dykes, K.; Lantz, E.; Barth, S.; Bottasso, C.L.; Carlson, O.; Clifton, A.; Green, J.; Green, P.; Holttinen, H.; et al. Grand Challenges in the Science of Wind Energy. Science 2019, 366, eaau2027.

- Worsnop, R.P.; Lundquist, J.K.; Bryan, G.H.; Damiani, R.; Musial, W. Gusts and Shear Within Hurricane Eyewalls Can Exceed Offshore Wind-Turbine Design Standards. Geophys. Res. Lett. 2017, 44, 6413–6420.

- Li, M.; Wang, H.; Jin, Q. A review on the forecast method of China offshore wind. Mar. Forecast. 2009, 26, 114–120.

- Chen, X.; Hao, Z.; Pan, D.; Huang, S.; Gong, F.; Shi, D. Analysis of temporal and spatial feature of sea surface wind field in China offshore. J. Mar. Sci. 2014, 32, 1–10.

- Zheng, C. Sea surface wind field analysis in the China sea during the last 22 years with CCMP wind field. Meteorol. Disaster Reduct. Res. 2011, 34, 41–46.

- Zhang, J.; Zhu, X. Verification of prediction capability of NWP products and objective forecast methods. Meteorol. Mon. 2006, 32, 58–63.

- Yang, C.; Zheng, Y.; Lin, J.; Li, F. Numerical model output validation and assessment. J. Meteorol. Res. Appl. 2008, 29, 32–37.

- Bauer, P.; Thorpe, A.; Brunet, G. The Quiet Revolution of Numerical Weather Prediction. Nature 2015, 525, 47–55.

- de Bezenac, E.; Pajot, A.; Gallinari, P. Deep Learning for Physical Processes: Incorporating Prior Scientific Knowledge. J. Stat. Mech. Theory Exp. 2019, 2019, 124009.

- Reichstein, M.; Camps-Valls, G.; Stevens, B.; Jung, M.; Denzler, J.; Carvalhais, N.; Prabhat. Deep Learning and Process Understanding for Data-Driven Earth System Science. Nature 2019, 566, 195–204.

- Lydia, M.; Kumar, G.E.P. Deep Learning Algorithms for Wind Forecasting: An Overview. In Artificial Intelligence for Renewable Energy Systems; Vyas, A.K., Balamurugan, S., Hiran, K.K., Dhiman, H.S., Eds.; Wiley: Hoboken, NJ, USA, 2022; pp. 129–145.

- Lin, Z.; Liu, X. Wind Power Forecasting of an Offshore Wind Turbine Based on High-Frequency SCADA Data and Deep Learning Neural Network. Energy 2020, 201, 117693.

- Cheng, L.; Zang, H.; Ding, T.; Sun, R.; Wang, M.; Wei, Z.; Sun, G. Ensemble Recurrent Neural Network Based Probabilistic Wind Speed Forecasting Approach. Energies 2018, 11, 1958.

- Wang, G.; Wang, X.; Hou, M.; Qi, Y.; Song, J.; Liu, K.; Wu, X.; Bai, Z. Research on application of LSTM deep neural network on historical observation data and reanalysis data for sea surface wind speed forecasting. Haiyang Xuebao 2020, 42, 67–77.

- Liu, X.; Zhang, H.; Kong, X.; Lee, K.Y. Wind Speed Forecasting Using Deep Neural Network with Feature Selection. Neurocomputing 2020, 397, 393–403.

- Ju, Y.; Sun, G.; Chen, Q.; Zhang, M.; Zhu, H.; Rehman, M.U. A Model Combining Convolutional Neural Network and LightGBM Algorithm for Ultra-Short-Term Wind Power Forecasting. IEEE Access 2019, 7, 28309–28318.

- Lam, R.; Sanchez-Gonzalez, A.; Willson, M.; Wirnsberger, P.; Fortunato, M.; Alet, F.; Ravuri, S.; Ewalds, T.; Eaton-Rosen, Z.; Hu, W.; et al. Learning Skillful Medium-Range Global Weather Forecasting. Science 2023, 382, 1416–1421.

- Kurth, T.; Subramanian, S.; Harrington, P.; Pathak, J.; Mardani, M.; Hall, D.; Miele, A.; Kashinath, K.; Anandkumar, A. FourCastNet: Accelerating Global High-Resolution Weather Forecasting Using Adaptive Fourier Neural Operators. In Proceedings of the Platform for Advanced Scientific Computing Conference, Davos, Switzerland, 26–28 June 2023; ACM: Davos, Switzerland, 2023; pp. 1–11.

- Zhang, Y.; Long, M.; Chen, K.; Xing, L.; Jin, R.; Jordan, M.I.; Wang, J. Skilful Nowcasting of Extreme Precipitation with NowcastNet. Nature 2023, 619, 526–532.

- Hu, Y.; Chen, L.; Wang, Z.; Li, H. SwinVRNN: A Data-Driven Ensemble Forecasting Model via Learned Distribution Perturbation. J. Adv. Model. Earth Syst. 2023, 15, e2022MS003211.

- Chen, L.; Zhong, X.; Zhang, F.; Cheng, Y.; Xu, Y.; Qi, Y.; Li, H. FuXi: A Cascade Machine Learning Forecasting System for 15-Day Global Weather Forecast. npj Clim. Atmos. Sci. 2023, 6, 190.

- Chen, K.; Han, T.; Gong, J.; Bai, L.; Ling, F.; Luo, J.-J.; Chen, X.; Ma, L.; Zhang, T.; Su, R.; et al. FengWu: Pushing the Skillful Global Medium-Range Weather Forecast beyond 10 Days Lead. arXiv 2023, arXiv:2304.02948.

- Bi, K.; Xie, L.; Zhang, H.; Chen, X.; Gu, X.; Tian, Q. Accurate Medium-Range Global Weather Forecasting with 3D Neural Networks. Nature 2023, 619, 533–538.

- Zhang, Y. Evaluation of three reanalysis surface wind products in Taiwan Strait. J. Fish. Res. 2020, 42, 556–571.

- Pan, W.; Lin, Y. Spatial feature and seasonal variability characteristics of sea surface wind field in Taiwan Strait from 2007 to 2017. J. Trop. Meteorol. 2019, 35, 296–303.

- Han, Y.; Zhou, L.; Zhao, Y.; Jiang, H.; Yu, D. Evaluation of three sea surface wind data sets in Luzon Strait. Mar. Forecast. 2019, 36, 44–52.

- Kalverla, P.; Steeneveld, G.; Ronda, R.; Holtslag, A.A. Evaluation of Three Mainstream Numerical Weather Prediction Models with Observations from Meteorological Mast IJmuiden at the North Sea. Wind Energy 2019, 22, 34–48.

- Wang, Z.; Xu, X.; Xiong, N.; Yang, L.T.; Zhao, W. GPU Acceleration for GRAPES Meteorological Model. In Proceedings of the 2011 IEEE International Conference on High Performance Computing and Communications, Banff, AB, Canada, 2–4 September 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 365–372.

- Pappenberger, F.; Cloke, H.L.; Persson, A.; Demeritt, D. HESS Opinions “On forecast (in)consistency in a hydro-meteorological chain: Curse or blessing?”. Hydrol. Earth Syst. Sci. 2011, 15, 2391–2400.

- Case, J.L.; Wheeler, M.M.; Merceret, F.J. Final Report on Land-Breeze Forecasting; NASA Technical Reports Server. Available online: https://kscweather.ksc.nasa.gov/amu/files/final-reports/landbreeze.pdf (accessed on 1 June 2024).

- Weyn, J.A.; Durran, D.R.; Caruana, R. Can Machines Learn to Predict Weather? Using Deep Learning to Predict Gridded 500-hPa Geopotential Height From Historical Weather Data. J. Adv. Model. Earth Syst. 2019, 11, 2680–2693.

- Rasp, S.; Thuerey, N. Data-Driven Medium-Range Weather Prediction with a Resnet Pretrained on Climate Simulations: A New Model for WeatherBench. J. Adv. Model. Earth Syst. 2021, 13, e2020MS002405.

- Schultz, M.G.; Betancourt, C.; Gong, B.; Kleinert, F.; Langguth, M.; Leufen, L.H.; Mozaffari, A.; Stadtler, S. Can Deep Learning Beat Numerical Weather Prediction? Philos. Trans. R. Soc. A-Math. Phys. Eng. Sci. 2021, 379, 20200097.

- Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning Internal Representations by Error Propagation. In Parallel Distributed Processing. 1: Foundations; Rumelhart, D.E., McClelland, J., Eds.; MIT Press: Cambridge, MA, USA, 1999; ISBN 978-0-262-18120-4.

- Lynch, P. The Origins of Computer Weather Prediction and Climate Modeling. J. Comput. Phys. 2008, 227, 3431–3444.

- Rasp, S.; Dueben, P.D.; Scher, S.; Weyn, J.A.; Mouatadid, S.; Thuerey, N. WeatherBench: A Benchmark Data Set for Data-Driven Weather Forecasting. J. Adv. Model. Earth Syst. 2020, 12, e2020MS002203.

- Weyn, J.A.; Durran, D.R.; Caruana, R.; Cresswell-Clay, N. Sub-Seasonal Forecasting with a Large Ensemble of Deep-Learning Weather Prediction Models. J. Adv. Model. Earth Syst. 2021, 13, e2021MS002502.

- Pathak, J.; Subramanian, S.; Harrington, P.; Raja, S.; Chattopadhyay, A.; Mardani, M.; Kurth, T.; Hall, D.; Li, Z.; Azizzadenesheli, K.; et al. FourCastNet: A Global Data-Driven High-Resolution Weather Model Using Adaptive Fourier Neural Operators. arXiv 2022, arXiv:2202.11214.

- Taylor, K.E. Summarizing Multiple Aspects of Model Performance in a Single Diagram. J. Geophys. Res. 2001, 106, 7183–7192.

| Experiment | 24 h | 48 h | 72 h | 96 h | 120 h |
|---|---|---|---|---|---|
| GRAPES_GFS (PanGu) | 0.975 | 0.973 | 0.971 | 0.974 | 0.970 |
| ERA5 (PanGu) | 0.948 | 0.944 | 0.953 | 0.960 | 0.957 |
| GRAPES_GFS | 1.076 | 1.068 | 1.067 | 1.058 | 1.060 |
| EC | 1.093 | 1.105 | 1.079 | 1.081 | 1.085 |
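
The table’s caption is not preserved in this excerpt, so its metric and units are not restated here. Assuming only that the four rows are directly comparable at each lead time, the two pairings drawn in Section 3.1 (GRAPES_GFS (PanGu) against GRAPES_GFS, and ERA5 (PanGu) against EC) can be summarized as relative differences. This is a plain-Python sketch; `TABLE`, `LEADS_H` and `relative_difference` are illustrative names, and the numbers are transcribed from the table above.

```python
LEADS_H = [24, 48, 72, 96, 120]

# Values transcribed from the table above. The metric and units are not
# restated in this excerpt, so only relative differences are computed.
TABLE = {
    "GRAPES_GFS (PanGu)": [0.975, 0.973, 0.971, 0.974, 0.970],
    "ERA5 (PanGu)": [0.948, 0.944, 0.953, 0.960, 0.957],
    "GRAPES_GFS": [1.076, 1.068, 1.067, 1.058, 1.060],
    "EC": [1.093, 1.105, 1.079, 1.081, 1.085],
}


def relative_difference(row: str, reference: str) -> list[float]:
    """Percent difference of `row` relative to `reference` at each lead time."""
    return [100.0 * (x - r) / r for x, r in zip(TABLE[row], TABLE[reference])]


# Each PanGu experiment versus the conventional NWP forecast it is compared with.
for row, ref in [("GRAPES_GFS (PanGu)", "GRAPES_GFS"), ("ERA5 (PanGu)", "EC")]:
    diffs = relative_difference(row, ref)
    print(f"{row} vs {ref}: " + ", ".join(f"{h} h {d:+.1f}%" for h, d in zip(LEADS_H, diffs)))
```

Every entry comes out negative: both PanGu rows are lower than their NWP counterparts at all five lead times, by about 8 to 9% for the GRAPES_GFS pair and about 11 to 15% for the ERA5/EC pair.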

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yi, J.; Li, X.; Zhang, Y.; Yao, J.; Qu, H.; Yi, K. Evaluation of Near-Taiwan Strait Sea Surface Wind Forecast Based on PanGu Weather Prediction Model. Atmosphere 2024, 15, 977. https://doi.org/10.3390/atmos15080977