The Applications of AI Tools in the Fields of Weather and Climate—Selected Examples

Abstract

1. Introduction

2. Materials and Methods

2.1. AI Tools Used in This Research

- ChatGPT 4.0, 4.o, o1, and o3 (both mini and mini-high) [20] by OpenAI [21]. ChatGPT is a conversational AI model developed by OpenAI that processes text, data files, and images. The earlier model, ChatGPT 3.5, which achieved only 30–40% accuracy in answering scientific queries [22], is no longer available.

- DataAnalyst [32]. DataAnalyst is integrated within ChatGPT’s toolset (often referred to as Advanced Data Analysis or the Code Interpreter) and is designed to help analyze and visualize data across various file types.

- The tools selected in this study—ChatGPT, Gemini, Claude, and Copilot—were chosen based on their high accessibility and popularity at the time of data collection (February–April 2024). These models were publicly available via user-friendly interfaces and were widely adopted across general-purpose applications, including scientific, medical, and educational use cases [42,43,44].

2.2. The Testing Procedure of AI Tools

- All AI tools were provided with identical prompts and input data (including data files and images).

- A diverse set of tasks was administered, comprising:

  - Cloud recognition using images sourced from both the WMO Cloud Atlas and a proprietary cloud database;

  - Supplementing gaps in literature reviews concerning humid heat waves;

  - Generating maps from a provided data file;

  - Comparing and interpreting two maps (provided as images); and

  - Retrieving archival numerical data from line graphs uploaded as images.

- Each outcome was evaluated on a subjective 0–5 scale in terms of accuracy, precision, and overall correctness, where 0 denotes an entirely incorrect response and 5 a fully correct one. For longer responses, one point was added for each correct component; for shorter responses, one point was deducted for every error. For example, if the correct answer was Cumulus humilis and Altocumulus stratiformis translucidus perlucidus but the response provided was “Cirrocumulus stratiformis undulatus, Cumulus humilis”, the score was 3 (one point each for “stratiformis”, “cumulus”, and “humilis”). In another case, if the correct answer was Stratus undulatus and the response was Stratus nebulosus undulatus, one point was deducted for the extraneous “nebulosus”, resulting in a score of 4.

- The research was conducted between February 2024 and February 2025. During this period, ChatGPT 4.0 was upgraded to ChatGPT 4.o, and ChatGPT o1 and o3 were released, while Google Bard was rebranded as Gemini Advanced (initially version 1.5 and later 2.0). All of these systems were used in their paid versions. At the outset, Consensus ranked first in the “Research and Analysis” category; by the conclusion of the study, SciSpace had risen to the top position, with Consensus ranking second. As of March 2025, ScholarGPT holds the top position. Notably, several LLMs that previously did not support image or data file inputs have since been updated to include these functionalities.
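The two scoring rules described in this section can be illustrated with a minimal sketch. It is not the procedure used in the study, which was a subjective expert assessment; it only reproduces the two worked examples. The function names (`terms`, `additive_score`, `deductive_score`) are hypothetical, and the choice to count both extraneous and missing terms as errors in the deductive rule is an assumption, since the text gives only an example with an extraneous term.

```python
import re

def terms(name):
    """Split a cloud description into a set of lowercase Latin terms, ignoring 'and'."""
    return {t for t in re.findall(r"[a-z]+", name.lower()) if t != "and"}

def additive_score(response, correct, max_score=5):
    """Longer responses: one point per term of the correct answer found in the response."""
    return min(max_score, len(terms(response) & terms(correct)))

def deductive_score(response, correct, max_score=5):
    """Shorter responses: start at the maximum and deduct one point per error.

    Assumption: an error is any extraneous or missing term.
    """
    errors = len(terms(response) ^ terms(correct))
    return max(0, max_score - errors)

# Worked examples from the text.
print(additive_score(
    "Cirrocumulus stratiformis undulatus, Cumulus humilis",
    "Cumulus humilis and Altocumulus stratiformis translucidus perlucidus",
))  # 3
print(deductive_score("Stratus nebulosus undulatus", "Stratus undulatus"))  # 4
```

Which rule applies to a given response (the boundary between "longer" and "shorter") is left to the caller, because the text does not define a threshold.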

3. Results

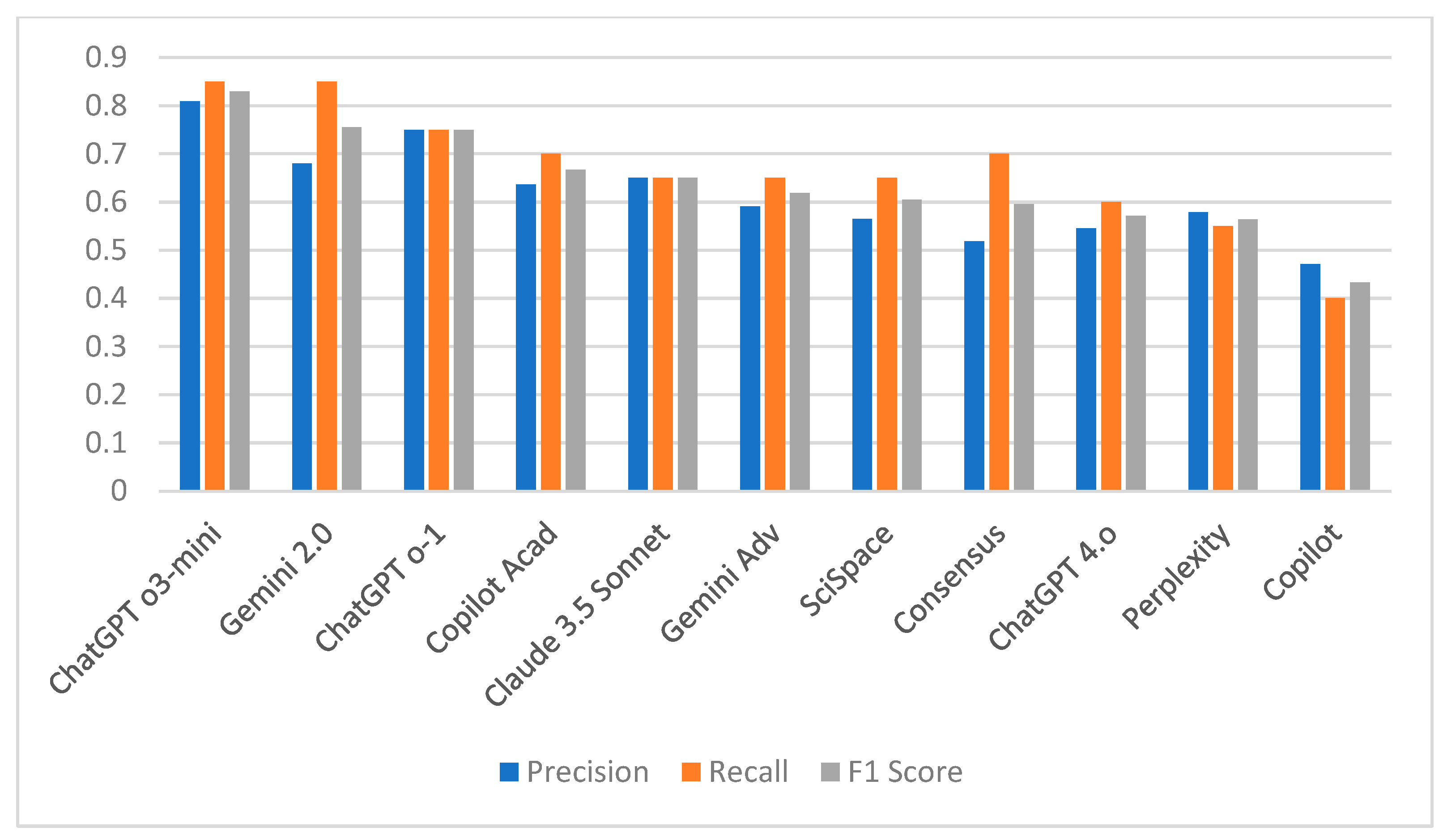

3.1. Cloud Recognition

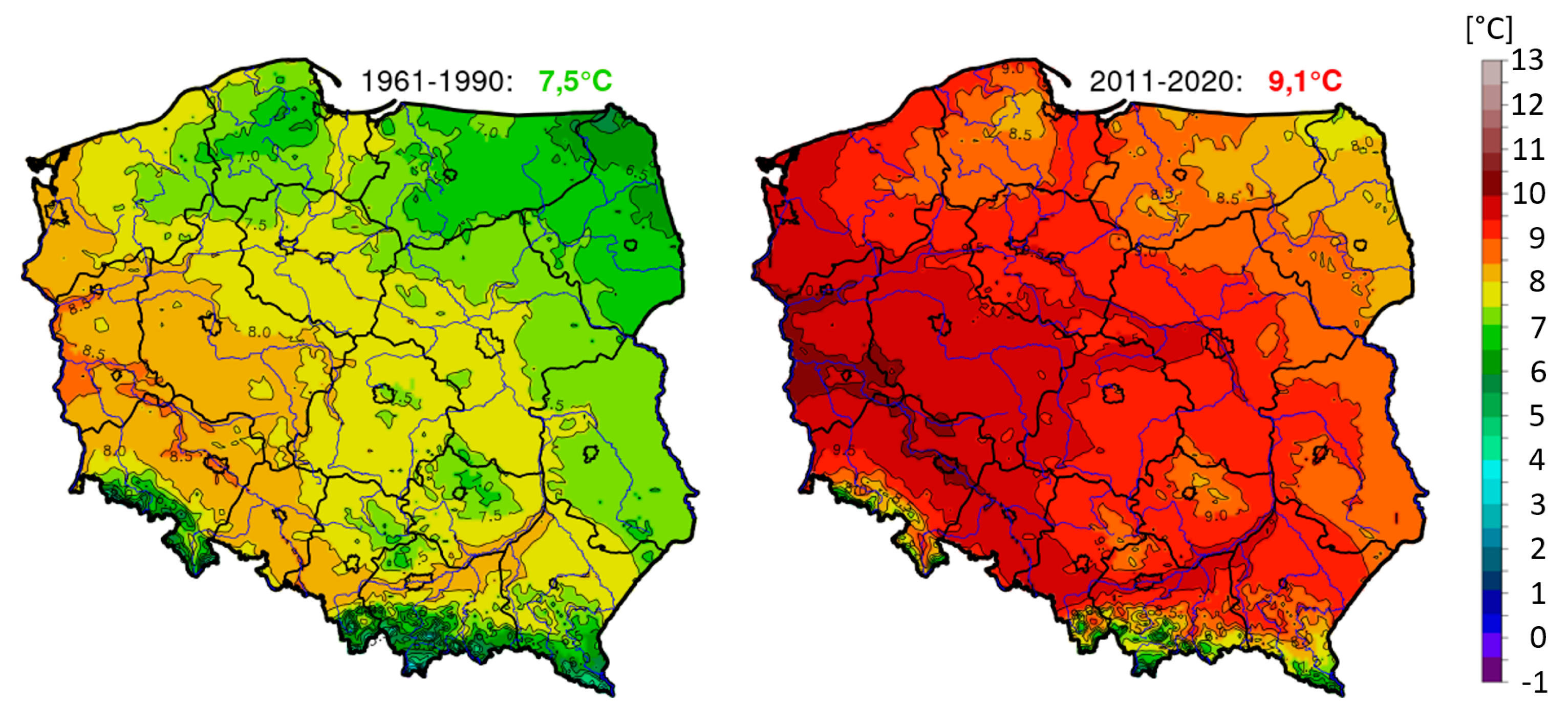

3.2. Map Interpretation

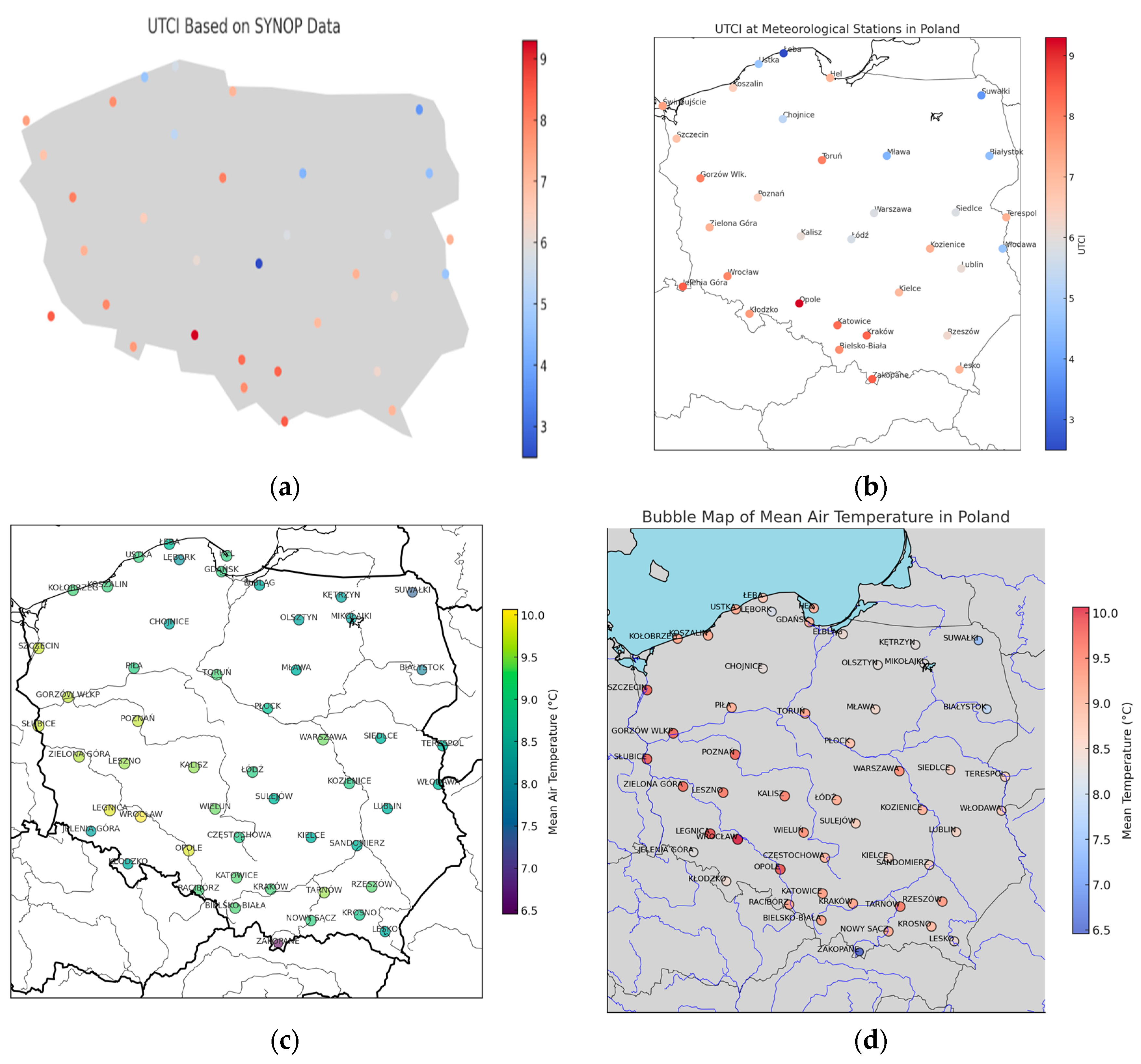

3.3. Map Creation

- Gemini 2.0 Flash (the only version at that time accepting file uploads rather than solely images) failed to produce a coherent map; instead, it generated an image in which the station names were densely aggregated and overlapping.

- ChatGPT o3-mini-high generated Python code but was unable to render an actual map.

- DataAnalyst reported that it could not detect the file, despite the file being clearly visible in the preview.

- Claude 3.5 Sonnet (operating in experimental mode) produced a grey, irregular shape with only a few cities labeled and lacking an accompanying legend.

- Consensus generated an output resembling a scatterplot that used “x” markers instead of bubbles; while the color coding and station positions were accurate, the map appeared disproportionately stretched in the vertical dimension.

- Perplexity was incapable of producing any graphical output.
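For orientation, the kind of bubble map requested in this task can be sketched in a few lines of Python. This is an illustrative sketch only, not the code produced by any of the tested tools. It assumes matplotlib is installed, uses five rows copied from the station table in Appendix A, and treats the MEAN column as a temperature in °C, which is an assumption. The helper names (`map_aspect`, `bubble_sizes`, `plot_station_map`) and the output file name are hypothetical.

```python
import math

# A few rows copied from the station table in Appendix A: (station, lon, lat, MEAN).
STATIONS = [
    ("SUWAŁKI", 22.94887812, 54.13084797, 7.5),
    ("WARSZAWA", 20.96109105, 52.16284951, 9.4),
    ("WROCŁAW", 16.89989337, 51.10319256, 10.1),
    ("ZAKOPANE", 19.96031756, 49.29382054, 6.5),
    ("SZCZECIN", 14.62272692, 53.39526877, 9.8),
]

def map_aspect(lats):
    """Aspect ratio (y-unit / x-unit) that keeps a plain lon/lat plot from looking distorted."""
    mid_lat = (min(lats) + max(lats)) / 2
    return 1 / math.cos(math.radians(mid_lat))

def bubble_sizes(values, smallest=40, largest=400):
    """Scale values linearly to marker areas between `smallest` and `largest`."""
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0  # avoid division by zero when all values are equal
    return [smallest + (v - lo) / span * (largest - smallest) for v in values]

def plot_station_map(stations, path="station_map.png"):
    """Draw a labeled bubble map and save it as a PNG; returns the file path."""
    import matplotlib
    matplotlib.use("Agg")  # render to a file, no display needed
    import matplotlib.pyplot as plt

    names, lons, lats, means = zip(*stations)
    fig, ax = plt.subplots(figsize=(7, 6))
    points = ax.scatter(lons, lats, s=bubble_sizes(means), c=means,
                        cmap="coolwarm", edgecolors="black")
    for name, lon, lat in zip(names, lons, lats):
        ax.annotate(name, (lon, lat), xytext=(4, 4),
                    textcoords="offset points", fontsize=7)
    ax.set_aspect(map_aspect(lats))
    ax.set_xlabel("Longitude (°E)")
    ax.set_ylabel("Latitude (°N)")
    fig.colorbar(points, ax=ax, label="MEAN (°C, assumed unit)")
    fig.savefig(path, dpi=150, bbox_inches="tight")
    plt.close(fig)
    return path
```

Calling `plot_station_map(STATIONS)` writes the figure to disk. The aspect correction and the label offsets address two of the problems noted above (distorted proportions and overlapping station names) only in a basic way; a production map would normally use a proper map projection and country borders.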

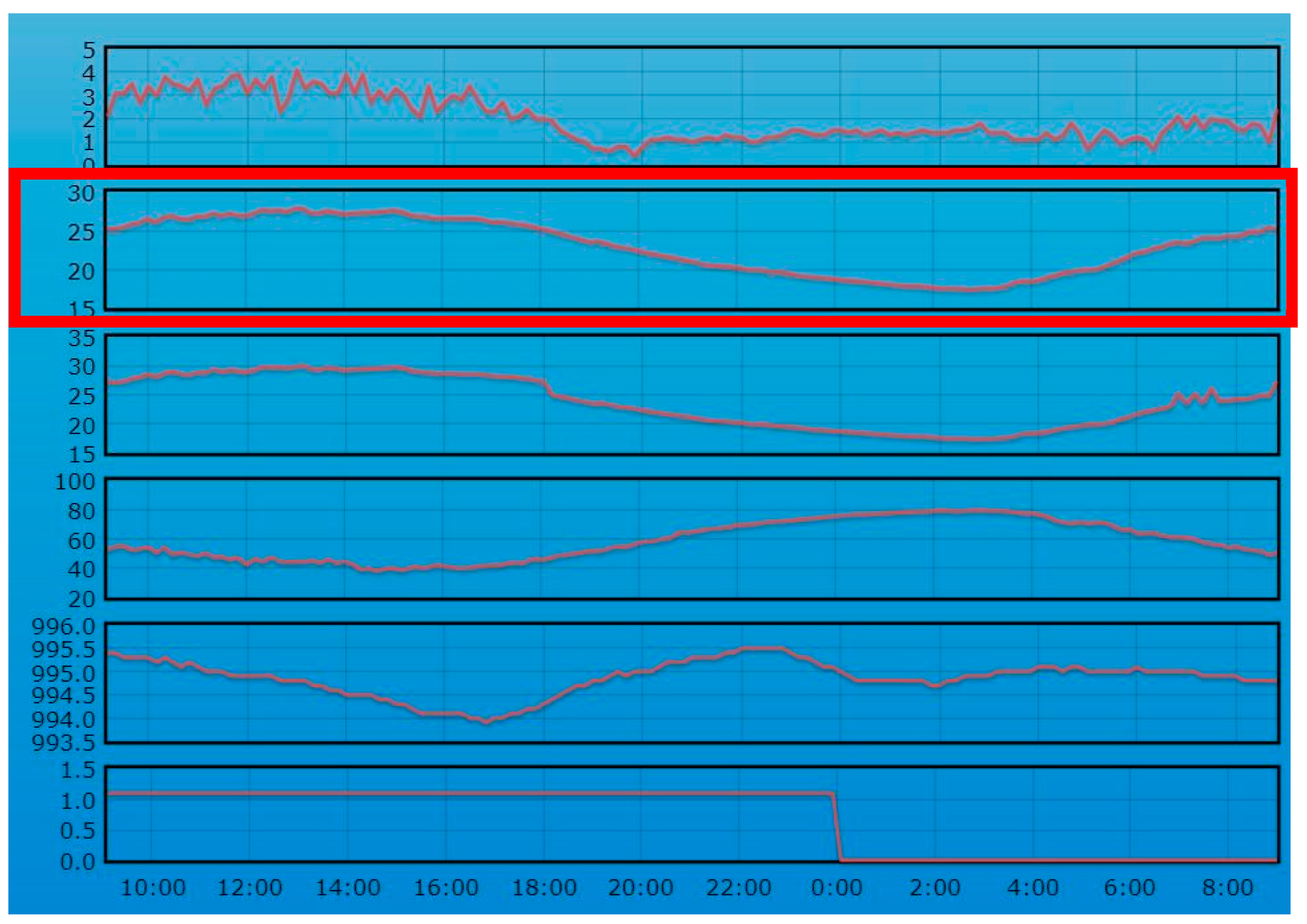

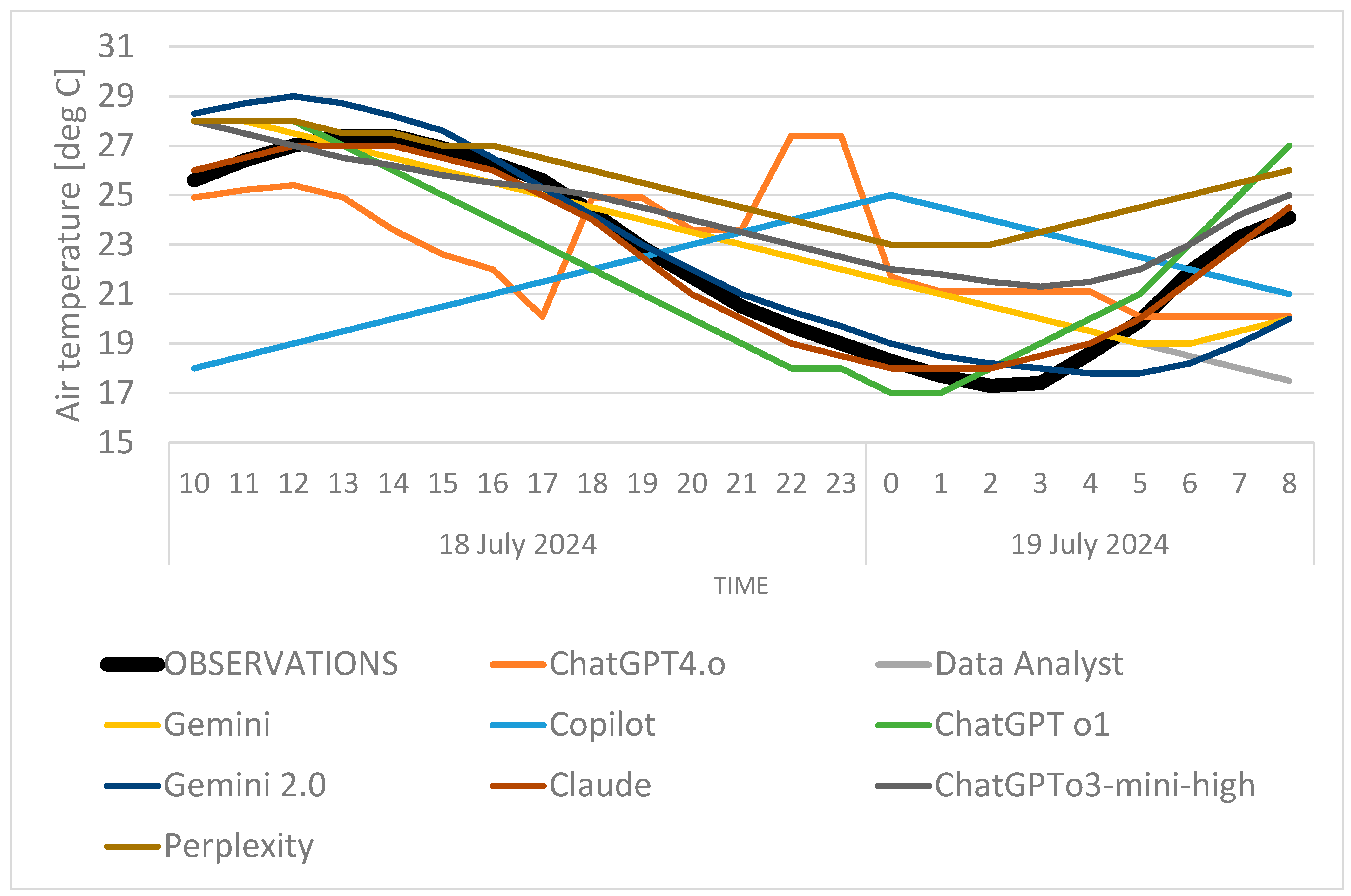

3.4. Archive Data Retrieval

3.5. Filling the Gaps in Literature Review

4. Discussion

5. Summary and Conclusions

- Cloud recognition: Among the tested models, ChatGPT o3-mini achieved the highest performance for both internet-sourced and privately collected cloud images, with an average score approaching 4 out of 5. ChatGPT o1 and Gemini Advanced 2.0 ranked second, while early versions of Copilot performed worst, although its Academic version showed marked improvement (average score increasing from 1.8 to 3.2). Common errors across models included the misclassification of visually similar cloud types and occasional confusion between species and genera. Notably, LLMs generally performed better with internet-sourced images than with those from a private collection.

- Map creation from the data: ChatGPT 4.o emerged as the most efficient tool for generating maps from Excel datasets. Although its initial map was simple, subsequent iterations added details such as rivers, water bodies, and clearly defined country borders, improving the overall quality. In contrast, neither Gemini nor Copilot managed to generate maps effectively from the given coordinates and values. ScholarGPT produced maps comparable to those of ChatGPT 4.o.

- Map interpretation: The highest precision was initially demonstrated by Bard (no longer available), with satisfactory performance subsequently observed for Claude 3.5 Sonnet and SciSpace. Both versions of ChatGPT (4.0 and 4.o), along with Consensus and DataAnalyst, delivered accurate interpretations of temperature patterns, although the level of descriptive detail varied. In general, all models correctly identified the trends and regional differences, even if the granularity of their descriptions differed.

- Filling the gaps in literature review: When tasked with retrieving and summarizing significant scientific publications on humid heat waves, Consensus and ChatGPT o1-preview excelled by providing five highly relevant papers, complete with succinct summaries and full bibliographies. ChatGPT 4.o and ChatGPT o3-mini-high followed, whereas other tools occasionally returned incomplete lists or non-peer-reviewed sources. Compared to the early version of ChatGPT 3.5, which frequently hallucinated details [17], the current models consistently delivered accurate bibliographic information, representing a substantial improvement.

- Archive data retrieval: All evaluated AI tools struggled to extract accurate numerical data from graphical representations. Claude 3.5 Sonnet produced the closest results, with an average deviation of approximately 0.4 °C; Gemini 2.0 and ChatGPT o1 followed, with errors of 1.3 °C and 1.5 °C, respectively. This limitation underscores a significant gap in current LLM capabilities regarding the precise extraction of data from simple line graphs.

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| AI Tool | Result (Accuracy, Correctness, Precision, etc.) | Scores |

|---|---|---|

| ChatGPT4.o | The two maps displayed illustrate the average annual air temperature across Poland for two different time periods: 1961–1990 and 2011–2020. The left map, representing the period 1961–1990, shows a predominantly cooler climate, with average temperatures ranging from 7.0 °C to 8.5 °C. This cooler trend is especially evident in the northern and northeastern regions, where temperatures are as low as 7.0 °C. In contrast, the right map, representing the period 2011–2020, shows a significant warming across the entire country, with average temperatures rising to between 8.5 °C and 10.0 °C or higher. The most noticeable increase in temperature occurs in the western and southwestern regions, where temperatures now exceed 9.0 °C, and in some areas, even approach 11.0 °C. This stark contrast between the two periods underscores the rapid warming that Poland has experienced over recent decades, reflecting broader global climate change trends. The shift towards warmer temperatures has significant implications for Poland’s climate, agriculture, and ecosystems. | 4 |

| ChatGPT4.0 | The two maps in the image represent the average annual air temperature in Poland across two different periods, 1961–1990 and 2011–2020, as indicated by the labels. On the left, the map for 1961–1990 shows a cooler climate, with an average temperature of 7.5 °C. This is visualized by a dominance of greens and yellows, indicating cooler temperatures. On the right, the map for 2011–2020 illustrates a significant warming with an average temperature of 9.1 °C. This period is represented by warmer colors like reds and deep oranges, indicating higher temperatures. The shift from predominantly green and yellow to red and orange between the two maps highlights a clear trend of rising temperatures over the fifty-year span. This comparison suggests a notable increase in temperatures, possibly reflecting broader climate change trends affecting the region. | 4 |

| Bard (March 2024) | As can be seen from the maps, the average annual temperature in Poland has increased by approximately 1.6 °C over the past 30 years. This warming trend is most pronounced in the western and central parts of the country, where the average temperature increase has been greater than 2 °C. The warming trend is evident in the distribution of temperature ranges across the two maps. In the 1961–1990 period, the majority of Poland (approximately 70%) had an average annual temperature between 6 °C and 8 °C. However, in the 2011–2020 period, this temperature range only accounted for approximately 40% of the country. In contrast, the percentage of Poland with an average annual temperature above 8 °C has increased from 20% to 40% over the same period. The warming trend in Poland is consistent with the global trend of climate change. The Intergovernmental Panel on Climate Change (IPCC) has concluded that it is extremely likely that human activities caused more than half of the observed increase in global mean surface temperature from 1951 to 2010. The warming trend in Poland is expected to continue in the future. The IPCC’s Sixth Assessment Report, released in 2022, projects that global average surface temperatures are expected to rise by 0.9 to 3.6 degrees Celsius (1.6 to 6.5 degrees Fahrenheit) by the end of the 21st century. This rise is expected to be unevenly distributed across the globe, with some regions experiencing more warming than others. The projected temperature rise in Poland will have a range of impacts on the environment, economy, and society. These impacts include: | 5 |

| Gemini Advanced (August 2024) | The two maps visually depict the change in average annual air temperature in Poland between two time periods: 1961–1990 and 2011–2020. The map on the left, representing the earlier period, shows a predominance of cooler colors, particularly yellow and light green, indicating lower average temperatures. In contrast, the map on the right, depicting the more recent period, is dominated by warmer colors, notably orange and red, signifying a significant increase in average temperatures across Poland. This stark color shift between the two maps clearly illustrates a warming trend in the country’s climate over the three decades | 3 |

| DataAnalyst | These two maps present a striking visual comparison of the average annual air temperature in Poland across two different time periods: 1961–1990 and 2011–2020. The left map, representing the earlier period, shows a cooler color palette, dominated by greens and yellows, indicating average temperatures mainly between 7.5 °C and 8.5 °C, with the overall average for the period marked at 7.5 °C. In contrast, the right map, corresponding to the later period, is illustrated with a much warmer color scheme, with reds and deep oranges predominating, showing average temperatures frequently above 9 °C, some areas even nearing 10 °C, with the period average notably higher at 9.1 °C. This comparative visualization underscores a significant increase in average temperatures over the decades, highlighting a possible warming trend in Poland’s climate. | 4 |

| Copilot | The two maps provided depict the average annual air temperature in Poland for two distinct periods: 1961–1990 and 2011–2020. The map for the period 1961–1990 is predominantly green and yellow, indicating a cooler climate with an average temperature of 7.5 °C. In contrast, the map for the period 2011–2020 is dominated by red hues, signifying a warmer climate with an increased average temperature of 9.1 °C. This comparison highlights a noticeable increase in temperature over the decades, which could be indicative of climate change impacts. The color scale on the right side of the maps provides a visual guide for interpreting the temperatures represented by each color, ranging from −1 °C (purple) to 13 °C (dark red). | 3 |

| ChatGPT o3-mini-high | These two maps illustrate a clear increase in the average annual air temperature in Poland when comparing the period 1961–1990 (left) with 2011–2020 (right). The left map, representing 1961–1990, predominantly features cooler shades of green and yellow, indicating average temperatures around 7.5 °C. In contrast, the right map for 2011–2020 is dominated by warmer hues of red and orange, reflecting an average temperature closer to 9.1 °C. The overall shift from greens and yellows to reds and oranges demonstrates that nearly every region of Poland has experienced a noticeable rise in mean annual temperatures, underscoring the warming trend over the last few decades. | 3 |

| Claude 3.7 Sonnet (February 2025) | The image shows a stark comparison of average annual air temperatures in Poland between two time periods: 1961–1990 and 2011–2020. The left map displays a national average of 7.5 °C during 1961–1990, with a predominance of green and yellow colors indicating cooler temperatures, particularly in the northern and eastern regions. The right map reveals a significant warming trend, with the national average rising to 9.1 °C in 2011–2020—an increase of 1.6 °C. This more recent map is dominated by red and orange hues across most of the country, showing that areas that were previously cooler have experienced substantial warming. The temperature gradient is preserved, with the southwestern and central parts of Poland remaining the warmest in both periods, while the mountainous southern regions and northeastern areas remain relatively cooler. However, the overall shift toward higher temperatures is unmistakable, with virtually the entire country experiencing temperatures above 8 °C in the recent period, compared to much more varied temperatures in the earlier timeframe. | 5 |

| Perplexity (February 2025) | The two maps illustrate a clear increase in average annual air temperature in Poland between the periods of 1961–1990 and 2011–2020. The first map, representing 1961–1990, displays a cooler temperature range, predominantly in shades of green and yellow, with an average of 7.5 °C. In contrast, the second map, covering 2011–2020, is significantly warmer, with most of the country depicted in shades of orange and red, indicating higher temperatures and an overall average of 9.1 °C. This shift towards warmer colors across the entire country demonstrates a notable warming trend over the decades. | 3 |

| Consensus (February 2025) | The two maps illustrate the average annual air temperature in Poland for two time periods: 1961–1990 and 2011–2020. The left map, representing 1961–1990, shows predominantly green and yellow shades, indicating lower temperatures, with an overall average of 7.5 °C. In contrast, the right map, for 2011–2020, is dominated by red and orange shades, reflecting significantly higher temperatures, with an average of 9.1 °C. This shift suggests a clear warming trend over time, with nearly all regions experiencing a temperature increase. The most noticeable warming is seen in central and western Poland, where temperatures have risen substantially. The change in color distribution between the maps highlights the impact of climate change, leading to a much warmer climate across the country. | 4 |

| SciSpace (February 2025) | The two maps compare the average annual air temperature in Poland for two different periods: 1961–1990 and 2011–2020. The left map, representing 1961–1990, shows predominantly green and yellow shades, indicating lower temperatures, with a national average of 7.5 °C. Cooler areas, particularly in the northeast and mountainous regions in the south, have temperatures around 6–7 °C, while the western and southwestern parts of the country reach up to 8.5 °C. In contrast, the right map, depicting 2011–2020, is dominated by red and orange shades, reflecting a significant temperature increase, with an average of 9.1 °C. The warming trend is evident across all regions, with the previously cooler northeast now reaching around 8.0–8.5 °C, and the western and southwestern regions exceeding 9.5 °C. This striking difference visually represents climate change’s impact, illustrating a clear rise in temperatures over time. The change suggests a significant warming trend over the past decades, likely linked to global climate change and its regional effects in Poland. |

| STATION | lon | lat | MEAN |

|---|---|---|---|

| BIAŁYSTOK | 23.16228131 | 53.10725902 | 7.9 |

| BIELSKO-BIAŁA | 19.00233792 | 49.80670759 | 9.2 |

| CHOJNICE | 17.53263102 | 53.71520088 | 8.4 |

| CZĘSTOCHOWA | 19.09154253 | 50.81183699 | 9.0 |

| ELBLĄG | 19.54354719 | 54.22319596 | 8.4 |

| GDAŃSK * | 18.69794452 | 54.39977735 | 9.1 |

| GORZÓW WLKP | 15.27725927 | 52.74122952 | 9.7 |

| HEL | 18.81191661 | 54.60360674 | 9.1 |

| JELENIA GÓRA | 15.78881302 | 50.90025271 | 8.3 |

| KALISZ | 18.08092209 | 51.78115133 | 9.5 |

| KATOWICE | 19.03274124 | 50.24056754 | 9.2 |

| KĘTRZYN | 21.36665992 | 54.06718789 | 8.3 |

| KIELCE | 20.69221032 | 50.81047827 | 8.5 |

| KŁODZKO | 16.61422539 | 50.43689126 | 8.4 |

| KOŁOBRZEG | 15.38898512 | 54.15844166 | 9.2 |

| KOSZALIN | 16.15516727 | 54.20454235 | 9.1 |

| KOZIENICE | 21.54362149 | 51.56478486 | 9.0 |

| KRAKÓW | 19.79488375 | 50.07770664 | 9.2 |

| KROSNO | 21.76917439 | 49.70673792 | 8.9 |

| ŁEBA | 17.53481034 | 54.7536764 | 8.7 |

| LĘBORK | 17.75684947 | 54.55302536 | 8.1 |

| LEGNICA | 16.2076622 | 51.19251811 | 9.9 |

| LESKO | 22.34169786 | 49.46647133 | 8.4 |

| LESZNO | 16.53472666 | 51.83554951 | 9.5 |

| ŁÓDŹ | 19.38717768 | 51.71833379 | 9.1 |

| LUBLIN | 22.39311272 | 51.21674542 | 8.6 |

| MIKOŁAJKI | 21.58957414 | 53.78914349 | 8.3 |

| MŁAWA | 20.36109933 | 53.10416919 | 8.5 |

| NOWY SĄCZ | 20.68859999 | 49.62713962 | 9.1 |

| OLSZTYN | 20.42135085 | 53.76857766 | 8.3 |

| OPOLE | 17.96889643 | 50.62698659 | 9.9 |

| PIŁA | 16.74724155 | 53.13054695 | 9.1 |

| PŁOCK | 19.7257651 | 52.58842634 | 8.8 |

| POZNAŃ | 16.83460455 | 52.41654744 | 9.7 |

| RACIBÓRZ | 18.1908525 | 50.06104685 | 9.2 |

| RZESZÓW | 22.04210518 | 50.11069995 | 9.2 |

| SANDOMIERZ | 21.71592165 | 50.69662778 | 8.6 |

| SIEDLCE | 22.24477454 | 52.18097875 | 8.6 |

| SŁUBICE | 14.61959024 | 52.3485205 | 9.8 |

| SULEJÓW | 19.86642913 | 51.35333644 | 8.6 |

| SUWAŁKI | 22.94887812 | 54.13084797 | 7.5 |

| SZCZECIN | 14.62272692 | 53.39526877 | 9.8 |

| TARNÓW | 20.98394047 | 50.02985837 | 9.6 |

| TERESPOL | 23.62198314 | 52.07866005 | 8.6 |

| TORUŃ | 18.5954476 | 53.04208108 | 9.2 |

| USTKA | 16.85409678 | 54.58830633 | 9.1 |

| WARSZAWA | 20.96109105 | 52.16284951 | 9.4 |

| WIELUŃ | 18.55670645 | 51.21022173 | 9.4 |

| WŁODAWA | 23.52951731 | 51.55346759 | 8.7 |

| WROCŁAW | 16.89989337 | 51.10319256 | 10.1 |

| ZAKOPANE | 19.96031756 | 49.29382054 | 6.5 |

| ZIELONA GÓRA | 15.52465645 | 51.92992702 | 9.7 |

References

- Haleem, A.; Javaid, M.; Singh, R.P. An Era of ChatGPT as a Significant Futuristic Support Tool: A Study on Features, Abilities, and Challenges. BenchCouncil Trans. Benchmarks Stand. Eval. 2022, 2, 100089.

- Vaghefi, S.A.; Stammbach, D.; Muccione, V.; Bingler, J.; Ni, J.; Kraus, M.; Allen, S.; Colesanti-Senni, C.; Wekhof, T.; Schimanski, T.; et al. ChatClimate: Grounding Conversational AI in Climate Science. Commun. Earth Environ. 2023, 4, 480.

- Foroumandi, E.; Moradkhani, H.; Sanchez-Vila, X.; Singha, K.; Castelletti, A.; Destouni, G. ChatGPT in Hydrology and Earth Sciences: Opportunities, Prospects, and Concerns. Water Resour. Res. 2023, 59, e2023WR036288.

- Grünebaum, A.; Chervenak, J.; Pollet, S.L.; Katz, A.; Chervenak, F.A. The Exciting Potential for ChatGPT in Obstetrics and Gynecology. Am. J. Obstet. Gynecol. 2023, 228, 696–705.

- Raile, P. The Usefulness of ChatGPT for Psychotherapists and Patients. Humanit. Soc. Sci. Commun. 2024, 11, 47.

- Castro Nascimento, C.M.; Pimentel, A.S. Do Large Language Models Understand Chemistry? A Conversation with ChatGPT. J. Chem. Inf. Model. 2023, 63, 1649–1655.

- Urbina, F.; Lentzos, F.; Invernizzi, C.; Ekins, S. Dual Use of Artificial-Intelligence-Powered Drug Discovery. Nat. Mach. Intell. 2022, 4, 189–191.

- Sarker, D.; Eiaz-Ur-Rahman, A.F.M.; Sakib, A.R.; Terano, H.J.R.; Rahman, M.M. ChatGPT’s Applications in Higher Education: Unmasking Opportunities and Challenges. J. Educ. Manag. Dev. Stud. 2023, 3, 37–47.

- Thorp, H.H. ChatGPT Is Fun, but Not an Author. Science 2023, 379, 313.

- Watkins, R. Guidance for Researchers and Peer-Reviewers on the Ethical Use of Large Language Models (LLMs) in Scientific Research Workflows. AI Ethics 2023, 4, 969–974.

- Bi, K.; Xie, L.; Zhang, H.; Chen, X.; Gu, X.; Tian, Q. Accurate Medium-Range Global Weather Forecasting with 3D Neural Networks. Nature 2023, 619, 533–538.

- Wang, W.; Zhang, J.; Su, Q.; Chai, X.; Lu, J.; Ni, W.; Duan, B.; Ren, K. Accurate Initial Field Estimation for Weather Forecasting with a Variational Constrained Neural Network. NPJ Clim. Atmos. Sci. 2024, 7, 223.

- Pu, J.; Mu, M.; Feng, J.; Zhong, X.; Li, H. A Fast Physics-Based Perturbation Generator of Machine Learning Weather Model for Efficient Ensemble Forecasts of Tropical Cyclone Track. NPJ Clim. Atmos. Sci. 2025, 8, 128.

- Maddy, E.S.; Boukabara, S.A.; Iturbide-Sanchez, F. Assessing the Feasibility of an NWP Satellite Data Assimilation System Entirely Based on AI Techniques. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 9828–9845.

- Biswas, S.S. Potential Use of Chat GPT in Global Warming. Ann. Biomed. Eng. 2023, 51, 3–4.

- Li, X.; Jiang, Y.; Mostafavi, A. AI-Assisted Protective Action: Study of ChatGPT as an Information Source for a Population Facing Climate Hazards. arXiv 2023, arXiv:2304.06124.

- Salekpay, F.; van den Bergh, J.; Savin, I. Comparing Advice on Climate Policy between Academic Experts and ChatGPT. Ecol. Econ. 2024, 226, 108352.

- McGovern, A.; Ebert-Uphoff, I.; Barnes, E.A.; Bostrom, A.; Cains, M.G.; Davis, P.; Demuth, J.L.; Diochnos, D.I.; Fagg, A.H.; Tissot, P.; et al. The NSF AI Institute for Research on Trustworthy AI for Weather, Climate, and Coastal Oceanography (AI2ES). Bull. Am. Meteorol. Soc. 2022, 103, E1658–E1668.

- Bostrom, A.; Demuth, J.L.; Wirz, C.D.; Cains, M.G.; Schumacher, A.; Madlambayan, D.; Bansal, A.S.; Bearth, A.; Chase, R.; Crosman, K.M.; et al. Trust and Trustworthy Artificial Intelligence: A Research Agenda for AI in the Environmental Sciences. Risk Anal. 2024, 44, 1498–1513.

- OpenAI ChatGPT. Available online: https://chatgpt.com/ (accessed on 1 February 2024).

- OpenAI. Available online: https://openai.com/ (accessed on 19 June 2024).

- Krzyżewska, A. Climate Change in Poland—The Assessment of the Conversation with ChatGPT. Misc. Geogr. 2024, 28, 5–12.

- Google Gemini Advanced. Available online: https://gemini.google.com/app (accessed on 3 April 2025).

- Google. Available online: https://about.google (accessed on 3 April 2025).

- Microsoft Copilot. Available online: https://copilot.microsoft.com (accessed on 3 April 2025).

- Microsoft. Available online: https://www.microsoft.com (accessed on 3 April 2025).

- Microsoft Blog Microsoft. Available online: https://blogs.microsoft.com/blog/2023/02/07/reinventing-search-with-a-new-ai-powered-microsoft-bing-and-edge-your-copilot-for-the-web (accessed on 10 September 2024).

- PerplexityAI. Available online: https://www.perplexity.ai (accessed on 10 September 2024).

- Perplexity AI Raises $73.6M in Funding Round Led by Nvidia, Bezos, Now Valued at $522M. Available online: https://www.businesstoday.in/entrepreneurship/start-up/story/perplexity-ai-raises-736m-in-funding-round-led-by-nvidia-bezos-now-valued-at-522m-412130-2024-01-06 (accessed on 10 September 2024).

- Anthropic. Available online: https://claude.ai/ (accessed on 18 February 2025).

- Whitney, L. Anthropic’s Claude 3 Chatbot Claims to Outperform ChatGPT, Gemini. Available online: https://www.zdnet.com/article/anthropics-claude-3-chatbot-claims-to-outperform-chatgpt-gemini/ (accessed on 18 February 2025).

- DataAnalyst. Available online: https://chatgpt.com/g/g-HMNcP6w7d-data-analyst (accessed on 3 April 2025).

- Consensus. Available online: https://chatgpt.com/g/g-bo0FiWLY7-consensus (accessed on 3 April 2025).

- ConsensusApp. Available online: https://consensus.app/home/about-us/ (accessed on 10 September 2024).

- scispace.com SciSpace. Available online: https://typeset.io/ (accessed on 18 February 2025).

- ScholarGPT. Available online: https://chatgpt.com/g/g-kZ0eYXlJe-scholar-gpt (accessed on 18 February 2025).

- Awesomegpts.Ai. Available online: https://awesomegpt.vip/ (accessed on 18 February 2025).

- Academic Assistant Pro. Available online: https://chatgpt.com/g/g-UubB08D4y-academic-assistant-pro (accessed on 3 April 2025).

- @gpt_boost. Available online: https://github.com/ai-boost (accessed on 18 February 2025).

- Hangzhou DeepSeek Artificial Intelligence Basic Technology Research DeepSeek (Hangzhou, China). Available online: https://chat.deepseek.com/ (accessed on 18 February 2025).

- Bloomberg.com Hangzhou DeepSeek Artificial Intelligence Basic Technology Research. Available online: https://www.bloomberg.com/profile/company/2544189D:CH (accessed on 18 February 2025).

- Khanifar, J. Evaluating AI-Generated Responses from Different Chatbots to Soil Science-Related Questions. Soil. Adv. 2025, 3, 100034.

- Cascella, M.; Semeraro, F.; Montomoli, J.; Bellini, V.; Piazza, O.; Bignami, E. The Breakthrough of Large Language Models Release for Medical Applications: 1-Year Timeline and Perspectives. J. Med. Syst. 2024, 48, 22.

- Şahin, M.F.; Doğan, Ç.; Topkaç, E.C.; Şeramet, S.; Tuncer, F.B.; Yazıcı, C.M. Which Current Chatbot Is More Competent in Urological Theoretical Knowledge? A Comparative Analysis by the European Board of Urology in-Service Assessment. World J. Urol. 2025, 43, 116.

- WMO WMO Cloud Atlas. Available online: https://cloudatlas.wmo.int/en/search-image-gallery.html (accessed on 19 June 2024).

- Cahan, P.; Treutlein, B. A Conversation with ChatGPT on the Role of Computational Systems Biology in Stem Cell Research. Stem Cell Rep. 2023, 18, 1–2.

- An, H.; Li, X.; Huang, Y.; Wang, W.; Wu, Y.; Liu, L.; Ling, W.; Li, W.; Zhao, H.; Lu, D.; et al. A New ChatGPT-Empowered, Easy-to-Use Machine Learning Paradigm for Environmental Science. Eco-Environ. Health 2024, 3, 131–136.

- Lai, V.D.; Ngo, N.T.; Veyseh, A.P.B.; Man, H.; Dernoncourt, F.; Bui, T.; Nguyen, T.H. ChatGPT Beyond English: Towards a Comprehensive Evaluation of Large Language Models in Multilingual Learning. In Findings of the Association for Computational Linguistics: EMNLP 2023; Association for Computational Linguistics: Singapore, 2023; pp. 13171–13189.

- Cooper, G. Examining Science Education in ChatGPT: An Exploratory Study of Generative Artificial Intelligence. J. Sci. Educ. Technol. 2023, 32, 444–452.

- Casini, L.; Marchetti, N.; Montanucci, A.; Orrù, V.; Roccetti, M. A Human–AI Collaboration Workflow for Archaeological Sites Detection. Sci. Rep. 2023, 13, 8699.

- McGovern, A.; Demuth, J.; Bostrom, A.; Wirz, C.D.; Tissot, P.E.; Cains, M.G.; Musgrave, K.D. The Value of Convergence Research for Developing Trustworthy AI for Weather, Climate, and Ocean Hazards. NPJ Nat. Hazards 2024, 1, 13.

| (a) | |||||

| No. | Image | ChatGPT 4.o/o1/o3-Mini | Gemini Advanced/2.0 Exp-1206 | Copilot/Copilot Acad (February 2025) | Correct Answer (WMO) |

| 1 |  | Cirrocumulus stratiformis undulatus Cumulus humilis /Cumulus humilis, Altocumulus stratiformis translucidus perlucidus/Cumulus humilis and Altocumulus stratiformis translucidus perlucidus | Cirrocumulus stratiformis undulatus Altocumulus stratiformis translucidus perlucidus Cumulus humilis, mediocris /Cirrocumulus stratiformis undulatus, cumulus humilis, cumulus mediocris | Cirrocumulus stratiformis /Cumulus humilis, Cirrocumulus stratiformis undulatus | Cumulus humilis and altocumulus stratiformis translucidus perlucidus |

| Assessment (0–5) | | 3/5/5 | 4/4 | 1/3 | |

| 2 |  | Cirrus fibratus radiatus, cirrus uncinus, contrail cirrus/ Cirrus homogenitus, cirrus homomutatus /Cirrus homogenitus, cirrus homomutatus | Cirrus homogenitus spissatus Contrail cirrus /Cirrus homogenitus, cirrus homomutatus | Cirrocumulus stratiformis /Cirrus homogenitus (contrails) | Cirrus homogenitus (contrails) and cirrus homomutatus |

| Assessment (0–5) | | 4/5/5 | 4/5 | 0/4 | |

| 3 |  | Altostratus undulatus/altostratus undulatus opacus asperitas/ Altostratus stratiformis or possibly stratocumulus stratiformis with undulatus and asperitas | Altocumulus stratiformis undulatus asperitas/ Stratocumulus stratiformis undulatus | Stratocumulus undulatus /Altostratus undulatus * radiatus * incorrectly labeled as species; it is a variety | Stratocumulus stratiformis opacus asperitas |

| Assessment (0–5) | | 0/2/4 | 2/4 | 1/0 | |

| 4 |  | Altocumulus stratiformis undulatus/ Altocumulus (stratiformis) perlucidus opacus/ Altocumulus stratiformis perlucidus undulatus | Altocumulus stratiformis translucidus undulatus /Altocumulus stratiformis translucidus undulatus | Stratocumulus stratiformis /Altocumulus stratiformis undulatus | Altocumulus stratiformis translucidus perlucidus undulatus |

| Assessment (0–5) | | 3/4/4.5 | 4/4 | 1/3 | |

| 5 |  | Nimbostratus/ Nimbostratus (pannus) /Nimbostratus with pannus | Stratus nebulosus opacus /Nimbostratus praecipitatio with pannus | Stratocumulus stratiformis /Stratus opacus * * incorrectly labeled as species—it is a variety | Nimbostratus praecipitatio cumulonimbogenitus with stratus fractus of wet weather |

| Assessment (0–5) | | 1/2/2 | 1/3 | 0/1 | |

| 6 |  | Cumulonimbus calvus, cumulus congestus /Cumulonimbus capillatus incus praecipitatio /Cumulonimbus capillatus (incus) with praecipitatio | Cumulonimbus calvus praecipitatio with possible virga and pileus Cumulus mediocris or cumulus congestus. /Cumulonimbus capillatus with praecipitatio and incus Cumulus mediocris, cumulus congestus with pileus | Cumulonimbus /Cumulonimbus calvus | Cumulonimbus capillatus incus praecipitatio |

| Assessment (0–5) | | 2/5/5 | 3/4 | 1/1 | |

| 7 |  | Stratus nebulosus /Stratus (nebulosus) opacus /Stratus nebulosus with opacus | Stratus nebulosus undulatus /Stratus nebulosus opacus | Stratus /Stratus | Stratus undulatus |

| Assessment (0–5) | | 3/2/2 | 4/2 | 2/2 | |

| 8 |  | Stratus nebulosus /Altostratus translucidus /Altostratus translucidus | Altostratus translucidus /Altostratus translucidus | Stratus /Stratus opacus | Altostratus translucidus |

| Assessment (0–5) | | 0/5/5 | 5/5 | 0/2 | |

| 9 |  | Cirrostratus fibratus, 22° halo /Cirrostratus (nebulosus) translucidus, with a 22° halo /Cirrostratus nebulosus, accompanied by a 22° halo | Cirrus fibratus radiatus halo around the sun is formed by cirrostratus clouds that are not clearly visible in this photo /Cirrostratus nebulosus with 22° halo Cirrus fibratus, cirrus floccus, cirrus spissatus | Cirrus 22° halo. /Halo on ice crystals in the atmosphere with cumulus humilis | Cirrostratus nebulosus and 22° halo |

| Assessment (0–5) | | 4/5/5 | 3/4 | 2/1 | |

| MEAN | | 3.0/3.7/4.0 | 3.0/3.4 | 1.7/3.1 | |

| SD | | 1.3/1.3/1.6 | 1.6/1.2 | 1.9/1.5 | |

| MSE | | 5.6/3.3/3.2 | 6.2/3.8 | 14.2/5.7 | |

| RMSE | | 2.4/1.8/1.8 | 2.5/1.9 | 3.8/2.4 | |

| CI | | ±1.0/1.0/1.2 | ±1.2/1.0 | ±1.4/1.2 | |

| (b) | |||||

| No. | Claude 3.5 Sonnet | Perplexity | Consensus | SciSpace | Correct Answer (WMO) |

| 1 | Altocumulus perlucidus * translucidus, Cumulus humilis radiatus * incorrectly labeled as species—it is a variety | Altocumulus stratiformis perlucidus with possible undulatus, cumulus humilis | Cirrocumulus stratiformis undulatus, Cumulus mediocris/humilis * * incorrectly labeled as species—it is a variety | Cirrocumulus stratiformis undulatus, Cumulus mediocris/humilis * * incorrectly labeled as species—it is a variety | Cumulus humilis, altocumulus stratiformis translucidus perlucidus |

| Assessment (0–5) | 4 | 4 | 3 | 3 | |

| 2 | Cirrus homogenitus | Homogenitus or contrails, homomutatus (no genus for human-made clouds) | Cirrus homogenitus, cirrostratus nebulosus | Cirrus homogenitus (contrails), fibratus | Cirrus homogenitus (contrails) and cirrus homomutatus |

| Assessment (0–5) | 4 | 3 | 2 | 2 | |

| 3 | Altostratus translucidus * undulatus fluctus * incorrectly labeled as species—it is a variety | Altostratus translucidus *, undulatus, radiatus * incorrectly labeled as species—it is a variety | Altostratus or altocumulus undulatus asperitas | Altostratus or stratocumulus undulatus *, asperitas ** * incorrectly labeled as species—it is a variety ** incorrectly labeled as variety—it is a supplementary feature | Stratocumulus stratiformis opacus asperitas |

| Assessment (0–5) | 0 | 0 | 1 | 2 | |

| 4 | Altostratus translucidus * undulatus * incorrectly labeled as species—it is a variety | Altocumulus stratiformis undulatus | Stratocumulus stratiformis undulatus perlucidus | Stratocumulus or altocumulus stratiformis undulatus | Altocumulus stratiformis translucidus perlucidus undulatus |

| Assessment (0–5) | 3 | 3 | 3 | 3 | |

| 5 | Stratus nebulosus opacus | Nimbostratus undulatus praecipitatio | Nimbostratus and stratus fractus | Nimbostratus pannus | Nimbostratus praecipitatio cumulonimbogenitus with stratus fractus of wet weather |

| Assessment (0–5) | 1 | 2 | 3 | 1 | |

| 6 | Cumulonimbus capillatus (incus) with praecipitatio and pannus | Cumulonimbus calvus, or cumulonimbus capillatus praecipitatio with possible arcus | Cumulonimbus capillatus, possibly calvus, with pannus and virga or praecipitatio | Cumulonimbus calvus incus praecipitatio pannus | Cumulonimbus capillatus incus praecipitatio |

| Assessment (0–5) | 5 | 4 | 4 | 3 | |

| 7 | Stratus nebulosus opacus | Stratus nebulosus | Stratus nebulosus | Stratus or stratocumulus stratiformis opacus | Stratus undulatus |

| Assessment (0–5) | 2 | 2 | 2 | 2 | |

| 8 | Altostratus translucidus | Stratus nebulosus opacus | Stratus nebulosus or altostratus nebulosus | Altostratus opacus translucidus | Altostratus translucidus |

| Assessment (0–5) | 5 | 0 | 2 | 4 | |

| 9 | Cirrostratus fibratus or nebulosus translucidus with 22° halo | Cirrostratus nebulosus undulatus with halo | Cirrostratus nebulosus with 22° halo | Cirrostratus fibratus translucidus with 22° halo | Cirrostratus nebulosus and 22° halo |

| Assessment (0–5) | 4 | 4 | 5 | 4 | |

| MEAN | 3.1 | 2.4 | 2.8 | 2.7 | |

| SD | 1.8 | 1.6 | 1.2 | 1.0 | |

| MSE | 6.3 | 8.8 | 6.2 | 6.3 | |

| RMSE | 2.5 | 3.0 | 2.5 | 2.5 | |

| CI | ±1.4 | ±1.2 | ±0.9 | ±0.8 | |
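
The summary rows (MEAN, SD, MSE, RMSE, CI) can be checked against the individual scores. The minimal Python sketch below does this for the Claude 3.5 Sonnet column under three assumptions that this section does not state explicitly: SD is the sample standard deviation, MSE is the mean squared distance from the maximum score of 5, and CI is the half-width of a 95% Student-t interval for n = 9 images. The function name `summarize` and the constants are illustrative and are not taken from the paper.

```python
import math
import statistics

# Scores transcribed from the Claude 3.5 Sonnet column of the table above.
claude_scores = [4, 4, 0, 3, 1, 5, 2, 5, 4]

MAX_SCORE = 5        # assumption: errors are measured against the top score
T_CRIT_DF8 = 2.306   # two-sided 95% Student-t critical value, 8 degrees of freedom


def summarize(scores, max_score=MAX_SCORE, t_crit=T_CRIT_DF8):
    """Return the five summary statistics, rounded to one decimal place.

    The default t_crit is only valid for nine scores (df = 8).
    """
    n = len(scores)
    mean = statistics.mean(scores)
    sd = statistics.stdev(scores)  # sample SD (n - 1 in the denominator)
    mse = sum((max_score - s) ** 2 for s in scores) / n
    rmse = math.sqrt(mse)
    ci = t_crit * sd / math.sqrt(n)  # half-width of the 95% interval
    return {
        "mean": round(mean, 1),
        "sd": round(sd, 1),
        "mse": round(mse, 1),
        "rmse": round(rmse, 1),
        "ci": round(ci, 1),
    }


print(summarize(claude_scores))
# {'mean': 3.1, 'sd': 1.8, 'mse': 6.3, 'rmse': 2.5, 'ci': 1.4}
```

Under these assumptions the rounded output agrees with the Claude 3.5 Sonnet column, and the same function reproduces the Perplexity column from its nine scores.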

| (a) | |||||

| LP | Image | ChatGPT 4.o/o1/o3-Mini | Gemini Advanced/2.0 Exp-1206 | Copilot/Copilot Acad (February 2025) | Correct Answer |

| 1 |  | Cirrus fibratus radiatus/ Cirrus fibratus, uncinus /Cirrus fibratus, uncinus with a parhelion | Cirrus fibratus, intortus/ Cirrus fibratus, intortus, radiatus Cirrus spissatus fibratus duplicatus mamma Cirrostratus fibratus duplicatus nebulosus | Cirrus fibratus /Cirrus fibratus, uncinus, intortus | Cirrus spissatus, fibratus |

| Assessment (0–5) | | 4/4/4 | 4/3 | 3/4 | |

| 2 |  | Stratus nebulosus/ Stratus nebulosus opacus/ Stratus nebulosus opacus | Stratus nebulosus opacus/ Stratus nebulosus fractus | Stratocumulus/ Stratus | Stratocumulus nebulosus opacus |

| Assessment (0–5) | | 1/2/2 | 2/1 | 2/0 | |

| 3 |  | Stratus nebulosus/ Stratus nebulosus opacus/ Stratus nebulosus opacus | Stratus nebulosus opacus/ Stratus nebulosus | Stratocumulus/ Stratus | Stratus nebulosus opacus |

| Assessment (0–5) | | 4/5/5 | 5/4 | 0/2 | |

| 4 |  | Cirrus fibratus radiatus contrail /Cirrus fibratus, intortus, uncinus Cirrocumulus floccus or stratiformis, cirrus homogenitus /Cirrocumulus stratiformis floccus, cirrus homogenitus | Cirrus fibratus intortus /Cirrus fibratus intortus Cirrocumulus stratiformis undulatus cirrus homogenitus | Stratiformis undulatus/ Cirrus fibratus, with contrails (cirrus/cirrocumulus homogenitus), cirrocumulus stratiformis | Cirrocumulus Cirrus fibratus intortus homogenitus |

| Assessment (0–5) | | 4/4/3 | 3/4 | 0/4 | |

| 5 |  | Altostratus opacus undulatus/ Altostratus opacus undulatus /Altostratus opacus undulatus | Altocumulus stratiformis undulatus/ Stratocumulus stratiformis translucidus undulatus, altocumulus stratiformis lacunosus | Stratocumulus stratiformis undulatus /Stratocumulus stratiformis undulatus | Stratocumulus stratiformis opacus undulatus |

| Assessment (0–5) | | 2/2/5 | 2/3 | 4/4 | |

| 6 |  | Cirrostratus fibratus nebulosus with halo/ Cirrostratus nebulosus translucidus, with 22° halo/ Cirrostratus nebulosus with a 22° halo | Cirrostratus nebulosus with halo /Cirrostratus fibratus nebulosus, cirrostratus cirrostratomutatus, with halo | Cirrostratus fibratus nebulosus with halo /Cirrostratus nebulosus with halo | Cirrostratus nebulosus with halo |

| Assessment (0–5) | | 4/5/5 | 5/4 | 4/5 | |

| 7 |  | Cirrus homogenitus rectus /Cirrocumulus homogenitus /Cirrus homogenitus | Contrail (not classified under WMO system) /Cirrus homogenitus. | Altocumulus stratiformis perlucidus /Cirrus fibratus contrail | Cirrus homogenitus |

| Assessment (0–5) | | 4/4/5 | 1/5 | 0/4 | |

| 8 |  | Cumulus mediocris/ Cumulus congestus/ Cumulus mediocris | Cumulus congestus/ Cumulus congestus, cumulus fractus, cumulus mediocris | Cumulus mediocris or congestus humilis/ Cumulus congestus | Cumulus humilis |

| Assessment (0–5) | | 4/4/5 | 4/4 | 4/4 | |

| 9 |  | Altocumulus stratiformis perlucidus Cirrus or cirrostratus /Stratocumulus stratiformis opacus with mamma/ Stratocumulus castellanus opacus | Altocumulus stratiformis undulatus perlucidus cirrus fibratus/ Stratocumulus stratiformis, with translucidus, opacus, and undulatus Altocumulus stratiformis, lacunosus | Cumulus humilis radiatus pileus pannus /Stratocumulus stratiformis, altocumulus stratiformis perlucidus, cirrus fibratus | Stratocumulus stratiformis, floccus |

| Assessment (0–5) | | 1/2/1 | 1/2 | 0/2 | |

| MEAN | | 3.1/3.2/3.9 | 3.0/3.3 | 2.0/3.3 | |

| SD | | 1.4/1.5/1.5 | 1.6/1.2 | 2.1/1.7 | |

| MSE | | 5.2/5.1/3.3 | 6.2/4.1 | 12.8/15.2 | |

| RMSE | | 2.3/2.3/1.8 | 2.5/2.0 | 3.6/2.3 | |

| CI | | ±1.0/1.1/1.2 | ±1.2/0.9 | ±1.6/1.3 | |

| (b) | |||||

| No. | Claude 3.5 Sonnet | Perplexity | Consensus | SciSpace | Correct Answer |

| 1 | Cirrus fibratus intortus | Cirrus fibratus radiatus | Cirrus fibratus, cirrus uncinus, cirrostratus nebulosus with parhelion | Cirrus and cirrostratus, fibratus, radiatus, parhelion | Cirrus spissatus, fibratus |

| Assessment (0–5) | 4 | 4 | 4 | 4 | |

| 2 | Stratus | Stratus nebulosus undulatus | Nimbostratus and stratus nebulosus | Nimbostratus opacus pannus | Stratocumulus nebulosus opacus |

| Assessment (0–5) | 0 | 1 | 1 | 1 | |

| 3 | Stratus nebulosus opacus | Stratus nebulosus undulatus | Stratus nebulosus | Stratus nebulosus opacus | Stratus nebulosus opacus |

| Assessment (0–5) | 5 | 4 | 4 | 5 | |

| 4 | Cirrus fibratus with contrails cirrocumulus | Cirrocumulus stratiformis undulatus | Cirrus fibratus and uncinus, contrail Cirrocumulus | Cirrus fibratus radiatus homogenitus | Cirrocumulus Cirrus fibratus intortus homogenitus |

| Assessment (0–5) | 3 | 1 | 3 | 3 | |

| 5 | Cirrus fibratus Altocumulus translucidus | Altostratus opacus undulatus | Altostratus and altocumulus undulatus | Altostratus and altocumulus stratiformis undulatus | Stratocumulus stratiformis opacus undulatus |

| Assessment (0–5) | 0 | 1 | 1 | 2 | |

| 6 | Cirrostratus fibratus with halo | Cirrostratus nebulosus undulatus with halo | Cirrostratus nebulosus with halo | Cirrostratus fibratus translucidus with 22° halo | Cirrostratus nebulosus with halo |

| Assessment (0–5) | 4 | 3 | 5 | 3 | |

| 7 | Contrail | Contrail, homogenitus | Cirrus homogenitus | Cirrus homogenitus | Cirrus homogenitus |

| Assessment (0–5) | 1 | 2 | 5 | 5 | |

| 8 | Cumulus congestus | Cumulus mediocris and altocumulus stratiformis opacus | Cumulus congestus | Cumulus congestus | Cumulus humilis |

| Assessment (0–5) | 4 | 4 | 4 | 4 | |

| 9 | Altocumulus stratiformis translucidus perlucidus | Altocumulus lenticularis *, stratiformis, undulatus, altostratus translucidus * * as species, should be variety | Cumulonimbus capillatus mammatus | Altocumulus mammatus | Stratocumulus stratiformis, floccus |

| Assessment (0–5) | 1 | 1 | 0 | 1 | |

| MEAN | 2.4 | 2.3 | 3.0 | 3.1 | |

| SD | 1.9 | 1.4 | 1.9 | 1.5 | |

| MSE | 9.9 | 8.9 | 7.1 | 5.7 | |

| RMSE | 3.1 | 3.0 | 2.7 | 2.4 | |

| CI | ±1.5 | ±1.1 | ±1.4 | ±1.2 | |

| AI Tool | Cc | Cs | Ci | Ac | As | Ns | Sc | St | Cu | Cb | MEAN |

|---|---|---|---|---|---|---|---|---|---|---|---|

| ChatGPT 4.o | 1.0 | 0.3 | 0.2 | 0.7 | 1.0 | 0.0 | 1.0 | 0.8 | 0.3 | 0.0 | 0.5 |

| ChatGPT o1 | 0.5 | 0.0 | 0.3 | 0.0 | 0.7 | 0.0 | 0.8 | 0.8 | 0.0 | 0.0 | 0.3 |

| ChatGPT o3-mini | 0.0 | 0.0 | 0.0 | 0.0 | 0.7 | 0.0 | 0.5 | 0.8 | 0.0 | 0.0 | 0.2 |

| Claude 3.5 Sonnet | 0.0 | 0.0 | 0.4 | 0.8 | 0.7 | 1.0 | 1.0 | 0.5 | 0.0 | 0.0 | 0.4 |

| Consensus | 0.5 | 0.5 | 0.0 | 1.0 | 0.7 | 0.5 | 1.0 | 0.6 | 0.0 | 0.5 | 0.5 |

| Copilot | 1.0 | 0.5 | 0.8 | 1.0 | 1.0 | 1.0 | 0.6 | 0.7 | 0.7 | 0.0 | 0.7 |

| Copilot Acad. | 0.5 | 0.5 | 0.2 | 0.7 | 1.0 | 1.0 | 0.5 | 0.6 | 0.3 | 0.0 | 0.5 |

| Gemini 2.0 | 0.5 | 0.3 | 0.2 | 0.8 | 0.0 | 0.0 | 0.3 | 0.8 | 0.3 | 0.0 | 0.3 |

| Gemini Adv. | 1.0 | 0.0 | 0.5 | 0.6 | 0.0 | 1.0 | 1.0 | 0.5 | 0.3 | 0.0 | 0.5 |

| Perplexity | 0.0 | 0.0 | 0.8 | 0.5 | 1.0 | 0.0 | 1.0 | 0.8 | 0.0 | 0.0 | 0.4 |

| SciSpace | 1.0 | 0.3 | 0.0 | 0.8 | 0.7 | 0.5 | 0.8 | 0.7 | 0.0 | 1.0 | 0.6 |

| MEAN | 0.5 | 0.2 | 0.3 | 0.6 | 0.7 | 0.5 | 0.8 | 0.7 | 0.2 | 0.1 | 0.5 |
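
The MEAN column and MEAN row of this table can be recomputed from the cell values. The Python sketch below is only an arithmetic check: this section does not define what the cell values measure, so the code treats them as plain numbers transcribed from the table. The names `TABLE`, `REPORTED_ROW_MEAN`, `REPORTED_COL_MEAN`, and `mean1` are illustrative.

```python
GENERA = ["Cc", "Cs", "Ci", "Ac", "As", "Ns", "Sc", "St", "Cu", "Cb"]

# Cell values transcribed from the table above, one list per tool, in GENERA order.
TABLE = {
    "ChatGPT 4.o":       [1.0, 0.3, 0.2, 0.7, 1.0, 0.0, 1.0, 0.8, 0.3, 0.0],
    "ChatGPT o1":        [0.5, 0.0, 0.3, 0.0, 0.7, 0.0, 0.8, 0.8, 0.0, 0.0],
    "ChatGPT o3-mini":   [0.0, 0.0, 0.0, 0.0, 0.7, 0.0, 0.5, 0.8, 0.0, 0.0],
    "Claude 3.5 Sonnet": [0.0, 0.0, 0.4, 0.8, 0.7, 1.0, 1.0, 0.5, 0.0, 0.0],
    "Consensus":         [0.5, 0.5, 0.0, 1.0, 0.7, 0.5, 1.0, 0.6, 0.0, 0.5],
    "Copilot":           [1.0, 0.5, 0.8, 1.0, 1.0, 1.0, 0.6, 0.7, 0.7, 0.0],
    "Copilot Acad.":     [0.5, 0.5, 0.2, 0.7, 1.0, 1.0, 0.5, 0.6, 0.3, 0.0],
    "Gemini 2.0":        [0.5, 0.3, 0.2, 0.8, 0.0, 0.0, 0.3, 0.8, 0.3, 0.0],
    "Gemini Adv.":       [1.0, 0.0, 0.5, 0.6, 0.0, 1.0, 1.0, 0.5, 0.3, 0.0],
    "Perplexity":        [0.0, 0.0, 0.8, 0.5, 1.0, 0.0, 1.0, 0.8, 0.0, 0.0],
    "SciSpace":          [1.0, 0.3, 0.0, 0.8, 0.7, 0.5, 0.8, 0.7, 0.0, 1.0],
}

# MEAN column (per tool) and MEAN row (per genus) as printed in the table.
REPORTED_ROW_MEAN = dict(zip(TABLE, [0.5, 0.3, 0.2, 0.4, 0.5, 0.7, 0.5, 0.3, 0.5, 0.4, 0.6]))
REPORTED_COL_MEAN = [0.5, 0.2, 0.3, 0.6, 0.7, 0.5, 0.8, 0.7, 0.2, 0.1]


def mean1(values):
    """Arithmetic mean rounded to one decimal place, as in the table."""
    return round(sum(values) / len(values), 1)


# Tools whose recomputed mean differs from the printed MEAN column.
row_mismatches = [
    tool for tool, values in TABLE.items()
    if mean1(values) != REPORTED_ROW_MEAN[tool]
]

# Genera whose recomputed mean differs from the printed MEAN row.
col_mismatches = [
    genus for i, genus in enumerate(GENERA)
    if mean1([values[i] for values in TABLE.values()]) != REPORTED_COL_MEAN[i]
]

print(row_mismatches, col_mismatches)  # [] []
```

Both lists come back empty, so the printed means are consistent with simple unweighted averages over the ten genera and the eleven tools.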

| AI Tool | Result (Accuracy, Correctness, Precision, etc.) | Scores |

|---|---|---|

| ChatGPT 4.o | Correct interpretation with precise numerical values and regional description. Accurate conclusions. | 4 |

| ChatGPT 4.0 | Correct interpretation with general numerical values and accurate conclusions. | 4 |

| Bard (March 2024) | Correct interpretation with precise numerical values and percentage of temperature ranges. An abundance of additional information about climate change. | 5 |

| Gemini Advanced (August 2024) | Very general description with accurate conclusions. | 3 |

| DataAnalyst | Correct interpretation with correct numerical values and accurate conclusions. | 4 |

| Copilot | Very general description with accurate conclusions. | 3 |

| ChatGPT o3-mini-high (February 2025) | Very general description with accurate conclusions. | 3 |

| Claude 3.5 Sonnet (February 2025) | Detailed description with focus on regional changes and accurate conclusions. | 5 |

| Perplexity AI | Very general description with accurate conclusions. | 3 |

| Consensus (February 2025) | General description with some focus on regional changes and accurate conclusions. | 4 |

| SciSpace (February 2025) | Detailed description with focus on regional changes and accurate conclusions. | 5 |

| AI Tool | Result (Accuracy, Correctness, Precision, etc.) | Scores |

|---|---|---|

| ChatGPT o1-preview | Five scientific papers, all of them relevant and important, with summary and bibliography present. | 5 |

| ChatGPT 4.o | Five papers, bibliography present, missing most important examples. | 4 |

| ChatGPT 4.0 | Four papers, no bibliography, missing most important examples. | 3 |

| Academic Assistant Pro | Two papers, most important examples, good summaries of most important findings. | 3 |

| Scholar GPT | Four papers, went off-topic, missing most important examples, no bibliography. | 2 |

| Consensus | Most accurate and precise—five papers, indeed most important ones. | 5 |

| Gemini Advanced | Three papers, one most important example, two news articles; bibliography present. | 1 |

| Copilot (www.bing.com) | Three papers, one most important example, one for heat waves (not humid), one without access (despite the fact that the paper was available for free). | 2 |

| Perplexity AI | Three papers, two most important examples, bibliography present. | 2 |

| DeepSeek | Five papers, one most important example, bibliography entry after each description, most from www.nature.com. | 3 |

| SciSpace | Five papers from year 2024, two of them important (not most important). | 2 |

| ChatGPT o3-mini-high | Five papers, four of them important, one not about heat waves, but on humidity. | 4 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Krzyżewska, A. The Applications of AI Tools in the Fields of Weather and Climate—Selected Examples. Atmosphere 2025, 16, 490. https://doi.org/10.3390/atmos16050490