GPU-Accelerated Laplace Equation Model Development Based on CUDA Fortran

Abstract

1. Introduction

2. Numerical Method

2.1. Governing Equation

2.2. Finite Volume Method

3. GPU-Accelerated Computing

3.1. CUDA Parallel Programming

3.2. GPU Hardware Structure and Features

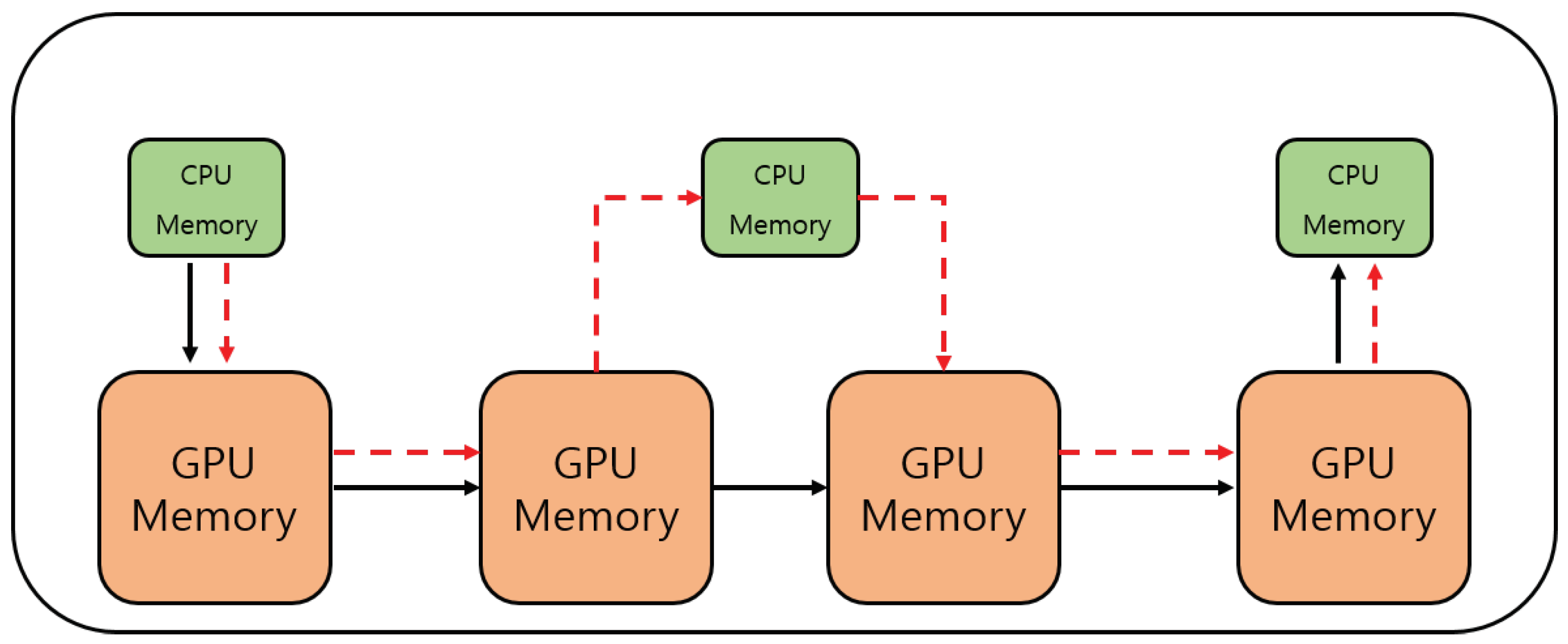

3.3. Data Transfer between the CPU and GPU

4. Numerical Cases and Results

4.1. Comparison of the Numerical Result and the Analytical Solution

4.2. Groundwater Flow around Sheet Pile Dam

4.3. Irregular Calculation Area

4.4. Performance of GPU-Accelerated Laplace Equation Models

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ALU | Arithmetic Logic Unit |
| CFD | Computational Fluid Dynamics |
| CPU | Central Processing Unit |
| CUDA | Compute Unified Device Architecture |
| GPGPU | General-Purpose Computing on Graphics Processing Units |
| GPU | Graphics Processing Unit |
| ISPH | Incompressible Smoothed Particle Hydrodynamics |
| NRMSE | Normalized Root Mean Square Error |
| OpenCL | Open Computing Language |
| OpenMP | Open Multi-Processing |
| PGI | Portland Group Incorporated |
| SPH | Smoothed Particle Hydrodynamics |
| WRF | Weather Research and Forecasting |


| Component | Specification |
|---|---|
| CPU | Intel Xeon E5-2620 v2 (2.10 GHz) |
| GPU | NVIDIA GeForce GTX TITAN Z |
| CUDA cores | 5760 |
| Peak GPU clock (boost) | 876 MHz |
| Peak GFLOPS | 10,091 GFLOPS (single precision) |
| Combined memory bandwidth | 675 GB/s |
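
The peak single-precision figure in the table can be checked against the core count and boost clock. The following is a back-of-the-envelope sketch, assuming each CUDA core retires one fused multiply-add (counted as two floating-point operations) per clock cycle. That assumption is the usual convention for quoting peak throughput and is not stated in the source:

$$
\text{Peak GFLOPS} \approx N_{\text{cores}} \times f_{\text{boost}} \times 2
= 5760 \times 0.876\ \text{GHz} \times 2 \approx 10{,}091.5
$$

This agrees with the tabulated 10,091 GFLOPS. It is a theoretical upper bound; sustained throughput of a memory-bound stencil solver is typically limited by memory bandwidth rather than by arithmetic rate.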

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kim, B.; Yoon, K.S.; Kim, H.-J. GPU-Accelerated Laplace Equation Model Development Based on CUDA Fortran. Water 2021, 13, 3435. https://doi.org/10.3390/w13233435
