1. Introduction

Floods are considered major natural disasters globally [1,2]. Furthermore, the extreme weather events stemming from climate change may increase their frequency and magnitude [3,4]. Urban and suburban landscapes are usually dominated by artificial impervious surfaces that affect the hydrological cycle [5,6]. In this context, characterizing the meteorological forcing becomes essential: identifying and monitoring hydrological state indicators, such as rainfall characteristics, is a prerequisite for determining the critical thresholds for flood triggering. These indicators help define the event and risk scenarios within the territorial context and, therefore, the criticality and alert levels that determine the warnings issued to the exposed population [7,8].

To represent the territory in urban pluvial flood studies, precipitation measurements are required at local, fine scales and with low latency, providing near real-time, fine-grained information [9,10]. Urban flood risk management can be enhanced by a local-scale strategic system integrating near real-time methods, innovative technologies and conventional systems [11].

Traditionally, rainfall has been assessed with several kinds of rain gauges [12]. Presently, most data are obtained from ground-based measurements or remote sensing: rain gauges, disdrometers, weather radars and satellites often provide limited information in terms of (temporal or spatial) resolution, due to the sparseness of the ground monitoring networks used for both direct measurement and the calibration of indirect measurements. High costs are a further problem. Rain gauges are unevenly distributed and frequently placed away from urban centers. Not all observations are continuous or publicly available, and they are often limited by the variability of precipitation [13]. To overcome these limitations, it may be useful to aggregate data obtained from a dense network whose nodes are numerous low-cost, less accurate sensors. Thus, several studies have developed novel approaches to retrieve precipitation information at low operational costs. Despite their inaccuracy, such techniques could furnish valuable additional information in combination with conventional systems [14].

The first discussions of a camera designed to photograph rainfall emerged with the Raindrop camera [15], developed to support radar investigations. The idea of a camera-based rain gauge was suggested by Nayar and Garg, who conducted systematic studies of the visual effects of rain in pictures and video sequences, setting the key theoretical framework [16,17] for all later research. The presence of rain produces local variations in the pixel intensity values of digital images, depending on the intrinsic characteristics of the raindrops, the camera parameters and the environment.
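As an illustration of these local intensity variations, the sketch below implements a simplified version of the temporal photometric test associated with Garg and Nayar: a pixel is flagged as a rain candidate when it is brighter in frame n than in both frames n−1 and n+1 by at least a threshold c, with the two increases roughly equal. The function name and the values of `c` and `tol` are illustrative assumptions; this test needs a video sequence and is not the single-image method proposed in this work.

```python
import numpy as np

def rain_candidates(prev, curr, nxt, c=3.0, tol=2.0):
    """Flag pixels that brighten for a single frame, a simplified version of
    the photometric test of Garg and Nayar (c and tol are illustrative)."""
    prev, curr, nxt = (np.asarray(f, dtype=float) for f in (prev, curr, nxt))
    d_prev = curr - prev  # brightness change with respect to frame n-1
    d_next = curr - nxt   # brightness change with respect to frame n+1
    # A drop crossing a pixel brightens it for about one frame only, so both
    # changes must exceed c and be roughly equal to each other.
    return (d_prev >= c) & (d_next >= c) & (np.abs(d_prev - d_next) <= tol)

# Usage: a static background in which one pixel is crossed by a streak.
background = np.full((4, 4), 100.0)
frame = background.copy()
frame[1, 2] = 120.0
print(np.argwhere(rain_candidates(background, frame, background)))  # [[1 2]]
```

Pixels that stay bright across all three frames (static bright objects) are not flagged, which is the point of comparing against both neighbouring frames.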

The literature on the perceptual properties of atmospheric precipitation and weather conditions in natural images has highlighted different approaches [18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36]. Recent advances in Computer Vision (CV) and Machine Learning (ML), together with new methods for analyzing digital photographs and processing images, have facilitated the study of novel rain detection systems, aimed either at enhancing the visual aspects of rain to improve its detection or at removing raindrops and rain streaks from images and video frames.

The visual effects generated by rainfall have attracted much research attention in the field of outdoor vision. Rain-induced artifacts have typically been treated as an element that degrades the performance of systems based on video and image processing. Thus, rain detection and removal are considered joint pre-processing tasks in many CV applications. A considerable amount of literature has been published on rain removal techniques [22,28] that enhance outdoor images or videos degraded by adverse weather for different applications, such as image or video editing [33], surveillance vision systems and vision-based driver assistance systems [18,24,25]. Although originally developed for visibility enhancement, these methods provide useful insights into detection techniques. Classical CV techniques were applied to detect dynamic weather phenomena in videos [20], whereas Deep Learning (DL) techniques, in particular Convolutional Neural Networks (CNNs), were applied to single images [29,30]. 3D CNN systems for stand-alone rainfall detection from video-frame sequences have proven effective in turning existing surveillance cameras into rain detectors that decide whether to apply removal algorithms [36].

Whereas the existing literature on techniques for removing the visual effects of rain is fairly extensive (bearing in mind how recent the major advances in digital photography are), a smaller body of literature is concerned with rain measurement for hydrological purposes. A first picture-based monitoring system at the small catchment scale [21] focuses on the visual features of snow precipitation, which differ significantly from those of rain in terms of particle size, color and trajectory. Other opportunistic rainfall sensing methods are based on classical image processing algorithms: Allamano et al. [26] estimated rain rate values with errors of the order of ±25% from pictures taken at adjacent time steps; Kolte and Ghonge [31] processed video frames collected from a high-definition camera; Dong et al. [34] proposed a method for real-time rainfall rate measurement from videos, counting the focused raindrops in a small depth of field to calculate a raindrop size distribution curve and estimate the corresponding rainfall rate. Jiang et al. [37] used videos acquired by surveillance cameras under specific settings and evaluated their method on synthetic numerical experiments (i.e., images processed in Adobe Photoshop) and field tests, reaching a mean absolute error of 21.8%. These techniques strongly rely on the image resolution, temporal information, acquisition device characteristics and proper setting of the device parameters, so as to obtain shots suitable for image processing in which raindrops and rain streaks are accurately detectable.
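To make the last step of such size-distribution-based methods concrete, the sketch below converts a binned raindrop size distribution N(D) into a rain rate with the standard relation R = 6π·10⁻⁴ Σ N(Dᵢ) v(Dᵢ) Dᵢ³ ΔDᵢ (R in mm/h, D in mm, N in m⁻³ mm⁻¹, v in m/s). The empirical fall-velocity law v(D) = 9.65 − 10.3·exp(−0.6 D) (Atlas et al.) and the function names are our assumptions; the text does not state which relations Dong et al. [34] used.

```python
import math

def fall_velocity(d_mm):
    """Terminal fall speed (m/s) of a drop of diameter d_mm (mm), from the
    empirical fit v = 9.65 - 10.3 exp(-0.6 D); clipped at 0 because the fit
    turns negative for very small drops."""
    return max(0.0, 9.65 - 10.3 * math.exp(-0.6 * d_mm))

def rain_rate_mm_h(diameters_mm, n_per_m3_mm, bin_widths_mm):
    """Rain rate (mm/h) from a binned drop size distribution N(D).

    diameters_mm  -- bin-centre diameters D_i (mm)
    n_per_m3_mm   -- concentrations N(D_i) (m^-3 mm^-1)
    bin_widths_mm -- bin widths dD_i (mm)
    """
    total = sum(n * fall_velocity(d) * d**3 * dd
                for d, n, dd in zip(diameters_mm, n_per_m3_mm, bin_widths_mm))
    # 6*pi*1e-4 = (pi/6) drop-volume factor * 1e-9 (mm^3 -> m^3) * 3.6e6 (m/s -> mm/h)
    return 6 * math.pi * 1e-4 * total

# One 1 mm bin holding 1000 drops per m^3 per mm.
print(round(rain_rate_mm_h([1.0], [1000.0], [1.0]), 2))  # 7.53
```

Because R scales with D³·v(D), a few large drops dominate the estimate, which is one reason these methods depend so strongly on image resolution and focus.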

Adopting an ML approach, researchers have devised robust algorithms for weather classification from single outdoor images, considering different weather categories: Sunny, Cloudy and Rainy [19]; Sunny and Cloudy [23,27]; Sunny, Rainy, Snowy and Haze [32]; and Sunny, Cloudy, Snowy, Rainy and Foggy [35].

Compared with image sequences or videos, single images contain self-sufficient information about the recorded scene, which decreases the amount of data that needs to be processed, stored and transferred, reducing computational resources and time.

Despite the diversity of purposes, methods and epistemological fields, all the studies on the visual effects of rain support the hypothesis that photographs can provide useful information on precipitation and that digital image acquisition devices can function as rain sensors. However, each study in the literature focused on a single type of image source: dashboard cameras, i.e., cameras mounted under the windshield of a vehicle [18,19,24]; surveillance cameras [20,34,36,37]; outdoor scenes [23,27,30,32,33,35]; and devices with adjustable shooting settings, e.g., exposure time, F-number, depth of field, etc. [21,26,31,34,37]. Proposed methods often exploit temporal information, requiring videos, frames or sequential still images [18,20,21,24,26,31,34,36,37]. Thus, the literature focuses on use cases with stringent operational requirements; it lacks heterogeneity and generalizability and tends to be device-specific. The final goal of this research is the characterization of rainfall through a dense network of low-cost sensors that supports traditional data acquisition and collection methods with a relatively expeditious solution, easily embeddable into smart devices, using DL techniques and benefiting from the aforementioned research progress and the advances in available technology.

The specific aim of this work is to investigate the use of as many image sources as possible as rain detectors, in order to obtain a sensing network that is as dense as possible and provides a large, informative and reliable database. As opposed to existing works, our model focuses on applicability to all easily accessible image acquisition devices (e.g., smartphones, general-purpose surveillance cameras, dashboard cameras, webcams, digital cameras, etc.), including pre-existing ones. This encompasses cases where it is not possible to adjust the camera parameters to emphasize the appearance of rainfall or to obtain image sequences or videos.

The proposed methodology has a number of attractive features: simplicity of application; adherence to physical-perceptual reality; and cost-effective observational and computational resources. In fact, the classification task was performed on single photographs taken in extremely heterogeneous conditions with commonly used tools. The input data can be obtained from different acquisition devices, in different lighting conditions and without special shooting settings, within the limits of visibility (i.e., human visual perception). Image sequences or videos are not required, so the method can also be applied to pre-acquired images taken for other purposes. To adhere to the physical-perceptual appearance of real rain, any form of digitally simulated rain was excluded from the input data.

In this study, we examine the case of rainfall detection, namely a supervised binary classification of single images answering the question: Is it raining or not?

2. Materials and Methods

The main advantage of the ML approach is that it uses algorithms that iteratively learn from data, allowing computers to solve problems without being explicitly programmed how to do so [38]. Using this approach, researchers have been able to provide robust algorithms for new and complex problems, including image classification in disparate contexts.

DL techniques, in particular CNN models, are widely used in image-classification applications and appeared especially suitable for this purpose, as they are inspired by the structural and functional characteristics of the animal visual cortex. A CNN-based approach was chosen since it is the state of the art among image recognition methods [39]. One benefit of CNNs is that they avoid the need to pre-process input data, since these algorithms recognize visual patterns directly in images represented by pixels. Traditional CV techniques use manually engineered feature descriptors for object detection, requiring a cumbersome process to decide which features are most descriptive or informative for the desired task. CNN models automate feature engineering: the machine is trained on the given data and discovers the underlying patterns in classes of images, automatically learning the most descriptive and salient features for the task at hand [40]. Deep architectures contain multiple levels of abstraction and can learn complex, non-linear and high-dimensional functions. The general CNN structure consists of a series of layers implementing feature extraction and classification: the convolution layers learn local patterns, while the densely connected layers learn global patterns in their input feature space.
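The local nature of convolution layers can be illustrated with a toy example. The sketch below applies one hand-made 3×3 filter by sliding cross-correlation, which is what a CNN convolution layer computes for a single filter (here without padding, stride or bias), followed by a ReLU. The filter, which responds to a thin bright vertical line loosely resembling a rain streak, is an illustrative assumption; in a trained CNN such filters are learned, not designed.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """'Valid' 2D cross-correlation, as computed by a CNN convolution layer
    with one filter, no padding, stride 1 and no bias."""
    ih, iw = image.shape
    kh, kw = kernel.shape
    out = np.empty((ih - kh + 1, iw - kw + 1))
    for r in range(out.shape[0]):
        for c in range(out.shape[1]):
            # Each output value depends only on a small local patch.
            out[r, c] = np.sum(image[r:r + kh, c:c + kw] * kernel)
    return out

# Hand-made filter responding to a bright vertical line (a crude "streak").
streak_kernel = np.array([[-1.0, 2.0, -1.0]] * 3)

img = np.zeros((6, 6))
img[:, 3] = 1.0                      # vertical bright line in column 3
response = conv2d_valid(img, streak_kernel)
relu = np.maximum(response, 0.0)     # non-linearity applied after convolution
print(relu.argmax(axis=1))           # [2 2 2 2]: output column 2 is centred on image column 3
```

A densely connected layer, by contrast, would combine all such local responses at once, which is why it is suited to global patterns.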

The task of detecting rainfall can be achieved by analyzing the perceptual aspects that determine whether a single photograph of a heterogeneous scene represents a condition of “presence of rain” or “absence of rain” (for obvious reasons, the two conditions are mutually exclusive classes). The scenario of interest for the proposed study is a case of supervised learning of the binary classification type [41]: the objective is to learn a mapping from known inputs $x$ (pictures) to outputs $y$ (labels), where $y \in \{1, \dots, C\}$, with $C$ being the number of classes. In the binary case $C = 2$; thus, it is assumed that there are two classes corresponding to the considered weather conditions: $C_1$ (presence of rain) and $C_2$ (absence of rain). The model aims to generate accurate predictions, associating a novel input (picture) with a label ($C_1$ or $C_2$ class).

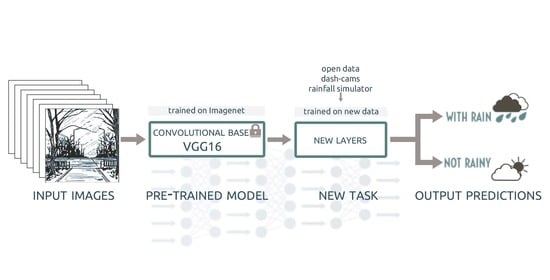

The non-trivial nature of the task and the small size of the available image dataset made transfer learning [42,43] one of the most effective strategies (Figure 1). This ML technique exploits the common visual semantics of images [44], employing a network pre-trained for feature extraction as the base for a new classifier trained on the new dataset. A major advantage of re-purposing a network previously trained on a large-scale image-classification benchmark dataset such as ImageNet [45] is the portability of the learned features. Thus, the spatial hierarchy of features can work as a generic model of the visual world for novel perceptual problems [46].

2.1. Dataset Creation

The entire dataset used in the different stages of the creation of the CNN model (training, validation and test) was built according to the essential requirements of the classification task. The detection of liquid precipitation was modeled as a supervised binary classification of single images. Each input instance was paired with exactly one output label describing its class. The classes for dichotomization (according to the presence or absence of visible rainfall) were “With Rain” (WR) and “No Rain” (NR).

In realistic rainfall detection scenarios, the accessible images are weather-degraded and exhibit large variability in the conditions under which they were taken. The dataset was meant to represent unconstrained and realistic image settings, in order to design a robust model capable of coping with these variations. The criteria for building the dataset were availability, representativeness of weather conditions, variety of locations, and diversity of capture times and lighting conditions. Each photograph depicted an outdoor scene; for pictures in the WR group, the alteration of the visual appearance caused by rainfall was perceptible to the human eye. Pictures with digitally synthesized rain were excluded. In particular, raindrops or streaks generated with photo editing software (e.g., Adobe Photoshop, GIMP, etc.) or with other computer graphics techniques, such as 3D modeling or rendering with graphics engines (e.g., Blender, Unity 3D, etc.), were not used. To increase scene heterogeneity and obtain a sufficiently large and balanced number of instances, different datasets with these characteristics were combined.

The first part of the dataset was obtained by selecting the images originally labeled as “sunny” and “rain” in the Image2Weather set [46], a large-scale, freely available dataset of single images grouped by weather condition (Figure 2).

The second source of images was a set of pictures taken by dashboard cameras mounted on vehicles moving around the Tokyo metropolitan area for 48 h starting on 19 August 2017, with an average of 4 shots per minute (© NIED, National Research Institute for Earth Science and Disaster Prevention, Tsukuba, Japan).

Figure 3 displays a map of the locations of the images collected through the front windshield of the vehicles. The ground truth was obtained by associating the rainfall rates retrieved from the high-precision multi-parameter radar data [47,48] of XRAIN (eXtended RAdar Information Network), operated by the Ministry of Land, Infrastructure, Transport and Tourism (MLIT) of Japan, with the images according to their capture time and GPS location. The minimum rainfall threshold for labeling images as belonging to the rainy class was 4 mm/h [49], to ensure the reliability of the association between the radar data and the position on the ground surface and to reduce the effects of the vertical displacement of smaller raindrops.
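The association between images and radar data can be sketched as a nearest-time, nearest-cell lookup. In the sketch below, the regular latitude/longitude grid, the `RadarFrame` class and the `label_image` function are hypothetical; only the 4 mm/h threshold comes from the text. Labeling an image NR only when the radar shows no rain, and discarding rates between 0 and 4 mm/h, is our assumption, since the text does not state how such intermediate values were handled.

```python
from __future__ import annotations

import bisect
from dataclasses import dataclass
from datetime import datetime
from typing import Optional

WR_THRESHOLD_MM_H = 4.0  # minimum radar rain rate for the "With Rain" class

@dataclass
class RadarFrame:
    """Hypothetical radar rain-rate grid (mm/h) on a regular lat/lon mesh."""
    time: datetime
    lat0: float   # latitude of the southern edge of row 0
    lon0: float   # longitude of the western edge of column 0
    dlat: float   # cell size in degrees of latitude
    dlon: float   # cell size in degrees of longitude
    rate: list[list[float]]  # rate[row][col]

    def rate_at(self, lat: float, lon: float) -> Optional[float]:
        row = int((lat - self.lat0) // self.dlat)
        col = int((lon - self.lon0) // self.dlon)
        if 0 <= row < len(self.rate) and 0 <= col < len(self.rate[0]):
            return self.rate[row][col]
        return None  # image taken outside the radar coverage

def label_image(t: datetime, lat: float, lon: float,
                frames: list[RadarFrame]) -> Optional[str]:
    """Return "WR", "NR" or None (ambiguous) for an image taken at (t, lat, lon).
    `frames` must be non-empty and sorted by time."""
    times = [f.time for f in frames]
    i = bisect.bisect_left(times, t)
    # Nearest radar frame in time: check the neighbours on both sides.
    nearest = min((j for j in (i - 1, i) if 0 <= j < len(frames)),
                  key=lambda j: abs((frames[j].time - t).total_seconds()))
    rate = frames[nearest].rate_at(lat, lon)
    if rate is None:
        return None
    if rate >= WR_THRESHOLD_MM_H:
        return "WR"
    return "NR" if rate == 0.0 else None  # assumption: 0 < rate < 4 discarded
```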

Finally, the third tranche of data was built through experimental activities in the Large-scale Rainfall Simulator of the NIED located in Tsukuba (Ibaraki prefecture, Japan), shown in Figure 4. The benefit of a large-scale rain simulator is that it allows experimental tests to be carried out in a relatively short time, reproducing events of different known intensities under controlled and repeatable conditions, including events with a rather rare occurrence frequency, such as showers and downpours with high return periods. This facility for simulating and measuring hydro-geological processes is the largest simulator in the world in terms of rainfall area (approximately 3000 m²) and sprinkling capacity. It can produce fairly accurate precipitation fields for rainfall intensities between 15 and 300 mm/h. The nozzles, installed 16 m above the ground surface, can reproduce the constant (terminal) fall speed of natural rainfall and generate raindrops with diameters ranging from 0.1 to 6 mm.

During the experiments, the rainfall intensity was held constant for 15 min intervals; the nominal values for each interval ranged from 20 to 150 mm/h and were assumed as ground truth. To increase scene heterogeneity, the photographs (Figure 4) were shot with 5 different devices: Canon XC10 (Oita Canon Inc., Oita, Japan), Sony DSC-RX10M3 (Sony Corporation, Tokyo, Japan), Olympus TG-2 (Olympus Corporation, Tokyo, Japan), XiaoYI YDXJ 2 (Xiaoyi Technology Co. Ltd., Shanghai, China) and the XiaoMi MI8 smartphone (Xiaomi Communications Co., Ltd., Beijing, China).

The total dataset consisted of 7968 color images from the different devices, saved in JPEG format (pixel resolution from 333 × 500 to 5472 × 3648 pixels). All the collected images were labeled and organized into the necessary non-overlapping categories [50], as shown in Table 1. The numbers of instances in the two classes were balanced.

To mitigate the overfitting caused by the small number of images, data augmentation was adopted to artificially create new training examples from the existing ones [51]. The random geometric transformations were chosen to be isometric and to guarantee physical compatibility with the natural appearance of meteorological phenomena. Other data augmentation methods (e.g., color space transformations, random erasing, image mixing, etc.) were avoided, since they would compromise the visual effects of rain in pictures. New pictures were generated by mirroring an original across a vertical axis (horizontal flip or reflection) and/or by a 2° rotation.
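The two geometric transformations can be sketched with NumPy alone. The sketch assumes an H×W×3 image array and nearest-neighbour resampling, so no new colour values are created; drawing the angle uniformly in [−2°, +2°] mirrors how Keras' `rotation_range` option behaves, but whether a fixed or random angle was used here is an assumption. Pixels rotated in from outside the frame take the nearest edge value, similar to Keras' default `fill_mode="nearest"`.

```python
import numpy as np

def horizontal_flip(img):
    """Mirror the image across its vertical axis (left-right reflection)."""
    return img[:, ::-1]

def rotate(img, angle_deg):
    """Rotate an H x W (x C) image about its centre by angle_deg degrees
    using nearest-neighbour inverse mapping; out-of-frame samples are
    clamped to the nearest edge pixel."""
    h, w = img.shape[:2]
    theta = np.deg2rad(angle_deg)
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    ys, xs = np.mgrid[0:h, 0:w]
    # Inverse mapping: for each output pixel, find its source location.
    src_x = np.cos(theta) * (xs - cx) + np.sin(theta) * (ys - cy) + cx
    src_y = -np.sin(theta) * (xs - cx) + np.cos(theta) * (ys - cy) + cy
    src_x = np.clip(np.rint(src_x), 0, w - 1).astype(int)
    src_y = np.clip(np.rint(src_y), 0, h - 1).astype(int)
    return img[src_y, src_x]

def augment(img, rng, max_angle=2.0):
    """Random horizontal flip plus a small random rotation; colours untouched."""
    if rng.random() < 0.5:
        img = horizontal_flip(img)
    return rotate(img, rng.uniform(-max_angle, max_angle))
```

Both operations are isometries of the image plane, so rain streaks keep their length, thickness and near-vertical orientation.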

2.2. Model Architecture

The deep learning model was implemented entirely with open-source tools, namely the R programming language in the RStudio Integrated Development Environment, using the Keras framework with the TensorFlow backend engine [46].

A transfer learning approach was adopted for feature extraction, choosing the VGG16 network [52] as the convolutional base because of the generality and portability of its learned features, and excluding the densely connected classifier on its top. The VGG16 network was trained on 1.4 million labeled images of the ImageNet dataset covering 1000 different classes (mainly animals and everyday objects), achieving a top-5 classification accuracy of 92.7%. Since the convolutional base of VGG16 has 14,714,688 parameters, it was frozen before compiling and training the new network. Freezing was necessary to prevent these weights from being updated during training of the new network, so as to preserve the previously learned representations and avoid the overfitting that can result from fine-tuning [43]. The model was extended by adding new layers on top (Table 2) in order to obtain a new classifier capable of generating predictions for the two desired output classes (i.e., predicting whether the picture represents rainy conditions, WR, or not, NR). The dropout layer, which randomly drops input units and their connections with probability $p$, was used as a regularization technique to reduce possible overfitting during the learning phase and thus preserve the generalizability of the algorithm.
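The parameter count quoted above can be checked by hand: the VGG16 convolutional base consists of thirteen 3×3 convolution layers (each with biases) interleaved with five max-pooling layers, which have no parameters. The short script below reproduces the figure; it counts only the convolutional base and makes no assumption about the new layers of Table 2.

```python
# VGG16 convolutional base: number of filters per 3x3 conv layer;
# "M" marks a 2x2 max-pooling layer (no trainable parameters).
VGG16_CONV = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
              512, 512, 512, "M", 512, 512, 512, "M"]

def conv_base_params(config, in_channels=3, kernel=3):
    """Count weights and biases of a stack of square convolutions."""
    total = 0
    for layer in config:
        if layer == "M":
            continue
        # kernel*kernel*in*out weights, plus one bias per output filter
        total += kernel * kernel * in_channels * layer + layer
        in_channels = layer
    return total

print(f"{conv_base_params(VGG16_CONV):,}")  # 14,714,688
```

In the R interface to Keras, freezing is typically done with `freeze_weights(conv_base)`, and in Python Keras with `conv_base.trainable = False`; either way, all of these parameters are excluded from gradient updates and only the added layers are trained.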

3. Results

3.1. Training and Validation

The labeled pictures of the training set (Table 1) constituted known examples of ordered input-output pairs (image, WR or NR class) fed to the network so that the final model can make predictions from new inputs.

The CNN was initialized with random values of the trainable parameters. Then, for each processed instance, the optimizer automatically updated the values of the weights through the error backpropagation algorithm. The optimization procedure modified the trainable parameters of the model in order to minimize the cost function and thus maximize the prediction accuracy. The dropout rate was set to 25%, meaning that one in four inputs was randomly excluded from each update cycle during the training phase. The binary cross-entropy between the training labels and the model’s predictions was used as the cost function [44]. A disjoint validation set provided an unbiased evaluation of the model fit on the training dataset while the model’s hyperparameters were tuned, thus preserving its generalization ability.
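The effect of a 25% dropout rate can be sketched as follows. This is the common “inverted dropout” formulation (the one documented for the Keras `Dropout` layer), in which surviving activations are scaled by 1/(1 − p) during training so that no rescaling is needed at inference; the function name and array size are illustrative.

```python
import numpy as np

def dropout(x, rate, rng, training=True):
    """Inverted dropout: zero each unit with probability `rate` and
    rescale the survivors by 1 / (1 - rate) during training."""
    if not training or rate == 0.0:
        return x  # identity at inference time
    keep = rng.random(x.shape) >= rate
    return np.where(keep, x / (1.0 - rate), 0.0)

rng = np.random.default_rng(42)
x = np.ones(100_000)
y = dropout(x, 0.25, rng)
print((y == 0).mean(), y.mean())  # close to 0.25 (dropped share) and 1.0 (preserved mean)
```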

The training configuration setup can be summarized as follows:

Data Augmentation: horizontal flip, 2° rotation

Loss: binary cross-entropy, used as the cost function for monitoring and improving the model’s skill

Optimizer: RMSprop, which implements the Root Mean Square Propagation algorithm [53] to update the network weights

Metric: accuracy, i.e., the proportion of correctly classified instances

Learning rate:
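For reference, one RMSprop step keeps a moving average of squared gradients and divides each gradient by the square root of that average, so every weight gets its own adaptive step size. The sketch below uses ρ = 0.9 (a common default) and places ε inside the square root; the learning rate and the toy quadratic objective are illustrative assumptions, not the values used in this study.

```python
import numpy as np

def rmsprop_step(w, grad, cache, lr=1e-3, rho=0.9, eps=1e-7):
    """One RMSprop update; `cache` is the running mean of squared gradients."""
    cache = rho * cache + (1.0 - rho) * grad**2
    w = w - lr * grad / np.sqrt(cache + eps)
    return w, cache

# Toy example: minimise f(w) = sum((w - 3)^2), whose gradient is 2 (w - 3).
w = np.zeros(2)
cache = np.zeros_like(w)
for _ in range(2000):
    w, cache = rmsprop_step(w, 2.0 * (w - 3.0), cache, lr=1e-2)
print(w)  # close to [3. 3.]
```

With a small learning rate, the normalized steps stay small and smooth, which is consistent with the stable training curves described next.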

Figure 5 illustrates the predictive effectiveness of the network by plotting the accuracy (acc) and loss (loss) values over successive iterations (epoch), calculated on the known data, i.e., training, and on the unknown data, i.e., validation. During the first 15 epochs, accuracy increased while the loss decreased steadily, without abrupt changes, indicating stable training. After 17 epochs, the accuracy reached a stable value of ≈87% for the training set and ≈84% for the hold-out validation set. The loss stalled at ≈0.30 for the training set and ≈0.33 for the validation set. In the subsequent epochs, the training and validation curves stopped improving significantly. The small gap between the curves of the two subsets indicates that the network converged to a point of stability without over-learning the training data.

The training was stopped after 30 epochs to avoid degradation of the model’s performance [44] due to possible overfitting, which is graphically identifiable as a divergence between the training and validation curves for each metric.

During the 30 epochs (complete presentations of the entire training and validation datasets), the learning rate was set to a fairly low value to maintain stability [44]. The trained model reached an accuracy of 88.95% on the training set and 85.47% on the validation set, and a loss (Murphy 2012) of 0.27 and 0.33, respectively.

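The loss (binary cross-entropy, CE) was calculated as follows:

$$CE = -\sum_{i=1}^{C} t_i \log\big(f(s_i)\big), \qquad f(s_i) = \frac{1}{1 + e^{-s_i}}$$

where $t_i$ and $s_i$ are, respectively, the ground truth and the raw score for each class $i$ in $C$, and $f$ is the logistic sigmoid activation function, which is necessary for the output to be interpreted as a probability.

The case of interest is a binary classification problem, where $C = 2$. It is assumed that there are two classes, $C_1$ and $C_2$; thus, $t_1$ and $f(s_1)$ are the ground truth and the probability value for $C_1$, and $t_2 = 1 - t_1$ and $f(s_2) = 1 - f(s_1)$ are the ground truth and the output score for $C_2$, as the classes are mutually exclusive. Substituting these into the sum gives

$$CE = -t_1 \log\big(f(s_1)\big) - (1 - t_1)\log\big(1 - f(s_1)\big)$$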

These values are far from those of a random predictor in a balanced binary problem: modeling the random predictor as a “fair coin” [41,54], where tossing is a discrete-time stochastic process with a binary output, the non-informative values correspond to an accuracy of 50% and a loss of 0.69 (i.e., ln 2).
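A minimal sketch of the binary cross-entropy defined above, averaged over a set of predictions with natural logarithms, and of the fair-coin baseline: a predictor that always outputs 0.5 obtains a loss of ln 2 ≈ 0.693 whatever the labels. Clipping probabilities to avoid log(0) is an implementation detail we assume; the labels and predictions are illustrative.

```python
import math

def binary_cross_entropy(t, p, eps=1e-7):
    """Mean BCE between ground truths t (1 for C1, 0 for C2) and p = f(s_1)."""
    total = 0.0
    for ti, pi in zip(t, p):
        pi = min(max(pi, eps), 1.0 - eps)  # avoid log(0)
        total += -(ti * math.log(pi) + (1 - ti) * math.log(1 - pi))
    return total / len(t)

labels = [1, 0, 1, 0]
print(round(binary_cross_entropy(labels, [0.5] * 4), 4))                # 0.6931 (fair coin)
print(round(binary_cross_entropy(labels, [0.9, 0.1, 0.8, 0.3]), 4))     # 0.1976
```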

3.2. Test

To evaluate the performance of the proposed algorithm, the trained model was applied to the test dataset to give an unbiased estimate of model skill. The test set is independent of the training and validation datasets but follows the same probability distribution. To assess the quality of the predictions on new pictures, the confusion matrix (Table 3) was constructed over the entire test set, cross-tabulating the predicted classes (model responses) against the actual classes.

Taking the WR label (presence of rain) as the positive class and NR (absence of rain) as the negative class, the numbers of True Positives (TP), False Positives (FP), True Negatives (TN) and False Negatives (FN) were counted to calculate the evaluation metrics.
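The usual metrics follow directly from these four counts. The sketch below computes them with WR as the positive class; the counts in the usage line are hypothetical and are not the values of Table 3.

```python
def classification_metrics(tp, fp, tn, fn):
    """Standard confusion-matrix metrics with WR (rain) as the positive class."""
    sensitivity = tp / (tp + fn)   # recall, true positive rate
    specificity = tn / (tn + fp)   # true negative rate
    precision = tp / (tp + fp)     # positive predictive value
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "npv": tn / (tn + fn),     # negative predictive value
        "f1": 2 * precision * sensitivity / (precision + sensitivity),
    }

# Hypothetical counts, for illustration only.
m = classification_metrics(tp=90, fp=20, tn=80, fn=10)
print({k: round(v, 3) for k, v in m.items()})
# {'accuracy': 0.85, 'sensitivity': 0.9, 'specificity': 0.8, 'precision': 0.818, 'npv': 0.889, 'f1': 0.857}
```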

The accuracy (with a significant p-value) and the cross-entropy loss calculated on the test set are 85.28% and 0.34, respectively. Both are significantly different from those of a random prediction and consistent with the metrics obtained in the training and validation phases. To measure the rain detection performance, several metrics were computed and compared with reference values [41,55,56], as reported in Table 4.
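The text does not specify how the p-value was computed. One common choice, for instance the “P-Value [Acc > NIR]” reported by R’s `caret::confusionMatrix`, is a one-sided exact binomial test of whether the accuracy exceeds the no-information rate, which is 0.5 for balanced classes. A sketch of that test under this assumption, with hypothetical counts:

```python
from fractions import Fraction
from math import comb

def accuracy_p_value(correct, n, nir=Fraction(1, 2)):
    """One-sided exact binomial test: P(X >= correct) for X ~ Bin(n, nir)."""
    nir = Fraction(nir)
    tail = sum(comb(n, k) * nir**k * (1 - nir)**(n - k)
               for k in range(correct, n + 1))
    return float(tail)  # exact rational arithmetic, converted at the end

print(accuracy_p_value(6, 10))     # 0.376953125: 60% on 10 images is not significant
print(accuracy_p_value(170, 200))  # a tiny value: 85% on 200 images is far from chance
```

Exact fractions avoid the underflow and rounding issues of summing very small floating-point terms.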

From the results, it is evident that the model exhibits good overall predictive reliability. The sensitivity (>90%) is higher than the specificity (>80%). This means that the test is responsive in detecting rainy conditions but prone to reporting false positives. In the application field of interest, i.e., urban flood risk management, high sensitivity is desirable: missing cases of rainfall could lead to delays in early warning systems. The detector readily flags rainy pictures, ensuring that dangerous pluviometric forcing is recognized. On the other hand, the classification was performed on balanced classes, whereas in reality absence of rain is more likely to occur. Hence, specificity is important to avoid unnecessary alerts.
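The argument about class balance can be made quantitative with Bayes’ rule: the precision of the detector depends on how often rain actually occurs. The sketch below uses sensitivity 0.90 and specificity 0.80, the lower bounds reported above, together with hypothetical shares of rainy images; it illustrates the reasoning and is not a result of this study.

```python
def precision_at_prevalence(sensitivity, specificity, prevalence):
    """Positive predictive value (precision) for a given share of rainy images."""
    tp = sensitivity * prevalence              # expected true-positive fraction
    fp = (1 - specificity) * (1 - prevalence)  # expected false-positive fraction
    return tp / (tp + fp)

for prevalence in (0.5, 0.2, 0.1):  # hypothetical shares of rainy pictures
    p = precision_at_prevalence(0.90, 0.80, prevalence)
    print(f"prevalence {prevalence:.0%}: precision {p:.2f}")
# prevalence 50%: precision 0.82
# prevalence 20%: precision 0.53
# prevalence 10%: precision 0.33
```

With balanced classes, about four alerts in five would be correct; if only one image in ten showed rain, the same detector would be right about a third of the time, which is why specificity matters operationally.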

The false positives may be explained by lens flare caused by sunlight and artificial lighting, which produces artifacts that may resemble raindrops accumulated on the lens. Closer examination of the results reveals some ambiguity in the visual appearance of the misclassified pictures and hence a limitation of our system: it is not suited to scenes that are also misleading to human vision.

3.3. Model Deployment

To estimate the skill of the proposed model in a real-world operational setting, a completely new set of input images was built. Whereas the first test set was obtained from the same sources as the training and validation sets (Table 1), for the model deployment the pictures came from a Reolink surveillance camera installed by TIM s.p.a. within the “Bari Matera 5G project” (http://www.barimatera5g.it/, accessed on 1 January 2021), without any special setting aimed at enhancing precipitation visibility. In fact, the webcam had originally been set up for the Public Safety use case within the 5G network connectivity tests. The camera frames piazza Vittorio Veneto, the main square of Matera (Italy), and its hypogeum (Figure 6).

The experimental test set is strongly independent of the training and validation datasets. It contains 2360 unseen examples: 1180 instances of the WR class and 1180 of the NR class. The resolution of the color pictures was 2560 × 1920 pixels (Table 1). The ground truth was created by manually labeling the pictures that displayed precipitation streaks or raindrops and selecting the same number of frames that displayed a clean background, covering as many lighting conditions as possible (i.e., diverse capture times). The presence of rain was verified with precipitation data collected from both the JAXA Global Rainfall Watch (GSMaP Project) (https://sharaku.eorc.jaxa.jp/GSMaP, accessed on 1 January 2021) and the Centro Funzionale Decentrato Basilicata (http://www.centrofunzionalebasilicata.it/, accessed on 1 January 2021).

The trained model was used to generate predictions on the TIM test dataset.

Figure 7 shows the Receiver Operating Characteristic (ROC) curve and the Youden index [57,58] calculated on the output scores. The dashed grey line represents the non-informative values (chance line) of a random classifier. The ROC curve (blue line) plots the true positive rate (sensitivity) against the false positive rate (1 − specificity) obtained at various thresholds. The area under the ROC curve and its confidence interval suggest that the model predicts rainfall presence very well. The optimal cutoff value was set at the point that maximizes the Youden index.
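The ROC analysis can be reproduced from the output scores alone. The sketch below sweeps the decision threshold over the observed scores, computes the area under the curve with the trapezoidal rule, and picks the cutoff that maximizes the Youden index J = sensitivity + specificity − 1 = TPR − FPR. The scores and labels are hypothetical, and confidence intervals for the AUC (e.g., DeLong’s method) are not covered.

```python
def roc_points(scores, labels):
    """(FPR, TPR, threshold) triples, predicting WR when score >= threshold."""
    pos = sum(labels)
    neg = len(labels) - pos
    ranked = sorted(zip(scores, labels), key=lambda p: p[0], reverse=True)
    points = [(0.0, 0.0, float("inf"))]
    tp = fp = i = 0
    while i < len(ranked):
        thr = ranked[i][0]
        while i < len(ranked) and ranked[i][0] == thr:  # tied scores together
            if ranked[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / neg, tp / pos, thr))
    return points

def auc(points):
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0, _), (x1, y1, _) in zip(points, points[1:]))

def youden_cutoff(points):
    """Threshold maximising J = TPR - FPR (first one in case of ties)."""
    fpr, tpr, thr = max(points, key=lambda p: p[1] - p[0])
    return thr, tpr - fpr

# Hypothetical scores (probability of WR) and ground truths (1 = WR, 0 = NR).
scores = [0.9, 0.8, 0.7, 0.6, 0.55, 0.4, 0.3, 0.2]
labels = [1, 1, 0, 1, 0, 1, 0, 0]
pts = roc_points(scores, labels)
print(auc(pts), youden_cutoff(pts))  # 0.8125 (0.8, 0.5)
```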

Both the TIM image set and the binary test set yield significant outcomes.

The confusion matrix is given in Table 5.

The overall accuracy (with a significant p-value) and the cross-entropy loss computed on the TIM image set are 85.13% and 0.396, respectively. The other metric values were similar to those obtained on the test set (Table 6), indicating rather high predictive effectiveness [41,55,56].

Compared with the test results, the model deployed on the TIM camera pictures shows lower sensitivity (≈83%) but higher specificity (≈87%) and precision (≈86%). This means that the pictures flagged as WR were more likely to be actually rainy, so the alerts are likely to be true. On the other hand, the higher false negative rate may lead to an underestimation of the pluviometric forcing.

The F1 score, which balances recall and precision (ideal value 1), remains high in both the test evaluation and the deployment experiment. The model withstood the drastic change in input data, as the deployment images were completely new.

Figure 8 shows a frame taken by the TIM surveillance camera in rainy conditions and a visual explanation of the CNN classification obtained with Gradient-weighted Class Activation Mapping (Grad-CAM) [59]. The heatmap highlights the discriminative regions within the input image that are relevant for the classifier’s predictions. Interestingly, the parts of the image judged most rain-like (strongly activated in the Grad-CAM) by our CNN are the portions of the scene obstructed by raindrops accumulated on the lens of the surveillance camera.
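Given the activations A^k of the last convolutional layer and the gradients of the class score with respect to them, Grad-CAM [59] weights each feature map by its spatially averaged gradient and keeps only the positive part of the weighted sum. The sketch below shows that final computation in NumPy; extracting the activations and gradients from the trained network, and upsampling the map to the image size, are outside its scope, and the random arrays are placeholders.

```python
import numpy as np

def grad_cam(activations, gradients):
    """Grad-CAM heatmap from conv activations and class-score gradients.

    activations, gradients -- arrays of shape (H, W, K) for K feature maps.
    Returns an (H, W) map scaled to [0, 1]."""
    weights = gradients.mean(axis=(0, 1))        # alpha_k: spatially averaged gradients
    cam = np.tensordot(activations, weights, axes=([2], [0]))  # sum_k alpha_k * A^k
    cam = np.maximum(cam, 0.0)                   # ReLU keeps positive evidence only
    peak = cam.max()
    return cam / peak if peak > 0 else cam

rng = np.random.default_rng(0)
A = rng.random((14, 14, 512))      # e.g. VGG16's last conv block for a 224 x 224 input
G = rng.normal(size=(14, 14, 512))
heatmap = grad_cam(A, G)
print(heatmap.shape, heatmap.min() >= 0.0, heatmap.max() <= 1.0)
```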

4. Discussion

The results of the model test and the deployment on the real use case are encouraging for the binary classification problem that detects the presence or the absence of precipitation.

To assess the classification effectiveness of our method, we compare it with other state-of-the-art methods for rain detection from a single image [19,32,35]. Because no public implementation of these algorithms is available, the comparison was made in terms of the performance metrics reported in the literature. The model and features used, image source, classification labels, overall accuracy and rain class sensitivity are given in Table 7.

Table 7 shows that the AdaBoost-based model [19] achieves the best accuracy of all the methods. Comparing the sensitivity obtained by our model on the test set with that reported in [19], we observe similar values. However, the model in [19] uses engineered features tailored to an in-vehicle vision system (i.e., road information) for classification. As a consequence, its accuracy may degrade abruptly in unconstrained scenarios, as shown by the results of generic outdoor scene classification reported in [32]. The results in less restrictive settings reveal that our method offers performance advantages in both the test and the real-world deployment scenarios: in both cases, the accuracy and sensitivity obtained by our model are higher than those obtained with the approaches of Yan et al. [19], Zhang et al. [32] and Chu et al. [35]. This result may be explained by two main differences between our approach and the aforementioned studies. First, our method uses pre-learned filters for feature extraction (frozen VGG16 parameters, Table 2), so it does not require handcrafted feature descriptors for object detection, which are susceptible to recall bias. Second, the trainable part of our network (Table 2) learns from a dataset that exhibits large variability and includes both static and in-vehicle cameras, whereas the other studies tend to be device-specific.
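The split between a frozen feature extractor and a trainable classifier can be illustrated without a deep-learning framework. In the NumPy sketch below, a fixed random projection followed by ReLU merely stands in for the frozen VGG16 convolutional base (it is not VGG16), and a logistic-regression head is trained by gradient descent; only the head parameters are ever updated. All class and function names are hypothetical, and the actual head architecture is the one reported in Table 2, which is not reproduced here.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    # Clip logits to avoid overflow in np.exp for very confident predictions.
    return 1.0 / (1.0 + np.exp(-np.clip(z, -30.0, 30.0)))


class FrozenBackbone:
    """Stand-in for a pretrained convolutional base such as frozen VGG16.

    It is only a fixed random projection followed by ReLU; its weights are
    created once and never updated during training.
    """

    def __init__(self, in_dim: int, feat_dim: int, seed: int = 0):
        gen = np.random.default_rng(seed)
        self.W = gen.normal(size=(in_dim, feat_dim)) / np.sqrt(in_dim)

    def __call__(self, x: np.ndarray) -> np.ndarray:
        return np.maximum(x @ self.W, 0.0)


def train_head(feats: np.ndarray, y: np.ndarray, lr: float = 0.1, epochs: int = 300):
    """Fit a logistic-regression head (1 = rain) on frozen features by gradient descent."""
    w = np.zeros(feats.shape[1])
    b = 0.0
    for _ in range(epochs):
        # Per-sample gradient of the binary cross-entropy with respect to the logits.
        grad_logits = sigmoid(feats @ w + b) - y
        w -= lr * feats.T @ grad_logits / len(y)
        b -= lr * grad_logits.mean()
    return w, b


def predict(backbone: FrozenBackbone, w: np.ndarray, b: float, x: np.ndarray) -> np.ndarray:
    """Binary rain / no-rain decision for each row of x."""
    return sigmoid(backbone(x) @ w + b) >= 0.5
```

Freezing the base means the features are computed once per image and only a small number of parameters must be learned from the rain dataset, which is what allows the head to be trained on heterogeneous images without retraining the convolutional filters.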

Hence, the results surpass earlier work in this area in terms of input heterogeneity, as the model can handle images captured with both static and moving cameras without significant loss of accuracy. Our algorithm is simple, flexible and robust, and requires no additional processing steps.

The proposed approach accepts heterogeneous and easily produced input data: images can be acquired from disparate sources under different lighting conditions, without particular requirements on the shooting settings (although some settings may favor the visibility of precipitation), and, since each image is classified independently, detection does not require image sequences, continuous shooting or video. Once training is complete, classification times are very short.

This approach is especially useful in urban areas, where video and image capture devices are ubiquitous and measurement instruments such as rain gauges face installation difficulties or operational limitations, as well as in contexts where remote sensing data are unavailable.

The result is a rapid and simple solution. As demonstrated in the experiment on a real-world use case, it is immediately applicable at an operational level and easily embeddable in intelligent devices. The experimental activity within the “Bari Matera 5G” project offers a promising perspective on Smart Environmental Monitoring using 5G connectivity. The system can be managed locally, remotely or via the cloud by programming electronic devices (such as smart cameras or IoT devices) to record and transmit rain data. The model can retrieve rainfall information by processing data collected from fixed sources (surveillance cameras) or moving ones (smartphones, dash-cams and action-cams), complementing traditional monitoring networks and forecast systems.
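To make the idea of devices recording and transmitting rain data concrete, the sketch below builds a per-frame detection record and serializes it to JSON. The message format used in the “Bari Matera 5G” experiment is not described here, so every field name, the `make_record` helper and the JSON encoding are hypothetical choices for illustration only.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class RainDetection:
    """Hypothetical per-frame detection record (field names are illustrative)."""
    device_id: str
    timestamp: str           # ISO 8601, UTC
    latitude: float
    longitude: float
    mobile: bool             # True for smartphones/dash-cams, False for fixed cameras
    rain_probability: float  # classifier output for the rain class
    rain: bool               # thresholded decision


def make_record(device_id: str, lat: float, lon: float, mobile: bool, p_rain: float,
                threshold: float = 0.5, when: Optional[datetime] = None) -> RainDetection:
    when = when or datetime.now(timezone.utc)
    # bool()/float() keep the record JSON-serializable even if p_rain is a NumPy scalar.
    return RainDetection(device_id, when.isoformat(), lat, lon, mobile,
                         round(float(p_rain), 4), bool(p_rain >= threshold))


def to_json(record: RainDetection) -> str:
    return json.dumps(asdict(record), sort_keys=True)
```

Keeping both the probability and the thresholded decision lets a receiving server re-threshold or aggregate detections from many devices without re-running the CNN.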

These findings corroborate the idea in [27] that CNN-based classification outperforms engineered feature representations for weather-related vision tasks, owing to the CNN's ability to learn nonlinear mappings and to take into account many factors, including low-level abstractions provided by a pre-trained convolutional base (transfer learning).

The major limitation of the proposed approach is intrinsic to its output: detecting the presence of precipitation (without quantifying it) is a necessary but not sufficient condition for creating a camera-based rain gauge network. An additional uncontrolled factor, common to all vision-based methods, is possible ambiguity in the pictures: artifacts due to dirty lenses and flare can lead to false positives, while the visual effects of precipitation are often inconspicuous even to human observers. Misclassification errors can be compensated for by aggregating many observations. The simplicity of our approach will make it easy and virtually cost-free to embed the proposed CNN in existing outdoor vision systems and thereby increase the spatial density of rain observations. Rainfall detection is performed on single images that can be collected in a very short time; therefore, very high temporal resolution is possible in principle.
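One simple way in which many observations can compensate for misclassification is a majority vote over several independent decisions covering the same area and time window. If each decision is wrong independently with probability ε < 0.5, the vote of an odd number n of decisions is wrong with probability equal to the sum over k > n/2 of C(n, k) ε^k (1 − ε)^(n − k), which decreases as n grows. The sketch below assumes such independence, which is optimistic because cameras sharing the same weather and lighting can make correlated errors; the function names are hypothetical.

```python
from math import comb
from typing import Iterable


def majority_vote(decisions: Iterable[bool]) -> bool:
    """Return True (rain) if strictly more than half of the decisions say rain."""
    votes = list(decisions)
    if not votes:
        raise ValueError("no observations")
    return sum(votes) * 2 > len(votes)


def majority_error(n: int, eps: float) -> float:
    """P(the majority of n independent decisions is wrong), each wrong w.p. eps.

    n must be odd so that ties cannot occur.
    """
    if n % 2 == 0:
        raise ValueError("use an odd number of observations")
    return sum(comb(n, k) * eps**k * (1 - eps) ** (n - k) for k in range(n // 2 + 1, n + 1))
```

For example, with a per-image error of 10%, a vote over three images errs with probability 2.8% and a vote over five with probability of about 0.86%, under the independence assumption.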

The proposed transfer learning technique can also be combined with incremental learning algorithms [60,61]. Incremental learning extends an existing model's knowledge without access to the original data, by continuously learning from new input data while preserving previously acquired knowledge. In this way, the network could become more robust to rainfall intensity (e.g., light rainfall rates), background and other environmental effects, and could accommodate different use-case scenarios (e.g., setting site-specific rainfall thresholds or detecting snowfall).
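The specific algorithms of [60,61] are not reproduced here. As a swapped-in illustration of the general idea, the sketch below updates a logistic classification head using only a new batch of (frozen) features, while a quadratic penalty (λ/2)‖w − w_old‖² keeps the weights close to those learned before; this is an L2-SP-style regularizer chosen for simplicity, and the function name and default values are hypothetical.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    # Clip logits to avoid overflow in np.exp.
    return 1.0 / (1.0 + np.exp(-np.clip(z, -30.0, 30.0)))


def incremental_update(w_old: np.ndarray, b_old: float, feats_new: np.ndarray,
                       y_new: np.ndarray, lam: float = 1.0, lr: float = 0.1,
                       epochs: int = 200):
    """Update a logistic head on a new batch only, anchored to the old weights.

    Minimizes mean BCE(new data) + (lam / 2) * ||w - w_old||^2 by gradient
    descent; the original training data are not needed. The bias is not
    anchored. Keep lr * lam below 2 so the penalty term stays stable.
    """
    w, b = w_old.copy(), float(b_old)
    n = len(y_new)
    for _ in range(epochs):
        grad_logits = sigmoid(feats_new @ w + b) - y_new
        grad_w = feats_new.T @ grad_logits / n + lam * (w - w_old)
        w -= lr * grad_w
        b -= lr * grad_logits.mean()
    return w, b
```

The value of λ trades plasticity against stability: λ = 0 reduces to plain fine-tuning on the new data, whereas large values keep the head close to its previous state.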

5. Conclusions

In this study, we proposed a DL method for detecting rainfall from single photographs taken under highly generalizable conditions, with no need for parameter adjustment, photometric constraints or image pre-processing. It can be applied to any outdoor scene within the limits of human visibility.

Taken together, the results suggest that using a DL method is an efficient strategy for the CV task of gathering rainfall information with generic cameras as low-cost sensors. This finding, while preliminary, can help define novel strategies for local-scale meteorological-hydrological studies in urban areas. Despite its exploratory nature, the proposed approach offers a first near real-time tool based on artificial vision techniques that can contribute to a supporting sensor network for rainfall characterization, by monitoring the onset and end of rain-related events, with a parsimonious use of economic and computational resources.
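Monitoring the onset and end of rain-related events requires turning a stream of noisy per-image decisions into events. A minimal sketch, assuming time-ordered decisions from a single camera and a simple hysteresis rule that is not part of the proposed method: onset is declared after `k_on` consecutive rain detections and the end after `k_off` consecutive no-rain detections. The function name and both parameters are hypothetical.

```python
from typing import List, Sequence, Tuple


def rain_events(times: Sequence[float], rain: Sequence[bool],
                k_on: int = 3, k_off: int = 3) -> List[Tuple[float, float]]:
    """Extract (onset, end) times from time-ordered per-image rain decisions.

    Onset = time of the first of k_on consecutive rain detections;
    end   = time of the first of k_off consecutive no-rain detections.
    An event still open at the end of the series is closed at the last timestamp.
    """
    events: List[Tuple[float, float]] = []
    raining = False
    run = 0
    run_start = None
    onset = None
    for t, r in zip(times, rain):
        # Count consecutive frames that disagree with the current state.
        if r != raining:
            if run == 0:
                run_start = t
            run += 1
        else:
            run = 0
        if not raining and run >= k_on:
            raining, onset, run = True, run_start, 0
        elif raining and run >= k_off:
            events.append((onset, run_start))
            raining, run = False, 0
    if raining:
        events.append((onset, times[-1]))
    return events
```

For example, with times 0 to 11 and decisions F, T, F, T, T, T, T, F, T, F, F, F, the function returns [(3, 9)]: both the isolated detection at t = 1 and the isolated no-rain frame at t = 7 are suppressed.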

The limited operational requirements allowed the proposed model to be applied immediately in experiments on real use cases. Our CNN can easily exploit pre-existing devices and is practical for systems that need to be implemented quickly. The experimentation raises the possibility that real rain images captured by already-installed surveillance cameras can help in setting up a system for monitoring, and warning of, the pluviometric forcing that triggers rain-related events.

This information can be used to develop targeted interventions aimed at building up an information infrastructure that integrates low-cost sensor and crowdsourced data and meets future needs in terms of spatial and temporal resolution, scalability, heterogeneity, complexity and dynamicity.

Future work concerns the development and testing of another CNN, based on the proposed methodology, that allows the quantitative characterization of precipitation. Rainfall intensity will be classified into ranges; the dataset will be expanded in the number of instances and improved according to criteria of non-ambiguity and availability of known rainfall intensity values; and the model will be properly re-adapted, evaluated, calibrated and fine-tuned to meet the needs of hydro-meteorological monitoring.
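The intensity ranges for the planned quantitative extension are not specified here. Purely as an illustration of classifying rainfall rates into ranges, the sketch below uses the light/moderate/heavy boundaries of 2.5 and 7.6 mm/h given in the AMS Glossary of Meteorology, plus a "no rain" class for zero; these boundaries, the labels and the function name are assumptions, not choices made in this study.

```python
from bisect import bisect_left

# Illustrative inclusive upper bounds in mm/h (AMS Glossary style); not the study's ranges.
BOUNDS = (0.0, 2.5, 7.6)
LABELS = ("no rain", "light", "moderate", "heavy")


def intensity_class(rate_mm_h: float) -> str:
    """Map a rainfall rate in mm/h to an intensity range label."""
    if rate_mm_h < 0:
        raise ValueError("rainfall rate cannot be negative")
    # bisect_left places a value equal to a bound in the lower class,
    # so each bound is the inclusive upper limit of its range.
    return LABELS[bisect_left(BOUNDS, rate_mm_h)]
```

Framing intensity as ordered ranges keeps the problem a classification task, so the same transfer learning pipeline could in principle be reused with a multi-class output instead of a binary one.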

Abbreviations

ML: Machine Learning
CV: Computer Vision
DL: Deep Learning
CNN: Convolutional Neural Network
ROC: Receiver Operating Characteristic