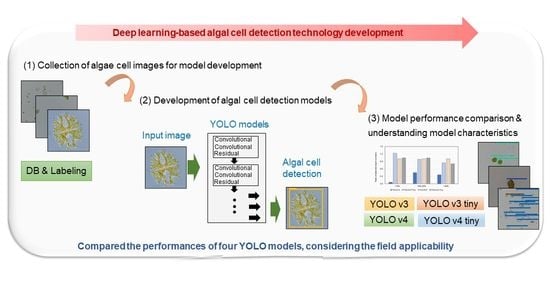

Deep Learning-Based Algal Detection Model Development Considering Field Application

Abstract

1. Introduction

2. Material and Methods

2.1. Model Selection

2.2. Data Sources

2.3. Model Training Environment

2.4. Effect of the Relative Object Size within the Image

2.5. Model Evaluation

3. Results and Discussion

3.1. Model Simulation Results

3.2. Effect of the Relative Object Size

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Codd, G.A.; Morrison, L.F.; Metcalf, J.S. Cyanobacterial toxins: Risk management for health protection. Toxicol. Appl. Pharmacol. 2005, 203, 264–272.

2. Paerl, H.W.; Otten, T.G. Harmful cyanobacterial blooms: Causes, consequences, and controls. Microb. Ecol. 2013, 65, 995–1010.

3. Otten, T.G.; Crosswell, J.R.; Mackey, S.; Dreher, T.W. Application of molecular tools for microbial source tracking and public health risk assessment of a Microcystis bloom traversing 300 km of the Klamath River. Harmful Algae 2015, 46, 71–81.

4. Zhao, Z.-Q.; Zheng, P.; Xu, S.-T.; Wu, X. Object detection with deep learning: A review. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3212–3232.

5. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

6. Zeiler, M.D.; Fergus, R. Visualizing and understanding convolutional networks. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 818–833.

7. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

8. Sultana, F.; Sufian, A.; Dutta, P. A review of object detection models based on convolutional neural network. In Intelligent Computing: Image Processing Based Applications; Springer: Singapore, 2020; Volume 1157, pp. 1–16.

9. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 23–28 June 2014; pp. 580–587.

10. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28, 91–99.

11. Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Washington, DC, USA, 7–13 December 2015; pp. 1440–1448.

12. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 779–788.

13. Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

14. Tian, Y.; Yang, G.; Wang, Z.; Wang, H.; Li, E.; Liang, Z. Apple detection during different growth stages in orchards using the improved YOLO-V3 model. Comput. Electron. Agric. 2019, 157, 417–426.

15. Benjdira, B.; Khursheed, T.; Koubaa, A.; Ammar, A.; Ouni, K. Car detection using unmanned aerial vehicles: Comparison between faster R-CNN and yolov3. In Proceedings of the 2019 1st International Conference on Unmanned Vehicle Systems-Oman (UVS), Muscat, Oman, 5–7 February 2019; pp. 1–6.

16. Wu, D.; Lv, S.; Jiang, M.; Song, H. Using channel pruning-based YOLO v4 deep learning algorithm for the real-time and accurate detection of apple flowers in natural environments. Comput. Electron. Agric. 2020, 178, 105742.

17. Sonmez, M.E.; Eczacıoglu, N.; Gumuş, N.E.; Aslan, M.F.; Sabanci, K.; Aşikkutlu, B. Convolutional neural network-Support vector machine based approach for classification of cyanobacteria and chlorophyta microalgae groups. Algal Res. 2021, 61, 102568.

18. Medina, E.; Petraglia, M.R.; Gomes, J.G.R.; Petraglia, A. Comparison of CNN and MLP classifiers for algae detection in underwater pipelines. In Proceedings of the 2017 Seventh International Conference on Image Processing Theory, Tools and Applications (IPTA), Montreal, QC, Canada, 28 November–1 December 2017; pp. 1–6.

19. Park, J.; Baek, J.; You, K.; Nam, S.W.; Kim, J. Microalgae Detection Using a Deep Learning Object Detection Algorithm, YOLOv3. J. Korean Soc. Environ. Eng. 2021, 37, 275–285.

20. Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

21. Zhao, K.; Ren, X. Small aircraft detection in remote sensing images based on YOLOv3. In Proceedings of the IOP Conference Series: Materials Science and Engineering, Kazimierz Dolny, Poland, 21–23 November 2019; p. 012056.

22. Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

23. Bochkovskiy, A.D. Open Source Neural Networks in Python. 2020. Available online: https://github.com/AlexeyAB/darknet (accessed on 19 January 2021).

24. Redmon, J. Open Source Neural Networks in C, 2013–2016. Available online: https://pjreddie.com/darknet/ (accessed on 15 July 2020).

25. Jiang, Z.; Zhao, L.; Li, S.; Jia, Y. Real-time object detection method based on improved YOLOv4-tiny. arXiv 2020, arXiv:2011.04244.

26. Ozenne, B.; Subtil, F.; Maucort-Boulch, D. The precision–recall curve overcame the optimism of the receiver operating characteristic curve in rare diseases. J. Clin. Epidemiol. 2015, 68, 855–859.

| Model | YOLO v3 | YOLO v3 Tiny | YOLO v4 | YOLO v4 Tiny |
|---|---|---|---|---|
| Backbone (number of convolutional and pooling layers) | Darknet-53 (75) | 7 convolutional and 6 max pooling layers (13) | CSPDarknet-53 (110) | CSPDarknet-53-tiny (21) |
| Resolution of input image | 416 × 416 | 416 × 416 | 608 × 608 | 416 × 416 |

| Genera | Number of Images | | Number of Labels | |
|---|---|---|---|---|
| | Train | Test | Train | Test |
| Acutodesmus obliquus | 8 | 2 | 62 | 11 |
| Ankistrodesmus falcatus | 12 | 4 | 20 | 6 |
| Chlamydomonas asymmetrica | 16 | 6 | 55 | 6 |
| Chlorella vulgaris | 12 | 3 | 60 | 11 |
| Chlorococcum loculatum | 12 | 3 | 53 | 8 |
| Chroomonas coerulea | 12 | 4 | 98 | 15 |
| Closterium sp. | 7 | 2 | 11 | 2 |
| Coelastrella sp. | 7 | 3 | 14 | 5 |
| Coelastrum astroideum var. rugosum | 6 | 2 | 27 | 5 |
| Cosmarium sp. | 13 | 5 | 46 | 6 |
| Cryptomonas lundii | 22 | 6 | 22 | 6 |
| Desmodesmus communis | 16 | 3 | 18 | 3 |
| Diplosphaera chodatii | 2 | 1 | 32 | 6 |
| Eudorina unicocca | 6 | 1 | 40 | 6 |
| Euglena sp. | 28 | 7 | 28 | 7 |
| Kirchneriella aperta | 13 | 3 | 30 | 17 |
| Lithotrichon pulchrum | 8 | 2 | 42 | 11 |
| Micractinium pusillum | 9 | 1 | 44 | 5 |
| Micrasterias sp. | 6 | 2 | 6 | 2 |
| Monoraphidium sp. | 21 | 8 | 40 | 15 |
| Mychonastes sp. | 9 | 4 | 39 | 13 |
| Nephrochlamys subsolitaria | 6 | 2 | 24 | 5 |
| Pectinodesmus pectinatus | 32 | 7 | 39 | 8 |
| Pediastrum duplex | 11 | 4 | 13 | 4 |
| Pseudopediastrum boryanum | 10 | 3 | 10 | 3 |
| Scenedesmus sp. | 6 | 3 | 9 | 4 |
| Selenastrum capricornutum | 6 | 3 | 31 | 7 |
| Sorastrum pediastriforme | 8 | 1 | 8 | 1 |
| Tetrabaena socialis | 11 | 2 | 16 | 2 |
| Tupiella speciosa | 4 | 1 | 25 | 2 |
| Total | 339 | 98 | 962 | 202 |

| Models | YOLOv3 | YOLOv3 Tiny | YOLOv4 | YOLOv4 Tiny |
|---|---|---|---|---|
| Batch size | 64 | 32 | 64 | 32 |
| Learning rate | 0.001 | 0.001 | 0.0013 | 0.00261 |
| max_batch class | 60,000 | 60,000 | 60,000 | 500,200 |
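The 60,000 iterations listed for three of the models are consistent with the rule of thumb in the AlexeyAB darknet README for custom training: set `max_batches` to the number of classes × 2000, but not below 6000 and not below the number of training images. With the 30 genera and 339 training images in the dataset table, that rule gives 60,000. Whether the authors actually applied this rule is an assumption, and the helper name below is hypothetical. A minimal sketch:

```python
def suggested_max_batches(num_classes: int, num_train_images: int) -> int:
    """Rule of thumb from the AlexeyAB darknet README (not confirmed as the authors' method).

    max_batches = classes * 2000, but never below 6000
    and never below the number of training images.
    """
    return max(num_classes * 2000, num_train_images, 6000)


# 30 genera and 339 training images, taken from the dataset table.
print(suggested_max_batches(30, 339))  # 60000
```

The 500,200 value for YOLOv4 Tiny does not follow this rule, so it was presumably set differently.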

| Model | YOLO v3 | YOLO v3 Tiny | YOLO v4 | YOLO v4 Tiny |
|---|---|---|---|---|
| mAP (IOU 0.5) | 40.9 | 88.8 | 84.4 | 89.8 |
| Inference speed (fps) | 2.0 | 4.1 | 1.7 | 4.0 |
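The mAP values above are reported at an IOU threshold of 0.5, meaning a predicted box counts as a correct detection only if its intersection over union with a ground-truth box is at least 0.5. The sketch below illustrates that criterion for axis-aligned boxes. The box format `(x_min, y_min, x_max, y_max)` and the function names are illustrative assumptions, not the evaluation code used in the study.

```python
from typing import Tuple

# Assumed box format: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    # Width and height of the overlap rectangle (zero if the boxes are disjoint).
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h

    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred: Box, truth: Box, threshold: float = 0.5) -> bool:
    """A prediction matches a ground-truth box when IOU >= threshold."""
    return iou(pred, truth) >= threshold


truth = (0.0, 0.0, 10.0, 10.0)
print(is_true_positive((2.0, 0.0, 12.0, 10.0), truth))  # True  (IOU = 80/120)
print(is_true_positive((5.0, 0.0, 15.0, 10.0), truth))  # False (IOU = 50/150)
```

A full mAP computation additionally ranks detections by confidence and averages precision over recall levels and classes, which this sketch leaves out.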

| YOLO v3 (mAP/fps) | YOLO v3 Tiny (mAP/fps) | YOLO v4 (mAP/fps) | YOLO v4 Tiny (mAP/fps) | Dataset | References |
|---|---|---|---|---|---|
| 51.5–60.6/20–45 | 33.1/220 | | | MS COCO (80 classes, 83K images) | Redmon [24] |
| 52.5/49 | 30.5/277 | 64.9/41 | 38.1/270 | MS COCO (80 classes, 83K images) | Jiang et al. (2020) |

| Genera | Relative Size (%) | Probability of Class Prediction (%) | | | |
|---|---|---|---|---|---|
| | | YOLO v3 | YOLO v3-Tiny | YOLO v4 | YOLO v4-Tiny |
| Closterium sp. | 61.7 * | ND | 98.3 | 99.6 | 90.6 |
| | 10 | ND | 51.1 | 68.9 | 81.2 |
| | 30 | ND | 99.9 | 98.6 | 98.1 |
| | 50 | ND | 41.9 | 97.3 | 55.5 |
| | 70 | ND | FD | FD | ND |
| | 90 | ND | ND | FD | ND |
| Pseudopediastrum boryanum | 35.2 * | 81.5 | 100.0 | 99.3 | 98.9 |
| | 10 | FD | 96.3 | 99.9 | 92.5 |
| | 30 | ND | 100.0 | 99.7 | 99.8 |
| | 50 | ND | 100.0 | 99.0 | 100.0 |
| | 70 | ND | 99.9 | FD | 98.7 |
| | 90 | ND | FD | FD | AD |
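The table varies the relative size of the object within the image, but this excerpt does not say how that percentage is computed. As an illustration only, the sketch below assumes it is the share of the image area covered by the object's bounding box. The function name and the area-based definition are both assumptions and may differ from the authors' definition.

```python
def relative_size_percent(box_w: float, box_h: float,
                          img_w: float, img_h: float) -> float:
    """Share of the image area covered by a bounding box, in percent.

    Assumed definition for illustration; the source excerpt does not
    state how relative size was measured.
    """
    if img_w <= 0 or img_h <= 0:
        raise ValueError("image dimensions must be positive")
    return 100.0 * (box_w * box_h) / (img_w * img_h)


# A 208 x 208 px box inside a 416 x 416 px input image.
print(relative_size_percent(208, 208, 416, 416))  # 25.0
```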

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Park, J.; Baek, J.; Kim, J.; You, K.; Kim, K. Deep Learning-Based Algal Detection Model Development Considering Field Application. Water 2022, 14, 1275. https://doi.org/10.3390/w14081275