Overflow Capacity Prediction of Pumping Station Based on Data Drive

Abstract

1. Introduction

2. Materials and Methods

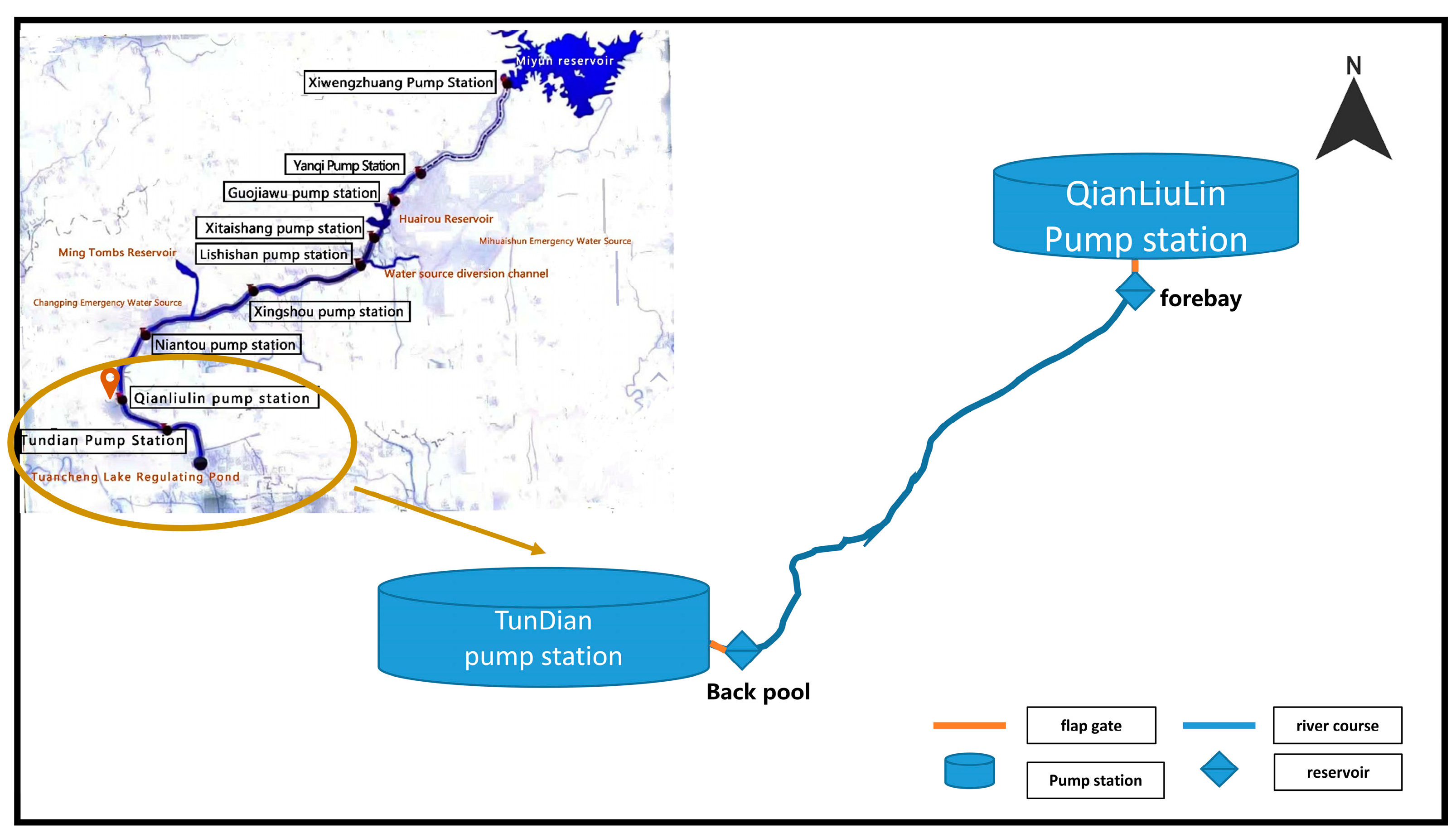

2.1. Study Areas and Monitoring Data

2.2. Monitoring Data Cleaning and Interpolation

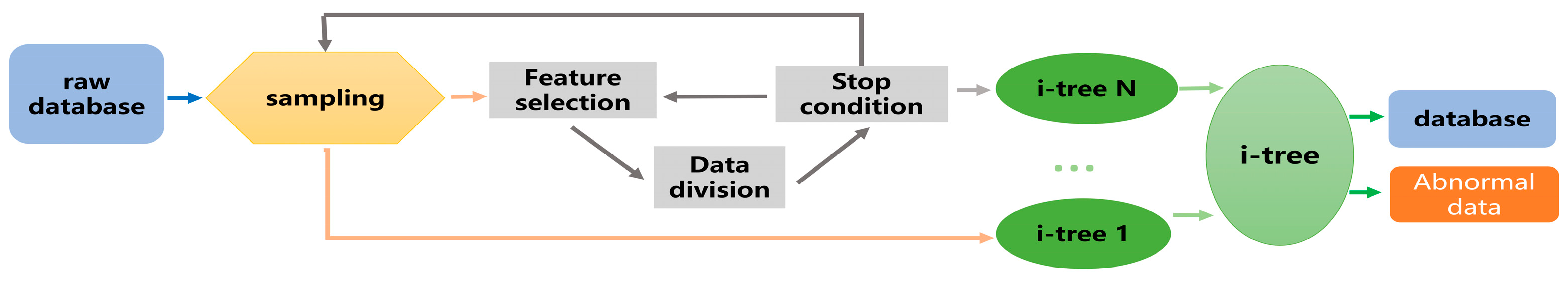

- Randomly select n data points from the data sample as a sub-sample and place them in the root node of the iTree.

- Randomly select a dimension, and randomly choose a cut point p between the minimum and maximum values of the current node data in that dimension.

- Split the current node data into two parts at the cut point p: data smaller than p in the selected dimension go to the left subtree of the current node, and data larger than p go to the right subtree.

- Recursively repeat steps 2 and 3 on each child node until every node contains only one data point or the maximum tree height is reached.

- Move on to the next iTree and repeat steps 1 to 4 until all trees have been trained.

- Calculate the average path length of each data point over all isolation trees.
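The steps above follow the standard isolation forest procedure. The sketch below is a minimal NumPy version of it. It assumes the usual anomaly score s(x, n) = 2^(−E[h(x)]/c(n)), where c(n) is the average path length of an unsuccessful binary-search-tree lookup. The paper's own scoring formula appears in the figure and is not reproduced here. The function names, the tree count of 100 and the sub-sample size of 256 are illustrative assumptions, not values taken from the paper.

```python
import numpy as np

EULER_GAMMA = 0.5772156649


def c_factor(n):
    """Average path length of an unsuccessful BST search over n points."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    return 2.0 * (np.log(n - 1) + EULER_GAMMA) - 2.0 * (n - 1) / n


def build_itree(X, height, max_height, rng):
    """Steps 2-4: recursively split on a random dimension at a random cut point."""
    n = X.shape[0]
    if n <= 1 or height >= max_height:
        return {"size": n}
    q = rng.integers(X.shape[1])                 # random dimension
    lo, hi = X[:, q].min(), X[:, q].max()
    if lo == hi:                                 # identical values cannot be split
        return {"size": n}
    p = rng.uniform(lo, hi)                      # random cut point between min and max
    left = X[:, q] < p
    return {
        "dim": q,
        "cut": p,
        "left": build_itree(X[left], height + 1, max_height, rng),
        "right": build_itree(X[~left], height + 1, max_height, rng),
    }


def path_length(x, node, height=0):
    """Depth at which x is isolated, plus c(size) for leaves that were not split."""
    if "dim" not in node:
        return height + c_factor(node["size"])
    child = node["left"] if x[node["dim"]] < node["cut"] else node["right"]
    return path_length(x, child, height + 1)


def isolation_forest_scores(X, n_trees=100, sample_size=256, seed=0):
    """Steps 1, 5 and 6: train iTrees on sub-samples and average the path lengths."""
    rng = np.random.default_rng(seed)
    X = np.asarray(X, dtype=float)
    psi = min(sample_size, X.shape[0])
    max_height = int(np.ceil(np.log2(psi)))
    trees = []
    for _ in range(n_trees):
        idx = rng.choice(X.shape[0], size=psi, replace=False)
        trees.append(build_itree(X[idx], 0, max_height, rng))
    mean_h = np.array([np.mean([path_length(x, t) for t in trees]) for x in X])
    return 2.0 ** (-mean_h / c_factor(psi))     # scores close to 1 suggest an outlier
```

Here is an example. Inject a single spike, such as a water level of 65 m, into a series centred near 40 m. That point receives the highest score because it is isolated after very few splits. Samples whose score exceeds a chosen threshold can then be treated as outliers and re-filled by interpolation.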

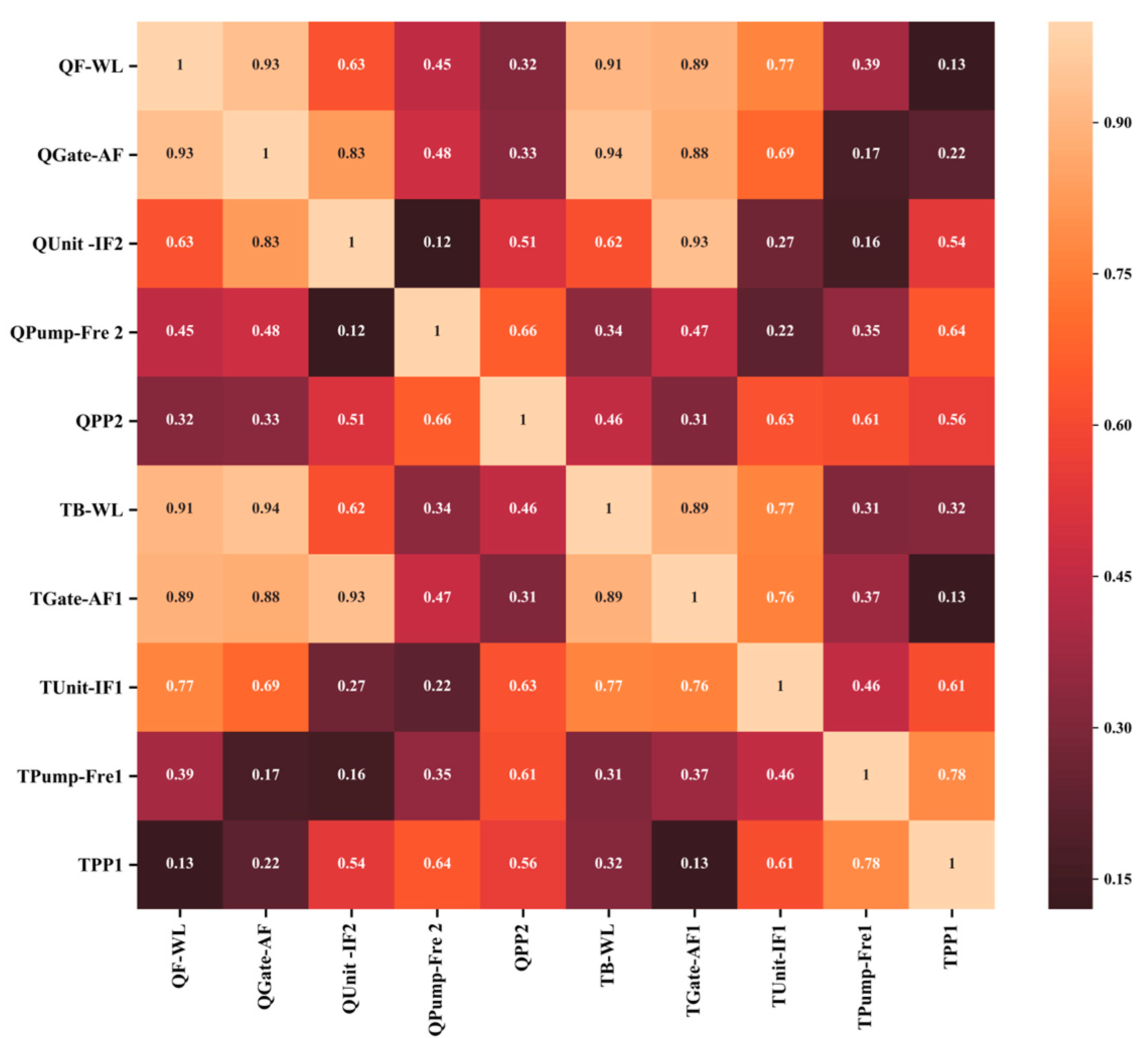

2.3. Variable Selection: Pearson Correlation Coefficient

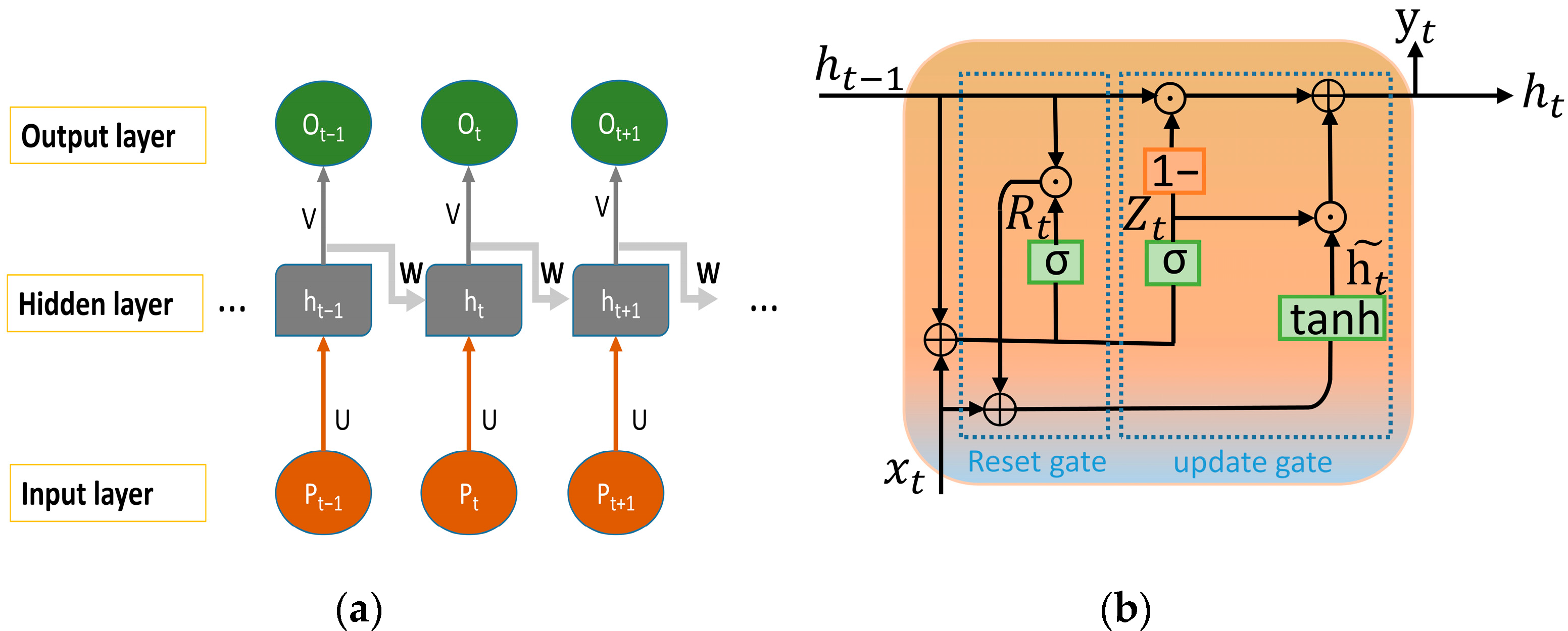

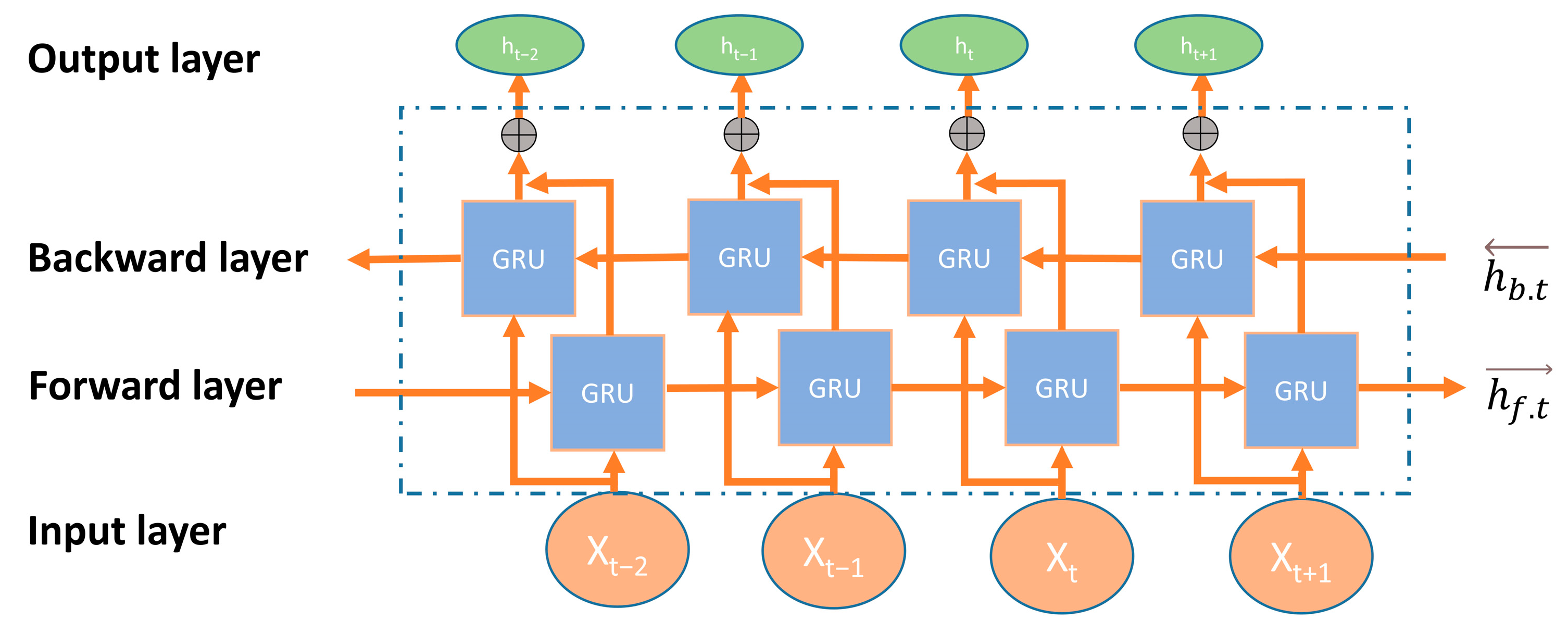

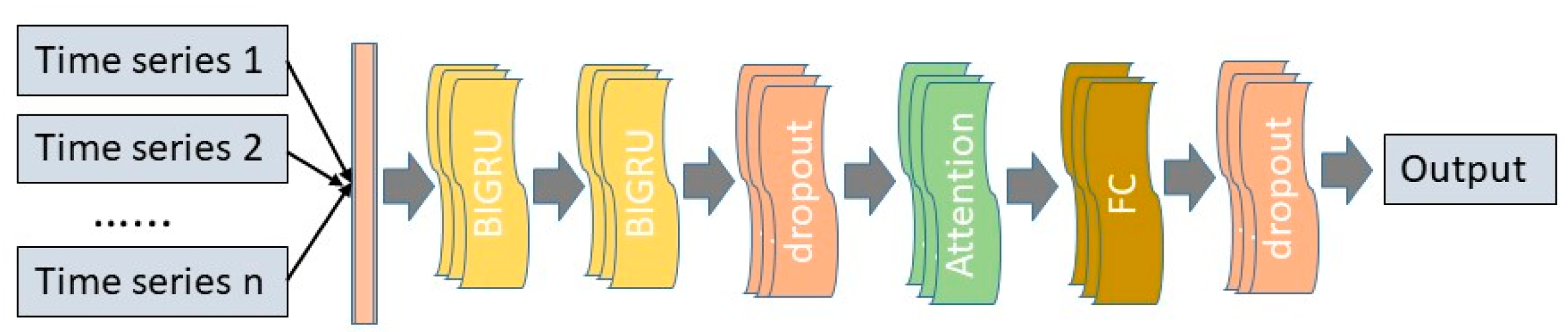
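Section 2.7 keeps the six variables most strongly correlated with the target according to the Pearson coefficient r = cov(x, y) / (σx σy). The sketch below is a minimal version of that selection. It assumes that candidates are ranked by |r|, so strong negative correlations also count. The helper names are hypothetical.

```python
import numpy as np


def pearson_r(x, y):
    """Pearson correlation coefficient between two 1-D series."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    xc, yc = x - x.mean(), y - y.mean()
    return float(np.sum(xc * yc) / np.sqrt(np.sum(xc ** 2) * np.sum(yc ** 2)))


def select_top_k(features, target, k=6):
    """Rank candidate variables by |r| with the target and keep the k strongest."""
    scores = {name: pearson_r(col, target) for name, col in features.items()}
    ranked = sorted(scores, key=lambda name: abs(scores[name]), reverse=True)
    return ranked[:k], scores
```

In practice, `features` would map variable names such as TB-WL or QPP2 to their cleaned series. `target` would be the series being predicted.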

2.4. Bidirectional Gated Recurrent Unit

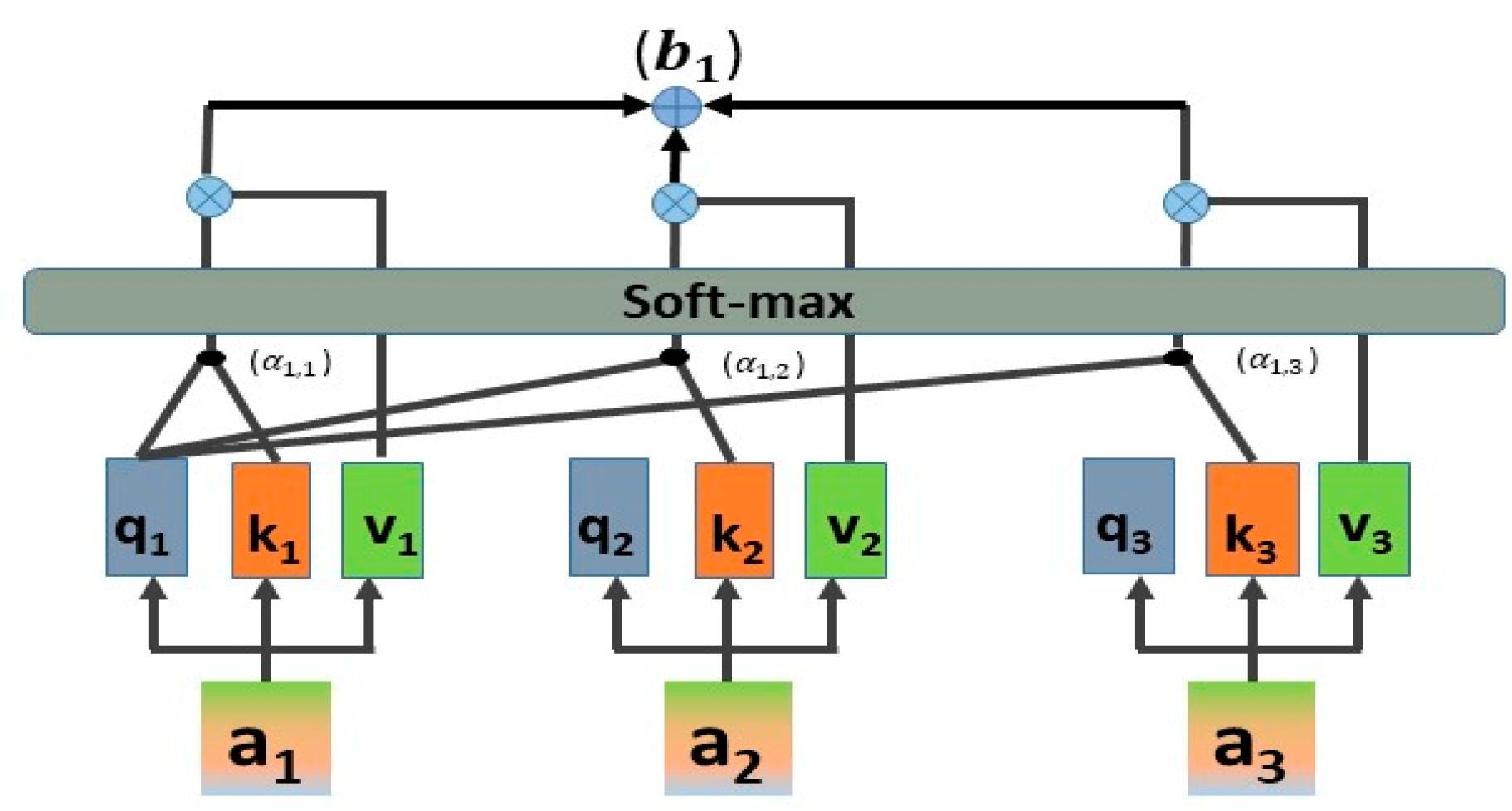

2.5. Self-Attention Mechanism

2.6. Autoregressive Integrated Moving Average Model

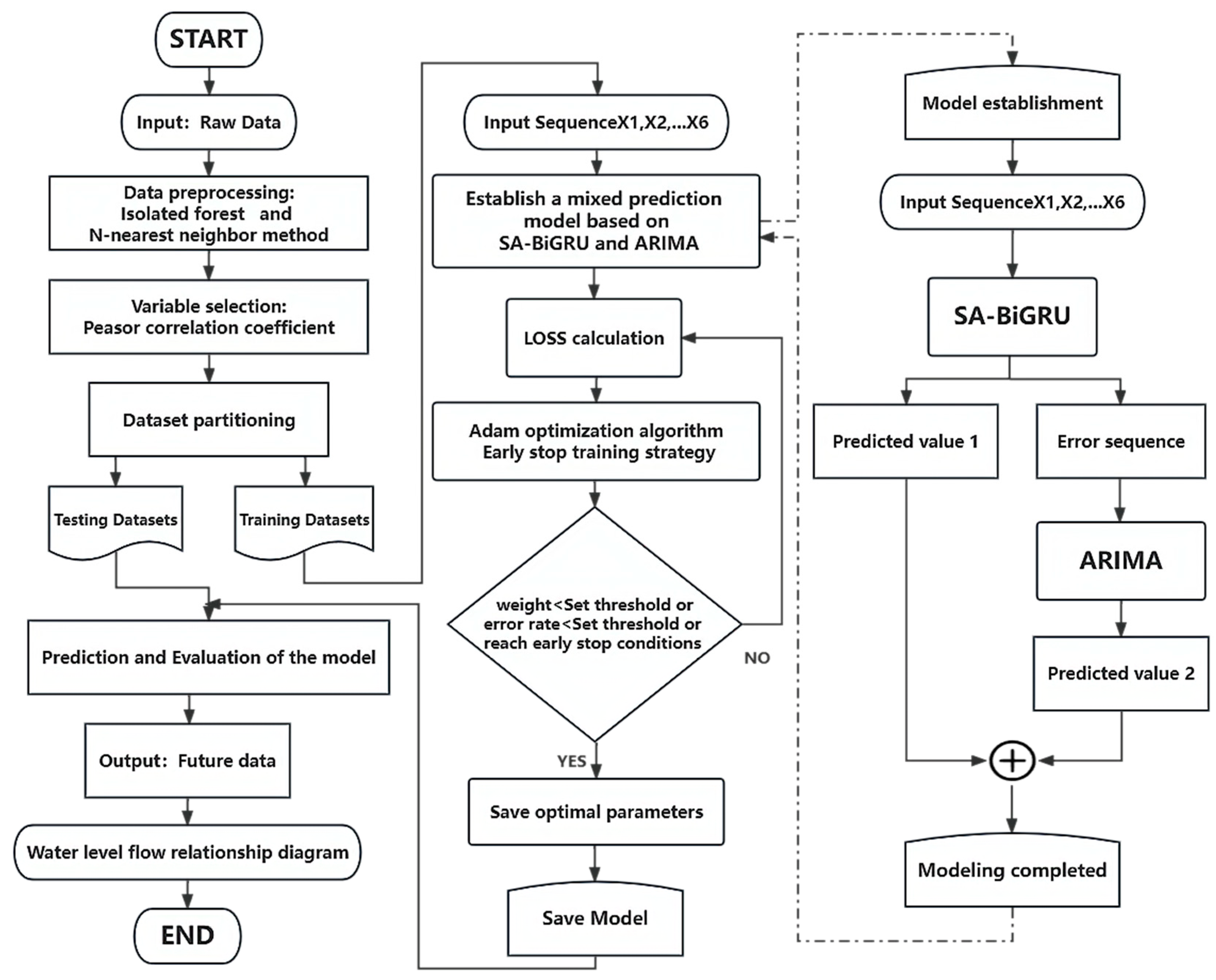

2.7. Overall Framework

- The pumping station data are collected, and outliers are handled using a screening method, the isolation forest algorithm and the N-nearest neighbor algorithm. The Pearson correlation coefficient is then used for feature selection, and the 6 variables with the highest correlation are selected as input variables. Pumping station data from January to November 2020 are used as the training set, and data from December 2020 are used as the test set.

- The input variables are fed into the SA–BiGRU model, and the feature sequences are reweighted according to the weights obtained by the self-attention mechanism. The Adam optimizer and an early-stopping training strategy are used to select the optimal parameters, and the fully connected layer outputs the preliminary prediction M.
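The following NumPy sketch shows the data flow of step 2 as a forward pass. A bidirectional GRU with the standard update/reset gate equations runs over an input window. Scaled dot-product self-attention then reweights the concatenated hidden states, and a fully connected layer produces a preliminary prediction M. The weights are random and untrained, and Adam and early stopping are omitted. The hidden size, attending over time steps, and mean pooling before the output layer are all assumptions rather than the paper's configuration.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def init_gru(n_in, n_hidden, rng):
    """Random (W, U, b) for the update (z), reset (r) and candidate (h) gates."""
    shape_w, shape_u = (n_in, n_hidden), (n_hidden, n_hidden)
    return {g: (rng.normal(0, 0.1, shape_w), rng.normal(0, 0.1, shape_u), np.zeros(n_hidden))
            for g in ("z", "r", "h")}


def run_gru(x, p):
    """Run one GRU direction over x of shape (T, n_in); return states (T, n_hidden)."""
    h = np.zeros(p["z"][2].shape[0])
    states = []
    for x_t in x:
        z = sigmoid(x_t @ p["z"][0] + h @ p["z"][1] + p["z"][2])   # update gate
        r = sigmoid(x_t @ p["r"][0] + h @ p["r"][1] + p["r"][2])   # reset gate
        h_tilde = np.tanh(x_t @ p["h"][0] + (r * h) @ p["h"][1] + p["h"][2])
        h = (1 - z) * h + z * h_tilde
        states.append(h)
    return np.stack(states)


def self_attention(H, rng):
    """Scaled dot-product self-attention over the time steps of H (T, d)."""
    d = H.shape[1]
    Wq, Wk, Wv = (rng.normal(0, 0.1, (d, d)) for _ in range(3))
    Q, K, V = H @ Wq, H @ Wk, H @ Wv
    scores = Q @ K.T / np.sqrt(d)
    scores -= scores.max(axis=1, keepdims=True)       # numerical stability
    A = np.exp(scores)
    A /= A.sum(axis=1, keepdims=True)                  # each row of weights sums to 1
    return A @ V, A


def sa_bigru_forward(x, n_hidden=16, seed=0):
    """BiGRU -> self-attention -> mean pooling -> fully connected output M."""
    rng = np.random.default_rng(seed)
    fwd = init_gru(x.shape[1], n_hidden, rng)
    bwd = init_gru(x.shape[1], n_hidden, rng)
    # The backward pass reads the window in reverse, then is re-aligned in time.
    H = np.concatenate([run_gru(x, fwd), run_gru(x[::-1], bwd)[::-1]], axis=1)
    context, A = self_attention(H, rng)
    w_out, b_out = rng.normal(0, 0.1, context.shape[1]), 0.0
    return float(context.mean(axis=0) @ w_out + b_out), A
```

With a window of 24 time steps and the 6 selected variables, `sa_bigru_forward` returns a scalar M and a 24 × 24 attention matrix. In training, a framework with automatic differentiation would fit these weights with Adam and stop early on validation loss.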

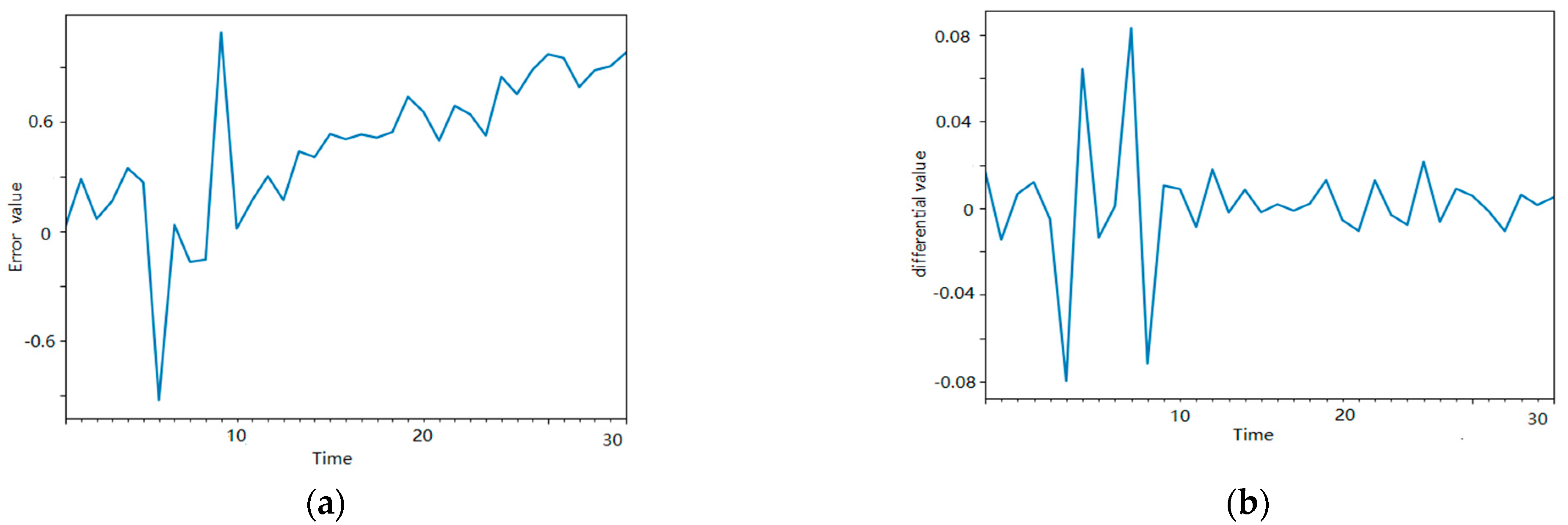

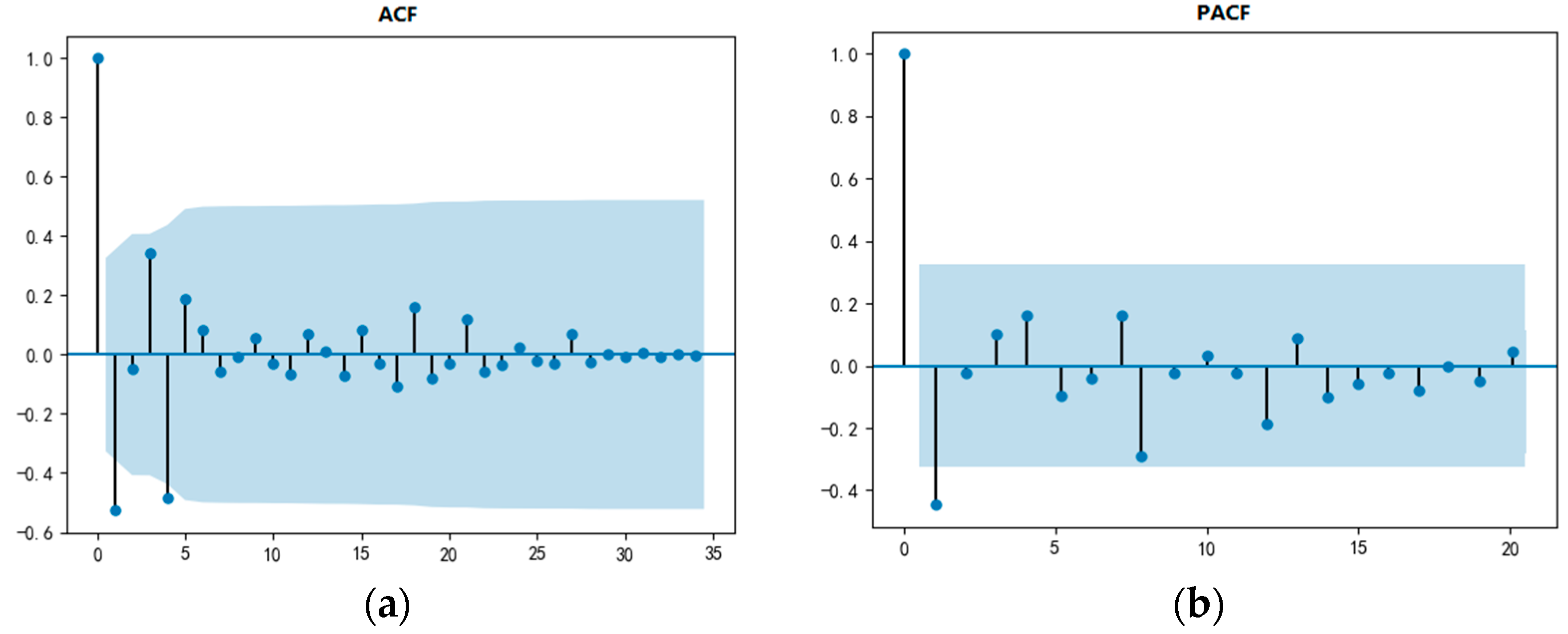

- The error (residual) sequence of the SA–BiGRU model is used as the input to the ARIMA model. After a stationarity test, order determination and model fitting, the ARIMA model outputs E, the predicted linear component of the error sequence.

- The final prediction Q is obtained by adding the outputs of the second and third steps: Q = M + E.
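Steps 3 and 4 can be sketched without a statistics library by restricting ARIMA(p, d, q) to its autoregressive part, ARIMA(p, d, 0). The SA–BiGRU residual series is differenced d times, and an AR(p) model with intercept is fitted by ordinary least squares. The next value is forecast, and the differencing is undone to give E. Finally, Q = M + E. This sketch omits the MA terms, the stationarity test, and AIC/BIC order selection, and the function names are hypothetical. A full ARIMA fit would normally come from a dedicated library such as statsmodels.

```python
import numpy as np


def fit_ar(series, p):
    """OLS estimate of y_t = c + a_1 y_{t-1} + ... + a_p y_{t-p}; returns [c, a_1..a_p]."""
    y = np.asarray(series, dtype=float)
    rows = [np.r_[1.0, y[t - p:t][::-1]] for t in range(p, len(y))]
    X, target = np.array(rows), y[p:]
    coef, *_ = np.linalg.lstsq(X, target, rcond=None)
    return coef


def forecast_arima_p_d_0(residuals, p=2, d=1):
    """One-step forecast E of the residual series with an ARIMA(p, d, 0) model."""
    levels = [np.asarray(residuals, dtype=float)]
    for _ in range(d):                                  # difference d times
        levels.append(np.diff(levels[-1]))
    w = levels[-1]
    coef = fit_ar(w, p)
    step = coef[0] + coef[1:] @ w[-p:][::-1]            # forecast of the differenced series
    for level in reversed(levels[:-1]):                 # undo the differencing
        step = level[-1] + step
    return float(step)


def hybrid_prediction(M, residuals, p=2, d=1):
    """Final prediction Q = M + E (nonlinear SA-BiGRU part + linear residual correction)."""
    return M + forecast_arima_p_d_0(residuals, p, d)
```

Here the residuals are the measured values minus the SA–BiGRU predictions over the training window. The ARIMA correction is therefore added back to the next SA–BiGRU output M.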

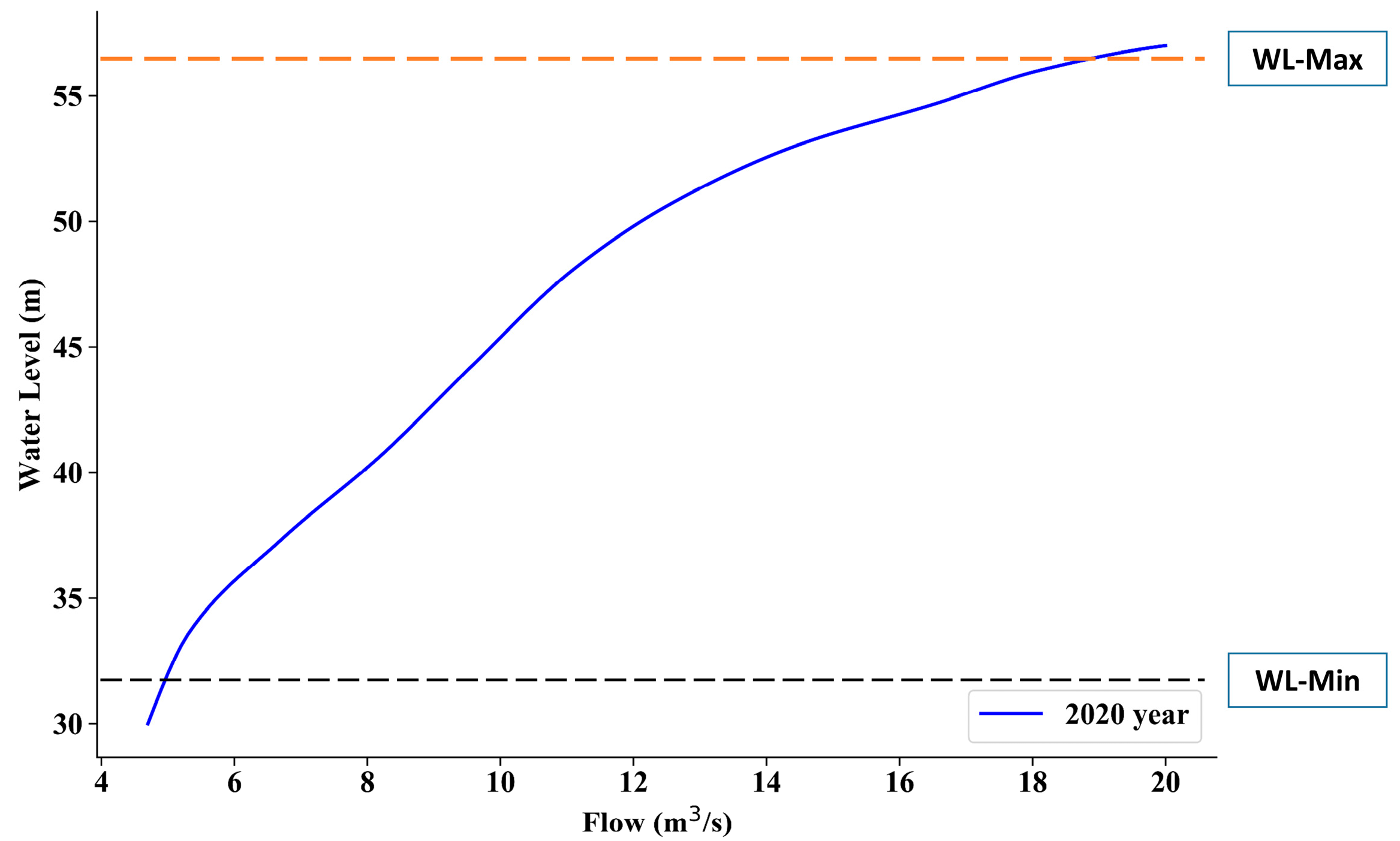

- Finally, the model is tested and evaluated. The loss is computed by comparing the predicted output for each sample with the measured value, and the water level–flow prediction relationship curve is then constructed.

2.8. Model Training and Evaluation

2.8.1. Training Strategy

2.8.2. Model Evaluation Index

3. Results and Discussion

3.1. Experimental Part

3.1.1. Data Processing and Experimental Environment

3.1.2. Model Parameter Setting

3.2. Result Analysis

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Data | Description | Mean Value | Maximum Value | Minimum Value | Standard Deviation | Unit |
|---|---|---|---|---|---|---|
| TB-WL | Back-pool water level | 40.36 | 65.75 | 23.21 | 40.73 | m |
| TGate-AF1 | Gate accumulated flow 1 | 11.28 | 20 | 5.2 | 23.26 | m³/s |
| TUnit-IF1 | Unit instantaneous flow 1 | 1.74 | 2 | 1.43 | 1.57 | m³/s |
| TPump-Fre1 | Pump frequency 1 | 37 | 50 | 25 | 18.32 | Hz |
| TPP1 | Pipeline pressure 1 | 1.1 | 1.6 | 0.6 | 0.56 | MPa |
| QF-WL | Forebay water level | 35.25 | 58.32 | 20.34 | 42.56 | m |
| QGate-AF | Gate accumulated flow 2 | 10.37 | 20 | 4.7 | 16.71 | m³/s |
| QUnit-IF2 | Unit instantaneous flow 2 | 1.68 | 1.99 | 1.45 | 0.98 | m³/s |
| QPump-Fre 2 | Pump frequency 2 | 32 | 50 | 20 | 12.11 | Hz |
| QPP2 | Pipeline pressure 2 | 1.2 | 1.6 | 0.7 | 0.33 | MPa |

| Feature | AR(p) | MA(q) | ARMA(p, q) |
|---|---|---|---|
| ACF | Tails off | Cuts off after lag q | Tails off |
| PACF | Cuts off after lag p | Tails off | Tails off |
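The identification rules in the table can be checked numerically. The sketch below is a minimal version that computes the sample ACF and, via the Durbin–Levinson recursion, the sample PACF. The function names are illustrative. The ±1.96/√N significance band mentioned afterwards is a common convention rather than a detail taken from the paper.

```python
import numpy as np


def sample_acf(x, max_lag):
    """Sample autocorrelation rho_k for k = 0..max_lag."""
    x = np.asarray(x, dtype=float)
    xc = x - x.mean()
    denom = np.sum(xc ** 2)
    return np.array([np.sum(xc[k:] * xc[:len(x) - k]) / denom for k in range(max_lag + 1)])


def sample_pacf(x, max_lag):
    """Sample partial autocorrelation phi_kk via the Durbin-Levinson recursion."""
    rho = sample_acf(x, max_lag)
    pacf = np.zeros(max_lag + 1)
    pacf[0] = 1.0
    phi = np.zeros(0)                     # coefficients phi_{k-1,1..k-1}
    for k in range(1, max_lag + 1):
        num = rho[k] - phi @ rho[k - 1:0:-1]
        den = 1.0 - phi @ rho[1:k]
        phi_kk = num / den
        # phi_{k,j} = phi_{k-1,j} - phi_kk * phi_{k-1,k-j}, then append phi_kk
        phi = np.r_[phi - phi_kk * phi[::-1], phi_kk]
        pacf[k] = phi_kk
    return pacf
```

For an AR(p) series, the PACF should fall inside roughly ±1.96/√N after lag p while the ACF tails off, which matches the AR(p) column of the table.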

| Impact Factor | QF-WL | QGate-AF | QUnit-IF2 | QPump-Fre 2 | QPP2 | TB-WL | TGate-AF1 | TUnit-IF1 | TPump-Fre1 | TPP1 |
|---|---|---|---|---|---|---|---|---|---|---|
| Outliers (%) | 1.3 | 1.1 | 2.9 | 2.8 | 2.2 | 1.7 | 2.3 | 2.1 | 3.0 | 2.3 |

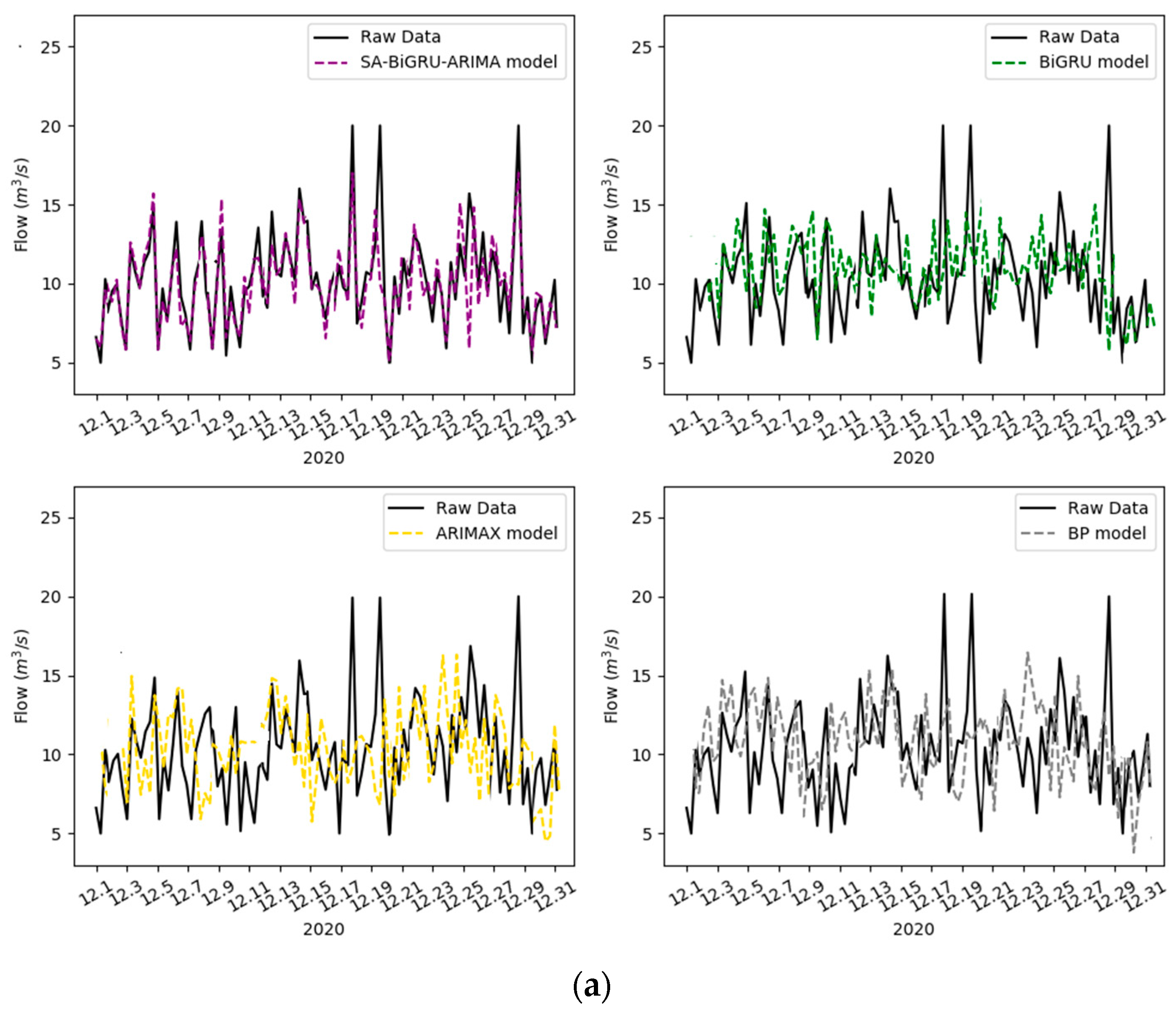

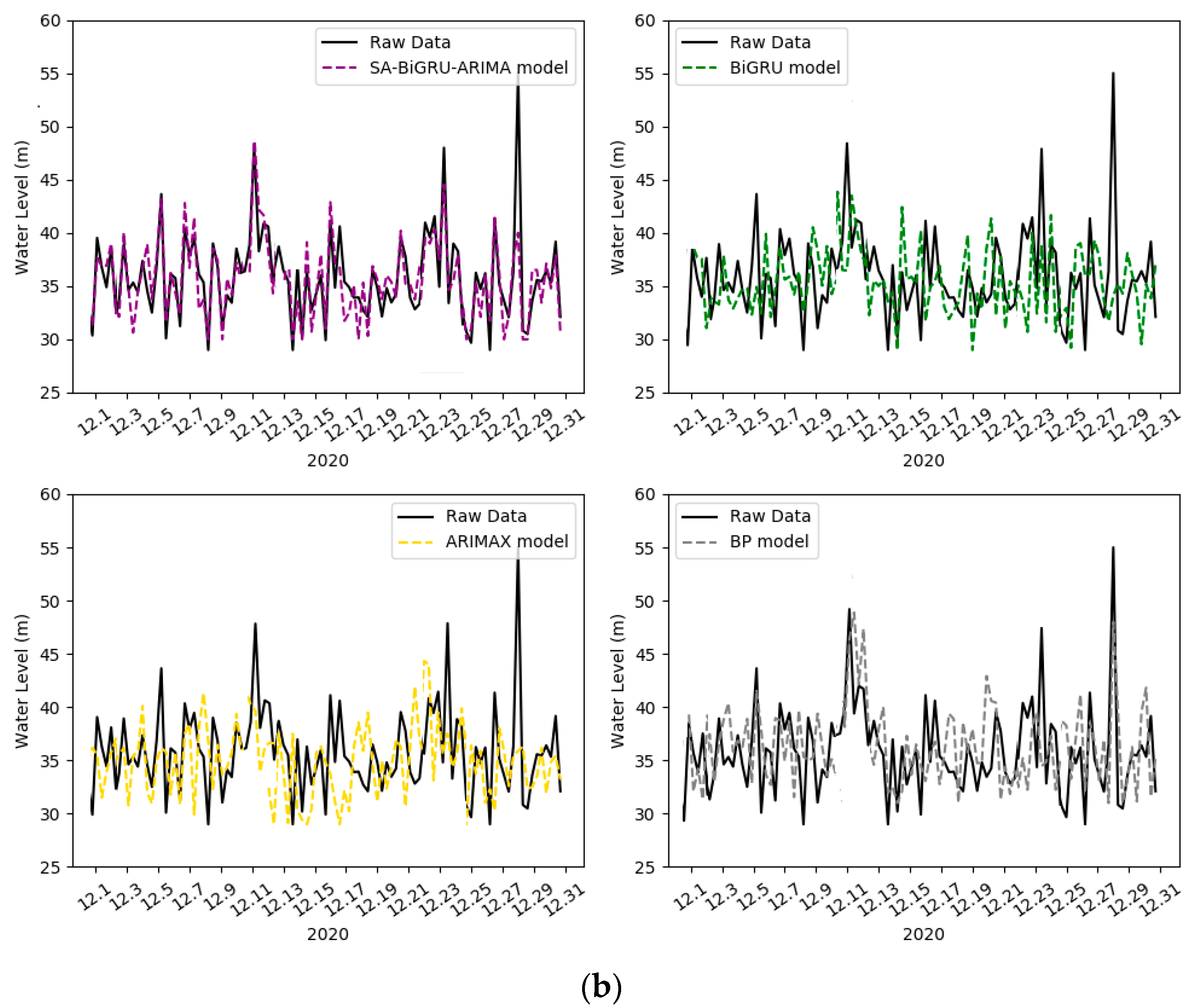

| Data | Indicator | SA–BiGRU–ARIMA | BiGRU | ARIMAX | BP |
|---|---|---|---|---|---|
| QGate-AF | MAE | 9.32 | 15.87 | 20.45 | 18.37 |
| | MAPE (%) | 7.38 | 8.97 | 15.46 | 10.62 |
| | R² | 0.87 | 0.61 | 0.37 | 0.42 |
| QF-WL | MAE | 18.78 | 29.53 | 57.31 | 34.65 |
| | MAPE (%) | 6.73 | 10.34 | 18.78 | 16.85 |
| | R² | 0.81 | 0.56 | 0.43 | 0.44 |
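The three indicators in the table can be computed as shown below. This minimal sketch uses the standard definitions: MAE, MAPE in percent, and the coefficient of determination R² = 1 − SS_res/SS_tot. The paper's own definitions (Section 2.8.2) are not reproduced in this text and may differ in detail.

```python
import numpy as np


def mae(y_true, y_pred):
    """Mean absolute error."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.mean(np.abs(y_true - y_pred)))


def mape(y_true, y_pred):
    """Mean absolute percentage error in %; assumes no observation equals zero."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(100.0 * np.mean(np.abs((y_true - y_pred) / y_true)))


def r2(y_true, y_pred):
    """Coefficient of determination, 1 - SS_res / SS_tot."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return float(1.0 - ss_res / ss_tot)
```

Here is an example. With observations [10, 20, 30, 40] and predictions [12, 18, 33, 40], the results are MAE = 1.75, MAPE = 10% and R² = 1 − 17/500 = 0.966.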

| Water Level (m) | Flow (m³/s) | Water Level (m) | Flow (m³/s) |
|---|---|---|---|
| 40 | 8.0 | 48 | 12.0 |
| 42 | 9.0 | 50 | 13.0 |
| 44 | 10.0 | 52 | 14.0 |
| 46 | 11.0 | 54 | 15.0 |
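The tabulated water level–flow pairs can serve directly as a lookup curve. The sketch below assumes piecewise-linear interpolation between the listed points. Note that `np.interp` does not extrapolate: levels outside 40–54 m are clamped to the end values. Over this range, the tabulated values lie exactly on the line Q = 0.5·H − 12, and the straight-line fit recovers that relationship.

```python
import numpy as np

# Water level (m) and predicted flow (m^3/s) pairs taken from the table above.
LEVELS = np.array([40, 42, 44, 46, 48, 50, 52, 54], dtype=float)
FLOWS = np.array([8.0, 9.0, 10.0, 11.0, 12.0, 13.0, 14.0, 15.0])


def flow_at_level(level_m):
    """Piecewise-linear lookup; levels outside 40-54 m are clamped by np.interp."""
    return np.interp(level_m, LEVELS, FLOWS)


# Slope and intercept of the least-squares line through the tabulated points.
slope, intercept = np.polyfit(LEVELS, FLOWS, 1)
```

For example, `flow_at_level(45.0)` returns 10.5 m³/s, which lies halfway between the 44 m and 46 m entries.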

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Guo, T.; Yan, J.; Chen, J.; Yu, Y. Overflow Capacity Prediction of Pumping Station Based on Data Drive. Water 2023, 15, 2380. https://doi.org/10.3390/w15132380

Guo T, Yan J, Chen J, Yu Y. Overflow Capacity Prediction of Pumping Station Based on Data Drive. Water. 2023; 15(13):2380. https://doi.org/10.3390/w15132380

Chicago/Turabian Style: Guo, Tiantian, Jianzhuo Yan, Jianhui Chen, and Yongchuan Yu. 2023. "Overflow Capacity Prediction of Pumping Station Based on Data Drive" Water 15, no. 13: 2380. https://doi.org/10.3390/w15132380

APA Style: Guo, T., Yan, J., Chen, J., & Yu, Y. (2023). Overflow Capacity Prediction of Pumping Station Based on Data Drive. Water, 15(13), 2380. https://doi.org/10.3390/w15132380