Land Use and Land Cover Mapping in the Era of Big Data

Abstract

1. Introduction

2. Method and Materials

3. LULC Mapping from Remotely Sensed Imagery Data

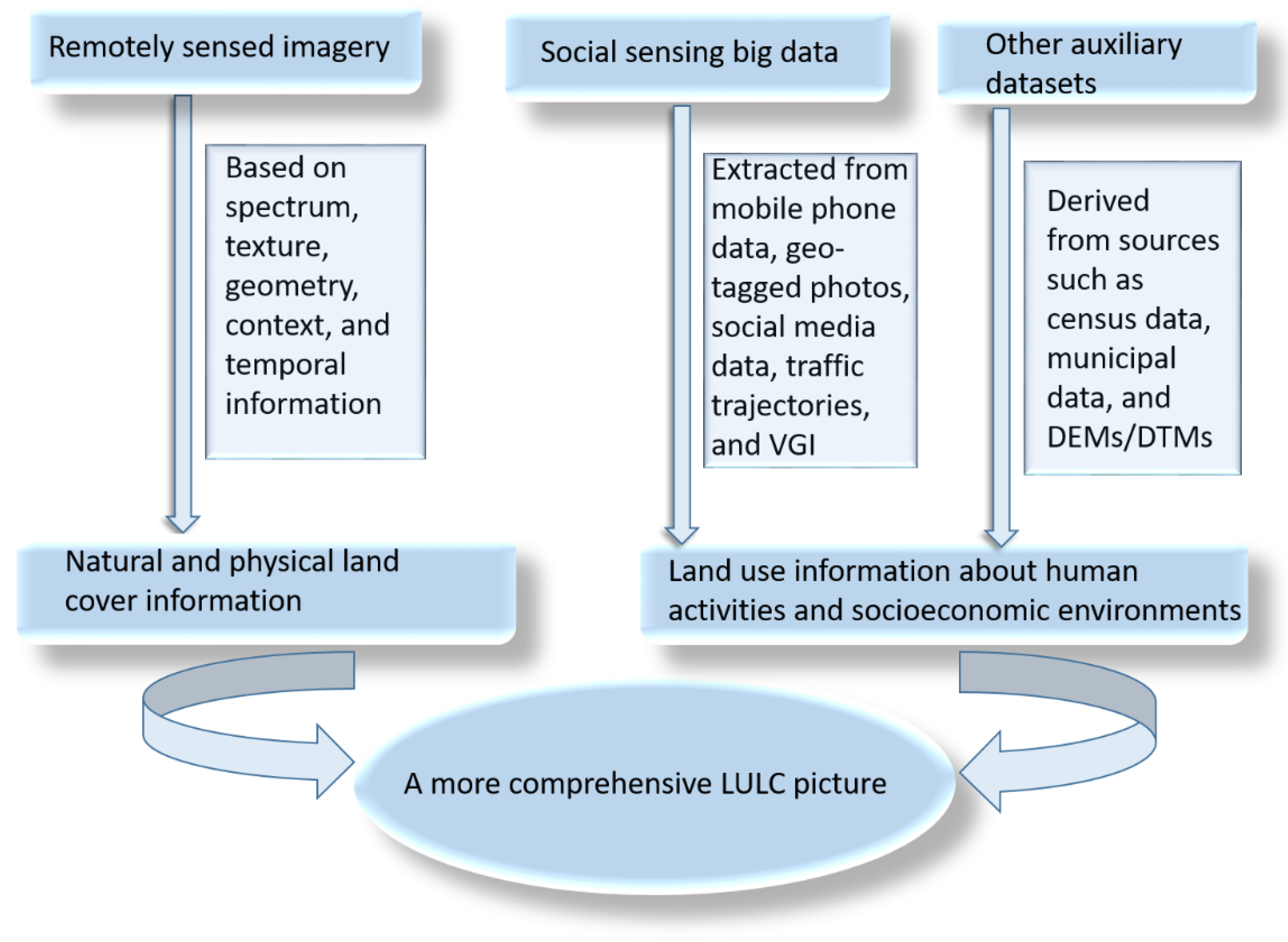

4. LULC Mapping from Integration of Geospatial Big Data and Remotely Sensed Imagery Data

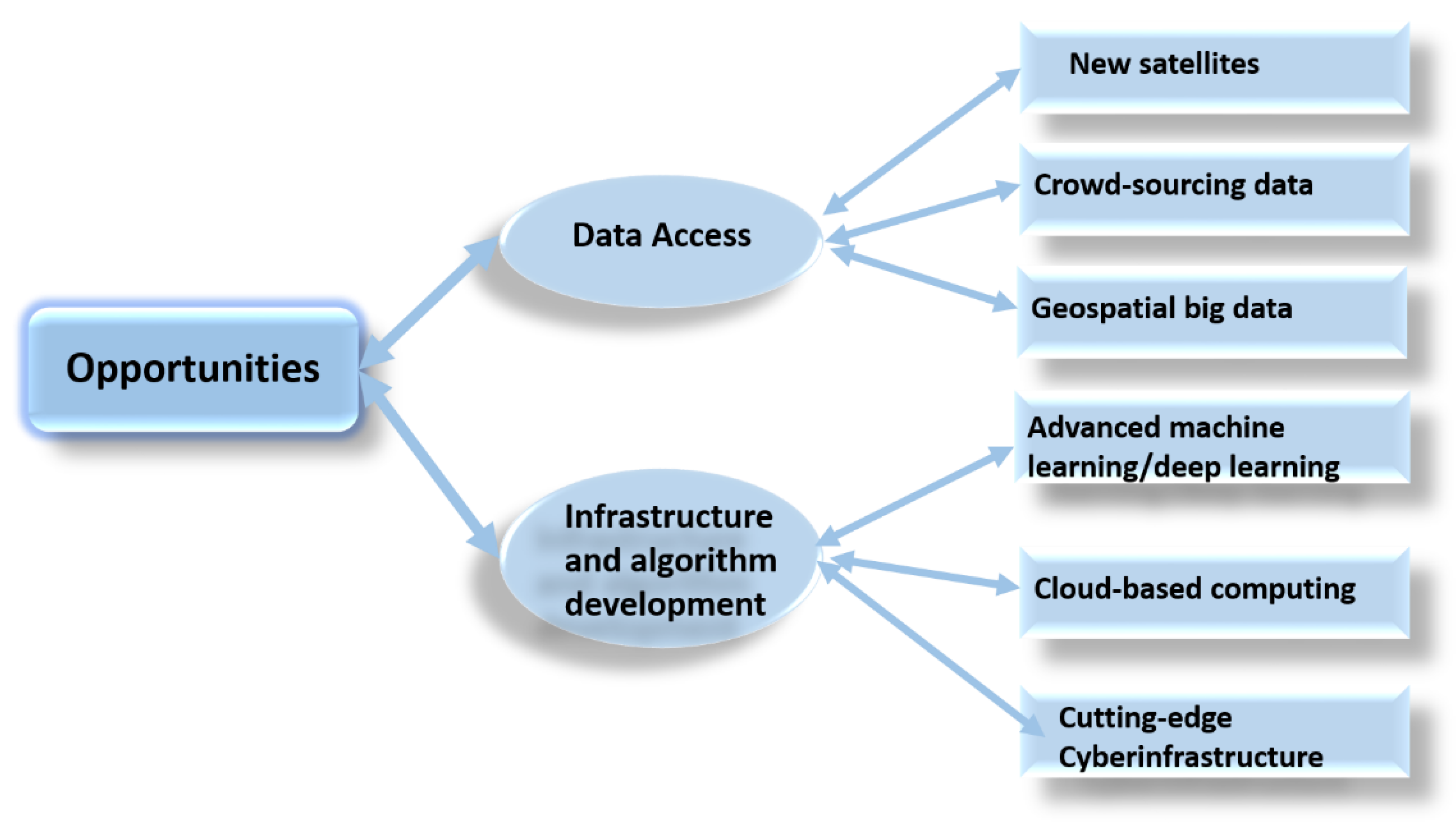

5. Machine Learning and Cloud Computing for LULC Mapping

6. Challenges and Future Research Directions

7. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


- McGwire, K.C.; Fisher, P. Spatially variable thematic accuracy: Beyond the confusion matrix. In Spatial Uncertainty in Ecology; Springer: New York, NY, USA, 2001; pp. 308–329. [Google Scholar]

- Pontius, R.G., Jr.; Millones, M. Death to Kappa: Birth of quantity disagreement and allocation disagreement for accuracy assessment. Int. J. Remote Sens. 2011, 32, 4407–4429. [Google Scholar] [CrossRef]

- Guo, N.; Xiong, W.; Wu, Q.; Jing, N. An efficient tile-pyramids building method for fast visualization of massive geospatial raster datasets. Adv. Electr. Comput. Eng. 2016, 16, 3–9. [Google Scholar] [CrossRef]

- Malik, K.; Robertson, C.; Roberts, S.A.; Remmel, T.K.; Long, J.A. Computer vision models for comparing spatial patterns: Understanding spatial scale. Int. J. Geogr. Inf. Sci. 2022, 36, 1–35. [Google Scholar] [CrossRef]

- Förstner, W.; Bonn, U. Computer vision and remote sensing: Lessons learned. In Photogrammetric Week; Fritsch, D., Ed.; 2009; pp. 241–249. [Google Scholar]

- Wilkinson, G.G. Recent developments in remote sensing technology and the importance of computer vision analysis techniques. In Machine Vision and Advanced Image Processing in Remote Sensing; Kanellopoulos, I., Wilkinson, G.G., Moons, T., Eds.; Springer: Berlin/Heidelberg, Germany, 1999; pp. 5–11. [Google Scholar]

- Chen, W.; Li, X.; He, H.; Wang, L. A review of fine-scale land use and land cover classification in open-pit mining areas by remote sensing techniques. Remote Sens. 2017, 10, 15. [Google Scholar] [CrossRef]

- Liu, X.; He, J.; Yao, Y.; Zhang, J.; Liang, H.; Wang, H.; Hong, Y. Classifying urban land use by integrating remote sensing and social media data. Int. J. Geogr. Inf. Sci. 2017, 31, 1675–1696. [Google Scholar] [CrossRef]

- Heydari, S.S.; Mountrakis, G. Meta-analysis of deep neural networks in remote sensing: A comparative study of mono-temporal classification to support vector machines. ISPRS J. Photogramm. Remote Sens. 2019, 152, 192–210. [Google Scholar] [CrossRef]

- Yang, X.; Chen, Z.; Li, B.; Peng, D.; Chen, P.; Zhang, B. A fast and precise method for large-scale land-use mapping based on deep learning. In Proceedings of the IGARSS 2019—2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 5913–5916. [Google Scholar]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, C.; Li, X. Land Use and Land Cover Mapping in the Era of Big Data. Land 2022, 11, 1692. https://doi.org/10.3390/land11101692

Zhang C, Li X. Land Use and Land Cover Mapping in the Era of Big Data. Land. 2022; 11(10):1692. https://doi.org/10.3390/land11101692

Chicago/Turabian Style: Zhang, Chuanrong, and Xinba Li. 2022. "Land Use and Land Cover Mapping in the Era of Big Data" Land 11, no. 10: 1692. https://doi.org/10.3390/land11101692

APA Style: Zhang, C., & Li, X. (2022). Land Use and Land Cover Mapping in the Era of Big Data. Land, 11(10), 1692. https://doi.org/10.3390/land11101692