Improved Cropland Abandonment Detection with Deep Learning Vision Transformer (DL-ViT) and Multiple Vegetation Indices

Abstract

1. Introduction

2. Materials and Methods

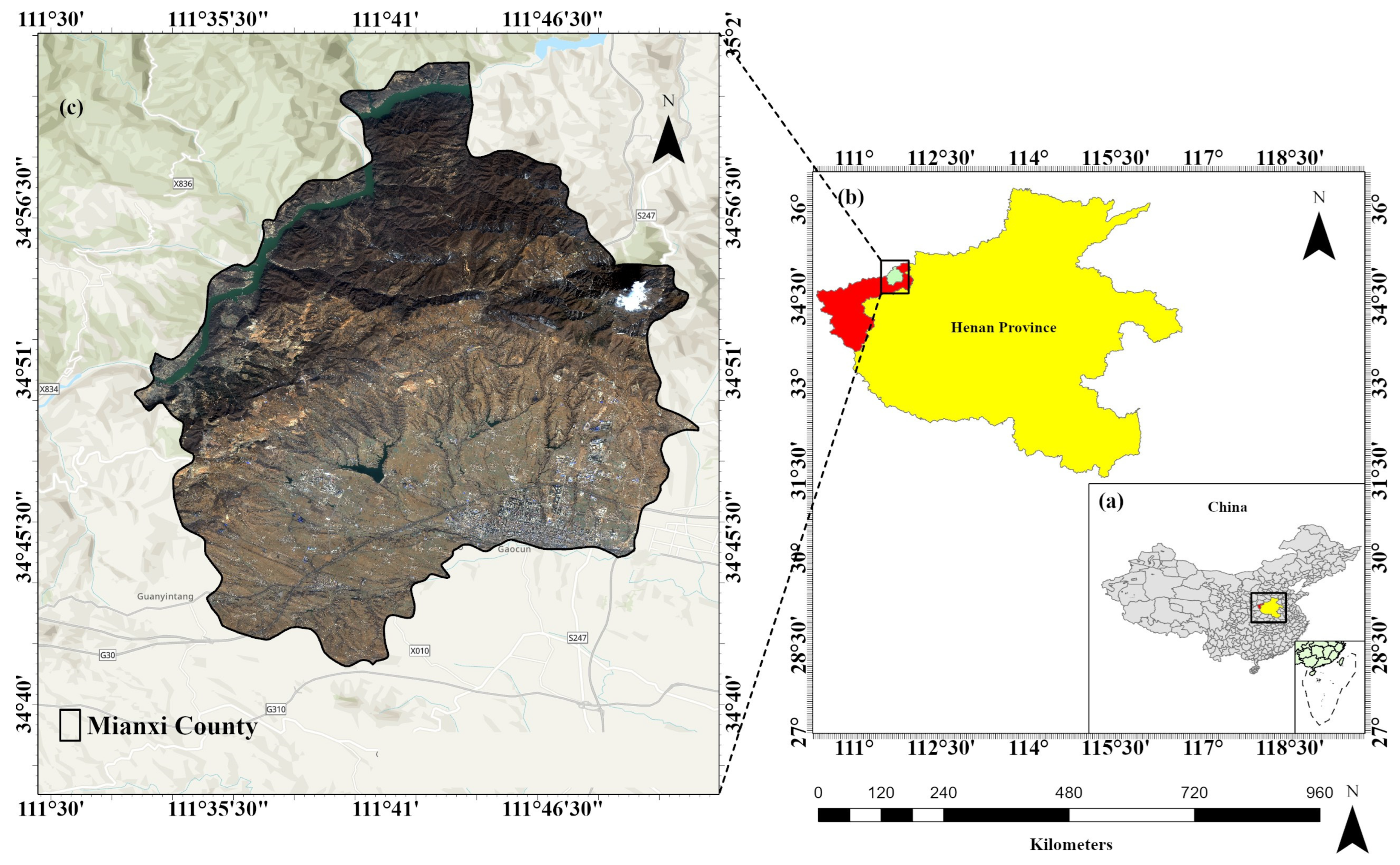

2.1. Study Area

2.2. Datasets

2.3. Software

2.4. Image Preprocessing

- (1) First, the original images were subset to the study area. This reduced the volume of image data to be processed and analyzed.

- (2) To generate the multispectral imagery, the georeferenced band images were layer-stacked into a single image with four bands: blue (B), green (G), red (R), and near-infrared (NIR).

- (3) A color-normalized (Brovey) sharpening technique [29] was applied to sharpen the 8 m GF-6 multispectral images to 2 m resolution, for sample labeling and for verifying the classifier results. The Brovey transform sharpens a multispectral image by combining it with the panchromatic image through a weighted ratio. This injects the spatial detail of the panchromatic image while preserving the spectral information of the multispectral image. The technique is expressed as Equation (1):

  $$S_k(i,j) = M_k(i,j)\,\frac{P(i,j)}{\bar{P}(i,j)} \qquad (1)$$

  where $S_k(i,j)$ is the sharpened pixel value in the $k$-th spectral band at coordinates $(i,j)$; $M_k(i,j)$ is the pixel value of the multispectral image in the $k$-th spectral band at $(i,j)$; $P(i,j)$ is the pixel value of the panchromatic image at $(i,j)$; and $\bar{P}(i,j)$ is the average pixel value of the panchromatic image within the spatial neighborhood of the corresponding multispectral pixel.

- (4) A single GF-6 scene did not always cover the entire study area. Two or more scenes from the same season were therefore mosaicked to obtain complete coverage, so that all relevant land cover features and patterns were captured in the analysis.

- (5) To ensure consistency and compatibility with the other geospatial datasets, all images were projected to a common coordinate system. This allowed the imagery to be integrated and compared directly with those data.

- (6) In addition, slope was calculated in degrees to derive terrain information. This made it possible to assess the topographic characteristics of the study area and their potential influence on land cover dynamics.

- (7) Fast Line-of-sight Atmospheric Analysis of Spectral Hypercubes (FLAASH) atmospheric correction [30] was applied to retrieve accurate surface reflectance values and compensate for atmospheric effects. FLAASH removes atmospheric interference from remote sensing data, which improves the accuracy and reliability of subsequent analyses. It is based on Equations (2) and (3):

  $$L = \frac{A\rho}{1-\rho_e S} + \frac{B\rho_e}{1-\rho_e S} + L_a \qquad (2)$$

  $$L_e \approx \frac{(A+B)\,\rho_e}{1-\rho_e S} + L_a \qquad (3)$$

  where $L$ is the spectral radiance at a sensor pixel; $L_e$ is the spatially averaged radiance around that pixel; $\rho$ represents the pixel's surface reflectance; $\rho_e$ denotes the average surface reflectance of the surrounding region; $S$ is the spherical albedo of the atmosphere, capturing the backscattered surface-reflected photons; $L_a$ is the radiance backscattered by the atmosphere without reaching the surface; and $A$ and $B$ are surface-independent coefficients that vary with atmospheric and geometric conditions. All of these variables implicitly depend on wavelength.

- (8) Lastly, radiometric calibration was carried out to convert the raw pixel values (digital numbers, DNs) into calibrated radiometric quantities. This ensured consistent and accurate measurements across the image.
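Step (3) can be illustrated with a minimal pure-Python sketch of Equation (1). It assumes that each 8 m multispectral pixel covers an aligned 4 × 4 block of 2 m panchromatic pixels, and it takes the neighborhood mean of the panchromatic image to be the mean over that block. The function name, the `ratio` and `eps` parameters, and the block alignment are illustrative assumptions, not details reported in the study. Note also that the widely cited Brovey formulation divides by the sum of the multispectral bands rather than by a panchromatic mean. This sketch follows the variable definitions given for Equation (1).

```python
def brovey_sharpen(ms_bands, pan, ratio=4, eps=1e-12):
    """Sharpen coarse multispectral bands with a fine panchromatic band.

    ms_bands: list of 2-D lists (one per band) on the coarse grid.
    pan: 2-D list on the fine grid, exactly `ratio` times larger per dimension.
    Computes S_k(i, j) = M_k(i, j) * P(i, j) / P_bar(i, j), where P_bar is the
    mean PAN value over the ratio x ratio block covered by the coarse MS pixel.
    """
    coarse_rows, coarse_cols = len(ms_bands[0]), len(ms_bands[0][0])
    rows, cols = len(pan), len(pan[0])
    if rows != coarse_rows * ratio or cols != coarse_cols * ratio:
        raise ValueError("PAN grid must be exactly `ratio` times the MS grid")

    # P_bar: mean PAN value inside the footprint of each coarse MS pixel.
    pan_mean = [
        [
            sum(pan[bi * ratio + di][bj * ratio + dj]
                for di in range(ratio) for dj in range(ratio)) / (ratio * ratio)
            for bj in range(coarse_cols)
        ]
        for bi in range(coarse_rows)
    ]

    sharpened = []
    for band in ms_bands:
        out = [[0.0] * cols for _ in range(rows)]
        for i in range(rows):
            for j in range(cols):
                bi, bj = i // ratio, j // ratio
                # Weighted ratio: PAN detail modulates the coarse MS value.
                out[i][j] = band[bi][bj] * pan[i][j] / max(pan_mean[bi][bj], eps)
        sharpened.append(out)
    return sharpened


# Toy example with a 2x resolution ratio: two 1x1 MS bands, one 2x2 PAN band.
result = brovey_sharpen([[[10.0]], [[20.0]]], [[1.0, 3.0], [2.0, 2.0]], ratio=2)
# result[0] == [[5.0, 15.0], [10.0, 10.0]]
```

With this choice of neighborhood, the mean of each sharpened band over a block equals the original multispectral value. This is how the transform keeps the spectral information while adding panchromatic detail.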
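The mosaicking in step (4) can be sketched as a first-valid-pixel merge. The sketch assumes the scenes have already been co-registered onto a common grid and that `0` marks pixels outside a scene's footprint. Both the priority rule (earlier scenes win) and the nodata value are hypothetical choices, since the study does not state how overlapping scenes were resolved.

```python
def mosaic_first_valid(images, nodata=0):
    """Merge co-registered, same-shape images; earlier images take priority.

    Each output pixel comes from the first image whose value is not `nodata`;
    pixels that are nodata in every image stay nodata.
    """
    rows, cols = len(images[0]), len(images[0][0])
    if any(len(img) != rows or len(img[0]) != cols for img in images):
        raise ValueError("all images must share the same grid")
    out = [[nodata] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            for img in images:
                if img[i][j] != nodata:
                    out[i][j] = img[i][j]
                    break  # keep the highest-priority valid value
    return out


# Two partially overlapping scenes of the same season (toy values).
scene_a = [[0, 5], [7, 0]]
scene_b = [[1, 2], [3, 0]]
merged = mosaic_first_valid([scene_a, scene_b])  # [[1, 5], [7, 0]]
```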
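Step (6) does not name the elevation source. Assuming a gridded digital elevation model (DEM), slope in degrees can be computed with Horn's 3 × 3 finite-difference method, which several common GIS slope tools use. This is a sketch only and not necessarily the tool the authors used. The DEM values and the 10 m cell size below are hypothetical.

```python
import math


def slope_degrees(dem, cell_size):
    """Slope in degrees using Horn's 3x3 finite-difference method.

    dem: 2-D list of elevations (same length unit as cell_size).
    Border cells lack a full 3x3 neighbourhood and are returned as None.
    """
    rows, cols = len(dem), len(dem[0])
    out = [[None] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            # Neighbourhood layout:  a b cc / d . f / g h i
            a, b, cc = dem[r - 1][c - 1], dem[r - 1][c], dem[r - 1][c + 1]
            d, f = dem[r][c - 1], dem[r][c + 1]
            g, h, i = dem[r + 1][c - 1], dem[r + 1][c], dem[r + 1][c + 1]
            dz_dx = ((cc + 2 * f + i) - (a + 2 * d + g)) / (8 * cell_size)
            dz_dy = ((g + 2 * h + i) - (a + 2 * b + cc)) / (8 * cell_size)
            out[r][c] = math.degrees(math.atan(math.hypot(dz_dx, dz_dy)))
    return out


# A ramp rising 10 m per 10 m cell has a 45-degree slope at the centre cell.
ramp = [[0, 10, 20], [0, 10, 20], [0, 10, 20]]
centre_slope = slope_degrees(ramp, 10.0)[1][1]
```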
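To make Equations (2) and (3) of step (7) concrete, the sketch below inverts them for one pixel at one wavelength. Equation (3) first gives the neighborhood reflectance ρe from the spatially averaged radiance Le. Equation (2) is then rearranged to solve for the pixel reflectance ρ. In FLAASH itself, A, B, S, and La come from MODTRAN radiative-transfer simulations and Le from a spatially smoothed radiance image. The coefficient values used here are hypothetical and serve only to check that the inversion recovers the inputs of the forward model.

```python
def flaash_forward(rho, rho_e, A, B, S, L_a):
    """Equation (2): at-sensor radiance for pixel reflectance rho."""
    return (A * rho + B * rho_e) / (1.0 - rho_e * S) + L_a


def flaash_invert(L, L_e, A, B, S, L_a):
    """Solve Equations (3) and (2) for (rho, rho_e) at a single wavelength."""
    # Equation (3): L_e - L_a = (A + B) * rho_e / (1 - rho_e * S).
    # With y = (L_e - L_a) / (A + B), this gives rho_e = y / (1 + y * S).
    y = (L_e - L_a) / (A + B)
    rho_e = y / (1.0 + y * S)
    # Equation (2) rearranged: A * rho = (L - L_a) * (1 - rho_e * S) - B * rho_e.
    rho = ((L - L_a) * (1.0 - rho_e * S) - B * rho_e) / A
    return rho, rho_e


# Hypothetical coefficients; real values come from radiative-transfer modelling.
A, B, S, L_a = 100.0, 20.0, 0.1, 5.0
L = flaash_forward(0.3, 0.2, A, B, S, L_a)    # pixel radiance
L_e = flaash_forward(0.2, 0.2, A, B, S, L_a)  # Equation (3): rho = rho_e
rho, rho_e = flaash_invert(L, L_e, A, B, S, L_a)  # recovers about (0.3, 0.2)
```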
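Step (8) can be sketched with the common linear model for absolute radiometric calibration, L = gain × DN + offset, where the gain and offset are provided per sensor and band. The coefficients below are hypothetical placeholders, not actual GF-6 calibration values.

```python
def dn_to_radiance(dn_band, gain, offset):
    """Absolute radiometric calibration of one band: L = gain * DN + offset.

    dn_band: 2-D list of raw digital numbers; returns radiance on the same grid.
    """
    return [[gain * dn + offset for dn in row] for row in dn_band]


# Hypothetical (gain, offset) pairs for illustration only.
HYPOTHETICAL_COEFFS = {"B": (0.20, 0.0), "G": (0.18, 0.0), "R": (0.15, 0.0), "NIR": (0.13, 0.0)}
nir_radiance = dn_to_radiance([[120, 240]], *HYPOTHETICAL_COEFFS["NIR"])
```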

2.5. Vegetation Indices (VIs)

2.6. Optimizing Image Selection for Classifier

2.7. Classes and Image Labeling

2.8. Cropland Abandonment Rate

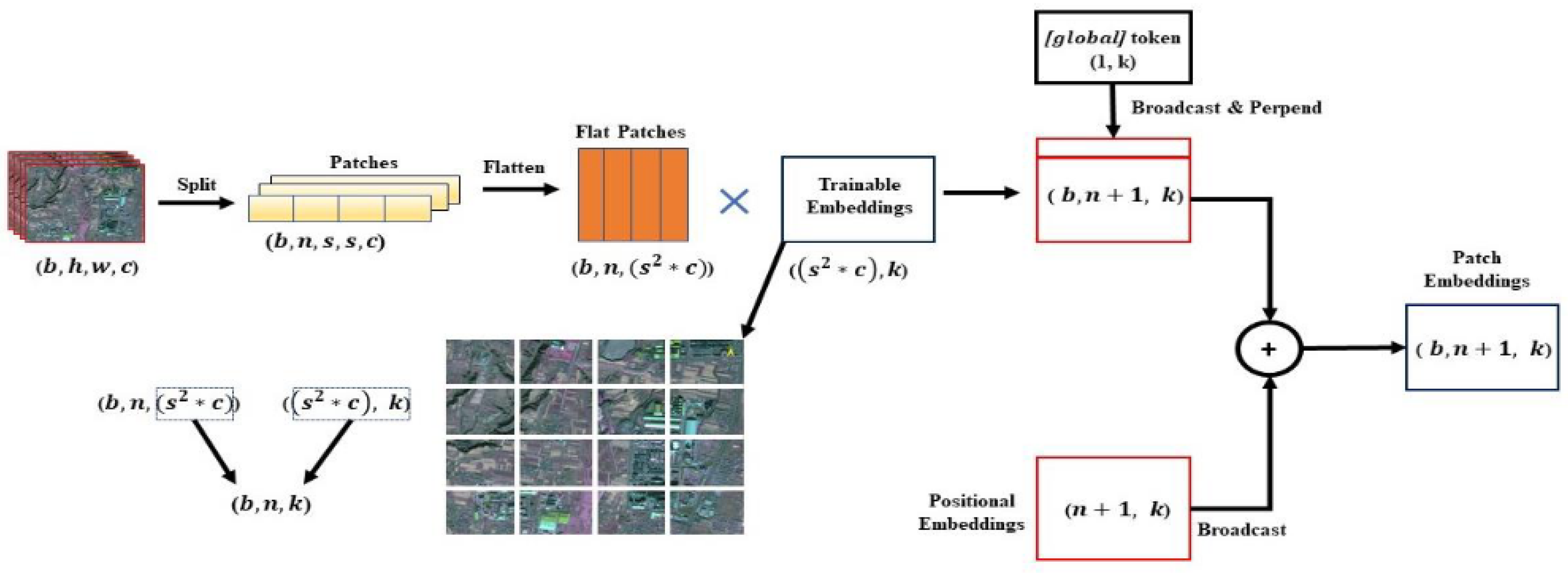

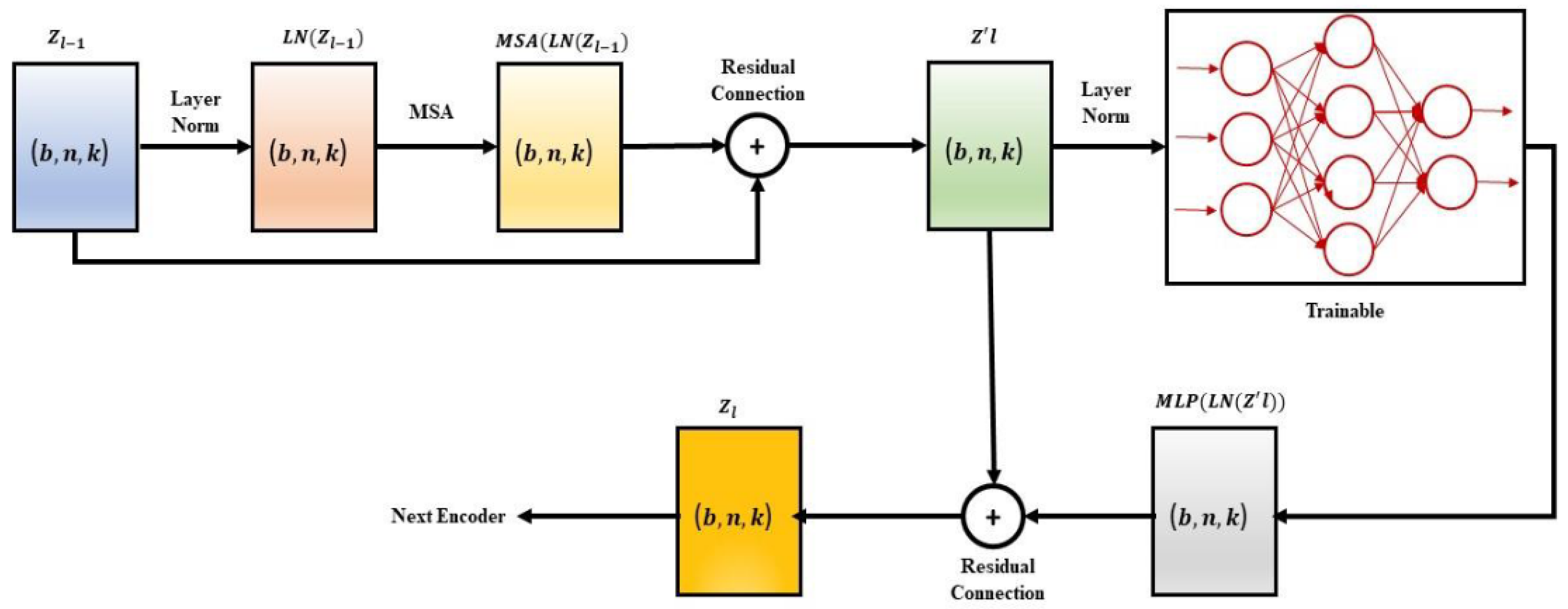

2.9. Vision Transformer Model (ViT)

2.10. Performance Metrics

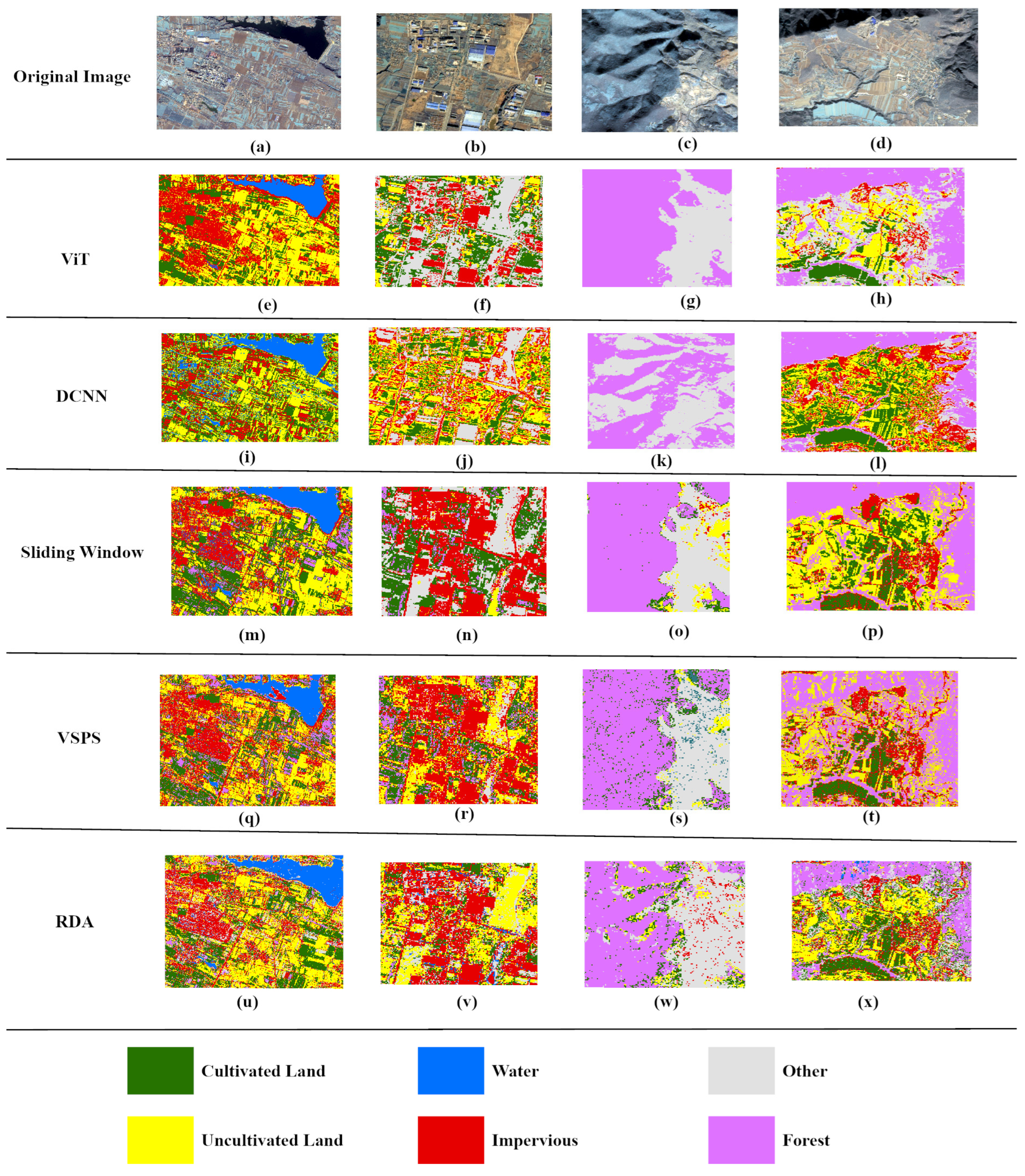

2.11. Comparison with Other Methods

- (1) The deep convolutional neural network (DCNN) method [41] automatically constructs the training dataset and classifies multispectral satellite images with a deep neural network.

- (2)

- (3) The vegetation–soil–pigment indices and synthetic-aperture radar (SAR) time-series (VSPS) method [44] is a multi-temporal, indicator-based framework for large-area mapping that automatically identifies active croplands.

- (4) Redundancy analysis (RDA) [17] is a direct gradient analysis method that summarizes the linear relationships between a set of response variables and a set of explanatory variables. It extracts the components of variation in the responses that are "redundant" with (i.e., explained by) the explanatory variables. The technique can quantify the contribution of each determinant to cropland abandonment.

3. Results

3.1. Choosing the Optimal Multiband Composite Image for ViT

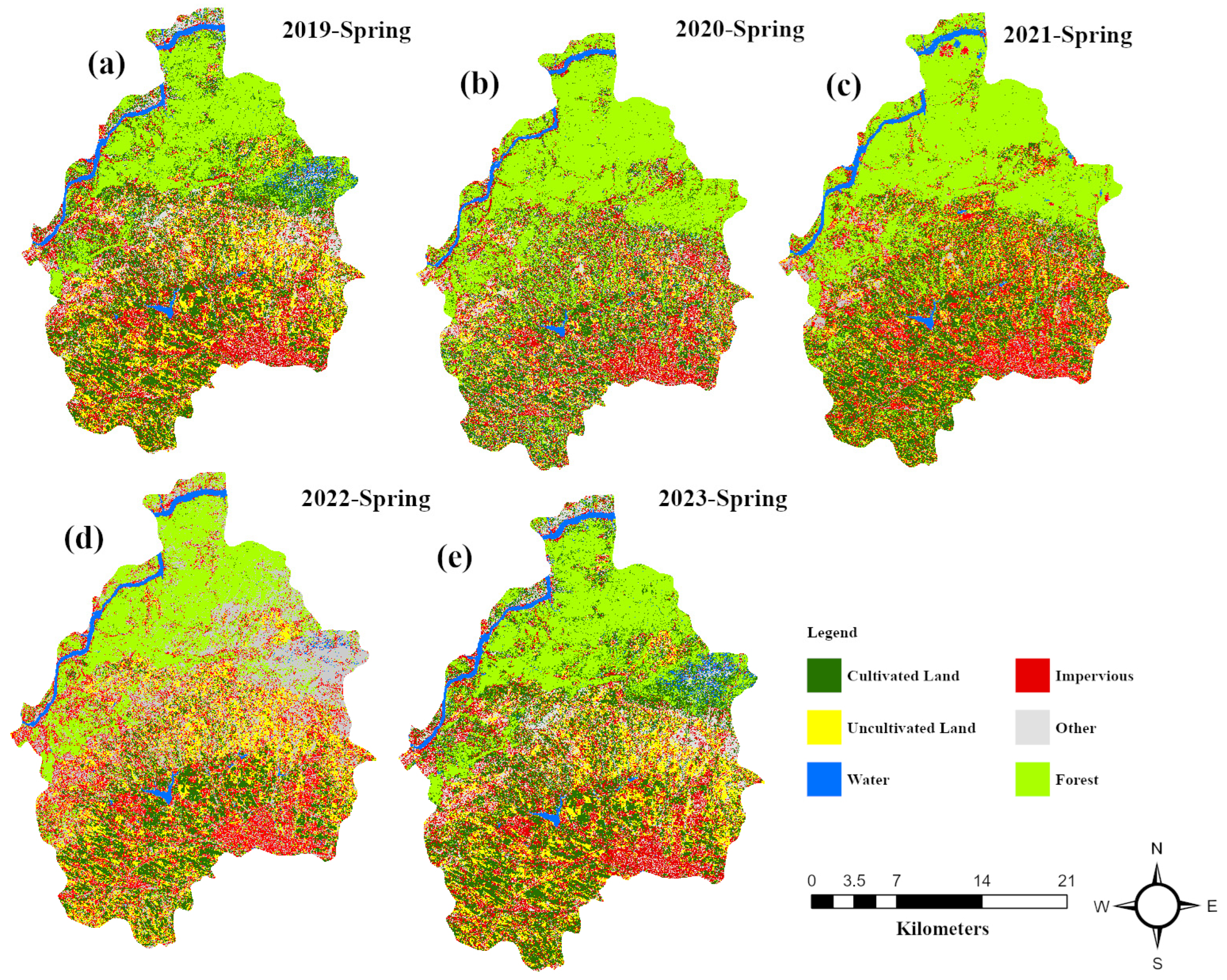

3.2. Inter-Annual Land Use Dynamics and Assessing Classification Accuracy

Classifier Performance Evaluation

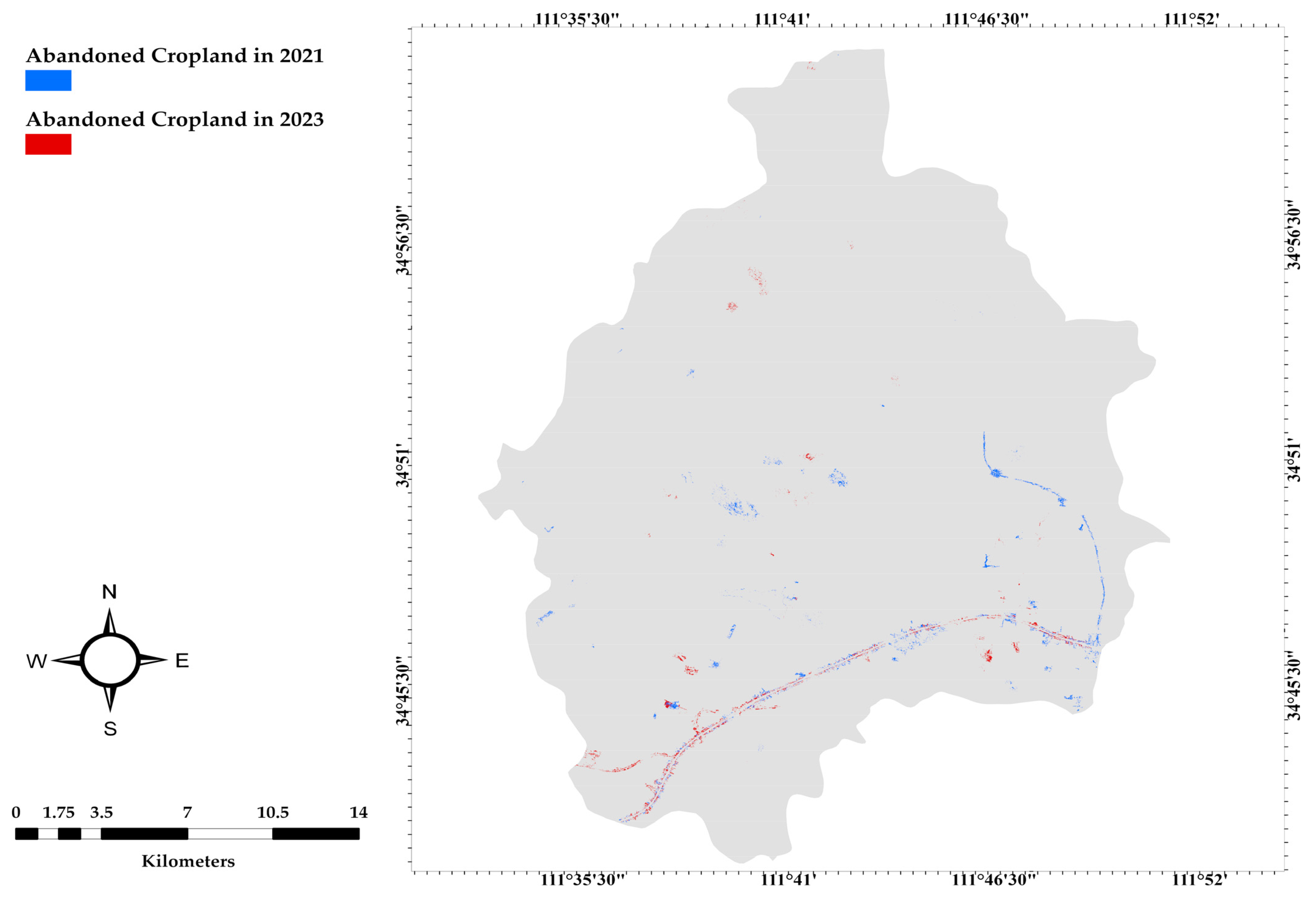

3.3. Spatiotemporal Analysis of Cropland Abandonment: Distribution, Magnitude, Patterns, and Trends

3.4. Explanatory Variables

3.5. Comparative Analysis of Contemporary Approaches for Cropland Abandonment Detection

3.6. Comparison of Employed VIs

4. Discussion

4.1. Understanding Spatiotemporal Dynamics and Factors of Cropland Abandonment: Methodological Advancements and Insights

4.2. Contrasting Trends in Cropland Abandonment

4.3. Shortcomings and Limitations

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix B

Appendix C

| VIs | Spectral Bands | Sensitivity to Vegetation | Robustness to Noise | Ease of Calculation | Studies |
|---|---|---|---|---|---|
| Normalized Difference Vegetation Index (NDVI) | R and NIR | High | Moderate | Easy | [61] |
| Dead Fuel Index (DFI) | R, NIR, and SWIR | Low | Moderate | Moderate | [49] |
| Normalized Difference Vegetation Index (NDVI) | R and NIR | High | Moderate | Easy | |
| Normalized Difference Water Index (NDWI) | NIR and SWIR | High | Low | High | [17] |
| Normalized Difference Soil Index (NDSI) | R and SWIR | High | High | Moderate | |
| Two-Band Enhanced Vegetation Index (EVI2) | R and NIR | Very High | Low | High | |
| Enhanced Vegetation Index (EVI) | B, R, and NIR | Very High | High | Moderate | [13] |
| Normalized Difference Vegetation Index (NDVI) | R and NIR | High | Moderate | Easy | [21] |
| Normalized Difference Snow Index (NDSI) | G and SWIR | High | High | Easy | |
| Dry Bare-Soil Index (DBSI) | R and NIR | High | High | Moderate | [62] |
| Two-Band Enhanced Vegetation Index (EVI2) | R and NIR | Very High | Low | High | [63] |
| Normalized Difference Vegetation Index (NDVI) | R and NIR | High | Moderate | Easy | [23] |
| Normalized Difference Vegetation Index (NDVI) | R and NIR | High | Moderate | Easy | [24] |
| Normalized Difference Vegetation Index (NDVI) | R and NIR | High | Moderate | Easy | [25] |
| Green Normalized Difference Vegetation Index (GNDVI) | G and NIR | High | Moderate | Easy | |
| Enhanced Vegetation Index (EVI) | B, R, and NIR | Very High | High | Moderate | |
| Normalized Difference Infrared Index (NDII) | NIR and SWIR | High | High | Easy | |
| Normalized Burn Ratio (NBR) | SWIR and NIR | High | Low | Easy | |
| Normalized Difference Building Index (NDBI) | NIR and SWIR | High | Moderate | Easy | [22] |
| Normalized Difference Vegetation Index (NDVI) | R and NIR | High | Moderate | Easy | |
| Normalized Difference Vegetation Index (NDVI) | R and NIR | High | Moderate | Easy | [18] |
| Normalized Difference Vegetation Index (NDVI) | R and NIR | High | Moderate | Easy | Proposed VIs |
| Soil-adjusted Vegetation Index (SAVI) | R and NIR | High | Low | Easy | |
| Modified Soil-adjusted Vegetation Index (MSAVI) | R and NIR | High | High | Easy | |
| Perpendicular Vegetation Index (PVI) | R and NIR | High | High | Easy | |
| Red-Edge Triangulated Vegetation Index (RTVICore) | R, NIR, and SWIR | Very High | High | Moderate | |
| Simple Ratio (SR) | R and NIR | High | Low | Easy | |

References

- [1] United Nations. Every Year, 12 Million Hectares of Productive Land Lost, Secretary-General Tells Desertification Forum, Calls for Scaled-up Restoration Efforts, Smart Policies. UN Press: New York, NY, USA. Available online: https://press.un.org/en/2019/sgsm19680.doc.htm (accessed on 3 April 2023).

- [2] Zakkak, S.; Radovic, A.; Nikolov, S.C.; Shumka, S.; Kakalis, L.; Kati, V. Assessing the Effect of Agricultural Land Abandonment on Bird Communities in Southern-Eastern Europe. J. Environ. Manag. 2015, 164, 171–179.

- [3] Hou, D.; Meng, F.; Prishchepov, A.V. How Is Urbanization Shaping Agricultural Land-Use? Unraveling the Nexus between Farmland Abandonment and Urbanization in China. Landsc. Urban Plan. 2021, 214, 104170.

- [4] Zheng, Q.; Ha, T.; Prishchepov, A.V.; Zeng, Y.; Yin, H.; Koh, L.P. The Neglected Role of Abandoned Cropland in Supporting Both Food Security and Climate Change Mitigation. Nat. Commun. 2023, 14, 6083.

- [5] Li, H.; Song, W. Cropland Abandonment and Influencing Factors in Chongqing, China. Land 2021, 10, 1206.

- [6] Prishchepov, A.V.; Müller, D.; Dubinin, M.; Baumann, M.; Radeloff, V.C. Determinants of Agricultural Land Abandonment in Post-Soviet European Russia. Land Use Policy 2013, 30, 873–884.

- [7] Zhong, F.; Li, Q.; Xiang, J.; Zhu, J. Economic Growth, Demographic Change and Rural-Urban Migration in China. J. Integr. Agric. 2013, 12, 1884–1895.

- [8] Foley, J.A.; DeFries, R.; Asner, G.P.; Barford, C.; Bonan, G.; Carpenter, S.R.; Chapin, F.S.; Coe, M.T.; Daily, G.C.; Gibbs, H.K.; et al. Global Consequences of Land Use. Science 2005, 309, 570–574.

- [9] Tscharntke, T.; Clough, Y.; Wanger, T.C.; Jackson, L.; Motzke, I.; Perfecto, I.; Vandermeer, J.; Whitbread, A. Global Food Security, Biodiversity Conservation and the Future of Agricultural Intensification. Biol. Conserv. 2012, 151, 53–59.

- [10] West, P.C.; Gibbs, H.K.; Monfreda, C.; Wagner, J.; Barford, C.C.; Carpenter, S.R.; Foley, J.A. Trading Carbon for Food: Global Comparison of Carbon Stocks vs. Crop Yields on Agricultural Land. Proc. Natl. Acad. Sci. USA 2010, 107, 19645–19648.

- [11] Houghton, R.A. Revised Estimates of the Annual Net Flux of Carbon to the Atmosphere from Changes in Land Use and Land Management 1850–2000. Tellus B 2003, 55, 378–390.

- [12] Zhu, X.; Xiao, G.; Zhang, D.; Guo, L. Mapping Abandoned Farmland in China Using Time Series MODIS NDVI. Sci. Total Environ. 2021, 755, 142651.

- [13] Chen, H.; Tan, Y.; Xiao, W.; He, T.; Xu, S.; Meng, F.; Li, X.; Xiong, W. Assessment of Continuity and Efficiency of Complemented Cropland Use in China for the Past 20 Years: A Perspective of Cropland Abandonment. J. Clean. Prod. 2023, 388, 135987.

- [14] Khorchani, M.; Gaspar, L.; Nadal-Romero, E.; Arnaez, J.; Lasanta, T.; Navas, A. Effects of Cropland Abandonment and Afforestation on Soil Redistribution in a Small Mediterranean Mountain Catchment. Int. Soil Water Conserv. Res. 2023, 11, 339–352.

- [15] Zhang, M.; Li, G.; He, T.; Zhai, G.; Guo, A.; Chen, H.; Wu, C. Reveal the Severe Spatial and Temporal Patterns of Abandoned Cropland in China over the Past 30 Years. Sci. Total Environ. 2023, 857, 159591.

- [16] Liu, B.; Song, W. Mapping Abandoned Cropland Using Within-Year Sentinel-2 Time Series. CATENA 2023, 223, 106924.

- [17] Hong, C.; Prishchepov, A.V.; Jin, X.; Han, B.; Lin, J.; Liu, J.; Ren, J.; Zhou, Y. The Role of Harmonized Landsat Sentinel-2 (HLS) Products to Reveal Multiple Trajectories and Determinants of Cropland Abandonment in Subtropical Mountainous Areas. J. Environ. Manag. 2023, 336, 117621.

- [18] Lotfi, P.; Ahmadi Nadoushan, M.; Besalatpour, A. Cropland Abandonment in a Shrinking Agricultural Landscape: Patch-Level Measurement of Different Cropland Fragmentation Patterns in Central Iran. Appl. Geogr. 2023, 158, 103023.

- [19] Luo, K.; Moiwo, J.P. Rapid Monitoring of Abandoned Farmland and Information on Regulation Achievements of Government Based on Remote Sensing Technology. Environ. Sci. Policy 2022, 132, 91–100.

- [20] Portalés-Julià, E.; Campos-Taberner, M.; García-Haro, F.J.; Gilabert, M.A. Assessing the Sentinel-2 Capabilities to Identify Abandoned Crops Using Deep Learning. Agronomy 2021, 11, 654.

- [21] Su, Y.; Wu, S.; Kang, S.; Xu, H.; Liu, G.; Qiao, Z.; Liu, L. Monitoring Cropland Abandonment in Southern China from 1992 to 2020 Based on the Combination of Phenological and Time-Series Algorithm Using Landsat Imagery and Google Earth Engine. Remote Sens. 2023, 15, 669.

- [22] Wang, Y.; Song, W. Mapping Abandoned Cropland Changes in the Hilly and Gully Region of the Loess Plateau in China. Land 2021, 10, 1341.

- [23] Feng, H. Individual Contributions of Climate and Vegetation Change to Soil Moisture Trends across Multiple Spatial Scales. Sci. Rep. 2016, 6, 32782.

- [24] Kocev, D.; Džeroski, S.; White, M.D.; Newell, G.R.; Griffioen, P. Using Single- and Multi-Target Regression Trees and Ensembles to Model a Compound Index of Vegetation Condition. Ecol. Model. 2009, 220, 1159–1168.

- [25] Praticò, S.; Solano, F.; Di Fazio, S.; Modica, G. Machine Learning Classification of Mediterranean Forest Habitats in Google Earth Engine Based on Seasonal Sentinel-2 Time-Series and Input Image Composition Optimisation. Remote Sens. 2021, 13, 586.

- [26] Pastick, N.J.; Wylie, B.K.; Wu, Z. Spatiotemporal Analysis of Landsat-8 and Sentinel-2 Data to Support Monitoring of Dryland Ecosystems. Remote Sens. 2018, 10, 791.

- [27] Mianchi County People’s Government Portal. Available online: http://www.mianchi.gov.cn/ (accessed on 11 June 2023).

- [28] Gaofen 6. Available online: https://catalyst.earth/catalyst-system-files/help/references/gdb_r/Gaofen-6.html (accessed on 2 February 2023).

- [29] Rokni, K. Investigating the Impact of Pan Sharpening on the Accuracy of Land Cover Mapping in Landsat OLI Imagery. Geod. Cartogr. 2023, 49, 12–18.

- [30] Rani, N.; Mandla, V.R.; Singh, T. Evaluation of Atmospheric Corrections on Hyperspectral Data with Special Reference to Mineral Mapping. Geosci. Front. 2017, 8, 797–808.

- [31] Jönsson, P.; Cai, Z.; Melaas, E.; Friedl, M.A.; Eklundh, L. A Method for Robust Estimation of Vegetation Seasonality from Landsat and Sentinel-2 Time Series Data. Remote Sens. 2018, 10, 635.

- [32] Dong, J.; Xiao, X.; Chen, B.; Torbick, N.; Jin, C.; Zhang, G.; Biradar, C. Mapping Deciduous Rubber Plantations through Integration of PALSAR and Multi-Temporal Landsat Imagery. Remote Sens. Environ. 2013, 134, 392–402.

- [33] Kim, B.Y.; Park, S.K.; Heo, J.S.; Choi, H.G.; Kim, Y.S.; Nam, K.W. Biomass and Community Structure of Epilithic Biofilm on the Yellow and East Coasts of Korea. Open J. Mar. Sci. 2014, 4, 286–297.

- [34] Zhao, B.; Duan, A.; Ata-Ul-Karim, S.T.; Liu, Z.; Chen, Z.; Gong, Z.; Zhang, J.; Xiao, J.; Liu, Z.; Qin, A.; et al. Exploring New Spectral Bands and Vegetation Indices for Estimating Nitrogen Nutrition Index of Summer Maize. Eur. J. Agron. 2018, 93, 113–125.

- [35] Zhen, Z.; Chen, S.; Qin, W.; Li, J.; Mike, M.; Yang, B. A Modified Transformed Soil Adjusted Vegetation Index for Cropland in Jilin Province, China. Acta Geol. Sin.-Engl. Ed. 2019, 93, 173–176.

- [36] Baret, F.; Guyot, G. Potentials and Limits of Vegetation Indices for LAI and APAR Assessment. Remote Sens. Environ. 1991, 35, 161–173.

- [37] Xing, N.; Huang, W.; Xie, Q.; Shi, Y.; Ye, H.; Dong, Y.; Wu, M.; Sun, G.; Jiao, Q. A Transformed Triangular Vegetation Index for Estimating Winter Wheat Leaf Area Index. Remote Sens. 2020, 12, 16.

- [38] Ryu, J.-H.; Oh, D.; Cho, J. Simple Method for Extracting the Seasonal Signals of Photochemical Reflectance Index and Normalized Difference Vegetation Index Measured Using a Spectral Reflectance Sensor. J. Integr. Agric. 2021, 20, 1969–1986.

- [39] Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image Is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929.

- [40] Han, K.; Xiao, A.; Wu, E.; Guo, J.; Xu, C.; Wang, Y. Transformer in Transformer. Adv. Neural Inf. Process. Syst. 2021, 34, 15908–15919.

- [41] Hu, Y.; Zhang, Q.; Zhang, Y.; Yan, H. A Deep Convolution Neural Network Method for Land Cover Mapping: A Case Study of Qinhuangdao, China. Remote Sens. 2018, 10, 2053.

- [42] Sheikhy Narany, T.; Aris, A.Z.; Sefie, A.; Keesstra, S. Detecting and Predicting the Impact of Land Use Changes on Groundwater Quality, a Case Study in Northern Kelantan, Malaysia. Sci. Total Environ. 2017, 599–600, 844–853.

- [43] He, T.; Xiao, W.; Zhao, Y.; Deng, X.; Hu, Z. Identification of Waterlogging in Eastern China Induced by Mining Subsidence: A Case Study of Google Earth Engine Time-Series Analysis Applied to the Huainan Coal Field. Remote Sens. Environ. 2020, 242, 111742.

- [44] Qiu, B.; Lin, D.; Chen, C.; Yang, P.; Tang, Z.; Jin, Z.; Ye, Z.; Zhu, X.; Duan, M.; Huang, H.; et al. From Cropland to Cropped Field: A Robust Algorithm for National-Scale Mapping by Fusing Time Series of Sentinel-1 and Sentinel-2. Int. J. Appl. Earth Obs. Geoinf. 2022, 113, 103006.

- [45] Phan, T.N.; Kuch, V.; Lehnert, L.W. Land Cover Classification Using Google Earth Engine and Random Forest Classifier—The Role of Image Composition. Remote Sens. 2020, 12, 2411.

- [46] Han, Z.; Song, W. Spatiotemporal Variations in Cropland Abandonment in the Guizhou–Guangxi Karst Mountain Area, China. J. Clean. Prod. 2019, 238, 117888.

- [47] China Economic Data. Available online: https://wap.ceidata.cei.cn/detail?id=lXpY%2Fwo%2FHU8%3D (accessed on 15 May 2023).

- [48] Land Price in Yuchi County|Land Transaction Data|Land Transaction|Land Bidding and Auction-Where to Choose. Available online: https://www.xuanzhi.com/henan-sanmenxia-mianchi/dijiashuju/at1mint202212maxt202212 (accessed on 15 May 2023).

- [49] Zhao, X.; Wu, T.; Wang, S.; Liu, K.; Yang, J. Cropland Abandonment Mapping at Sub-Pixel Scales Using Crop Phenological Information and MODIS Time-Series Images. Comput. Electron. Agric. 2023, 208, 107763.

- [50] Guo, A.; Yue, W.; Yang, J.; Xue, B.; Xiao, W.; Li, M.; He, T.; Zhang, M.; Jin, X.; Zhou, Q. Cropland Abandonment in China: Patterns, Drivers, and Implications for Food Security. J. Clean. Prod. 2023, 138154.

- [51] Johansen, K.; Phinn, S.; Taylor, M. Mapping Woody Vegetation Clearing in Queensland, Australia from Landsat Imagery Using the Google Earth Engine. Remote Sens. Appl. Soc. Environ. 2015, 1, 36–49.

- [52] Yusoff, N.; Muharam, F. The Use of Multi-Temporal Landsat Imageries in Detecting Seasonal Crop Abandonment. Remote Sens. 2015, 7, 11974–11991.

- [53] Jiang, Y.; He, X.; Yin, X.; Chen, F. The Pattern of Abandoned Cropland and Its Productivity Potential in China: A Four-Years Continuous Study. Sci. Total Environ. 2023, 870, 161928.

- [54] De Castro, P.I.B.; Yin, H.; Teixera Junior, P.D.; Lacerda, E.; Pedroso, R.; Lautenbach, S.; Vicens, R.S. Sugarcane Abandonment Mapping in Rio de Janeiro State Brazil. Remote Sens. Environ. 2022, 280, 113194.

- [55] Chen, S.; Olofsson, P.; Saphangthong, T.; Woodcock, C.E. Monitoring Shifting Cultivation in Laos with Landsat Time Series. Remote Sens. Environ. 2023, 288, 113507.

- [56] Chaudhary, S.; Wang, Y.; Dixit, A.M.; Khanal, N.R.; Xu, P.; Fu, B.; Yan, K.; Liu, Q.; Lu, Y.; Li, M. A Synopsis of Farmland Abandonment and Its Driving Factors in Nepal. Land 2020, 9, 84.

- [57] Baumann, M.; Kuemmerle, T.; Elbakidze, M.; Ozdogan, M.; Radeloff, V.C.; Keuler, N.S.; Prishchepov, A.V.; Kruhlov, I.; Hostert, P. Patterns and Drivers of Post-Socialist Farmland Abandonment in Western Ukraine. Land Use Policy 2011, 28, 552–562.

- [58] Díaz, G.I.; Nahuelhual, L.; Echeverría, C.; Marín, S. Drivers of Land Abandonment in Southern Chile and Implications for Landscape Planning. Landsc. Urban Plan. 2011, 99, 207–217.

- [59] Lieskovský, J.; Bezák, P.; Špulerová, J.; Lieskovský, T.; Koleda, P.; Dobrovodská, M.; Bürgi, M.; Gimmi, U. The Abandonment of Traditional Agricultural Landscape in Slovakia—Analysis of Extent and Driving Forces. J. Rural Stud. 2015, 37, 75–84.

- [60] Löw, F.; Prishchepov, A.V.; Waldner, F.; Dubovyk, O.; Akramkhanov, A.; Biradar, C.; Lamers, J.P.A. Mapping Cropland Abandonment in the Aral Sea Basin with MODIS Time Series. Remote Sens. 2018, 10, 159.

- [61] Xu, S.; Xiao, W.; Yu, C.; Chen, H.; Tan, Y. Mapping Cropland Abandonment in Mountainous Areas in China Using the Google Earth Engine Platform. Remote Sens. 2023, 15, 1145.

- [62] Zhao, Z.; Wang, J.; Wang, L.; Rao, X.; Ran, W.; Xu, C. Monitoring and Analysis of Abandoned Cropland in the Karst Plateau of Eastern Yunnan, China Based on Landsat Time Series Images. Ecol. Indic. 2023, 146, 109828.

- [63] Meijninger, W.; Elbersen, B.; van Eupen, M.; Mantel, S.; Ciria, P.; Parenti, A.; Sanz Gallego, M.; Perez Ortiz, P.; Acciai, M.; Monti, A. Identification of Early Abandonment in Cropland through Radar-Based Coherence Data and Application of a Random-Forest Model. GCB Bioenergy 2022, 14, 735–755.

| Features | GF-2 | GF-6 |
|---|---|---|
| Frequency (GHz) | 8–12.5 | 4–8 |
| Temporal Resolution (d) | 5 | 1 (PMC)–4 (WFV) |
| Altitude (km) | 631 | 634 km × 647 km |
| Swath Width (km) | 23 (one camera); 45 (two cameras combined) | 95 (PMC); 860 (WFV) |
| Spatial Resolution (m) | PAN: 0.8; MS: 3.2 | PAN: 2; MS: 8 |
| Bands/Wavelength (μm) | PAN: 0.45–0.90; B1/blue: 0.45–0.52; B2/green: 0.52–0.59; B3/red: 0.63–0.69; B4/NIR: 0.77–0.89 | PAN: 0.45–0.90; B1/blue: 0.45–0.52; B2/green: 0.52–0.60; B3/red: 0.63–0.69; B4/NIR: 0.76–0.90 |
| Cloud Coverage (%) | 5–10 | 5–10 |
| Date of Image Acquisition (year/month/day) | 2020/09/07 and 2021/10/18 | 2019/04/09, 2019/08/10, 2020/06/02, 2021/04/11, 2022/05/03, 2022/10/10, and 2023/04/19 |

| VIs | Formula | Reference |
|---|---|---|
| Normalized Difference Vegetation Index (NDVI) | | [33] |
| Soil-Adjusted Vegetation Index (SAVI) | | [34] |
| Transformed Soil-Adjusted Vegetation Index (TSAVI) | | [35] |
| Perpendicular Vegetation Index (PVI) | | [36] |
| Red-Edge Triangulated Vegetation Index (RTVICore) | | [37] |
| Simple Ratio (SR) | | [38] |
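As a sketch, four of the listed indices can be computed per pixel from surface reflectance using their standard textbook definitions. These may not match the exact parameterizations used in the study. The SAVI soil factor L = 0.5 and the PVI soil-line slope and intercept are assumptions, and TSAVI and RTVICore are omitted because their coefficients and band choices are not given here.

```python
import math


def ndvi(nir, red):
    """Normalized Difference Vegetation Index: (NIR - R) / (NIR + R)."""
    return (nir - red) / (nir + red)


def savi(nir, red, soil_factor=0.5):
    """Soil-Adjusted Vegetation Index; soil_factor (L) = 0.5 is an assumed default."""
    return (1 + soil_factor) * (nir - red) / (nir + red + soil_factor)


def simple_ratio(nir, red):
    """Simple Ratio: NIR / R."""
    return nir / red


def pvi(nir, red, slope, intercept):
    """Perpendicular Vegetation Index: distance from the soil line NIR = slope * R + intercept."""
    return (nir - slope * red - intercept) / math.sqrt(1 + slope ** 2)


# One vegetated pixel with hypothetical reflectances; the soil line (1.0, 0.0) is also hypothetical.
nir, red = 0.5, 0.1
features = [ndvi(nir, red), savi(nir, red), simple_ratio(nir, red), pvi(nir, red, 1.0, 0.0)]
```

Stacking such per-pixel values as extra input channels is one way to build the multi-VI composites compared in the F1/OA table.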

| VIs | F1 Score | OA |
|---|---|---|
| NDVI | 0.82 | 0.82 |
| SAVI | 0.83 | 0.83 |
| TSAVI | 0.82 | 0.83 |
| PVI | 0.84 | 0.84 |
| RTVICore | 0.84 | 0.83 |
| SR | 0.84 | 0.82 |
| NDVI, SAVI, PVI | 0.86 | 0.87 |
| All VIs | 0.88 | 0.91 |

| Season | Precision | Recall | F1 Score | OA |
|---|---|---|---|---|
| Spring 2019 | 0.9032 | 0.8858 | 0.8885 | 0.9114 |
| Fall 2019 | 0.8696 | 0.8945 | 0.8767 | 0.9328 |
| Spring 2020 | 0.8014 | 0.8790 | 0.8047 | 0.9042 |
| Fall 2020 | 0.8756 | 0.8574 | 0.8497 | 0.9078 |
| Spring 2021 | 0.8995 | 0.8998 | 0.8986 | 0.9386 |
| Fall 2021 | 0.8409 | 0.8877 | 0.8580 | 0.9139 |
| Spring 2022 | 0.8914 | 0.8888 | 0.8839 | 0.9454 |
| Fall 2022 | 0.8319 | 0.8982 | 0.8579 | 0.9332 |
| Spring 2023 | 0.8611 | 0.8784 | 0.8683 | 0.9416 |
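The seasonal precision, recall, F1 score, and overall accuracy (OA) can all be derived from a confusion matrix. The table does not state how per-class scores are averaged, so the sketch assumes macro-averaging (an unweighted mean over classes). Under that scheme, the F1 score need not equal the harmonic mean of the averaged precision and recall. For example, the Spring 2019 harmonic mean is about 0.894, versus the reported 0.8885. The confusion matrix below is a toy example, not data from the study.

```python
def classification_metrics(confusion):
    """Macro-averaged precision, recall, and F1, plus overall accuracy (OA).

    confusion[i][j] = number of reference samples of class i predicted as class j.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[k][k] for k in range(n))
    precisions, recalls, f1s = [], [], []
    for k in range(n):
        tp = confusion[k][k]
        predicted_k = sum(confusion[i][k] for i in range(n))  # column sum
        actual_k = sum(confusion[k])                          # row sum
        p = tp / predicted_k if predicted_k else 0.0
        r = tp / actual_k if actual_k else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(2 * p * r / (p + r) if p + r else 0.0)
    return {
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,  # mean of per-class F1, not F1 of the means
        "oa": correct / total,
    }


# Toy two-class matrix (e.g., abandoned vs. active cropland).
scores = classification_metrics([[8, 2], [1, 9]])
```

For this toy matrix, the macro F1 is about 0.850, while the harmonic mean of the macro precision and recall is about 0.852. This shows how the two can diverge.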

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Karim, M.; Deng, J.; Ayoub, M.; Dong, W.; Zhang, B.; Yousaf, M.S.; Bhutto, Y.A.; Ishfaque, M. Improved Cropland Abandonment Detection with Deep Learning Vision Transformer (DL-ViT) and Multiple Vegetation Indices. Land 2023, 12, 1926. https://doi.org/10.3390/land12101926


Chicago/Turabian Style: Karim, Mannan, Jiqiu Deng, Muhammad Ayoub, Wuzhou Dong, Baoyi Zhang, Muhammad Shahzad Yousaf, Yasir Ali Bhutto, and Muhammad Ishfaque. 2023. "Improved Cropland Abandonment Detection with Deep Learning Vision Transformer (DL-ViT) and Multiple Vegetation Indices" Land 12, no. 10: 1926. https://doi.org/10.3390/land12101926