Simulating Urban Element Design with Pedestrian Attention: Visual Saliency as Aid for More Visible Wayfinding Design

Abstract

1. Introduction

1.1. Related Works

1.1.1. Urban Design Tools

1.1.2. Tools and Methods to Promote Iterative, Attention-Aware Design

1.1.3. Visual Saliency as Aggregated Attention to Support Urban Design

| Relevant Works | Feedback Methods | Design Target | Advantages | Disadvantages |
|---|---|---|---|---|
| Luo et al. (2022) [40] | Workshop | Urban park | Promotes interactive collaboration among participants | Mainly applicable to the initial and final design stages |
| Marthya et al. (2021) [41] | Interview | Transit corridor | Can gather the opinions of various stakeholders; captures citizens’ detailed requirements | Collecting full responses is time-consuming; results are not quantifiable |
| Seetharaman et al. (2020) [11] | Photovoice | Landmark | Can capture instant images of the urban design | The spatial context of the design is not considered in detail |
| Saha et al. (2019) [13] | Crowd rating | Sidewalk | No need for face-to-face experiments; fast responses from online workers | Cannot guarantee feedback data quality; crowdworkers are not always local residents |
| Vainio et al. (2019) [14] | Eye tracker | Urban scenery | Can collect quantitative human attention data, including unconscious perception | Requires dedicated hardware; large amounts of data are difficult to interpret |
| This work (2023) | Visual saliency | Wayfinding sign (user study); building, poster (tool compatibility) | Real time, no need for human participants; can simulate 3D dynamic interactions | Difficult to observe individual preferences; cannot gather detailed comments |

1.2. Contribution Statement

- We introduced the concept of saliency-guided iterative design practice to the field of urban design, specifically in the domain of simulating wayfinding design.

- We demonstrated that providing visual-saliency prediction as design feedback can improve design quality.

- We showed that saliency feedback can be particularly effective for designing accessible wayfinding for older adults with cognitive decline.

- We provided a detailed system implementation and reusable code for a three-dimensional (3D) urban design simulation tool with visual saliency and pedestrian spatial interaction.

2. Methods

2.1. Study Overview

- RQ1: Does saliency prediction feedback help designers create better urban designs?

- RQ2: In which environments are the designs produced with saliency prediction particularly effective?

- RQ3: Which end users will the saliency-guided design method benefit the most?

2.1.1. RH1: Saliency Prediction Helps Designers Create Better Designs by Helping Them Anticipate the Elements to Which Pedestrians Will Pay Attention

2.1.2. RH2: A Design Produced with Saliency Prediction Is More Effective for Capturing Attention in Urban Areas than in Rural Areas

2.1.3. RH3: Elderly People with Subjective Cognitive Decline (SCD) Prefer Designs Created with Saliency Feedback More Strongly Than Young Adults and Elderly People without SCD

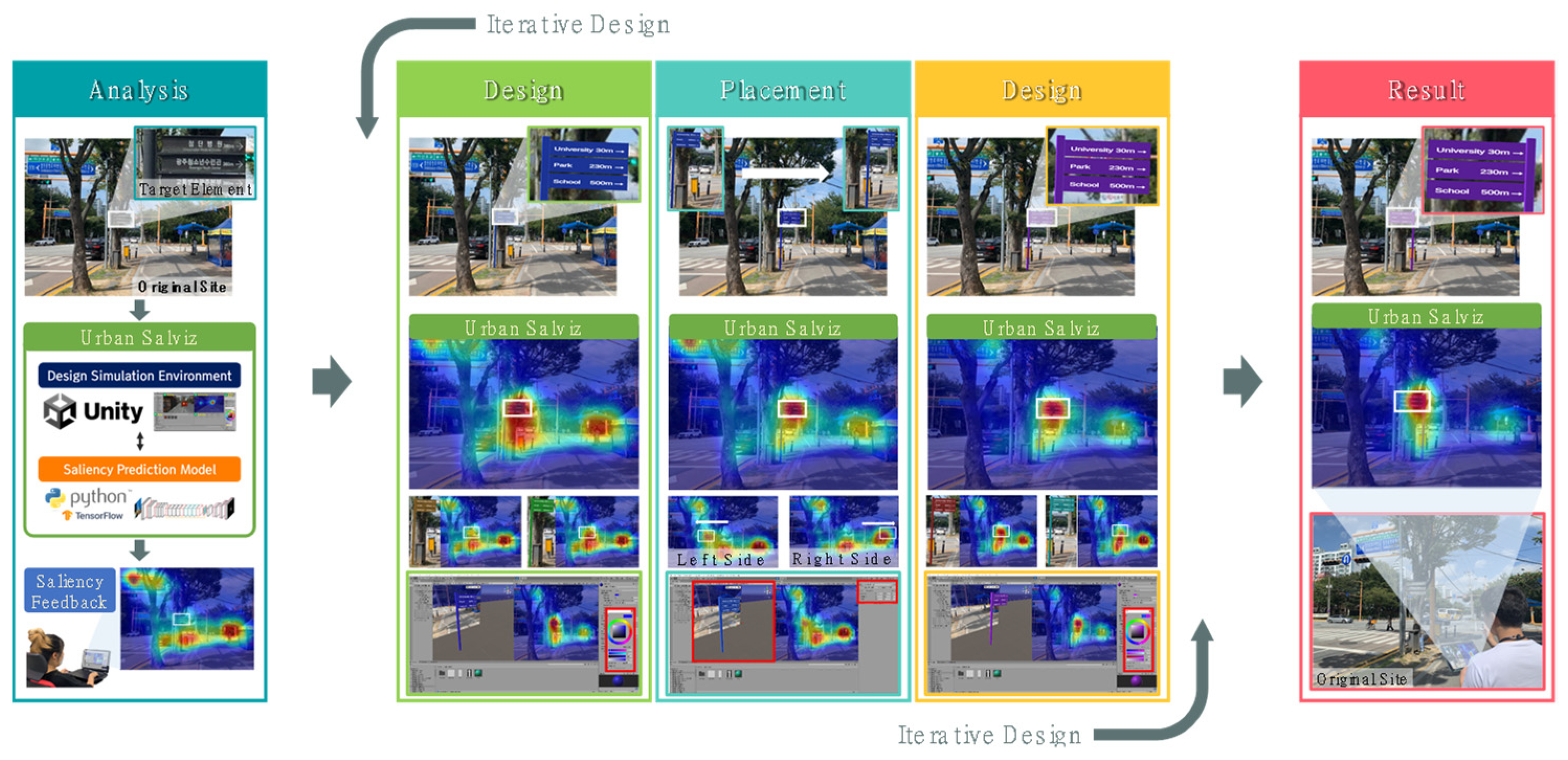

2.2. Urban Salviz

2.2.1. Tool Overview

- A. Drawing. Users can alter the design of urban elements in the Drawing tab (Figure 3A). This tab is based on the Scene view of Unity 3D and therefore includes Unity’s transform tools: users can move objects along the X, Y, and Z axes (Figure 4, Move); rotate objects around the X, Y, and Z axes (Figure 4, Rotate); scale objects along the X, Y, and Z axes (Figure 4, Scale); and stretch objects along the X and Y axes (Figure 4, Rect). Users can either select a virtual-reality (VR) environment or load a camera feed as video input for mixed-reality (MR) applications.

- B. Saliency Map. The Saliency Map panel (Figure 3B) offers real-time visual-saliency predictions from a virtual pedestrian’s viewpoint. The prediction approximates where human observers are likely to fixate and helps designers determine where pedestrians will pay the most attention. The panel reflects every change made in the Drawing tab (Figure 3A) and the Design Panel (Figure 3C), updates the saliency map in real time, and can be toggled on or off with the space bar. Users can adjust the virtual pedestrian’s position and viewing direction with a mouse or keyboard. In our user study, this function was not used by the control group, whereas the experimental group could freely toggle the saliency map on or off.

- C. Design Panel. The Design Panel (Figure 3C) is used to design and adjust the visual details of crafted elements. It is based on the Unity 3D Inspector window, which allows users to view and edit the properties and settings of game objects, assets, and prefabs. When a user selects an object in the Drawing tab, the Design Panel displays that object’s visual attributes. Users can add text, change the font size, and adjust the color of each element of the selected object; they can also move, rotate, and scale objects numerically.

- D. Prefab. A prefab is a saved, reusable game object composed of multiple components that serves as a template. We developed four prefab models of wayfinding signs with different designs (Figure 3D), each consisting of a sign panel, a text box, an arrow, and a pillar. Designers can browse these example signs and can also start from a partially completed design by dragging and dropping one of the existing Unity prefab sample models.

- E. ProBuilder. This tool lets users create urban elements with custom geometries by constructing, editing, and texturing basic 3D shapes. It builds on ProBuilder, a hybrid 3D modeling and level design tool optimized for creating simple geometries. Because any face or edge can be selected for extrusion or insetting, users can create objects of various shapes.

2.2.2. Implementation Notes
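As an illustration only, and not the authors’ Unity implementation, the prefab idea from the tool overview can be modeled as a template of named parts that is copied before editing. The part names follow the four components listed for the wayfinding-sign prefabs. The attribute names, colors, coordinates, and the sample text "City Hall" are hypothetical.

```python
from copy import deepcopy
from dataclasses import dataclass, field


@dataclass
class Part:
    """One component of a sign; attribute names are illustrative."""
    name: str
    position: tuple[float, float, float] = (0.0, 0.0, 0.0)
    color: str = "#FFFFFF"
    text: str = ""
    font_size: int = 0


@dataclass
class Prefab:
    """A reusable template made of several named parts."""
    name: str
    parts: list[Part] = field(default_factory=list)

    def instantiate(self) -> "Prefab":
        # Deep copy, so editing an instance never changes the template.
        return deepcopy(self)

    def part(self, name: str) -> Part:
        return next(p for p in self.parts if p.name == name)


# Hypothetical template with the four parts named in the tool overview.
SIGN_TEMPLATE = Prefab("wayfinding_sign", [
    Part("sign_panel", (0.0, 2.0, 0.0), "#1F4E79"),
    Part("text_box", (0.0, 2.1, -0.01), "#FFFFFF", "City Hall", 48),
    Part("arrow", (0.4, 1.9, -0.01), "#FFFFFF"),
    Part("pillar", (0.0, 1.0, 0.0), "#808080"),
])

if __name__ == "__main__":
    sign = SIGN_TEMPLATE.instantiate()
    sign.part("text_box").text = "Station"  # an edit made in the Design Panel
    sign.part("text_box").font_size = 60
    print(SIGN_TEMPLATE.part("text_box").text, "->", sign.part("text_box").text)
```

Unity prefab instances stay linked to their source asset, so edits to the asset propagate to instances unless overridden. The plain deep copy here deliberately drops that link to keep the sketch short.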

2.3. User Study with Designers (Sanity Check of Usability and UX for Crafting Road Signs)

2.3.1. Procedure

2.3.2. Questionnaire Assessing Usability and Perceived Design Experience of Tool

2.3.3. Participants

2.4. Design Quality Evaluation (Impact of Saliency Feedback on Design Outcome)

2.4.1. Procedure

2.4.2. Questionnaire for Assessing Quality of Wayfinding Signs

2.4.3. Participants

| This Work | Fontana (2005) [79] | Martin et al. (2015) [82] | Mishler and Neider (2017) [83] | Yang et al. (2019) [84] | Na et al. (2022) [85] |
|---|---|---|---|---|---|
| Easy to find (eye-catching) | Conspicuity | Intrigue | Distinctiveness, isolation | Legibility, identifiability | Detectability, conspicuity, recognizability, legibility, comprehensibility |
| Aesthetic value | Aesthetics | Graphics | Simplicity | Simplicity | |
| Suits the environment | Environmental harmony | Influence | Consistency | Accuracy, non-interference | |
| N/A | Accessibility | Reassurance | Continuity, completeness | | |

| Information | Intelligibility |

3. Results

3.1. Sanity Check to Verify Usability and Design Experience of Tool

3.1.1. Saliency Prediction Can Be Integrated with the Urban Design Tool without Sacrificing Usability

3.1.2. Designers Perceived the Tool to Be More Helpful and Efficient When They Were Provided with Saliency Feedback

3.2. The Saliency Feedback’s Impact on the Quality of the Design Outcome

3.2.1. Designers Produced Better Designs When Provided with Saliency Predictions (RQ1)

3.2.2. The Enhancement in the Design Quality Due to Saliency Feedback Was More Significant in Urban Areas than in Rural Areas (RQ2)

3.2.3. Saliency Feedback Significantly Enhanced the Design Quality Regardless of the User’s Age or Cognitive Ability, but the Improvement in Aesthetics Was Particularly Appreciated by the Elderly with SCD (RQ3)

4. Discussion

4.1. Virtual- and Mixed-Reality Settings of Urban Design Simulation

4.2. Providing Visual Saliency as an Aid Enhanced Designer UX and Design Quality

4.3. Saliency-Guided Urban Design Was Particularly Effective in Urban Areas and for Elderly People with SCD

4.4. Limitations

4.4.1. Selecting an Appropriate Colormap for Saliency Visualization Can Improve Usability

4.4.2. Placebo Effect Was Not Controlled in Our Experiment Design

4.5. Future Work

4.5.1. Implementation of Urban Salviz with More Universal Dataset

4.5.2. Tools and User Studies for Other Specific Urban Design Tasks and Expertise Levels

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Descriptive Statistics for Design Quality Assessment

Q1. Easy to find

| Condition | Environment | Age: Older | Age: Younger | SCD: SCD60+ | SCD: Others | Total |
|---|---|---|---|---|---|---|
| Experimental (designing with saliency feedback) | Urban | 5.83 | 5.68 | 5.86 | 5.7 | 5.72 |
| | Rural | 5.99 | 5.79 | 6.12 | 5.8 | 5.84 |
| | Total | 5.92 | 5.73 | 5.99 | 5.75 | 5.78 |
| Control (designing without saliency feedback) | Urban | 5.59 | 5.43 | 5.5 | 5.46 | 5.47 |
| | Rural | 5.98 | 5.68 | 6.09 | 5.88 | 5.76 |
| | Total | 5.78 | 5.56 | 5.8 | 5.67 | 5.61 |

Q2. Aesthetic value

| Condition | Environment | Age: Older | Age: Younger | SCD: SCD60+ | SCD: Others | Total |
|---|---|---|---|---|---|---|
| Experimental (designing with saliency feedback) | Urban | 5.3 | 5.14 | 5.26 | 5.17 | 5.18 |
| | Rural | 5.28 | 5.19 | 5.3 | 5.2 | 5.21 |
| | Total | 5.29 | 5.17 | 5.28 | 5.19 | 5.2 |
| Control (designing without saliency feedback) | Urban | 5.16 | 5.03 | 4.92 | 5.08 | 5.06 |
| | Rural | 5.29 | 5.16 | 5.26 | 5.13 | 5.15 |
| | Total | 5.23 | 5.09 | 5.09 | 5.11 | 5.1 |

Q3. Suits the environment

| Condition | Environment | Age: Older | Age: Younger | SCD: SCD60+ | SCD: Others | Total |
|---|---|---|---|---|---|---|
| Experimental (designing with saliency feedback) | Urban | 5.41 | 5.14 | 5.18 | 5.21 | 5.21 |
| | Rural | 5.43 | 5.2 | 5.25 | 5.26 | 5.26 |
| | Total | 5.42 | 5.17 | 5.21 | 5.24 | 5.23 |
| Control (designing without saliency feedback) | Urban | 5.33 | 5.11 | 5 | 5.19 | 5.16 |
| | Rural | 5.43 | 5.15 | 5.25 | 5.21 | 5.21 |
| | Total | 5.38 | 5.13 | 5.13 | 5.2 | 5.19 |
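A quick descriptive reading of these tables is the gain from saliency feedback: the experimental mean minus the control mean in each environment. The sketch below computes this gain from the Total column. It is plain arithmetic on the reported means and is not a substitute for the mixed ANOVA in Appendix B.

```python
# Total-column means per condition and environment, copied from the Appendix A tables.
TOTAL_MEANS = {
    "Q1": {"experimental": {"Urban": 5.72, "Rural": 5.84},
           "control": {"Urban": 5.47, "Rural": 5.76}},
    "Q2": {"experimental": {"Urban": 5.18, "Rural": 5.21},
           "control": {"Urban": 5.06, "Rural": 5.15}},
    "Q3": {"experimental": {"Urban": 5.21, "Rural": 5.26},
           "control": {"Urban": 5.16, "Rural": 5.21}},
}


def feedback_gain(question: str, environment: str) -> float:
    """Experimental minus control mean rating, rounded to two decimals."""
    cell = TOTAL_MEANS[question]
    return round(cell["experimental"][environment] - cell["control"][environment], 2)


for q in TOTAL_MEANS:
    print(q, {env: feedback_gain(q, env) for env in ("Urban", "Rural")})
```

For Q1, the gain is 0.25 in urban areas versus 0.08 in rural areas. For Q2, it is 0.12 versus 0.06, whereas Q3 shows the same gain (0.05) in both environments. This pattern is consistent with the significant saliency feedback × environment interactions for Q1 and Q2 and the non-significant one for Q3 in Appendix B.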

Appendix B. Multi-Way Mixed ANOVA for Design Quality Assessment

| Source | df | F | p | Effect Size (ηp²) |
|---|---|---|---|---|
| Q1. Easy to find | | | | |
| Saliency feedback | 1 | 24.846 *** | <0.001 | 0.213 |
| Saliency feedback × Age | 1 | 1.868 | 0.175 | 0.020 |
| Saliency feedback × SCD | 1 | 1.410 | 0.238 | 0.015 |
| Environment | 1 | 24.120 *** | <0.001 | 0.208 |
| Environment × Age | 1 | 0.016 | 0.898 | 0.000 |
| Environment × SCD | 1 | 2.417 | 0.123 | 0.026 |
| Saliency feedback × Environment | 1 | 19.055 *** | <0.001 | 0.172 |
| Saliency feedback × Environment × Age | 1 | 0.087 | 0.768 | 0.001 |
| Saliency feedback × Environment × SCD | 1 | 2.282 | 0.134 | 0.024 |
| Error | 92 | | | |
| Q2. Aesthetic value | | | | |
| Saliency feedback | 1 | 8.800 ** | 0.004 | 0.087 |
| Saliency feedback × Age | 1 | 3.654 | 0.059 | 0.038 |
| Saliency feedback × SCD | 1 | 5.038 * | 0.027 | 0.052 |
| Environment | 1 | 2.546 | 0.114 | 0.027 |
| Environment × Age | 1 | 1.422 | 0.236 | 0.015 |
| Environment × SCD | 1 | 2.936 | 0.090 | 0.031 |
| Saliency feedback × Environment | 1 | 5.110 * | 0.026 | 0.053 |
| Saliency feedback × Environment × Age | 1 | 0.007 | 0.936 | 0.000 |
| Saliency feedback × Environment × SCD | 1 | 3.011 | 0.086 | 0.032 |
| Error | 92 | | | |
| Q3. Suits the environment | | | | |
| Saliency feedback | 1 | 2.780 | 0.099 | 0.029 |
| Saliency feedback × Age | 1 | 0.211 | 0.647 | 0.002 |
| Saliency feedback × SCD | 1 | 0.611 | 0.436 | 0.007 |
| Environment | 1 | 1.148 | 0.287 | 0.012 |
| Environment × Age | 1 | 0.252 | 0.617 | 0.003 |
| Environment × SCD | 1 | 0.820 | 0.367 | 0.009 |
| Saliency feedback × Environment | 1 | 1.130 | 0.291 | 0.012 |
| Saliency feedback × Environment × Age | 1 | 0.029 | 0.865 | 0.000 |
| Saliency feedback × Environment × SCD | 1 | 1.223 | 0.272 | 0.013 |
| Error | 92 | | | |

Note: * p < 0.05, ** p < 0.01, *** p < 0.001.
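Partial eta squared can be recovered from each F statistic and its degrees of freedom. Because F = (SS_effect/df_effect)/(SS_error/df_error), it follows that ηp² = SS_effect/(SS_effect + SS_error) = F·df_effect/(F·df_effect + df_error). The sketch below applies this identity to several rows of the table, assuming df_effect = 1 and the error df of 92 listed for Q1 and Q2. The rounded results match the reported effect sizes, which makes this a handy check against transcription errors.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (source, F, reported effect size) taken from the ANOVA table; df_effect = 1, df_error = 92.
ROWS = [
    ("Q1 Saliency feedback", 24.846, 0.213),
    ("Q1 Environment", 24.120, 0.208),
    ("Q1 Saliency feedback x Environment", 19.055, 0.172),
    ("Q2 Saliency feedback", 8.800, 0.087),
    ("Q2 Saliency feedback x SCD", 5.038, 0.052),
    ("Q3 Saliency feedback", 2.780, 0.029),
]

for source, f_value, reported in ROWS:
    recomputed = round(partial_eta_squared(f_value, 1, 92), 3)
    print(f"{source}: recomputed {recomputed}, reported {reported}")
```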

References

1. Caduff, D.; Timpf, S. On the assessment of landmark salience for human navigation. Cogn. Process. 2007, 9, 249–267.

2. Xu, R.; Wittkopf, S.; Roeske, C. Quantitative evaluation of BIPV visual impact in building retrofits using saliency models. Energies 2017, 10, 668.

3. Afacan, Y. Elderly-friendly inclusive urban environments: Learning from Ankara. Open House Int. 2013, 38, 52–62.

4. Raubal, M. Human wayfinding in unfamiliar buildings: A simulation with a cognizing agent. Cogn. Process. 2001, 3, 363–388.

5. Passini, R. Wayfinding design: Logic, application and some thoughts on universality. Des. Stud. 1996, 17, 319–331.

6. Good Practices of Accessible Urban Development. 2022. Available online: https://desapublications.un.org/publications/good-practices-accessible-urban-development (accessed on 19 December 2022).

7. Zhang, Y.; Xu, F.; Xia, T.; Li, Y. Quantifying the causal effect of individual mobility on health status in urban space. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2021, 5, 1–30.

8. Clare, L.; Nelis, S.M.; Quinn, C.; Martyr, A.; Henderson, C.; Hindle, J.V.; Jones, I.R.; Jones, R.W.; Knapp, M.; Kopelman, M.D.; et al. Improving the experience of dementia and enhancing active life – Living well with dementia: Study protocol for the IDEAL study. Health Qual. Life Outcomes 2014, 12, 164.

9. Lee, C.; Kim, S.; Han, D.; Yang, H.; Park, Y.-W.; Kwon, B.C.; Ko, S. GUIComp: A GUI design assistant with real-time, multi-faceted feedback. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020.

10. Mitchell, L.; Burton, E. Neighbourhoods for life: Designing dementia-friendly outdoor environments. Qual. Ageing Older Adults 2006, 7, 26–33.

11. Seetharaman, K.; Shepley, M.M.; Cheairs, C. The saliency of geographical landmarks for community navigation: A photovoice study with persons living with dementia. Dementia 2020, 20, 1191–1212.

12. Sheehan, B.; Burton, E.; Mitchell, L. Outdoor wayfinding in dementia. Dementia 2006, 5, 271–281.

13. Saha, M.; Saugstad, M.; Maddali, H.T.; Zeng, A.; Holland, R.; Bower, S.; Dash, A.; Chen, S.; Li, A.; Hara, K.; et al. Project Sidewalk: A web-based crowdsourcing tool for collecting sidewalk accessibility data at scale. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Scotland, UK, 4–9 May 2019.

14. Vainio, T.; Karppi, I.; Jokinen, A.; Leino, H. Towards novel urban planning methods – Using eye-tracking systems to understand human attention in urban environments. In Proceedings of the Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems, Scotland, UK, 4–9 May 2019.

15. Dupont, L.; Van Eetvelde, V. The use of eye-tracking in landscape perception research. In Proceedings of the Symposium on Eye Tracking Research and Applications, Safety Harbor, FL, USA, 26–28 March 2014.

16. Lin, H.; Chen, M. Managing and sharing geographic knowledge in virtual geographic environments (VGEs). Ann. GIS 2015, 21, 261–263.

17. Badwi, I.M.; Ellaithy, H.M.; Youssef, H.E. 3D-GIS parametric modelling for virtual urban simulation using CityEngine. Ann. GIS 2022, 28, 325–341.

18. Singh, S.P.; Jain, K.; Mandla, V.R. Image based Virtual 3D Campus modeling by using CityEngine. Am. J. Eng. Sci. Technol. Res. 2014, 2, 1–10.

19. Kroner, A.; Senden, M.; Driessens, K.; Goebel, R. Contextual encoder–decoder network for visual saliency prediction. Neural Netw. 2020, 129, 261–270.

20. Cooney, S.; Raghavan, B. Opening the gate to urban repair: A tool for citizen-led design. Proc. ACM Hum.-Comput. Interact. 2022, 6, 1–25.

21. Signorelli, V. Game engines in urban planning: Visual and sound representations in public space. In Proceedings of the International Conference Virtual City and Territory (7è: 2011: Lisboa), Lisbon, Portugal, 11–13 October 2011.

22. Indraprastha, A.; Shinozaki, M. The investigation on using Unity3D game engine in Urban Design Study. ITB J. Inf. Commun. Technol. 2009, 3, 1–18.

23. Shneiderman, B. Creativity support tools: Accelerating discovery and innovation. Commun. ACM 2007, 50, 20–32.

24. Frich, J.; MacDonald Vermeulen, L.; Remy, C.; Biskjaer, M.M.; Dalsgaard, P. Mapping the landscape of creativity support tools in HCI. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Scotland, UK, 4–9 May 2019.

25. Dupont, L.; Ooms, K.; Antrop, M.; Van Eetvelde, V. Comparing saliency maps and eye-tracking focus maps: The potential use in visual impact assessment based on landscape photographs. Landsc. Urban Plan. 2016, 148, 17–26.

26. Simon, L.; Tarel, J.-P.; Bremond, R. Alerting the drivers about road signs with poor visual saliency. In Proceedings of the 2009 IEEE Intelligent Vehicles Symposium, Xi’an, China, 3–5 June 2009.

27. Lee, S.W.; Krosnick, R.; Park, S.Y.; Keelean, B.; Vaidya, S.; O’Keefe, S.D.; Lasecki, W.S. Exploring real-time collaboration in crowd-powered systems through a UI design tool. Proc. ACM Hum.-Comput. Interact. 2018, 2, 1–23.

28. Kim, J.; Agrawala, M.; Bernstein, M.S. Mosaic: Designing online creative communities for sharing works-in-progress. In Proceedings of the 2017 ACM Conference on Computer Supported Cooperative Work and Social Computing, Portland, OR, USA, 25 February–1 March 2017.

29. Kim, J.; Sterman, S.; Cohen, A.A.; Bernstein, M.S. Mechanical novel: Crowdsourcing complex work through reflection and revision. In Proceedings of the 2017 ACM Conference on Computer Supported Cooperative Work and Social Computing, Portland, OR, USA, 25 February–1 March 2017.

30. Retelny, D.; Robaszkiewicz, S.; To, A.; Lasecki, W.S.; Patel, J.; Rahmati, N.; Doshi, T.; Valentine, M.; Bernstein, M.S. Expert crowdsourcing with Flash teams. In Proceedings of the 27th Annual ACM Symposium on User Interface Software and Technology, Honolulu, HI, USA, 5–8 October 2014.

31. Newman, A.; McNamara, B.; Fosco, C.; Zhang, Y.B.; Sukhum, P.; Tancik, M.; Kim, N.W.; Bylinskii, Z. TurkEyes: A web-based toolbox for crowdsourcing attention data. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020.

32. Borji, A.; Itti, L. State-of-the-art in visual attention modeling. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 185–207.

33. Borji, A. Saliency prediction in the Deep Learning Era: Successes and limitations. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 679–700.

34. Bylinskii, Z.; Kim, N.W.; O’Donovan, P.; Alsheikh, S.; Madan, S.; Pfister, H.; Durand, F.; Russell, B.; Hertzmann, A. Learning visual importance for graphic designs and data visualizations. In Proceedings of the 30th Annual ACM Symposium on User Interface Software and Technology, Québec City, QC, Canada, 22–25 October 2017.

35. Cheng, S.; Fan, J.; Hu, Y. Visual saliency model based on crowdsourcing eye tracking data and its application in visual design. Pers. Ubiquitous Comput. 2020.

36. Shen, C.; Zhao, Q. Webpage saliency. In Proceedings of the European Conference on Computer Vision (ECCV 2014), Zurich, Switzerland, 6–12 September 2014; pp. 33–46.

37. Leiva, L.A.; Xue, Y.; Bansal, A.; Tavakoli, H.R.; Köroğlu, T.; Du, J.; Dayama, N.R.; Oulasvirta, A. Understanding visual saliency in mobile user interfaces. In Proceedings of the 22nd International Conference on Human-Computer Interaction with Mobile Devices and Services, Oldenburg, Germany, 5–8 October 2020.

38. Ban, Y.; Lee, K. Re-enrichment learning: Metadata saliency for the evolutive personalization of a recommender system. Appl. Sci. 2021, 11, 1733.

39. Liu, Y.; Heer, J. Somewhere over the rainbow: An empirical assessment of quantitative colormaps. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018.

40. Luo, J.; Liu, P.; Cao, L. Coupling a physical replica with a digital twin: A comparison of participatory decision-making methods in an urban park environment. ISPRS Int. J. Geo-Inf. 2022, 11, 452.

41. Marthya, K.; Furlan, R.; Ellath, L.; Esmat, M.; Al-Matwi, R. Place-making of transit towns in Qatar: The case of Qatar National Museum-Souq Waqif Corridor. Designs 2021, 5, 18.

42. Carpman, J.R.; Grant, M.A. Wayfinding: A broad view. In Handbook of Environmental Psychology; John Wiley & Sons, Inc.: New York, NY, USA, 2002; pp. 427–442.

43. Montello, D.; Sas, C. Human factors of wayfinding in navigation. In International Encyclopedia of Ergonomics and Human Factors, Second Edition, 3 Volume Set; CRC Press: Boca Raton, FL, USA, 2006.

44. Costa, M.; Bonetti, L.; Vignali, V.; Bichicchi, A.; Lantieri, C.; Simone, A. Driver’s visual attention to different categories of roadside advertising signs. Appl. Ergon. 2019, 78, 127–136.

45. Moffat, S.D. Aging and spatial navigation: What do we know and where do we go? Neuropsychol. Rev. 2009, 19, 478–489.

46. Van Patten, R.; Nguyen, T.T.; Mahmood, Z.; Lee, E.E.; Daly, R.E.; Palmer, B.W.; Wu, T.-C.; Tu, X.; Jeste, D.V.; Twamley, E.W. Physical and mental health characteristics of adults with subjective cognitive decline: A study of 3407 people aged 18–81 years from an MTurk-based U.S. national sample. 2020.

47. Burgess, N.; Trinkler, I.; King, J.; Kennedy, A.; Cipolotti, L. Impaired allocentric spatial memory underlying topographical disorientation. Rev. Neurosci. 2006, 17, 239–251.

48. Kalová, E.; Vlček, K.; Jarolímová, E.; Bureš, J. Allothetic orientation and sequential ordering of places is impaired in early stages of Alzheimer’s disease: Corresponding results in real space tests and computer tests. Behav. Brain Res. 2005, 159, 175–186.

49. Cerman, J.; Andel, R.; Laczo, J.; Vyhnalek, M.; Nedelska, Z.; Mokrisova, I.; Sheardova, K.; Hort, J. Subjective spatial navigation complaints – A frequent symptom reported by patients with subjective cognitive decline, mild cognitive impairment and Alzheimer’s disease. Curr. Alzheimer Res. 2018, 15, 219–228.

50. Lee, B.; Srivastava, S.; Kumar, R.; Brafman, R.; Klemmer, S.R. Designing with interactive example galleries. In Proceedings of the 28th International Conference on Human Factors in Computing Systems (CHI ’10), Atlanta, GA, USA, 10–15 April 2010.

51. Bylinskii, Z.; Judd, T.; Oliva, A.; Torralba, A.; Durand, F. What do different evaluation metrics tell us about saliency models? IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 740–757.

52. Kümmerer, M.; Wallis, T.S.; Bethge, M. Saliency benchmarking made easy: Separating models, maps and metrics. In Proceedings of the European Conference on Computer Vision (ECCV 2018), Munich, Germany, 8–14 September 2018; pp. 798–814.

53. Jiang, M.; Huang, S.; Duan, J.; Zhao, Q. SALICON: Saliency in context. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015.

54. Wooding, D.S. Fixation maps: Quantifying eye-movement traces. In Proceedings of the Symposium on Eye Tracking Research & Applications (ETRA ’02), New Orleans, LA, USA, 25–27 March 2002.

55. Duchowski, A.T.; Price, M.M.; Meyer, M.; Orero, P. Aggregate gaze visualization with real-time heatmaps. In Proceedings of the Symposium on Eye Tracking Research and Applications, Santa Barbara, CA, USA, 28–30 March 2012.

56. Brooke, J. SUS: A ‘quick and dirty’ usability scale. Usability Eval. Ind. 1996, 189, 207–212.

57. Ahmed, S.; Wallace, K.M.; Blessing, L.T. Understanding the differences between how novice and experienced designers approach design tasks. Res. Eng. Des. 2003, 14, 1–11.

58. Dow, S.P.; Glassco, A.; Kass, J.; Schwarz, M.; Schwartz, D.L.; Klemmer, S.R. Parallel prototyping leads to better design results, more divergence, and increased self-efficacy. ACM Trans. Comput.-Hum. Interact. 2010, 17, 1–24.

59. Foong, E.; Gergle, D.; Gerber, E.M. Novice and expert sensemaking of crowdsourced design feedback. Proc. ACM Hum.-Comput. Interact. 2017, 1, 1–18.

60. Luther, K.; Tolentino, J.-L.; Wu, W.; Pavel, A.; Bailey, B.P.; Agrawala, M.; Hartmann, B.; Dow, S.P. Structuring, aggregating, and evaluating crowdsourced design critique. In Proceedings of the 18th ACM Conference on Computer Supported Cooperative Work & Social Computing, Vancouver, BC, Canada, 14–18 March 2015.

61. Liang, Q.; Wang, M.; Nagakura, T. Urban immersion: A web-based crowdsourcing platform for collecting urban space perception data. In Proceedings of the Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020.

62. Yan, B.; Janowicz, K.; Mai, G.; Gao, S. From ITDL to Place2Vec. In Proceedings of the 25th ACM SIGSPATIAL International Conference on Advances in Geographic Information Systems, Redondo Beach, CA, USA, 7–10 November 2017.

63. Kruse, J.; Kang, Y.; Liu, Y.-N.; Zhang, F.; Gao, S. Places for play: Understanding human perception of playability in cities using Street View images and Deep Learning. Comput. Environ. Urban Syst. 2021, 90, 101693.

64. Panagoulia, E. Open data and human-based outsourcing neighborhood rating: A case study for San Francisco Bay Area gentrification rate. In Lecture Notes in Geoinformation and Cartography; Springer: Berlin/Heidelberg, Germany, 2017; pp. 317–335.

65. Kittur, A.; Chi, E.H.; Suh, B. Crowdsourcing user studies with Mechanical Turk. In Proceedings of the Twenty-Sixth Annual CHI Conference on Human Factors in Computing Systems (CHI ’08), Florence, Italy, 5–10 April 2008.

66. Nebeling, M.; Speicher, M.; Norrie, M.C. CrowdStudy: General toolkit for crowdsourced evaluation of web interfaces. In Proceedings of the 5th ACM SIGCHI Symposium on Engineering Interactive Computing Systems (EICS ’13), London, UK, 24–27 June 2013.

67. O’Donovan, P.; Agarwala, A.; Hertzmann, A. DesignScape: Design with interactive layout suggestions. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, Seoul, Republic of Korea, 18–23 April 2015.

68. Oppenheimer, D.M.; Meyvis, T.; Davidenko, N. Instructional manipulation checks: Detecting satisficing to increase statistical power. J. Exp. Soc. Psychol. 2009, 45, 867–872.

69. DeSimone, J.A.; Harms, P.D. Dirty data: The effects of screening respondents who provide low-quality data in survey research. J. Bus. Psychol. 2017, 33, 559–577.

70. Huang, J.L.; Liu, M.; Bowling, N.A. Insufficient effort responding: Examining an insidious confound in survey data. J. Appl. Psychol. 2015, 100, 828–845.

71. Meade, A.W.; Craig, S.B. Identifying careless responses in survey data. Psychol. Methods 2012, 17, 437–455.

72. Jacinto, A.F.; Brucki, S.M.; Porto, C.S.; de Arruda Martins, M.; Nitrini, R. Subjective memory complaints in the elderly: A sign of cognitive impairment? Clinics 2014, 69, 194–197.

73. Kielb, S.; Rogalski, E.; Weintraub, S.; Rademaker, A. Objective features of subjective cognitive decline in a United States national database. Alzheimer’s Dement. 2017, 13, 1337–1344.

74. Yuan, A.; Luther, K.; Krause, M.; Vennix, S.I.; Dow, S.P.; Hartmann, B. Almost an expert: The effects of rubrics and expertise on perceived value of crowdsourced design critiques. In Proceedings of the 19th ACM Conference on Computer-Supported Cooperative Work & Social Computing, San Francisco, CA, USA, 27 February–2 March 2016.

75. Motamedi, A.; Wang, Z.; Yabuki, N.; Fukuda, T.; Michikawa, T. Signage visibility analysis and optimization system using BIM-enabled virtual reality (VR) environments. Adv. Eng. Inform. 2017, 32, 248–262.

76. Hunt, R.R. The subtlety of distinctiveness: What von Restorff really did. Psychon. Bull. Rev. 1995, 2, 105–112.

77. Kurosu, M.; Kashimura, K. Apparent usability vs. inherent usability: Experimental analysis on the determinants of the apparent usability. In Proceedings of the Conference Companion on Human Factors in Computing Systems (CHI ’95), Denver, CO, USA, 7–11 May 1995.

78. Tuch, A.N.; Roth, S.P.; Hornbæk, K.; Opwis, K.; Bargas-Avila, J.A. Is beautiful really usable? Toward understanding the relation between usability, aesthetics, and affect in HCI. Comput. Hum. Behav. 2012, 28, 1596–1607.

79. Fontana, A.M.F. Psychophysics study on conspicuity, aesthetics and urban environment harmony of traffic signs. Collect. Open Thesis Transp. Res. 2005, 2005, 4.

80. Mason, W.; Suri, S. Conducting behavioral research on Amazon’s Mechanical Turk. Behav. Res. Methods 2011, 44, 1–23.

81. Gazova, I.; Laczó, J.; Rubinova, E.; Mokrisova, I.; Hyncicova, E.; Andel, R.; Vyhnalek, M.; Sheardova, K.; Coulson, E.J.; Hort, J. Spatial navigation in young versus older adults. Front. Aging Neurosci. 2013, 5, 94.

82. Martin, C.L.; Momtaz, S.; Jordan, A.; Moltschaniwskyj, N.A. An assessment of the effectiveness of in-situ signage in multiple-use marine protected areas in providing information to different recreational users. Mar. Policy 2015, 56, 78–85.

83. Mishler, A.D.; Neider, M.B. Improving wayfinding for older users with selective attention deficits. Ergon. Des. Q. Hum. Factors Appl. 2016, 25, 11–16.

84. Yang, J.; Bao, X.; Zhu, Y.; Wang, D. Usability study of signage way-finding system in large public space. In Lecture Notes in Electrical Engineering; Springer: Berlin/Heidelberg, Germany, 2019; pp. 875–884.

85. Na, E.K.; Park, D.; Lee, D.H.; Yi, J.H. Color visibility of public wayfinding signs: Using the wayfinding sign of the Asia Culture Center. Arch. Des. Res. 2022, 35, 297–311.

86. Bangor, A.; Kortum, P.T.; Miller, J.T. An empirical evaluation of the system usability scale. Int. J. Hum.-Comput. Interact. 2008, 24, 574–594.

87. Luigi, M.; Massimiliano, M.; Aniello, P.; Gennaro, R.; Virginia, P.R. On the validity of immersive virtual reality as tool for multisensory evaluation of urban spaces. Energy Procedia 2015, 78, 471–476.

88. Borland, D.; Taylor II, R.M. Rainbow color map (still) considered harmful. IEEE Comput. Graph. Appl. 2007, 27, 14–17.

89. Zhou, L.; Hansen, C.D. A survey of colormaps in visualization. IEEE Trans. Vis. Comput. Graph. 2016, 22, 2051–2069.

90. Brewer, C.A. Color use guidelines for mapping and visualization. Vis. Mod. Cartogr. 1994, 2, 123–147.

91. Breslow, L.A.; Ratwani, R.M.; Trafton, J.G. Cognitive models of the influence of color scale on data visualization tasks. Hum. Factors J. Hum. Factors Ergon. Soc. 2009, 51, 321–338.

92. Vaccaro, K.; Huang, D.; Eslami, M.; Sandvig, C.; Hamilton, K.; Karahalios, K. The illusion of control: Placebo effects of control settings. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018.

93. Sitzmann, V.; Serrano, A.; Pavel, A.; Agrawala, M.; Gutierrez, D.; Masia, B.; Wetzstein, G. Saliency in VR: How do people explore virtual environments? IEEE Trans. Vis. Comput. Graph. 2018, 24, 1633–1642.

| Settings | Components | Advantages | Disadvantages |
|---|---|---|---|
| Mixed reality | Computer + USB camera for MR settings | Rapid construction of an ecologically valid environment; consistent with the saliency dataset | Difficult to simulate the pedestrian’s visual interaction with the surroundings |
| Virtual reality | Computer (+ digital twin 3D modeling) | Dynamic simulation from the virtual pedestrian’s viewpoint (e.g., walk, stop, watch) | Difficult to include natural visual distractions or on-site events in the experiment |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, G.; Yeo, D.; Lee, J.; Kim, S. Simulating Urban Element Design with Pedestrian Attention: Visual Saliency as Aid for More Visible Wayfinding Design. Land 2023, 12, 394. https://doi.org/10.3390/land12020394
