Enhancing Explainable AI Land Valuations Reporting for Consistency, Objectivity, and Transparency

Abstract

1. Introduction

2. Setting the Standard: Duty of Care and Data Integrity in AI-Enabled Valuation

2.1. Adhering to Best Practices in AI-Enabled Valuation

2.2. Emphasising Consistency Through Replicability

2.3. Cultivating Objectivity Through Rigorous Validation

2.4. Advancing Transparency and Governance Through Explainable AI (XAI)

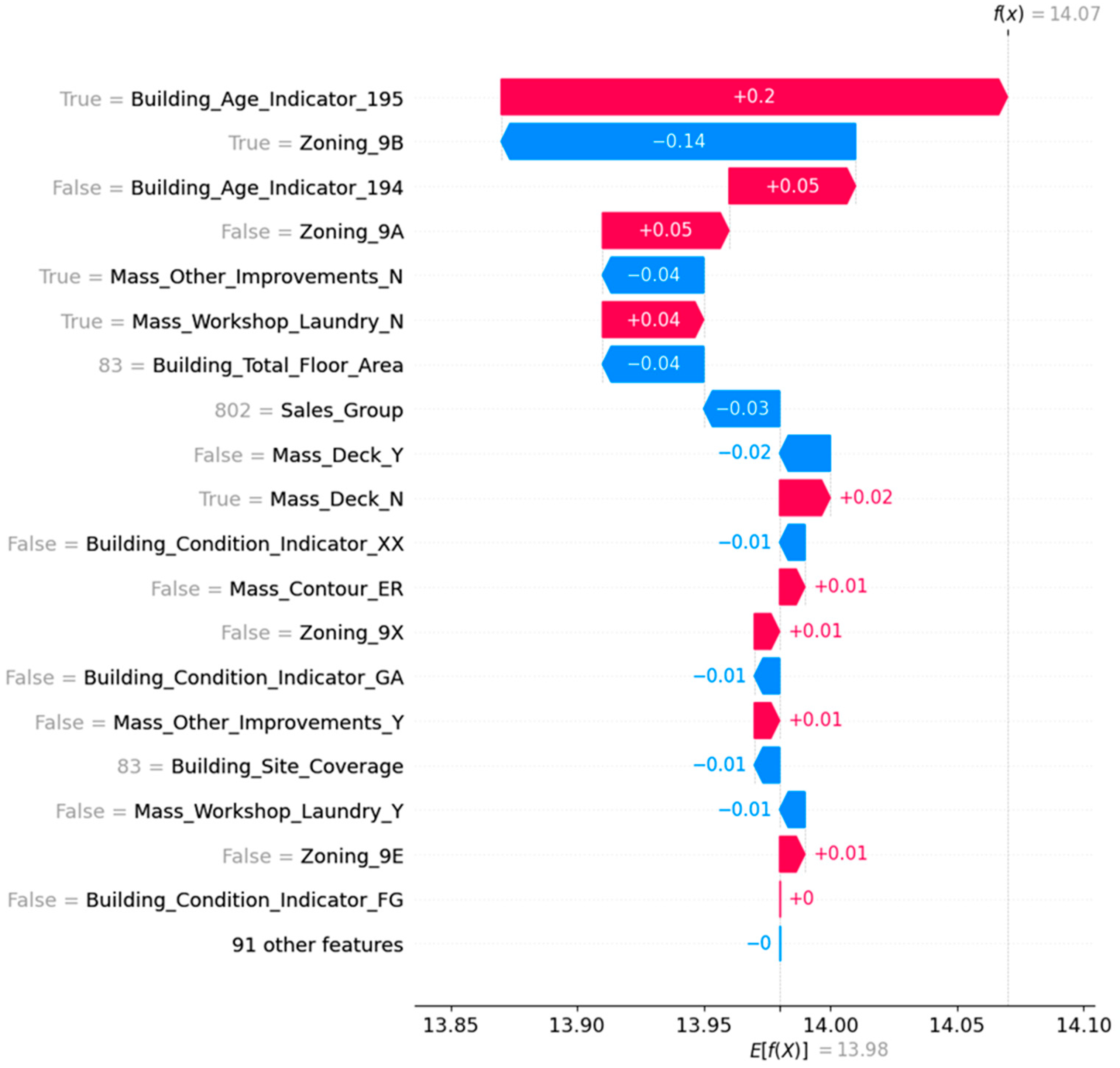

- Feature Contribution Analysis: This section details how much each feature contributed to the final valuation. For instance, it could quantify the effect of a building’s age or of its zoning on the property’s value;

- Model Decision Process: This section explains, in layman’s terms, how the model arrived at its valuation. By demystifying the AI’s workings, it makes the result more accessible and trustworthy;

- Scenario Simulation: This section projects how changes in key features might affect a property’s value. It can simulate scenarios such as market shifts, renovations, or changes in zoning laws, giving users valuable foresight.
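The Feature Contribution Analysis and Scenario Simulation components above can be illustrated with a minimal Python sketch. It assumes a hypothetical valuation function `toy_value` with made-up coefficients (not taken from any real model) and computes the exact Shapley value of each feature relative to a baseline property by enumerating every feature coalition; SHAP-style tools approximate Shapley values of this kind when exhaustive enumeration is too expensive. The helper `simulate` re-values the property after a what-if change. All feature names, coefficients, and property values are illustrative assumptions.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Dict, FrozenSet

Features = Dict[str, float]
Model = Callable[[Features], float]


def toy_value(x: Features) -> float:
    """Hypothetical valuation model; coefficients are illustrative only."""
    value = 300_000.0
    value += 150.0 * x["land_area_m2"]            # premium per square metre of land
    value -= 2_000.0 * x["building_age_yrs"]      # depreciation per year of building age
    value += 80_000.0 * x["residential_zoning"]   # 1 = residential zoning, 0 = other
    # Interaction term: the zoning uplift grows with land area
    value += 20.0 * x["land_area_m2"] * x["residential_zoning"]
    return value


def shapley_values(model: Model, instance: Features, baseline: Features) -> Features:
    """Exact Shapley value of each feature, relative to a baseline property."""
    names = list(instance)
    n = len(names)

    def coalition_value(coalition: FrozenSet[str]) -> float:
        # Features in the coalition take the subject's values; the rest keep the baseline's
        mixed = {k: instance[k] if k in coalition else baseline[k] for k in names}
        return model(mixed)

    contributions: Features = {}
    for feature in names:
        others = [k for k in names if k != feature]
        total = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                total += weight * (coalition_value(s | {feature}) - coalition_value(s))
        contributions[feature] = total
    return contributions


def simulate(model: Model, instance: Features, changes: Features) -> float:
    """Change in value when some features are altered (a what-if scenario)."""
    return model({**instance, **changes}) - model(instance)


baseline = {"land_area_m2": 600.0, "building_age_yrs": 30.0, "residential_zoning": 0.0}
subject = {"land_area_m2": 800.0, "building_age_yrs": 10.0, "residential_zoning": 1.0}

for name, phi in shapley_values(toy_value, subject, baseline).items():
    print(f"{name}: {phi:+,.0f}")
# land_area_m2: +32,000
# building_age_yrs: +40,000
# residential_zoning: +94,000

print(simulate(toy_value, subject, {"building_age_yrs": 20.0}))  # -20000.0
```

Because Shapley values are efficient, the three contributions sum to the gap between the subject's and the baseline's model values (166,000 in this toy case), which gives a report a simple reconciliation check. The 16,000 interaction uplift is shared between land area and zoning rather than being assigned to either feature alone.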

3. Industry Guidance on the Legal and Ethical Implications

3.1. Methodological Approach: Scoping Study for Framework Development

- Identifying the research question—in this case, how to structure and evaluate the transparency, consistency, and accountability of AI-generated property valuations;

- Identifying the relevant materials—including standards from professional bodies (e.g., IVSC, RICS), international guidelines (e.g., EU AI Act, ISO/IEC standards), and peer-reviewed and practitioner literature on XAI reporting;

- Study selection—prioritising sources that propose, critique, or implement explainability principles within decision-support tools or automated valuation models;

- Charting the data—extracting the common themes and criteria relevant to trustworthy and auditable AI reporting in property contexts;

- Collating and reporting the results—leading to the development of the proposed checklist as the practical output of this conceptual synthesis.

3.2. A Checklist for Machine Learning (ML) Valuation Reporting

3.3. Good Practices in Reporting of AI-Enabled Valuation

4. Discussion

4.1. Positioning and Future Development of the Checklist

4.2. Future Research Directions

4.3. Limitations and Adaptability

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| AMV | Automated Mass Valuation |
| AVM | Automated Valuation Model |
| ML | Machine Learning |
| RICS | Royal Institution of Chartered Surveyors |
| SHAP | SHapley Additive exPlanations |
| XAI | Explainable Artificial Intelligence |

Notes

1. [1975] 3 All ER 99.

2. [1990] UKHL 1; [1989] 2 WLR 790; [1990] 1 AC 831.

3. [1989] 1 EGLR 169.

References

- Terranova, C.; Cestonaro, C.; Fava, L.; Cinquetti, A. AI and professional liability assessment in healthcare. A revolution in legal medicine? Front. Med. 2023, 10, 1337335.

- RICS. Rules of Conduct; Royal Institution of Chartered Surveyors: London, UK, 2021.

- RICS. Risk, Liabilities and Insurance Guidance Notes; Royal Institution of Chartered Surveyors: London, UK, 2021.

- RICS. RICS Valuation—Global Standards; Royal Institution of Chartered Surveyors: London, UK, 2020.

- Jassar, S.; Adams, S.J.; Zarzeczny, A.; Burbridge, B.E. The future of artificial intelligence in medicine: Medical-legal considerations for health leaders. Healthc. Manag. Forum 2022, 35, 185–189.

- Courts of New Zealand. Guidelines for Use of Generative Artificial Intelligence in Courts and Tribunals: Judges, Judicial Officers, Tribunal Members and Judicial Support Staff; Courts of New Zealand: Wellington, New Zealand, 2023.

- Cheung, K.S. Real estate insights unleashing the potential of ChatGPT in property valuation reports: The “Red Book” compliance chain-of-thought (CoT) prompt engineering. J. Prop. Invest. Financ. 2023, 42, 200–206.

- Gichoya, J.W.; Thomas, K.; Celi, L.A.; Safdar, N.; Banerjee, I.; Banja, J.D.; Seyyed-Kalantari, L.; Trivedi, H.; Purkayastha, S. AI pitfalls and what not to do: Mitigating bias in AI. Br. J. Radiol. 2023, 96, 20230023.

- Schiff, D.; Borenstein, J. How should clinicians communicate with patients about the roles of artificially intelligent team members? AMA J. Ethics 2019, 21, 138–145.

- Khanna, S.; Srivastava, S. Patient-centric ethical framework for privacy, transparency, and bias awareness in deep learning-based medical systems. Appl. Res. Artif. Intell. Cloud Comput. 2020, 3, 16–35.

- Ball, P. Is AI leading to a reproducibility crisis in science? Nature 2023, 624, 22–25.

- Li, X.; He, Q.; Huang, W.; Tsim, S.-T.; Qiu, J.-W. Valuation of urban parks under the three-level park system in Shenzhen: A hedonic analysis. Land 2025, 14, 182.

- Jaroszewicz, J.; Horynek, H. Aggregated housing price predictions with no information about structural attributes—Hedonic models: Linear regression and a machine learning approach. Land 2024, 13, 1881.

- RICS. Comparable Evidence in Real Estate Valuation, 1st ed.; Royal Institution of Chartered Surveyors: London, UK, 2019.

- Arksey, H.; O’Malley, L. Scoping studies: Towards a methodological framework. Int. J. Soc. Res. Methodol. 2005, 8, 19–32.

- LINZS30300; Rating Valuations Rules 2008. Toitū Te Whenua—Land Information New Zealand: Wellington, New Zealand, 2010.

- International Valuation Standards Council (IVSC). International Valuation Standards; Royal Institution of Chartered Surveyors: London, UK, 2025; Available online: https://www.rics.org/profession-standards/rics-standards-and-guidance/sector-standards/valuation-standards/red-book/international-valuation-standards (accessed on 10 April 2025).

| Section/Topic | Checklist Item |

|---|---|

| Valuation Summary | Indicate AI’s role in the valuation exercise; summarise the methodology, data, and key findings. |

| Background and Objectives | Discuss the relevance of AI in enhancing property/land valuation accuracy and efficiency. |

| Modelling Steps |

|

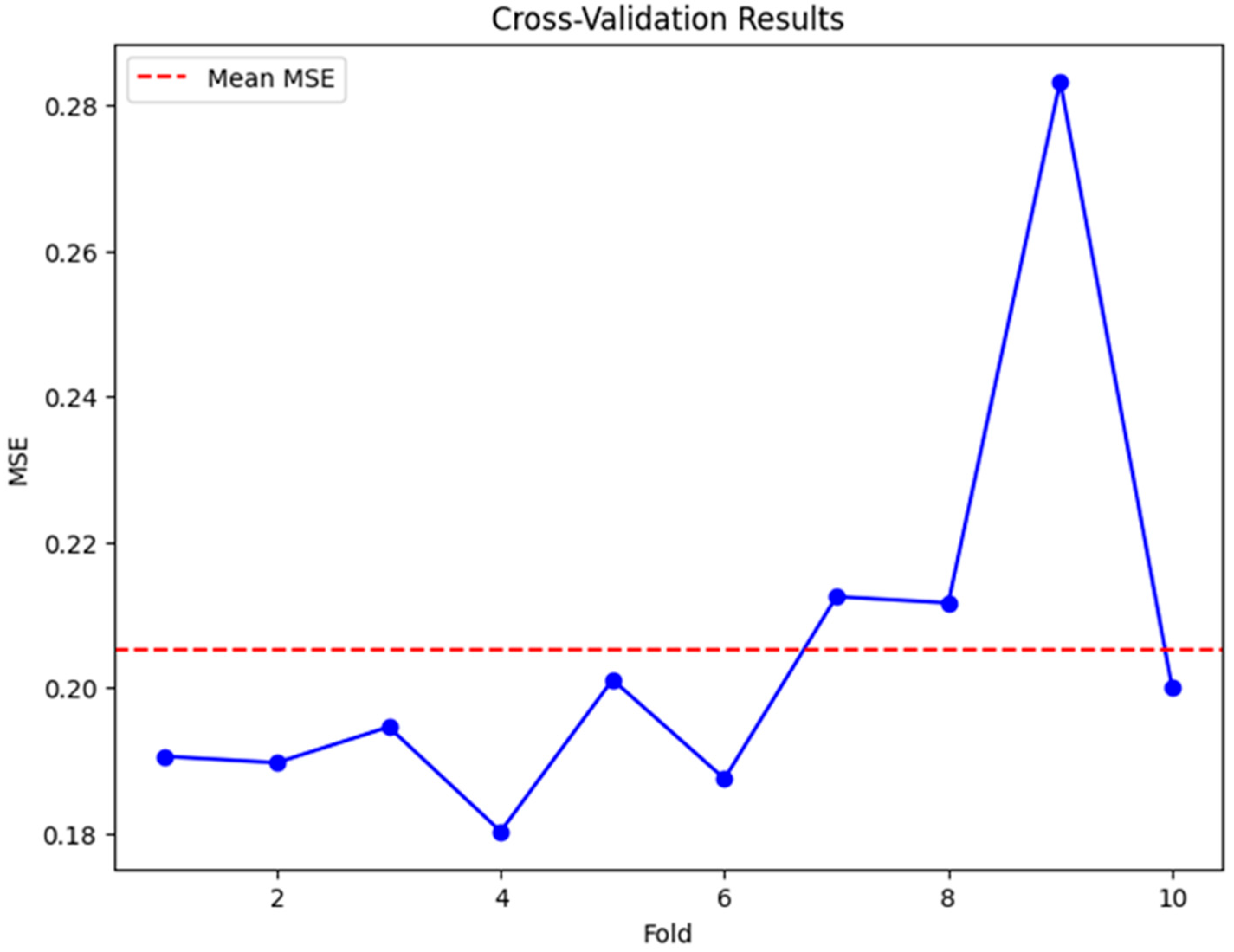

| Model Performance |

|

| Statistical Methods |

|

| Presentation of Models |

|

| Quality Assessment (Risk of Bias) |

|
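Because the Section/Topic column of the checklist is fixed, a reporting workflow could treat it as data and flag omitted sections before a valuation report is issued. The Python sketch below does only that: it assumes a hypothetical plain-text draft in which each section opens with its title on its own line, and it checks whether each section is present, not the quality of what it says. The function name `missing_sections` and the sample draft are illustrative assumptions.

```python
from typing import List

# Section/Topic column of the ML valuation reporting checklist
CHECKLIST_SECTIONS: List[str] = [
    "Valuation Summary",
    "Background and Objectives",
    "Modelling Steps",
    "Model Performance",
    "Statistical Methods",
    "Presentation of Models",
    "Quality Assessment (Risk of Bias)",
]


def missing_sections(report_text: str) -> List[str]:
    """List checklist sections that have no heading line in a draft report.

    Hypothetical convention: each section opens with a line containing exactly
    its title; matching ignores case and surrounding whitespace.
    """
    headings = {line.strip().casefold() for line in report_text.splitlines()}
    return [title for title in CHECKLIST_SECTIONS if title.casefold() not in headings]


# Made-up draft that covers only three of the seven sections
draft = """Valuation Summary
An ML model was used to estimate the land value (illustrative text).

Background and Objectives
Why AI was used for this valuation (illustrative text).

  model performance
Accuracy measures would be reported here (illustrative text).
"""

print(missing_sections(draft))
# ['Modelling Steps', 'Statistical Methods', 'Presentation of Models', 'Quality Assessment (Risk of Bias)']
```

Exact heading matching keeps the check transparent and easy to audit; a production workflow might instead read sections from a structured report template.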

| Field Text | Field Name | Field Number | Data Type |

|---|---|---|---|

| DVR—Valuation Number Roll DVR—Valuation Number Assessment DVR—Valuation Number Suffix | Valuation_Number_Roll | 1 | Numeric |

| Valuation_Number_Assessment | 2 | Numeric | |

| Valuation_Number_Suffix | 3 | Alpha | |

| DVR—District (Territorial Authority) Code DVR—Situation Number | District_Code | 4 | Numeric |

| Situation_Number | 5 | Numeric | |

| DVR—Additional Situation Number DVR—Situation Name DVR—Legal Description DVR—Land Area | Additional_Situation_Number | 6 | Alpha |

| Situation_Name | 7 | Alpha | |

| Legal_Description | 8 | Alpha | |

| Land_Area | 9 | Numeric | |

| DVR—Property Category DVR—Ownership Code | Property_Category | 10 | Alpha |

| Ownership_Code | 11 | Numeric | |

| DVR—Current Effective Valuation Date | Current_Effective_Valuation_Date | 12 | Numeric |

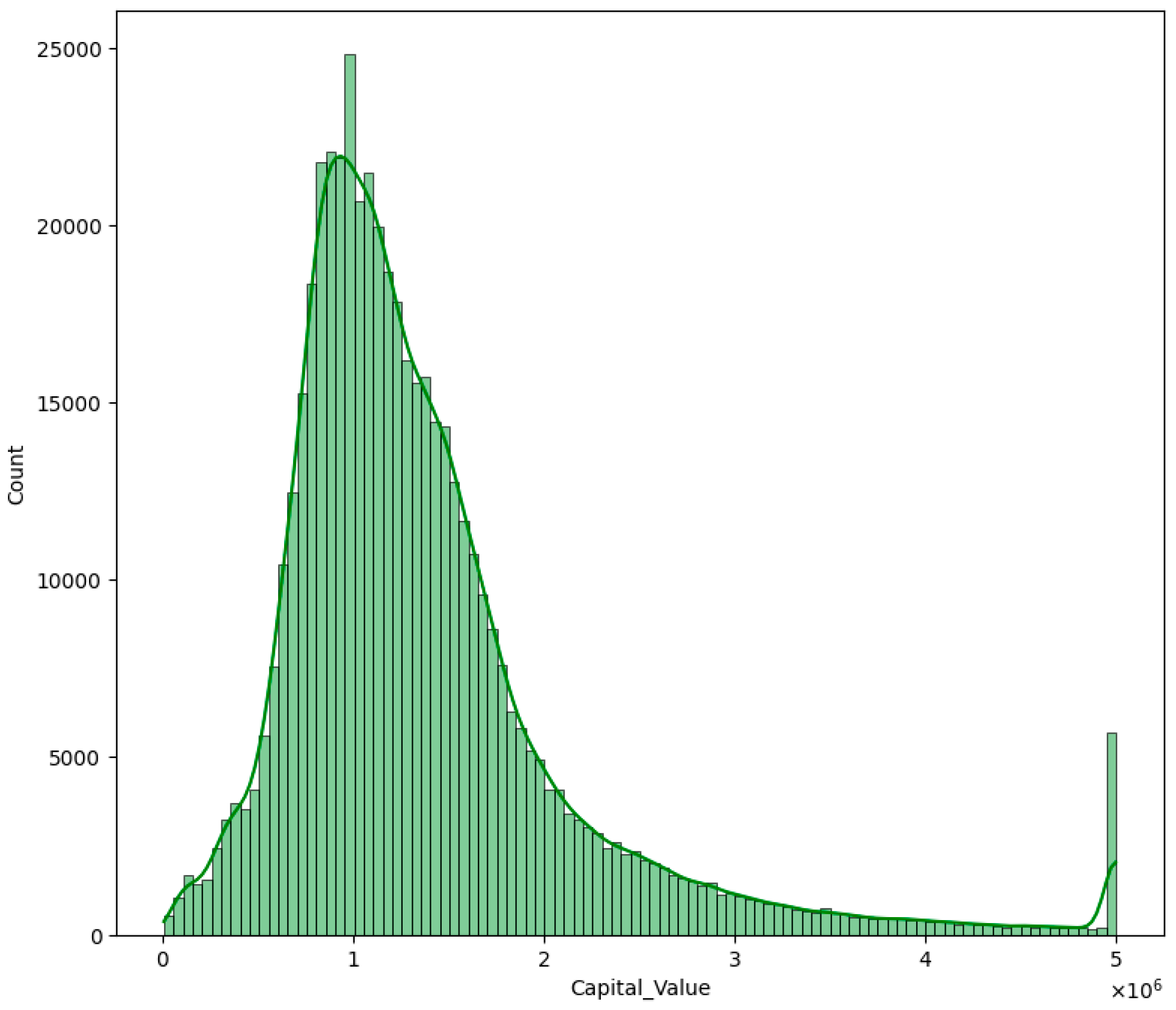

| DVR—Capital Value | Capital_Value | 13 | Numeric |

| DVR—Improvements Value | Improvements_Value | 14 | Numeric |

| DVR—Land Value | Land_Value | 15 | Numeric |

| DVR—Trees | Trees | 16 | Numeric |

| DVR—Improvements Description | Improvements_Description | 17 | Alpha |

| DVR—Certificate of Title | Certificate_of_Title | 18 | Alpha |

| DVR—Additional Certificate of Title | Additional_Certificate_of_Title | 19 | Alpha |

| DVR—Zoning | Zoning | 20 | Alpha |

| DVR—Actual Property Use | Actual_Property_Use | 21 | Numeric |

| DVR—Units of Use | Units_of_Use | 22 | Numeric |

| DVR—Off-street Parking | Off_street_Parking | 23 | Numeric |

| DVR—Building Age Indicator | Building_Age_Indicator | 24 | Alpha |

| DVR—Building Condition Indicator | Building_Condition_Indicator | 25 | Alpha |

| DVR—Building Construction Indicator | Building_Construction_Indicator | 26 | Alpha |

| DVR—Building Site Coverage | Building_Site_Coverage | 27 | Numeric |

| DVR—Building Total Floor Area | Building_Total_Floor_Area | 28 | Numeric |

| DVR—Mass Contour | Mass_Contour | 29 | Alpha |

| DVR—Mass View | Mass_View | 30 | Alpha |

| DVR—Mass Scope of View | Mass_Scope_of_View | 31 | Alpha |

| DVR—Mass Total Living Area | Mass_Total_Living_Area | 32 | Numeric |

| DVR—Mass Deck | Mass_Deck | 33 | Alpha |

| DVR—Mass Workshop Laundry | Mass_Workshop_Laundry | 34 | Alpha |

| DVR—Mass Other Improvements | Mass_Other_Improvements | 35 | Alpha |

| DVR—Mass Garage Freestanding | Mass_Garage_Freestanding | 36 | Numeric |

| DVR—Mass Garage Under Main Roof | Mass_Garage_Under_Main_Roof | 37 | Numeric |

| DVR—Sales Group | Sales_Group | 38 | Numeric |

| SAP—Formatted Street Number | Formatted_street_num | 39 | Alpha |

| SAP—Start House Number | Start_house_num | 40 | Alpha |

| SAP—Start House Number Suffix | Start_house_sfx | 41 | Alpha |

| SAP—Start Unit Number | Start_unit_num | 42 | Alpha |

| SAP—Start Unit Suffix | Start_unit_sfx | 43 | Alpha |

| SAP—Start Level Number | Start_level_num | 44 | Alpha |

| SAP—Start Level Number Suffix | Start_level_sfx | 45 | Alpha |

| SAP—End House Number | End_house_num | 46 | Alpha |

| SAP—End House Number Suffix | End_house_sfx | 47 | Alpha |

| SAP—End Unit Number | End_unit_num | 48 | Alpha |

| SAP—End Unit Number Suffix | End_unit_sfx | 49 | Alpha |

| SAP—End Level Number | End_level_num | 50 | Alpha |

| SAP—End Level Number Suffix | End_level_sfx | 51 | Alpha |

| SAP—Unit Prefix Number | Unit_prefix | 52 | Alpha |

| SAP—Street Name | Street_name | 53 | Alpha |

| SAP—Suburb Name | Suburb_name | 54 | Alpha |

| SAP—Property Postcode | Property_postcode | 55 | Alpha |
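Section 2 stresses data integrity, and one way to act on it is to encode the field table as a machine-readable schema and check raw records against its Numeric/Alpha types before modelling. The Python sketch below takes that approach under several assumptions: records arrive as dictionaries of strings (the actual file format is not stated here), only an illustrative subset of the 55 fields is encoded, a blank Numeric value counts as missing, and Alpha fields are accepted as-is. The names `Field`, `SCHEMA`, `is_numeric`, and `validate`, and the sample record, are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Field:
    name: str
    number: int
    data_type: str  # "Numeric" or "Alpha", as in the field table


# Illustrative subset of the 55-field layout
SCHEMA: List[Field] = [
    Field("Valuation_Number_Roll", 1, "Numeric"),
    Field("Valuation_Number_Suffix", 3, "Alpha"),
    Field("Land_Area", 9, "Numeric"),
    Field("Capital_Value", 13, "Numeric"),
    Field("Land_Value", 15, "Numeric"),
    Field("Zoning", 20, "Alpha"),
    Field("Building_Age_Indicator", 24, "Alpha"),
]


def is_numeric(text: str) -> bool:
    """True if the text parses as a finite number (rejects 'nan' and 'inf')."""
    try:
        return math.isfinite(float(text))
    except ValueError:
        return False


def validate(record: Dict[str, str]) -> List[str]:
    """Return data-integrity problems found in one raw record."""
    problems: List[str] = []
    for spec in SCHEMA:
        value = record.get(spec.name, "").strip()
        if spec.data_type == "Numeric":
            if not value:
                problems.append(f"{spec.number} {spec.name}: missing value")
            elif not is_numeric(value):
                problems.append(f"{spec.number} {spec.name}: expected Numeric, got {value!r}")
        # Assumption: Alpha fields accept any text, including blanks (e.g. no suffix)
    return problems


# Made-up record: Land_Value holds text where a number is expected
record = {
    "Valuation_Number_Roll": "12345",
    "Valuation_Number_Suffix": "",
    "Land_Area": "0.0650",
    "Capital_Value": "850000",
    "Land_Value": "n/a",
    "Zoning": "1A",
    "Building_Age_Indicator": "1990",
}
print(validate(record))
# ["15 Land_Value: expected Numeric, got 'n/a'"]
```

Reporting problems by field number and name ties each failure back to a row of the field table, which keeps the integrity check itself explainable.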

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yiu, C.Y.; Cheung, K.S. Enhancing Explainable AI Land Valuations Reporting for Consistency, Objectivity, and Transparency. Land 2025, 14, 927. https://doi.org/10.3390/land14050927

Yiu CY, Cheung KS. Enhancing Explainable AI Land Valuations Reporting for Consistency, Objectivity, and Transparency. Land. 2025; 14(5):927. https://doi.org/10.3390/land14050927

Chicago/Turabian Style: Yiu, Chung Yim, and Ka Shing Cheung. 2025. "Enhancing Explainable AI Land Valuations Reporting for Consistency, Objectivity, and Transparency" Land 14, no. 5: 927. https://doi.org/10.3390/land14050927

APA Style: Yiu, C. Y., & Cheung, K. S. (2025). Enhancing Explainable AI Land Valuations Reporting for Consistency, Objectivity, and Transparency. Land, 14(5), 927. https://doi.org/10.3390/land14050927
