Identification and Classification of Maize Drought Stress Using Deep Convolutional Neural Network

Abstract

1. Introduction

- To identify different drought stress levels in maize rapidly, accurately, and nondestructively, we propose using a deep convolutional neural network (DCNN) to identify and classify them. We compared transfer learning with training from scratch using the ResNet50 and ResNet152 models. The results show that transfer learning achieves higher identification accuracy than training from scratch and needs roughly half the training time.

- We built an image dataset for maize drought stress identification and classification. Digital cameras were used to capture images of drought-stressed maize in the field. Drought stress was divided into three levels: optimum moisture, light drought, and moderate drought. Images of the maize plants were captured every two hours at a size of 640 × 480 pixels. In total, 3461 images were collected across two growth stages (seedling and jointing), and the images were also converted to gray images so that color and gray inputs could be compared.

- We compared the accuracy of maize drought identification between the DCNN and a traditional machine learning approach based on manual feature extraction, using the same dataset. The results show that the DCNN was significantly more accurate than the traditional machine learning method, which relied on color and texture features.
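
The dataset description above mentions that the captured images were transformed into gray images, but not how. The following is a minimal sketch of one common conversion, assuming the ITU-R BT.601 luma weights; the weights and the helper names `rgb_to_gray` and `image_to_gray` are illustrative assumptions, not the authors' procedure.

```python
# Sketch only: the source does not state which gray conversion was used.
# ASSUMPTION: ITU-R BT.601 luma weights (0.299, 0.587, 0.114).

def rgb_to_gray(pixel):
    """Convert one (R, G, B) pixel with 0-255 channels to a gray level."""
    r, g, b = pixel
    return round(0.299 * r + 0.587 * g + 0.114 * b)


def image_to_gray(image):
    """Convert an image stored as rows of (R, G, B) tuples to gray levels."""
    return [[rgb_to_gray(pixel) for pixel in row] for row in image]


# A tiny 2 x 2 stand-in for a 640 x 480 field image.
tiny_image = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (255, 255, 255)],
]
gray_image = image_to_gray(tiny_image)
print(gray_image)
```

Green contributes most to the gray level under these weights, which is one reason a gray image of a plant canopy still carries much of the leaf texture while losing hue information.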

2. Materials and Methods

2.1. Field Experiment

2.2. Image Data Acquisition

2.3. DCNN Model

2.4. Training Process

3. Results

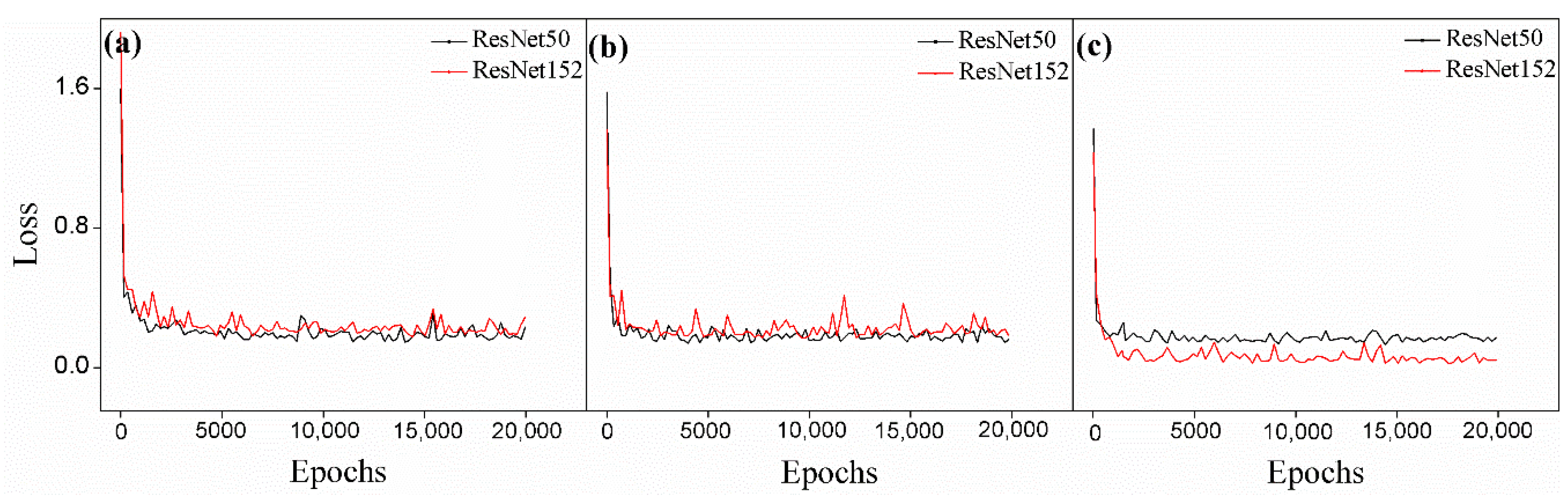

3.1. Comparison of Accuracy and Training Time of Different Models

3.2. Identification and Classification of Drought Stress in Maize by DCNN

3.3. A Comparison between DCNN and Traditional Machine Learning

4. Discussion

4.1. Extraction of the Phenotypic Characteristics of Maize by DCNN

4.2. Comparison of the Accuracy of Color and Gray Images of Maize at Different Stages

4.3. Misclassified Image Analysis

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Stage | Treatment | Repeat A | Repeat B | Repeat C | Total |
|---|---|---|---|---|---|
| Seedling (n = 1710) | Optimum moisture | 197 | 187 | 188 | 572 |
| Seedling (n = 1710) | Light drought | 196 | 187 | 186 | 569 |
| Seedling (n = 1710) | Moderate drought | 195 | 188 | 186 | 569 |
| Jointing (n = 1751) | Optimum moisture | 195 | 194 | 194 | 583 |
| Jointing (n = 1751) | Light drought | 198 | 195 | 196 | 589 |
| Jointing (n = 1751) | Moderate drought | 195 | 190 | 194 | 579 |
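
As a quick consistency check on the image counts in the table above, the per-repeat values can be summed and compared with the stated totals. This is a small sketch; the dictionary layout and variable names are illustrative choices, and the numbers are copied from the table.

```python
# Image counts per stage and treatment, as (repeat A, repeat B, repeat C).
counts = {
    "Seedling": {
        "Optimum moisture": (197, 187, 188),
        "Light drought": (196, 187, 186),
        "Moderate drought": (195, 188, 186),
    },
    "Jointing": {
        "Optimum moisture": (195, 194, 194),
        "Light drought": (198, 195, 196),
        "Moderate drought": (195, 190, 194),
    },
}

# Sum the three repeats for each treatment, then the treatments for each stage.
treatment_totals = {
    stage: {treatment: sum(repeats) for treatment, repeats in treatments.items()}
    for stage, treatments in counts.items()
}
stage_totals = {
    stage: sum(totals.values()) for stage, totals in treatment_totals.items()
}
grand_total = sum(stage_totals.values())

print(stage_totals)
print(grand_total)
```

The stage totals reproduce the n values given in the table, and the grand total equals the 3461 images reported for the dataset.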

| Stage | Image Type | Training Mode | ResNet50 Time (min) | ResNet50 Accuracy (%) | ResNet152 Time (min) | ResNet152 Accuracy (%) |
|---|---|---|---|---|---|---|
| Seedling | Color | TL | 7.59 | 98.23 ± 0.03 | 19.48 | 98.17 ± 0.14 |
| Seedling | Color | TFS | 16.35 | 95.67 ± 0.52 | 41.47 | 96.00 ± 0.25 |
| Seedling | Gray | TL | 7.58 | 96.58 ± 0.38 | 20.32 | 96.50 ± 0.43 |
| Seedling | Gray | TFS | 16.54 | 91.33 ± 1.38 | 42.15 | 93.67 ± 0.14 |
| Jointing | Color | TL | 7.58 | 97.58 ± 0.14 | 19.09 | 97.75 ± 0.25 |
| Jointing | Color | TFS | 16.16 | 95.67 ± 1.01 | 43.48 | 95.08 ± 0.63 |
| Jointing | Gray | TL | 7.40 | 96.58 ± 0.14 | 19.50 | 96.58 ± 0.29 |
| Jointing | Gray | TFS | 16.16 | 93.25 ± 1.39 | 41.20 | 94.00 ± 0.66 |
| Total | Color | TL | 11.28 | 96.00 ± 0.50 | 27.10 | 95.09 ± 0.30 |
| Total | Color | TFS | 23.55 | 95.99 ± 1.00 | 48.48 | 94.85 ± 1.31 |
| Total | Gray | TL | 10.42 | 94.95 ± 0.17 | 30.10 | 93.42 ± 0.25 |
| Total | Gray | TFS | 23.35 | 91.33 ± 0.50 | 57.18 | 92.04 ± 1.46 |

TL: transfer learning; TFS: train from scratch.
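
To put the training times in the table above into perspective, the ratio of train-from-scratch (TFS) time to transfer-learning (TL) time can be computed for each configuration. This is a small sketch using the tabulated values; the data layout and names are illustrative, and the time unit is assumed to be minutes.

```python
# (stage, image type) -> {model: (TL time, TFS time)}, values from the table.
# ASSUMPTION: times are in minutes.
times = {
    ("Seedling", "Color"): {"ResNet50": (7.59, 16.35), "ResNet152": (19.48, 41.47)},
    ("Seedling", "Gray"): {"ResNet50": (7.58, 16.54), "ResNet152": (20.32, 42.15)},
    ("Jointing", "Color"): {"ResNet50": (7.58, 16.16), "ResNet152": (19.09, 43.48)},
    ("Jointing", "Gray"): {"ResNet50": (7.40, 16.16), "ResNet152": (19.50, 41.20)},
    ("Total", "Color"): {"ResNet50": (11.28, 23.55), "ResNet152": (27.10, 48.48)},
    ("Total", "Gray"): {"ResNet50": (10.42, 23.35), "ResNet152": (30.10, 57.18)},
}

# Ratio > 1 means training from scratch took longer than transfer learning.
ratios = {
    (stage, image, model): tfs / tl
    for (stage, image), models in times.items()
    for model, (tl, tfs) in models.items()
}

smallest = min(ratios, key=ratios.get)
largest = max(ratios, key=ratios.get)
print(f"smallest ratio: {smallest} -> {ratios[smallest]:.2f}x")
print(f"largest ratio:  {largest} -> {ratios[largest]:.2f}x")
```

In every configuration the ratio is above 1, so training from scratch took longer than transfer learning throughout the table.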

| Treatment | Color Image: OM | Color Image: LD | Color Image: MD | Gray Image: OM | Gray Image: LD | Gray Image: MD |
|---|---|---|---|---|---|---|
| OM | 30 | 1 | 2 | 30 | 1 | 0 |
| LD | 1 | 29 | 0 | 5 | 25 | 0 |
| MD | 0 | 7 | 32 | 1 | 5 | 33 |

OM: optimum moisture; LD: light drought; MD: moderate drought.
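
A confusion matrix such as the one above can be reduced to an overall accuracy (diagonal sum over total) and to per-class rates. The sketch below does this for the gray image matrix only. It assumes that rows are the actual treatment and columns the predicted one; the source does not state the orientation, so the per-class values depend on that assumption, while the overall accuracy does not.

```python
labels = ["OM", "LD", "MD"]

# Gray image confusion matrix, copied from the table.
# ASSUMPTION: rows = actual treatment, columns = predicted treatment.
gray_matrix = [
    [30, 1, 0],
    [5, 25, 0],
    [1, 5, 33],
]

total = sum(sum(row) for row in gray_matrix)
correct = sum(gray_matrix[i][i] for i in range(len(labels)))
overall_accuracy = correct / total

# Per-class recall: correctly classified images divided by the row total.
recall = {
    label: gray_matrix[i][i] / sum(gray_matrix[i])
    for i, label in enumerate(labels)
}

print(f"overall accuracy: {overall_accuracy:.2%}")
for label, value in recall.items():
    print(f"recall {label}: {value:.2%}")
```

The diagonal holds 88 of the 100 gray images, which matches the 88.00 reported for gray images in the DC (%) row of the GBDT versus ResNet50 comparison table.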

| Metric | GBDT | ResNet50 (Color Image) | ResNet50 (Gray Image) |
|---|---|---|---|
| DI (%) | 90.39 | 98.00 | 93.00 |
| DC (%) | 80.95 | 91.00 | 88.00 |
| Time (s) | - | 6 | 6 |
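
The size of the gap between the two approaches in the table above can be read off as percentage-point differences. This is a small sketch using the tabulated DI and DC values; the variable names are illustrative.

```python
# Accuracy values (%) copied from the table.
gbdt = {"DI": 90.39, "DC": 80.95}
resnet50 = {
    "Color": {"DI": 98.00, "DC": 91.00},
    "Gray": {"DI": 93.00, "DC": 88.00},
}

# Percentage-point gain of ResNet50 over GBDT, rounded to two decimals.
gains = {
    (image, metric): round(value - gbdt[metric], 2)
    for image, metrics in resnet50.items()
    for metric, value in metrics.items()
}

for (image, metric), gain in gains.items():
    print(f"ResNet50 {image} vs GBDT, {metric}: +{gain:.2f} points")
```

In both image types the gain is larger for DC than for DI, so the advantage of the DCNN is more pronounced on the DC metric in this table.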

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

An, J.; Li, W.; Li, M.; Cui, S.; Yue, H. Identification and Classification of Maize Drought Stress Using Deep Convolutional Neural Network. Symmetry 2019, 11, 256. https://doi.org/10.3390/sym11020256
