Visual Tea Leaf Disease Recognition Using a Convolutional Neural Network Model

Abstract

1. Introduction

2. Materials and Methods

2.1. Disease Dataset

2.2. Performance Measurements

2.3. Convolutional Neural Networks

2.4. Dense SIFT-Based Bag of Visual Words (BOVW) Model

3. Results and Discussion

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Class | Number of Images from the Dataset Used for Training | Number of Images from the Dataset Used for Validation | Number of Images from the Dataset Used for Testing |

|---|---|---|---|

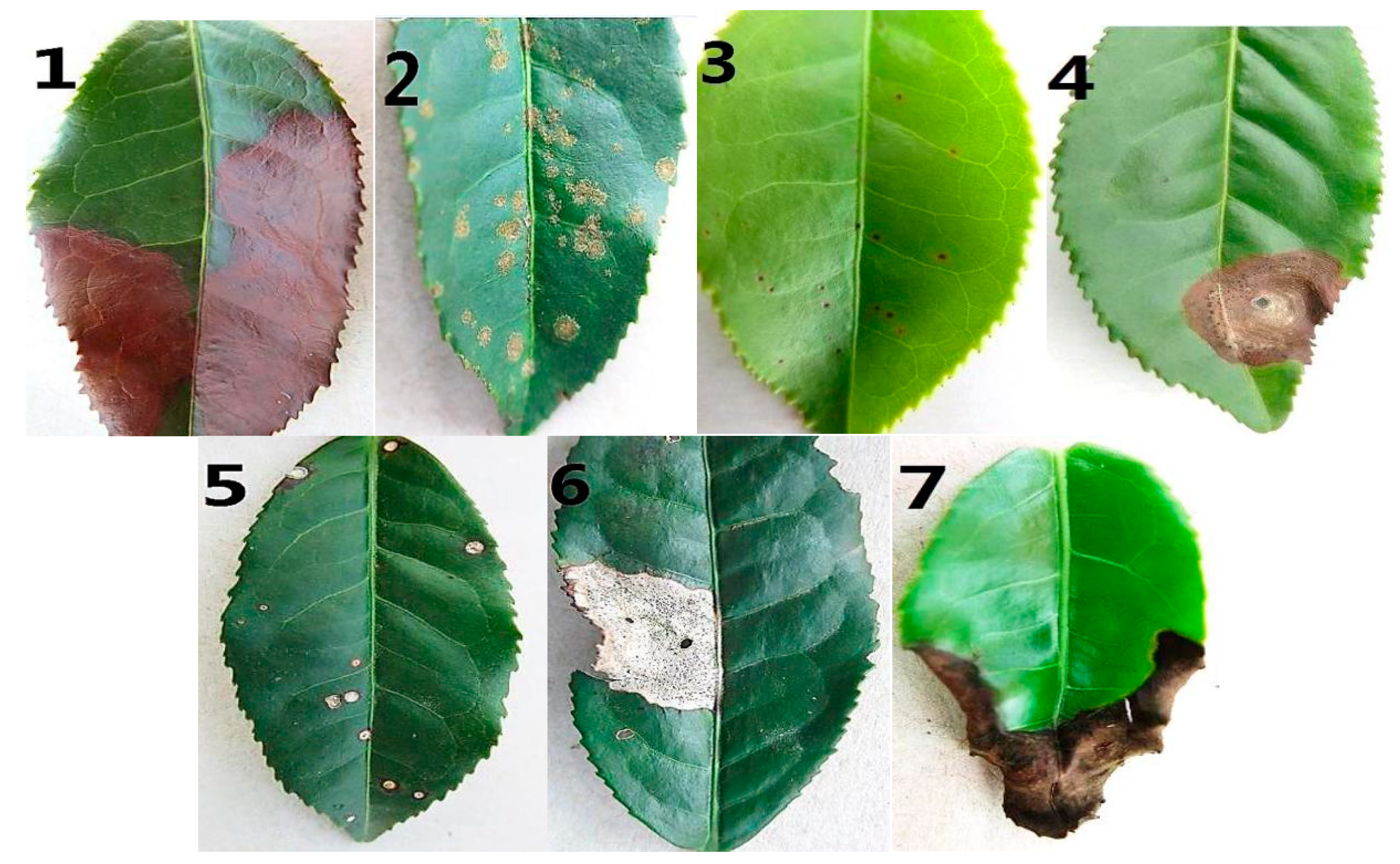

| (1) White spot | 941 | 118 | 117 |

| (2) Bird’s eye spot | 955 | 120 | 119 |

| (3) Red leaf spot | 890 | 111 | 111 |

| (4) Gray blight | 893 | 112 | 111 |

| (5) Anthracnose | 880 | 110 | 110 |

| (6) Brown blight | 920 | 115 | 115 |

| (7) Algal leaf spot | 846 | 106 | 105 |

| Total | 6325 | 792 | 788 |
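
The split proportions implied by the table above can be checked with a few lines of arithmetic. The sketch below copies the per-class counts from the table; the dictionary name `SPLITS` and the helper `split_fractions` are illustrative names, not taken from the article.

```python
# Per-class image counts copied from the table: (training, validation, testing).
SPLITS = {
    "White spot": (941, 118, 117),
    "Bird's eye spot": (955, 120, 119),
    "Red leaf spot": (890, 111, 111),
    "Gray blight": (893, 112, 111),
    "Anthracnose": (880, 110, 110),
    "Brown blight": (920, 115, 115),
    "Algal leaf spot": (846, 106, 105),
}


def split_fractions(counts):
    """Return each entry of `counts` as a fraction of their sum."""
    total = sum(counts)
    return tuple(c / total for c in counts)


# Column totals across all classes, then the overall split proportions.
totals = tuple(sum(column) for column in zip(*SPLITS.values()))
overall = split_fractions(totals)

for name, counts in SPLITS.items():
    shares = ", ".join(f"{f:.1%}" for f in split_fractions(counts))
    print(f"{name:16s} {sum(counts):5d} images ({shares})")

print("Totals:", totals, "of", sum(totals), "images")
print("Overall split:", ", ".join(f"{f:.1%}" for f in overall))
```

Under these counts, every class is divided in roughly the same training, validation, and testing proportions, so the test set keeps the class balance of the full dataset.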

| Layer | Parameter | Activation Function |
|---|---|---|
| Input | 227 × 227 × 3 | - |
| Convolution1 (Conv1) | 24 convolution filters (11 × 11), 4 stride | ReLU |
| Pooling1 (Pool1) | Max pooling (3 × 3), 2 stride | - |
| Convolution2 (Conv2) | 64 convolution filters (5 × 5), 1 stride | ReLU |
| Pooling2 (Pool2) | Max pooling (3 × 3), 2 stride | - |
| Convolution3 (Conv3) | 96 convolution filters (3 × 3), 1 stride | ReLU |
| Convolution4 (Conv4) | 96 convolution filters (3 × 3), 1 stride | ReLU |
| Convolution5 (Conv5) | 64 convolution filters (3 × 3), 1 stride | ReLU |
| Pooling5 (Pool5) | Max pooling (3 × 3), 2 stride | - |
| Full Connect6 (fc6) | 500 nodes, 1 stride | ReLU |
| Full Connect7 (fc7) | 100 nodes, 1 stride | ReLU |
| Full Connect8 (fc8) | 7 nodes, 1 stride | ReLU |
| Output | 1 node | Softmax |
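
To see how the layer parameters in the table shrink the 227 × 227 input, the feature-map sizes can be traced with the standard output-size formula, floor((size + 2 × padding − kernel) / stride) + 1. This is a minimal sketch, not the authors' implementation: the kernel sizes, strides, and filter counts come from the table, but the padding values are an assumption (AlexNet-style: 0 for Conv1, 2 for Conv2, 1 for Conv3 to Conv5), because the table does not state them.

```python
def out_size(size, kernel, stride, padding=0):
    """Spatial output size of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


# (name, kernel, stride, padding, output channels; None means pooling).
# Kernel, stride, and channel values are from the table.
# Padding values are ASSUMED (AlexNet-style); the table does not give them.
LAYERS = [
    ("Conv1", 11, 4, 0, 24),
    ("Pool1", 3, 2, 0, None),
    ("Conv2", 5, 1, 2, 64),
    ("Pool2", 3, 2, 0, None),
    ("Conv3", 3, 1, 1, 96),
    ("Conv4", 3, 1, 1, 96),
    ("Conv5", 3, 1, 1, 64),
    ("Pool5", 3, 2, 0, None),
]


def trace_shapes(size=227, channels=3):
    """Return (name, height, width, channels) after each layer."""
    shapes = []
    for name, kernel, stride, padding, out_channels in LAYERS:
        size = out_size(size, kernel, stride, padding)
        if out_channels is not None:  # pooling keeps the channel count
            channels = out_channels
        shapes.append((name, size, size, channels))
    return shapes


shapes = trace_shapes()
for name, height, width, depth in shapes:
    print(f"{name}: {height} x {width} x {depth}")

# Number of features entering fc6 once the last feature map is flattened.
_, height, width, depth = shapes[-1]
flattened = height * width * depth
print("fc6 input features:", flattened)
```

With the assumed padding, the feature maps shrink from 55 × 55 after Conv1 to 6 × 6 after Pool5, so fc6 would receive 2304 inputs. Different padding choices would change these numbers.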

| | White Spot | Bird’s Eye Spot | Red Leaf Spot | Gray Blight | Anthracnose | Brown Blight | Algal Leaf Spot | Sensitivity | Accuracy | MCA |
|---|---|---|---|---|---|---|---|---|---|---|
| White spot | 111 | 3 | 0 | 0 | 3 | 0 | 0 | 94.87% | 90.23% | 90.16% |
| Bird’s eye spot | 1 | 117 | 0 | 0 | 0 | 0 | 1 | 98.32% | | |
| Red leaf spot | 0 | 0 | 95 | 7 | 0 | 8 | 1 | 85.59% | | |
| Gray blight | 0 | 0 | 4 | 96 | 3 | 7 | 1 | 86.49% | | |
| Anthracnose | 5 | 0 | 1 | 6 | 97 | 1 | 0 | 88.18% | | |
| Brown blight | 0 | 1 | 15 | 2 | 0 | 97 | 0 | 84.35% | | |
| Algal leaf spot | 1 | 1 | 2 | 2 | 1 | 0 | 98 | 93.33% | | |
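
The sensitivity, accuracy, and MCA columns can be recomputed from the counts in the confusion matrix above. The sketch below assumes that rows are true classes and columns are predicted classes, and that MCA is the unweighted mean of the per-class sensitivities. Both readings are assumptions, adopted because they reproduce the percentages in the table. The function names are illustrative.

```python
CLASSES = [
    "White spot", "Bird's eye spot", "Red leaf spot", "Gray blight",
    "Anthracnose", "Brown blight", "Algal leaf spot",
]

# Counts copied from the table; ASSUMED layout: row = true class,
# column = predicted class.
CONFUSION = [
    [111, 3, 0, 0, 3, 0, 0],
    [1, 117, 0, 0, 0, 0, 1],
    [0, 0, 95, 7, 0, 8, 1],
    [0, 0, 4, 96, 3, 7, 1],
    [5, 0, 1, 6, 97, 1, 0],
    [0, 1, 15, 2, 0, 97, 0],
    [1, 1, 2, 2, 1, 0, 98],
]


def sensitivities(matrix):
    """Per-class sensitivity: correct predictions divided by the row total."""
    return [row[i] / sum(row) for i, row in enumerate(matrix)]


def accuracy(matrix):
    """Overall accuracy: diagonal sum divided by the number of test images."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / sum(sum(row) for row in matrix)


def mean_class_accuracy(matrix):
    """MCA, taken here as the unweighted mean of per-class sensitivities."""
    values = sensitivities(matrix)
    return sum(values) / len(values)


for name, value in zip(CLASSES, sensitivities(CONFUSION)):
    print(f"{name:16s} sensitivity = {value:.2%}")
print(f"Accuracy = {accuracy(CONFUSION):.2%}")
print(f"MCA      = {mean_class_accuracy(CONFUSION):.2%}")
```

Accuracy weights every test image equally, while MCA weights every class equally, so the two figures differ slightly whenever the classes have different numbers of test images.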

| | White Spot | Bird’s Eye Spot | Red Leaf Spot | Gray Blight | Anthracnose | Brown Blight | Algal Leaf Spot | Sensitivity | Accuracy | MCA |
|---|---|---|---|---|---|---|---|---|---|---|
| White spot | 79 | 11 | 0 | 2 | 19 | 1 | 5 | 67.52% | 60.91% | 60.62% |
| Bird’s eye spot | 12 | 89 | 0 | 4 | 1 | 10 | 3 | 74.79% | | |
| Red leaf spot | 2 | 4 | 59 | 23 | 2 | 19 | 2 | 53.15% | | |
| Gray blight | 0 | 0 | 13 | 70 | 8 | 17 | 3 | 63.06% | | |
| Anthracnose | 19 | 0 | 5 | 13 | 56 | 11 | 6 | 50.91% | | |
| Brown blight | 0 | 2 | 19 | 17 | 3 | 73 | 1 | 63.48% | | |
| Algal leaf spot | 9 | 10 | 12 | 13 | 3 | 4 | 54 | 51.43% | | |
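
A confusion matrix like the one above also shows which pairs of diseases a classifier mixes up most often. As a rough sketch, the off-diagonal cells can be ranked by count. It assumes rows are true classes and columns are predicted classes, and the helper name `top_confusions` is hypothetical.

```python
CLASSES = [
    "White spot", "Bird's eye spot", "Red leaf spot", "Gray blight",
    "Anthracnose", "Brown blight", "Algal leaf spot",
]

# Counts copied from the table; ASSUMED layout: row = true class,
# column = predicted class.
CONFUSION = [
    [79, 11, 0, 2, 19, 1, 5],
    [12, 89, 0, 4, 1, 10, 3],
    [2, 4, 59, 23, 2, 19, 2],
    [0, 0, 13, 70, 8, 17, 3],
    [19, 0, 5, 13, 56, 11, 6],
    [0, 2, 19, 17, 3, 73, 1],
    [9, 10, 12, 13, 3, 4, 54],
]


def top_confusions(matrix, names, k=3):
    """Return the k largest off-diagonal cells as (true, predicted, count).

    Ties are broken by row index, then column index, so the order is stable.
    """
    cells = [
        (-count, i, j)
        for i, row in enumerate(matrix)
        for j, count in enumerate(row)
        if i != j
    ]
    cells.sort()
    return [(names[i], names[j], -neg) for neg, i, j in cells[:k]]


top = top_confusions(CONFUSION, CLASSES)
for true_name, predicted_name, count in top:
    print(f"{true_name} predicted as {predicted_name}: {count} images")
```

In this matrix the largest single error is red leaf spot predicted as gray blight (23 images), followed by several pairs with 19 misclassified images each.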

| | White Spot | Bird’s Eye Spot | Red Leaf Spot | Gray Blight | Anthracnose | Brown Blight | Algal Leaf Spot | Sensitivity | Accuracy | MCA |
|---|---|---|---|---|---|---|---|---|---|---|
| White spot | 83 | 13 | 0 | 3 | 15 | 1 | 2 | 70.94% | 70.94% | 70.77% |
| Bird’s eye spot | 6 | 100 | 0 | 6 | 1 | 5 | 1 | 84.03% | | |
| Red leaf spot | 1 | 1 | 80 | 17 | 0 | 11 | 1 | 72.07% | | |
| Gray blight | 0 | 0 | 9 | 81 | 6 | 14 | 1 | 72.97% | | |
| Anthracnose | 13 | 0 | 4 | 10 | 73 | 8 | 2 | 66.36% | | |
| Brown blight | 0 | 5 | 16 | 15 | 3 | 75 | 1 | 65.22% | | |
| Algal leaf spot | 6 | 5 | 9 | 10 | 4 | 4 | 67 | 63.81% | | |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Chen, J.; Liu, Q.; Gao, L. Visual Tea Leaf Disease Recognition Using a Convolutional Neural Network Model. Symmetry 2019, 11, 343. https://doi.org/10.3390/sym11030343