1. Introduction

To provide a realistic experience, virtual reality (VR) supports interactions that engage a user's diverse senses, such as sight, hearing, and touch. Immersive VR, in particular, focuses on presence, whereby a user has a realistic sense of where he/she is, whom he/she is with, and what actions he/she is performing. To elevate a user's presence in immersive VR, it is first important to achieve visual realism by conveying 3D image information.

For this purpose, devices such as 3D monitors and head-mounted displays (HMDs) are used. In addition, to increase immersion by inducing user participation, studies have investigated methods that detect a user's actions and movements so that the user can interact more directly with the virtual environment or objects. Research on haptic systems has aimed not only to detect the movements of human body joints accurately and express them realistically in a virtual environment, but also to compute the physical processes and reactions involved, such as force and touch, and feed them back to the user. Furthermore, motion platforms have been studied that allow free walking through a spacious virtual environment within a limited real space. Lately, studies have drawn on psychology, neuroscience, and social phenomena to analyze the effects of VR on our lives.

In terms of increasing presence in immersive VR, how a user interacts with the virtual environment is important, but equally important is how diversely virtual scenes are provided to satisfy the user visually. However, hardware-oriented VR studies (haptic systems, motion platforms, etc.) on the realistic expression of user actions and movements built scenes only for their experiments, so in most cases the space was limited and the structure was simple. Because VR technology is applied to various areas such as fine arts and architectural design as well as games, dedicated studies are needed on creating complex virtual spaces suited to VR. To this end, studies have used procedural modeling [1,2,3] to automatically create virtual scenes, such as urban spaces and virtual landscapes, that have complex structures and configurations and distinct characteristics. However, few studies have addressed creating novel scenes for users' visual realism in immersive VR, or generating terrains suited to the VR experience environment.

Accordingly, this study aims to develop immersive VR based on visual realism that provides users with novel presence and experiences. For this purpose, we study a technique for creating maze terrains and design an authoring system that supports it. The system is user-oriented: it automatically calculates diverse maze patterns and generates terrains through an easy and intuitive structure. The proposed maze terrain authoring system consists of the following core functions:

- A maze pattern generation function that uses a maze creation algorithm to automatically calculate a maze pattern with a clear path from the entrance to the exit, which the user can then create and edit into the desired maze terrain through an easy and intuitive structure.

- A circular maze generation function that, for more diverse maze patterns, automatically generates a circular maze from simple parameters that the user can easily understand.

- A sketch-based maze transformation function that automatically converts the user's hand-drawn maze sketch image into a 3D maze terrain through morphological image-processing operations.

Based on these three core functions, a user can easily and intuitively create the desired 3D terrains with various maze patterns, including irregular ones.

2. Related Works

2.1. Immersive VR

Studies on immersive VR have taken various approaches to giving users realistic, multisensory experiences in a virtual environment. These include displays that convey 3D visual information, sound processing that improves spatial perception by using volumetric audio sources, methods for interacting more directly with the virtual environment, and haptic systems and motion platforms that feed physical reactions back to the body (hands, feet, etc.).

To provide improved presence through high immersion, interactions must be so realistic that the boundary between VR and reality becomes blurred [4,5]. To this end, studies have accurately detected the movements of human body joints in real space and analyzed the intent or course of those movements to reflect them in the virtual environment.

By attaching surface or optical markers to hand joints and detecting movements from the markers, many studies, including that of Metcalf et al. [6], have proposed methods of mapping the detected movements to the motions of a virtual hand model [7,8]. Recently, techniques that express realistic movements in virtual spaces have been developed, e.g., the sphere-mesh tracking model proposed by Remelli et al. [9] and Total Capture, which captures hand gestures, facial expressions, and whole-body movements, proposed by Joo et al. [10].

In addition, to express infinite walking in a limited indoor space, studies have been conducted on smooth assembled mapping [11] and a walking-in-place detection-based simulator [12]. More recently, methods have been developed to express the natural walking of multiple users sharing the same space [13].

Presence-oriented immersive VR studies are also proposing novel haptic systems that feed back the physical reactions that occur as the user interacts with the virtual environment. Related studies include 3-RSR (Revolute-Spherical-Revolute) [14] and 3-DoF (degrees of freedom) [15] wearable haptic devices and portable hand haptic systems [16]. These devices render tactile sensations delicately and improve the user's immersion in VR, thereby providing realistic experiences.

Building on the work of Slater et al. [17], studies on presence have examined a variety of cases. User experiences in VR have been analyzed from perspectives such as walking [18] and communication between users [19]. These studies have also made headway in analyzing convergence with other fields such as psychology and neuroscience, as well as the psychological effects of VR environments and conditions on user behavior and perception [20,21].

Furthermore, studies have analyzed the factors that improve presence in gaze- and hand-based interactions in immersive VR applications [4,5,22]. To improve the user's presence in immersive VR, visual satisfaction must come first. For this reason, interest is growing in multimodality studies [23,24] that consider visual and auditory senses, or visual and tactile senses, simultaneously, and in pseudo-haptics studies that combine visual experiences with haptic systems [25,26]. As such, various approaches have been taken to enhance presence in immersive VR. However, relatively few studies have addressed scene generation methods that focus on users' visual realism in immersive VR to provide new and diverse experiences.

2.2. Virtual Scene for Visual Realism

A virtual scene generation method that can provide improved presence along with new visual experiences is required to diversify immersive VR applications. In the future, VR scenes are expected to move beyond small, limited spaces such as indoor rooms to vast outdoor terrains such as cities and landscapes, giving users a greater scope to explore.

Vanegas et al. [1] defined urban spaces as collections of buildings, parcels, etc., composed of streets and roads, and surveyed many studies that efficiently generate and visualize such spaces through procedural modeling in computer graphics [27], shape grammars [28], and image-based modeling [29]. Dylla et al. [30] reconstructed a virtual city of a specific region, Rome. However, to prevent side effects such as VR motion sickness, immersive virtual scenes must consider rendering performance (refresh rate, frames per second (fps), etc.) in addition to realistic expression.

Considering this point, Kim [2] improved virtual landscape generation techniques [31,32] and proposed a modeling and optimization method suited to immersive VR. Jeong et al. [33,34] studied maze generation methods for creating new scenes in immersive VR.

With the goal of generating virtual maze scenes that provide new experiences and improved presence to users in immersive VR by increasing visual satisfaction, this study builds on these previous studies and proposes an optimized authoring system that can generate more diverse virtual maze scenes through an easy, intuitive, and user-oriented structure.

3. Maze Terrain Authoring System

The maze terrain authoring system of this study generates maze-based immersive virtual terrains, aiming to increase users' presence in immersive VR through visual realism and varied visual experiences. To this end, three core functions are designed that automatically calculate various maze patterns through an easy, intuitive, and user-oriented structure and convert them into the information required for 3D maze terrain generation.

First, a maze-generation-algorithm-based maze pattern generation function is defined whereby mazes of various sizes and patterns are automatically calculated and can be edited freely by the user. Next, a circular maze generation function is designed to escape the monotony of rectangular maze patterns. Lastly, a sketch-based maze transformation function is implemented to effectively convert irregular maze patterns into a maze terrain.

Figure 1 shows the proposed maze terrain authoring system. The user interface consists of the menu at the top, the 2D maze pattern information viewer on the lower left, and the 3D maze terrain viewer on the lower right. Based on the algorithms and calculation processes proposed in this study, the system is configured so that the user can effectively input and use the required parameters. The authoring system generates maze terrains from a height map, so users can control the resolution of the 3D maze terrain and hence its number of polygons. Thus, developers can effectively control conditions such as the refresh rate and fps, which matter when creating large maze terrains for immersive VR.

3.1. Maze Pattern Based on the Maze Generation Algorithm

The first function of the maze terrain authoring system automatically generates maze patterns by using a maze generation algorithm. An important requirement when generating a maze pattern automatically is that the maze be finite: several paths, or at least one definite path, to the exit must exist so that a user is not trapped inside the maze. This helps prevent VR sickness, a prominent issue in immersive VR, which can be triggered by the frustration and anxiety felt in a closed space, such as when trapped in a maze. This study designs this function of the authoring system based on the algorithm of Lee et al. [22], which calculates the maze pattern by using Prim's algorithm [35].

The finite maze generation algorithm of Lee et al. [22] starts by (1) setting a starting position and (2) randomly setting a road cell among the surrounding cells. Then, (3) it examines the four surrounding directions, registering the cells in the four directions around the road cell as a wall cell set and thereby defining the layer structure of the cells. It then repeats steps (4) and (5): it selects one of the registered wall cells and creates a road.
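The five steps above follow the familiar randomized Prim's pattern of growing a maze from a frontier of registered wall cells. The Python sketch below is a minimal, generic version of that pattern, not Lee et al.'s exact implementation. The grid convention (an odd-sized grid with cells on odd coordinates, `WALL = 1`, `ROAD = 0`) and the name `prim_maze` are assumptions made for illustration.

```python
import random

WALL, ROAD = 1, 0

def prim_maze(width, height, seed=None):
    """Carve a maze with randomized Prim's algorithm (hypothetical helper).

    Returns a height x width grid of WALL/ROAD values. Both dimensions must be
    odd: maze cells sit on odd coordinates and walls sit between them.
    """
    if width < 3 or height < 3 or width % 2 == 0 or height % 2 == 0:
        raise ValueError("width and height must be odd and at least 3")
    rng = random.Random(seed)
    grid = [[WALL] * width for _ in range(height)]

    def register_walls(y, x, frontier):
        # Register the walls in the four directions around road cell (y, x),
        # together with the cell that lies behind each wall.
        for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
            ny, nx = y + 2 * dy, x + 2 * dx
            if 0 < ny < height and 0 < nx < width:
                frontier.append((y + dy, x + dx, ny, nx))

    # Roughly steps (1)-(2): choose a random starting cell and make it a road.
    sy, sx = rng.randrange(1, height, 2), rng.randrange(1, width, 2)
    grid[sy][sx] = ROAD
    frontier = []
    register_walls(sy, sx, frontier)  # roughly step (3)

    # Roughly steps (4)-(5): repeatedly pick a registered wall at random.
    while frontier:
        i = rng.randrange(len(frontier))
        frontier[i], frontier[-1] = frontier[-1], frontier[i]
        wy, wx, ny, nx = frontier.pop()
        if grid[ny][nx] == WALL:  # the cell behind it is still unvisited
            grid[wy][wx] = ROAD   # open the wall ...
            grid[ny][nx] = ROAD   # ... and the newly reached cell
            register_walls(ny, nx, frontier)
    return grid


if __name__ == "__main__":
    for row in prim_maze(21, 11, seed=42):
        print("".join("#" if v == WALL else " " for v in row))
```

Because every newly reached cell opens exactly one wall, the carved roads form a spanning tree over the cells. Every cell is therefore reachable, and exactly one route joins any two cells, which is one way to guarantee the finite maze described above.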

Once the wall and road cells have been determined through these five steps, a 2D maze texture map is calculated and used as the height map of the 3D maze terrain. The menu of the authoring system exposes only the values that the user needs to adjust during the process from maze pattern calculation to maze terrain information generation.

Figure 2 shows the menu of the authoring system, composed of the parameters needed to generate maze patterns automatically with the maze generation algorithm. The user sets the desired maze size, and when the Maze button is pressed, the maze pattern is automatically calculated based on the algorithm of Lee et al. [22]. In addition, a 4Points button connects entrances at the ends of the four directions to the roads, so that the user can enter the maze terrain to experience it.
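The 4Points behavior can be approximated as follows. This is a hypothetical sketch: the text does not say how the system chooses entrance positions, so `open_four_entrances` simply opens a border cell near the middle of each side and carves inward until it meets an existing road. It assumes the same `WALL = 1`, `ROAD = 0` grid convention as a generated maze.

```python
WALL, ROAD = 1, 0

def open_four_entrances(grid):
    """Open one entrance near the middle of each border (hypothetical 4Points helper).

    From each border cell, cells are carved inward until an existing road is
    reached, so every entrance connects to the maze. Modifies grid in place and
    returns the four entrance coordinates (top, bottom, left, right).
    """
    h, w = len(grid), len(grid[0])
    mid_x = (w // 2) | 1  # odd index, aligned with maze cells on odd coordinates
    mid_y = (h // 2) | 1
    starts = [(0, mid_x, 1, 0), (h - 1, mid_x, -1, 0),
              (mid_y, 0, 0, 1), (mid_y, w - 1, 0, -1)]
    entrances = []
    for y, x, dy, dx in starts:
        entrances.append((y, x))
        # Carve toward the interior until a road cell (or the far edge) is hit.
        while 0 <= y < h and 0 <= x < w and grid[y][x] == WALL:
            grid[y][x] = ROAD
            y, x = y + dy, x + dx
    return entrances
```

Carving until a road is reached keeps the entrances connected even after the user has edited cells by hand, at the cost of possibly opening more than one cell per side.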

Thus far, we have shown how a maze pattern of the desired size is generated automatically and in diverse forms. When the user wants to edit the automatically generated pattern, the Editing checkbox is activated, and the user then edits the pattern directly by clicking with the mouse. Each click on the maze pattern information view toggles the clicked cell, converting a wall to a road or a road to a wall. Based on this, Figure 3 shows the process of generating a 2D maze texture map for 3D maze terrain generation. By inputting a margin value for the scenery surrounding the maze and a blur value (smooth edge) that controls the smoothness of the maze walls, the user extracts a 2D maze texture map of size $2^n \times 2^n$, as required for 3D maze terrain generation.
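A minimal sketch of turning the wall/road grid into a $2^n \times 2^n$ height map with a margin and a blur is shown below. The names `maze_to_heightmap` and `box_blur`, the nearest-neighbour sampling, and the choice of walls as height 1.0 are all assumptions. The use of a simple box blur for the smooth-edge setting is also an assumption, because the system's actual blur filter is not described.

```python
def box_blur(img, radius):
    """Average each pixel over a (2*radius+1)^2 window clipped to the image."""
    if radius <= 0:
        return [row[:] for row in img]
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            ys = range(max(0, y - radius), min(h, y + radius + 1))
            xs = range(max(0, x - radius), min(w, x + radius + 1))
            out[y][x] = sum(img[yy][xx] for yy in ys for xx in xs) / (len(ys) * len(xs))
    return out


def maze_to_heightmap(grid, n, margin, blur_radius):
    """Rasterize a WALL(1)/ROAD(0) grid into a 2**n x 2**n height map in [0, 1].

    margin: flat border (in pixels) left around the maze for surrounding scenery.
    blur_radius: box-blur radius standing in for the smooth-edge setting.
    """
    size = 2 ** n
    inner = size - 2 * margin
    if inner <= 0:
        raise ValueError("margin too large for a 2**n map")
    rows, cols = len(grid), len(grid[0])
    hm = [[0.0] * size for _ in range(size)]
    for py in range(inner):
        for px in range(inner):
            # Nearest-neighbour lookup of the maze cell under this pixel.
            cell = grid[py * rows // inner][px * cols // inner]
            hm[py + margin][px + margin] = float(cell)  # walls high, roads low
    return box_blur(hm, blur_radius)
```

A power-of-two size keeps the map compatible with texture and terrain pipelines that expect such dimensions. A larger blur radius rounds the wall edges at the cost of slightly narrowing the flat road surface.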

When a 2D maze texture map is generated, the maze terrain view of the authoring system displays the maze pattern in 3D. Here, the user can freely adjust the resolution of the 3D maze terrain and the terrain height derived from the height map to check the maze pattern. If the terrain function of a game engine such as Unity 3D is used, the maze terrain can be checked from the generated height map without any separate modification.
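The following sketch illustrates how height-map resolution controls polygon count. The hypothetical helper below builds a regular triangle grid from a height map, sampling every `step`-th pixel and scaling heights by `height_scale`. It is a generic sketch, not the system's or Unity's terrain code. Vertices use a y-up `(x, height, z)` convention, `step` is assumed to be at least 1, and triangle winding is not tuned for any particular engine.

```python
def heightmap_to_mesh(hm, step=1, height_scale=10.0, cell_size=1.0):
    """Build a regular triangle grid from a height map (hypothetical helper).

    step: sample every step-th pixel (the last row/column is always kept).
    Returns (vertices, triangles); vertices are (x, height, z), y-up.
    """
    def samples(length):
        idx = list(range(0, length, step))
        if idx[-1] != length - 1:
            idx.append(length - 1)  # keep the far edge so the mesh covers the map
        return idx

    rows, cols = samples(len(hm)), samples(len(hm[0]))
    vertices = [(x * cell_size, hm[y][x] * height_scale, y * cell_size)
                for y in rows for x in cols]
    nc = len(cols)
    triangles = []
    for i in range(len(rows) - 1):
        for j in range(nc - 1):
            a = i * nc + j  # top-left corner of this grid quad
            b, c, d = a + 1, a + nc, a + nc + 1
            triangles += [(a, c, b), (b, c, d)]  # two triangles per quad
    return vertices, triangles
```

Each grid quad yields two triangles, so an r × c vertex grid has 2(r − 1)(c − 1) triangles. For example, sampling a 1024 × 1024 map at every pixel gives 2 × 1023 × 1023 = 2,093,058 triangles, just above the 2 M budget discussed in Section 4. With `step=2`, the count drops to 2 × 512 × 512 = 524,288.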

Figure 4 illustrates checking the generated maze through the maze terrain view of the proposed authoring system. It also confirms that the maze terrain can be reproduced in a commercial engine such as Unity 3D from the maze texture map generated by the system.

3.2. Circular Maze Generation Method

The second function of the proposed maze terrain authoring system is circular maze generation. In addition to the rectangular grid-type maze pattern generation function, this method automatically generates circular mazes of various sizes and patterns, thereby expanding users' visual experiences in immersive VR. The process of distinguishing and setting up wall and road cells is basically identical. However, owing to the characteristics of circular maze patterns, the radius of the circular maze and the number of maze entrances are used as input information.

First, when a user inputs the desired maze radius, the wall cells of the circular maze are calculated automatically by using a midpoint circle drawing algorithm. Then, further circular walls are calculated repeatedly at a fixed interval inward from the outer circle. Next, based on the number of entrances entered by the user, passages are created from the outermost circle inward by rotating wall cells, once for each entrance.
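The ring walls can be rasterized with the standard integer midpoint circle algorithm. In the sketch below, `midpoint_circle` and `concentric_walls` are hypothetical names. The maze is assumed to be centered on `(radius, radius)`, and rings are drawn every `interval` cells inward while the radius stays positive. This mirrors the loop in Algorithm 1 as we read it, but the exact stopping condition there is not recoverable from the text.

```python
def midpoint_circle(cx, cy, r):
    """Integer midpoint circle algorithm: pixels approximating a circle of radius r."""
    points = set()
    x, y, d = r, 0, 1 - r
    while x >= y:
        # Mirror the computed octant point into all eight octants.
        for px, py in ((x, y), (y, x), (-y, x), (-x, y),
                       (-x, -y), (-y, -x), (y, -x), (x, -y)):
            points.add((cx + px, cy + py))
        y += 1
        if d < 0:
            d += 2 * y + 1      # midpoint inside the circle: keep x
        else:
            x -= 1              # midpoint outside: step x inward
            d += 2 * (y - x) + 1
    return points


def concentric_walls(radius, interval):
    """Wall cells of concentric rings every `interval` cells, centered at (radius, radius)."""
    if interval <= 0:
        raise ValueError("interval must be positive")
    walls, rings, r = set(), [], radius
    while r > 0:
        walls |= midpoint_circle(radius, radius, r)
        rings.append(r)
        r -= interval
    return walls, rings
```

The algorithm uses only integer additions per step and exploits eightfold symmetry, which keeps ring rasterization cheap even for large maze radii.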

On the outermost circle, as many cells as there are entrances are selected arbitrarily as entrance starting points. For each selected cell, a run of wall cells whose length equals the interval between the circular walls is selected and rotation-transformed just enough to touch the inner circular wall. Finally, the wall cell where the rotated segment touches the inner circular wall is found, and the above process is repeated from there.

Algorithm 1 shows the process of automatically generating a circular maze pattern based on the circular maze radius and number of entrances entered by a user.

Figure 5 shows the process of generating a circular maze with the authoring system using Algorithm 1. The maze texture map is generated from the circular maze pattern, and the maze terrain is visualized, in the same manner as in Section 3.1.

Algorithm 1. Circular maze generation algorithm design.

- 1: ← radius of the circular maze.
- 2: ← number of maze entrances.
- 3: procedure circular maze generation process(, )
- 4: ← interval between maze walls for the user road.
- 5: while do
- 6: define wall cells by using Bresenham's circle drawing algorithm.
- 7: = .
- 8: end while
- 9: ← number of drawn circles.
- 10: [] ← as many entrance cells as the number of entrances, selected from the wall cells of the outermost circle.
- 11: for i = , 0 do: generate passages in every circle.
- 12: for j = 0, do
- 13: select cells with a length of in entrance cell ([j]).
- 14: rotate the selected cells.
- 15: renew [j] by finding the cell that meets the inner circle after rotation.
- 16: end for
- 17: end for
- 18: end procedure
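The rotation step is described only informally, so the sketch below encodes one possible reading of it in polar coordinates rather than pixels. It makes three assumptions. First, on each ring, the passage is an arc of length `interval` starting at the current entrance angle. Second, the removed wall segment is rotated 90° about its far end so that it becomes a radial wall reaching the next inner ring. Third, that far end's angle becomes the entrance angle on the inner ring. The function name and the returned layout format are hypothetical.

```python
import math
import random

TWO_PI = 2 * math.pi

def circular_passages(radius, interval, num_entrances, seed=None):
    """One possible reading of the passage loop of Algorithm 1, in polar form.

    For every ring (outermost first) and every entrance it returns the removed
    arc (the passage) and the radial wall obtained by rotating that arc's wall
    segment about its far end. Angles are in radians; arcs may wrap past 2*pi.
    """
    if interval <= 0 or num_entrances < 1:
        raise ValueError("interval must be positive and num_entrances >= 1")
    rng = random.Random(seed)
    rings, r = [], radius
    while r > 0:
        rings.append(r)
        r -= interval
    # Entrance starting points chosen arbitrarily on the outermost ring.
    angles = [rng.uniform(0.0, TWO_PI) for _ in range(num_entrances)]
    layout = []
    for k, r in enumerate(rings):
        gaps, radial_walls = [], []
        for j, start in enumerate(angles):
            sweep = min(interval / r, TWO_PI)  # arc whose length is `interval`
            far = (start + sweep) % TWO_PI
            gaps.append((start, far))
            if k + 1 < len(rings):
                # The rotated segment spans from this ring to the next inner ring.
                radial_walls.append((far, r, rings[k + 1]))
            angles[j] = far  # renew the entrance for the inner ring
        layout.append({"radius": r, "gaps": gaps, "radial_walls": radial_walls})
    return layout
```

Under this reading, each passage starts where the previous radial wall meets the inner ring. The passages of successive rings are therefore offset in angle rather than aligned. Rasterizing the layout would reuse the ring walls from the midpoint circle step.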

3.3. Sketch-Based Maze Transform

The last function of the maze terrain authoring system is sketch-based maze transformation. The maze patterns of Section 3.1 and the circular mazes of Section 3.2 are both premised on generating uniform mazes. However, maze parks and maze theme parks that we experience in daily life often have irregular shapes. Therefore, this study designs a function that takes a user's hand-drawn sketch image as input, so that natural-looking maze terrains can be generated easily in addition to artificial-looking maze patterns. This function enables maze terrains to be used in various applications, such as wayfinding systems or virtual parks.

With the proposed transformation function, a user can draw a maze pattern in graphics software such as Photoshop or scan a sketch hand-drawn on paper. The saved image is then loaded into the authoring system and converted into a maze texture map by image-processing algorithms. The process is carried out in three steps.

The first step loads the sketch image into the authoring system and, to generalize the computations in the subsequent steps, converts it into a one-channel grayscale image. The second step classifies walls and roads through binarization, for which the threshold value is the key element. An adaptive threshold could be computed automatically from each input image. However, because the conditions under which input images are produced all differ, such automatic thresholds cannot classify walls and roads accurately.

Therefore, this study exposes the threshold value in the interface of the authoring system so that the user can control it intuitively. The third step fills holes that arise during binarization: a morphological closing operation fills the holes in the walls, producing a natural maze. A menu item lets the user control the number of iterations of the morphological operation.
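The three steps can be sketched in plain Python so that the example runs without extra dependencies. This sketch assumes that the image arrives as rows of `(r, g, b)` tuples, that dark ink marks walls, and that closing uses a 3 × 3 square structuring element. The helper names are hypothetical. In OpenCV (used by the system, per Section 4), comparable steps would use `cv2.cvtColor`, `cv2.threshold` with `cv2.THRESH_BINARY_INV` (because ink is darker than paper), and `cv2.morphologyEx` with `cv2.MORPH_CLOSE` and an `iterations` argument.

```python
def _morph(mask, op):
    """Apply a 3x3 dilation (op=max) or erosion (op=min), ignoring out-of-image pixels."""
    h, w = len(mask), len(mask[0])
    return [[op(mask[yy][xx]
                for yy in range(max(0, y - 1), min(h, y + 2))
                for xx in range(max(0, x - 1), min(w, x + 2)))
             for x in range(w)]
            for y in range(h)]


def sketch_to_wall_mask(rgb, threshold, close_iterations=1):
    """Convert an RGB sketch (rows of (r, g, b) tuples) into a wall mask (1 = wall)."""
    # Step 1: one-channel grayscale with ITU-R BT.601 luma weights.
    gray = [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in rgb]
    # Step 2: binarize with a user-chosen threshold; dark ink becomes wall.
    mask = [[1 if v < threshold else 0 for v in row] for row in gray]
    # Step 3: morphological closing (dilations, then the same number of erosions)
    # fills small holes and gaps in the drawn walls.
    for _ in range(close_iterations):
        mask = _morph(mask, max)
    for _ in range(close_iterations):
        mask = _morph(mask, min)
    return mask
```

More closing iterations bridge wider gaps in pencil strokes, but they can also merge walls that were drawn close together. This trade-off is why exposing the iteration count to the user is useful.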

Figure 6 shows, step by step, how the sketch-based maze transformation function generates a maze texture map from the user's hand-drawn maze sketch. After the third step, the maze texture map is produced with the margin and smoothing settings in the same way as described in Section 3.1.

4. Experimental Results and Analysis

The proposed maze terrain authoring system was implemented using Microsoft Visual Studio 2013, OpenCV 2.4.11, and DirectX SDK 9.0c. Unity 2017.3.1f1 (64-bit) was used to build finished maze terrains, with graphical work added, from the maze texture maps generated automatically by the authoring system. To check the maze terrains and experience the results in immersive VR, the Oculus Rift CV1 HMD and the Oculus SDK (ovr_unity_utilities 1.22.0) were integrated with the Unity 3D engine. The PC used for the experiments and implementation had an Intel Core i7-6700 CPU, 16 GB of RAM (random access memory), and a GeForce GTX 1080 GPU (graphics processing unit).

First, the process of generating a maze terrain through the maze terrain authoring system is examined.

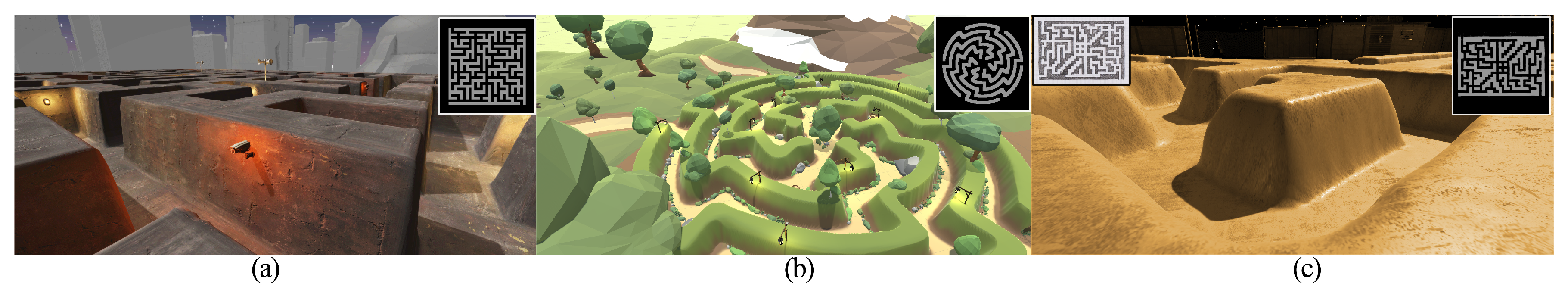

Figure 7 shows this process, in which a total of three virtual maze terrain scenes are composed. These terrains were generated from maze patterns calculated with the three core functions of the authoring system: the maze algorithm-based maze pattern function, the circular maze generation function, and the sketch-based maze transformation function. In addition, each virtual maze environment is given a distinct look by adding graphical elements according to its concept.

Next, we analyze the basic performance needed for a user to experience a generated maze terrain in VR without VR motion sickness. Unlike typical games, VR content must render two images per frame, one for each eye. Therefore, to minimize dizziness during scene transitions, the frame rate should not fall below 75 frames per second, and the total number of polygons in a rendered scene should not exceed 2 M.

Table 1 compares the frame rate of a basic maze terrain without graphical elements with that of maze terrains containing graphical elements. Measurements were taken at 10 different camera positions, and the average was recorded.

The number of polygons differed according to the graphical elements, which directly affects the frame rate. Therefore, performance was first measured without the graphical elements, and the differences were then compared after the graphical elements were placed. Considering users' VR motion sickness, all three virtual maze scenes kept the number of polygons under 2 M.

For the deserted city, polygon counts ranged from 0.248 M to 1.811 M, and the frame rate averaged 86.78 fps, 30.37 fps lower than that of the basic maze terrain. The casual land scene, which had the fewest polygons (0.101 M to 0.612 M), was 15.95 fps lower than its basic maze. Therefore, if the specifications of the graphical elements in a maze terrain are chosen carefully, the terrains meet the overall technical conditions for VR content.
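The two budgets can be checked with simple arithmetic. At 75 fps, each frame must finish within 1000/75 ≈ 13.33 ms. The hypothetical helpers below apply the 75 fps and 2 M polygon limits stated in this section to the deserted-city figures. An average frame rate can hide individual frame drops, so this is only a coarse check.

```python
MIN_FPS = 75.0            # lower bound on frame rate stated in this section
MAX_POLYGONS = 2_000_000  # upper bound on polygons per rendered scene

def frame_time_ms(fps):
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps

def meets_vr_budget(avg_fps, max_polygons):
    """Coarse check of a scene against both budgets (average fps hides frame drops)."""
    return avg_fps >= MIN_FPS and max_polygons <= MAX_POLYGONS

if __name__ == "__main__":
    # Deserted city figures from the text: 86.78 fps average, 1.811 M polygons max.
    print(f"budget {frame_time_ms(MIN_FPS):.2f} ms, measured {frame_time_ms(86.78):.2f} ms")
    print(meets_vr_budget(86.78, 1_811_000))
```

For the deserted city, the average frame time of about 11.52 ms leaves roughly 1.8 ms of headroom against the 13.33 ms budget.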

Lastly, survey-based experiments were performed to check whether the maze terrains generated with the proposed authoring system improved users' presence through high visual immersion in VR. A total of 15 participants aged 21–37 were randomly selected, and they experienced the provided maze environments while wearing the Oculus Rift CV1 HMD.

Figure 8 shows the experience environment of this study. Virtual characters were moved across the maze terrains through key inputs on the Oculus Touch controllers.

The first survey experiment analyzed the efficiency of and satisfaction with the system from the perspective of its users (developers). It checked whether the proposed authoring system handled maze terrain generation efficiently and conveniently for developers or level designers who produce VR scenes. Six people were randomly selected: two each from software developers, designers, and students majoring in software.

For each of the three functions of the authoring system, the time taken to produce the desired maze and a satisfaction score (1–5) were recorded.

Table 2 lists these results. For an objective comparison of the proposed authoring system, the time consumed for producing the maze terrains by using the terrain tool provided by the Unity 3D engine was also recorded.

First, the maze pattern calculation function took 47.17 s on average, including editing time. The circular maze function, which has no separate editing function, took the shortest time, 24.35 s on average. The sketch-based maze transformation function took the longest, 192.77 s on average, because the measurement included the time users spent drawing the maze pattern by hand. Large time deviations occurred depending on whether users had experience with hand drawing and with graphical tools such as Adobe Photoshop.

Finally, producing the desired maze terrain with the terrain tool of the Unity 3D engine took 1029.81 s on average. All six participants had used the Unity 3D engine at least once. Even so, generating maze terrains with the engine's terrain tool took a significant amount of time, because the maze patterns had to be planned and painted manually with brushes.

In terms of satisfaction, the first maze pattern function scored highest, because it offers both the generation of various maze patterns and an editing function. The sketch-based maze transformation function, which automatically transforms a hand-drawn maze image, ranked second despite taking longer. The circular maze function received low satisfaction because it offered fewer options for the user to select and its results were monotonous.

The second survey analyzed users' sense of visual realism. It aimed to check whether the virtual maze environments produced with the proposed authoring system provided satisfactory visual realism to users. From the questionnaire proposed by Witmer et al. [36], this study used four questions (4, 6, 7, and 13) related to "visual" in the realism category and recorded the responses.

Table 3 lists the survey values recorded by users. After experiencing all three maze terrains, the users rated their experience in each scene from 1 (NOT AT ALL) to 7 (COMPLETELY). Most ratings showed high visual satisfaction, close to 6. To check whether the graphical elements of the different concepts affected the experience of the terrains, statistical significance was tested with a one-way ANOVA (analysis of variance). The result was F(2,42) = 2.0712, with a p-value of 1.456 ×, confirming that there was no significant difference between the three scenes in terms of visual realism.
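A one-way ANOVA compares between-group variance with within-group variance. With 15 participants each rating 3 scenes, the degrees of freedom are (3 − 1, 45 − 3) = (2, 42), as reported. The sketch below computes the F statistic with hypothetical helper names. For the special case of 2 numerator degrees of freedom, the upper-tail probability has the closed form $p = (1 + 2F/d_2)^{-d_2/2}$, so no statistics library is needed. The ratings in the usage example are made up for illustration only.

```python
def one_way_anova(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA over lists of scores."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n
    means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between, df_within = k - 1, n - k
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within


def f_upper_tail_df1_2(f, df2):
    """P(F > f) for an F(2, df2) distribution: (1 + 2f/df2) ** (-df2/2)."""
    return (1.0 + 2.0 * f / df2) ** (-df2 / 2.0)


if __name__ == "__main__":
    # Made-up ratings for three scenes, for illustration only.
    f, d1, d2 = one_way_anova([[1, 2, 3], [2, 3, 4], [3, 4, 5]])
    print(f, d1, d2, f_upper_tail_df1_2(f, d2))  # F = 3.0 with df (2, 6), p = 0.125
```

For the reported F(2, 42) = 2.0712, this closed form gives p ≈ 0.14. That value is above the conventional 0.05 level, which is consistent with the conclusion of no significant difference.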

5. Limitation and Discussion

This study produced maze terrains of various concepts using the three functions of the proposed authoring system and tested the system performance required in immersive VR to verify their feasibility. In addition, a questionnaire survey was conducted to determine whether developers were sufficiently satisfied with the authoring system and could use it easily and intuitively. However, it was difficult to compare the proposed system directly with typical terrain-generating authoring systems or related software. A comparison was made with the terrain-generation function of the Unity 3D engine, which supports immersive VR applications, but comparisons with more diverse studies are needed for credible verification.

The current maze terrains can serve as backgrounds in entertainment and tourism, such as in games or maze parks, respectively. They can be further developed into serious games that train or analyze users' sense of space or direction, or that analyze or verify the performance of wayfinding systems. However, additional research is needed on functions that match the purposes of other application areas.

6. Conclusions

The maze terrain authoring system proposed in this study aimed to provide new presence by increasing users' immersion through visual realism in immersive VR. Accordingly, the system was designed to generate various maze terrains efficiently through a user-oriented, intuitive structure, and its design was organized into three functions.

Firstly, a maze-generation-algorithm-based maze pattern generation function was implemented in the authoring system to automatically calculate the maze patterns of various sizes when generating maze terrains of a grid type and to edit them in a desired shape easily and intuitively. To express a circular maze terrain, a circular maze generation algorithm was defined and provided as a function of the authoring system. Lastly, a sketch-based maze transformation function was designed so that irregular maze terrains could be expressed in contrast to the uniform feeling provided by maze patterns generated by the algorithms.

In this method, a user draws a desired maze pattern by hand, and the information required to generate the maze terrain is calculated automatically by image-processing algorithms. The authoring system was completed by implementing a viewer that displays the 3D maze terrain in real time, based on the maze pattern calculated with the three functions. To analyze users' visual realism in immersive VR, maze terrains were produced directly from the maze texture maps generated by the authoring system, and these terrains satisfy the technical performance required by VR applications. VR applications require higher performance (more than 75 fps) than interactive systems such as games, because rendering delays can trigger VR sickness. In this respect, tests verified that the maze terrains produced in this study are suitable for use in VR applications.

Moreover, survey experiments were conducted with general participants. First, regarding system performance, comparative tests with the terrain function of a commercial engine confirmed that the proposed authoring system enabled users (developers) to produce the desired maze terrains easily and intuitively. This shortened the required time and led to high user satisfaction with the system. Second, questions from the realism category of a presence questionnaire confirmed that maze terrains in immersive VR could offer novel visual experiences to users. A statistical analysis also showed that all terrains produced with the three proposed functions were rated satisfactory, with no significant differences between them, which may improve presence. In the future, the system will be further developed to provide a large-scale VR maze experience environment, such as a maze park or theme park, where multiple users can experience the maze space together.