OpinionML—Opinion Markup Language for Sentiment Representation

Abstract

1. Introduction

Motivation and Contribution

- We propose OpinionML, a new sentiment annotation markup language,

- We also propose an OpinionML ontology that supports the OpinionML markup structure,

- We provide a comparison of existing sentiment annotation markup languages,

- We present a state-of-the-art comparison of the proposed OpinionML ontology with existing sentiment ontologies,

- We perform a series of experiments to highlight the efficacy of the proposed OpinionML markup language.

- It provides support for major challenges of opinion mining, such as contextual ambiguities, temporal expressions, and opinion holders,

- It is easy to understand and modify,

- It has strong ontological support,

- It provides support for external resources such as linked data and knowledge bases.

2. Opinion Mining and Previous Annotation Schemes

2.1. Why Opinion Mining is Difficult

2.2. Previous Opinion Annotation Schemes

2.2.1. SentiML

SentiML Example

Listing 1: SentiML example

2.2.2. OpinionMining-ML

OpinionMining-ML Example

Listing 2: OpinionMining-ML example

2.2.3. EmotionML

EmotionML Example

Listing 3: EmotionML example

- Scope: EmotionML is a markup language and a W3C standard that covers the entire range of emotion features in all relevant fields. OpinionMining-ML and SentiML, on the other hand, are limited to the domains of IR and NLP. Of these two, SentiML has the larger scope, since OpinionMining-ML is limited to feature-based sentiment analysis.

- Complexity: EmotionML is considered multifaceted and less user-friendly because its scope is larger than that of the other two annotation schemes. The vocabulary of the SentiML annotation scheme is easier to use than that of OpinionMining-ML.

- Vocabulary: The annotations in EmotionML can, in general, be extended to include new and broader vocabulary. SentiML and OpinionMining-ML, on the other hand, are more specific. The role of SentiML is limited to capturing the modifiers and targets of sentiments, while OpinionMining-ML is equipped with meta-tags and is mainly concerned with extracting sentiment-relevant features of objects.

- Structure: The structure of all three annotation schemes is based on XML. However, in OpinionMining-ML, the granularities are well defined at the feature level.

- Contextual Ambiguities: Like the other two schemes, SentiML defines the semantics of affective expressions such as appreciation, suggestion, etc. Furthermore, SentiML assists in identifying contextual ambiguities, which are currently considered a major research issue in the field of opinion mining.

- Completeness: Completeness [5] is one of the main characteristics of an annotation. This property concerns whether an annotation covers all or most real-world scenarios. None of SentiML, EmotionML and OpinionMining-ML seems to satisfy it. SentiML and EmotionML both apply annotations to sentiment expressions, while OpinionMining-ML targets the corresponding features of objects. Unfortunately, it does not address the contextual aspects of opinions, which are also considered challenging issues in opinion mining.

- Flexibility: EmotionML provides an independent way of selecting the vocabulary of emotional states that suits a particular domain. OpinionMining-ML relies solely on the procedure of EmotionML and makes it possible to build a domain-specific ontology as required, while SentiML lacks such flexibility.

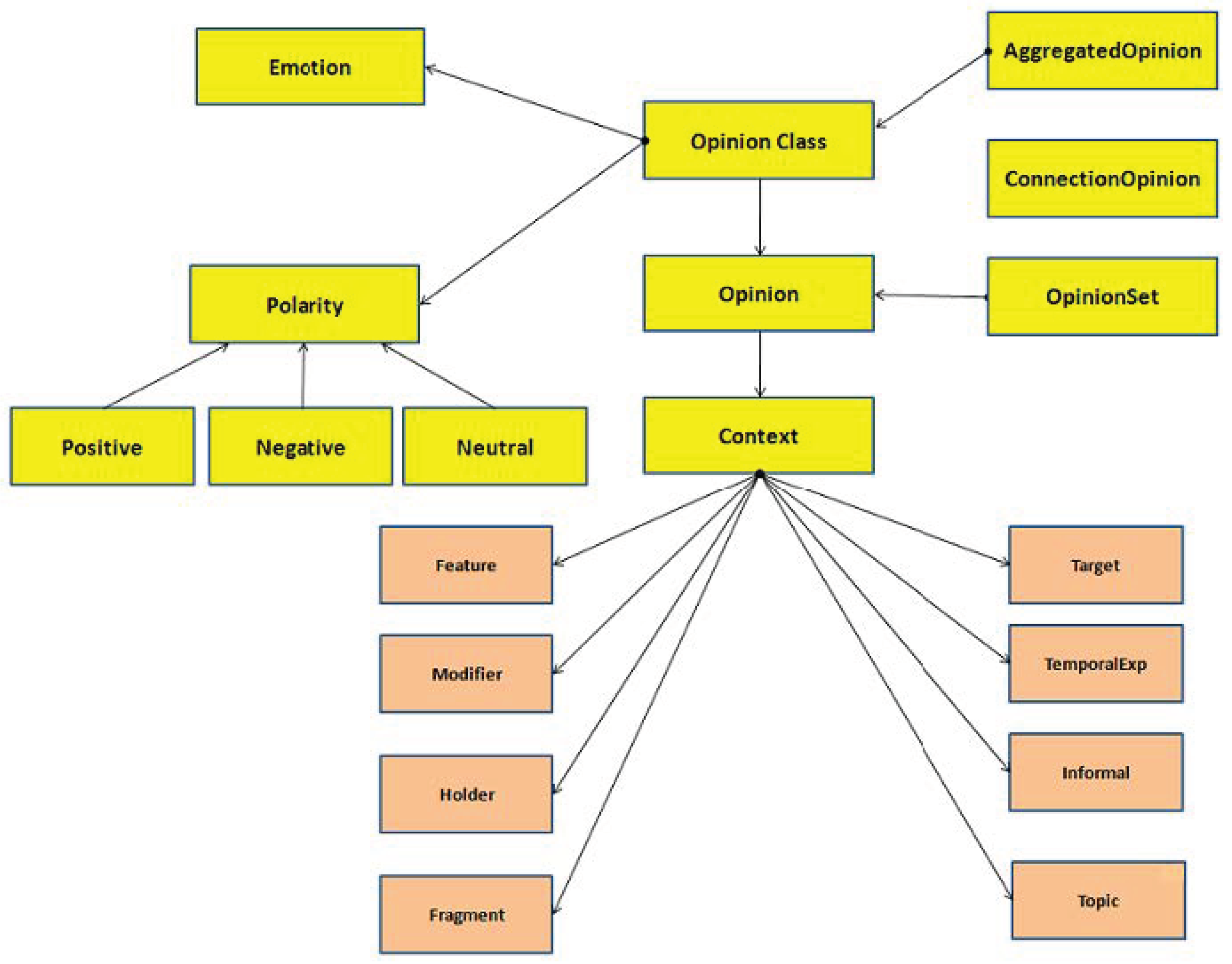

3. OpinionML—A Conceptual, Logical, Ontology Model

3.1. Conceptual Model

- Fragments: a set of fragments (sentences or sub-sentences) of the given text.

- Holders: a set of unique holders (i.e., the entities giving an opinion about some target) identified in the given text. A holder can appear in different fragments.

- Targets: a set of unique targets (i.e., the entities about which an opinion has been expressed in the text) identified in the given text. Each target is defined by a set of features.

- Features: a set of different attributes, features, or properties (for example, the screen size of a smartphone) of a target, where X represents all features in the text.

- Modifiers: a set of different modifiers (i.e., subjective words like excellent, destructive, etc.). A modifier can appear in different fragments.

- Informal expressions: a set of cultural expressions or informal phrases (for example, “bite the bullet” or “piece of cake”) found in the given text. An informal expression can appear in different fragments.

- Temporal expressions: a set of different temporal expressions (for example, “day after tomorrow”) found in the text.

- Topics: a set of different topics in the given text (for example, “human rights” or “sports”). A topic can appear in different fragments.

- References: a set of references identified in the text (e.g., source of the text, creator).

- A frequent aggregate function parameter is the attribute of the relation over which the aggregate function is to be applied. For example, in an opinion containing hotels and their rooms, the global quality of the room would be written as:

- The result of the aggregate function is a relation with a single attribute that holds the result of the polarity aggregate function. It contains two components:

  - the first component is a triple: label (neutral, positive, or negative), polarity value, and polarity confidence;

  - the second component is a matrix of the emotions with their intensities and confidences.

- Typically, groups are specified as a list of attributes, meaning that, for a given relation, tuples should first be clustered into partitions such that the tuples in each partition share the same values for those attributes. The aggregate function should then be applied to each cluster:

- With groups, the resulting aggregate relation would have as many tuples as there were groups in the original, partitioned relation.

- As a final extension, we note that we can perform more than one aggregate function over the groups in a relational algebra expression. Thus, the general form of the aggregation operation is:
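For reference, grouped aggregation with several aggregate functions is commonly written in relational algebra textbooks as shown below. The symbols are assumptions taken from that standard notation and are not necessarily the ones used by the authors: $R$ is a relation, $G_1, \ldots, G_n$ are the grouping attributes, and each $F_i$ is an aggregate function applied to attribute $A_i$.

```latex
\[
  {}_{G_1, G_2, \ldots, G_n}\,\mathcal{G}_{F_1(A_1),\, F_2(A_2),\, \ldots,\, F_m(A_m)}(R)
\]
```

With an empty grouping list, the expression reduces to a single aggregate over the whole relation; with $n \ge 1$ grouping attributes, it yields one tuple per group.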

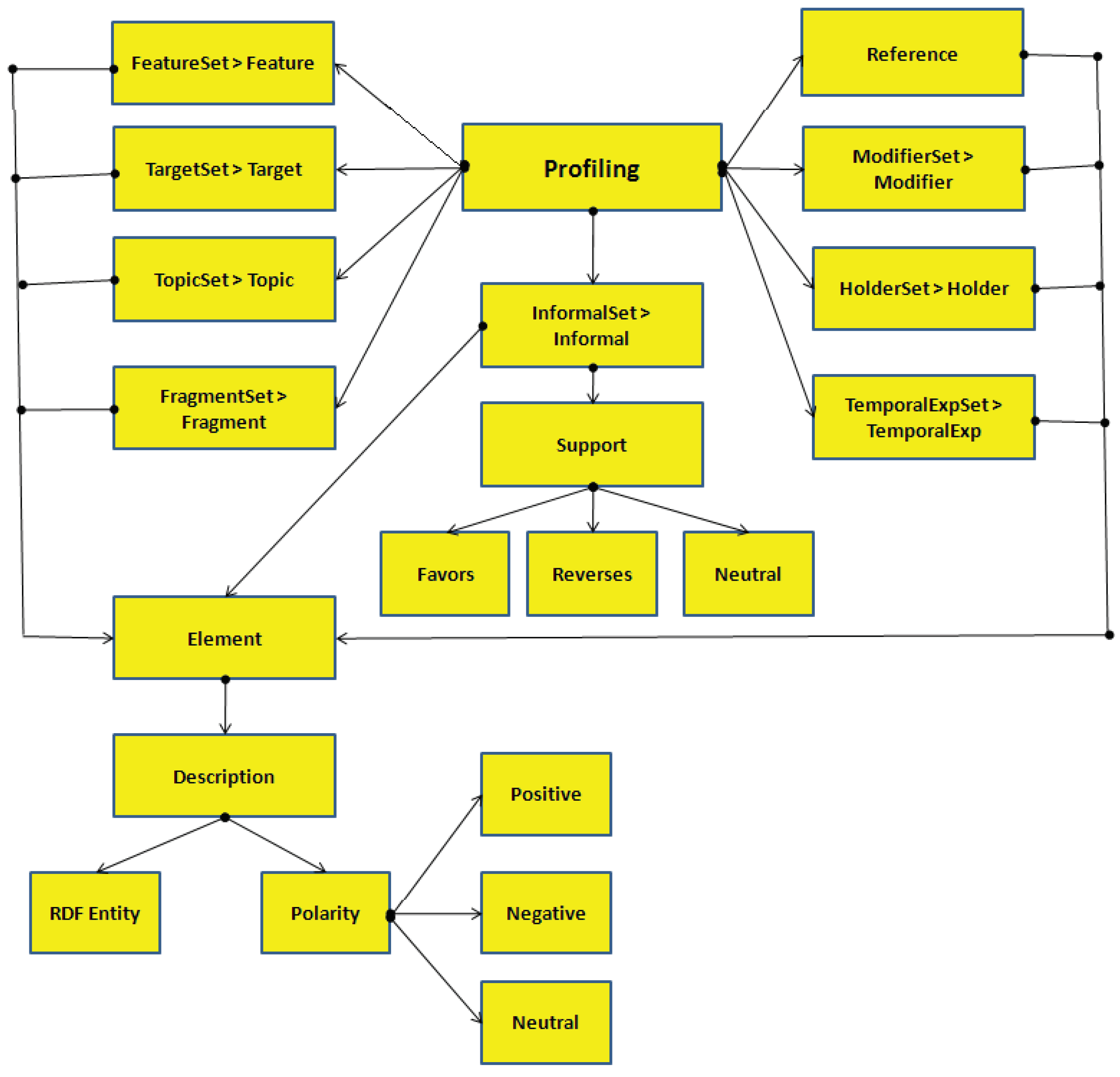

3.2. Logical Model

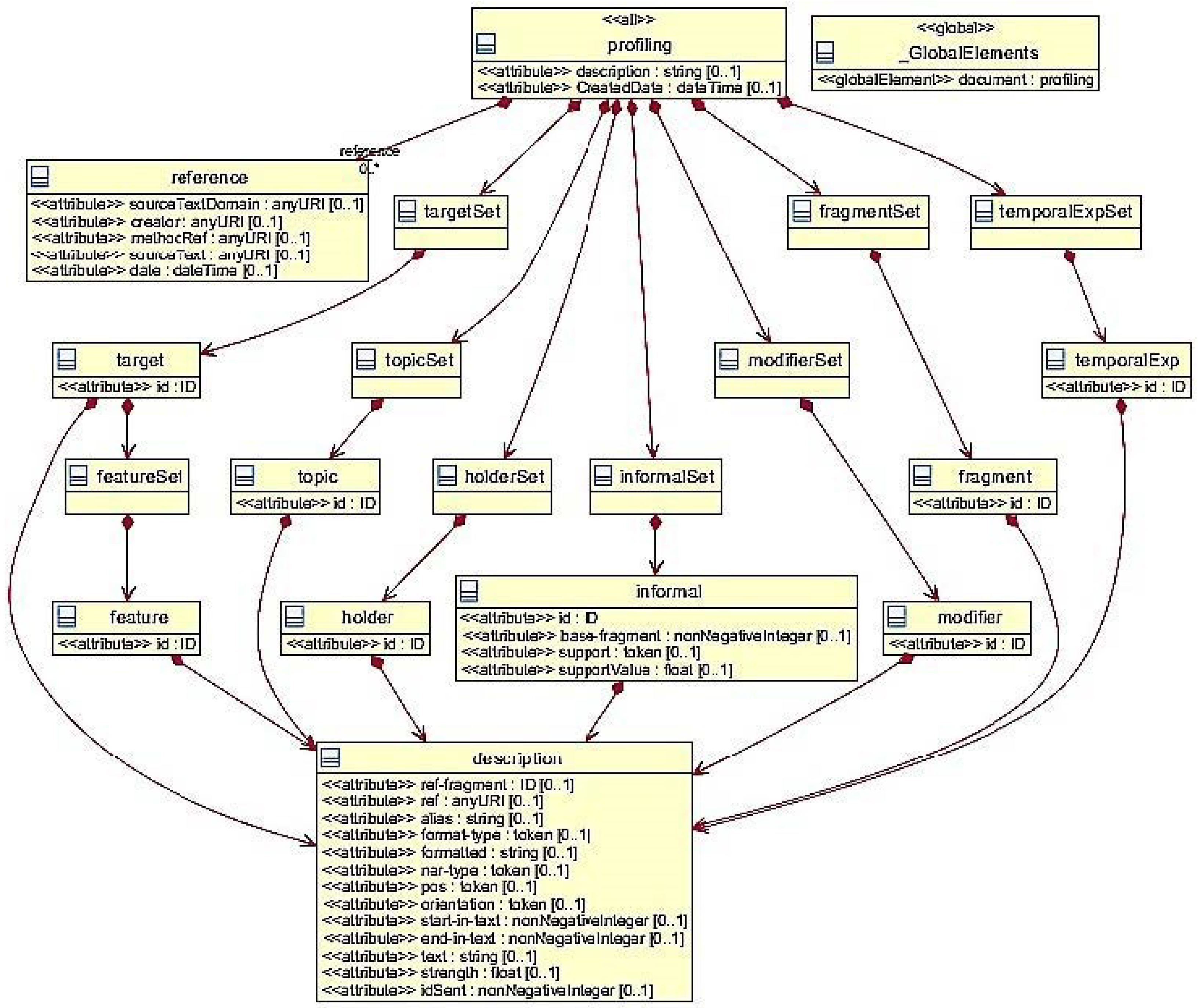

3.3. Structure of OpinionML

3.3.1. Profiling Section

3.3.2. Opinion Section

3.3.3. Vocabulary Section

3.4. OpinionML Example

- Connection: A connection function takes as input two opinions (say, OP01 and OP02) and the connection operator (for example, OR, AND, etc.) linking those opinion segments. The polarity and emotions associated with these opinion segments can be combined by exploiting their <polarity> and <emotion> elements.

- Aggregation: Aggregating opinions is one of the hardest tasks in opinion mining. OpinionML makes it easier by allowing opinions to be aggregated on two levels: document level (i.e., aggregating all opinion elements to obtain one global polarity or emotion score for the given document) and object level (i.e., aggregating all opinion elements that discuss different features of the same object to obtain one global polarity or emotion score for that object).
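The two aggregation levels described above can be sketched as follows. This is a minimal illustration, not the authors' algorithm: the `Opinion` record, the confidence-weighted mean, the assumed polarity range of [-1, 1], and the 0.1 labelling threshold are all assumptions made for the example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Opinion:
    """Hypothetical flattened view of one <opinion> element."""
    opinion_id: str
    target: str        # ID of the target (object) the opinion is about
    feature: str       # ID of the feature being discussed
    value: float       # polarity value, assumed here to lie in [-1, 1]
    confidence: float  # annotator or algorithm confidence in [0, 1]


def label_for(value, threshold=0.1):
    """Map a numeric polarity to a label; the threshold is an arbitrary choice."""
    if value > threshold:
        return "positive"
    if value < -threshold:
        return "negative"
    return "neutral"


def aggregate(opinions):
    """Confidence-weighted mean polarity as (label, value, mean confidence)."""
    total_conf = sum(op.confidence for op in opinions)
    if total_conf == 0:
        return ("neutral", 0.0, 0.0)
    value = sum(op.value * op.confidence for op in opinions) / total_conf
    return (label_for(value), value, total_conf / len(opinions))


def aggregate_by_object(opinions):
    """Object-level aggregation: one result per target across all its features."""
    groups = defaultdict(list)
    for op in opinions:
        groups[op.target].append(op)
    return {target: aggregate(group) for target, group in groups.items()}


opinions = [
    Opinion("OP01", "T1", "F1", 0.8, 1.0),
    Opinion("OP02", "T1", "F2", -0.2, 0.5),
    Opinion("OP03", "T2", "F3", -0.6, 1.0),
]
document_level = aggregate(opinions)           # one score for the whole document
object_level = aggregate_by_object(opinions)   # one score per target
```

Object-level aggregation is simply the document-level function applied to each group of opinions that share a target, which mirrors the grouping step of the conceptual model.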

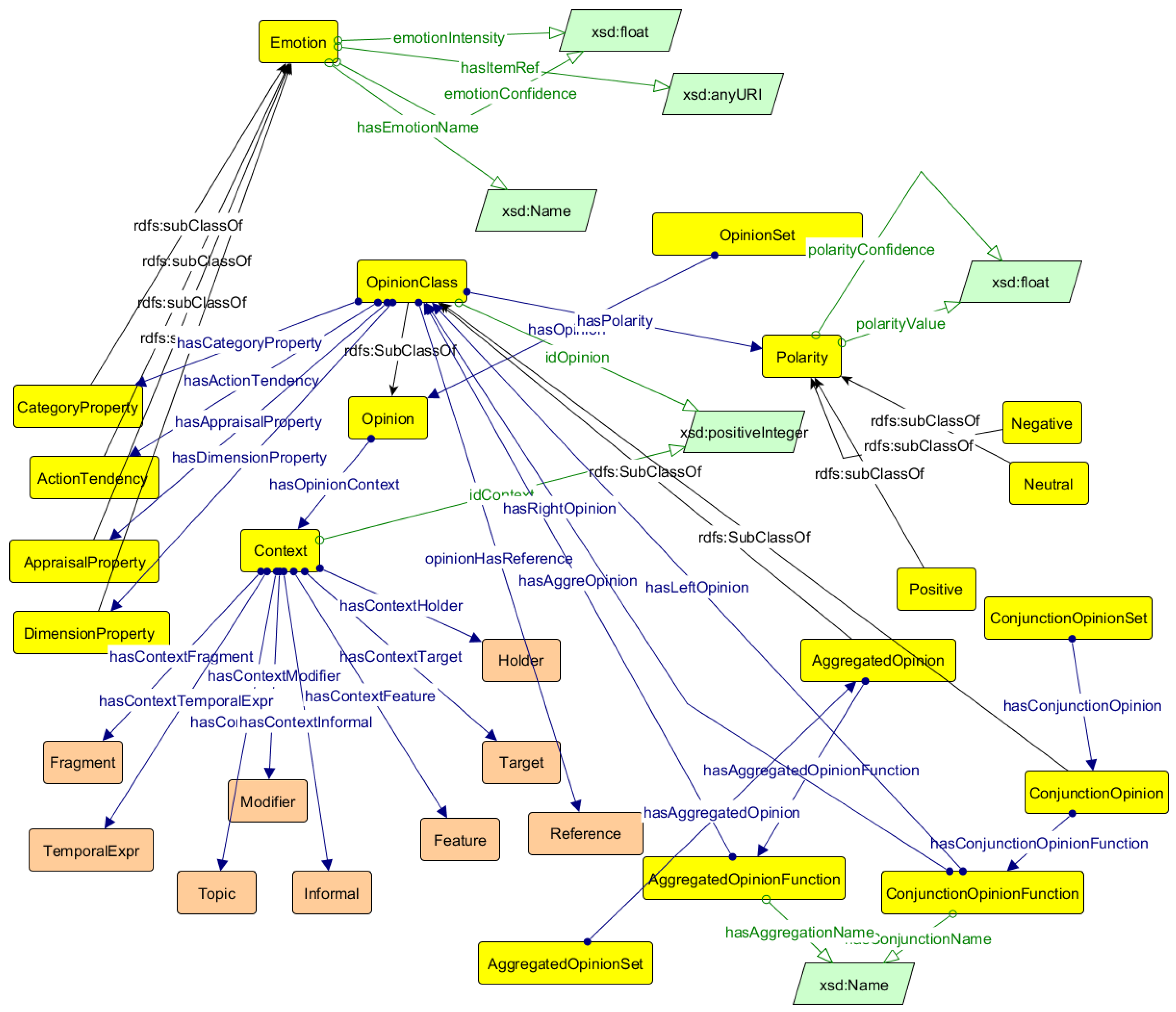

3.5. OpinionML Ontology

- To enable the publication of raw data about opinionated text in user-generated content,

- To construct a model that helps to automatically compare opinions coming from different sources and having different polarities, topics, features, etc.,

- To link opinions with other popular specialized ontologies using the contextual information expressed in concepts.

- Classes: AggregatedOpinion, Opinion, Polarity,

- Properties: aggregatesOpinion, algorithmConfidence, describesFeature, describesObject, describesObjectPart, extractedFrom, hasOpinion, hasPolarity, maxPolarityValue, minPolarityValue, polarityValue,

- Instances: Negative, Neutral, Positive.

3.6. Comparison with Marl and Onyx

- Linked Data and Knowledge Base Support: One of the biggest advantages of using OpinionML is its support for linked data. All entities and concepts are identified with Universal Resource Identifiers (URIs) that can be used to link to external resources such as the DBpedia knowledge base. This helps to enrich entities for which information is lacking. In addition, OpinionML relies on a knowledge base that models complicated relations between features and targets, including nested relationships. In the annotated example given above, we have two different targets, ‘North Korea’ and ‘Pyongyang’, in two fragments. If North Korea is linked to a popular knowledge base using its URI, then we can easily determine the relationship between these targets, where ‘Pyongyang’ is represented as the largest city () of North Korea.

- Standard XML Format: The logical model of OpinionML uses the XML format for defining its elements. XML supports interoperability between different platforms and is a tested data exchange format. One of its biggest advantages is that many tools and technologies already exist for XML processing, so no new tools need to be developed.

- Ontology Support: OpinionML relies on domain ontologies for associating instances with their relevant concepts. The Marl ontology (http://www.gi2mo.org/marl/0.1/ns.html) is one of the ontologies that define concepts and relations relevant to opinion mining. OpinionML covers almost all the concepts of Marl, but the Marl ontology lacks many concepts that still need to be added (such as time, informal expressions, etc.). Therefore, the design and creation of another detailed opinion ontology is part of our future work.

- Ontology-Based Indexation: The support for our proposed opinion ontology facilitates ontology-based indexation of documents, which helps to ensure efficient processing of OpinionML documents.

- Domain Independent Support: OpinionML is not limited to annotating textual data of a certain domain; it can support data from all domains, e.g., product reviews, news data, tourism, social network data, etc.

- Granularity Independence: Annotation schemes generally restrict their users to one granularity level, such as document, sentence, sub-sentence or passage, but OpinionML lets its users choose a granularity level of their own by identifying units as fragments. A fragment is supposed to be a basic semantic unit having a semantic orientation.

- Semi-Automatic Annotation: Taking an entity-oriented perspective of the problem, where opinion mining is defined in terms of several sub-tasks, makes opinion annotation much easier with the OpinionML graphical annotator. Using a semi-automatic approach rather than a fully manual one makes it possible to annotate big data collections in less time. When an opinion detection algorithm is available, the annotation can be fully automatic.

- Problem Oriented Annotation: Annotation approaches can be arranged in a three-dimensional space, cf. [44,45]:

  - effort of the annotation,

  - completeness of the result (i.e., how well it captures the real-world situation), and

  - (ontological or social) commitment to the result (i.e., how many commit to this model of the world and understand it).

  OpinionML is an effort towards achieving completeness of the results, in that it models most real-world situations. For example, it takes into account the contextual ambiguity problem and the processing of cultural expressions or informal language. It is to be noted that no annotation model has dealt with these problems until now. Most existing annotation models limit themselves to the annotation of subjective expressions while ignoring the rest of the problems. The logical model of OpinionML also helps to aggregate opinions at the feature level as well as at higher granularity levels (i.e., sentence, passage, and document).

- Adaptability: OpinionML is flexible enough to be adopted for any domain, and it also supports the addition of extra information to its model as required. This is made possible by the use of references that are part of the model. These references can be used to model different aspects such as user profiles, geo-locations or any other object, according to the requirements.

- Problem Oriented Vocabulary: One of the advantages of using OpinionML is that its vocabulary conforms with existing work. The use of terms like holder, target, etc. makes OpinionML easier to understand.

- Standard Based Support: The structure of OpinionML is inspired by the W3C standard EmotionML and is therefore equipped with the flexibility and interoperability that EmotionML provides. OpinionML uses the notion of flexible vocabularies in exactly the way EmotionML does.

- Compensating Missing Information: OpinionML compensates for missing information by using the links between its elements. For example, in the given example, holder information for the fragment FRAG01 is missing, but it can still be extracted using its sibling fragment, i.e., FRAG0, because both of them are part of a single sentence.

- Redundancy Reduction: The logical model of OpinionML helps to reduce redundancy by keeping profiles of opinion elements separate from the actual annotation. Hence, the annotation only keeps references to those opinion elements. This model facilitates modifications to annotations and improves their comprehension.

- Modular Approach: OpinionML elements are equipped with rich information about the text to be annotated. This information is split into different modules to preserve the readability and comprehension of the annotation. References among the elements of OpinionML link the modules together. The profiling module acts like a database of all OpinionML elements, where information about all elements is kept, while in the opinion section a collection of several elements is combined to give some semantics of the text. Vocabularies and other knowledge-based resources are kept in a separate module, which provides the flexibility of OpinionML.

- Rich in Information: The logical model of OpinionML enriches the opinion elements with information that could be very helpful. Ontologies are used for obtaining this information about different elements. The structure of the logical model helps to benefit from this information.

- Representations of opinion: Opinions may be depicted using four types of descriptors derived from the scientific literature, as is done for emotions in EmotionML [32]: <category>, <dimension>, <appraisal>, and <actiontendency>. An <opinion> element may consist of one or more of these descriptors. Each descriptor must be labeled with a name, and may carry a value attribute depicting its intensity. For the <dimension> descriptor, it is mandatory to assign a value, since it describes the opinion strength on one or more scales. For the other descriptors, the value may be omitted, since it is possible to make a binary decision about the presence of a given category in the opinionated text. The example below demonstrates several possible uses of the core opinion representations.
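The descriptor rules stated in the last item can be sketched in a few lines of Python using the standard `xml.etree.ElementTree` module. This is a hedged illustration rather than the authors' listing: the helper `add_descriptor`, the opinion ID, and the descriptor names `appreciation` and `strength` are hypothetical, and no official OpinionML schema is assumed beyond the element and attribute names mentioned in the text.

```python
import xml.etree.ElementTree as ET

# The four descriptor element names given in the text.
DESCRIPTORS = ("category", "dimension", "appraisal", "actiontendency")


def add_descriptor(opinion, kind, name, value=None):
    """Append one descriptor to an <opinion>; <dimension> requires a value."""
    if kind not in DESCRIPTORS:
        raise ValueError(f"unknown descriptor: {kind}")
    if kind == "dimension" and value is None:
        raise ValueError("<dimension> must carry a value attribute")
    attrs = {"name": name}
    if value is not None:
        attrs["value"] = str(value)
    return ET.SubElement(opinion, kind, attrs)


opinion = ET.Element("opinion", {"id": "OP01"})
add_descriptor(opinion, "category", "appreciation")          # presence only, no value
add_descriptor(opinion, "dimension", "strength", value=0.7)  # value is mandatory here
xml_text = ET.tostring(opinion, encoding="unicode")
```

Omitting the value on a `<category>` encodes a binary "this category is present" decision, whereas a `<dimension>` without a value is rejected.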

4. OpinionML Evaluation

4.1. Data Collection

- Online Political Speeches: This part of the data collection consists of text documents containing political speeches of US presidents.

- TED Talks: The second source of the data collection is Technology, Entertainment, Design (TED) talks on several topics revolving around the theme “ideas worth spreading”.

- MPQA Human Rights: The third source of the data collection is an extract from the popular MPQA opinion data collection [46]; its “human rights” part is used for this purpose. Note that the original MPQA data collection consists of texts with opinion and other emotion-related annotations.

4.2. OpinionML Annotation

- identifying opinionated statements within a document,

- identifying (possible) phrases within a statement,

- identifying opinion holders in the sentence,

- identifying the object (or target) about which the opinion was made in a sentence,

- identifying different aspects of the target within extracted phrases,

- extracting the sentiment towards the target from the opinion expressed in a sentence,

- assigning an appropriate title to the given sentence,

- identifying the opinion holder at phrase level,

- identifying possible temporal expressions within sentences.

4.3. OpinionML Annotation Guidelines

- Identification of Polarity of a Sentence: Annotators are supposed to label a sentence as positive (or negative) if it shows a positive (or negative) orientation towards a given target. The sentence is labeled as “neutral” if the annotator cannot decide on its polarity.

- Labeling Opinion Holders or Opinion Targets: Generally, the entity giving the opinion (i.e., the holder of the opinion, if one exists) can be found in the subject(s) of a sentence, while the entity about which the opinion is expressed (i.e., the target of the opinion) can be found in its object. If an annotator fails or finds it difficult to identify the holder, the target, or both, the holder and target elements should be left unmarked. The same process can be repeated for phrase-level identification of holders and targets.

- Identification of Sentence Topic: A web directory like DMoz can be consulted to identify the topic of a sentence. When using a web directory, one should select the topic carefully and choose the nearest chain.

- Identify Informal Expressions: An idiomatic resource can be used to identify idioms or informal expressions present in the text. This phase is necessary because the words in an idiom do not necessarily carry their literal semantics.

4.4. Evaluation

4.5. OpinionML Annotation Tool

- The annotator is presented with a graphical user interface in which they open the document to be annotated.

- Once the document is loaded, all the necessary entities and concepts are automatically marked with different colors.

- Sets of these concepts and entities are automatically defined and can be browsed by the annotator.

- In the first step, the annotator is asked to ratify all the identified entities and concepts. They can mark or unmark already identified entities and concepts.

- Once all entities and concepts have been identified semi-automatically, the annotator is presented with the choice of identifying the holders, targets and modifiers of the opinions within each fragment. Generally, they are already marked, but an intervention similar to that of the previous step is required.

- In this step, the polarity of each fragment is marked.

- Finally, cultural phrases are identified and presented to the annotator, who is supposed to change the polarity of the fragments accordingly.

5. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

Appendix A. Elements of OpinionML

Appendix A.1. The <OpinionML> Element

- Optional

  - category-set, dimension-set, appraisal-set or action-tendency-set: These attributes define the global emotion vocabulary set for the current OpinionML document. Each can be used to declare a global vocabulary (of type category, dimension, appraisal or action-tendency, respectively). The attribute value MUST be a URI.

  - lexicon: A reference to the lexicon used to compute the prior polarities of the modifiers.

- Required

  - version: This attribute defines the version of OpinionML being used.
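The attribute rules above can be sketched as a small builder for the root element. This is only an illustration under stated assumptions: the helper `make_root`, the version string `"1.0"`, and the `example.org` vocabulary URI are hypothetical, and the URI check merely verifies that a scheme is present.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

# Optional vocabulary attributes named in the text.
VOCAB_ATTRS = ("category-set", "dimension-set", "appraisal-set", "action-tendency-set")


def make_root(version, lexicon=None, vocabularies=None):
    """Build an <OpinionML> root; `version` is required, the rest optional."""
    if not version:
        raise ValueError("the version attribute is required")
    root = ET.Element("OpinionML", {"version": version})
    if lexicon is not None:
        root.set("lexicon", lexicon)
    for attr, uri in (vocabularies or {}).items():
        if attr not in VOCAB_ATTRS:
            raise ValueError(f"unknown vocabulary attribute: {attr}")
        # Shallow check only: a URI must at least have a scheme.
        if not urlparse(uri).scheme:
            raise ValueError(f"{attr} must be a URI, got {uri!r}")
        root.set(attr, uri)
    return root


root = make_root(
    "1.0",  # placeholder version string
    vocabularies={"category-set": "http://example.org/vocab#categories"},
)
```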

Appendix A.2. The <profiling> Element

- Optional

  - sourceText: A link to the text document being annotated.

  - sourceTextDomain: This attribute defines the domain of the text, i.e., whether the document talks about sports, politics, some event, etc.

  - creator: This attribute contains the name of the person annotating the document.

  - methodRef: Any method used to create the profiling of this document can be listed here.

- Required

  - time: The time of creation or publication of the document being annotated.

Appendix A.3. The <opinionSet> Element

- Optional

  - methodRef (<reference>): This attribute describes whether the method used for opinion detection was manual or algorithmic.

  - value (<polarity>): The value of the polarity of the opinion as computed.

  - confidence (<polarity>): The confidence value of the annotator or the precision of the algorithm used. The value lies between 0 and 1.

- Required

  - id (<opinion>): A number uniquely identifying an opinion.

  - fragment (<context>): A reference to the fragment about which the opinion is being expressed.

  - holder, target, topic, feature, modifier, informal, temporalExp (<context>): These attributes provide the IDs (or lists of IDs) of the corresponding entities defined in the profiling section, to represent the entities of an opinion. For example, if a fragment contains an informal or cultural phrase, then the informal attribute contains a reference to that phrase as it already exists in the profiling section of OpinionML. In this case, the semantic orientation of the current fragment must be judged without considering the referenced informal or cultural phrase.

  - name (<category>)

  - name (<polarity>): This attribute represents the annotated semantic orientation of the opinion. It can have one of three values: “positive”, “negative” or “neutral”.
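A simple consistency check over the required and optional attributes listed above might look like this. It is a sketch under assumptions: the sample markup, the nesting of `<context>` and `<polarity>` directly under `<opinion>`, the ID values, and the function `check_opinion` are illustrative and not taken from an official OpinionML schema.

```python
import xml.etree.ElementTree as ET

# Hypothetical sample; element nesting is an assumption for illustration.
SAMPLE = """
<opinionSet>
  <opinion id="1">
    <context fragment="FRAG01" holder="H1" target="T1" modifier="M1"/>
    <polarity name="negative" value="-0.6" confidence="0.9"/>
  </opinion>
</opinionSet>
"""

ALLOWED_LABELS = {"positive", "negative", "neutral"}


def check_opinion(opinion):
    """Return a list of problems found in one <opinion> element."""
    problems = []
    if opinion.get("id") is None:
        problems.append("missing id")
    context = opinion.find("context")
    if context is None or context.get("fragment") is None:
        problems.append("missing context/fragment reference")
    polarity = opinion.find("polarity")
    if polarity is None or polarity.get("name") not in ALLOWED_LABELS:
        problems.append("polarity name must be positive, negative or neutral")
    elif polarity.get("confidence") is not None:
        # Confidence is optional, but when present it must lie in [0, 1].
        if not 0.0 <= float(polarity.get("confidence")) <= 1.0:
            problems.append("confidence must lie between 0 and 1")
    return problems


opinion_set = ET.fromstring(SAMPLE)
report = {op.get("id"): check_opinion(op) for op in opinion_set.iter("opinion")}
```

An empty problem list for an opinion means that all the checks implemented here passed; it does not imply full validity against any schema.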

Appendix A.4. The <fragmentSet> Element

- Optional

  - NA

- Required

  - id: Unique identity of the fragment.

  - start: A number representing the start of this fragment in the text.

  - end: A number representing the end of this fragment in the text.

  - SentID: Reference to the sentence this fragment is part of.

  - text: The exact text of the fragment as found in the document to be annotated.
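The fragment attributes lend themselves to a small record type, and the SentID reference is what allows missing information to be recovered from sibling fragments of the same sentence. The sketch below is illustrative only: it assumes that `start` and `end` are character offsets with an exclusive end, and the `Fragment` class, the helper functions, and the sample sentence are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fragment:
    frag_id: str
    start: int    # character offset where the fragment begins (assumed)
    end: int      # character offset just past the fragment (assumed exclusive)
    sent_id: str  # reference to the enclosing sentence
    text: str     # exact fragment text


def make_fragment(frag_id, source, start, end, sent_id):
    """Create a fragment whose text is sliced directly from the source."""
    return Fragment(frag_id, start, end, sent_id, source[start:end])


def siblings(fragment, fragments):
    """Other fragments that belong to the same sentence."""
    return [
        f for f in fragments
        if f.sent_id == fragment.sent_id and f.frag_id != fragment.frag_id
    ]


source = "The hotel was clean, but the staff were rude."
frags = [
    make_fragment("FRAG0", source, 0, 19, "S1"),
    make_fragment("FRAG01", source, 21, 45, "S1"),
]
sibling_ids = [f.frag_id for f in siblings(frags[1], frags)]
```

Slicing the text from the source keeps `text`, `start` and `end` consistent by construction, and a holder missing from FRAG01 could then be looked up in the fragments returned by `siblings`.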

Appendix A.5. The <holderSet> Element

- Optional

  - alias: Set of aliases for the holder entity as found in the knowledge base used.

- Required

  - id: Unique identity of the holder.

  - text: Exact text of the holder as found.

  - ner-type: Type of the holder entity, i.e., whether it is a person, organization, country, place or thing.

  - ref-fragment: The list of fragments, with their identifiers, in which this particular holder or its aliases appear as text or as pronouns. This is necessary to resolve the anaphora problem.

  - orientation: Whether the holder entity is “positive”, “negative” or “neutral” in its perception. For example, a murderer found to be a holder in a text would be taken as “negative”.

  - ref-uri: URI reference of the holder in the knowledge base used.

Appendix A.6. The <TargetSet> Element

- Optional

  - alias: Set of aliases for the target entity as found in the knowledge base used.

- Required

  - id: Unique identity of the target.

  - text: Exact text of the target as found.

  - ner-type: Type of the target entity, i.e., whether it is a person, organization, country, thing, place or concept (like love, etc.).

  - ref-fragment: The list of fragments, with their identifiers, in which this particular target or its aliases appear as text or as pronouns. This is necessary to resolve the anaphora problem.

  - orientation: Whether the target entity is “positive”, “negative” or “neutral” in its perception.

  - ref-uri: URI reference of the target in the knowledge base used.

Appendix A.7. The <featureSet> Element

- Optional

  - alias: Set of aliases for the feature as found in the knowledge base used.

- Required

  - id: Unique identity of the feature.

  - text: Exact text of the feature as found.

  - ref-fragment: The list of fragments, with their identifiers, in which this particular feature or its aliases appear.

  - value-type: The type of value this feature can have, i.e., “numeric”, “category” or “mixed”.

  - orientation: Whether the feature is “positive”, “negative” or “neutral” in its perception.

  - ref-uri: URI reference of the feature in the knowledge base used. It is especially important for features that can be referred to using several different terms. For example, the sentences “I cannot hear well” and “the speakers are weak” talk about the same feature, i.e., the sound of a television, but use different vocabulary. Such problems are hard to deal with, but using an entry of the knowledge base as a reference (like “sound” in this case) can help to resolve them.

Appendix A.8. The <modifierSet> Element

- Optional

  - alias: Set of aliases for the modifier as found in the knowledge base used.

  - strength: The intensity of the semantic orientation of a modifier. It can be extracted from a lexical resource or given by the annotators. Generally, the value lies between 0 and 1.

- Required

  - id: Unique identity of the modifier.

  - text: Exact text of the modifier as found.

  - pos: Stands for “part of speech”, i.e., whether the modifier is a noun, adjective, verb or adverb.

  - orientation: Whether the modifier is “positive”, “negative” or “neutral” as found in one of the lexicons.

  - ref-fragment: The list of fragments in which this modifier or its aliases appear.

  - ref-uri: URI reference of the modifier in the knowledge base used.

Appendix A.9. The <informalSet> Element

- Optional

  - NA

- Required

  - id: Unique identity of the informal expression.

  - start: A number giving the start of the informal expression as given in the attribute “text”.

  - end: A number giving the end of the informal expression as given in the attribute “text”.

  - orientation: The actual semantics of the given phrase, not its literal semantics.

  - support: This attribute can have three possible values, i.e., “favors”, “reverses” or “neutral”, indicating whether this phrase supports the semantics of the phrase it appears in, reverses it, or stays neutral.

  - ref-fragment: The list of fragments in which this informal expression appears.

  - base-fragment: The ID of the fragment in which this particular phrase appears.
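One possible reading of the support attribute is sketched below: “reverses” flips the polarity of the enclosing fragment, while “favors” and “neutral” leave it unchanged. This interpretation and the function name `apply_informal` are assumptions made for illustration; the text does not prescribe a combination rule.

```python
# Polarity flip table; "neutral" is assumed to stay neutral when reversed.
FLIPPED = {"positive": "negative", "negative": "positive", "neutral": "neutral"}
SUPPORT_VALUES = ("favors", "reverses", "neutral")


def apply_informal(fragment_polarity, support):
    """Adjust a fragment's polarity label according to an informal phrase."""
    if fragment_polarity not in FLIPPED:
        raise ValueError(f"unknown polarity: {fragment_polarity}")
    if support not in SUPPORT_VALUES:
        raise ValueError(f"unknown support value: {support}")
    if support == "reverses":
        return FLIPPED[fragment_polarity]
    return fragment_polarity


adjusted = apply_informal("positive", "reverses")
```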

Appendix A.10. The <topicSet> Element

- Optional

  - NA

- Required

  - id: Unique identity of the topic.

  - ref: The referenced source used to find the topic. Topics are extracted for each defined fragment, but only unique topics are kept in the profiling section, and each fragment then provides a reference to the related topic.

  - chain: The actual hierarchy of topics as found in the referenced source of knowledge from which the topic has been detected.

  - orientation: Whether the detected topic is positive, negative or neutral in its semantics. For example, a topic labelled “festival” could be considered positive, while a topic relevant to “wars” would be tagged as negative.

  - ref-fragment: The comma-separated list of fragments this topic is associated with.

Appendix A.11. The <temporalExpSet> Element

- Optional

  - NA

- Required

  - id: Unique identity of the time expression.

  - text: Exact text of the expression as it exists in the given text.

  - type: One of the following values: Date, Duration, Set or Time. Details about the range of temporal expressions that these values cover can be found in the documentation of TimeML [51].

  - value: The TimeML equivalent of the given temporal expression. For example, if the given temporal expression is the date 24 April, then its TimeML equivalent is XXXX-04-24. The value XXXX replaces the missing year.

  - nature: Whether the time mentioned in the text refers to a real time or to a virtual time, as in “here today, gone tomorrow” (meaning appearing or existing only for a short time).

  - tense: Whether the time expression refers to a past, present or future time.

  - start: A number giving the start of the time expression as given in the attribute “text”.

  - end: A number giving the end of the time expression as given in the attribute “text”.

  - ref-fragment: The list of fragments in which this time expression appears.
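The value format described above, with XXXX standing in for an unknown year, can be produced with a short helper. This is a minimal sketch: the function name `timeml_date` is hypothetical, only calendar dates are handled, and the leap year 2000 is used purely as a stand-in when validating a date whose year is missing.

```python
import datetime


def timeml_date(day, month, year=None):
    """Format a calendar date as YYYY-MM-DD, using XXXX for a missing year."""
    # Validate day and month; a leap year is substituted when the year is
    # unknown so that 29 February is not rejected.
    datetime.date(year if year is not None else 2000, month, day)
    year_part = f"{year:04d}" if year is not None else "XXXX"
    return f"{year_part}-{month:02d}-{day:02d}"


value = timeml_date(24, 4)  # the "24 April" example from the text
```

Here `value` is the string `XXXX-04-24`, matching the example given for the value attribute.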

Appendix B. Listings

Listing 4: Vocabulary Section

Listing 5: Vocabulary Citation in Opinion Element of OpinionML

Listing 6: External Vocabulary Section Reference in Opinion Element

Listing 7: Vocabulary section reference in OpinionML Element

Listing 8: OpinionML Element

Listing 9: Profiling Element

Listing 10: OpinionSet Element

Listing 11: FragmentSet Element

Listing 12: HolderSet Element

Listing 13: TargetSet Element

Listing 14: FeatureSet Element

Listing 15: ModifierSet Element

Listing 16: InformalSet Element

Listing 17: TopicSet Element

Listing 18: TemporalExpSet Element

Listing 19: An OpinionML Example

Listing 20: Core Opinion Representation

References

- Murphy, J.; Link, M.W.; Childs, J.H.; Tesfaye, C.L.; Dean, E.; Stern, M.; Pasek, J.; Cohen, J.; Callegaro, M.; Harwood, P. Social Media in Public Opinion Research: Report of the AAPOR Task Force on Emerging Technologies in Public Opinion Research; American Association of Public Opinion Research (AAPOR): Anaheim, CA, USA, 2014.

- Thelwall, M. Blog searching: The first general-purpose source of retrospective public opinion in the social sciences? Online Inf. Rev. 2007, 31, 277–289.

- Verma, B.; Thakur, R.S. Sentiment Analysis Using Lexicon and Machine Learning-Based Approaches: A Survey. In Proceedings of the International Conference on Recent Advancement on Computer and Communication; Springer: Singapore, 2018; pp. 441–447.

- Kiyavitskaya, N.; Zeni, N.; Cordy, J.R.; Mich, L.; Mylopoulos, J. Semi-Automatic Semantic Annotations for Web Documents. In Proceedings of the SWAP 2005, 2nd Italian Semantic Web Workshop, Trento, Italy, 14–16 December 2005; pp. 14–15.

- Oren, E.; Muller, K.; Scerri, S.; Handschuh, S.; Sintek, M. What Are Semantic Annotations? 2006. Available online: https://www.ontotext.com/knowledgehub/fundamentals/semantic-annotation/ (accessed on 1 March 2019).

- Hazarika, D.; Poria, S.; Gorantla, S.; Cambria, E.; Zimmermann, R.; Mihalcea, R. CASCADE: Contextual Sarcasm Detection in Online Discussion Forums. arXiv, 2018; arXiv:1805.06413.

- AL-Sharuee, M.T.; Liu, F.; Pratama, M. Sentiment analysis: An automatic contextual analysis and ensemble clustering approach and comparison. Data Knowl. Eng. 2018, 115, 194–213.

- Das, S.; Behera, R.K.; Rath, S.K. Real-Time Sentiment Analysis of Twitter Streaming data for Stock Prediction. Procedia Comput. Sci. 2018, 132, 956–964.

- Holzinger, A. Social Media Mining and Social Network Analysis: Emerging Research; Emerald Group Publishing Limited Howard House: Bingley, UK, 2014.

- Kim, S.M.; Hovy, E. Extracting opinions, opinion holders, and topics expressed in online news media text. In Proceedings of the Workshop on Sentiment and Subjectivity in Text, Sydney, Australia, 22 July 2006; pp. 1–8.

- Thelwall, M. Gender bias in sentiment analysis. Online Inf. Rev. 2018, 42, 45–57.

- Thelwall, M. Gender bias in machine learning for sentiment analysis. Online Inf. Rev. 2018, 42, 343–354.

- Ravi, K.; Ravi, V. A survey on opinion mining and sentiment analysis: Tasks, approaches and applications. Knowl.-Based Syst. 2015, 89, 14–46.

- Kim, Y.; Dwivedi, R.; Zhang, J.; Jeong, S.R. Competitive intelligence in social media Twitter: iPhone 6 vs. Galaxy S5. Online Inf. Rev. 2016, 40, 42–61.

- Saad Missen, M.M.; Coustaty, M.; Salamat, N.; Prasath, V.S. SentiML++: An extension of the SentiML sentiment annotation scheme. New Rev. Hypermedia Multimed. 2018, 24, 28–43.

- Liu, B. Sentiment Analysis and Subjectivity. Handb. Nat. Lang. Process. 2010, 2, 627–666.

- Missen, M.M.S.; Boughanem, M.; Cabanac, G. Challenges for Sentence Level Opinion Detection in Blogs. In Proceedings of the Eighth IEEE/ACIS International Conference on Computer and Information Science, Shanghai, China, 1–3 June 2009; pp. 347–351.

- Pang, B.; Lee, L.; Vaithyanathan, S. Thumbs Up?: Sentiment Classification Using Machine Learning Techniques. Assoc. Comput. Linguist. 2002, 10, 79–86.

- Maynard, D.; Bontcheva, K.; Rout, D. Challenges in developing opinion mining tools for social media. In Proceedings of the @NLP Can U Tag #Usergeneratedcontent, Istanbul, Turkey, 21–27 May 2012; pp. 15–22.

- Yang, H.L.; Chao, A.F. Sentiment annotations for reviews: An information quality perspective. Online Inf. Rev. 2018, 42, 579–594.

- Turney, P.D.; Littman, M.L. Measuring Praise and Criticism: Inference of Semantic Orientation from Association. ACM Trans. Inf. Syst. 2003, 21, 315–346.

- Zhao, J.; Dong, L.; Wu, J.; Xu, K. Moodlens: An emoticon-based sentiment analysis system for Chinese tweets. In Proceedings of the 18th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Beijing, China, 12–16 August 2012; pp. 1528–1531.

- Wilson, T.; Wiebe, J.; Hoffmann, P. Recognizing contextual polarity in phrase-level sentiment analysis. In Proceedings of the Conference on Human Language Technology and Empirical Methods in Natural Language Processing, Vancouver, BC, Canada, 6–8 October 2005; Association for Computational Linguistics: Stroudsburg, PA, USA, 2005; pp. 347–354.

- Councill, I.G.; McDonald, R.; Velikovich, L. What’s Great and What’s Not: Learning to Classify the Scope of Negation for Improved Sentiment Analysis. In Proceedings of the Workshop on Negation and Speculation in Natural Language Processing; NeSp-NLP ’10; Association for Computational Linguistics: Stroudsburg, PA, USA, 2010; pp. 51–59.

- Kennedy, A.; Inkpen, D. Sentiment Classification of Movie Reviews Using Contextual Valence Shifters. Comput. Intell. 2006, 22, 110–125. [Google Scholar] [CrossRef]

- Krestel, R.; Siersdorfer, S. Generating Contextualized Sentiment Lexica Based on Latent Topics and User Ratings. In Proceedings of the 24th ACM Conference on Hypertext and Social Media, Paris, France, 1–3 May 2013; pp. 129–138. [Google Scholar] [CrossRef]

- Stylios, G.; Tsolis, D.; Christodoulakis, D. Mining and Estimating Usersâ Opinion Strength in Forum Texts Regarding Governmental Decisions. In Artificial Intelligence Applications and Innovations; Iliadis, L., Maglogiannis, I., Papadopoulos, H., Karatzas, K., Sioutas, S., Eds.; IFIP Advances in Information and Communication Technology; Springer: Berlin/Heidelberg, Germany, 2012; Volume 382, pp. 451–459. [Google Scholar]

- Efron, M. Cultural orientation: Classifying subjective documents by cociation analysis. In Proceedings of the AAAI Fall Symposium on Style and Meaning in Language, Art, and Music, Washington, DC, USA, 21–24 October 2004; pp. 41–48. [Google Scholar]

- Pontiki, M.; Galanis, D.; Papageorgiou, H.; Androutsopoulos, I.; Manandhar, S.; Mohammad, A.S.; Al-Ayyoub, M.; Zhao, Y.; Qin, B.; De Clercq, O.; et al. SemEval-2016 task 5: Aspect based sentiment analysis. In Proceedings of the 10th international workshop on semantic evaluation (SemEval-2016), San Diego, CA, USA, 16–17 June 2016; pp. 19–30. [Google Scholar]

- Di Bari, M.; Sharoff, S.; Thomas, M. SentiML: Functional Annotation for Multilingual Sentiment Analysis. In Proceedings of the 1st International Workshop on Collaborative Annotations in Shared Environment: Metadata, Vocabularies and Techniques in the Digital Humanities, Florence, Italy, 10 September 2013; ACM: New York, NY, USA, 2013; pp. 15:1–15:7. [Google Scholar] [CrossRef]

- Robaldo, L.; Caro, L.D. OpinionMining-ML. Comput. Standards Interfaces 2013, 35, 454–469. [Google Scholar] [CrossRef]

- Schroder, M.; Baggia, P.; Burkhardt, F.; Pelachaud, C.; Peter, C.; Zovato, E. EmotionML—An upcoming standard for representing emotions and related states. In International Conference on Affective Computing and Intelligent Interaction; Springer: Berlin/Heidelberg, Germany, 2011. [Google Scholar]

- Cambria, E.; Das, D.; Bandyopadhyay, S.; Feraco, A. Affective computing and sentiment analysis. IEEE Intell. Syst. 2016, 30, 102–107. [Google Scholar] [CrossRef]

- Shankland, S. EmotionML: Will Computers Tap into Your Feelings? CNET News. 30 August 2010. Available online: https://www.cnet.com/news/emotionml-will-computers-tap-into-your-feelings/ (accessed on 1 March 2019).

- Liu, B. Sentiment Analysis and Opinion Mining: Synthesis Lectures on Human Language Technologies; Morgan & Claypool Publishers: San Rafael, CA, USA, 2012; p. 167. [Google Scholar]

- Pang, B.; Lee, L. Opinion mining and sentiment analysis. Inf. Retrieval 2008, 2, 1–135. [Google Scholar] [CrossRef]

- Cambria, E.; Schuller, B.; Xia, Y.; Havasi, C. New Avenues in Opinion Mining and Sentiment Analysis. IEEE Intell. Syst. 2013, 28, 15–21. [Google Scholar] [CrossRef]

- Swami, A.; Mete, A.; Bhosle, S.; Nimbalkar, N.; Kale, S. Ferom: Feature Extraction and Refinement for Opinion Mining; Wiley Online Library: Hoboken, NJ, USA, 2017; pp. 720–730. [Google Scholar]

- Munezero, M.; Montero, C.S.; Sutinen, E.; Pajunen, J. Are They Different? Affect, Feeling, Emotion, Sentiment, and Opinion Detection in Text. Affect. Comput. IEEE Trans. 2014, 5, 101–111. [Google Scholar] [CrossRef]

- Westerski, A.; Iglesias, C.A.; Ric, F.T. Linked opinions: Describing sentiments on the structured web of data. In Proceedings of the 4th International Workshop Social Data on the Web (SDoW2011), Bonn, Germany, 23 October 2012; pp. 10–21. [Google Scholar]

- Sánchez-Rada, J.F.; Iglesias, C.A. Onyx: Describing Emotions on the Web of Data. In Proceedings of the First International Workshop on Emotion and Sentiment in Social and Expressive Media: Approaches and perspectives from AI (ESSEM 2013), Torino, Italy, 3 December 2013; AI*IA, Italian Association for Artificial Intelligence, CEUR-WS: Torino, Italy, 2013; Volume 1096, pp. 71–82. [Google Scholar]

- Dragoni, M.; Poria, S.; Cambria, E. OntoSenticNet: A commonsense ontology for sentiment analysis. IEEE Intell. Syst. 2018, 33, 77–85. [Google Scholar] [CrossRef]

- Peroni, S. Graffoo: Graphical Framework for OWL Ontologies. 2011. Available online: https://opencitations.wordpress.com/2011/06/29/graffoo-a-graphical-framework-for-owl-ontologies/ (accessed on 1 March 2019).

- Abecker, A.; van Elst, L. Ontologies for Knowledge Management, Handbook on Ontologies; Springer: New York, NY, USA, 2004; pp. 435–454. [Google Scholar]

- Van Elst, L.; Abecker, A. Ontologies for information management: Balancing formality, stability, and sharing scope. Expert Syst. Appl. 2002, 23, 357–366. [Google Scholar] [CrossRef]

- Wilson, T.A. Fine-Grained Subjectivity and Sentiment Analysis: Recognizing The Intensity, Polarity, and Attitudes of Private States. Ph.D. Thesis, University of Pittsburgh, Pittsburgh, PA, USA, 2008. [Google Scholar]

- Fleiss, J.L.; Cohen, J. The equivalence of weighted kappa and the intraclass correlation coefficient as measures of reliability. Educ. Psychol. Meas. 1973, 33, 613–619. [Google Scholar] [CrossRef]

- Baeza-Yates, R.; Ribeiro-Neto, B. Modern Information Retrieval; ACM Press: New York, NY, USA, 1999; Volume 463. [Google Scholar]

- He, W.; Tian, X.; Tao, R.; Zhang, W.; Yan, G.; Akula, V. Application of social media analytics: A case of analyzing online hotel reviews. Online Inf. Rev. 2017, 41, 921–935. [Google Scholar] [CrossRef]

- Pustejovsky, J.; Castano, J.M.; Ingria, R.; Sauri, R.; Gaizauskas, R.J.; Setzer, A.; Radev, D.R. Timeml: Robust specification of event and temporal expressions in text. New Direct. Quest. Answ. 2003, 3, 28–34. [Google Scholar]

- Saurí, R.; Littman, J.; Knippen, B.; Gaizauskas, R.; Setzer, A.; Pustejovsky, J. TimeML Annotation Guidelines, version 1.2.1; 2006. Available online: https://www.researchgate.net/profile/James_Pustejovsky/publication/248737128_TimeML_Annotation_Guidelines_Version_121/links/55c9d67c08aeb97567483792.pdf (accessed on 1 March 2019).

| Criteria | SentiML | OpinionMining-ML | EmotionML |
|---|---|---|---|
| Scope | Limited to the domains of IR and NLP | Limited to the domains of IR and NLP | Covers a wide range of emotion features |
| Complexity | Simpler and more semantic | Complex and less user-understandable syntax | Multifaceted and less user-friendly |
| Vocabulary | Limited to the concepts of modifiers and targets of sentiments | Equipped with meta-tags and a feature-based sentiment extraction vocabulary | Rich vocabulary that is further extendable |
| Structure | XML-based structure | XML-based structure | XML-based structure |
| Contextual Ambiguities | Offers support | No support | No support |
| Completeness | No | No | No |
| Flexibility | Lacks flexibility | Flexibility depends on the ontology used | Flexible in defining emotion states |

| Task | Kappa Agreement Score |
|---|---|
| Sentence Polarity Agreement | 0.87 |
| Holder Recognition Agreement | 0.93 |
| Target Recognition Agreement | 0.94 |
| Topic Identification Agreement | 0.79 |
| Informal Expression Agreement | 0.81 |
| Overall Annotation Agreement | 0.80 |
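
The agreement scores above are kappa values. The table does not state which kappa variant was computed, so the following is only a minimal sketch, assuming Cohen's kappa for two annotators over categorical labels. The function name `cohen_kappa` and the toy label lists are hypothetical and are not taken from the annotated corpus.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators labelling the same items.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement
    and p_e is the agreement expected by chance from each annotator's
    label frequencies.
    """
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty item set")

    n = len(labels_a)
    # Observed agreement: fraction of items given identical labels.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # Chance agreement: product of marginal label frequencies, summed over labels.
    freq_a = Counter(labels_a)
    freq_b = Counter(labels_b)
    categories = freq_a.keys() | freq_b.keys()
    expected = sum(freq_a[c] * freq_b[c] for c in categories) / (n * n)

    if expected == 1.0:
        # Both annotators used a single identical label for every item.
        return 1.0
    return (observed - expected) / (1.0 - expected)


# Hypothetical sentence-polarity labels from two annotators (illustrative only).
annotator_a = ["pos", "pos", "neg", "neg", "pos", "neg", "pos", "neg", "pos", "neg"]
annotator_b = ["pos", "pos", "neg", "neg", "pos", "neg", "pos", "neg", "pos", "pos"]

kappa = cohen_kappa(annotator_a, annotator_b)
```

In this toy example the annotators agree on 9 of 10 items (observed agreement 0.9) while chance agreement is 0.5, so kappa is approximately 0.8.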

| Query | Question |
|---|---|
| Query I | Who blamed North Korean Authorities? |
| Query II | What is report about? |
| Query III | How many times the report said something negative about North Korea? |
| Query IV | Who runs the greatest anti-poverty program? |
| Query V | What IFRC says about human rights situation in Iran? |
| Query VI | Which entity named the US report a “groundless plot”? |
| Query VII | On what issue president Kim made a very positive statement? |
| Query VIII | Who are detained arbitrarily? |
| Query IX | What causes detention of citizens? |
| Query X | Who said that “Terrorists believe that anything goes in the name of their cause”. |
| Query XI | In IHRC declaration who was target of injustice? |
| Query XII | Which organization called on Israel to stop bombing? |
| Query XIII | Analysts have warned about what? |
| Query XIV | What is the purpose of Mary Robinson visit to China? |
| Query XV | What President Obama had to say about violence? |

| Query | SentiML | OpinionML |
|---|---|---|
| Query I | 0 | 1 |
| Query II | 0 | 1 |
| Query III | 0 | 1 |
| Query IV | 1 | 1 |
| Query V | 0 | 1 |
| Query VI | 0 | 1 |
| Query VII | 0 | 1 |
| Query VIII | 1 | 1 |
| Query IX | 0 | 1 |
| Query X | 0 | 1 |
| Query XI | 1 | 1 |
| Query XII | 0 | 1 |
| Query XIII | 1 | 1 |
| Query XIV | 0 | 1 |
| Query XV | 0 | 1 |
| Mean | 0.26 | 1 |
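
The Mean row can be re-derived from the per-query scores. The sketch below assumes each entry is a binary indicator (1 or 0) for that query under the given annotation scheme; the variable names are illustrative. The exact SentiML mean is 4/15 ≈ 0.267, so the reported 0.26 is consistent with truncating (rather than rounding) to two decimals.

```python
import math

# Per-query scores copied from the table above, in order Query I to Query XV.
sentiml_scores = [0, 0, 0, 1, 0, 0, 0, 1, 0, 0, 1, 0, 1, 0, 0]
opinionml_scores = [1] * 15


def mean(scores):
    """Arithmetic mean of a non-empty list of scores."""
    return sum(scores) / len(scores)


def truncate(value, decimals=2):
    """Drop digits beyond `decimals` places without rounding up."""
    factor = 10 ** decimals
    return math.floor(value * factor) / factor


sentiml_mean = mean(sentiml_scores)      # 4 / 15
opinionml_mean = mean(opinionml_scores)  # 15 / 15

sentiml_reported = truncate(sentiml_mean)
```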

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Attik, M.; Missen, M.M.S.; Coustaty, M.; Choi, G.S.; Alotaibi, F.S.; Akhtar, N.; Jhandir, M.Z.; Prasath, V.B.S.; Salamat, N.; Husnain, M. OpinionML—Opinion Markup Language for Sentiment Representation. Symmetry 2019, 11, 545. https://doi.org/10.3390/sym11040545