Research on Moving Target Tracking Based on FDRIG Optical Flow

Abstract

1. Introduction

2. Related Work

3. Principle and Implementation of FDRIG Optical Flow

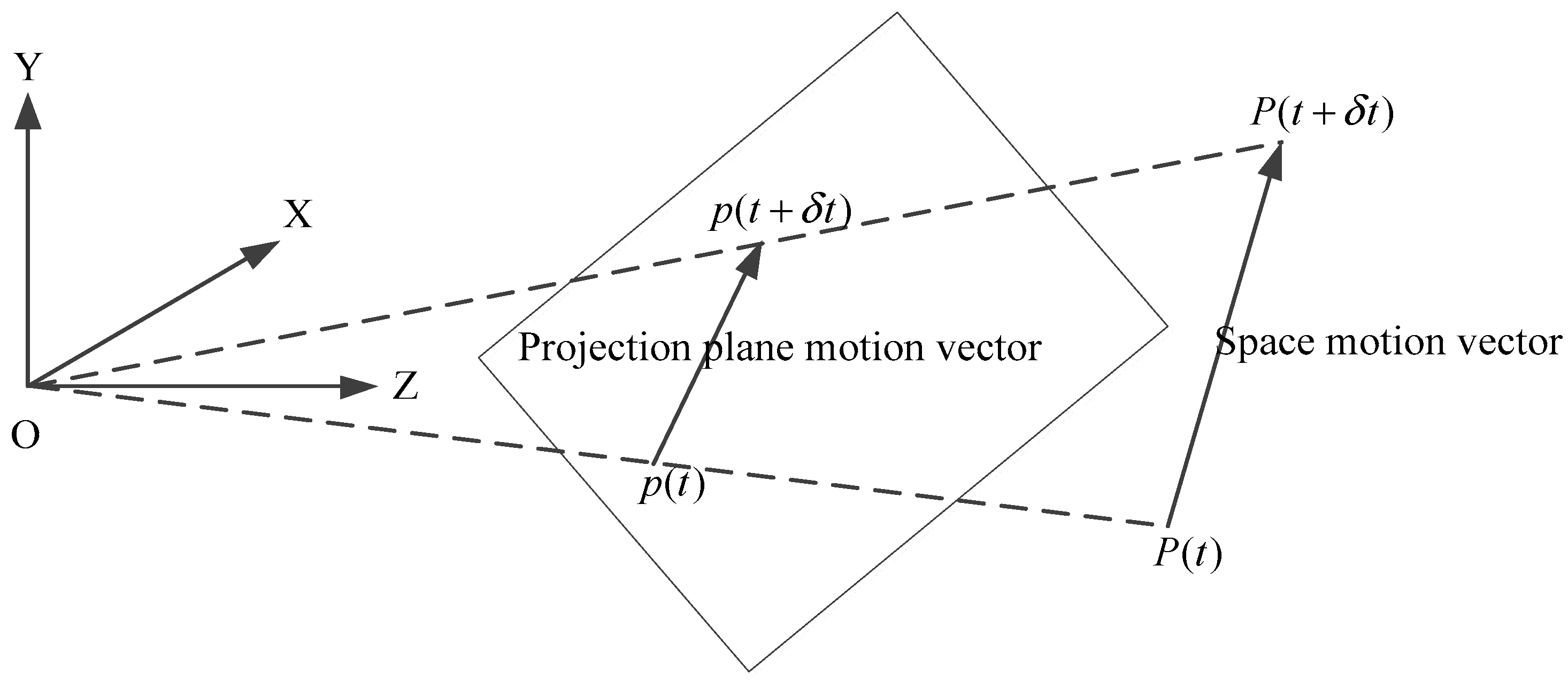

3.1. Theory of Optical Flow

3.2. FDRIG Optical Flow Algorithm

4. Experimental Studies and Discussion

4.1. Experiment 1 on One Vehicle

4.1.1. Description of the Experimental Process

4.1.2. Parameter Setting in Halcon Software Based on FDRIG Optical Flow

4.1.3. Results and Discussion

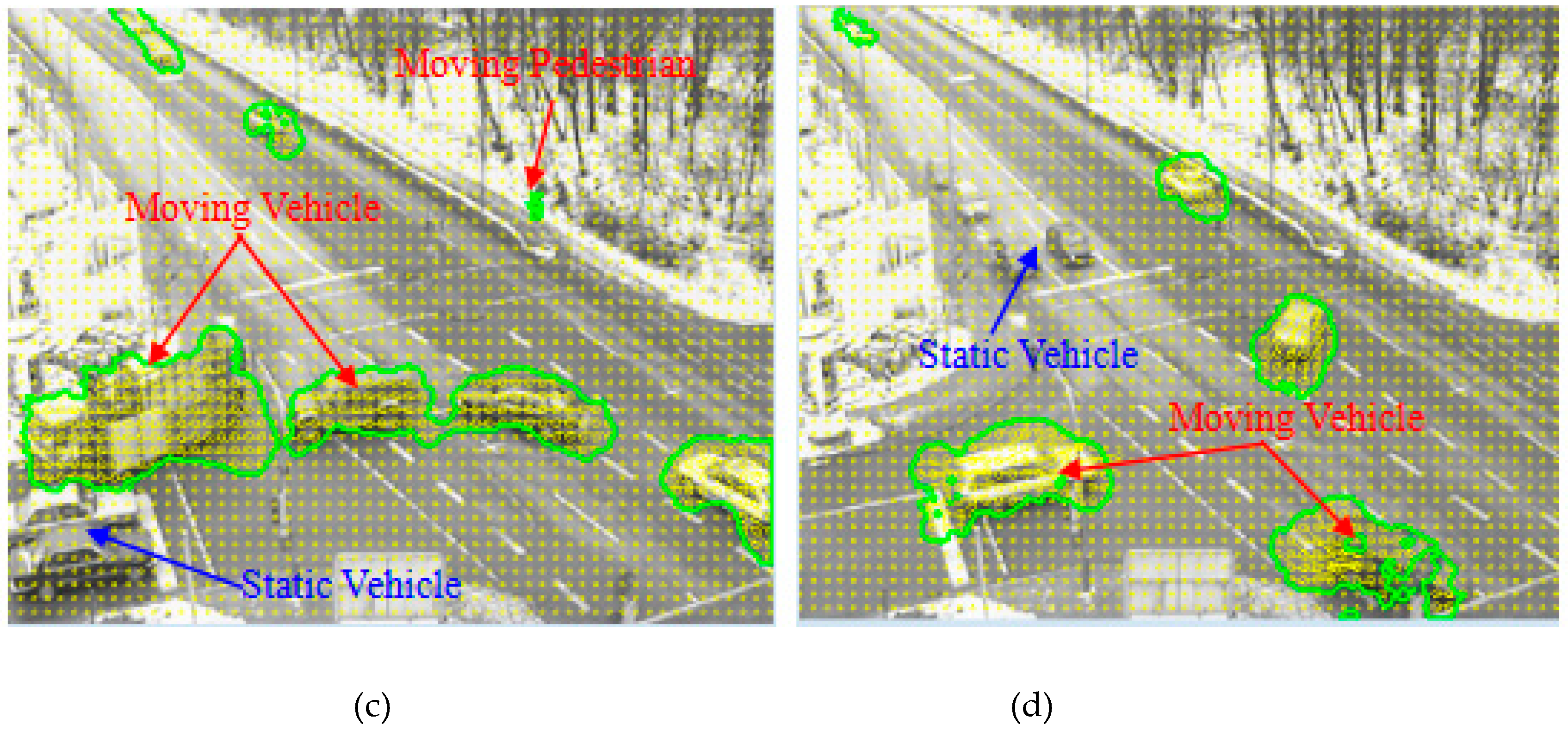

4.2. Analysis and Discussion of Experiment 2 on Multi-Vehicle Motion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Optical flow algorithm | HS optical flow | LK optical flow | FDRIG optical flow |
|---|---|---|---|
| Time of optical flow field (s) | 4 | 1 | 0.5 |

| Optical flow algorithm | AAE | SD |
|---|---|---|
| HS optical flow algorithm | 10.58° | 16.20° |
| LK optical flow algorithm | 7.19° | 11.23° |
| Weickert algorithm | 6.15° | 8.86° |
| FDRIG optical flow algorithm | 2.26° | 5.31° |
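
The AAE and SD columns above are not defined in this section. As a minimal sketch, assuming AAE is the average angular error commonly used to evaluate optical flow (the angle between the space-time vectors (u, v, 1) of the estimated and reference flow) and SD is the standard deviation of those per-pixel angles, the two figures could be computed as follows. The function names and the toy flow vectors are hypothetical and are not taken from the paper.

```python
import math
from statistics import mean, pstdev


def angular_error_deg(est, ref):
    """Angle in degrees between the vectors (u, v, 1) of two flow estimates."""
    ue, ve = est
    ur, vr = ref
    dot = ue * ur + ve * vr + 1.0
    norm = math.sqrt(ue * ue + ve * ve + 1.0) * math.sqrt(ur * ur + vr * vr + 1.0)
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    cos_angle = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_angle))


def aae_and_sd(est_flow, ref_flow):
    """Return (mean, population standard deviation) of the angular errors."""
    errors = [angular_error_deg(e, r) for e, r in zip(est_flow, ref_flow)]
    return mean(errors), pstdev(errors)


# Hypothetical toy data: three pixels, each with a (u, v) flow vector.
est_flow = [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
ref_flow = [(1.0, 0.0), (0.0, 1.0), (1.0, 0.0)]
aae, sd = aae_and_sd(est_flow, ref_flow)
print(f"AAE = {aae:.2f} deg, SD = {sd:.2f} deg")
```

Under this assumed definition, a lower AAE means the estimated flow directions and magnitudes agree more closely with the reference field, and a lower SD means the errors are spread more evenly across pixels.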

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Gong, L.; Wang, C. Research on Moving Target Tracking Based on FDRIG Optical Flow. Symmetry 2019, 11, 1122. https://doi.org/10.3390/sym11091122