An Empirical Analysis of Test Input Generation Tools for Android Apps through a Sequence of Events

Abstract

1. Introduction

- An extensive comparative study of six Android test input generation tools, performed on 50 Android apps.

- An analysis of the strengths and weaknesses of the six Android test input generation tools.

2. GUI of Android Apps

3. Android Test Input Generation Tools

3.1. Features of Android Test Input Generation Tools

3.1.1. Approach

3.1.2. Exploration Strategy

3.1.3. Events

3.1.4. Methods of System Events Identification

3.1.5. Crash Report

3.1.6. Replay Scripts

3.1.7. Testing Environment

3.1.8. Basis

3.1.9. Code Availability

3.2. Black Box Android Test Input Generation Tools

3.3. Grey Box Android Test Input Generation Tools

4. Case Study Design

4.1. Case Study Objectives

4.2. Case Study Criteria

4.3. Subjects Selection

- (1) The app’s number of activities: the apps were divided into three groups: small (fewer than five activities), medium (five to nine activities), and large (ten or more activities). In total, 27 apps were selected for the small group, 17 for the medium group, and six for the large group. The number of activities of each app was determined from its Android manifest file.

- (2) User permissions required: to evaluate how the tools react to different system events, only apps that require at least two of the following user permissions were selected: access to the device’s contacts, call logs, Bluetooth, Wi-Fi, location, and camera. The permissions of each app were determined either by checking its manifest file or by launching the app for the first time and viewing the permission request(s) that popped up.

- (3) Version: only apps compatible with Android version 1.5 and higher were selected.
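Criteria (1) and (2) both rely on information declared in an app’s manifest. The sketch below shows one way such a check could be scripted; it is not the authors’ tooling. It assumes the manifest is available as plain-text XML (for example from the app’s source tree, or decoded from an APK with a tool such as apktool), because the manifest packaged inside an APK is stored in a binary format. The mapping from the six permission groups to concrete `android.permission.*` strings in `PERMISSION_GROUPS`, the function names, and the sample manifest are illustrative assumptions.

```python
import xml.etree.ElementTree as ET
from typing import Set, Tuple

# ElementTree stores attributes written as android:name under this namespaced key.
ANDROID_NS = "{http://schemas.android.com/apk/res/android}"

# Assumed mapping from the permission groups named in criterion (2) to concrete
# Android permission strings (illustrative, not the authors' list).
PERMISSION_GROUPS = {
    "contacts": {"android.permission.READ_CONTACTS", "android.permission.WRITE_CONTACTS"},
    "call logs": {"android.permission.READ_CALL_LOG", "android.permission.WRITE_CALL_LOG"},
    "bluetooth": {"android.permission.BLUETOOTH", "android.permission.BLUETOOTH_ADMIN"},
    "wi-fi": {"android.permission.ACCESS_WIFI_STATE", "android.permission.CHANGE_WIFI_STATE"},
    "location": {"android.permission.ACCESS_FINE_LOCATION",
                 "android.permission.ACCESS_COARSE_LOCATION"},
    "camera": {"android.permission.CAMERA"},
}


def inspect_manifest(xml_text: str) -> Tuple[int, Set[str]]:
    """Return (number of <activity> elements, permission groups requested)."""
    root = ET.fromstring(xml_text)
    # Count declared <activity> elements; <activity-alias> entries are not counted.
    n_activities = sum(1 for _ in root.iter("activity"))
    requested = {p.get(ANDROID_NS + "name") for p in root.iter("uses-permission")}
    groups = {g for g, perms in PERMISSION_GROUPS.items() if requested & perms}
    return n_activities, groups


def meets_permission_criterion(xml_text: str, min_groups: int = 2) -> bool:
    """Criterion (2): the app requests permissions from at least `min_groups` groups."""
    _, groups = inspect_manifest(xml_text)
    return len(groups) >= min_groups


# Hypothetical manifest used only to exercise the functions above.
SAMPLE_MANIFEST = """<manifest xmlns:android="http://schemas.android.com/apk/res/android"
    package="org.example.demo">
  <uses-permission android:name="android.permission.CAMERA"/>
  <uses-permission android:name="android.permission.ACCESS_FINE_LOCATION"/>
  <uses-permission android:name="android.permission.INTERNET"/>
  <application>
    <activity android:name=".MainActivity"/>
    <activity android:name=".SettingsActivity"/>
  </application>
</manifest>"""

if __name__ == "__main__":
    print(inspect_manifest(SAMPLE_MANIFEST))
    print(meets_permission_criterion(SAMPLE_MANIFEST))
```

For the sample manifest, two activities are found and the camera and location groups are detected, so the app would pass criterion (2); `INTERNET` is ignored because it belongs to none of the six groups.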

5. Case Study Execution

6. Case Study Results

7. Discussion and Future Research Directions

7.1. Events Sequence Redundancy

7.2. Events Sequence Length

7.3. Crash Diagnosis

7.4. Reproducible Test Cases

7.5. System Events

7.6. Ease of Use

7.7. Access Control

7.8. Fragmentation

8. Threats to Validity

9. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| No | Tool | Approach | Exploration Strategy | Events | Method of System Events Identification | Crash Report | Replay Scripts | Testing Environment | Basis | Code Availability |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Humanoid | Black box | Deep Q Network | User, System | Dynamic Analysis (dumpsys) | Log | Yes | Real Device, Emulator | DroidBot | Yes |
| 2 | AndroFrame | Black box | Q-Learning-Based | User, System | Dynamic Analysis (App’s Permission) | - | Yes | Real Device, Emulator | - | No |
| 3 | DroidBot | Black box | Model-based | User, System | Dynamic Analysis (dumpsys) | Log | Yes | Real Device, Emulator | - | Yes |
| 4 | SmartMonkey | Black box | Random-based | User, System | Dynamic Analysis (App’s Permission) | - | - | - | Monkey | No |
| 5 | Stoat | Grey box | Model-based | User, System | Static Analysis | Text | Yes | Real Device, Emulator | A3E | Yes |
| 6 | Sapienz | Grey box | Search-based/Random | User, System | Static Analysis | Text | Yes | Emulator | Monkey | Yes |
| 7 | CrashScope | Grey box | Systematic | User, System | Dynamic Analysis (App’s Permission) | Text, Image | Yes | Real Device, Emulator | - | No |
| 8 | Dynodroid | Black box | Guided/Random | User, System | Dynamic Analysis (App’s Permission) | Log | No | Emulator | Monkey | Yes |
| 9 | ExtendedRipper | Black box | Model-based | User, System | Dynamic Analysis (Events Pattern) | Log | No | Emulator | GUI Ripper | No |
| 10 | A3E-Targeted | Grey box | Systematic | User, System | Static Analysis | - | No | Real Device, Emulator | Troyd | No |
| 11 | Monkey | Black box | Random-based | User, System | Dynamic Analysis (dumpsys) | Log | No | Real Device, Emulator | - | Yes |
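The table lists Monkey as a random-based black-box tool without replay scripts. Monkey’s documented `-s <seed>` option still gives a limited form of reproducibility: re-running Monkey with the same seed generates the same pseudo-random event sequence, although the app’s own behaviour may still differ between runs. The sketch below only assembles an `adb shell monkey` command line from Monkey’s documented options (`-p`, `-s`, `--throttle`, `-v`, followed by the event count); the helper names, the package chosen and the numeric values are illustrative assumptions, not the configuration used in this study.

```python
import shlex
import subprocess
from typing import List, Optional


def monkey_command(package: str, event_count: int, seed: Optional[int] = None,
                   throttle_ms: int = 0, device_serial: Optional[str] = None) -> List[str]:
    """Build an `adb shell monkey` invocation restricted to one package."""
    cmd = ["adb"]
    if device_serial is not None:
        cmd += ["-s", device_serial]           # adb-level -s: choose the target device
    cmd += ["shell", "monkey", "-p", package]  # -p: only send events to this package
    if seed is not None:
        cmd += ["-s", str(seed)]               # Monkey-level -s: pseudo-random seed
    if throttle_ms > 0:
        cmd += ["--throttle", str(throttle_ms)]  # delay between events, in ms
    cmd += ["-v", str(event_count)]            # -v: verbose log; last arg: event count
    return cmd


def run_monkey(cmd: List[str]) -> str:
    """Run the command against a connected device or emulator; return Monkey's log."""
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return result.stdout


if __name__ == "__main__":
    cmd = monkey_command("aarddict.android", 1000, seed=42, throttle_ms=100)
    print(shlex.join(cmd))
```

Running the script prints `adb shell monkey -p aarddict.android -s 42 --throttle 100 -v 1000`; passing the resulting list to `run_monkey` requires `adb` on the path and a connected device or emulator. Keeping the seed alongside each crash log is what makes a Monkey run replayable.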

| No | App Name | Package Name | Version | Category | Activities | Methods | LOC |
|---|---|---|---|---|---|---|---|
| 1 | Aard | aarddict.android | 1.5 | Books | 6 | 438 | 65,511 |
| 2 | Open Document | at.tomtasche.reader | 1.6 | Books | 3 | 446 | 13,796 |
| 3 | Bubble | com.nkanaev.comics | 4.1 | Books | 2 | 463 | 58,397 |
| 4 | Book Catalogue | com.eleybourn.bookcatalogue | 2.1 | Books | 21 | 1548 | 11,264 |
| 5 | Klaxon | org.nerdcircus.android.klaxon | 1.6 | Communication | 6 | 162 | 5733 |
| 6 | Sanity | cri.sanity | 2 | Communication | 28 | 1398 | 44,215 |
| 7 | WLAN Scanner | org.bitbatzen.wlanscanner | 4 | Communication | 1 | 141 | 5441 |
| 8 | Contact Owner | com.appengine.paranoid_android.lost | 1.5 | Communication | 2 | 79 | 2502 |
| 9 | Divide | com.khurana.apps.divideandconquer | 2.1 | Education | 2 | 195 | 25,284 |
| 10 | Raele.concurseiro | raele.concurseiro | 3 | Education | 2 | 92 | 1309 |
| 11 | LolcatBuilder | com.android.lolcat | 2.3 | Entertainment | 1 | 79 | 578 |
| 12 | MunchLife | info.bpace.munchlife | 2.3 | Entertainment | 2 | 39 | 163 |
| 13 | Currency | org.billthefarmer.currency | 4 | Finance | 5 | 148 | 5202 |
| 14 | Mileage | com.evancharlton.mileage | 1.6 | Finance | 50 | 2486 | 92,548 |
| 15 | TimeSheet | com.tastycactus.timesheet | 2.1 | Finance | 6 | 198 | 7126 |
| 16 | Boogdroid | me.johnmh.boogdroid | 4 | Game | 3 | 398 | 3726 |
| 17 | Hot Death | com.smorgasbork.hotdeath | 2.1 | Game | 3 | 365 | 28,104 |
| 18 | Resdicegame | com.ridgelineapps.resdicegame | 1.5 | Game | 4 | 144 | 2506 |
| 19 | DroidWeight | de.delusions.measure | 2.1 | Health and Fitness | 8 | 411 | 13,215 |
| 20 | OSM Tracker | me.guillaumin.android.osmtracker | 1.6 | Health and Fitness | 8 | 346 | 49,335 |
| 21 | Pedometer | name.bagi.levente.pedometer | 1.6 | Health and Fitness | 2 | 244 | 6695 |
| 22 | Pushup Buddy | org.example.pushupbuddy | 1.6 | Health and Fitness | 7 | 165 | 4602 |
| 23 | Mirrored | de.homac.Mirrored | 2.3 | Magazines | 4 | 219 | 825 |
| 24 | A2DP Volume | a2dp.Vol | 2.3 | Navigation | 8 | 641 | 23,294 |
| 25 | Car cast | com.jadn.cc | 1.5 | Music and Audio | 12 | 459 | 18,127 |
| 26 | Ethersynth | net.sf.ethersynth | 2.1 | Music and Audio | 8 | 168 | 1208 |
| 27 | Jamendo | com.teleca.jamendo | 1.6 | Music and Audio | 13 | 1046 | 30,444 |
| 28 | Adsdroid | hu.vsza.adsdroid | 2.3 | Productivity | 2 | 1215 | 5080 |
| 29 | Maniana | com.zapta.apps.maniana | 2.2 | Productivity | 4 | 891 | 28,526 |
| 30 | Tomdroid | org.tomdroid | 1.6 | Productivity | 8 | 840 | 29,147 |
| 31 | Talalarmo | trikita.talalarmo | 4 | Productivity | 3 | 387 | 1350 |
| 32 | Unit | info.staticfree.android.units | 1.6 | Productivity | 3 | 547 | 22,993 |
| 33 | Alarm Clock | com.angrydoughnuts.android.alarmclock | 2.7 | Productivity | 5 | 676 | 2453 |
| 34 | World Clock | ch.corten.aha.worldclock | 2.3 | Productivity | 4 | 315 | 1156 |
| 35 | Blockinger | org.blockinger.game | 2.3 | Puzzle | 6 | 356 | 2000 |
| 36 | OpenSudoku | cz.romario.opensudoku | 1.5 | Puzzle | 10 | 444 | 24,601 |
| 37 | Applications info | com.majeur.applicationsinfo | 4.1 | Tools | 3 | 323 | 3614 |
| 38 | Dew Point | de.hoffmannsgimmickstaupunkt | 2.1 | Tools | 3 | 75 | 4791 |
| 39 | drhoffmann | de.drhoffmannsoftware | 1.6 | Tools | 9 | 164 | 896 |
| 40 | FindMyphone | se.erikofsweden.findmyphone | 1.6 | Tools | 1 | 2969 | 4056 |
| 41 | List my Apps | de.onyxbits.listmyapps | 2.3 | Tools | 4 | 96 | 4262 |
| 42 | Sensors2Pd | org.sensors2.pd | 2.3 | Tools | 4 | 621 | 16,625 |
| 43 | Terminal Emulator | jackpal.androidterm | 1.6 | Tools | 8 | 994 | 24,930 |
| 44 | Timeriffic | com.alfray.timeriffic | 1.5 | Tools | 7 | 709 | 28,956 |
| 45 | Addi | com.addi | 1.1 | Tools | 4 | 2133 | 133,448 |
| 46 | Alogcat | org.jtb.alogcat | 2.3 | Tools | 2 | 199 | 846 |
| 47 | Android Token | uk.co.bitethebullet.android.token | 2.2 | Tools | 6 | 288 | 3674 |
| 48 | Battery Circle | ch.blinkenlights.battery | 1.5 | Tools | 1 | 81 | 251 |
| 49 | Sensor readout | de.onyxbits.sensorreadout | 2.3 | Tools | 3 | 683 | 6596 |
| 50 | Weather | ru.gelin.android.weather.notification | 2.3 | Weather | 7 | 695 | 19,837 |
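As a consistency check on selection criterion (1) in Section 4.3, the sketch below assigns each app in the table above to a size group from its number of activities. With the boundaries small (fewer than 5), medium (5 to 9) and large (10 or more), the counts come out as 27, 17 and 6, matching the group sizes reported in Section 4.3; OpenSudoku, with exactly ten activities, falls into the large group. The thresholds are inferred from these counts, and the helper names are illustrative.

```python
from collections import Counter
from typing import Dict, List, Tuple

# (app, number of activities), copied in order from the subject table above.
APPS: List[Tuple[str, int]] = [
    ("Aard", 6), ("Open Document", 3), ("Bubble", 2), ("Book Catalogue", 21),
    ("Klaxon", 6), ("Sanity", 28), ("WLAN Scanner", 1), ("Contact Owner", 2),
    ("Divide", 2), ("Raele.concurseiro", 2), ("LolcatBuilder", 1), ("MunchLife", 2),
    ("Currency", 5), ("Mileage", 50), ("TimeSheet", 6), ("Boogdroid", 3),
    ("Hot Death", 3), ("Resdicegame", 4), ("DroidWeight", 8), ("OSM Tracker", 8),
    ("Pedometer", 2), ("Pushup Buddy", 7), ("Mirrored", 4), ("A2DP Volume", 8),
    ("Car cast", 12), ("Ethersynth", 8), ("Jamendo", 13), ("Adsdroid", 2),
    ("Maniana", 4), ("Tomdroid", 8), ("Talalarmo", 3), ("Unit", 3),
    ("Alarm Clock", 5), ("World Clock", 4), ("Blockinger", 6), ("OpenSudoku", 10),
    ("Applications info", 3), ("Dew Point", 3), ("drhoffmann", 9), ("FindMyphone", 1),
    ("List my Apps", 4), ("Sensors2Pd", 4), ("Terminal Emulator", 8), ("Timeriffic", 7),
    ("Addi", 4), ("Alogcat", 2), ("Android Token", 6), ("Battery Circle", 1),
    ("Sensor readout", 3), ("Weather", 7),
]


def size_group(n_activities: int) -> str:
    """Map an activity count to a size group (thresholds inferred from 27/17/6)."""
    if n_activities < 5:
        return "small"
    if n_activities < 10:
        return "medium"
    return "large"


def group_counts(apps: List[Tuple[str, int]]) -> Dict[str, int]:
    """Count how many apps fall into each size group."""
    return dict(Counter(size_group(n) for _, n in apps))


if __name__ == "__main__":
    print(group_counts(APPS))
```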

MC = method coverage (%), AC = activity coverage (%), UC = number of unique crashes; Sa = Sapienz, St = Stoat, Dr = DroidBot, Hu = Humanoid, M = Monkey, Dy = Dynodroid.

| App Name | MC Sa | MC St | MC Dr | MC Hu | MC M | MC Dy | AC Sa | AC St | AC Dr | AC Hu | AC M | AC Dy | UC Sa | UC St | UC Dr | UC Hu | UC M | UC Dy |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| A2DP Volume | 53.6 | 55.8 | 49.7 | 60.3 | 39.3 | 0.0 | 100 | 100 | 100 | 100 | 71 | 0 | 1 | 0 | 2 | 2 | 0 | 0 |
| Aard | 17.4 | 16.4 | 16.4 | 16.2 | 17.4 | 17.4 | 33 | 33 | 33 | 33 | 33 | 33 | 1 | 0 | 1 | 1 | 0 | 0 |
| Addi | 10.1 | 9.5 | 9.6 | 9.6 | 4.6 | 4.7 | 50 | 50 | 50 | 50 | 50 | 25 | 1 | 1 | 1 | 1 | 1 | 1 |
| Adsdroid | 56.0 | 56.0 | 56.0 | 56.3 | 56.1 | 56.0 | 100 | 100 | 100 | 100 | 100 | 100 | 1 | 1 | 0 | 0 | 1 | 0 |
| Alarm Clock | 24.6 | 64.5 | 43.9 | 62.7 | 24.9 | 17.9 | 60 | 60 | 60 | 60 | 20 | 40 | 1 | 0 | 2 | 2 | 0 | 0 |
| Alogcat | 72.9 | 75.9 | 52.8 | 72.9 | 67.3 | 46.2 | 100 | 100 | 100 | 100 | 100 | 100 | 0 | 0 | 0 | 0 | 0 | 0 |
| Android Token | 54.5 | 57.6 | 54.5 | 49.5 | 51.4 | 50.0 | 67 | 67 | 50 | 67 | 50 | 50 | 0 | 0 | 0 | 0 | 0 | 0 |
| Applications info | 64.5 | 64.3 | 64.4 | 64.7 | 44.3 | 44.3 | 100 | 100 | 100 | 100 | 67 | 67 | 0 | 0 | 0 | 0 | 0 | 0 |
| Battery Circle | 81.5 | 81.5 | 81.5 | 84.0 | 79.0 | 79.0 | 100 | 100 | 100 | 100 | 100 | 100 | 0 | 0 | 0 | 0 | 0 | 0 |
| Blockinger game | 77.5 | 81.7 | 81.5 | 80.1 | 16.6 | 11.2 | 100 | 100 | 100 | 100 | 67 | 50 | 0 | 0 | 0 | 0 | 0 | 0 |
| Boogdroid | 13.0 | 10.1 | 13.0 | 16.7 | 15.6 | 15.3 | 100 | 33 | 67 | 67 | 56 | 33 | 1 | 0 | 1 | 1 | 1 | 1 |
| Book Catalogue | 31.7 | 4.0 | 33.0 | 32.9 | 43.4 | 32.7 | 41 | 5 | 48 | 38 | 43 | 29 | 1 | 1 | 1 | 1 | 1 | 1 |
| Bubble | 54.9 | 55.9 | 36.9 | 30.5 | 67.6 | 0.0 | 100 | 100 | 100 | 50 | 100 | 0 | 2 | 2 | 0 | 0 | 1 | 0 |
| Car cast | 44.9 | 46.2 | 41.6 | 43.2 | 34.0 | 29.8 | 75 | 67 | 67 | 67 | 47 | 72 | 2 | 3 | 1 | 1 | 2 | 1 |
| Contact Owner | 54.4 | 54.4 | 57.0 | 57.0 | 51.1 | 51.9 | 50 | 50 | 50 | 50 | 50 | 50 | 1 | 1 | 1 | 1 | 1 | 1 |
| Currency | 58.8 | 64.0 | 40.3 | 56.8 | 42.6 | 40.5 | 100 | 100 | 80 | 100 | 100 | 60 | 1 | 1 | 0 | 0 | 0 | 0 |
| Dew Point | 75.6 | 73.3 | 76.4 | 77.3 | 58.7 | 58.7 | 100 | 100 | 100 | 100 | 67 | 67 | 2 | 1 | 1 | 1 | 0 | 1 |
| Divide | 52.8 | 47.2 | 52.8 | 52.8 | 74.4 | 46.2 | 100 | 100 | 100 | 100 | 100 | 100 | 0 | 0 | 0 | 0 | 0 | 0 |
| DroidWeight | 74.1 | 62.9 | 69.8 | 70.6 | 72.7 | 72.7 | 50 | 38 | 67 | 75 | 58 | 38 | 1 | 0 | 0 | 0 | 0 | 0 |
| drhoffmann | 55.9 | 59.8 | 51.2 | 59.1 | 43.3 | 36.6 | 85 | 100 | 93 | 93 | 93 | 78 | 2 | 2 | 1 | 2 | 2 | 1 |
| Ethersynth | 66.7 | 65.1 | 50.0 | 57.7 | 66.7 | 64.3 | 100 | 100 | 100 | 100 | 88 | 63 | 1 | 1 | 0 | 0 | 0 | 0 |
| FindMyphone | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | 100 | 100 | 100 | 100 | 100 | 100 | 0 | 0 | 0 | 0 | 0 | 0 |
| Hot Death | 74.6 | 59.4 | 65.8 | 70.4 | 76.8 | 55.6 | 100 | 100 | 100 | 100 | 100 | 100 | 0 | 1 | 0 | 0 | 0 | 0 |
| Jamendo | 53.1 | 34.7 | 43.9 | 43.9 | 24.4 | 0.0 | 62 | 31 | 38 | 38 | 46 | 0 | 2 | 1 | 1 | 1 | 0 | 0 |
| Klaxon | 44.4 | 38.3 | 36.6 | 35.8 | 39.9 | 41.4 | 83 | 83 | 83 | 83 | 83 | 78 | 0 | 0 | 0 | 0 | 0 | 0 |
| List my Apps | 76.4 | 75.3 | 46.9 | 72.9 | 72.9 | 72.9 | 50 | 100 | 25 | 100 | 100 | 25 | 0 | 0 | 0 | 0 | 0 | 0 |
| LolcatBuilder | 32.9 | 27.8 | 32.9 | 32.9 | 25.3 | 25.3 | 100 | 100 | 100 | 100 | 100 | 100 | 0 | 0 | 1 | 1 | 1 | 0 |
| Maniana | 75.4 | 58.4 | 54.1 | 54.0 | 72.8 | 66.9 | 75 | 75 | 75 | 75 | 75 | 50 | 0 | 0 | 1 | 1 | 0 | 0 |
| Mileage | 30.7 | 16.0 | 25.6 | 25.5 | 25.0 | 27.6 | 44 | 25 | 38 | 40 | 22 | 27 | 1 | 1 | 1 | 1 | 1 | 1 |
| Mirrored | 33.3 | 39.7 | 47.0 | 47.0 | 32.4 | 30.1 | 75 | 75 | 75 | 75 | 50 | 50 | 1 | 0 | 0 | 0 | 0 | 0 |
| MunchLife | 66.7 | 66.7 | 66.7 | 66.7 | 59.0 | 59.0 | 100 | 100 | 100 | 100 | 100 | 100 | 0 | 0 | 0 | 0 | 0 | 0 |
| Open Document | 54.4 | 42.2 | 45.3 | 45.3 | 70.6 | 36.8 | 33 | 33 | 33 | 33 | 33 | 33 | 0 | 0 | 0 | 0 | 0 | 0 |
| OpenSudoku | 62.5 | 54.5 | 40.3 | 40.3 | 42.2 | 38.1 | 50 | 30 | 30 | 30 | 50 | 50 | 1 | 1 | 1 | 1 | 1 | 1 |
| OSM Tracker | 44.2 | 62.9 | 48.1 | 48.3 | 50.6 | 43.1 | 75 | 71 | 83 | 88 | 92 | 50 | 1 | 2 | 1 | 1 | 1 | 1 |
| Pedometer | 70.1 | 69.7 | 61.5 | 61.5 | 83.2 | 70.4 | 100 | 100 | 50 | 100 | 100 | 50 | 1 | 2 | 0 | 0 | 1 | 0 |
| Pushup Buddy | 48.5 | 48.9 | 48.9 | 56.4 | 44.2 | 43.0 | 57 | 57 | 43 | 71 | 57 | 43 | 0 | 0 | 0 | 0 | 0 | 0 |
| Raele.concurseiro | 41.7 | 42.1 | 41.7 | 41.7 | 41.7 | 41.7 | 100 | 100 | 100 | 100 | 100 | 100 | 0 | 0 | 0 | 0 | 0 | 0 |
| Resdicegame | 62.5 | 48.6 | 62.5 | 53.5 | 47.9 | 47.9 | 100 | 100 | 100 | 100 | 100 | 100 | 0 | 0 | 0 | 0 | 0 | 0 |
| Sanity | 28.4 | 18.6 | 24.9 | 24.3 | 30.4 | 14.2 | 48 | 29 | 48 | 46 | 61 | 21 | 1 | 1 | 0 | 0 | 0 | 0 |
| Sensor readout | 30.2 | 30.0 | 30.0 | 30.0 | 27.8 | 30.0 | 100 | 100 | 67 | 67 | 67 | 67 | 1 | 0 | 0 | 0 | 0 | 1 |
| Sensors2Pd | 17.1 | 20.0 | 20.5 | 21.7 | 20.5 | 20.5 | 100 | 100 | 100 | 100 | 100 | 100 | 2 | 1 | 1 | 1 | 1 | 0 |
| Talalarmo | 88.1 | 88.1 | 82.2 | 88.9 | 90.4 | 71.9 | 100 | 100 | 100 | 100 | 100 | 100 | 0 | 0 | 0 | 0 | 0 | 0 |
| Terminal Emulator | 55.2 | 44.2 | 51.3 | 51.7 | 52.3 | 49.5 | 38 | 38 | 38 | 38 | 38 | 25 | 1 | 1 | 0 | 0 | 0 | 0 |
| Timeriffic | 63.8 | 50.5 | 57.1 | 57.1 | 59.8 | 54.9 | 86 | 52 | 57 | 57 | 71 | 29 | 0 | 0 | 0 | 0 | 0 | 0 |
| TimeSheet | 59.4 | 33.5 | 27.8 | 27.8 | 20.7 | 20.2 | 100 | 67 | 50 | 50 | 50 | 56 | 0 | 0 | 0 | 0 | 0 | 0 |
| Tomdroid | 36.1 | 51.9 | 37.5 | 37.0 | 40.8 | 0.0 | 63 | 63 | 75 | 75 | 63 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Unit | 69.9 | 55.6 | 52.5 | 52.5 | 69.1 | 54.8 | 33 | 33 | 33 | 33 | 67 | 33 | 0 | 0 | 0 | 0 | 0 | 0 |
| Weather notifications | 59.4 | 50.0 | 41.7 | 37.4 | 74.2 | 67.6 | 71 | 52 | 57 | 57 | 71 | 43 | 1 | 0 | 0 | 0 | 1 | 0 |
| WLAN Scanner | 66.0 | 63.1 | 65.2 | 65.2 | 61.7 | 61.7 | 100 | 100 | 100 | 100 | 100 | 100 | 0 | 0 | 0 | 0 | 0 | 0 |
| World Clock | 43.8 | 56.8 | 29.2 | 29.2 | 22.9 | 22.9 | 100 | 100 | 75 | 75 | 50 | 50 | 0 | 0 | 0 | 0 | 0 | 0 |
| Overall | 39.8 | 35.1 | 36.1 | 36.8 | 36.9 | 28.8 | 66.3 | 55.3 | 60.7 | 62.8 | 58.1 | 42.0 | 32 | 25 | 19 | 20 | 17 | 11 |
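The Overall entries for unique crashes appear to be column totals over the 50 apps rather than averages. The sketch below checks this by summing the per-app crash counts copied from the table; apps for which no tool found a crash are omitted because they contribute zero. It reproduces 32, 25, 19, 20, 17 and 11 for Sapienz, Stoat, DroidBot, Humanoid, Monkey and Dynodroid. Only the crash columns are covered; the coverage entries of the Overall row are not recomputed here. The names `TOOLS`, `CRASHES` and `crash_totals` are illustrative.

```python
from typing import Dict, List, Tuple

# Column order of the unique-crash columns in the table above.
TOOLS = ("Sapienz", "Stoat", "DroidBot", "Humanoid", "Monkey", "Dynodroid")

# Per-app unique crashes copied from the table; apps where every tool
# reported zero crashes are omitted because they add nothing to the totals.
CRASHES: List[Tuple[str, Tuple[int, ...]]] = [
    ("A2DP Volume", (1, 0, 2, 2, 0, 0)),
    ("Aard", (1, 0, 1, 1, 0, 0)),
    ("Addi", (1, 1, 1, 1, 1, 1)),
    ("Adsdroid", (1, 1, 0, 0, 1, 0)),
    ("Alarm Clock", (1, 0, 2, 2, 0, 0)),
    ("Boogdroid", (1, 0, 1, 1, 1, 1)),
    ("Book Catalogue", (1, 1, 1, 1, 1, 1)),
    ("Bubble", (2, 2, 0, 0, 1, 0)),
    ("Car cast", (2, 3, 1, 1, 2, 1)),
    ("Contact Owner", (1, 1, 1, 1, 1, 1)),
    ("Currency", (1, 1, 0, 0, 0, 0)),
    ("Dew Point", (2, 1, 1, 1, 0, 1)),
    ("DroidWeight", (1, 0, 0, 0, 0, 0)),
    ("drhoffmann", (2, 2, 1, 2, 2, 1)),
    ("Ethersynth", (1, 1, 0, 0, 0, 0)),
    ("Hot Death", (0, 1, 0, 0, 0, 0)),
    ("Jamendo", (2, 1, 1, 1, 0, 0)),
    ("LolcatBuilder", (0, 0, 1, 1, 1, 0)),
    ("Maniana", (0, 0, 1, 1, 0, 0)),
    ("Mileage", (1, 1, 1, 1, 1, 1)),
    ("Mirrored", (1, 0, 0, 0, 0, 0)),
    ("OpenSudoku", (1, 1, 1, 1, 1, 1)),
    ("OSM Tracker", (1, 2, 1, 1, 1, 1)),
    ("Pedometer", (1, 2, 0, 0, 1, 0)),
    ("Sanity", (1, 1, 0, 0, 0, 0)),
    ("Sensor readout", (1, 0, 0, 0, 0, 1)),
    ("Sensors2Pd", (2, 1, 1, 1, 1, 0)),
    ("Terminal Emulator", (1, 1, 0, 0, 0, 0)),
    ("Weather notifications", (1, 0, 0, 0, 1, 0)),
]


def crash_totals(rows: List[Tuple[str, Tuple[int, ...]]]) -> Dict[str, int]:
    """Sum unique crashes per tool over all apps (column totals)."""
    totals = dict.fromkeys(TOOLS, 0)
    for _app, counts in rows:
        for tool, n in zip(TOOLS, counts):
            totals[tool] += n
    return totals


if __name__ == "__main__":
    for tool, total in crash_totals(CRASHES).items():
        print(f"{tool}: {total}")
```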

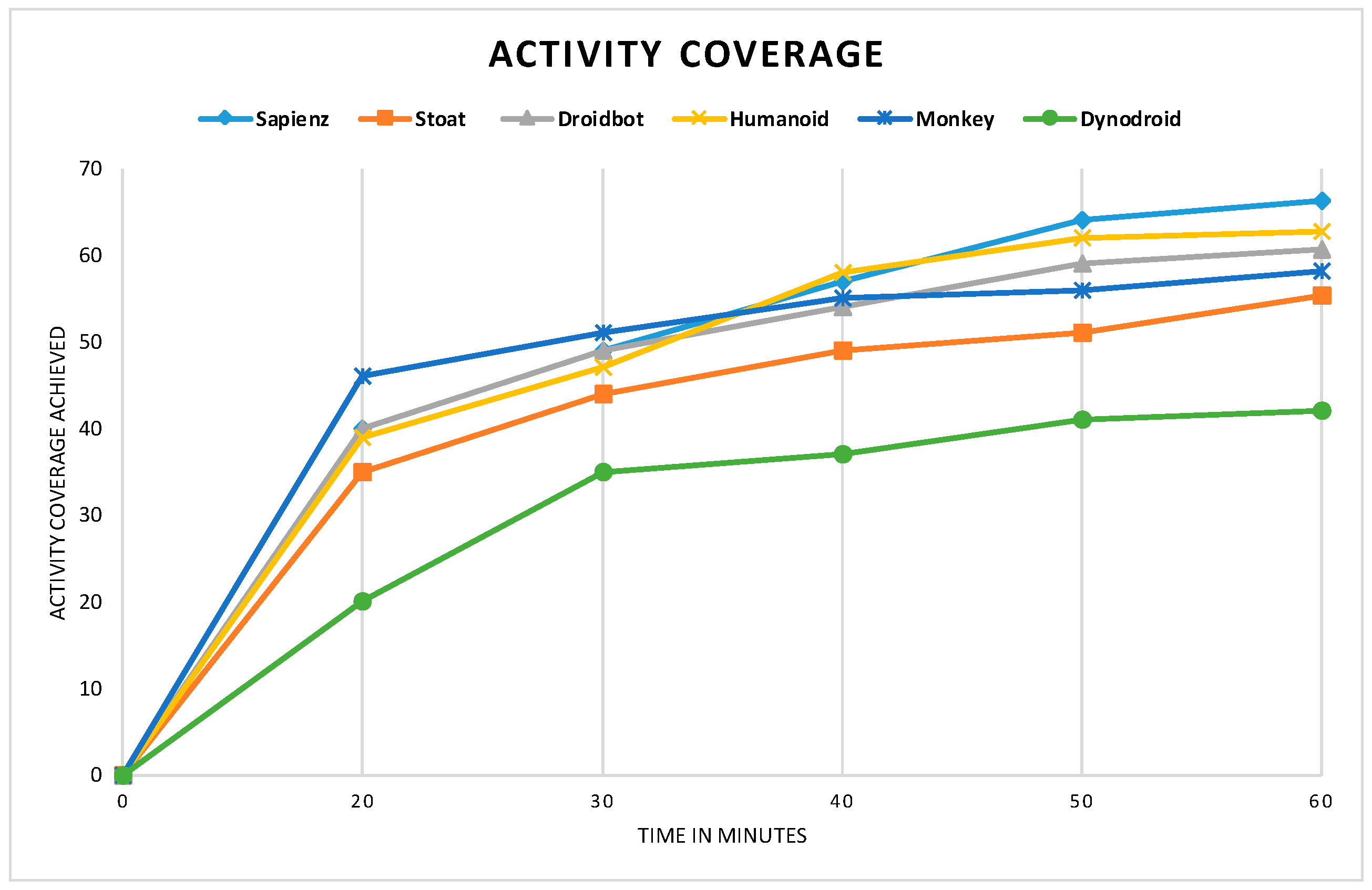

| Tools | Activity Coverage (%) | Method Coverage (%) | Number of Crashes | Max. Number of Events |
|---|---|---|---|---|
| Sapienz | 66.3 | 39.8 | 32 | 30,000 |
| Stoat | 55.3 | 35.1 | 25 | 3000 |
| DroidBot | 60.7 | 36.1 | 19 | 1000 |
| Humanoid | 62.8 | 36.8 | 20 | 1000 |
| Monkey | 58.1 | 36.9 | 17 | 20,000 |
| Dynodroid | 42.0 | 28.8 | 11 | 2000 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yasin, H.N.; Hamid, S.H.A.; Yusof, R.J.R.; Hamzah, M. An Empirical Analysis of Test Input Generation Tools for Android Apps through a Sequence of Events. Symmetry 2020, 12, 1894. https://doi.org/10.3390/sym12111894

Yasin HN, Hamid SHA, Yusof RJR, Hamzah M. An Empirical Analysis of Test Input Generation Tools for Android Apps through a Sequence of Events. Symmetry. 2020; 12(11):1894. https://doi.org/10.3390/sym12111894

Chicago/Turabian Style: Yasin, Husam N., Siti Hafizah Ab Hamid, Raja Jamilah Raja Yusof, and Muzaffar Hamzah. 2020. "An Empirical Analysis of Test Input Generation Tools for Android Apps through a Sequence of Events" Symmetry 12, no. 11: 1894. https://doi.org/10.3390/sym12111894

APA Style: Yasin, H. N., Hamid, S. H. A., Yusof, R. J. R., & Hamzah, M. (2020). An Empirical Analysis of Test Input Generation Tools for Android Apps through a Sequence of Events. Symmetry, 12(11), 1894. https://doi.org/10.3390/sym12111894