Efficient Chaotic Imperialist Competitive Algorithm with Dropout Strategy for Global Optimization

Abstract

1. Introduction

2. Literature Review

2.1. Imperialist Competitive Algorithm (ICA)

2.2. Chaotic Imperialist Competitive Algorithm (CICA)

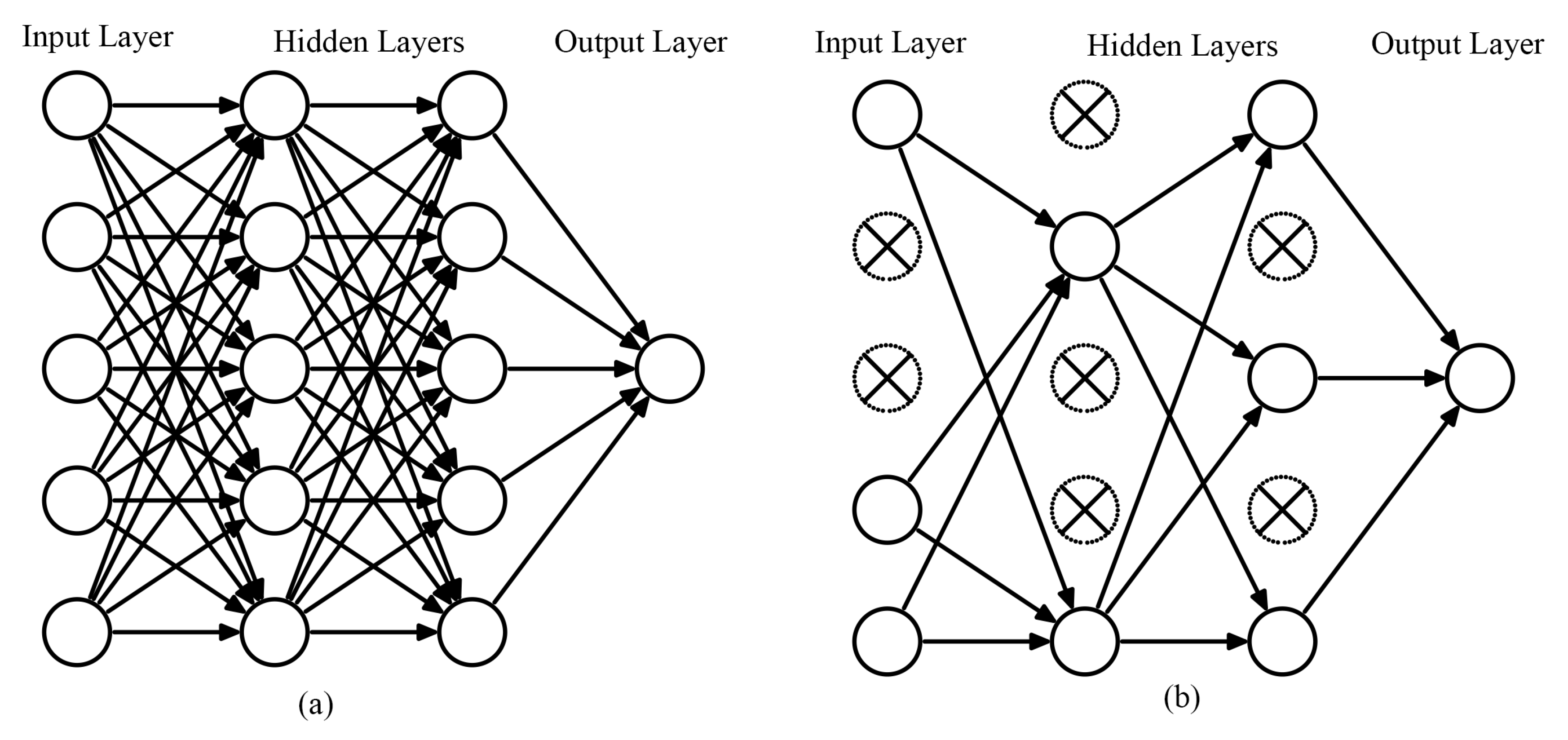

2.3. Dropout

3. Chaotic Imperialist Competitive Algorithm with Dropout (CICA-D)

Algorithm 1 CICA-D

4. Numerical Examples

4.1. Success Rate

4.2. Statistical Results

4.3. Computational Complexity

5. Application

5.1. Objective Function

5.2. Experimental Results

- The optimal solution passes through the obstacles.

- The optimal solution is worse than the median of all trials.

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Atashpaz-Gargari, E.; Lucas, C. Imperialist competitive algorithm: An algorithm for optimization inspired by imperialistic competition. In Proceedings of the 2007 IEEE Congress on Evolutionary Computation, Singapore, 25–28 September 2007; IEEE: Piscataway, NJ, USA, 2007; pp. 4661–4667.

- Cattani, M.; Caldas, I.L.; Souza, S.L.d.; Iarosz, K.C. Deterministic chaos theory: Basic concepts. Rev. Bras. de Ensino de Física 2017, 39.

- Rosso, O.; Larrondo, H.; Martin, M.; Plastino, A.; Fuentes, M. Distinguishing noise from chaos. Phys. Rev. Lett. 2007, 99, 154102.

- Chen, Y.; Li, L.; Xiao, J.; Yang, Y.; Liang, J.; Li, T. Particle swarm optimizer with crossover operation. Eng. Appl. Artif. Intell. 2018, 70, 159–169.

- Aliniya, Z.; Keyvanpour, M.R. CB-ICA: A crossover-based imperialist competitive algorithm for large-scale problems and engineering design optimization. Neural Comput. Appl. 2019, 31, 7549–7570.

- Xu, S.; Wang, Y.; Lu, P. Improved imperialist competitive algorithm with mutation operator for continuous optimization problems. Neural Comput. Appl. 2017, 28, 1667–1682.

- Ma, Z.; Yuan, X.; Han, S.; Sun, D.; Ma, Y. Improved Chaotic Particle Swarm Optimization Algorithm with More Symmetric Distribution for Numerical Function Optimization. Symmetry 2019, 11, 876.

- Alatas, B.; Akin, E.; Ozer, A.B. Chaos embedded particle swarm optimization algorithms. Chaos Solitons Fractals 2009, 40, 1715–1734.

- Gandomi, A.H.; Yang, X.S.; Talatahari, S.; Alavi, A.H. Firefly algorithm with chaos. Commun. Nonlinear Sci. Numer. Simul. 2013, 18, 89–98.

- Wang, G.G.; Deb, S.; Gandomi, A.H.; Zhang, Z.; Alavi, A.H. Chaotic cuckoo search. Soft Comput. 2016, 20, 3349–3362.

- Zhao, H.; Gao, W.; Deng, W.; Sun, M. Study on an Adaptive Co-Evolutionary ACO Algorithm for Complex Optimization Problems. Symmetry 2018, 10, 104.

- Talatahari, S.; Azar, B.F.; Sheikholeslami, R.; Gandomi, A. Imperialist competitive algorithm combined with chaos for global optimization. Commun. Nonlinear Sci. Numer. Simul. 2012, 17, 1312–1319.

- Fiori, S.; Di Filippo, R. An improved chaotic optimization algorithm applied to a DC electrical motor modeling. Entropy 2017, 19, 665.

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

- Wu, H.; Gu, X. Towards dropout training for convolutional neural networks. Neural Netw. 2015, 71, 1–10.

- Park, S.; Kwak, N. Analysis on the dropout effect in convolutional neural networks. In Asian Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2016; pp. 189–204.

- Moon, T.; Choi, H.; Lee, H.; Song, I. Rnndrop: A novel dropout for rnns in asr. In Proceedings of the 2015 IEEE Workshop on Automatic Speech Recognition and Understanding (ASRU), Scottsdale, AZ, USA, 13–17 December 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 65–70.

- Kaveh, A.; Talatahari, S. Optimum design of skeletal structures using imperialist competitive algorithm. Comput. Struct. 2010, 88, 1220–1229.

- May, R.M. Simple mathematical models with very complicated dynamics. In The Theory of Chaotic Attractors; Springer: Berlin/Heidelberg, Germany, 2004; pp. 85–93.

- He, D.; He, C.; Jiang, L.G.; Zhu, H.W.; Hu, G.R. Chaotic characteristics of a one-dimensional iterative map with infinite collapses. IEEE Trans. Circuits Syst. I Fundam. Theory Appl. 2001, 48, 900–906.

- Hilborn, R.C. Chaos and Nonlinear Dynamics: An Introduction for Scientists and Engineers; Oxford University Press on Demand: Oxford, UK, 2004.

- Ott, E. Chaos in Dynamical Systems; Cambridge University Press: Cambridge, UK, 2002.

- Zheng, W.M. Kneading plane of the circle map. Chaos Solitons Fractals 1994, 4, 1221–1233.

- Little, M.; Heesch, D. Chaotic root-finding for a small class of polynomials. J. Differ. Equ. Appl. 2004, 10, 949–953.

- Semeniuta, S.; Severyn, A.; Barth, E. Recurrent Dropout without Memory Loss. In Proceedings of the COLING 2016, the 26th International Conference on Computational Linguistics: Technical Papers, Osaka, Japan, 11–16 December 2016; pp. 1757–1766.

- Wang, S.; Manning, C. Fast dropout training. In Proceedings of the International Conference on Machine Learning, Atlanta, GA, USA, 16–21 June 2013; pp. 118–126.

- Choset, H.M.; Hutchinson, S.; Lynch, K.M.; Kantor, G.; Burgard, W.; Kavraki, L.E.; Thrun, S. Principles of Robot Motion: Theory, Algorithms, and Implementation; MIT Press: Cambridge, MA, USA, 2005.

- Lamini, C.; Fathi, Y.; Benhlima, S. Collaborative Q-learning path planning for autonomous robots based on holonic multi-agent system. In Proceedings of the 2015 10th International Conference on Intelligent Systems: Theories and Applications (SITA), Rabat, Morocco, 20–21 October 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1–6.

- Woon, S.F.; Rehbock, V. A critical review of discrete filled function methods in solving nonlinear discrete optimization problems. Appl. Math. Comput. 2010, 217, 25–41.

- Puchinger, J.; Raidl, G.R. Combining metaheuristics and exact algorithms in combinatorial optimization: A survey and classification. In Proceedings of the International Work-Conference on the Interplay Between Natural and Artificial Computation, Las Palmas, Canary Islands, Spain, 15–18 June 2005; Springer: Berlin/Heidelberg, Germany, 2005; pp. 41–53.

- Šeda, M. Roadmap methods vs. cell decomposition in robot motion planning. In Proceedings of the 6th WSEAS International Conference on Signal Processing, Robotics and Automation, Corfu Island, Greece, 16–19 February 2007; World Scientific and Engineering Academy and Society (WSEAS): Athens, Greece, 2007; pp. 127–132.

- Cai, C.; Ferrari, S. Information-driven sensor path planning by approximate cell decomposition. IEEE Trans. Syst. Man Cybern. Part B (Cybern.) 2009, 39, 672–689.

- Rimon, E.; Koditschek, D.E. Exact robot navigation using artificial potential functions. Dep. Pap. (ESE) 1992, 323.

- Hocaoglu, C.; Sanderson, A.C. Planning multiple paths with evolutionary speciation. IEEE Trans. Evol. Comput. 2001, 5, 169–191.

- Jung, I.K.; Hong, K.B.; Hong, S.K.; Hong, S.C. Path planning of mobile robot using neural network. In Proceedings of the ISIE’99. IEEE International Symposium on Industrial Electronics (Cat. No. 99TH8465), Bled, Slovenia, 12–16 July 1999; IEEE: Piscataway, NJ, USA, 1999; Volume 3, pp. 979–983.

- Kennedy, J. Particle swarm optimization. In Encyclopedia of Machine Learning; Springer: Berlin/Heidelberg, Germany, 2010; pp. 760–766.

- Huang, H.C.; Tsai, C.C. Global path planning for autonomous robot navigation using hybrid metaheuristic GA-PSO algorithm. In Proceedings of the SICE Annual Conference, Tokyo, Japan, 13–18 September 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 1338–1343.

| Map | Definition | Parameters |
|---|---|---|
| Logistic map [19] | | |
| ICMIC map [20] | | |
| Sinusoidal map [19] | | |
| Gauss map [21] | | |
| Tent map [22] | | |
| Circle map [23] | | |
| Complex squaring map [24] | | |
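As a hedged illustration of how a map from the table above can stand in for a uniform random number generator, the sketch below iterates the logistic map in its common textbook form, $x_{k+1} = a\,x_k(1 - x_k)$ with $a = 4$. The definitions and parameter values in this table did not survive, so this form, the control parameter, the seed `x0`, and the helper names `logistic_sequence` and `scale_to_interval` are all assumptions and not necessarily the settings used in the paper.

```python
from typing import List


def logistic_sequence(x0: float, n: int, a: float = 4.0) -> List[float]:
    """Return n successive iterates of the logistic map, starting after x0.

    Assumed textbook form: x_{k+1} = a * x_k * (1 - x_k), with a = 4.
    """
    if not 0.0 < x0 < 1.0:
        raise ValueError("x0 must lie strictly between 0 and 1")
    values = []
    x = x0
    for _ in range(n):
        x = a * x * (1.0 - x)
        values.append(x)
    return values


def scale_to_interval(values: List[float], low: float, high: float) -> List[float]:
    """Map chaotic values from [0, 1] onto a search interval [low, high]."""
    return [low + v * (high - low) for v in values]


if __name__ == "__main__":
    chaos = logistic_sequence(x0=0.7, n=5)
    # Hypothetical use: chaotic numbers replacing uniform draws on [-10, 10].
    print(scale_to_interval(chaos, -10.0, 10.0))
```

With $a = 4$, seeds such as 0.25, 0.5, or 0.75 are best avoided, since they land on a fixed point within a few iterations and the sequence stops varying.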

| Function | Definition | Interval | Optimum |
|---|---|---|---|
| Griewank | | [−150, 150] | 0.0 |
| Ackley | | [−32, 32] | 0.0 |
| Brown | | [−1, 4] | 0.0 |
| Rastrigin | | [−10, 10] | 0.0 |
| Schwefel’s 2.22 | | [−100, 100] | 0.0 |
| Schwefel’s 2.23 | | [−10, 10] | 0.0 |
| Qing | | [−500, 500] | 0.0 |
| Rosenbrock | | [−2.048, 2.048] | 0.0 |
| Schwefel | | [−10, 10] | 0.0 |
| Weierstrass | | [−0.5, 0.5] | 0.0 |
| Whitley | | [−10.24, 10.24] | 0.0 |
| Zakharov | | [−5, 10] | 0.0 |
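The Definition column of the table above did not survive, so the following is only a sketch of three of the listed benchmarks (Rastrigin, Ackley, Griewank) in the forms most commonly given in the optimization literature. These forms are an assumption and may differ from the variants used in the paper; they do agree with the Optimum column in that each evaluates to 0 at the origin. The code relies on `math.prod`, which requires Python 3.8 or later.

```python
import math
from typing import Sequence


def rastrigin(x: Sequence[float]) -> float:
    """Assumed standard form: 10 n + sum(x_i^2 - 10 cos(2 pi x_i))."""
    return 10.0 * len(x) + sum(
        xi * xi - 10.0 * math.cos(2.0 * math.pi * xi) for xi in x
    )


def ackley(x: Sequence[float]) -> float:
    """Assumed standard form with the usual constants 20, 0.2 and 2 pi."""
    n = len(x)
    mean_sq = sum(xi * xi for xi in x) / n
    mean_cos = sum(math.cos(2.0 * math.pi * xi) for xi in x) / n
    return (
        -20.0 * math.exp(-0.2 * math.sqrt(mean_sq))
        - math.exp(mean_cos)
        + 20.0
        + math.e
    )


def griewank(x: Sequence[float]) -> float:
    """Assumed standard form: 1 + sum(x_i^2) / 4000 - prod(cos(x_i / sqrt(i)))."""
    sum_term = sum(xi * xi for xi in x) / 4000.0
    prod_term = math.prod(
        math.cos(xi / math.sqrt(i)) for i, xi in enumerate(x, start=1)
    )
    return 1.0 + sum_term - prod_term


if __name__ == "__main__":
    origin = [0.0] * 5
    # Each function should print a value at or very near zero at the origin.
    print(rastrigin(origin), ackley(origin), griewank(origin))
```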

| Map | CICA | CICA-D(0.1) | CICA-D(0.2) | CICA-D(0.3) | CICA-D(0.4) | CICA-D(0.5) |
|---|---|---|---|---|---|---|
| Logistic map | 76 | 63 | 56 | 38 | 49 | 52 |
| ICMIC map | 55 | 47 | 42 | 39 | 31 | 36 |
| Sinusoidal map | 89 | 83 | 63 | 72 | 54 | 67 |
| Gauss map | 23 | 21 | 19 | 23 | 14 | 16 |
| Tent map | 32 | 29 | 22 | 24 | 19 | 26 |
| Circle map | 25 | 22 | 18 | 8 | 13 | 10 |
| Complex squaring map | 39 | 32 | 27 | 25 | 29 | 27 |

| Algorithm | Min (best) | Mean | Max (worst) | St. Dev. |
|---|---|---|---|---|
| ICA | 2.6990e-11 | 1.0341e-10 | 2.6780e-08 | 8.1404e-10 |
| CICA | 1.1707e-16 | 3.4777e-14 | 2.5794e-12 | 5.0708e-15 |
| CICA-D | 0 | 1.0767e-08 | 2.9765e-07 | 5.4310e-08 |

| Algorithm | Min (best) | Mean | Max (worst) | St. Dev. |
|---|---|---|---|---|
| ICA | 8.3538e-07 | 7.1169e-05 | 9.7544e-05 | 8.2014e-06 |
| CICA | 5.7959e-08 | 1.0239e-07 | 5.1388e-06 | 1.2366e-07 |
| CICA-D | 2.4248e-11 | 1.8701e-06 | 9.4602e-06 | 3.0191e-06 |

| Algorithm | Min (best) | Mean | Max (worst) | St. Dev. |
|---|---|---|---|---|
| ICA | 5.3185e-03 | 8.2913e-02 | 3.4768e-01 | 5.9350e-02 |
| CICA | 0 | 3.6893e-04 | 7.6523e-04 | 2.3507e-04 |
| CICA-D | 0 | 1.6878e-03 | 3.6494e-03 | 1.1136e-03 |

| Algorithm | Min (best) | Mean | Max (worst) | St. Dev. |
|---|---|---|---|---|
| ICA | 0 | 1.6667e-06 | 0.00005 | 9.1287e-06 |
| CICA | 0 | 9.3427e-09 | 1.0685e-07 | 3.4296e-08 |
| CICA-D | 0 | 1.0604e-07 | 1.9899e-06 | 3.9926e-07 |

| Algorithm | Min (best) | Mean | Max (worst) | St. Dev. |
|---|---|---|---|---|
| ICA | 2.1853e-06 | 3.6150e-05 | 4.3900e-05 | 1.0507e-05 |
| CICA | 8.4180e-09 | 1.3307e-08 | 1.5124e-08 | 1.8661e-09 |
| CICA-D | 7.6125e-10 | 7.7876e-07 | 3.5125e-05 | 3.1734e-06 |

| Algorithm | Min (best) | Mean | Max (worst) | St. Dev. |
|---|---|---|---|---|
| ICA | 6.2357e-11 | 2.4227e-09 | 8.1524e-09 | 1.9060e-09 |
| CICA | 8.4576e-14 | 6.3189e-12 | 4.8157e-11 | 4.2206e-12 |
| CICA-D | 3.6451e-15 | 1.2597e-09 | 7.6530e-08 | 6.9023e-09 |

| Algorithm | Min (best) | Mean | Max (worst) | St. Dev. |
|---|---|---|---|---|
| ICA | 3.7096e-04 | 1.2428e-03 | 5.8762e-02 | 5.2740e-03 |
| CICA | 5.2507e-08 | 7.8159e-08 | 8.3169e-07 | 6.9295e-08 |
| CICA-D | 1.8346e-11 | 6.8298e-07 | 7.4924e-07 | 1.9475e-07 |

| Algorithm | Min (best) | Mean | Max (worst) | St. Dev. |
|---|---|---|---|---|
| ICA | 0.001296 | 0.201608 | 1.217682 | 0.362075 |
| CICA | 0.000182 | 0.024174 | 0.07179 | 0.021891 |
| CICA-D | 0.000061 | 0.081672 | 0.36303 | 0.101833 |

| Algorithm | Min (best) | Mean | Max (worst) | St. Dev. |
|---|---|---|---|---|
| ICA | 3.2679e-06 | 2.9866e-05 | 7.2682e-05 | 8.4658e-06 |
| CICA | 6.2612e-09 | 8.1053e-09 | 1.5335e-08 | 1.2321e-09 |
| CICA-D | 5.3896e-10 | 6.4659e-08 | 4.2363e-06 | 3.8251e-07 |

| Algorithm | Min (best) | Mean | Max (worst) | St. Dev. |
|---|---|---|---|---|
| ICA | 2.7647e-03 | 3.2945e-02 | 6.3290e-02 | 1.8813e-02 |
| CICA | 0 | 1.5156e-05 | 3.1263e-05 | 9.8972e-06 |
| CICA-D | 0 | 3.9078e-04 | 7.9613e-04 | 2.5196e-04 |

| Algorithm | Min (best) | Mean | Max (worst) | St. Dev. |
|---|---|---|---|---|
| ICA | 5.3185e-09 | 3.0960e-08 | 3.4768e-08 | 7.4846e-09 |
| CICA | 6.8309e-14 | 9.5997e-13 | 7.6523e-12 | 6.5952e-13 |
| CICA-D | 4.2985e-15 | 7.3188e-11 | 3.6494e-10 | 3.3985e-11 |

| Algorithm | Min (best) | Mean | Max (worst) | St. Dev. |
|---|---|---|---|---|
| ICA | 8.3546e-11 | 4.3202e-09 | 3.7554e-08 | 4.2478e-09 |
| CICA | 4.4263e-13 | 6.4897e-10 | 5.4924e-09 | 6.2908e-10 |
| CICA-D | 3.8908e-15 | 8.2997e-09 | 8.8761e-08 | 7.7855e-09 |

| Metric | Statistic | ICA | CICA | CICA-D(0.1) | CICA-D(0.3) | CICA-D(0.5) |
|---|---|---|---|---|---|---|
| Fitness | Min. | 64.21 | 61.83 | 60.93 | 61.98 | 63.33 |
| Fitness | Mean | 66.50 | 63.49 | 64.60 | 66.13 | 67.76 |
| Fitness | Max. | 69.76 | 67.91 | 70.13 | 70.97 | 72.41 |
| Fitness | St. Dev. | 1.6335 | 1.1657 | 2.7274 | 2.5325 | 2.7108 |
| Execution time (sec) | Mean | 28.67 | 34.91 | 12.87 | 19.81 | 36.03 |
| Execution time (sec) | St. Dev. | 14.28 | 18.15 | 7.24 | 8.14 | 14.56 |
| Success rate | | 86.67% | 93.33% | 87.50% | 82.50% | 75.83% |

| Metric | Statistic | ICA | CICA | CICA-D(0.1) | CICA-D(0.3) | CICA-D(0.5) |
|---|---|---|---|---|---|---|
| Fitness | Min. | 67.42 | 64.11 | 63.89 | 64.02 | 64.57 |
| Fitness | Mean | 69.68 | 66.18 | 67.57 | 70.28 | 72.62 |
| Fitness | Max. | 75.65 | 73.71 | 79.71 | 79.52 | 83.55 |
| Fitness | St. Dev. | 1.5567 | 1.3839 | 3.0785 | 4.0630 | 5.7615 |
| Execution time (sec) | Mean | 52.18 | 57.89 | 17.98 | 46.57 | 69.05 |
| Execution time (sec) | St. Dev. | 12.41 | 14.59 | 4.79 | 12.48 | 19.24 |
| Success rate | | 80.83% | 88.33% | 82.50% | 76.67% | 70.83% |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, Z.-S.; Lee, J.; Song, C.G.; Kim, S.-J. Efficient Chaotic Imperialist Competitive Algorithm with Dropout Strategy for Global Optimization. Symmetry 2020, 12, 635. https://doi.org/10.3390/sym12040635
