HMCTS-OP: Hierarchical MCTS Based Online Planning in the Asymmetric Adversarial Environment

Abstract

1. Introduction

- We model the online planning problem in the asymmetric adversarial environment as an MDP and extend it to a semi-Markov decision process (SMDP) by introducing task hierarchies. This provides the theoretical foundation for the MAXQ hierarchical decomposition.

- We derive the MAXQ hierarchical value decomposition for the defined hierarchical tasks. This decomposition provides a scalable way to compute the rewards of hierarchical tasks in HMCTS-OP.

- We use the MAXQ-based task hierarchies to reduce the search space and guide the search process. This reduces the computational cost, which allows MCTS to search deeper and find better actions within a limited time. As a result, HMCTS-OP performs better at online planning in the asymmetric adversarial environment.

2. Background

2.1. Markov and Semi-Markov Decision Process

2.2. MAXQ

- $T_i(s)$ represents the termination condition of subtask $M_i$ and is used to judge whether $M_i$ has terminated. Specifically, $S_i$ and $G_i$ are the active states and termination states of $M_i$, respectively. If the current state $s \in G_i$, or the predefined maximum computation time or number of iterations is reached, $T_i(s)$ is set to 1, indicating that $M_i$ has terminated.

- $A_i$ is the set of actions of $M_i$; it contains both primitive actions and high-level subtasks.

- $\tilde{R}_i(s')$ is the optional pseudo-reward function of $M_i$.
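The subtask definition above can be sketched as a small data structure. This is a minimal sketch, not the authors' code: it assumes hashable states, takes the active states $S_i$ to be every non-termination state, and models only the iteration cap (not the wall-clock limit) of the termination condition. The class `Subtask` and its fields, including `max_iterations`, are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, Hashable, Optional, Tuple

State = Hashable  # hypothetical: any hashable state representation


@dataclass
class Subtask:
    """Sketch of a MAXQ subtask M_i = <T_i, A_i, R~_i>."""
    name: str
    goal_states: FrozenSet[State]   # G_i: termination states
    actions: Tuple[str, ...]        # A_i: primitive actions and/or child subtask names
    pseudo_reward: Optional[Callable[[State], float]] = None  # optional R~_i(s')
    max_iterations: int = 1000      # hypothetical cap standing in for the budget limit

    def is_active(self, s: State) -> bool:
        """s is in S_i, assumed here to be every non-termination state."""
        return s not in self.goal_states

    def terminated(self, s: State, iterations: int = 0) -> bool:
        """T_i(s) = 1 if s is in G_i or the iteration budget is exhausted."""
        return s in self.goal_states or iterations >= self.max_iterations

    def pseudo(self, s_next: State) -> float:
        """R~_i(s'); 0 when no pseudo-reward function is supplied."""
        return 0.0 if self.pseudo_reward is None else self.pseudo_reward(s_next)
```

Keeping the pseudo-reward optional mirrors the definition: most subtasks can rely on the ordinary environment reward alone.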

2.3. MCTS

3. Related Work

4. Method

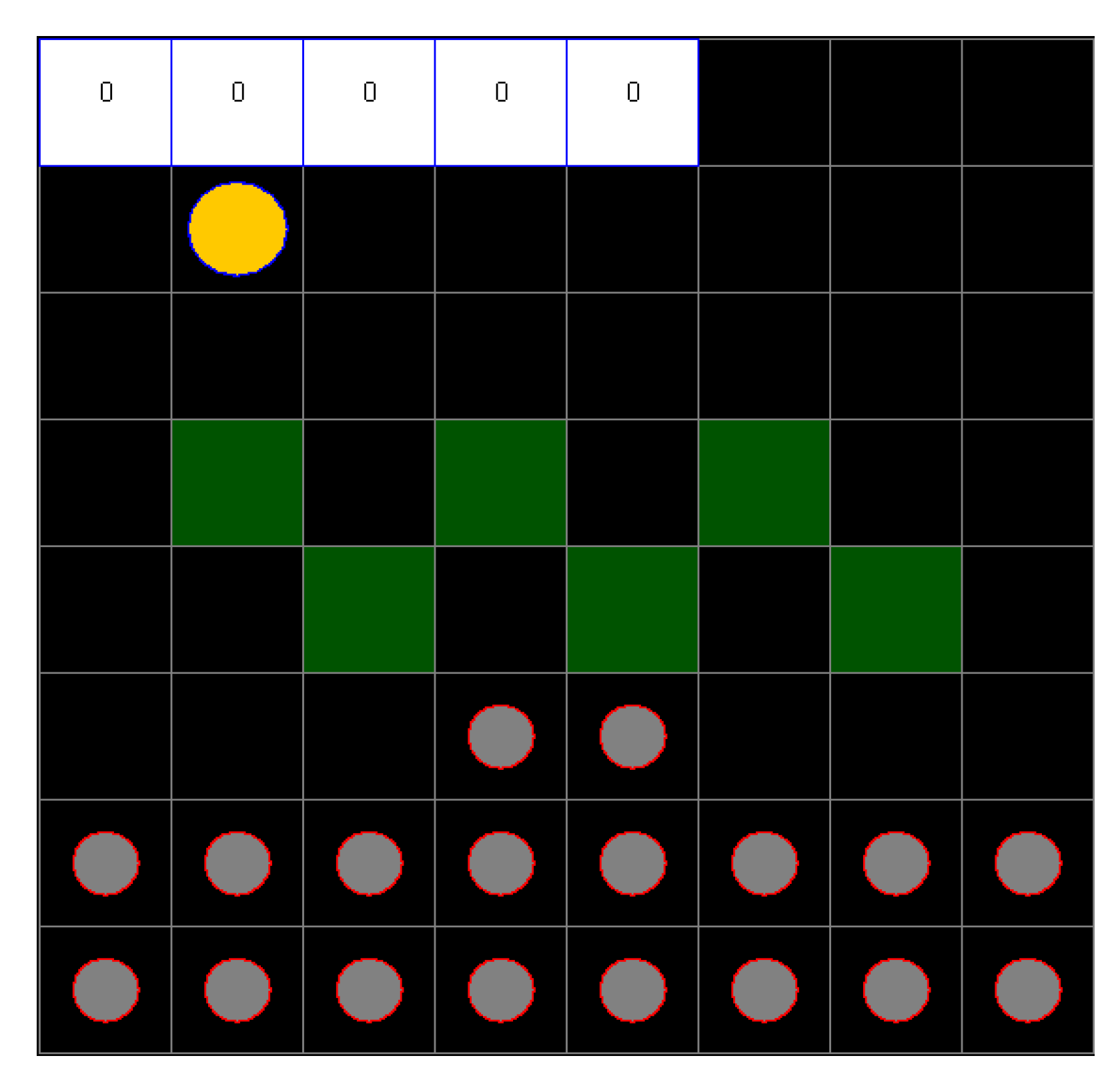

4.1. Asymmetric Adversarial Environment Modeling

- Four navigation actions: NavUp (move upward), NavDown (move downward), NavLeft (move leftward), and NavRight (move rightward).

- Four attack actions: FirUp (fire upward), FirDown (fire downward), FirLeft (fire leftward), and FirRight (fire rightward).

- Wait.
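To make the action set concrete, here is a minimal sketch of the nine primitive actions on a grid map. It assumes (x, y) coordinates with y increasing downward, treats moves that would leave the map as no-ops, and ignores action uncertainty; `Action`, `next_position`, and `fire_direction` are hypothetical names, not part of the RTS platform's API.

```python
from enum import Enum
from typing import Optional, Tuple

Pos = Tuple[int, int]

# Assumed convention: (x, y) grid coordinates with y growing downward.
DIRECTIONS = {"Up": (0, -1), "Down": (0, 1), "Left": (-1, 0), "Right": (1, 0)}


class Action(Enum):
    """The nine primitive actions of the environment."""
    NAV_UP = "NavUp"
    NAV_DOWN = "NavDown"
    NAV_LEFT = "NavLeft"
    NAV_RIGHT = "NavRight"
    FIR_UP = "FirUp"
    FIR_DOWN = "FirDown"
    FIR_LEFT = "FirLeft"
    FIR_RIGHT = "FirRight"
    WAIT = "Wait"


def next_position(pos: Pos, action: Action, width: int, height: int) -> Pos:
    """Deterministic position update; only navigation actions move the unit.

    Moves that would leave the map are treated as no-ops (an assumption)."""
    if action.value.startswith("Nav"):
        dx, dy = DIRECTIONS[action.value[3:]]
        x, y = pos[0] + dx, pos[1] + dy
        if 0 <= x < width and 0 <= y < height:
            return (x, y)
    return pos


def fire_direction(action: Action) -> Optional[Tuple[int, int]]:
    """Unit direction vector of an attack action, or None for other actions."""
    if action.value.startswith("Fir"):
        return DIRECTIONS[action.value[3:]]
    return None
```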

4.2. MAXQ Hierarchical Decomposition


- NavUp, NavDown, NavLeft, NavRight, FirUp, FirDown, FirLeft, FirRight, and Wait: These are the primitive actions defined by the RTS game. Each primitive action receives a local reward of −1 when it is performed. This per-step cost encourages the online policy of each high-level subtask to reach its goal state as quickly as possible.


- NavToNeaOpp, NavToCloBaseOpp, and FireTo: The NavToNeaOpp subtask moves the light military unit to the nearest enemy unit as quickly as possible by performing NavUp, NavDown, NavLeft, and NavRight actions, taking action uncertainties into account. Similarly, the NavToCloBaseOpp subtask moves the light military unit as quickly as possible to the enemy unit closest to the base. The goal of the FireTo subtask is to attack enemy units within range.


- Attack and Defense: The purpose of Attack is to destroy the enemy’s units and win the game by planning attacking behaviors; the purpose of Defense is to protect the bases against the enemy’s units by carrying out defensive behaviors.


- Root: This is the root task. Its goal is to destroy the enemy’s units and protect the bases. In the Root task, the Attack and Defense subtasks are evaluated with the hierarchical UCB1 policy of HMCTS-OP, which is described in detail in the next section.
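The navigation and attack subtasks above depend on choosing a target and checking whether it is within range, while every primitive step costs −1. A minimal sketch of these pieces follows. It assumes Manhattan distance on the grid and an integer firing range; the paper does not state which metric it uses, and the helper names (`nearest_opponent`, `opponent_closest_to_base`, `in_fire_range`, `local_reward`) are hypothetical.

```python
from typing import Iterable, Optional, Tuple

Pos = Tuple[int, int]


def manhattan(a: Pos, b: Pos) -> int:
    """Assumed grid distance (the metric is not specified in the text)."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def nearest_opponent(unit: Pos, enemies: Iterable[Pos]) -> Optional[Pos]:
    """NavToNeaOpp target: the enemy unit closest to our light unit."""
    return min(enemies, key=lambda e: manhattan(unit, e), default=None)


def opponent_closest_to_base(base: Pos, enemies: Iterable[Pos]) -> Optional[Pos]:
    """NavToCloBaseOpp target: the enemy unit closest to our base."""
    return min(enemies, key=lambda e: manhattan(base, e), default=None)


def in_fire_range(unit: Pos, enemy: Pos, fire_range: int) -> bool:
    """Hypothetical FireTo precondition: the enemy lies within `fire_range`."""
    return manhattan(unit, enemy) <= fire_range


def local_reward(num_primitive_steps: int) -> int:
    """Each primitive action costs -1, so shorter paths to a goal score higher."""
    return -num_primitive_steps
```

Ties are broken by the order of `enemies`, since `min` returns the first minimal element.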

4.3. Hierarchical MCTS-Based Online Planning (HMCTS-OP)

| Algorithm 1 PLAY. | |

| 1: | function PLAY (task , state , MAXQ hierarchy , rollout policy ) |

| 2: | repeat |

| 3: | HMCTS-OP |

| 4: | Execute |

| 5: | s′ |

| 6: | until termination conditions |

| 7: | end function |
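Algorithm 1 interleaves planning and acting: at every step it plans from the current state, executes one action, and observes the successor state until a termination condition holds. A minimal sketch of that repeat-until loop follows, assuming the planner, the environment step, and the end-of-game test are supplied as plain functions (`play`, `plan`, `execute`, and `is_over` are hypothetical names) and adding a `max_steps` safety cap that the paper does not mention.

```python
from typing import Callable, Hashable, List


def play(state: Hashable,
         plan: Callable[[Hashable], str],
         execute: Callable[[Hashable, str], Hashable],
         is_over: Callable[[Hashable], bool],
         max_steps: int = 1000) -> List[str]:
    """Online planning loop: replan from the current state before every action."""
    executed = []
    while True:
        action = plan(state)            # e.g. run HMCTS-OP on the Root task from `state`
        state = execute(state, action)  # the environment returns the successor state s'
        executed.append(action)
        # repeat ... until: stop on the game's end condition or the safety cap
        if is_over(state) or len(executed) >= max_steps:
            return executed
```

Because the search tree is rebuilt from the new state at every step, the loop adapts to the opponent's moves without any offline training.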

| Algorithm 2 HMCTS-OP. | |

| 1: | function HMCTS-OP (task , state , MAXQ hierarchy , rollout policy ) |

| 2: | if is primitive then Return |

| 3: | end if |

| 4: | if and then Return |

| 5: | end if |

| 6: | if then Return |

| 7: | end if |

| 8: | Initialize search tree for task |

| 9: | while within computational budget do |

| 10: | HMCTSSimulate () |

| 11: | end while |

| 12: | Return GetGreedyPrimitive() |

| 13: | end function |

| Algorithm 3 HMCTSSimulate. | |

| 1: | function HMCTSSimulate (task , state , MAXQ hierarchy , depth , rollout policy ) |

| 2: | steps = 0 // the number of steps executed by task |

| 3: | if is primitive then |

| 4: | Generative-Model // domain generative model-simulator |

| 5: | steps = 1 |

| 6: | Return () |

| 7: | end if |

| 8: | if terminates or then |

| 9: | Return () |

| 10: | end if |

| 11: | if node () is not in tree then |

| 12: | Insert node () to |

| 13: | Return Rollout() |

| 14: | end if |

| 15: | if node () is not fully expanded then |

| 16: | choose untried subtask from |

| 17: | else |

| 18: | // |

| 19: | end if |

| 20: | () = HMCTSSimulate() |

| 21: | () = Rollout () |

| 22: | |

| 23: | |

| 24: | |

| 25: | |

| 26: | |

| 27: | Return () |

| 28: | end function |

| Algorithm 4 Rollout. | ||

| 1: | function Rollout (task , state , depth , rollout policy ) | |

| 2: | ||

| 3: | if is primitive then | |

| 4: | Generative-Model // domain generative model-simulator | |

| 5: | Return () | |

| 6: | end if | |

| 7: | if terminates or then | |

| 8: | Return () | |

| 9: | end if | |

| 10: | ||

| 11: | () = Rollout() | |

| 12: | () = Rollout () | |

| 13: | ||

| 14: | ||

| 15: | Return () | |

| 16: | end function | |

| Algorithm 5 GetGreedyPrimitive. | |

| 1: | function GetGreedyPrimitive (task , state ) |

| 2: | if is primitive then |

| 3: | Return |

| 4: | else |

| 5: | |

| 6: | Return GetGreedyPrimitive () |

| 7: | end if |

| 8: | end function |
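Algorithms 2 to 5 work together: HMCTS-OP repeatedly calls HMCTSSimulate, which expands one node per pass and finishes with Rollout, and then GetGreedyPrimitive descends the hierarchy to a primitive action. Several rows of the listings above are not legible here, so the sketch below does not reproduce the authors' code. It is a minimal reading of the visible structure in the style of hierarchical UCT, assuming UCB1 at fully expanded nodes, a sample-average Q update, SMDP discounting of the continuation by γ^n, and a uniformly random rollout policy. The corridor domain, the two-level hierarchy, and all names (`HMCTSPlanner`, `simulate`, `rollout`, `greedy_primitive`, `plan`, `GOAL`, `HIERARCHY`) are hypothetical stand-ins for the RTS game.

```python
import math
import random
from collections import defaultdict

# Hypothetical toy domain (not the RTS of the paper): a 1-D corridor 0..GOAL.
GOAL = 5
PRIMITIVES = {"Left": -1, "Right": +1}
# Hypothetical two-level MAXQ hierarchy: Root -> NavToGoal -> {Left, Right}.
HIERARCHY = {"Root": ["NavToGoal"], "NavToGoal": ["Left", "Right"]}
GAMMA, MAX_DEPTH, C_UCB = 0.95, 30, 1.0


def is_primitive(task):
    return task in PRIMITIVES


def terminates(task, s):
    return s == GOAL  # both composite tasks end at the goal cell


def generative_model(s, a):
    """Domain simulator: returns (s', r); every primitive action costs -1."""
    return min(max(s + PRIMITIVES[a], 0), GOAL), -1.0


class HMCTSPlanner:
    def __init__(self, seed=0):
        self.rng = random.Random(seed)
        self.tree = set()              # expanded nodes (task, s)
        self.N = defaultdict(int)      # N(s, i)
        self.Na = defaultdict(int)     # N(s, i, a)
        self.Q = defaultdict(float)    # Q(s, i, a): mean discounted return

    def rollout(self, task, s, depth):
        """Rollout: uniformly random policy inside `task`; returns (s', r, steps)."""
        if is_primitive(task):
            s2, r = generative_model(s, task)
            return s2, r, 1
        if terminates(task, s) or depth >= MAX_DEPTH:
            return s, 0.0, 0
        a = self.rng.choice(HIERARCHY[task])
        s1, r1, n1 = self.rollout(a, s, depth)
        if n1 == 0:                    # child made no progress: stop to avoid looping
            return s1, r1, 0
        s2, r2, n2 = self.rollout(task, s1, depth + n1)
        return s2, r1 + GAMMA ** n1 * r2, n1 + n2

    def simulate(self, task, s, depth):
        """HMCTSSimulate: one tree pass for `task` at state s; returns (s', r, steps)."""
        if is_primitive(task):
            s2, r = generative_model(s, task)
            return s2, r, 1
        if terminates(task, s) or depth >= MAX_DEPTH:
            return s, 0.0, 0
        node = (task, s)
        if node not in self.tree:      # new leaf: insert it and evaluate by rollout
            self.tree.add(node)
            return self.rollout(task, s, depth)
        children = HIERARCHY[task]
        untried = [a for a in children if self.Na[(task, s, a)] == 0]
        if untried:                    # node not fully expanded
            a = self.rng.choice(untried)
        else:                          # UCB1 over child subtasks
            a = max(children, key=lambda c: self.Q[(task, s, c)] + C_UCB * math.sqrt(
                math.log(self.N[node]) / self.Na[(task, s, c)]))
        s1, r1, n1 = self.simulate(a, s, depth)
        if n1 == 0:
            s2, r2, n2 = s1, 0.0, 0
        else:                          # finish the current task with a rollout
            s2, r2, n2 = self.rollout(task, s1, depth + n1)
        ret = r1 + GAMMA ** n1 * r2    # SMDP-style discounting over n1 steps
        self.N[node] += 1
        self.Na[(task, s, a)] += 1
        self.Q[(task, s, a)] += (ret - self.Q[(task, s, a)]) / self.Na[(task, s, a)]
        return s2, ret, n1 + n2

    def greedy_primitive(self, task, s):
        """GetGreedyPrimitive: descend greedily w.r.t. Q until a primitive action."""
        if is_primitive(task):
            return task
        children = HIERARCHY[task]
        best = max(children, key=lambda c: self.Q[(task, s, c)]
                   if self.Na[(task, s, c)] else -math.inf)
        return self.greedy_primitive(best, s)

    def plan(self, task, s, budget=200):
        """HMCTS-OP: run `budget` simulations from (task, s), then act greedily."""
        for _ in range(budget):
            self.simulate(task, s, 0)
        return self.greedy_primitive(task, s)
```

For example, `HMCTSPlanner(seed=1).plan("Root", 4, budget=50)` returns `"Right"`: stepping right from cell 4 reaches the goal at a cost of exactly −1, while stepping left always accumulates a larger discounted cost.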

5. Experiment and Results

5.1. Experiment Setting

5.2. Results

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References


| | Scenario 1 | Scenario 2 | Scenario 3 |
|---|---|---|---|
| Size | 8 × 8 | 10 × 10 | 12 × 12 |
| Units | 23 | 23 | 23 |
| Maximal states | 10³⁶ | 10⁴¹ | 10⁴⁴ |

| Opponent | Scenario | UCT | NaiveMCTS | Informed NaiveMCTS | HMCTS-OP | Smart-HMCTS-OP |
|---|---|---|---|---|---|---|
| Random | 8 × 8 | −38.94 | −57.7 | −153.56 | 109.94 | 276.34 |
| Random | 10 × 10 | 105.02 | 47.98 | 30.12 | 242.24 | 312.66 |
| Random | 12 × 12 | −6.64 | 55.72 | 69.88 | 247.52 | 261.38 |
| UCT | 8 × 8 | −929.98 | −987.68 | −995.06 | −354.64 | −288.38 |
| UCT | 10 × 10 | −694.42 | −610.48 | −840.8 | −236.34 | −95.5 |
| UCT | 12 × 12 | −653.12 | −660.16 | −659.54 | −173.58 | −89.34 |


| Group | GroupR11–GroupR15 | GroupU11–GroupU15 | GroupR21–GroupR25 | GroupU21–GroupU25 | GroupR31–GroupR35 | GroupU31–GroupU3 |
|---|---|---|---|---|---|---|
| p-value | 1.2883 × 10⁻¹² | 1.2570 × 10⁻³² | 7.5282 × 10⁻¹³ | 3.1371 × 10⁻²² | 3.0367 × 10⁻¹¹ | 3.6793 × 10⁻²² |

| Opponent | Scenario | Group | UCT | NaiveMCTS | Informed NaiveMCTS |
|---|---|---|---|---|---|
| Random | 8 × 8 | HMCTS-OP | 0.4145 | 0.2623 | 0.0115 |
| Random | 8 × 8 | Smart-HMCTS-OP | 1.7033 × 10⁻⁷ | 4.0795 × 10⁻⁸ | 9.9303 × 10⁻⁹ |
| Random | 10 × 10 | HMCTS-OP | 0.1634 | 0.0331 | 0.0010 |
| Random | 10 × 10 | Smart-HMCTS-OP | 6.7051 × 10⁻⁷ | 2.6123 × 10⁻⁸ | 9.9410 × 10⁻⁹ |
| Random | 12 × 12 | HMCTS-OP | 6.2448 × 10⁻⁶ | 4.2737 × 10⁻⁴ | 6.7339 × 10⁻⁴ |
| Random | 12 × 12 | Smart-HMCTS-OP | 1.1508 × 10⁻⁷ | 1.4085 × 10⁻⁵ | 2.4075 × 10⁻⁵ |
| UCT | 8 × 8 | HMCTS-OP | 9.9611 × 10⁻⁹ | 9.9217 × 10⁻⁹ | 9.9217 × 10⁻⁹ |
| UCT | 8 × 8 | Smart-HMCTS-OP | 9.9230 × 10⁻⁹ | 9.9217 × 10⁻⁹ | 9.9217 × 10⁻⁹ |
| UCT | 10 × 10 | HMCTS-OP | 1.1821 × 10⁻⁶ | 1.9423 × 10⁻⁴ | 9.9353 × 10⁻⁹ |
| UCT | 10 × 10 | Smart-HMCTS-OP | 9.9718 × 10⁻⁹ | 5.6171 × 10⁻⁸ | 9.9217 × 10⁻⁹ |
| UCT | 12 × 12 | HMCTS-OP | 7.1822 × 10⁻⁸ | 3.0630 × 10⁻⁸ | 1.5334 × 10⁻⁸ |
| UCT | 12 × 12 | Smart-HMCTS-OP | 9.9384 × 10⁻⁹ | 9.9260 × 10⁻⁹ | 9.9225 × 10⁻⁹ |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Lu, L.; Zhang, W.; Gu, X.; Ji, X.; Chen, J. HMCTS-OP: Hierarchical MCTS Based Online Planning in the Asymmetric Adversarial Environment. Symmetry 2020, 12, 719. https://doi.org/10.3390/sym12050719


Lu, Lina, Wanpeng Zhang, Xueqiang Gu, Xiang Ji, and Jing Chen. 2020. "HMCTS-OP: Hierarchical MCTS Based Online Planning in the Asymmetric Adversarial Environment" Symmetry 12, no. 5: 719. https://doi.org/10.3390/sym12050719