Compressive Sensing Based Three-Dimensional Imaging Method with Electro-Optic Modulation for Nonscanning Laser Radar

Abstract

1. Introduction

2. System Description

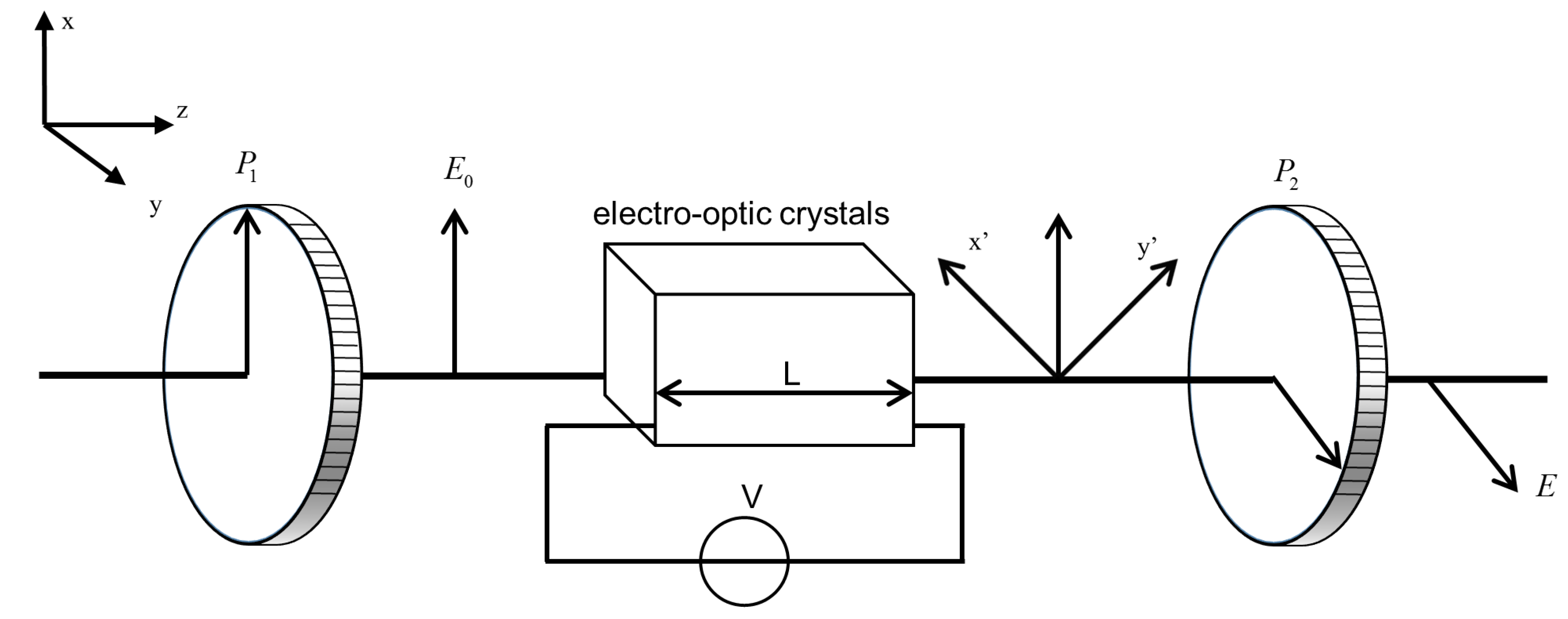

2.1. Proposed System Setup

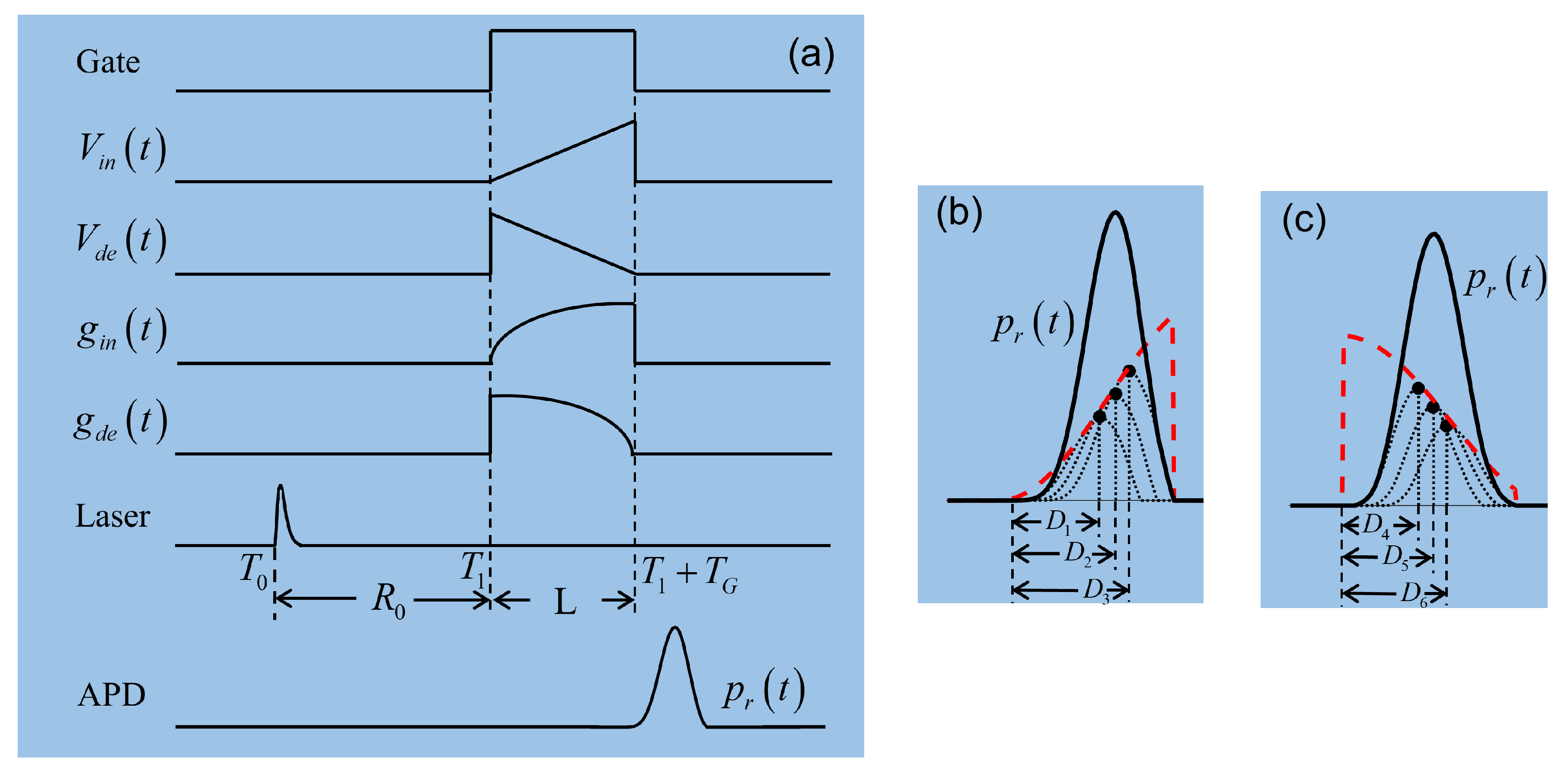

2.2. Proposed System Model

3. CS-Based Electro-Optic Modulation Method for 3D Imaging

3.1. Compressive Sensing Theory

3.2. Peak Value Obtained for 3D Reconstruction

3.3. 3D Image Reconstruction

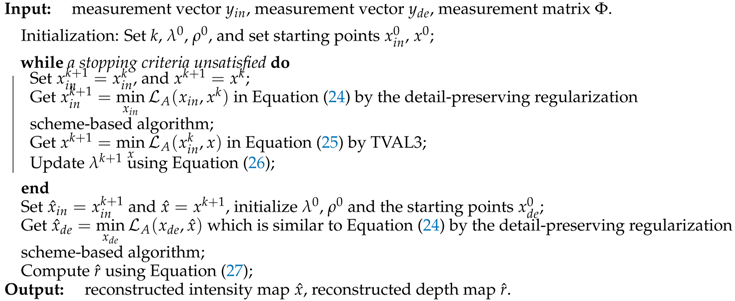

| Algorithm 1: The proposed 3D reconstruction algorithm |


4. Simulation Results and Discussion

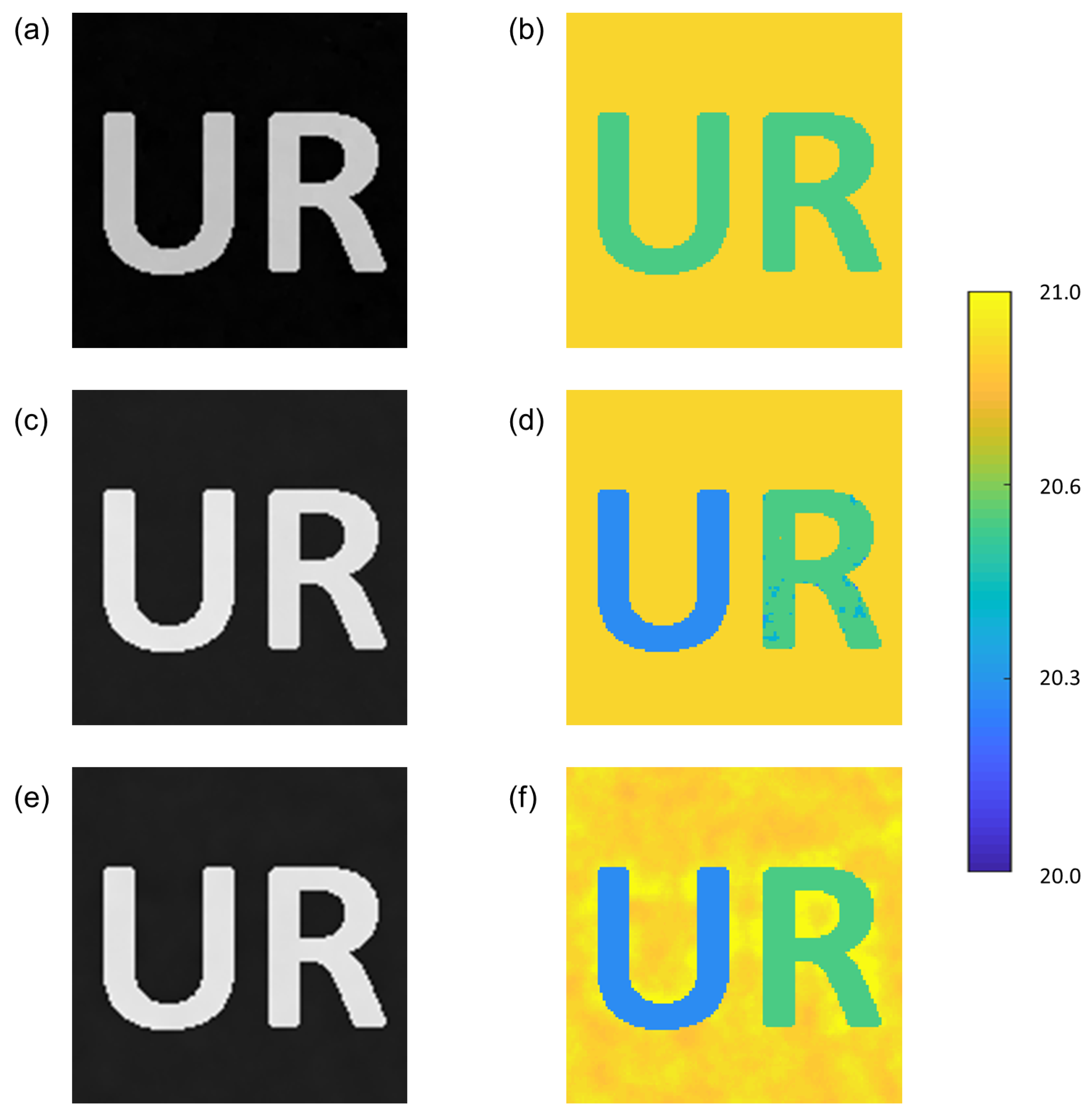

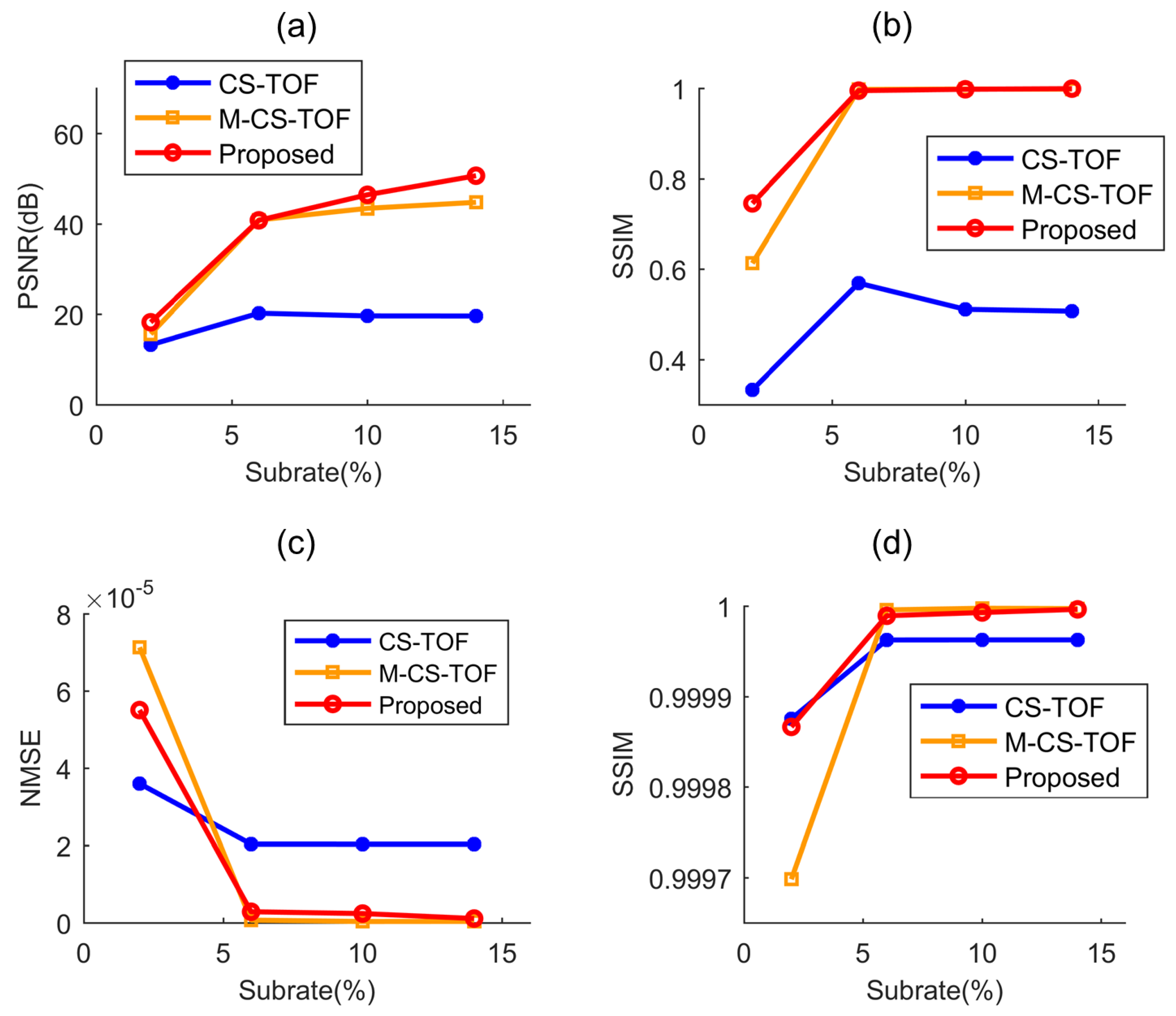

4.1. Reconstruction Performance of the Discrete Target

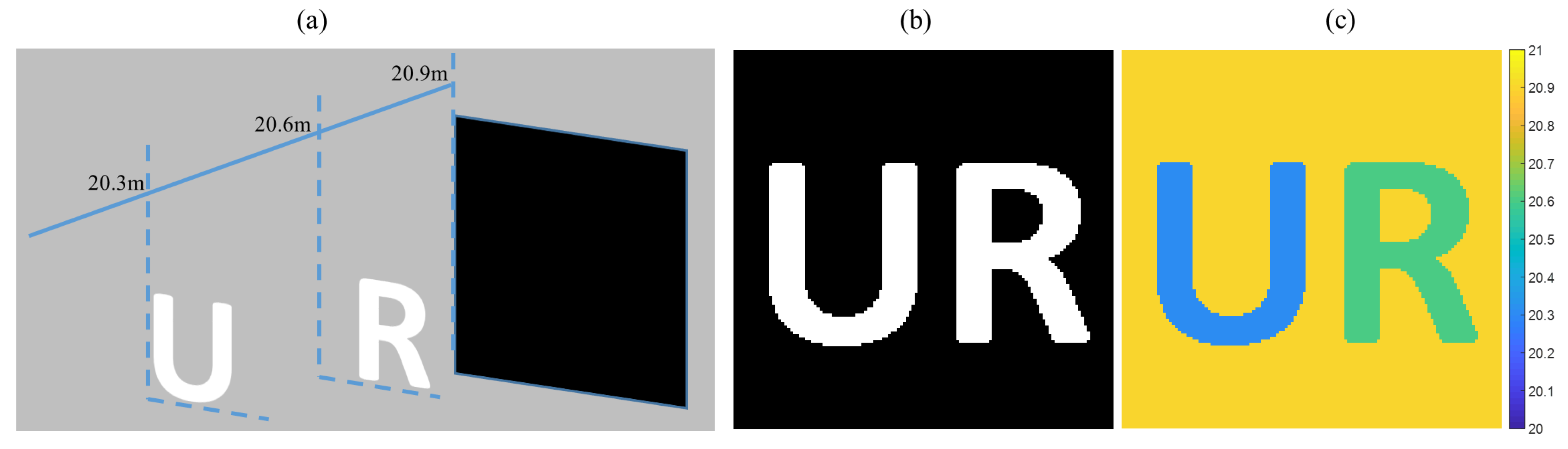

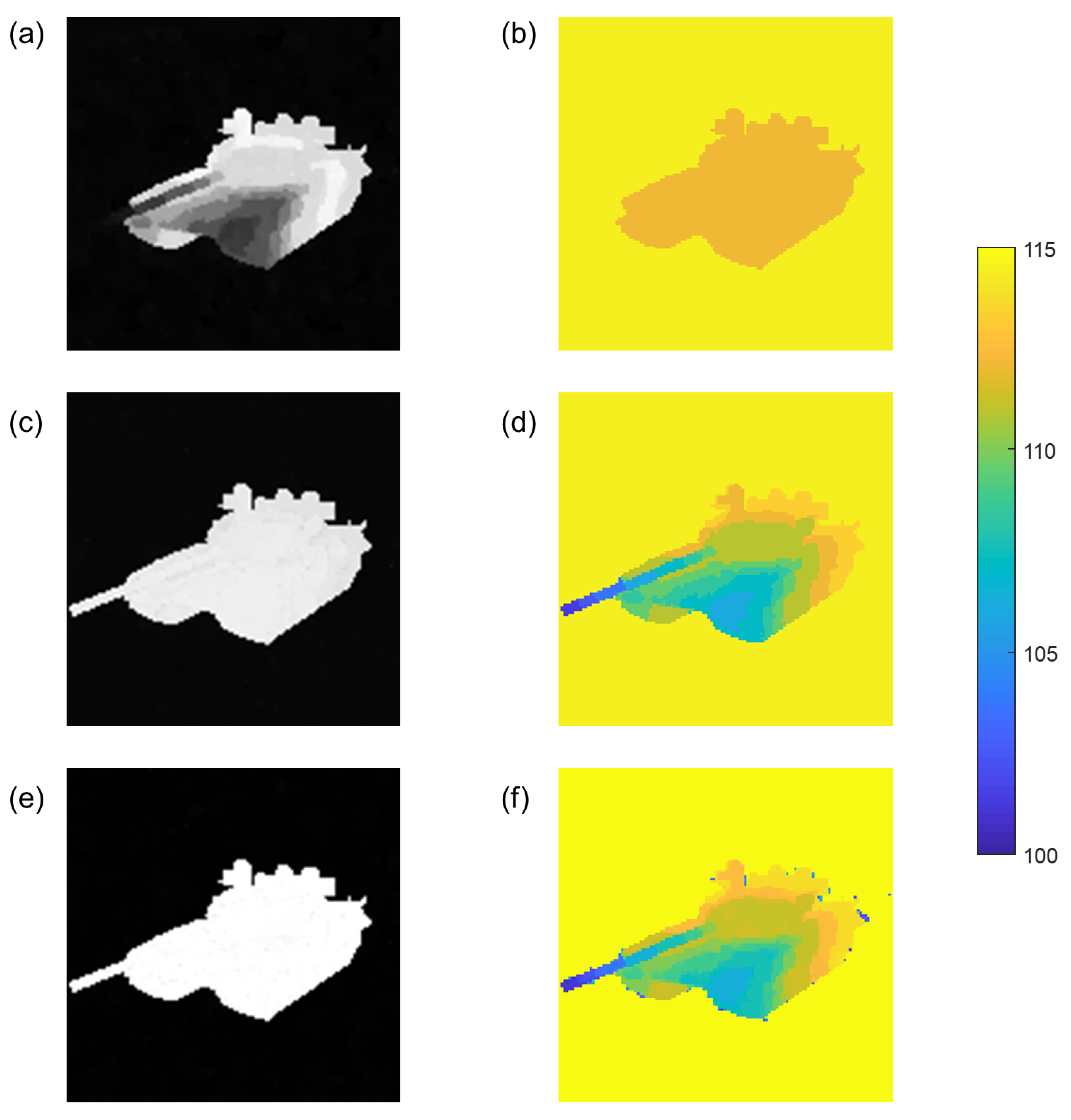

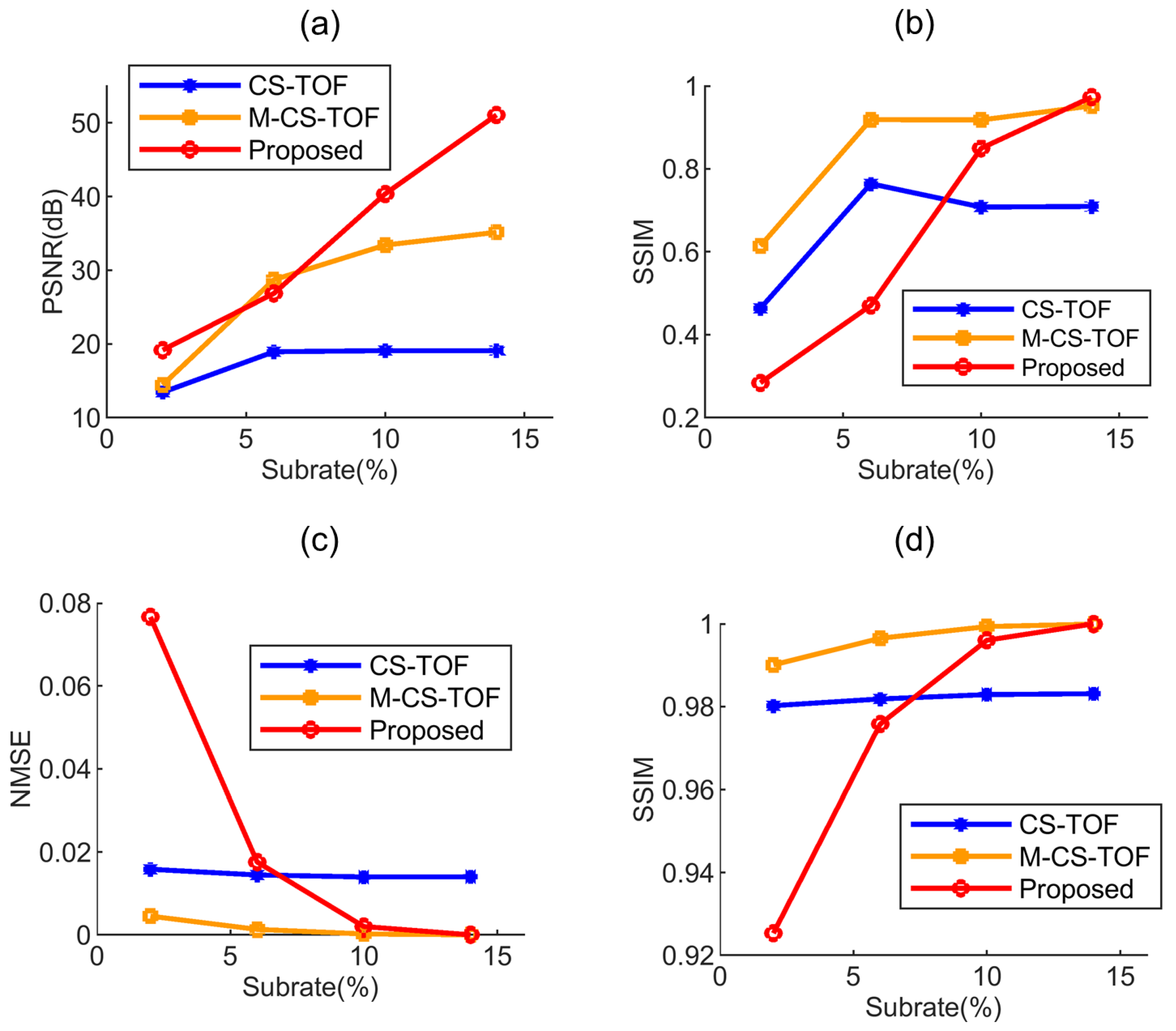

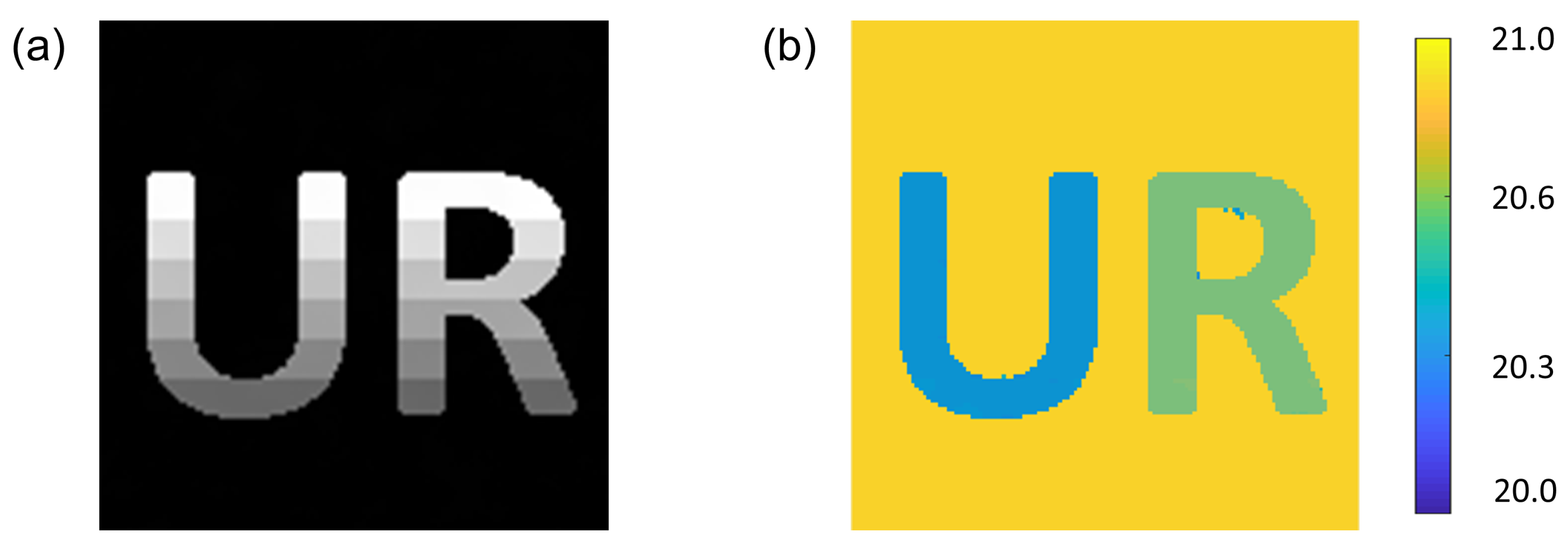

4.2. Reconstruction Performance of the Continuous Target

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| LiDAR | Laser Detection and Ranging |
| 3D | Three-Dimensional |
| CS | Compressive Sensing |
| TOF | Time-of-Flight |
| ADMM | Alternating Direction Method of Multipliers |
| RGI | Range-Gated Imaging |
| NBF | Narrowband Filter |
| EOM | Electro-Optic Modulator |
| DMD | Digital Micromirror Device |
| APD | Avalanche Photodiode |
| SNR | Signal-to-Noise Ratio |
| FWHM | Full Width at Half Maximum |
| DCT | Discrete Cosine Transform |
| DWT | Discrete Wavelet Transform |
| RIP | Restricted Isometry Property |
| TV | Total Variation |
| TVAL3 | Total Variation minimization based on Augmented Lagrangian and ALternating direction Algorithm |
| TGV | Total Generalized Variation (generalization of TV) |
| PSNR | Peak Signal-to-Noise Ratio |
| NMSE | Normalized Mean Squared Error |
| SSIM | Structural SIMilarity |


| Parameter | Value |
|---|---|
| Wavelength | 905 nm |
| Sampling Rate of APD | 1 GHz |
| Peak Power of Transmitter Pulse | 70 W |
| FWHM | 10 ns |
| Efficiency of Optical Transmitting System | 0.9 |
| Efficiency of Optical Receiving System | 0.9 |
| Single-pass Atmospheric Transmittance | 0.98 |
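To give a feel for the scales these parameters imply, the following minimal sketch converts the APD sampling rate and the pulse FWHM from the table into two-way range distances. It uses only the standard time-of-flight relation (range = c·t/2); the function names are hypothetical, and the calculation is an illustration rather than part of the paper's method.

```python
# Illustrative arithmetic only: standard time-of-flight conversions,
# not the paper's own derivation. Function names are hypothetical.
C = 299_792_458.0  # speed of light in vacuum, m/s


def range_per_sample(sampling_rate_hz: float) -> float:
    """Two-way range spanned by one detector sample interval."""
    return C / (2.0 * sampling_rate_hz)


def pulse_range_extent(fwhm_s: float) -> float:
    """Two-way range spanned by the pulse's full width at half maximum."""
    return C * fwhm_s / 2.0


bin_m = range_per_sample(1e9)         # APD sampling rate from the table: 1 GHz
pulse_m = pulse_range_extent(10e-9)   # pulse FWHM from the table: 10 ns

print(f"range per sample: {bin_m:.3f} m")
print(f"pulse extent:     {pulse_m:.3f} m")
```

With the tabulated values, one sample interval corresponds to roughly 0.15 m of range, and the 10 ns pulse spans roughly 1.5 m, about ten sample intervals.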

| Imaging Method | CS-TOF | M-CS-TOF | Proposed |
|---|---|---|---|
| FWHM | resolution-related | free | free |
| Sampling rate | higher | higher | lower |
| Sets of measurements | some | dozens | 2 |
| Number of frames | some | dozens | 2 |
| Execution time | medium | long | short |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


An, Y.; Zhang, Y.; Guo, H.; Wang, J. Compressive Sensing Based Three-Dimensional Imaging Method with Electro-Optic Modulation for Nonscanning Laser Radar. Symmetry 2020, 12, 748. https://doi.org/10.3390/sym12050748
