2.1. Sample Information Acquisition for Software Dynamic Image Fault Code Detection

In order to realize the effective detection and diagnosis of software dynamic image faults in embedded software, software dynamic image fault data acquisition and signal extraction and analysis are the first step [

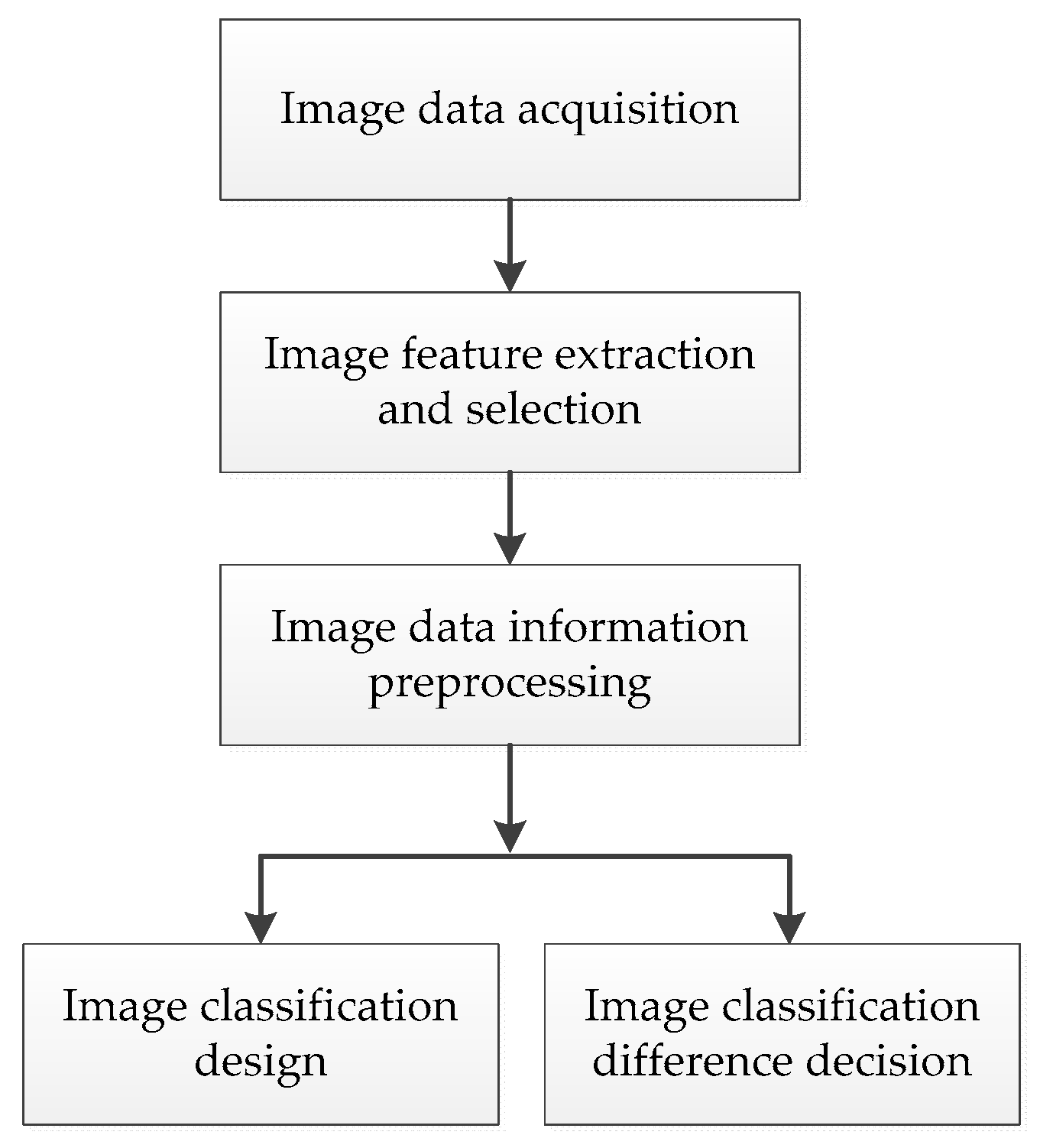

7]. In embedded software, the feature of the dynamic image is based on similar high-dynamic information and spatial dimension features reflected by the same type of pixels under the same conditions. The classification of dynamic image features in embedded software is the basis of dynamic image feature extraction. The process of image pixel feature classification in embedded software is divided into two stages: design and decision-making. In the design stage, the pixel information that can be resolved is analyzed, designed, and calculated via sample analysis. In the decision-making stage, the calculated pixel information that cannot be resolved is classified. The dynamic image feature classification process for embedded software is shown in

Figure 1.
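The two-stage classification can be illustrated with a nearest-centroid classifier. This is a minimal sketch, not the authors' method: it assumes each pixel is described by a small hypothetical feature vector (here, normalized intensity and frame-to-frame change), that the design stage reduces labeled, resolvable samples to one centroid per class, and that the decision stage assigns each unresolved pixel to the nearest centroid.

```python
from math import dist

def design_stage(samples):
    """Design stage: reduce labeled, resolvable pixel samples to one centroid per class."""
    sums, counts = {}, {}
    for features, label in samples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}

def decision_stage(centroids, pixel):
    """Decision stage: assign an unresolved pixel to the class with the nearest centroid."""
    return min(centroids, key=lambda label: dist(centroids[label], pixel))

# Hypothetical features: (normalized intensity, normalized frame-to-frame change)
training = [
    ((0.9, 0.8), "dynamic"), ((0.8, 0.9), "dynamic"),
    ((0.1, 0.2), "static"), ((0.2, 0.1), "static"),
]
centroids = design_stage(training)
print(decision_stage(centroids, (0.7, 0.75)))  # dynamic
```

Nearest-centroid is chosen only because it makes the split between "learn from resolvable samples" and "decide for unresolved pixels" explicit; any trained classifier could play the same role.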

In embedded software, image data must be normalized to reduce noise from the embedded network and to enhance the image data signal. After normalization, the image data undergo various mathematical transformations. The most commonly used is the wavelet transform, a multidirectional texture computation method that is well suited to analyzing image data in embedded software. A software dynamic image fault signal model was constructed using embedded feature extraction and a fuzzy control algorithm, and the fault recovery paths of the software dynamic image fault features were entered into the diagnosis database of an expert system. As large-scale data become increasingly associated and intersected, both the characteristics of data and the practical needs placed on them have changed. Data that are large-scale, multisource, heterogeneous, cross-domain, cross-media, cross-language, dynamically evolving, and generalized now play a more important role, and storing, analyzing, and understanding such data pose major challenges. Big data fusion methods aim to maximize the value of big data through data association, intersection, and fusion; the key to this problem is data fusion.
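The text does not say which normalization is applied. As a minimal sketch, assuming z-score normalization (zero mean, unit variance) of a grayscale frame stored as a list of rows, the step could look like this; the function name `zscore_normalize` is hypothetical.

```python
from statistics import fmean, pstdev

def zscore_normalize(image):
    """Rescale a 2-D grayscale frame (list of rows) to zero mean and unit variance."""
    flat = [p for row in image for p in row]
    mu = fmean(flat)
    sigma = pstdev(flat, mu)
    if sigma == 0:  # a constant frame carries no contrast; map it to all zeros
        return [[0.0] * len(row) for row in image]
    return [[(p - mu) / sigma for p in row] for row in image]

frame = [[10, 12, 14], [16, 18, 20]]
normalized = zscore_normalize(frame)
```

Putting every frame on a common scale this way lets later transforms, such as the wavelet decomposition, compare coefficients across frames with different brightness levels.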

Figure 2 shows the design of the overall model of data fusion related to this study.

In practice, big data fusion methods are implemented around a centralized data repository built on the MooseFS distributed file system, a MongoDB database cluster, and a Virtuoso database. These store, respectively, the unstructured data downloaded from each data source; the structured data downloaded from each data source; and the data obtained by analyzing the original data, the transformed RDF data, and the new association relationships established by the semantic enhancement system. The data are gathered through the download components and management systems of the data aggregation module. For downloaded data, customized data analysis tools convert the data from their original form into a structured “attribute value” form, and configuration-based data conversion tools are also developed. The attribute values that are useful for discovering associations between data are extracted and converted into a consistent RDF format through any necessary merging, splitting, equivalent transformation, and other processing. The association mechanism between data is then determined, and on this basis similarity calculation, reasoning, ontology mapping, and other association discovery methods are used to add semantic associations between data. Finally, the data are offered as external services through a service interface module.

Big data fusion is a means of processing big data: it discovers knowledge in big data and integrates that knowledge according to its semantic and logical associations, in a way closer to human thinking. It comprises two steps, data fusion and knowledge fusion. Data fusion dynamically extracts, integrates, and transforms multisource data into knowledge resources, laying the foundation for knowledge fusion. Knowledge fusion captures the relationships between pieces of knowledge at different granularities, so that knowledge is available at different levels of comprehensibility and can be used to interpret objective phenomena. The two steps are not isolated: knowledge acquired through knowledge fusion can serve as a reference for data fusion, and data fusion not only supplies integrated data to knowledge fusion but can also serve as a reference for it.
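The attribute value extraction, RDF conversion, and similarity-based association discovery described above can be sketched in plain Python. This is an illustration under stated assumptions, not the system's implementation: triples are plain tuples rather than real RDF terms, the `relatedTo` predicate is a hypothetical stand-in for a discovered association, and Jaccard similarity over attribute value pairs is assumed as the similarity calculation.

```python
from itertools import combinations

def to_triples(entity_id, record):
    """Turn one parsed "attribute value" record into (subject, predicate, object) tuples."""
    return {(entity_id, attr, str(value)) for attr, value in record.items()}

def jaccard(a, b):
    """Jaccard similarity of two sets."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def fuse(records, threshold=0.5):
    """Convert all records to triples, then add a hypothetical 'relatedTo'
    association for every entity pair whose attribute value sets are similar enough."""
    triples, profiles = set(), {}
    for eid, record in records.items():
        t = to_triples(eid, record)
        triples |= t
        profiles[eid] = {(p, o) for _, p, o in t}
    for a, b in combinations(sorted(records), 2):
        if jaccard(profiles[a], profiles[b]) >= threshold:
            triples.add((a, "relatedTo", b))
    return triples

# Hypothetical fault records already parsed into attribute value form
records = {
    "fault:1": {"module": "display", "code": "E42", "severity": "high"},
    "fault:2": {"module": "display", "code": "E42", "severity": "low"},
    "fault:3": {"module": "network", "code": "N07", "severity": "low"},
}
graph = fuse(records)
```

Here only `fault:1` and `fault:2` share enough attribute values (2 of 4) to be linked, so the fused graph holds the nine attribute triples plus one association triple.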

Software dynamic image fault diagnosis of embedded software is carried out by combining signal feature extraction with big data fusion, and a software dynamic image fault diagnosis analysis database is established [8,9,10]. The original signal is collected for fault detection: a laser detection method is applied to working-condition samples of the embedded software in the fault state for beamforming processing, and a fault code sensor is used for data acquisition. Breakpoint data are collected under the fault condition, the clustering centers for classifying the fault data are initialized to extract the fault spectrum features of the embedded software, and the laser data receiving model of the embedded software under the fault condition is then obtained.
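Initializing clustering centers and then refining them is what k-means does. The sketch below assumes standard k-means on hypothetical two-dimensional spectral feature vectors, with the initial centers supplied by the caller; the paper does not specify the clustering algorithm or the features.

```python
from math import dist

def kmeans(points, centers, iterations=20):
    """Refine caller-supplied initial cluster centers with plain k-means."""
    centers = [list(c) for c in centers]
    for _ in range(iterations):
        # Assignment step: each feature vector joins its nearest center
        clusters = [[] for _ in centers]
        for p in points:
            k = min(range(len(centers)), key=lambda i: dist(p, centers[i]))
            clusters[k].append(p)
        # Update step: move each center to the mean of its members (keep empty ones)
        new_centers = [
            [sum(col) / len(cl) for col in zip(*cl)] if cl else centers[i]
            for i, cl in enumerate(clusters)
        ]
        if new_centers == centers:  # converged: assignments no longer change
            break
        centers = new_centers
    return centers, clusters

# Hypothetical spectral features of fault samples
points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (5.0, 5.0), (5.2, 4.9), (4.8, 5.1)]
centers, clusters = kmeans(points, [(0.0, 0.0), (6.0, 6.0)])
```

The result depends on the initial centers, which is why the initialization step is called out explicitly in the text.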

In the fault distribution index section of the embedded software, phase characteristic analysis of the faulty part is carried out [11,12], and the deviation degree S and the similarity of the independent, identically distributed random variables at the software dynamic image fault node are computed.

Sample regression analysis is performed on the fault code data collected for software dynamic image fault detection under the fault condition, and the correlation function of the fault sample characteristic data is calculated.

Here, the software dynamic image fault feature sequence is sampled at a finite number of time-domain points, and the correlation integral of the correlation dimension obeys an exponential law.
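The correlation integral mentioned above is commonly computed with the Grassberger-Procaccia method: embed the sampled sequence with a delay, count the fraction of point pairs closer than r, and read the correlation dimension D from the slope of log C(r) against log r. This sketch assumes that reading, interprets the text's "exponential law" as the scaling C(r) ∝ r^D, and treats the embedding dimension `m`, delay `tau`, and the two radii as user-chosen parameters.

```python
from math import dist, log, sin

def correlation_integral(x, m, tau, r):
    """Fraction of distinct pairs of delay vectors closer than r (Grassberger-Procaccia C(r))."""
    n = len(x) - (m - 1) * tau
    vectors = [tuple(x[i + j * tau] for j in range(m)) for i in range(n)]
    close = sum(
        1
        for i in range(n)
        for j in range(i + 1, n)
        if dist(vectors[i], vectors[j]) < r
    )
    return 2.0 * close / (n * (n - 1))

def correlation_dimension(x, m, tau, r1, r2):
    """Slope of log C(r) against log r between two radii assumed to lie in the scaling region."""
    c1 = correlation_integral(x, m, tau, r1)
    c2 = correlation_integral(x, m, tau, r2)
    return (log(c2) - log(c1)) / (log(r2) - log(r1))

# Example sequence: a sampled sinusoid, whose 2-D delay embedding is a closed curve
x = [sin(0.1 * k) for k in range(500)]
D = correlation_dimension(x, m=2, tau=16, r1=0.25, r2=1.0)
```

Because the embedded sinusoid traces a one-dimensional curve, the estimated D should come out close to 1; a real fault sequence would need the radii chosen inside its own scaling region.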

From the sample information acquired for fault code detection, the length between the optimal sampling points S of the software dynamic image fault signal is obtained [13,14], and from it the fault signal length l. The expression for l involves two feature decomposition coefficients, the software dynamic image fault signal itself, a scale for a given wide-band high-resolution software dynamic image fault, and the energy of the fault signal. Statistical regression analysis is then carried out on the detection samples, pattern recognition is applied to the fault signal, and the sensing information fusion method is combined with these results to detect software dynamic image faults in the embedded software [15,16].

2.2. Multidimensional Wavelet Decomposition of Fault Recovery Path

When constructing the software dynamic image fault signal model, the signal must be denoised and purified to improve fault detection performance. In wavelet analysis, a family of basis functions is obtained from the mother wavelet $\psi(t)$ by translation and dilation:

$$\psi_{a,b}(t) = \frac{1}{\sqrt{a}}\,\psi\!\left(\frac{t-b}{a}\right),$$

where $a \in \mathbb{R}^{+}$ (the set of positive real numbers) is the scale parameter and $b \in \mathbb{R}$ is the translation parameter. For large values of $a$, the basis function is a stretched wavelet, which is a low-frequency function; for small values of $a$, it is a compressed wavelet, which is a narrow high-frequency function.

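The wavelet transform $W_f(a,b)$ of a signal $f(t)$ is defined as

$$W_f(a,b) = \frac{1}{\sqrt{a}} \int_{-\infty}^{\infty} f(t)\, \psi^{*}\!\left(\frac{t-b}{a}\right) dt,$$

where $\psi^{*}$ denotes the complex conjugate of the mother wavelet $\psi$.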
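The wavelet transform definition can be checked numerically, taking W_f(a, b) = a^(-1/2) ∫ f(t) ψ*((t − b)/a) dt as the standard continuous wavelet transform. This sketch approximates the integral with a Riemann sum and assumes the Mexican-hat (Ricker) mother wavelet, which is real, so the complex conjugate has no effect; the integration limits and step size are assumptions chosen so the wavelet has decayed at both ends.

```python
from math import exp, pi, sin, sqrt

def mexican_hat(t):
    """Mexican-hat (Ricker) mother wavelet, up to a normalization constant; real-valued."""
    return (1.0 - t * t) * exp(-t * t / 2.0)

def cwt_coefficient(f, a, b, t0, t1, dt=0.01):
    """Riemann-sum approximation of W_f(a, b) = a**-0.5 * integral of f(t) * psi((t - b) / a) dt
    over [t0, t1). psi is real, so its complex conjugate equals psi."""
    steps = int(round((t1 - t0) / dt))
    total = sum(f(t0 + k * dt) * mexican_hat((t0 + k * dt - b) / a) for k in range(steps))
    return total * dt / sqrt(a)

def slow(t):
    """Low-frequency test signal: a 0.1 Hz sinusoid."""
    return sin(2 * pi * 0.1 * t)

w_large = cwt_coefficient(slow, a=2.25, b=2.5, t0=-17.5, t1=22.5)
w_small = cwt_coefficient(slow, a=0.25, b=2.5, t0=-17.5, t1=22.5)
```

For this slow sinusoid the coefficient at scale a = 2.25 is far larger than at a = 0.25, consistent with large scales responding to low frequencies and small scales to high ones.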

The time-frequency resolution of the wavelet transform is variable: the time window is shorter at high frequencies, and the frequency bandwidth is narrower at low frequencies. Wavelet analysis decomposes a signal into two parts, an approximation and a detail, by passing the original signal through two complementary filters. The approximation represents the high-scale, low-frequency components of the signal, while the detail represents the low-scale, high-frequency components. The approximation can itself be decomposed into a next-level approximation and detail, and repeating this process breaks the signal into many lower-resolution components. In wavelet packet analysis, the details are decomposed in the same way as the approximations, so an n-level wavelet packet decomposition produces 2^n different sets of coefficients.
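The approximation/detail split and its wavelet packet extension can be sketched with the Haar wavelet, chosen here only because its filters are two-tap sums and differences; the paper does not name a wavelet. Every node is split again, so an n-level decomposition yields 2^n coefficient sets, whereas the ordinary discrete wavelet transform splits only the approximation and yields n + 1.

```python
from math import sqrt

def haar_step(x):
    """One orthonormal Haar analysis step on an even-length list: (approximation, detail)."""
    s = 1 / sqrt(2)
    pairs = range(0, len(x) - 1, 2)
    return [(x[i] + x[i + 1]) * s for i in pairs], [(x[i] - x[i + 1]) * s for i in pairs]

def wavelet_packet(x, levels):
    """Wavelet packet tree: split BOTH approximation and detail nodes at every level.
    Keys are paths such as 'ad' (approximation, then detail).
    len(x) must be divisible by 2**levels."""
    nodes = {"": list(x)}
    for _ in range(levels):
        nodes = {
            path + tag: coeffs
            for path, parent in nodes.items()
            for tag, coeffs in zip("ad", haar_step(parent))
        }
    return nodes

signal = [1, 2, 3, 4, 5, 6, 7, 8]
leaves = wavelet_packet(signal, 3)
```

Because the orthonormal Haar step preserves energy, the squared coefficients across all eight leaves sum to the energy of the input signal.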

First, the signal is decomposed by the wavelet transform as shown in Figure 3. The noise is usually contained in the high-frequency or low-frequency parts of the signal, so the wavelet coefficients are processed with thresholds as required, and reconstructing the signal from the processed coefficients then reduces the noise. In the figure, cA and cD are the returned low-frequency (approximation) and high-frequency (detail) coefficient vectors, respectively. For further decomposition, the low-frequency part of the first level is decomposed into the low-frequency and high-frequency parts of the second level, and so on. After the discrete wavelet transform of the sampled noisy signal, the linearity of the wavelet transform means that each wavelet coefficient still consists of two parts: the coefficient corresponding to the useful signal and the coefficient corresponding to the noise.

When a signal rich in frequency components is used to excite the system, the degree to which each frequency component of the sampled echo signal, as resolved by the wavelet transform, is suppressed or enhanced changes. Typically, some frequency components are clearly suppressed while others are enhanced. Compared with the output of the normal system, the energy in the same frequency band can therefore differ greatly, decreasing in some bands and increasing in others, so the signals of each frequency component can be separated correctly.
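The band-energy comparison described above can be sketched by computing the share of signal energy in each wavelet packet band for a normal and a test signal and taking the difference. This is a minimal illustration assuming Haar wavelet packets and signal lengths divisible by 2^levels; the example signals are made up.

```python
from math import sqrt

def haar_step(x):
    """One orthonormal Haar analysis step: (approximation, detail)."""
    s = 1 / sqrt(2)
    pairs = range(0, len(x) - 1, 2)
    return [(x[i] + x[i + 1]) * s for i in pairs], [(x[i] - x[i + 1]) * s for i in pairs]

def relative_band_energy(x, levels=2):
    """Share of total energy in each wavelet packet band.
    Bands come out in natural order (for levels=2: aa, ad, da, dd);
    in frequency order the last two swap."""
    bands = [list(x)]
    for _ in range(levels):
        bands = [half for band in bands for half in haar_step(band)]
    energies = [sum(c * c for c in band) for band in bands]
    total = sum(energies)
    return [e / total if total > 0 else 0.0 for e in energies]

def energy_shift(normal, test, levels=2):
    """Per-band change in energy share of the test signal relative to the normal one."""
    return [t - n for n, t in zip(relative_band_energy(normal, levels),
                                  relative_band_energy(test, levels))]

normal = [1.1, 0.9, 1.1, 0.9, -0.9, -1.1, -0.9, -1.1]
# Hypothetical fault: an added alternating (highest-frequency) component
faulty = [v + (0.5 if i % 2 == 0 else -0.5) for i, v in enumerate(normal)]
shift = energy_shift(normal, faulty)
```

In this example the energy share moves out of band 0 ("aa", lowest frequency) and into band 2 ("da", the highest-frequency band), which is the kind of per-band change the text uses to separate frequency components.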

Generally speaking, wavelet denoising consists of three steps. (1) Multidimensional wavelet decomposition: select a wavelet, determine the number of decomposition levels, and perform a multilevel wavelet decomposition to obtain a set of wavelet coefficients. (2) Threshold quantization of the high-frequency coefficients: for the high-frequency coefficients of each level from the first to the nth, select a threshold and quantize the coefficients, shrinking the noise-dominated ones toward zero to obtain the estimated wavelet coefficients. (3) Multidimensional wavelet reconstruction: reconstruct the signal from the low-frequency coefficients of the nth level and the quantized high-frequency coefficients of levels 1 to n.
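The three denoising steps can be sketched as follows. The specific choices are assumptions not stated in the text: the Haar wavelet, soft thresholding, and the Donoho-Johnstone universal threshold σ√(2 ln N), with σ estimated as the median absolute finest-level detail coefficient divided by 0.6745.

```python
import random
from math import log, sqrt
from statistics import median

S = 1 / sqrt(2)

def haar_forward(x):
    """Orthonormal Haar analysis step: (approximation, detail)."""
    pairs = range(0, len(x) - 1, 2)
    return [(x[i] + x[i + 1]) * S for i in pairs], [(x[i] - x[i + 1]) * S for i in pairs]

def haar_inverse(approx, detail):
    """Exact inverse of haar_forward."""
    out = []
    for a, d in zip(approx, detail):
        out += [(a + d) * S, (a - d) * S]
    return out

def soft(c, t):
    """Soft thresholding: zero small coefficients, shrink the rest toward zero by t."""
    return 0.0 if abs(c) <= t else (abs(c) - t) * (1.0 if c > 0 else -1.0)

def wavelet_denoise(x, levels):
    """Three-step denoising; len(x) must be divisible by 2**levels."""
    # Step 1: multilevel decomposition
    approx, details = list(x), []
    for _ in range(levels):
        approx, d = haar_forward(approx)
        details.append(d)  # details[0] is the finest (first) level
    # Step 2: threshold the detail coefficients of levels 1..n
    sigma = median(abs(c) for c in details[0]) / 0.6745  # robust noise estimate
    t = sigma * sqrt(2 * log(len(x)))                     # universal threshold
    details = [[soft(c, t) for c in d] for d in details]
    # Step 3: reconstruct from the level-n approximation and thresholded details
    for d in reversed(details):
        approx = haar_inverse(approx, d)
    return approx

def mse(u, ref):
    """Mean squared error between two equal-length lists."""
    return sum((a - b) ** 2 for a, b in zip(u, ref)) / len(ref)

rng = random.Random(0)
clean = [0.0] * 128 + [2.0] * 128
noisy = [v + rng.gauss(0, 0.3) for v in clean]
denoised = wavelet_denoise(noisy, levels=4)
```

On this step signal the denoised error is well below the noisy error, because the noise spreads across all detail coefficients while the step lives almost entirely in the coarse approximation.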

The multiresolution property of wavelets allows a signal to be decomposed at different scales, separating interwoven mixtures of different frequencies into subsignals in different frequency bands, so the signal can be processed band by band. Assuming the noise is stationary Gaussian white noise, the average power of its wavelet coefficients is inversely proportional to the scale, and the amplitude of the discrete detail signal decreases as the scale increases. Because the wavelet transform is linear, the wavelet coefficients of the degraded signal are the sum of the wavelet coefficients of the signal and those of the noise; likewise, the discrete approximation and discrete detail parts of the degraded signal are the sums of the corresponding parts of the transformed signal and the transformed noise. Because the signal and the white noise have wavelet coefficients with different properties, denoising can eliminate or weaken the noise. Wavelet denoising exploits the different behavior of the signal at different resolutions: different thresholds are set at different resolutions, and the wavelet coefficients are adjusted accordingly to remove noise. Because the length of a software dynamic image fault signal affects its amplitude, the signal length is taken as a characteristic quantity [17,18], which effectively reflects the fault category of the equipment. With the maximum energy of the fault signal as a reference, λ is used as a correlation coefficient to describe the signal and improve the resolution and sensitivity of the fault signal, and the amplitude of the fault beam of the embedded software is taken as the effective characteristic quantity. The maximum peak-to-valley difference of the software dynamic image fault signal is then calculated.
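The peak-to-valley difference and the energy relative to the maximum energy can be computed per analysis window. This sketch assumes non-overlapping windows of a caller-chosen length and normalizes each window's energy by the largest window energy; since the paper's own formula is not recoverable here, the function `window_features` and these definitions are illustrative only.

```python
def window_features(x, window):
    """For each non-overlapping window: (peak-to-valley difference, energy / max window energy)."""
    windows = [x[i:i + window] for i in range(0, len(x) - window + 1, window)]
    energies = [sum(v * v for v in w) for w in windows]
    e_max = max(energies)
    return [(max(w) - min(w), e / e_max if e_max > 0 else 0.0)
            for w, e in zip(windows, energies)]

# Hypothetical sampled fault signal: the second window has a larger swing
features = window_features([0, 1, 0, -1, 0, 3, 0, -3], window=4)
```

The second window has peak-to-valley difference 6 and carries the maximum energy, so its normalized energy is 1.0; the first window's swing is 2 with one ninth of that energy.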

Spectrum decomposition and blind source separation are then performed on the dynamic image fault signal s of the embedded software under multiple loads [19,20]. A wavelet detector combines the time-domain and frequency-domain feature quantities of the signal to estimate the joint parameters, as shown in Figure 4.

Signal denoising is carried out with the wavelet detector shown in Figure 4. The broadband high-resolution software dynamic image fault signal is modeled as a set of stationary random signals [21,22,23], characterized by its multilayer wavelet scale feature decomposition, the joint time-frequency parameter estimate, and the resulting discrete signal; the fault code width for software dynamic image fault detection in the embedded software is also determined. The depth of the fault signal directly affects its amplitude, represents the depth feature, and can be used to calculate the energy distribution characteristic quantity of the fault signal.

The correlations among the feature quantities of the software dynamic image fault signal are analyzed and compared. Combining association rule mining with spectrum feature extraction gives the aggregation degree of the fault signal in each feature quantity. The estimated feature quantities together reflect the characteristics of the software dynamic image fault comprehensively, and combining them with the fault code detection method achieves software dynamic image fault detection [24,25,26].
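The correlation analysis among feature quantities can be approximated with pairwise Pearson correlation, flagging strongly related pairs. This is a stand-in for the association rule mining mentioned in the text, not an implementation of it; the feature names, values, and the 0.8 threshold are hypothetical.

```python
from itertools import combinations
from math import sqrt

def pearson(u, v):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(u)
    mu, mv = sum(u) / n, sum(v) / n
    cov = sum((a - mu) * (b - mv) for a, b in zip(u, v))
    su = sqrt(sum((a - mu) ** 2 for a in u))
    sv = sqrt(sum((b - mv) ** 2 for b in v))
    return cov / (su * sv)

def correlated_pairs(features, threshold=0.8):
    """Return (name, name, r) for every feature pair with |r| >= threshold."""
    pairs = []
    for f, g in combinations(features, 2):
        r = pearson(features[f], features[g])
        if abs(r) >= threshold:
            pairs.append((f, g, r))
    return pairs

# Hypothetical per-sample feature quantities of a fault signal
features = {
    "length": [1, 2, 3, 4, 5],
    "amplitude": [2.1, 3.9, 6.2, 8.0, 9.9],
    "energy": [3, 5, 1, 4, 2],
}
pairs = correlated_pairs(features)
```

Only length and amplitude are strongly correlated here, so one of them could be treated as redundant when the feature quantities are combined.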