Down Syndrome Face Recognition: A Review

Abstract

1. Introduction

2. Face Recognition Background

Challenges Facing Face Recognition in Down Syndrome (DS)

- Illumination: Recognition performance drops when illumination changes, owing to skin reflectance and internal camera control. The appearance of a face changes drastically under varying illumination. Notably, the difference between images of two subjects under the same illumination condition is lower than the difference between images of the same subject taken under varying illumination conditions.

- Pose Variation: Changes in pose introduce self-occlusion and projective deformations that affect face authentication. The face images stored in the database may be frontal views, so an input image taken in a different pose can lead to incorrect face detection. A consistent, neutral viewing angle is therefore needed for both the input image and the database images.

- Facial Expression: Facial expressions also pose problems because facial gestures vary, but they have less effect on face detection. Algorithms are relatively robust to facial expression, with the exception of the scream expression.

- Timing: Because the face changes over time, a long delay between captures may affect the identification process in a nonlinear way, a problem that has proven difficult to solve.

- Aging Variation: Humans can identify faces easily even as the subject ages, but computer algorithms cannot. Increasing age changes a person's appearance, which in turn affects the recognition rate.

- Occlusion: When part of the input face is unavailable because of glasses, a moustache, a beard, a scarf, etc., recognition performance can degrade drastically.

- Resolution: Images captured by a surveillance camera generally have a very small face area and low resolution. Even with a good camera, the acquisition distance strongly affects how much of the information needed for face identification is available.
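
The illumination point above can be illustrated with a toy example. The following is a minimal sketch using synthetic NumPy arrays rather than real face images: the helper names (`synthetic_face`, `illuminate`, `distance`), the reflectance range, and the linear lighting ramp are all assumptions chosen for illustration, not part of any method surveyed here. It only shows how a change in lighting direction can move an image further in pixel space than a change of subject does.

```python
import numpy as np

def synthetic_face(seed, size=32):
    """Hypothetical 'face': a blocky random reflectance map with values in [0.4, 0.6]."""
    rng = np.random.default_rng(seed)
    coarse = rng.uniform(0.4, 0.6, size=(4, 4))
    # Upsample the 4x4 grid so each subject has a distinct coarse structure.
    return np.kron(coarse, np.ones((size // 4, size // 4)))

def illuminate(reflectance, direction):
    """Multiply by a horizontal lighting ramp; direction=+1 or -1 flips the ramp."""
    ramp = np.linspace(0.2, 1.0, reflectance.shape[1])
    if direction < 0:
        ramp = ramp[::-1]
    return reflectance * ramp[np.newaxis, :]

def distance(a, b):
    """Euclidean (L2) distance between two images of equal shape."""
    return float(np.linalg.norm(a - b))

subject_a = synthetic_face(seed=0)
subject_b = synthetic_face(seed=1)

# Two different subjects under the same lighting.
between_subjects = distance(illuminate(subject_a, +1), illuminate(subject_b, +1))
# The same subject under two opposite lighting directions.
within_subject = distance(illuminate(subject_a, +1), illuminate(subject_a, -1))

print(f"different subjects, same lighting: {between_subjects:.2f}")
print(f"same subject, different lighting:  {within_subject:.2f}")
```

With these assumed parameters the within-subject distance exceeds the between-subject distance, which is why raw pixel comparison is usually preceded by some form of illumination normalization. The numbers themselves carry no empirical weight.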

3. Down Syndrome Datasets

4. DS Face Recognition Pipeline

4.1. Face Detection

4.1.1. Feature Invariant

4.1.2. Appearance-Based

4.2. Feature Extraction

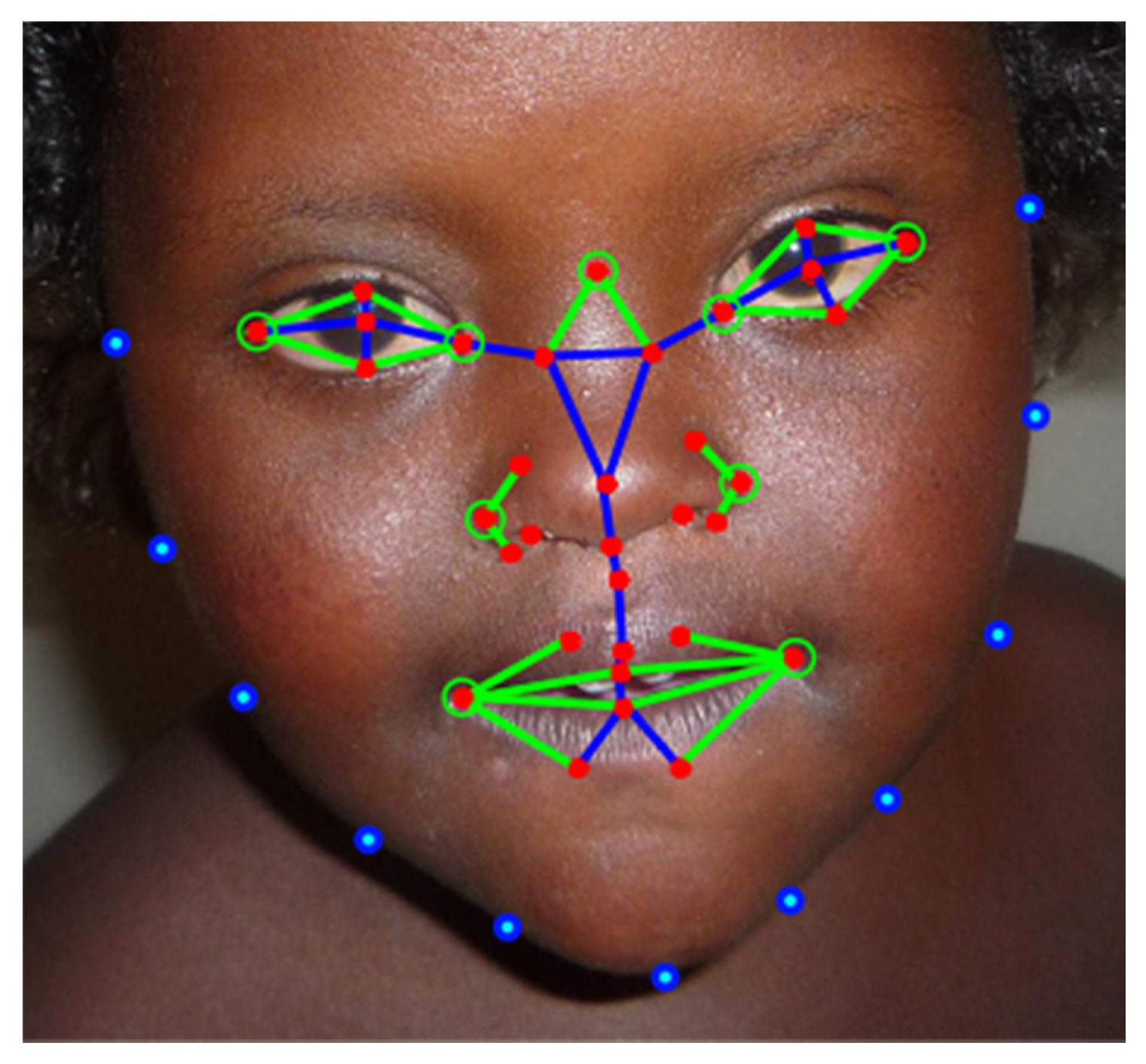

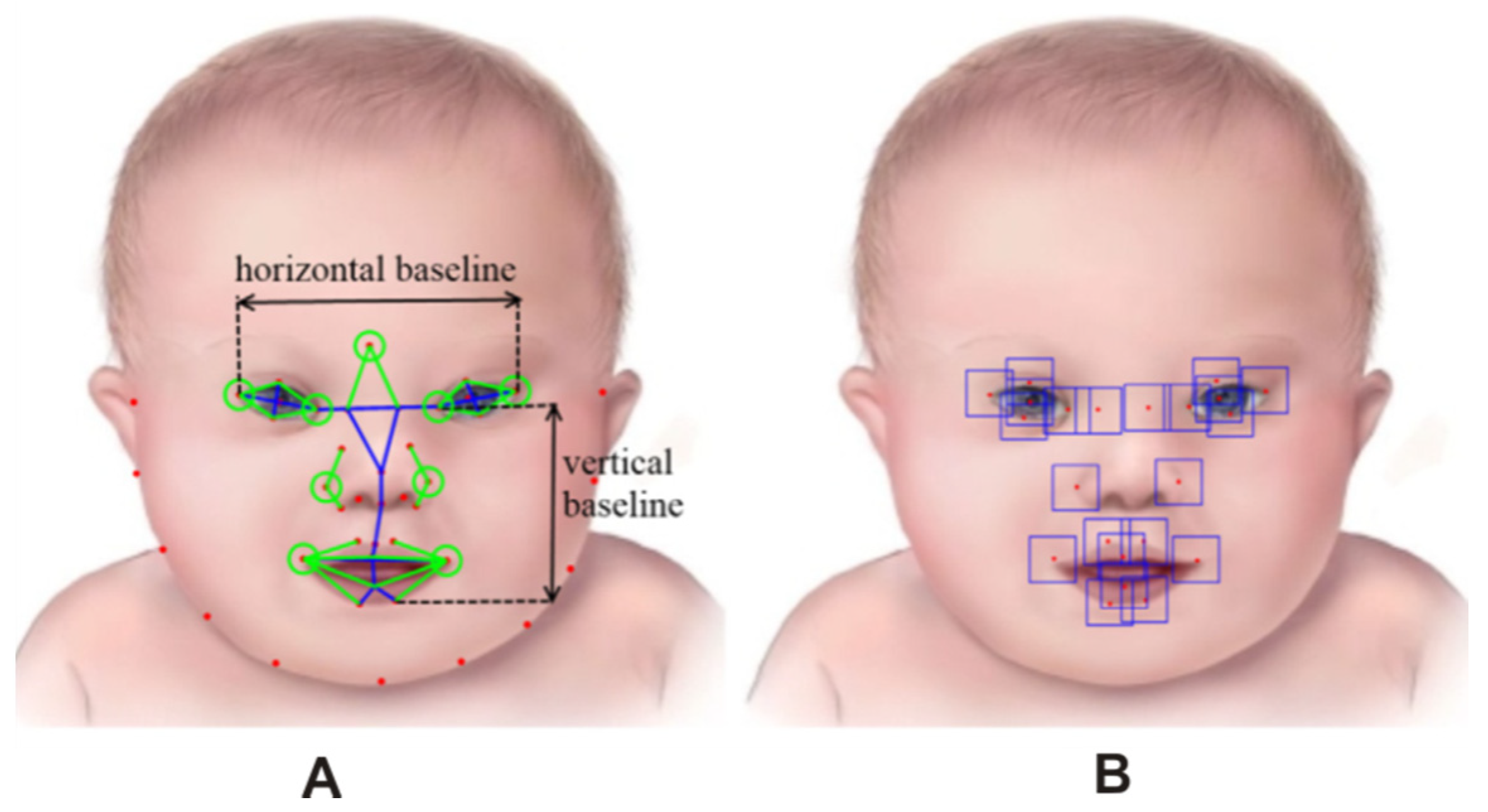

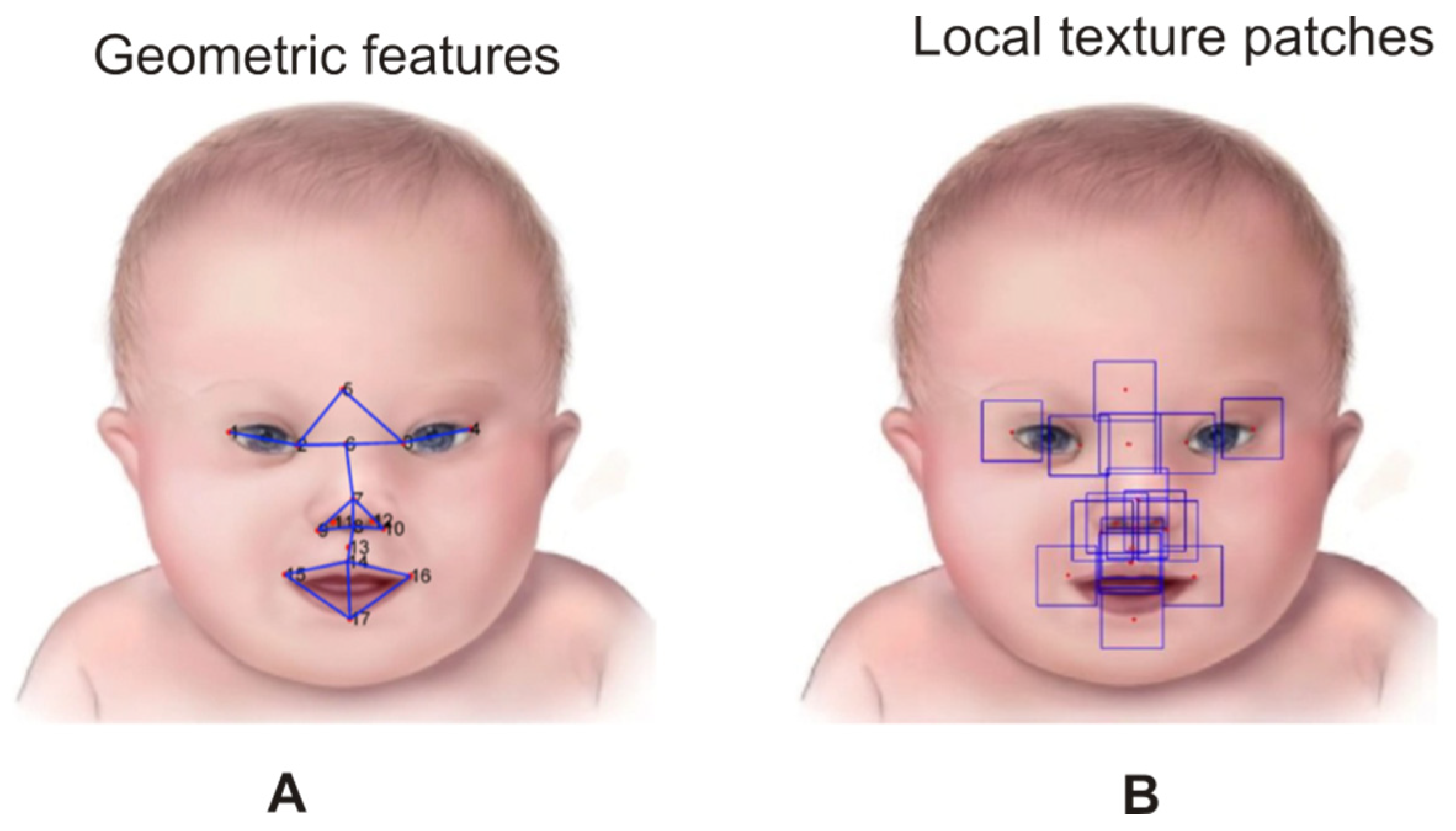

4.2.1. Local Feature-Based

4.2.2. Statistical-Based

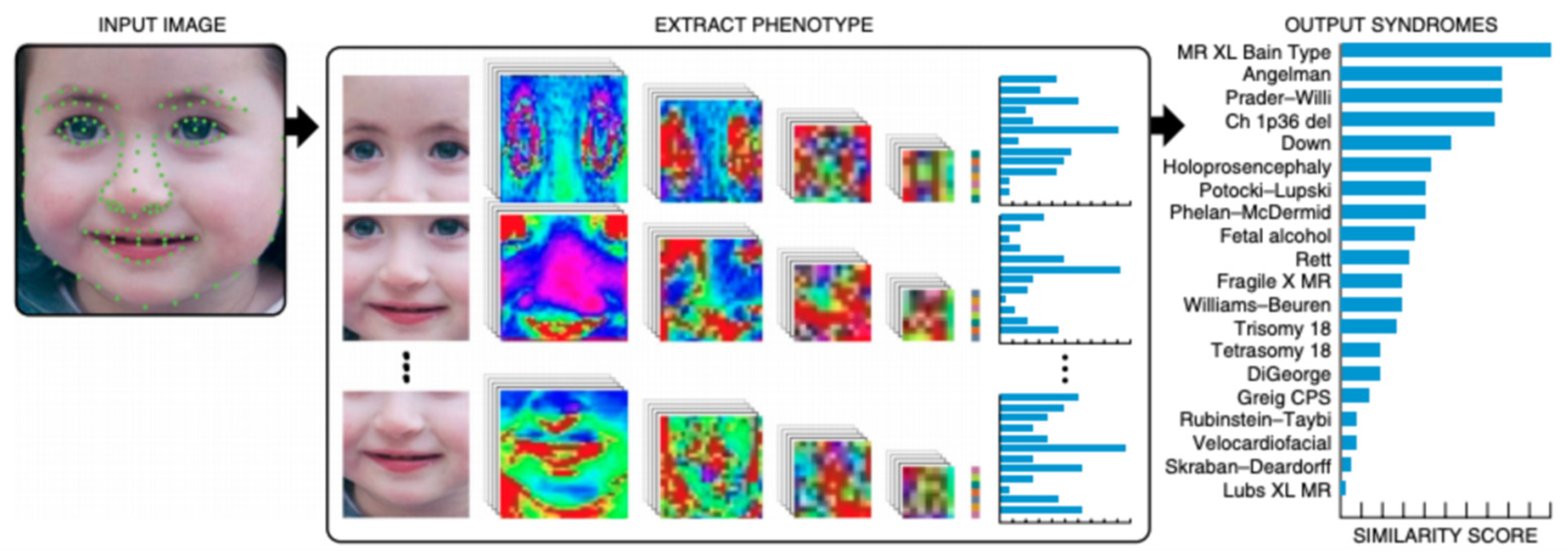

4.2.3. Neural Network-Based

4.2.4. Feature Extraction Comparison

4.3. Classification

4.3.1. Support Vector Machine (SVM) Approach

4.3.2. Neural Network Approach

4.3.3. Other Classifiers

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Antonarakis, S.E.; Lyle, R.; Dermitzakis, E.T.; Reymond, A.; Deutsch, S. Chromosome 21 and Down syndrome: From genomics to pathophysiology. Nat. Rev. Genet. 2004, 5, 725.

- Malt, E.A.; Dahl, R.C.; Haugsand, T.M.; Ulvestad, I.H.; Emilsen, N.M.; Hansen, B.; Cardenas, Y.; Skøld, R.O.; Thorsen, A.; Davidsen, E. Health and disease in adults with Down syndrome. Tidsskr. Nor. Laegeforen. Tidsskr. Prakt. Med. Raekke 2013, 133, 290–294.

- Hickey, F.; Hickey, E.; Summar, K.L. Medical update for children with Down syndrome for the pediatrician and family practitioner. Adv. Pediatr. 2012, 59, 137.

- Patterson, D. Molecular genetic analysis of Down syndrome. Hum. Genet. 2009, 126, 195–214.

- Hitzler, J.K.; Zipursky, A. Origins of leukaemia in children with Down syndrome. Nat. Rev. Cancer 2005, 5, 11.

- Ram, G.; Chinen, J. Infections and immunodeficiency in Down syndrome. Clin. Exp. Immunol. 2011, 164, 9–16.

- Lintas, C.; Sacco, R.; Persico, A.M. Genome-Wide expression studies in autism spectrum disorder, Rett syndrome, and Down syndrome. Neurobiol. Dis. 2012, 45, 57–68.

- Bloemers, B.L.; van Bleek, G.M.; Kimpen, J.L.; Bont, L. Distinct abnormalities in the innate immune system of children with Down syndrome. J. Pediatr. 2010, 156, 804–809.

- Bittles, A.H.; Bower, C.; Hussain, R.; Glasson, E.J. The four ages of Down syndrome. Eur. J. Public Health 2007, 17, 221–225.

- Kusters, M.; Verstegen, R.; Gemen, E.; De Vries, E. Intrinsic defect of the immune system in children with Down syndrome: A review. Clin. Exp. Immunol. 2009, 156, 189–193.

- Farkas, L.G.; Katic, M.J.; Forrest, C.R. Surface anatomy of the face in Down’s syndrome: Age-Related changes of anthropometric proportion indices in the craniofacial regions. J. Craniofac. Surg. 2002, 13, 368–374.

- Farkas, L.G.; Munro, I.R. Anthropometric Facial Proportions in Medicine; Charles C. Thomas Publisher: Springfield, IL, USA, 1987.

- Cornejo, J.Y.R.; Pedrini, H.; Machado-Lima, A.; dos Santos Nunes, F.D.L. Down syndrome detection based on facial features using a geometric descriptor. J. Med. Imaging 2017, 4, 044008.

- Reardon, W.; Donnai, D. Dysmorphology demystified. Arch. Dis. Child. Fetal Neonatal Ed. 2007, 92, F225–F229.

- Goldstein, A.G.; Chance, J.E. Recognition of children’s faces: II. Percept. Mot. Skills 1965, 20, 547–548.

- Valentine, T. Cognitive and Computational Aspects of Face Recognition: Explorations in Face Space; Routledge: Abingdon, UK, 2017.

- Kanade, T. Picture Processing System by Computer Complex and Recognition of Human Faces. Ph.D. Thesis, Kyoto University, Kyoto, Japan, 1974.

- Jafri, R.; Arabnia, H.R. A survey of face recognition techniques. J. Inf. Process. Syst. 2009, 5, 41–68.

- Abate, A.F.; Nappi, M.; Riccio, D.; Sabatino, G. 2D and 3D face recognition: A survey. Pattern Recognit. Lett. 2007, 28, 1885–1906.

- Grother, P.J. Face Recognition Vendor Test 2002 Supplemental Report; NIST Interagency/Internal Report: Gaithersburg, MD, USA, 2004.

- Ortega, R.C.M.H.; Solon, C.C.E.; Aniez, O.S.; Maratas, L.L. Examination of facial shape changes associated with cardiovascular disease using geometric morphometrics. Cardiology 2018, 3, 1–10.

- Savriama, Y.; Klingenberg, C.P. Beyond bilateral symmetry: Geometric morphometric methods for any type of symmetry. BMC Evol. Biol. 2011, 11, 280.

- Brunelli, R.; Poggio, T. Face recognition: Features versus templates. IEEE Trans. Pattern Anal. Mach. Intell. 1993, 15, 1042–1052.

- Nixon, M. Eye spacing measurement for facial recognition. In Proceedings of the 1985 Applications of Digital Image Processing VIII, San Diego, CA, USA, 20–22 August 1985; pp. 279–285.

- Chen, L.-F.; Liao, H.-Y.M.; Lin, J.-C.; Han, C.-C. Why recognition in a statistics-based face recognition system should be based on the pure face portion: A probabilistic decision-based proof. Pattern Recognit. 2001, 34, 1393–1403.

- Kirby, M.; Sirovich, L. Application of the Karhunen-Loeve procedure for the characterization of human faces. IEEE Trans. Pattern Anal. Mach. Intell. 1990, 12, 103–108.

- Sirovich, L.; Kirby, M. Low-Dimensional procedure for the characterization of human faces. JOSA A 1987, 4, 519–524.

- Singh, S.K.; Chauhan, D.; Vatsa, M.; Singh, R. A robust skin color based face detection algorithm. Tamkang J. Sci. Eng. 2003, 6, 227–234.

- Yang, M.-H.; Kriegman, D.J.; Ahuja, N. Detecting faces in images: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 34–58.

- Zhao, W.; Chellappa, R.; Phillips, P.J.; Rosenfeld, A. Face recognition: A literature survey. ACM Comput. Surv. CSUR 2003, 35, 399–458.

- Burçin, K.; Vasif, N.V. Down syndrome recognition using local binary patterns and statistical evaluation of the system. Expert Syst. Appl. 2011, 38, 8690–8695.

- Zhao, Q.; Rosenbaum, K.; Sze, R.; Zand, D.; Summar, M.; Linguraru, M.G. Down syndrome detection from facial photographs using machine learning techniques. In Proceedings of the Medical Imaging 2013: Computer-Aided Diagnosis, Lake Buena Vista, FL, USA, 9–14 February 2013; p. 867003.

- Kruszka, P.; Porras, A.R.; Sobering, A.K.; Ikolo, F.A.; La Qua, S.; Shotelersuk, V.; Chung, B.H.; Mok, G.T.; Uwineza, A.; Mutesa, L. Down syndrome in diverse populations. Am. J. Med. Genet. Part A 2017, 173, 42–53.

- Ferry, Q.; Steinberg, J.; Webber, C.; FitzPatrick, D.R.; Ponting, C.P.; Zisserman, A.; Nellåker, C. Diagnostically relevant facial gestalt information from ordinary photos. eLife 2014, 3, e02020.

- Zhao, Q.; Okada, K.; Rosenbaum, K.; Kehoe, L.; Zand, D.J.; Sze, R.; Summar, M.; Linguraru, M.G. Digital facial dysmorphology for genetic screening: Hierarchical constrained local model using ICA. Med. Image Anal. 2014, 18, 699–710.

- Saraydemir, Ş.; Taşpınar, N.; Eroğul, O.; Kayserili, H.; Dinçkan, N. Down syndrome diagnosis based on Gabor wavelet transform. J. Med. Syst. 2012, 36, 3205–3213.

- Shukla, P.; Gupta, T.; Saini, A.; Singh, P.; Balasubramanian, R. A deep learning framework for recognizing developmental disorders. In Proceedings of the 2017 IEEE Winter Conference on Applications of Computer Vision (WACV), Santa Rosa, CA, USA, 27–29 March 2017; pp. 705–714.

- Özdemir, M.E.; Telatar, Z.; Eroğul, O.; Tunca, Y. Classifying dysmorphic syndromes by using artificial neural network based hierarchical decision tree. Australas. Phys. Eng. Sci. Med. 2018, 41, 451–461.

- Gurovich, Y.; Hanani, Y.; Bar, O.; Nadav, G.; Fleischer, N.; Gelbman, D.; Basel-Salmon, L.; Krawitz, P.M.; Kamphausen, S.B.; Zenker, M. Identifying facial phenotypes of genetic disorders using deep learning. Nat. Med. 2019, 25, 60.

- Viola, P.; Jones, M. Rapid object detection using a boosted cascade of simple features. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2001), Kauai, HI, USA, 8–14 December 2001; p. 1.

- Bradski, G.; Kaehler, A. The OpenCV library. Dr Dobb’s J. Softw. Tools 2000, 3, 122–125.

- Kruszka, P.; Addissie, Y.A.; McGinn, D.E.; Porras, A.R.; Biggs, E.; Share, M.; Crowley, T.B.; Chung, B.H.; Mok, G.T.; Mak, C.C. 22q11.2 deletion syndrome in diverse populations. Am. J. Med. Genet. Part A 2017, 173, 879–888.

- Zhu, X.; Ramanan, D. Face detection, pose estimation, and landmark localization in the wild. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 2879–2886.

- Li, H.; Lin, Z.; Shen, X.; Brandt, J.; Hua, G. A convolutional neural network cascade for face detection. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 5325–5334.

- Rai, M.C.E.; Werghi, N.; Al Muhairi, H.; Alsafar, H. Using facial images for the diagnosis of genetic syndromes: A survey. In Proceedings of the 2015 International Conference on Communications, Signal Processing, and Their Applications (ICCSPA’15), Sharjah, UAE, 17–19 February 2015; pp. 1–6.

- Gurovich, Y.; Hanani, Y.; Bar, O.; Fleischer, N.; Gelbman, D.; Basel-Salmon, L.; Krawitz, P.; Kamphausen, S.B.; Zenker, M.; Bird, L.M. DeepGestalt-Identifying rare genetic syndromes using deep learning. arXiv 2018, arXiv:1801.07637.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Ahonen, T.; Hadid, A.; Pietikainen, M. Face description with local binary patterns: Application to face recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 2037–2041.

- Yang, M.-H. Face recognition using kernel methods. In Proceedings of the 2015 Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; pp. 1457–1464.

- Kuru, K.; Niranjan, M.; Tunca, Y.; Osvank, E.; Azim, T. Biomedical visual data analysis to build an intelligent diagnostic decision support system in medical genetics. Artif. Intell. Med. 2014, 62, 105–118.

- Boehringer, S.; Guenther, M.; Sinigerova, S.; Wurtz, R.P.; Horsthemke, B.; Wieczorek, D. Automated syndrome detection in a set of clinical facial photographs. Am. J. Med. Genet. Part A 2011, 155, 2161–2169.

- Boehringer, S.; Vollmar, T.; Tasse, C.; Wurtz, R.P.; Gillessen-Kaesbach, G.; Horsthemke, B.; Wieczorek, D. Syndrome identification based on 2D analysis software. Eur. J. Hum. Genet. 2006, 14, 1082.

- Loos, H.S.; Wieczorek, D.; Würtz, R.P.; von der Malsburg, C.; Horsthemke, B. Computer-Based recognition of dysmorphic faces. Eur. J. Hum. Genet. 2003, 11, 555.

- Zhao, Q.; Okada, K.; Rosenbaum, K.; Zand, D.J.; Sze, R.; Summar, M.; Linguraru, M.G. Hierarchical constrained local model using ICA and its application to Down syndrome detection. In Proceedings of the 2013 International Conference on Medical Image Computing and Computer-Assisted Intervention, Nagoya, Japan, 22–26 September 2013; pp. 222–229.

- Cerrolaza, J.J.; Porras, A.R.; Mansoor, A.; Zhao, Q.; Summar, M.; Linguraru, M.G. Identification of dysmorphic syndromes using landmark-specific local texture descriptors. In Proceedings of the 2016 IEEE 13th International Symposium on Biomedical Imaging (ISBI), Prague, Czech Republic, 13–16 April 2016; pp. 1080–1083.

- Wilfred, O.O.; Lee, G.-B.; Park, J.-J.; Cho, B.-J. Facial component features for facial expression identification using Active Appearance Models. In Proceedings of the AFRICON 2009, Nairobi, Kenya, 23–25 September 2009; pp. 1–5.

- Mahoor, M.H.; Abdel-Mottaleb, M.; Ansari, A.-N. Improved active shape model for facial feature extraction in color images. J. Multimed. 2006, 1, 21–28.

- Lee, Y.-H.; Kim, C.G.; Kim, Y.; Whangbo, T.K. Facial landmarks detection using improved active shape model on android platform. Multimed. Tools Appl. 2015, 74, 8821–8830.

- Erogul, O.; Sipahi, M.E.; Tunca, Y.; Vurucu, S. Recognition of down syndromes using image analysis. In Proceedings of the 2009 14th National Biomedical Engineering Meeting, Izmir, Turkey, 20–22 May 2009; pp. 1–4.

- Gabor, D. Theory of communication. Part 1: The analysis of information. J. Inst. Electr. Eng. Part III Radio Commun. Eng. 1946, 93, 429–441.

- Bruna, J.; Mallat, S. Invariant scattering convolution networks. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1872–1886.

- Vorravanpreecha, N.; Lertboonnum, T.; Rodjanadit, R.; Sriplienchan, P.; Rojnueangnit, K. Studying Down syndrome recognition probabilities in Thai children with de-identified computer-aided facial analysis. Am. J. Med. Genet. Part A 2018, 176, 1935–1940.

- Intrator, N.; Reisfeld, D.; Yeshurun, Y. Face recognition using a hybrid supervised/unsupervised neural network. Pattern Recognit. Lett. 1996, 17, 67–76.

- Vapnik, V. The Nature of Statistical Learning Theory; Springer Science & Business Media: Berlin, Germany, 2013.

- Rejani, Y.; Selvi, S.T. Early detection of breast cancer using SVM classifier technique. arXiv 2009, arXiv:0912.2314.

- Kohonen, T. Self-Organization and Associative Memory; Springer Science & Business Media: Berlin, Germany, 2012; Volume 8.

- Islam, M.J.; Wu, Q.J.; Ahmadi, M.; Sid-Ahmed, M.A. Investigating the performance of naive-bayes classifiers and k-nearest neighbor classifiers. In Proceedings of the 2007 International Conference on Convergence Information Technology (ICCIT 2007), Gyeongju, Korea, 21–23 November 2007; pp. 1541–1546.

- Amadasun, M.; King, R. Improving the accuracy of the Euclidean distance classifier. Can. J. Electr. Comput. Eng. 1990, 15, 16–17.

- Jain, A.K.; Duin, R.P.W.; Mao, J. Statistical pattern recognition: A review. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 4–37.

| Ref | Databases | Total Images | DS Images | Landmarks | Detection Methods |
|---|---|---|---|---|---|
| [34] | Healthy & 8 known disorders including DS | 2878 | 209 | 36 | OpenCV |
| [35] | Healthy & 8 known syndromes including DS | 154 | 50 | 44 | Viola–Jones (Haar-like) |
| [33] | Healthy & DS | 261 | 129 | 44 | Viola–Jones (Haar-like) |
| [37] | 6 known syndromes including DS | 1126 | 537 | 68 | PASCAL VOC |
| [13] | Healthy & DS | 306 | 153 | 16 | Viola–Jones (Haar-like) |
| [39] | 216 known syndromes including DS | 17,000 | - | 130 | DCNN cascade |

| Ref | Databases | Total Images | Features Extracted | Extraction Methods |
|---|---|---|---|---|
| [31] | Healthy & DS | 107 | 59 | LBP |
| [33] | Healthy & DS | 261 | 126 | Geometric + texture features |
| [35] | Healthy & DS | 130 | 27 | ICA |
| [34] | Healthy & 8 known disorders including DS | 2878 | 630 | PCA |
| [36] | Healthy & DS | 30 | 2000 | GWT + PCA + LDA |
| [37] | 6 known syndromes including DS | 1126 | - | - |
| [55] | Healthy & 15 known disorders including DS | 145 | 27 | Local texture descriptors |
| [59] | Healthy & DS | 36 | - | EFBG |
| [54] | - | 100 | 159 | HCLM |
| [32] | Healthy & DS | 48 | - | Geometric + local feature |
| [13] | Healthy & DS | 306 | - | Geometric + CENTRIST |
| [38] | 5 known syndromes including DS | 160 | 18 | Geometric features |
| [62] | - | 175 | - | DCNN |
| [39] | 216 known syndromes including DS | 17,000 | - | DCNN |

| Year | Ref | Features Classified | Classifiers | Training/Testing | Sensitivity | Specificity | Accuracy (%) |
|---|---|---|---|---|---|---|---|
| 2009 | [59] | - | ANN | - | - | - | 68.7 |
| 2011 | [31] | 59 | ED | - | 0.98 | 0.90 | 95.35 |
| 2012 | [36] | 2000 | KNN | - | 0.960 | 0.960 | 96 |
| 2012 | [36] | 2000 | SVM | - | 0.973 | 0.973 | 97.34 |
| 2013 | [54] | 159 | SVM-RBF | - | - | - | 96.5 |
| 2013 | [32] | - | SVM | - | - | - | 97.9 |
| 2014 | [34] | 630 | SVM | - | - | - | 94.4 |
| 2014 | [35] | 27 | SVM | - | - | - | 96.7 |
| 2016 | [55] | 27 | SVM-RBF | - | 0.86 | 0.96 | 91 |
| 2017 | [33] | 126 | SVM | - | 0.961 | 0.924 | 94.3 |
| 2017 | [13] | - | SVM | - | - | - | 98.39 |
| 2017 | [37] | - | DCNN | 1126/1126 | - | - | 98.8 |
| 2018 | [38] | 18 | ANN-HDT | 130/30 | - | - | 86.7 |
| 2018 | [62] | - | DCNN | - | 1.0 | 0.872 | 89 |
| 2019 | [39] | - | DCNN | 90%/10% | - | - | 91 |
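
For readers comparing the rows above, the following minimal sketch shows how sensitivity, specificity, and accuracy are conventionally derived from a binary confusion matrix (DS as the positive class). The function name `binary_metrics` and the counts in the usage line are hypothetical and are not taken from any of the cited studies.

```python
def binary_metrics(tp, fn, tn, fp):
    """Return (sensitivity, specificity, accuracy) from confusion-matrix counts.

    tp: DS images classified as DS        fn: DS images classified as non-DS
    tn: non-DS images classified as non-DS  fp: non-DS images classified as DS
    """
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("each class needs at least one test sample")
    sensitivity = tp / (tp + fn)              # true positive rate
    specificity = tn / (tn + fp)              # true negative rate
    accuracy = (tp + tn) / (tp + fn + tn + fp)
    return sensitivity, specificity, accuracy

# Hypothetical test set: 50 DS images and 50 non-DS images.
sens, spec, acc = binary_metrics(tp=48, fn=2, tn=45, fp=5)
print(f"sensitivity={sens:.3f} specificity={spec:.3f} accuracy={100 * acc:.1f}%")
```

Note that the table reports sensitivity and specificity as fractions but accuracy as a percentage, so the sketch scales only the accuracy by 100 when printing.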

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Agbolade, O.; Nazri, A.; Yaakob, R.; Ghani, A.A.; Cheah, Y.K. Down Syndrome Face Recognition: A Review. Symmetry 2020, 12, 1182. https://doi.org/10.3390/sym12071182