Controlling Safety of Artificial Intelligence-Based Systems in Healthcare

Abstract

1. Introduction

2. Methodology

2.1. List of Attributes

2.1.1. Systematic Review

2.1.2. Interviews

- Q1. What are the attributes of safety policies for implemented AI models in healthcare?

- Q2. What are the attributes of incentives for clinicians for implemented AI models in healthcare?

- Q3. What are the attributes of clinician and patient training for implemented AI models in healthcare?

- Q4. What are the attributes of communication and interaction for implemented AI models in healthcare?

- Q5. What are the attributes of planning of actions for implemented AI models in healthcare?

- Q6. What are the attributes of control of actions for implemented AI models in healthcare?

2.2. Weight of Attributes

2.3. The Rating System

- (1) 0/1, in which the rating options are “0” (no) or “1” (yes),

- (2) 0–1, in which the rating options are a fraction between “0” and “1”,

- (3) 0/1/NA, in which the rating options are “0” or “1” or “not applicable”, and

- (4) 0–1/NA, in which the rating options are a fraction between “0” and “1” or “not applicable.”
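The four rating systems can be illustrated with a minimal Python sketch. It is not the authors' implementation: the names `Attribute`, `validate`, and `safety_score` are hypothetical, the ratings in the example are invented for illustration, and the handling of "not applicable" (dropping the attribute and rescaling by the remaining weight) is an assumption, since the list above does not say how NA ratings enter a total score. The weights in the example are taken from the attribute table in this paper.

```python
from dataclasses import dataclass
from typing import Iterable, Optional

# ASCII labels for the four rating systems ("0-1" is the fractional scale).
BINARY = {"0/1", "0/1/NA"}
FRACTIONAL = {"0-1", "0-1/NA"}
ALLOWS_NA = {"0/1/NA", "0-1/NA"}


@dataclass
class Attribute:
    question: str
    weight: float
    system: str
    rating: Optional[float]  # None encodes "not applicable"


def validate(attr: Attribute) -> None:
    """Raise ValueError if the rating is not allowed by the attribute's rating system."""
    if attr.system not in BINARY | FRACTIONAL:
        raise ValueError(f"unknown rating system: {attr.system}")
    if attr.rating is None:
        if attr.system not in ALLOWS_NA:
            raise ValueError(f"NA is not allowed under {attr.system}")
        return
    if attr.system in BINARY and attr.rating not in (0, 1):
        raise ValueError(f"{attr.system} accepts only 0 or 1")
    if not 0 <= attr.rating <= 1:
        raise ValueError("rating must lie between 0 and 1")


def safety_score(attrs: Iterable[Attribute]) -> float:
    """Weighted score on a 0-100 scale.

    ASSUMPTION: attributes rated NA are excluded and the score is rescaled
    by the total weight of the applicable attributes.
    """
    earned = 0.0
    applicable = 0.0
    for attr in attrs:
        validate(attr)
        if attr.rating is None:
            continue
        earned += attr.weight * attr.rating
        applicable += attr.weight
    if applicable == 0:
        raise ValueError("no applicable attributes to score")
    return 100.0 * earned / applicable


# Hypothetical ratings for three attributes from the weight table.
example = [
    Attribute("Commitment to current legal regimes", 3.00, "0-1", 0.5),
    Attribute("Written declaration of safety objectives", 2.00, "0/1", 1),
    Attribute("Responsibilities of the AI algorithm in writing", 0.25, "0/1/NA", None),
]
print(safety_score(example))  # 70.0
```

In this example the NA attribute contributes nothing to either the earned or the applicable weight, so the score is 100 × 3.5 / 5.0. A different NA convention (for instance, counting NA as full credit) would only require changing `safety_score`.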

2.4. Finalizing the Model

3. Results

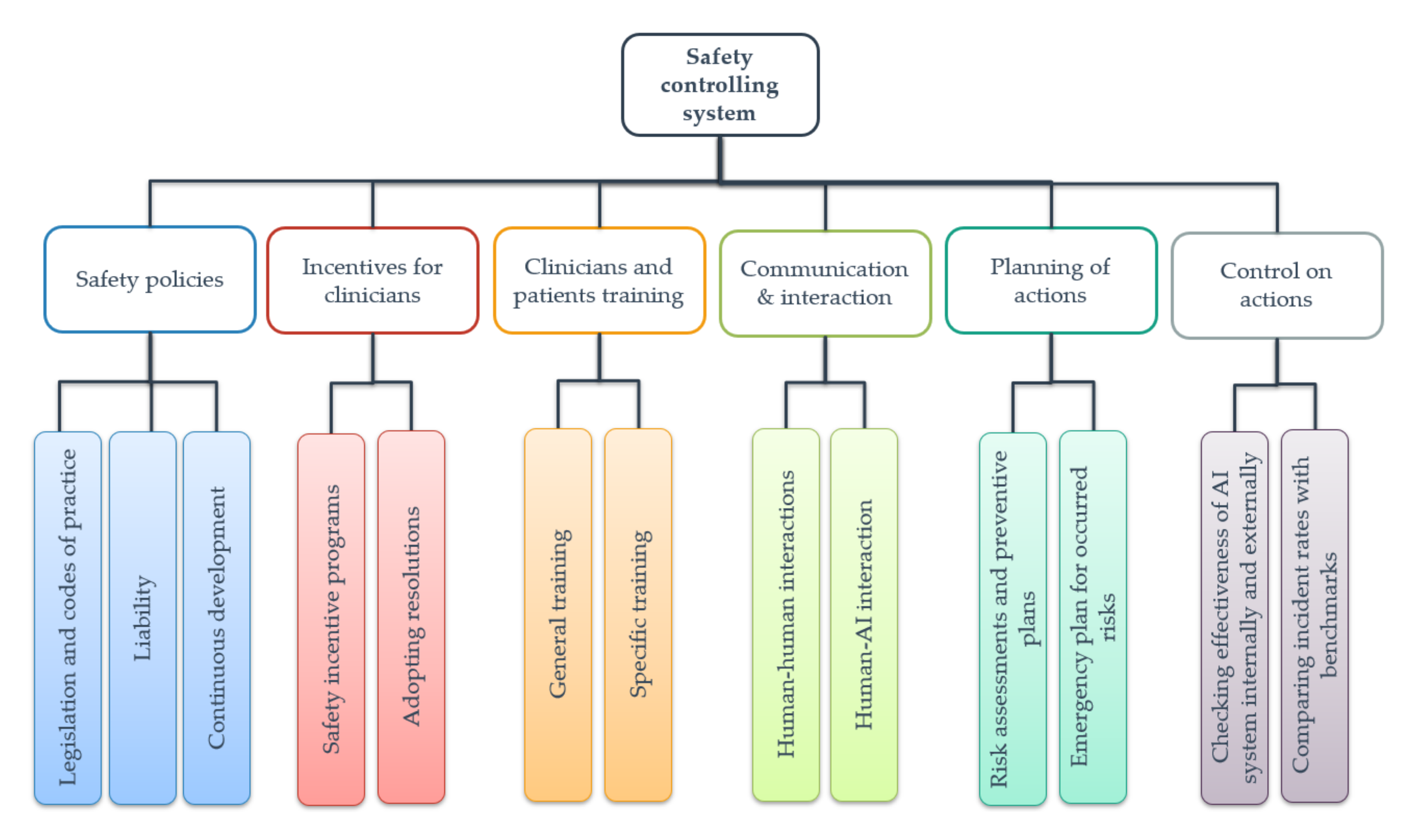

3.1. The First Key Dimension

3.2. The Second Key Dimension

3.3. The Third Key Dimension

3.4. The Fourth Key Dimension

3.5. The Fifth Key Dimension

3.6. The Sixth Key Dimension

4. Discussion

4.1. First Key Dimension

4.2. Second and Third Key Dimensions

4.3. Fourth Key Dimension

4.4. Fifth Key Dimension

- The virtual environment allows AI developers to simulate rare cases for training models [92].

- The entire training process can occur in a simulated environment without the need to collect data [93].

- Learning in the virtual environment is fast; for example, AlphaZero, an AI-based computer program, was trained over a day to master Go, chess, and shogi [29].

4.5. Sixth Key Dimension

5. Study Limitations

- The comprehensibility of the considered safety elements to potential auditors.

- The robustness of the rating scale for each safety element to secure a reliable rating under similar conditions.

- The potential for improving key dimensions and different layers of attributes.

- The feedback from the healthcare institutions about the system.

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- He, J.; Baxter, S.L.; Xu, J.; Xu, J.; Zhou, X.; Zhang, K. The Practical Implementation of Artificial Intelligence Technologies in Medicine. Nat. Med. 2019, 25, 30–36.

- Topol, E.J. High-Performance Medicine: The Convergence of Human and Artificial Intelligence. Nat. Med. 2019, 25, 44–56.

- Hamet, P.; Tremblay, J. Artificial Intelligence in Medicine. Metabolism 2017, 69, S36–S40.

- Newell, A.; Shaw, J.C.; Simon, H.A. Elements of a Theory of Human Problem Solving. Psychol. Rev. 1958, 65, 151.

- Samuel, A.L. Some Studies in Machine Learning Using the Game of Checkers. IBM J. Res. Dev. 1959, 3, 210–229.

- Warner, H.R.; Toronto, A.F.; Veasey, L.G.; Stephenson, R. A Mathematical Approach to Medical Diagnosis: Application to Congenital Heart Disease. JAMA 1961, 177, 177–183.

- Weizenbaum, J. ELIZA—A Computer Program for the Study of Natural Language Communication between Man and Machine. Commun. ACM 1966, 9, 36–45.

- De Dombal, F.T.; Leaper, D.J.; Staniland, J.R.; McCann, A.P.; Horrocks, J.C. Computer-Aided Diagnosis of Acute Abdominal Pain. Br. Med. J. 1972, 2, 9–13.

- Szolovits, P. Artificial Intelligence in Medical Diagnosis. Ann. Intern. Med. 1988, 108, 80.

- Castelvecchi, D. Can We Open the Black Box of AI? Nat. News 2016, 538, 20.

- Finlayson, S.G.; Bowers, J.D.; Ito, J.; Zittrain, J.L.; Beam, A.L.; Kohane, I.S. Adversarial Attacks on Medical Machine Learning. Science 2019, 363, 1287–1289.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

- Marcus, G. Deep Learning: A Critical Appraisal. arXiv 2018, arXiv:1801.00631.

- Deo, R.C. Machine Learning in Medicine. Circulation 2015, 132, 1920–1930.

- Yu, K.-H.; Berry, G.J.; Rubin, D.L.; Re, C.; Altman, R.B.; Snyder, M. Association of Omics Features with Histopathology Patterns in Lung Adenocarcinoma. Cell Syst. 2017, 5, 620–627.

- Gulshan, V.; Peng, L.; Coram, M.; Stumpe, M.C.; Wu, D.; Narayanaswamy, A.; Venugopalan, S.; Widner, K.; Madams, T.; Cuadros, J. Development and Validation of a Deep Learning Algorithm for Detection of Diabetic Retinopathy in Retinal Fundus Photographs. JAMA 2016, 316, 2402–2410.

- Wang, X.; Peng, Y.; Lu, L.; Lu, Z.; Bagheri, M.; Summers, R.M. ChestX-ray8: Hospital-Scale Chest X-ray Database and Benchmarks on Weakly-Supervised Classification and Localization of Common Thorax Diseases. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2097–2106.

- Strodthoff, N.; Strodthoff, C. Detecting and Interpreting Myocardial Infarction Using Fully Convolutional Neural Networks. Physiol. Meas. 2019, 40, 015001.

- Bejnordi, B.E.; Veta, M.; Van Diest, P.J.; Van Ginneken, B.; Karssemeijer, N.; Litjens, G.; Van Der Laak, J.A.; Hermsen, M.; Manson, Q.F.; Balkenhol, M. Diagnostic Assessment of Deep Learning Algorithms for Detection of Lymph Node Metastases in Women with Breast Cancer. JAMA 2017, 318, 2199–2210.

- Esteva, A.; Kuprel, B.; Novoa, R.A.; Ko, J.; Swetter, S.M.; Blau, H.M.; Thrun, S. Dermatologist-Level Classification of Skin Cancer with Deep Neural Networks. Nature 2017, 542, 115–118.

- Yu, K.-H.; Beam, A.L.; Kohane, I.S. Artificial Intelligence in Healthcare. Nat. Biomed. Eng. 2018, 2, 719–731.

- Gerke, S.; Minssen, T.; Cohen, I.G. Ethical and Legal Challenges of Artificial Intelligence-Driven Health Care. SSRN Electron. J. 2020.

- Ntoutsi, E.; Fafalios, P.; Gadiraju, U.; Iosifidis, V.; Nejdl, W.; Vidal, M.-E.; Ruggieri, S.; Turini, F.; Papadopoulos, S.; Krasanakis, E.; et al. Bias in Data-Driven Artificial Intelligence Systems-An Introductory Survey. Wiley Interdiscip. Rev.-Data Min. Knowl. Discov. 2019, e1356.

- Vandewiele, G.; De Backere, F.; Lannoye, K.; Vanden Berghe, M.; Janssens, O.; Van Hoecke, S.; Keereman, V.; Paemeleire, K.; Ongenae, F.; De Turck, F. A Decision Support System to Follow up and Diagnose Primary Headache Patients Using Semantically Enriched Data. BMC Med. Inform. Decis. Mak. 2018, 18, 98.

- Kwon, B.C.; Choi, M.-J.; Kim, J.T.; Choi, E.; Kim, Y.B.; Kwon, S.; Sun, J.; Choo, J. RetainVis: Visual Analytics with Interpretable and Interactive Recurrent Neural Networks on Electronic Medical Records. IEEE Trans. Vis. Comput. Graph. 2018, 25, 299–309.

- De Fauw, J.; Ledsam, J.R.; Romera-Paredes, B.; Nikolov, S.; Tomasev, N.; Blackwell, S.; Askham, H.; Glorot, X.; O’Donoghue, B.; Visentin, D. Clinically Applicable Deep Learning for Diagnosis and Referral in Retinal Disease. Nat. Med. 2018, 24, 1342–1350.

- Ting, D.S.W.; Pasquale, L.R.; Peng, L.; Campbell, J.P.; Lee, A.Y.; Raman, R.; Tan, G.S.W.; Schmetterer, L.; Keane, P.A.; Wong, T.Y. Artificial Intelligence and Deep Learning in Ophthalmology. Br. J. Ophthalmol. 2019, 103, 167–175.

- Bleicher, A. Demystifying the Black Box That Is AI. Available online: https://www.scientificamerican.com/article/demystifying-the-black-box-that-is-ai/ (accessed on 4 March 2020).

- Heaven, W.D. Why Asking an AI to Explain Itself Can Make Things Worse. Available online: https://www.technologyreview.com/s/615110/why-asking-an-ai-to-explain-itself-can-make-things-worse/ (accessed on 4 March 2020).

- Zhang, C.; Bengio, S.; Hardt, M.; Recht, B.; Vinyals, O. Understanding Deep Learning Requires Rethinking Generalization. arXiv 2016, arXiv:1611.03530.

- Schmelzer, R. Understanding Explainable AI. Available online: https://www.forbes.com/sites/cognitiveworld/2019/07/23/understanding-explainable-ai/#406c97957c9e (accessed on 10 April 2020).

- London, A.J. Artificial Intelligence and Black-Box Medical Decisions: Accuracy versus Explainability. Hastings Cent. Rep. 2019, 49, 15–21.

- Wang, F.; Kaushal, R.; Khullar, D. Should Health Care Demand Interpretable Artificial Intelligence or Accept “Black Box” Medicine? Ann. Intern. Med. 2019, 172, 59–60.

- Buehler, M.; Iagnemma, K.; Singh, S. The DARPA Urban Challenge: Autonomous Vehicles in City Traffic; Springer: Berlin/Heidelberg, Germany, 2009; Volume 56.

- Wang, X.; Bisantz, A.M.; Bolton, M.L.; Cavuoto, L.; Chandola, V. Explaining Supervised Learning Models: A Preliminary Study on Binary Classifiers. Ergon. Des. 2020, 28, 20–26.

- Teo, E.A.L.; Ling, F.Y.Y. Developing a Model to Measure the Effectiveness of Safety Management Systems of Construction Sites. Build. Environ. 2006, 41, 1584–1592.

- Fernández-Muñiz, B.; Montes-Peon, J.M.; Vazquez-Ordas, C.J. Safety Management System: Development and Validation of a Multidimensional Scale. J. Loss Prev. Process Ind. 2007, 20, 52–68.

- Liberati, A.; Altman, D.G.; Tetzlaff, J.; Mulrow, C.; Gøtzsche, P.C.; Ioannidis, J.P.A.; Clarke, M.; Devereaux, P.J.; Kleijnen, J.; Moher, D. The PRISMA Statement for Reporting Systematic Reviews and Meta-Analyses of Studies That Evaluate Health Care Interventions: Explanation and Elaboration. PLoS Med. 2009, 6, e1000100.

- Davahli, M.R.; Karwowski, W.; Gutierrez, E.; Fiok, K.; Wróbel, G.; Taiar, R.; Ahram, T. Identification and Prediction of Human Behavior through Mining of Unstructured Textual Data. Symmetry 2020, 12, 1902.

- Qu, S.Q.; Dumay, J. The Qualitative Research Interview. Qual. Res. Account. Manag. 2011, 8, 238–264.

- DiCicco-Bloom, B.; Crabtree, B.F. The Qualitative Research Interview. Med. Educ. 2006, 40, 314–321.

- HAWKIRB Studies That Are Not Human Subjects Research. Available online: https://hso.research.uiowa.edu/studies-are-not-human-subjects-research (accessed on 27 December 2020).

- Davahli, M.R.; Karwowski, W.; Fiok, K.; Wan, T.T.; Parsaei, H.R. A Safety Controlling System Framework for Implementing Artificial Intelligence in Healthcare. Preprints 2020, 2020120313.

- Legendre, P. Species Associations: The Kendall Coefficient of Concordance Revisited. J. Agric. Biol. Environ. Stat. 2005, 10, 226.

- Ćwiklicki, M.; Klich, J.; Chen, J. The Adaptiveness of the Healthcare System to the Fourth Industrial Revolution: A Preliminary Analysis. Futures 2020, 122, 102602.

- Hale, A.R.; Baram, M.S. Safety Management: The Challenge of Change; Pergamon Oxford: Oxford, UK, 1998.

- Matheny, M.E.; Whicher, D.; Israni, S.T. Artificial Intelligence in Health Care: A Report From the National Academy of Medicine. JAMA 2020, 323, 509–510.

- Zhu, Q.; Jiang, X.; Zhu, Q.; Pan, M.; He, T. Graph Embedding Deep Learning Guides Microbial Biomarkers’ Identification. Front. Genet. 2019, 10, 1182.

- Challen, R.; Denny, J.; Pitt, M.; Gompels, L.; Edwards, T.; Tsaneva-Atanasova, K. Artificial Intelligence, Bias and Clinical Safety. BMJ Qual. Saf. 2019, 28, 231–237.

- Kelly, C.J.; Karthikesalingam, A.; Suleyman, M.; Corrado, G.; King, D. Key Challenges for Delivering Clinical Impact with Artificial Intelligence. BMC Med. 2019, 17, 195.

- Rose, S. Machine Learning for Prediction in Electronic Health Data. JAMA Netw. Open 2018, 1, e181404.

- U.S. Food and Drug Administration. Proposed Regulatory Framework for Modifications to Artificial Intelligence/Machine Learning (AI/ML)-Based Software as a Medical Device (SaMD)-Discussion Paper; Discussion Paper and Request for Feedback; U.S. Food and Drug Administration: Silver Spring, MD, USA, 2019.

- Shah, P.; Kendall, F.; Khozin, S.; Goosen, R.; Hu, J.; Laramie, J.; Ringel, M.; Schork, N. Artificial Intelligence and Machine Learning in Clinical Development: A Translational Perspective. NPJ Digit. Med. 2019, 2, 1–5.

- Nordling, L. A Fairer Way Forward for AI in Health Care. Nature 2019, 573, S103–S105.

- Stewart, E. Self-Driving Cars Have to Be Safer than Regular Cars. The Question Is How Much. Available online: https://www.vox.com/recode/2019/5/17/18564501/self-driving-car-morals-safety-tesla-waymo (accessed on 7 March 2020).

- Golden, J.A. Deep Learning Algorithms for Detection of Lymph Node Metastases from Breast Cancer: Helping Artificial Intelligence Be Seen. JAMA 2017, 318, 2184–2186.

- Amershi, S.; Weld, D.; Vorvoreanu, M.; Fourney, A.; Nushi, B.; Collisson, P.; Suh, J.; Iqbal, S.; Bennett, P.N.; Inkpen, K. Guidelines for Human-AI Interaction. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, Scotland, UK, 4–9 May 2019; pp. 1–13.

- Salazar, J.W.; Redberg, R.F. Leading the Call for Reform of Medical Device Safety Surveillance. JAMA Intern. Med. 2020, 180, 179–180.

- Ventola, C.L. Challenges in Evaluating and Standardizing Medical Devices in Health Care Facilities. Pharm. Ther. 2008, 33, 348.

- Wang, L.; Haghighi, A. Combined Strength of Holons, Agents and Function Blocks in Cyber-Physical Systems. J. Manuf. Syst. 2016, 40, 25–34.

- Callahan, A.; Fries, J.A.; Ré, C.; Huddleston, J.I.; Giori, N.J.; Delp, S.; Shah, N.H. Medical Device Surveillance with Electronic Health Records. NPJ Digit. Med. 2019, 2, 1–10.

- Forcier, M.B.; Gallois, H.; Mullan, S.; Joly, Y. Integrating Artificial Intelligence into Health Care through Data Access: Can the GDPR Act as a Beacon for Policymakers? J. Law Biosci. 2019, 6, 317.

- Westerheide, F. The Artificial Intelligence Industry and Global Challenges. Available online: https://www.forbes.com/sites/cognitiveworld/2019/11/27/the-artificial-intelligence-industry-and-global-challenges/ (accessed on 9 March 2020).

- Nicola, S.; Behrmann, E.; Mawad, M. It’s a Good Thing Europe’s Autonomous Car Testing Is Slow; Bloomberg: New York, NY, USA, 2018.

- Price, W.N.; Cohen, I.G. Privacy in the Age of Medical Big Data. Nat. Med. 2019, 25, 37–43.

- Wenyan, W. China Is Waking up to Data Protection and Privacy. Here’s Why That Matters. Available online: https://www.weforum.org/agenda/2019/11/china-data-privacy-laws-guideline/ (accessed on 29 February 2020).

- Lindsey, N. China’s Privacy Challenges with AI and Mobile Apps. Available online: https://www.cpomagazine.com/data-privacy/chinas-privacy-challenges-with-ai-and-mobile-apps/ (accessed on 29 February 2020).

- O’Meara, S. Will China Lead the World in AI by 2030? Nature 2019, 572, 427–428.

- US Department of Health and Human Services. Software as a Medical Device (SAMD): Clinical Evaluation; Guidance for Industry and Food and Drug Administration Staff, 2017; US Department of Health and Human Services: Atlanta, GA, USA, 2017.

- Digital Health Innovation Action Plan. US Food and Drug Administration. Available online: https://www.fda.gov/downloads/MedicalDevices/DigitalHealth/UCM568735.pdf (accessed on 7 November 2020).

- Xu, H.; Caramanis, C.; Mannor, S. Sparse Algorithms Are Not Stable: A No-Free-Lunch Theorem. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 34, 187–193.

- Sun, W. Stability of Machine Learning Algorithms. Open Access Diss. 2015. Available online: https://docs.lib.purdue.edu/dissertations/AAI3720039/ (accessed on 9 March 2020).

- Cheatham, B.; Javanmardian, K.; Samandari, H. Confronting the Risks of Artificial Intelligence. McKinsey Q. 2019, 1–9. Available online: https://assets.noviams.com/novi-file-uploads/MISBO/Shared_Resources/AI_Resources/Confronting-the-risks-of-artificial-intelligence-vF.pdf (accessed on 18 November 2020).

- Langlotz, C.P.; Allen, B.; Erickson, B.J.; Kalpathy-Cramer, J.; Bigelow, K.; Cook, T.S.; Flanders, A.E.; Lungren, M.P.; Mendelson, D.S.; Rudie, J.D.; et al. A Roadmap for Foundational Research on Artificial Intelligence in Medical Imaging: From the 2018 NIH/RSNA/ACR/The Academy Workshop. Radiology 2019, 291, 781–791.

- Decencière, E.; Zhang, X.; Cazuguel, G.; Lay, B.; Cochener, B.; Trone, C.; Gain, P.; Ordonez, R.; Massin, P.; Erginay, A. Feedback on a Publicly Distributed Image Database: The Messidor Database. Image Anal. Stereol. 2014, 33, 231–234.

- Yu, K.-H.; Zhang, C.; Berry, G.J.; Altman, R.B.; Ré, C.; Rubin, D.L.; Snyder, M. Predicting Non-Small Cell Lung Cancer Prognosis by Fully Automated Microscopic Pathology Image Features. Nat. Commun. 2016, 7, 12474.

- Bhagwat, N.; Viviano, J.D.; Voineskos, A.N.; Chakravarty, M.M.; Alzheimer’s Disease Neuroimaging Initiative. Modeling and Prediction of Clinical Symptom Trajectories in Alzheimer’s Disease Using Longitudinal Data. PLoS Comput. Biol. 2018, 14, e1006376.

- Gianfrancesco, M.A.; Tamang, S.; Yazdany, J.; Schmajuk, G. Potential Biases in Machine Learning Algorithms Using Electronic Health Record Data. JAMA Intern. Med. 2018, 178, 1544–1547.

- Kocheturov, A.; Pardalos, P.M.; Karakitsiou, A. Massive Datasets and Machine Learning for Computational Biomedicine: Trends and Challenges. Ann. Oper. Res. 2019, 276, 5–34.

- Li, Z.; Wang, C.; Han, M.; Xue, Y.; Wei, W.; Li, L.-J.; Fei-Fei, L. Thoracic Disease Identification and Localization with Limited Supervision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8290–8299.

- Nam, J.G.; Park, S.; Hwang, E.J.; Lee, J.H.; Jin, K.-N.; Lim, K.Y.; Vu, T.H.; Sohn, J.H.; Hwang, S.; Goo, J.M. Development and Validation of Deep Learning–Based Automatic Detection Algorithm for Malignant Pulmonary Nodules on Chest Radiographs. Radiology 2019, 290, 218–228.

- Steiner, D.F.; MacDonald, R.; Liu, Y.; Truszkowski, P.; Hipp, J.D.; Gammage, C.; Thng, F.; Peng, L.; Stumpe, M.C. Impact of Deep Learning Assistance on the Histopathologic Review of Lymph Nodes for Metastatic Breast Cancer. Am. J. Surg. Pathol. 2018, 42, 1636.

- Chilamkurthy, S.; Ghosh, R.; Tanamala, S.; Biviji, M.; Campeau, N.G.; Venugopal, V.K.; Mahajan, V.; Rao, P.; Warier, P. Deep Learning Algorithms for Detection of Critical Findings in Head CT Scans: A Retrospective Study. Lancet 2018, 392, 2388–2396.

- Haenssle, H.A.; Fink, C.; Schneiderbauer, R.; Toberer, F.; Buhl, T.; Blum, A.; Kalloo, A.; Hassen, A.B.H.; Thomas, L.; Enk, A. Man against Machine: Diagnostic Performance of a Deep Learning Convolutional Neural Network for Dermoscopic Melanoma Recognition in Comparison to 58 Dermatologists. Ann. Oncol. 2018, 29, 1836–1842.

- Yasaka, K.; Akai, H.; Abe, O.; Kiryu, S. Deep Learning with Convolutional Neural Network for Differentiation of Liver Masses at Dynamic Contrast-Enhanced CT: A Preliminary Study. Radiology 2018, 286, 887–896.

- Kermany, D.S.; Goldbaum, M.; Cai, W.; Valentim, C.C.; Liang, H.; Baxter, S.L.; McKeown, A.; Yang, G.; Wu, X.; Yan, F. Identifying Medical Diagnoses and Treatable Diseases by Image-Based Deep Learning. Cell 2018, 172, 1122–1131.

- Jaremko, J.L.; Azar, M.; Bromwich, R.; Lum, A.; Alicia Cheong, L.H.; Gibert, M.; Laviolette, F.; Gray, B.; Reinhold, C.; Cicero, M.; et al. Canadian Association of Radiologists White Paper on Ethical and Legal Issues Related to Artificial Intelligence in Radiology. Can. Assoc. Radiol. J. 2019, 70, 107–118.

- Patel, N.M.; Michelini, V.V.; Snell, J.M.; Balu, S.; Hoyle, A.P.; Parker, J.S.; Hayward, M.C.; Eberhard, D.A.; Salazar, A.H.; McNeillie, P. Enhancing Next-Generation Sequencing-Guided Cancer Care through Cognitive Computing. Oncologist 2018, 23, 179.

- CBINSIGHTS Google Healthcare with AI l CB Insights. Available online: https://www.cbinsights.com/research/report/google-strategy-healthcare/ (accessed on 7 March 2020).

- Miotto, R.; Li, L.; Kidd, B.A.; Dudley, J.T. Deep Patient: An Unsupervised Representation to Predict the Future of Patients from the Electronic Health Records. Sci. Rep. 2016, 6, 1–10.

- Camacho, D.M.; Collins, K.M.; Powers, R.K.; Costello, J.C.; Collins, J.J. Next-Generation Machine Learning for Biological Networks. Cell 2018, 173, 1581–1592.

- Hill, J. Simulation: The Bedrock of AI. Available online: https://medium.com/simudyne/simulation-the-bedrock-of-ai-12153eaf7971 (accessed on 8 March 2020).

- Chawla, V. How Training AI Models In Simulated Environments Is Helping Researchers. Anal. India Mag. 2019. Available online: https://analyticsindiamag.com/how-training-ai-models-in-simulated-environments-is-helping-researchers/ (accessed on 10 December 2020).

- O’Kane, S. Tesla and Waymo Are Taking Wildly Different Paths to Creating Self-Driving Cars. Available online: https://www.theverge.com/transportation/2018/4/19/17204044/tesla-waymo-self-driving-car-data-simulation (accessed on 2 March 2020).

- Upton, R. Artificial Intelligence’s Need for Health Data—Finding An Ethical Balance. Hit Consult. 2019.

- Wang, F.; Preininger, A. AI in Health: State of the Art, Challenges, and Future Directions. Yearb. Med. Inform. 2019, 28, 016–026.

- Hsu, J. Spectrum AI Could Make Detecting Autism Easier. Available online: https://www.spectrumnews.org/features/deep-dive/can-computer-diagnose-autism/ (accessed on 19 February 2020).

- Christian, J.; Dasgupta, N.; Jordan, M.; Juneja, M.; Nilsen, W.; Reites, J. Digital Health and Patient Registries: Today, Tomorrow, and the Future. In 21st Century Patient Registries: Registries for Evaluating Patient Outcomes: A User’s Guide: 3rd Edition, Addendum [Internet]; Agency for Healthcare Research and Quality (US): Rockville, MD, USA, 2018.

- Sayeed, R.; Gottlieb, D.; Mandl, K.D. SMART Markers: Collecting Patient-Generated Health Data as a Standardized Property of Health Information Technology. NPJ Digit. Med. 2020, 3, 1–8.

- U.S. Food and Drug Administration Medical Device Data Systems, Medical Image Storage Devices, and Medical Image Communications Devices. Available online: https://www.fda.gov/regulatory-information/search-fda-guidance-documents/medical-device-data-systems-medical-image-storage-devices-and-medical-image-communications-devices (accessed on 11 April 2020).

- Rong, G.; Mendez, A.; Assi, E.B.; Zhao, B.; Sawan, M. Artificial Intelligence in Healthcare: Review and Prediction Case Studies. Engineering 2020, 6, 291–301.

- Machanick, P. Approaches to Addressing the Memory Wall. Sch. IT Electr. Eng. Univ. QLD 2002. Available online: https://www.researchgate.net/profile/Philip_Machanick/publication/228813498_Approaches_to_addressing_the_memory_wall/links/00b7d51c988e408fb3000000.pdf (accessed on 10 October 2020).

- Devalla, S.K.; Liang, Z.; Pham, T.H.; Boote, C.; Strouthidis, N.G.; Thiery, A.H.; Girard, M.J.A. Glaucoma Management in the Era of Artificial Intelligence. Br. J. Ophthalmol. 2020, 104, 301–311.

| Characteristics | Interviewees (Number) | Interviewees (Percent) |
|---|---|---|
| Age | | |
| 30 to 34 | 2 | 20% |
| 35 to 39 | 4 | 40% |
| 40 to 44 | 4 | 40% |
| Years of experience in AI | | |
| 0 to 4 | 1 | 10% |
| 5 to 9 | 4 | 40% |
| 10 to 14 | 5 | 50% |
| Gender | | |
| Male | 10 | 100% |
| Female | 0 | 0% |
| Race/Ethnicity category | | |
| Non-Hispanic Black | 0 | 0% |
| Non-Hispanic Asian | 0 | 0% |
| Non-Hispanic White | 10 | 100% |
| Non-Hispanic Other | 0 | 0% |
| Hispanic | 0 | 0% |
| Occupation | | |
| Postdoctoral researcher | 2 | 20% |
| Data scientist | 5 | 50% |
| Machine learning scientist | 2 | 20% |
| Data engineer | 1 | 10% |

| Attributes | Weight | Rating System | |||

|---|---|---|---|---|---|

| SCS | 100.00 | ||||

| Safety policies | 23.50 | ||||

| Legislation and codes of practice | 11.25 | ||||

| Is there a commitment to current legal regimes, such as federal regulations, state tort law, the Common Rule, Federal Trade Commission Act, legislation associated with data privacy, and legislation associated with the explainability of AI? | 3.00 | 0–1 | |||

| Is a written declaration available reflecting the safety objectives of the AI-based medical device? | 2.00 | 0/1 | |||

| Are clinicians informed about the safety objectives of the AI-based medical device? | 2.00 | 0/1 | |||

| Is a written declaration available reflecting the safety concerns of the directors of health institution? | 1.50 | 0/1 | |||

| Does the health institution coordinate the AI-based medical device policies with other existence policies? | 1.50 | 0/1 | |||

| Is there a positive atmosphere to ensure that individuals from all parties, such as the health institution and the AI developer, participate in and contribute to safety objectives? | 1.25 | 0–1 | |||

| Liability | 7.00 | ||||

| Are the responsibilities of the AI developer established in writing? | 1.00 | 0/1 | |||

| Are the responsibilities of clinicians established in writing? | 0.75 | 0/1 | |||

| Are the responsibilities of the source of training data established in writing? | 0.75 | 0/1 | |||

| Are the responsibilities of the source of suppliers who provide the system platform established in writing? | 0.75 | 0/1 | |||

| Are the responsibilities of the AI algorithm (at the higher level) established in writing? | 0.25 | 0/1/NA | |||

| Is there a positive atmosphere to ensure that individuals from all parties, such as the health institution and the AI developer, know their responsibilities? | 0.75 | 0–1 | |||

| Is there an appropriate balance for the responsibilities of different parties? | 0.75 | 0–1 | |||

| Is there any procedure for resolving conflicts between parties? | 1.00 | 0/1 | |||

| Is resolving conflicts established in writing? | 1.00 | 0/1 | |||

| Continuous development | 5.25 | ||||

| Is there a commitment to FDA regulations regarding Software as Medical Device (SaMD)? | 0.75 | 0/1 | |||

| Is there involvement in the Digital Health Software Precertification (Pre-Cert) Program? | 0.75 | 0/1/NA | |||

| Is an organizational excellence framework established in writing? | 0.75 | 0/1 | |||

| Is there a commitment to organizational excellence? | 1.50 | 0/1 | |||

| Is there a testing policy for updated AI-based devices? | 1.50 | 0/1 | |||

| Incentives for clinicians | 5.25 | ||||

| Safety incentive programs | 2.25 | ||||

| Are there any incentives offered to clinicians to put defined procedures of implemented AI systems into practice? | 0.75 | 0/1 | |||

| Are incentives frequently offered to clinicians to suggest improvements in the performance and safety of implemented AI systems? | 1.00 | 0/1 | |||

| Are there disincentive programs for clinicians who fail to put defined procedures of implemented AI systems into practice? | 0.50 | 0/1 | |||

| Adopting resolutions | 3.00 | ||||

| Are there any meetings with clinicians to adopt their recommendations concerning AI-based medical device operation? | 1.50 | 0/1 | |||

| Is adoption of resolutions coordinated with other parties, such as the AI developer? | 0.50 | 0/1 | |||

| Do any modifications or changes in AI-based medical device operations involve direct consultation with clinicians who are affected? | 1.00 | 0/1 | |||

| Clinician and patient training | 5.25 | ||||

| General training | 3.75 | ||||

| Are clinicians given sufficient training concerning AI system operation when they enter a health institution, change their position, or use new AI-based devices? | 1.75 | 0/1 | |||

| Is there a need for follow-up training? | 0.50 | 0/1/NA | |||

| Are general training actions continual and integrated with the established training plan? | 0.50 | 0/1/NA | |||

| Are the health institution’s characteristics considered in developing training plans? | 0.50 | 0/1/NA | |||

| Is the training plan coordinated with all parties, such as the AI developer and health institution? | 0.50 | 0–1/NA | |||

| Specific training | 1.50 | ||||

| Are specific patients or clinicians who are facing high-risk events trained? | 0.75 | 0/1/NA | |||

| Are specific training actions continual and integrated with the established specific training plan? | 0.75 | 0/1/NA | |||

| Communication and interaction | 27.00 | ||||

| Human–human interactions | 9.00 | ||||

| Is an information system developed between a health institution and an AI developer during the lifetime of AI-based medical devices? | 2.00 | 0/1 | |||

| Are clinicians informed before modifications and changes in AI-based medical device operation? | 2.00 | 0/1 | |||

| Is there written information about procedures and the correct way of interacting with AI-based medical devices? | 2.00 | 0/1 | |||

| Is there any communication plan established between parties? | 1.50 | 0–1 | |||

| Is there any procedure to monitor communication and resolve problems such as language, technical, and cultural barriers between parties? | 1.50 | 0/1 | |||

| Human–AI interactions | 18.00 | ||||

| Is there any established description of what the AI-based medical device can do? | 1.50 | 0/1 | |||

| Is there any established description of how well the AI-based medical device performs? | 1.50 | 0/1 | |||

| Is the AI-based medical device time service (when to act or interrupt) based on the clinician’s current task? | 1.50 | 0/1 | |||

| Does the AI-based medical device display information relevant to the clinician’s current task? | 1.50 | 0/1 | |||

| Are the clinicians interacting with AI-based medical devices in a way that they would expect (are social and cultural norms considered)? | 1.50 | 0/1 | |||

| Is there any procedure to ensure that the AI-based medical device’s behaviors and language do not reinforce unfair and undesirable biases? | 1.50 | 0/1 | |||

| Is it easy to request the AI-based medical device’s services when needed? | 0.75 | 0/1 | |||

| Is it easy to ignore or dismiss undesired and unwanted AI-based medical device services? | 0.75 | 0/1 | |||

| Is it easy to refine, edit, or even recover when the AI-based medical device is wrong? | 0.75 | 0/1 | |||

| Is it possible to disambiguate the AI-based medical device’s services when they do not match clinicians’ goals? | 0.75 | 0/1 | |||

| Is it clear why the AI-based medical device did what it did (access to explanations and visualizations of why the AI-based medical device behaved as it did, in terms of mitigating the black-box)? | 0.75 | 0/1 | |||

| Does the AI-based medical device have short-term memory and allow clinicians to efficiently access that memory? | 0.75 | 0/1 | |||

| Does the AI-based medical device learn from clinicians’ actions (personalizing clinicians’ experience by learning from their behaviors over time)? | 0.75 | 0/1 | |||

| Are there several disruptive changes when updating the AI-based medical device? | 0.75 | 0/1 | |||

| Can clinicians provide feedback concerning the interaction with the AI-based medical device? | 0.75 | 0/1 | |||

| Can the AI-based medical device identify clinicians’ wrong or unwanted actions? How will it react to them? | 0.75 | 0/1 | |||

| Can the clinicians customize what the AI-based medical device can monitor or analyze? | 0.75 | 0/1 | |||

| Can the AI-based medical device notify clinicians about updates and changes? | 0.75 | 0/1 | |||

| Planning of actions | 15.00 | ||||

| Risk assessments and preventive plans | 12.00 | ||||

| Are all risks and adverse events identified concerning the implemented AI system? | 2.50 | 0/1 | |||

| Is there any system in place for assessing all detected risks and adverse events of AI operation? | 1.75 | 0/1 | |||

| Are prevention plans established according to information provided by risk assessment? | 1.75 | 0/1 | |||

| Does the prevention plan clearly specify which clinicians are responsible for performing actions? | 1.25 | 0/1 | |||

| Are specific dates set for performing preventive measures? | 1.25 | 0/1 | |||

| Are procedures, actions, and processes elaborated upon on the basis of performed preventive measures? | 1.50 | 0/1 | |||

| Are clinicians (involved in using the implemented AI system) informed about prevention plans? | 1.00 | 0/1 | |||

| Are prevention plans occasionally reviewed and updated on the basis of any changes or modifications in operation? | 1.00 | 0/1 | |||

| Emergency plan for risks | 3.00 | ||||

| Is an emergency plan in place for the remaining risks and adverse events of AI operation? | 0.75 | 0/1 | |||

| Does the emergency plan clearly specify which clinicians are responsible for performing actions? | 0.75 | 0/1 | |||

| Are the clinicians (involved in using the implemented AI system) informed about the emergency plan? | 0.75 | 0/1 | |||

| Is the emergency plan occasionally reviewed and updated on the basis of any changes or modifications in operation? | 0.75 | 0/1 | |||

| Control of actions | 24.00 | ||||

| Checking the effectiveness of the AI system internally and externally | 18.00 | ||||

| Is effective post-market surveillance developed to monitor AI-based medical devices? | 2.50 | 0/1/NA | |||

| Are occasional checks performed on the execution of the preventive plan and emergency plan? | 2.50 | 0/1 | |||

| Are there procedures to check collection, transformation, and analysis of data? | 2.25 | 0/1 | |||

| Is there a clear distinction between the information system and the post-market surveillance system? | 2.25 | 0/1 | |||

| Are accidents and incidents reported, investigated, analyzed, and recorded? | 2.25 | 0/1 | |||

| Are there occasional external evaluations (audits) to validate preventive and emergency plans? | 2.00 | 0/1/NA | |||

| Are there occasional external evaluations (audits) to ensure the efficiency of all policies and procedures? | 2.00 | 0/1/NA | |||

| Are there procedures to report the results of external and internal evaluation? | 2.25 | 0/1/NA | |||

| Comparing incident rates with benchmarks | 6.00 | ||||

| Are the accident and incident rates regularly compared with those of other healthcare institutions from the same sector using similar processes? | 3.00 | 0/1/NA | |||

| Are all policies and procedures regularly compared with those of other healthcare institutions from the same sector using similar processes? | 3.00 | 0/1/NA | |||
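To illustrate how the weights and rating options in the table might be combined into a score, the following is a minimal Python sketch, not the authors' implementation. The function name `weighted_score`, the `(weight, rating)` representation, and the treatment of "NA" are assumptions made for illustration: elements rated "NA" are simply left out of both the achieved and the attainable totals. The weights are taken from the "Emergency plan for risks" and "Comparing incident rates with benchmarks" rows; the ratings are hypothetical.

```python
from typing import Optional, Sequence, Tuple

# (weight, rating) pairs; rating is a float in [0, 1], or None for "NA".
Item = Tuple[float, Optional[float]]


def weighted_score(items: Sequence[Item]) -> Tuple[float, float]:
    """Return (achieved, attainable) points for a group of rated elements.

    Assumption (not stated in the table): elements rated NA are excluded
    from both the achieved and the attainable totals.
    """
    achieved = 0.0
    attainable = 0.0
    for weight, rating in items:
        if rating is None:  # "NA": skip the element entirely
            continue
        if not 0.0 <= rating <= 1.0:
            raise ValueError(f"rating must lie in [0, 1], got {rating}")
        achieved += weight * rating
        attainable += weight
    return achieved, attainable


# Weights from the table; the ratings are hypothetical audit answers.
emergency_plan = [(0.75, 1.0), (0.75, 1.0), (0.75, 0.0), (0.75, 1.0)]
benchmarks = [(3.00, 1.0), (3.00, None)]  # second element rated NA

print(weighted_score(emergency_plan))  # (2.25, 3.0)
print(weighted_score(benchmarks))      # (3.0, 3.0)
```

Returning the attainable total alongside the achieved total lets an auditor report a ratio per attribute or per key dimension, which stays comparable across institutions even when some elements do not apply.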

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Davahli, M.R.; Karwowski, W.; Fiok, K.; Wan, T.; Parsaei, H.R. Controlling Safety of Artificial Intelligence-Based Systems in Healthcare. Symmetry 2021, 13, 102. https://doi.org/10.3390/sym13010102