Symmetry in Emotional and Visual Similarity between Neutral and Negative Faces

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Stimuli

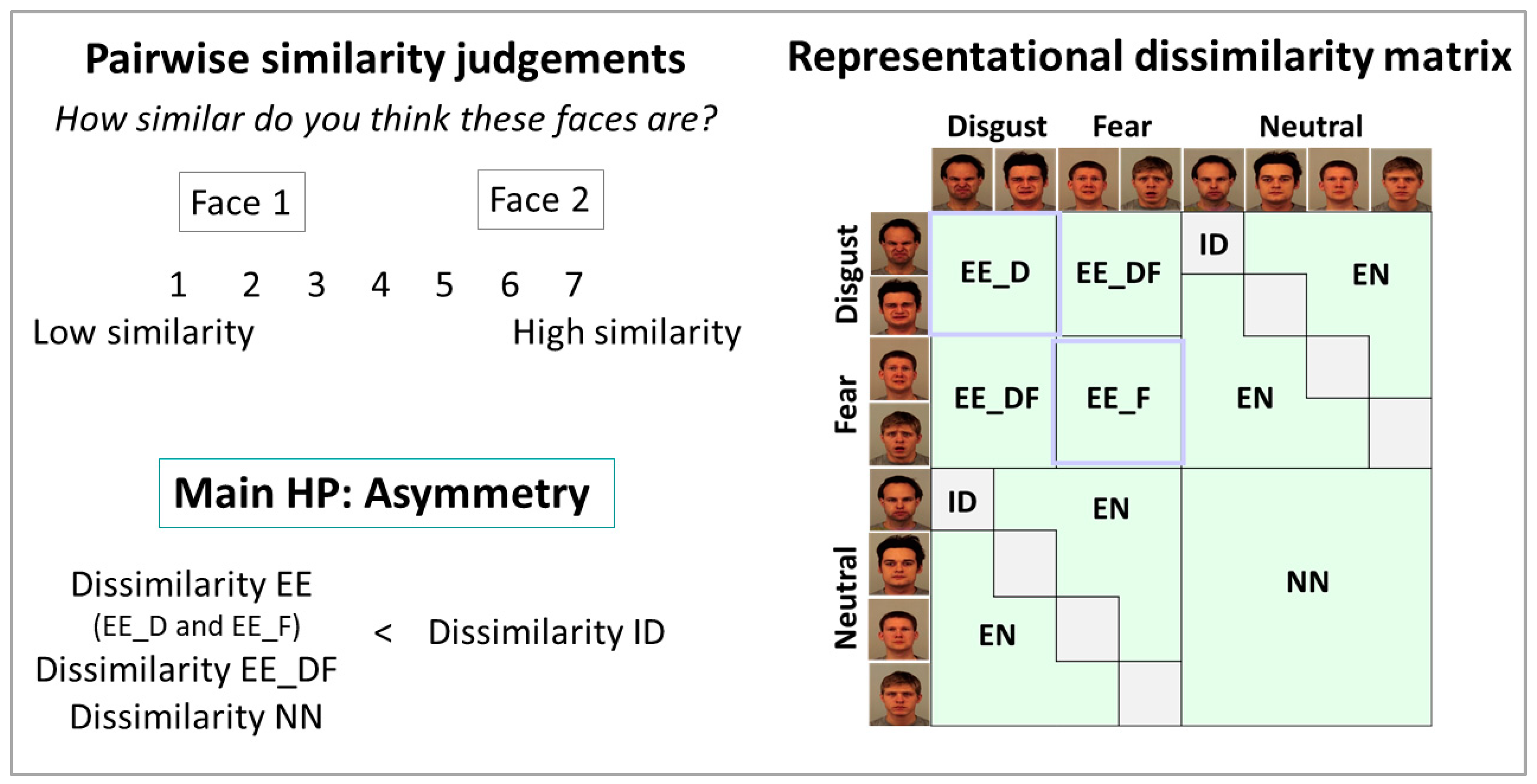

2.3. Experimental Procedure

2.4. Data Analysis

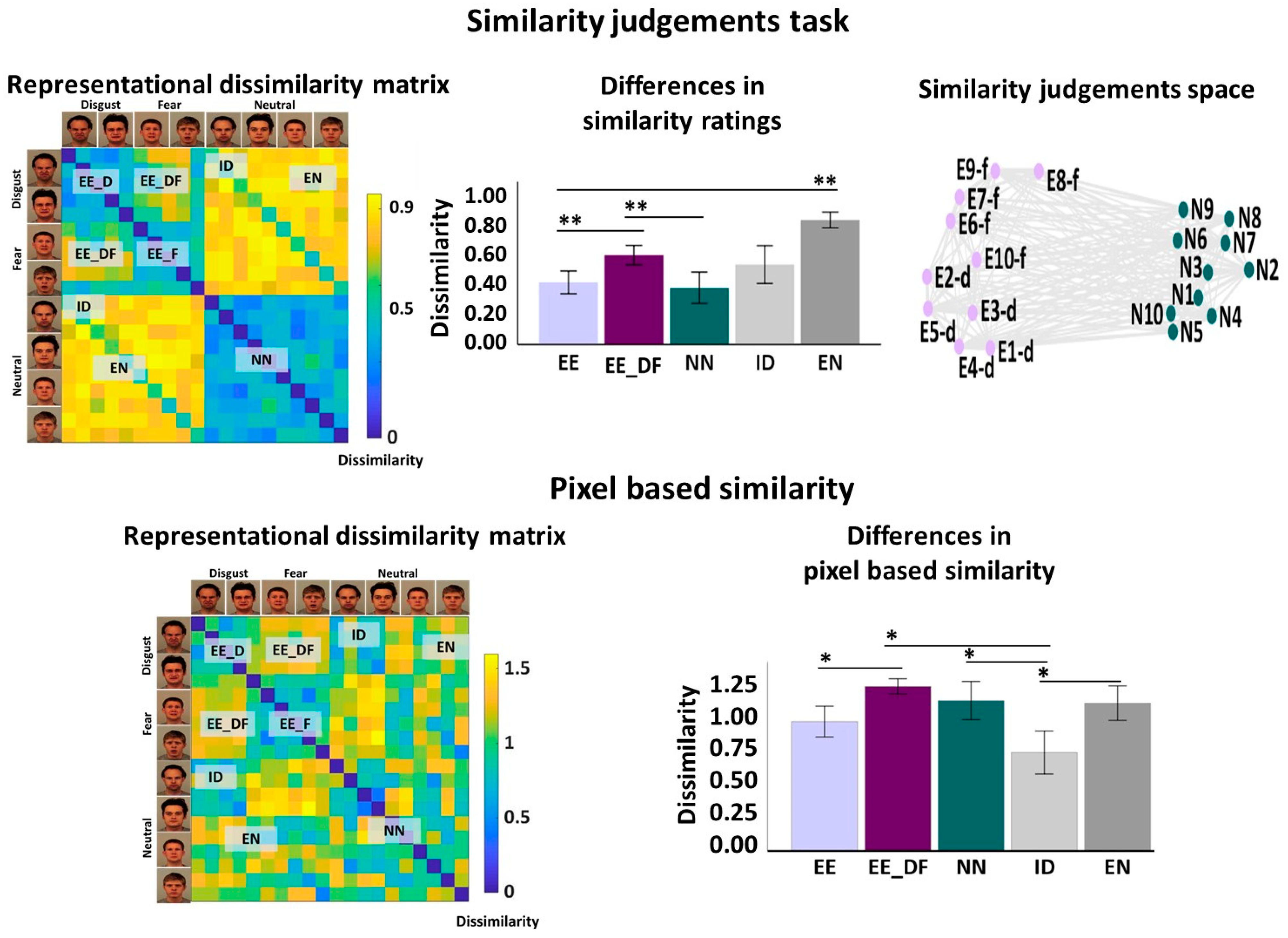

3. Results

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Riberto, M.; Talmi, D.; Pobric, G. Symmetry in Emotional and Visual Similarity between Neutral and Negative Faces. Symmetry 2021, 13, 2091. https://doi.org/10.3390/sym13112091