1. Introduction

In recent years, with the rise of big-data analysis and the spread of Industry 4.0 and IoT (Internet of Things) devices, many traditional factories have joined this trend in the hope of creating greater value for their enterprises. Before data can be analyzed, however, a manufacturer must first be able to digitize its products. Hole saw caps are currently measured manually, so this paper develops an optical inspection system for hole saw back caps that measures them quickly and conveniently. The manufacturer currently measures their dimensions with an optical projection instrument and several jigs, a process that requires considerable manual handling and takes a long time. The manufacturer therefore wants a measurement process that obtains the dimensional data of hole saw caps as simply and quickly as possible. Digitizing the dimensions and other information of each cap facilitates subsequent product analysis, product history tracking, and other uses. In addition, developing a measurement system for hole saw caps requires all measurement subsystems to be designed symmetrically and consistently so that the hole saw cap measurement data can be optimized as a whole.

In this research, we designed and built a dedicated optical measurement platform for hole saw caps. The platform mechanism and machine structure were designed in Solidworks [1], and after the design was completed, we confirmed with the manufacturer that the steps, accuracy, and structure of the optical inspection platform met its requirements. The software tools consist of the programming languages C [2] and Python; the Python packages NumPy [3], Matplotlib, SciPy, imutils, and Cloudan; the database CouchDB [4]; the computer vision library OpenCV; the Atom IDE (integrated development environment); and the git client GitKraken [5]. The hardware consists of a RIGOL DP832 power supply, a desktop computer, a monitor, a light source for inspection, a paper box, a C920 camera, and a vernier caliper. These tools are used to write the hole saw cap measurement programs and are combined with the inspection mechanism to build the hole saw cap measurement system.
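
Since one goal is to digitize each cap's dimensions for product history, the sketch below shows how a single measurement record could be stored in CouchDB through its HTTP document API (a PUT to /{db}/{docid} with a JSON body). It is a minimal illustration under stated assumptions: it uses the Python standard library rather than the client package listed above, and the server URL, database name, document ID, field names, and numbers are all hypothetical placeholders. The request is only built, not sent, so it runs without a server.

```python
import json
import urllib.request

# Hypothetical settings: the server URL, database name, document ID, and field
# names are illustrative assumptions, not the configuration used in this study.
COUCHDB_URL = "http://localhost:5984"
DB_NAME = "hole_saw_caps"


def build_measurement_request(doc_id, measurements_mm):
    """Build (but do not send) an HTTP PUT that stores one record in CouchDB.

    CouchDB creates a document at PUT /{db}/{docid}; updating an existing
    document additionally requires its current "_rev" in the body.
    """
    record = {"type": "hole_saw_cap_measurement", "measurements_mm": measurements_mm}
    return urllib.request.Request(
        url=f"{COUCHDB_URL}/{DB_NAME}/{doc_id}",
        data=json.dumps(record).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


# Placeholder numbers for illustration only, not measured data.
req = build_measurement_request("cap-0001", {"outer_diameter": 50.0, "height": 12.0})
print(req.get_method(), req.full_url)
# Sending requires a running CouchDB server (and credentials if auth is enabled):
# with urllib.request.urlopen(req) as resp:
#     print(resp.status)
```

Storing each cap as its own JSON document keeps the schema flexible, which suits a prototype whose set of measured dimensions may still change.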

Automated optical inspection (AOI) is a technology based on machine vision as the inspection standard. The composition of the AOI inspection system is divided into six parts—camera, lens, light source, computer, mechanism, and electronic control—along with appropriate image processing algorithms to achieve the goal [

6]. Before selecting hardware, we must first set up the AOI workflow to understand the working distance, field of view (FOV), camera frame rate (frames per second, FPS), focus length (FL), depth of field (DOF), aperture, sensor size, sensor pixel size, magnification, resolution, lens mount, and camera calibration. When a physical object is captured by a camera as a 2D plane image at a certain point in the 3D space, optical aberration is caused by refraction. At this time, camera correction is required to correct the aberration generated during the projection process. Common aberration types include spherical aberration, coma, astigmatism, field curvature, and distortion [

7]. Distortion can be divided into two types according to the axial direction, radial distortion, and tangential distortion. There are only two ways to correct distortion. One is to replace the lens with a telecentric lens to remove the distortion through optical means, and the other is to use mathematical means to remove the distortion. In the future, we will use both. The Zhang calibration method was mainly used to correct radial distortion and does not include tangential distortion [

8]. Before the calibration method in [

8] was introduced, the existing calibration methods were roughly divided into two categories. The first is photogrammetric calibration, which uses a calibration object of known shape and size to calibrate the camera. This method requires expensive calibration equipment to be achieved. The second is the self-calibration method. This method requires moving the camera in a static scene, taking multiple pictures, and then calibrating using a non-linear method. Although this method is convenient, it has poor robustness and low accuracy. The calibration method in [

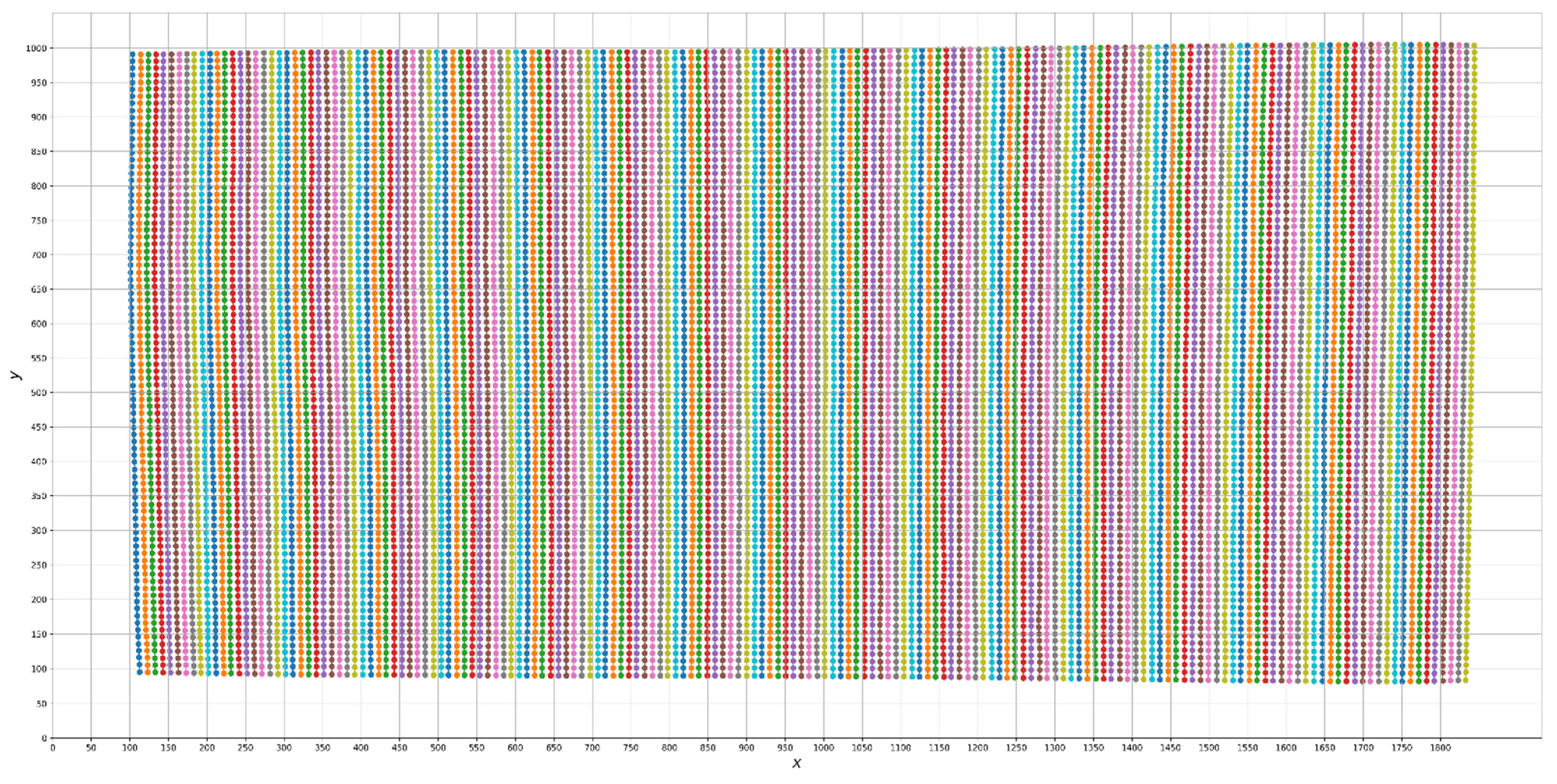

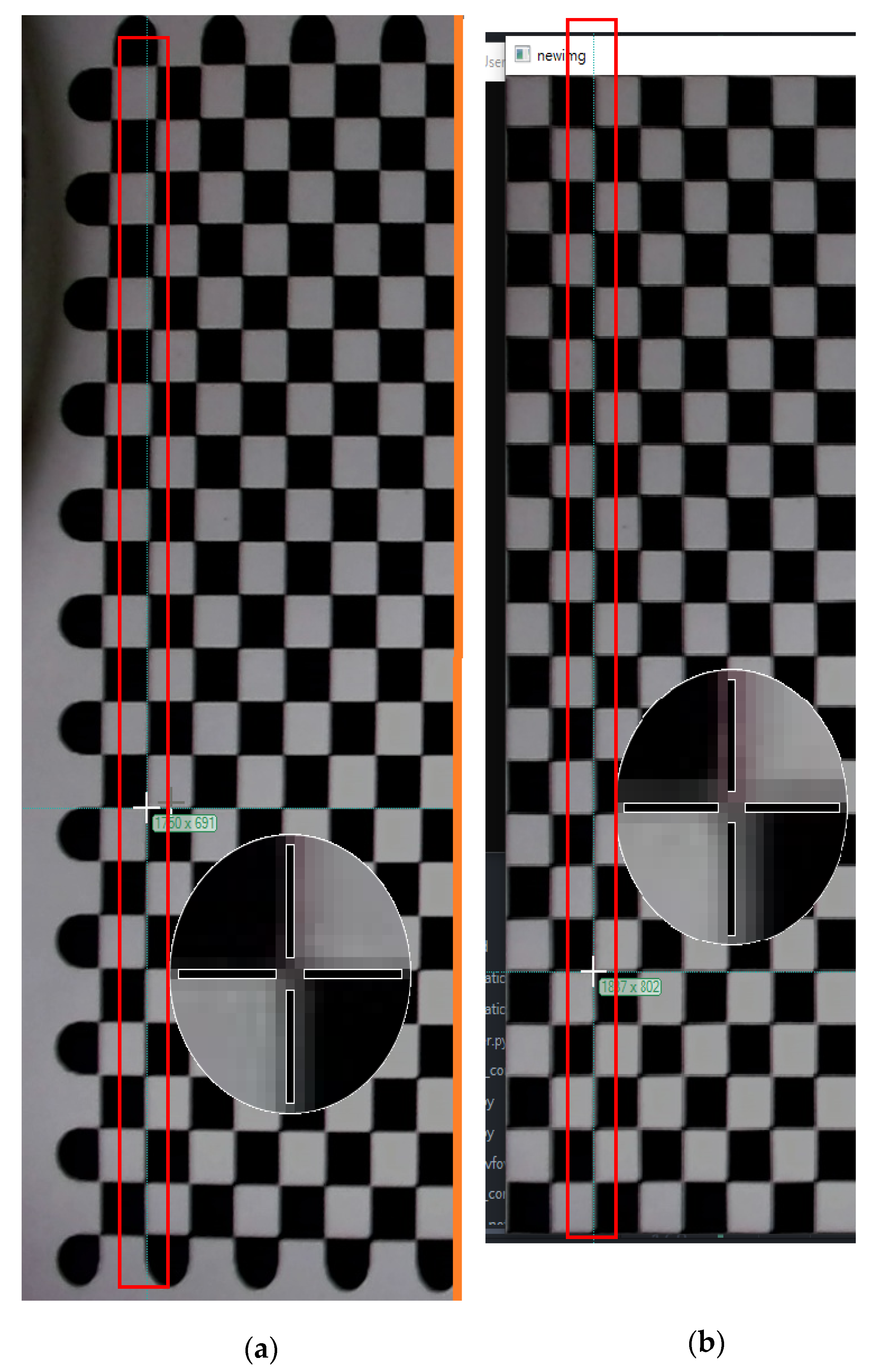

8] solves the above problems. It is easy to use and has high accuracy. One only needs to print out the chessboard and paste it on a flat surface or directly take the real chessboard, and one can perform the calibration. The calibration process is quite easy. The chessboard swinging multiple angles in front of the camera is taken side by side, and then the coordinates of the chessboard on the photo are input into the algorithm to obtain the data required for correction. OpenCV has already written Zhang’s calibration algorithm [

9]. In OpenCV, with the function name calibrate-Camera (this is the camel case naming method, which is often used for program function name naming), the user only needs to set up the camera and obtain the chessboard with it. The size of the picture, the coordinates of the chessboard in the photo, and the specifications of the chessboard can be used to obtain calibration data using the calibrate-Camera function. In the field of mathematical numerical analysis, interpolation is a process or method of inferring new data points within a range through known, discrete data points. In this paper, we try to use interpolation for lens correction and compare Newton polynomials, Lagrange polynomials, and cubic interpolation. Based on the above, we have developed and designed a measurement system for the hole saw caps, which uses the computer vision method to measure the dimensions of the hole saw caps. The dark box environment is made of a light source and the back plate of the hole saw cap material, and two cameras are used to observe the hole saw caps from above and from the side. A personal desktop computer obtains the camera screen and uses the Python program to calculate the size of the hole saw caps and to display the measurement results. Recently, Nieslony et al. [

10] have focused on research problems related to the surface topography and metrological problems after the drilling process of explosively cladded Ti–steel plates. However, the author evaluated the results of the topography of the material after the drilling process based on mechanical and electromagnetic methods. In the literature [

11], the authors have considered a comparative analysis of the Sensofar S Neox 3D optical profilometer, Alicona Infinite Focus SL optical measuring system, and a digital Keyence VHX-6000 microscope on assessing the surface topography of the Ti6Al4V titanium alloy after finish turning under dry machining conditions. However, the surface topography of the materials has been evaluated in many studies, which often used different devices with different measuring methods. Therefore, it is impossible to compare the measuring equipment and techniques used.
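
The radial and tangential distortion discussed earlier in this section are commonly described by a polynomial (Brown-Conrady style) model, which is also the form OpenCV documents for its k1, k2, k3 (radial) and p1, p2 (tangential) coefficients. The sketch below is a minimal pure-Python illustration, assuming normalized image coordinates (x = (u - cx) / fx, y = (v - cy) / fy); the coefficient values are illustrative, not calibration results from this study. Setting p1 = p2 = 0 leaves a radial-only model of the kind used in Zhang's method.

```python
# Brown-Conrady style lens distortion model on normalized image coordinates.
# k1, k2, k3 are radial terms; p1, p2 are tangential terms.


def distort(x, y, k1, k2, k3, p1, p2):
    """Map an ideal (undistorted) normalized point to its distorted position."""
    r2 = x * x + y * y
    radial = 1 + k1 * r2 + k2 * r2**2 + k3 * r2**3
    xd = x * radial + 2 * p1 * x * y + p2 * (r2 + 2 * x * x)
    yd = y * radial + p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
    return xd, yd


def undistort(xd, yd, k1, k2, k3, p1, p2, iterations=20):
    """Invert distort() by fixed-point iteration (the model has no closed-form inverse)."""
    x, y = xd, yd  # start from the distorted point
    for _ in range(iterations):
        r2 = x * x + y * y
        radial = 1 + k1 * r2 + k2 * r2**2 + k3 * r2**3
        dx = 2 * p1 * x * y + p2 * (r2 + 2 * x * x)
        dy = p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
        # Remove the tangential shift, then divide out the radial scaling.
        x = (xd - dx) / radial
        y = (yd - dy) / radial
    return x, y


# Illustrative coefficients, not calibration results from this study.
params = dict(k1=-0.2, k2=0.05, k3=0.0, p1=0.001, p2=-0.0005)
xd, yd = distort(0.3, -0.2, **params)
print(xd, yd)
print(undistort(xd, yd, **params))
```

The iterative inverse converges quickly when the distortion is moderate, because each step only changes the point slightly; for strongly distorting lenses, more iterations or a better starting point may be needed.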
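
To make the interpolation comparison mentioned above concrete, the sketch below implements the three approaches in pure Python: Lagrange polynomial evaluation, Newton's divided-difference form, and a natural cubic spline (assumed here as one reading of "cubic interpolation"; the text does not specify which cubic scheme is used). In a lens-correction setting, xs could be measured pixel positions of reference marks and ys their known positions; the data below is synthetic, generated from f(x) = x**3 - 2x + 1, and all function names are hypothetical.

```python
from bisect import bisect_right


def lagrange_eval(xs, ys, x):
    """Evaluate the Lagrange interpolating polynomial through (xs, ys) at x."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        basis = 1.0
        for j, xj in enumerate(xs):
            if j != i:
                basis *= (x - xj) / (xi - xj)
        total += yi * basis
    return total


def newton_coefficients(xs, ys):
    """Divided-difference coefficients c0..cn of the Newton form (computed in place)."""
    coef = [float(y) for y in ys]
    n = len(xs)
    for level in range(1, n):
        for i in range(n - 1, level - 1, -1):
            coef[i] = (coef[i] - coef[i - 1]) / (xs[i] - xs[i - level])
    return coef


def newton_eval(xs, coef, x):
    """Evaluate c0 + c1(x-x0) + c2(x-x0)(x-x1) + ... by nested multiplication."""
    result = coef[-1]
    for i in range(len(coef) - 2, -1, -1):
        result = result * (x - xs[i]) + coef[i]
    return result


def natural_cubic_spline(xs, ys):
    """Return a callable natural cubic spline (zero second derivative at both ends)."""
    n = len(xs) - 1
    a = [float(y) for y in ys]
    h = [xs[i + 1] - xs[i] for i in range(n)]
    # Tridiagonal system for the quadratic coefficients c, solved by forward sweep.
    alpha = [0.0] * (n + 1)
    for i in range(1, n):
        alpha[i] = 3 * ((a[i + 1] - a[i]) / h[i] - (a[i] - a[i - 1]) / h[i - 1])
    mu = [0.0] * (n + 1)
    z = [0.0] * (n + 1)
    for i in range(1, n):
        denom = 2 * (xs[i + 1] - xs[i - 1]) - h[i - 1] * mu[i - 1]
        mu[i] = h[i] / denom
        z[i] = (alpha[i] - h[i - 1] * z[i - 1]) / denom
    # Back substitution; c[n] = 0 enforces the natural end condition.
    b, c, d = [0.0] * n, [0.0] * (n + 1), [0.0] * n
    for j in range(n - 1, -1, -1):
        c[j] = z[j] - mu[j] * c[j + 1]
        b[j] = (a[j + 1] - a[j]) / h[j] - h[j] * (c[j + 1] + 2 * c[j]) / 3
        d[j] = (c[j + 1] - c[j]) / (3 * h[j])

    def spline(x):
        # Pick the segment containing x (clamped so points outside extrapolate).
        j = min(max(bisect_right(xs, x) - 1, 0), n - 1)
        t = x - xs[j]
        return a[j] + b[j] * t + c[j] * t**2 + d[j] * t**3

    return spline


# Synthetic data from f(x) = x**3 - 2x + 1, standing in for reference-mark positions.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [x**3 - 2 * x + 1 for x in xs]
coef = newton_coefficients(xs, ys)
spline = natural_cubic_spline(xs, ys)
print(lagrange_eval(xs, ys, 1.5), newton_eval(xs, coef, 1.5), spline(1.5))
```

Lagrange and Newton forms describe the same interpolating polynomial; the Newton form is cheaper to extend when a new data point is added. With many points, a single high-degree polynomial tends to oscillate between nodes, which is one reason piecewise cubic schemes are often preferred for correction tables.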

Finally, we used the developed system to measure hole saw caps in order to verify its usability and effectiveness. The hardware is as follows. The RIGOL DP832 is a programmable three-channel linear DC power supply (two 30 V/3 A channels and one 5 V/3 A channel). It powers the light sources of the inspection system, and its voltage and current can be adjusted on the control panel to tune the illumination. The light sources are Eddie Vision Technology's RI-140-45 green ring light and FL250-150 green backlight, both of which can be controlled by voltage. The computer has an i5-9400 CPU (central processing unit) and 32 GB (gigabytes) of memory and runs Windows 10 21H1; the monitor is a BenQ GL2580. The camera is a Logitech C920, which supports the UVC (USB video class) protocol used by OpenCV, so the computer can use OpenCV directly to control the camera's exposure, resolution, transmission format, and other parameters. Although the camera's 1920 × 1080 resolution is not sufficient for inspecting hole saw caps in production, its low price makes it suitable for prototyping the measurement system. The vernier caliper is a tool for measuring workpiece lengths at the millimeter level: the outer faces of the inside-measuring jaws measure inner diameters, the inner faces of the outside-measuring jaws measure outer diameters, and the depth rod measures depths. In this study, the vernier caliper serves as the measurement standard against which the accuracy of the optical measurement system is evaluated. All errors are within 2 pixels (1 pixel corresponds to 0.07 mm), which meets the inspection standard.
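
Because the acceptance criterion is stated in pixels, it helps to express it in millimeters: 2 pixels × 0.07 mm/pixel = 0.14 mm. The minimal sketch below encodes that check; the function names and sample readings are hypothetical, and the 0.07 mm/pixel scale applies only to this particular camera setup, since it changes with working distance and magnification.

```python
# Values taken from the text: 1 pixel corresponds to 0.07 mm, and the
# acceptance criterion is an error of at most 2 pixels.
MM_PER_PIXEL = 0.07
TOLERANCE_PIXELS = 2


def pixels_to_mm(pixels):
    """Convert a length measured in image pixels to millimeters."""
    return pixels * MM_PER_PIXEL


def within_tolerance(optical_mm, caliper_mm):
    """Check an optical reading against the caliper reference, in pixel units."""
    error_pixels = abs(optical_mm - caliper_mm) / MM_PER_PIXEL
    return error_pixels <= TOLERANCE_PIXELS


print(pixels_to_mm(TOLERANCE_PIXELS))  # the tolerance expressed in mm
print(within_tolerance(50.10, 50.00))  # hypothetical readings
print(within_tolerance(50.20, 50.00))
```

Comparing in pixel units keeps the check tied to what the camera can actually resolve; readings exactly at the boundary may be affected by floating-point rounding, so a production check might add a small margin.
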
The developed system saves manpower and measures the caps directly without jigs. It also offers the relevant industries an easy-to-use hole saw cap inspection method at an affordable price (under USD 3000).