Abstract

The underlying function in a reproducing kernel Hilbert space (RKHS) may be degraded by outliers or deviations, resulting in a symmetry ill-posed problem. This paper proposes a nonconvex minimization model with the ℓ0-quasi norm based on RKHS to describe this degraded problem. The underlying function in RKHS can be represented as a linear combination of reproducing kernels and their coefficients, so we estimate the related coefficients through the nonconvex minimization problem. An efficient algorithm is designed to solve the given nonconvex problem using the mathematical program with equilibrium constraints (MPEC) and a proximal-based strategy. We theoretically prove that the sequences generated by the designed algorithm converge to local optimal solutions of the nonconvex problem. Numerical experiments also demonstrate the effectiveness of the proposed method.

1. Introduction

The reproducing kernel Hilbert space (RKHS, denoted as ) has been widely studied [1,2,3,4,5,6,7]. Its most critical property is that the functions in an RKHS can be linearly represented by the reproducing kernel function. In addition, many studies have analyzed the properties of univariate or bivariate functions in RKHS. These functions can usually be regarded as signals or images in discrete form, which makes it possible to build optimization models and solve application problems such as image super-resolution and image restoration.

In general, the Hilbert space can be considered as which is a probability measure on the subset , is complete for a class of real valued functions with . Moreover, the reproducing kernel of can be defined as: (1) for any , the function belongs to . (2) the function has the so-called reproducing property, that is for all , and represents an associated inner product. By this relation, we can obtain the Gram matrix by discretizing the reproducing kernel for , and thus it is easy to get the following discrete formulation by considering the bias :

where is the coefficient we need to estimate, and is a real symmetric matrix.
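As a rough illustration of the discretization described above, the following sketch builds a Gram matrix from sample points and evaluates a kernel expansion plus a bias. The Gaussian kernel, the width `sigma`, the sample points, and the form `f = K @ alpha + c` are assumptions made for the example; the text above does not fix a particular kernel.

```python
import numpy as np

def gaussian_gram(points, sigma=1.0):
    """Gram matrix K[i, j] = exp(-||x_i - x_j||^2 / (2 sigma^2)).

    The Gaussian kernel is an assumed choice for this sketch.
    """
    diffs = points[:, None, :] - points[None, :, :]
    sq_dists = np.sum(diffs ** 2, axis=-1)
    return np.exp(-sq_dists / (2.0 * sigma ** 2))

# Hypothetical 1-D sample points; any discretization of the domain would do.
x = np.linspace(0.0, 1.0, 5).reshape(-1, 1)
K = gaussian_gram(x, sigma=0.5)

alpha = np.ones(5)   # illustrative coefficients
c = 0.1              # illustrative bias
f = K @ alpha + c    # discrete function values: kernel expansion plus bias
```

Because the kernel depends only on the distance between two points, `K` is symmetric by construction, which matches the real symmetric matrix mentioned above.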

In practice, the underlying function is generally polluted by outliers and Gaussian perturbation, which yields the following symmetry ill-posed problem:

where can be viewed as outliers and stands for Gaussian perturbation. Our final goal is to accurately estimate the coefficients and from the known and . After obtaining and , we can calculate the underlying function by (1). Note that solving problem (2) is quite a challenging task, since the variables , , and in (2) are all unknown, which leads to an ill-posed problem.

In [8], Papageorgiou et al. considered that the function g can be linearly represented by a coefficient and a constant c as , and proposed a kernel regularized orthogonal matching pursuit (KROMP) method to solve the nonconvex problem. However, the KROMP method has two weaknesses: first, the constant c is not general and flexible; second, the convergence of its algorithm is not guaranteed theoretically. Therefore, this paper establishes a nonconvex optimization model for the degraded problem (2) in RKHS, designs an algorithm whose convergence can be guaranteed, and finally shows the effectiveness of the proposed method in simulation experiments.

Regularized modeling is a promising way to deal with ill-posed problems. The variable representing outliers is generally sparse, which motivates us to formulate a sparsity-based regularization model. In particular, the ℓ0-quasi norm, which counts the non-zero elements in a vector, is an ideal metric for describing sparsity. Therefore, the nonconvex minimization problem for solving the ill-posed problem (2) can be written as follows:

where , and are positive parameters. The first term in (3) is deduced from the Gaussian perturbation of under the framework of maximum a posteriori (MAP) estimation. The second and third terms are two regularization terms that describe the underlying priors for and . The last term is an ℓ0-quasi norm that describes the sparse prior of the outlier . Note that the regularization model (3) is a nonconvex minimization problem due to the nonconvexity of the ℓ0 term. In general, the ℓ0 term is approximated by other terms (e.g., the convex ℓ1 term or hard thresholding [9,10,11,12]) for simpler computation and a convergence guarantee. However, such a replacement loses accuracy in describing sparsity, which may lead to unreasonable outcomes.
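To make the difference between the two sparsity measures concrete, the short sketch below compares the ℓ0 count with the ℓ1 sum on a hypothetical outlier vector. The vector and the helper name `l0_quasi_norm` are illustrative only.

```python
import numpy as np

def l0_quasi_norm(v, tol=0.0):
    """Count the entries of v whose magnitude exceeds tol."""
    return int(np.count_nonzero(np.abs(v) > tol))

# Hypothetical sparse outlier vector: two non-zero entries out of six.
outliers = np.array([0.0, 0.0, 7.5, 0.0, -3.0, 0.0])

l0 = l0_quasi_norm(outliers)               # number of non-zero entries
l1 = float(np.sum(np.abs(outliers)))       # sum of magnitudes
scaled_l0 = l0_quasi_norm(10.0 * outliers)
scaled_l1 = float(np.sum(np.abs(10.0 * outliers)))
```

Scaling the vector leaves the ℓ0 count unchanged while the ℓ1 value grows with the amplitudes. This is why the ℓ0 term is called a quasi norm (it is not homogeneous) and why it measures sparsity independently of outlier size.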

However, solving nonconvex minimization problems usually raises the following difficulties: (1) Can the designed algorithm effectively solve the minimization model? (2) Can the convergence of the designed algorithm be guaranteed? (3) Does the initial value affect the convergence of the designed algorithm? Many studies have therefore been devoted to overcoming these difficulties.

Recently, it has been shown that the nonconvex problem can be reformulated as an equivalent minimization problem based on the mathematical program with equilibrium constraints (MPEC), which can be effectively solved by classical algorithms [13,14,15]. For instance, Yuan et al. [14] proposed an equivalent biconvex MPEC formulation for the ℓ0-quasi norm of the nonconvex minimization problem. Additionally, proximal alternating algorithms have been widely used to solve nonconvex and nonsmooth problems [16,17,18,19,20,21,22,23,24]. In [18], Bolte et al. proposed the proximal alternating linearized minimization (PALM) framework for nonconvex and nonsmooth minimization problems and gave a convergence analysis of the algorithm.
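As background for the proximal-based methods mentioned above, the sketch below shows one standard proximal map of a nonconvex, nonsmooth term: the proximal operator of λ‖·‖0, which reduces to hard thresholding at √(2λ). It illustrates the general idea only and is not the update used in this paper, which handles the ℓ0 term through the MPEC reformulation. The names `prox_l0` and `objective` are hypothetical.

```python
import numpy as np

def prox_l0(z, lam):
    """Minimizer of lam * ||x||_0 + 0.5 * ||x - z||^2 (hard thresholding).

    An entry is kept when keeping it costs less than zeroing it,
    i.e. when lam < 0.5 * z_i^2; otherwise it is set to zero.
    """
    z = np.asarray(z, dtype=float)
    return np.where(z ** 2 > 2.0 * lam, z, 0.0)

def objective(x, z, lam):
    """Value of the proximal objective at x."""
    return lam * np.count_nonzero(x) + 0.5 * np.sum((x - z) ** 2)

z = np.array([0.5, -2.0, 1.0, 3.0])
lam = 0.5                      # threshold is sqrt(2 * lam) = 1
x_star = prox_l0(z, lam)
```

Each coordinate is treated independently, so the map has a closed form even though the ℓ0 term is nonconvex.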

In this paper, we focus on the above-mentioned difficulties of the nonconvex minimization problem (3) and design an efficient algorithm with a theoretical convergence guarantee. A simple and representative example is employed to verify the effectiveness of the proposed method. The contributions of this work can be summarized as: (1) a new nonconvex minimization model based on RKHS; (2) a convergence guarantee of the designed algorithm for the nonconvex problem.

2. The Solution for the Nonconvex Minimization Problem

Based on the MPEC lemma of the ℓ0-quasi norm (see [14] for more details), the nonconvex minimization problem (3) can be equivalently reformulated as the following model:

where ⊙ represents point-wise multiplication, and is the indicator function projecting the elements of into . The constrained minimization problem (4) can be rewritten as the following unconstrained minimization problem by the penalty strategy:
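The MPEC idea can be checked numerically on a small vector. The sketch below assumes the form in which the lemma of [14] is commonly stated, namely that ‖x‖0 equals the minimum of ⟨1, 1 − v⟩ over 0 ≤ v ≤ 1 subject to |x| ⊙ v = 0; this form is an assumption here, since Equation (4) itself is not reproduced in this text. The helper name `mpec_l0` is hypothetical.

```python
import numpy as np

def mpec_l0(x):
    """Evaluate the assumed MPEC form of ||x||_0 at its minimizer.

    The constraint |x| * v = 0 forces v_i = 0 wherever x_i != 0, and the
    objective sum(1 - v) is smallest when v_i = 1 everywhere else.
    """
    x = np.asarray(x, dtype=float)
    v_star = 1.0 - np.sign(np.abs(x))      # 1 where x is zero, 0 elsewhere
    value = float(np.sum(1.0 - v_star))    # objective <1, 1 - v> at v_star
    return value, v_star

x = np.array([0.0, 2.0, 0.0, -1.0, 0.5])
value, v_star = mpec_l0(x)
feasible = bool(np.all(np.abs(x) * v_star == 0.0))
```

The auxiliary variable `v_star` marks the zero positions of `x`, which is what lets the combinatorial ℓ0 count be traded for a point-wise product constraint.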

where , , , , .

We use a proximal-based algorithm to deal with the unconstrained problem (5) by alternately solving for each variable, which leads to the following subproblems:

where the related parameters are all nonnegative, i.e., , and , ; here is the identity matrix, represents the Frobenius norm, and , .

In particular, the above subproblems are all convex, and their closed-form solutions can be easily calculated as follows:

We iteratively and alternately update , , and according to (10)–(13). The final algorithm for the nonconvex minimization problem (3) is summarized in Algorithm 1.

In Algorithm 1, “” denotes the maximum number of iterations and “” represents the relative error between adjacent iterations. When the iteration stops, the final underlying function can be estimated by relation (1).

Algorithm 1: The algorithm to minimize the problem (5)

Input: blurred matrix , positive parameters , , , and , .

Initialize: start with any , , .

While or

(1) Compute solved by Equation (10).

(2) Compute by Equation (11).

(3) Compute by Equation (12).

(4) Compute by Equation (13).

(5) Update penalty parameter by .

(6) Calculate the relative error , where , and .

(7) .

Endwhile

Output: , and
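The control flow of Algorithm 1 can be sketched as a generic loop that stops at a maximum iteration count or when the relative error between adjacent iterates is small. The sketch is hedged in three ways: the relative error formula ‖x_new − x‖ / ‖x‖ is an assumed common choice, `step` stands in for the closed-form updates (10)–(13), which are not reproduced here, and the toy contraction exists only to make the loop runnable.

```python
import numpy as np

def iterate(step, x0, max_iter=200, tol=1e-8):
    """Repeat x <- step(x) until the relative change falls below tol.

    `step` is a placeholder for one full sweep of the subproblem updates.
    """
    x = np.asarray(x0, dtype=float)
    rel_err = np.inf
    k = 0
    while k < max_iter and rel_err > tol:
        x_new = step(x)
        # Assumed relative error between adjacent iterates.
        rel_err = np.linalg.norm(x_new - x) / max(np.linalg.norm(x), 1e-12)
        x = x_new
        k += 1
    return x, k, rel_err

# Toy update with fixed point 2.0, standing in for the real updates.
x_final, n_iter, err = iterate(lambda x: 0.5 * x + 1.0, x0=[0.0])
```

In practice both stopping conditions matter: the tolerance ends the loop once the iterates settle, and the iteration cap bounds the run time if they do not.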

3. Convergence Analysis

For the sake of notational simplicity, we use a unified norm notation: (1) the Frobenius norm if ∗ is a matrix; (2) the ℓ2-norm if ∗ is a vector. Denote that and its domain field is .

Lemma 1.

Let the bounded sequence be generated by the designed algorithm. Then the sequence sufficiently decreases as follows:

where . Note that , , and is the Lipschitz constant of for , and is that of for .

Proof.

Since is Lipschitz differentiable with respect to the variables and , respectively, there exist positive constants and which satisfy:

-subproblem: Based on the designed algorithm, is the minimizer of the -subproblem in the -th iteration, so we have:

-subproblem: Based on the designed algorithm, is the minimizer of the -subproblem in the -th iteration, so we have:

-subproblem: Based on the designed algorithm, is the minimizer of the -subproblem in the -th iteration, so we have:

-subproblem: Based on the designed algorithm, is the minimizer of the -subproblem in the -th iteration, so we have:

Lemma 2.

Let the bounded sequence be generated by the designed algorithm. Then:

where , and , in which the constant is the bound of the sequence and ∂ is the subdifferential operator.

Proof.

Obviously, satisfies the first-order optimality condition of the -subproblem because is the k-th solution of the -subproblem. The same holds for the other variables, so we have:

Since the objective function is continuous and differentiable with respect to each variable, we have:

Lemma 3.

Let the bounded sequence be generated by the designed algorithm and the initial variable be bounded. Then the sequence is bounded.

Proof.

Obviously, the continuous function is proper and coercive, since there exists if and only if . Thus, the sequence is bounded because the sequence generated by the designed algorithm is bounded. □

Lemma 4.

The function in Equation (3) is a Kurdyka–Łojasiewicz (KŁ) function (the definition of a KŁ function and some examples can be found in [18]).

Proof.

According to the definition and some examples for the KŁ function in [18,25], are polynomial functions which are obviously real analytic functions. Thus, is a KŁ function. □

Theorem 1.

Let the sequence generated by the designed algorithm be bounded. Then the sequence converges to a critical point.

Proof.

Since the sequence is bounded, there must exist a subsequence which converges to a critical point satisfying . Since the function is continuous, the sequence converges to , i.e., .

According to Lemmas 1–3, the sequence is also convergent. Thus, the sequence and the subsequence converge to the same function value as follows:

If there is an index such that , then the sequence has obviously reached a stationary point and, by Lemma 1, the corresponding function value no longer changes, i.e., . Thus, it is a critical point of and the conclusion of Theorem 1 holds.

Next, we prove that Theorem 1 still holds when no such index exists.

Lemma 1 implies that for any , we have:

From (28), it follows that for any , there exists , so we have the following inequality when ,

Denote a set of limit points of the sequence as , and let express the minimum distance between one point and a set , i.e.,

Based on Lemma 2, is a continuous and differentiable function, and we have since as . This implies that for any , there exists a positive index such that, when , we have:

Set , and for each , there exists:

By Lemma 4, is a KŁ function in the domain . Thus, there is a concave function such that:

Moreover, based on the concavity of , we have:

Denote for all nonnegative integers p, and . Combining Equation (33) with Lemmas 1 and 2, we have:

which can be rewritten as follows:

It is a well-known inequality for any , thus, we have:

According to the definition of , we have . Thus,

Thus, is a Cauchy sequence and has finite length. Because of the completeness of the Hilbert space, the Cauchy sequence is also a convergent sequence. Thus, the sequence generated by the designed algorithm converges to a critical point . Moreover, the convergence of the sequence generated by the designed algorithm can be guaranteed for any initial value. □

4. Numerical Result

In this section, we conduct some simple simulation examples to show the effectiveness of the proposed method. We choose f as the ground-truth function (discrete form as ) and add Gaussian noise and sparse outliers to generate the observation. The proposed method is compared with the kernel-based regression using orthogonal matching pursuit (KROMP) method [8], and the parameters of the KROMP method are selected according to the range mentioned in the literature. The parameters of the proposed method in the experiments are set empirically to: , , , , , . It should be noted that better visual and numerical results can be obtained by fine-tuning the parameters more carefully.

The relative error (ReErr) is used for quantitative evaluation. It is a commonly used index for measuring restoration quality and is defined as:

where is the restoration result estimated by each method. Experiments are implemented in MATLAB (R2016a) on a desktop with 16 GB RAM and an Intel(R) Core(TM) i5-4590 CPU @ 3.30 GHz.
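A relative error of this kind is straightforward to compute. The sketch below assumes the common definition ReErr = ‖restored − truth‖ / ‖truth‖ with the Frobenius norm; the exact formula used in the paper is not reproduced in this text, so the definition and the small arrays are illustrative assumptions.

```python
import numpy as np

def relative_error(restored, truth):
    """Assumed ReErr: Frobenius norm of the residual over that of the truth."""
    restored = np.asarray(restored, dtype=float)
    truth = np.asarray(truth, dtype=float)
    return np.linalg.norm(restored - truth) / np.linalg.norm(truth)

# Hypothetical 2 x 2 ground truth and two candidate restorations.
truth = np.array([[3.0, 4.0], [0.0, 0.0]])
perfect = truth.copy()
all_zero = np.zeros_like(truth)

err_perfect = relative_error(perfect, truth)
err_zero = relative_error(all_zero, truth)
```

Under this definition a perfect restoration scores 0 and an all-zero estimate scores 1, so smaller values indicate better recovery.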

Example 1.

The bivariate continuous function f is given as follows:

where , (each of the two dimensions takes 21 discrete points), . After discretization of f, 20 dB Gaussian noise and 10% outlier noise were added to obtain the final degraded data.
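The degradation described for Example 1 can be sketched as follows. Several details are assumptions, because the corresponding formulas are not reproduced in this text: the test function `f` is a placeholder, the domain [0, 1]² is hypothetical, "20 dB" is read as a signal-to-noise ratio, and the outlier magnitude is an arbitrary illustrative choice.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 21  # 21 discrete points per dimension, as in Example 1

# Placeholder ground truth on an assumed domain [0, 1]^2.
x, y = np.meshgrid(np.linspace(0, 1, n), np.linspace(0, 1, n), indexing="ij")
f = np.sin(np.pi * x) * np.cos(np.pi * y)

def add_gaussian_noise(signal, snr_db, rng):
    """Add zero-mean Gaussian noise at the requested SNR (in dB)."""
    noise_power = np.mean(signal ** 2) / 10 ** (snr_db / 10)
    return signal + rng.normal(0.0, np.sqrt(noise_power), size=signal.shape)

def add_sparse_outliers(signal, fraction, magnitude, rng):
    """Perturb a random fraction of entries by +/- magnitude."""
    out = signal.copy().ravel()
    n_out = int(round(fraction * out.size))
    idx = rng.choice(out.size, size=n_out, replace=False)
    out[idx] += magnitude * rng.choice([-1.0, 1.0], size=n_out)
    return out.reshape(signal.shape), idx

noisy = add_gaussian_noise(f, 20.0, rng)
g, outlier_idx = add_sparse_outliers(noisy, 0.10, 5.0 * np.max(np.abs(f)), rng)
```

Here `g` plays the role of the degraded observation: dense small perturbations from the Gaussian noise plus a sparse set of large deviations at `outlier_idx`.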

To show the experimental results of the different examples, we present the ground-truth data, the degraded data polluted by noise and outliers, and the restored outcomes calculated by the proposed method and the KROMP method, respectively.

Example 2.

The bivariate continuous function f is given as follows:

where , (each of the two dimensions takes 31 discrete points), . After discretization of f, 10 dB Gaussian noise and 10% outlier noise were added to obtain the final degraded data.

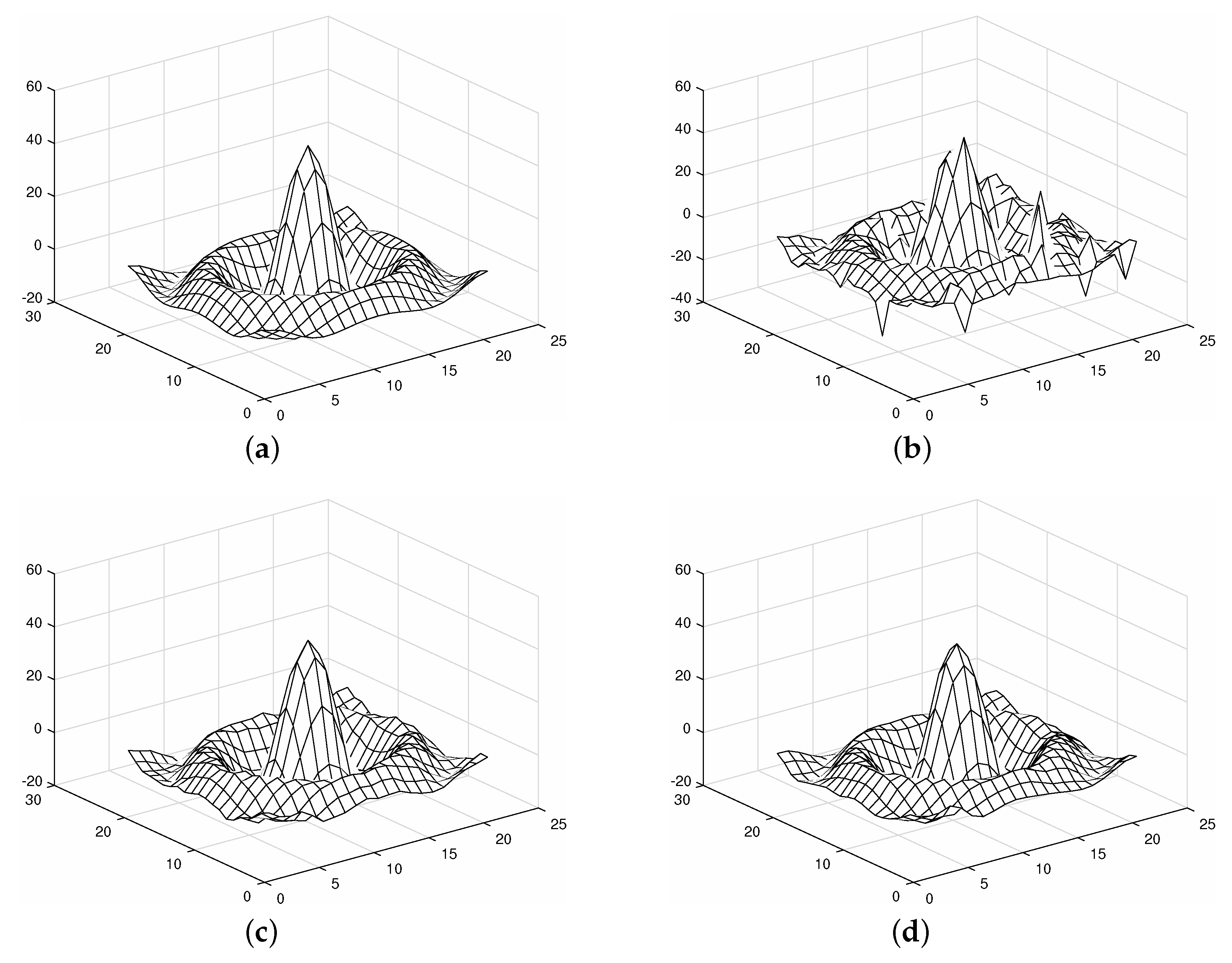

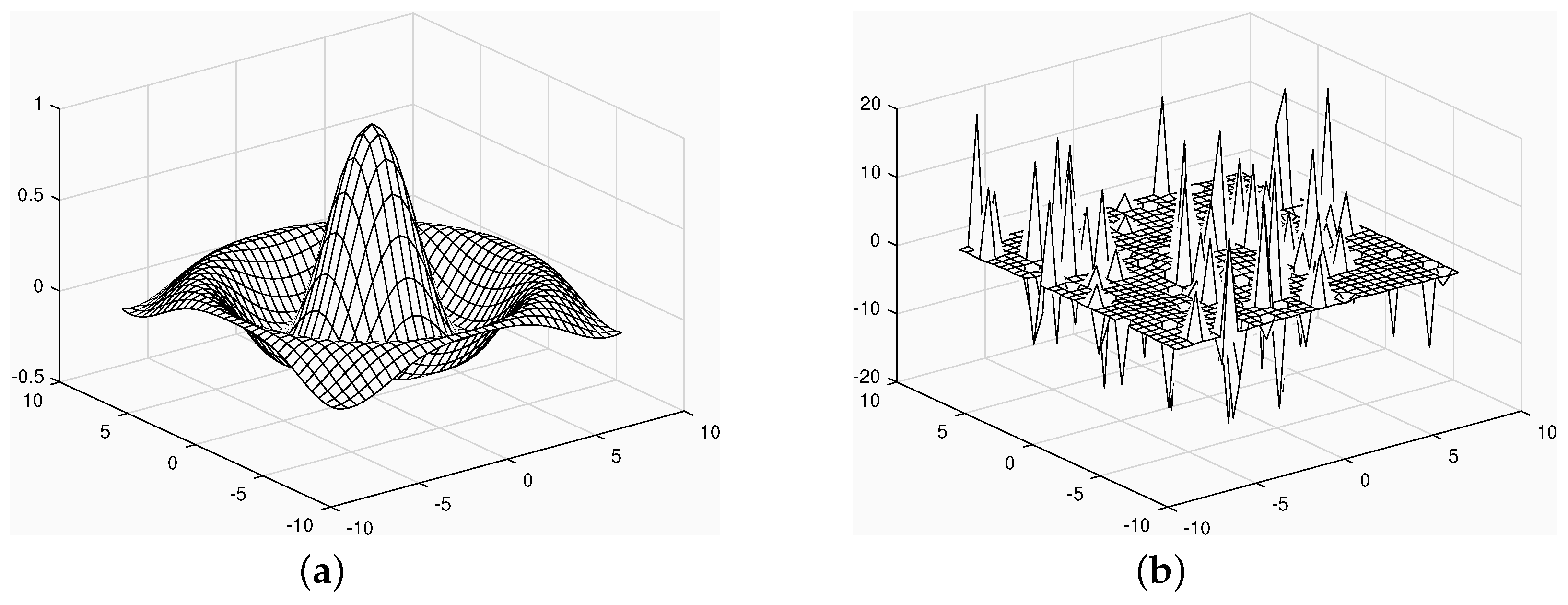

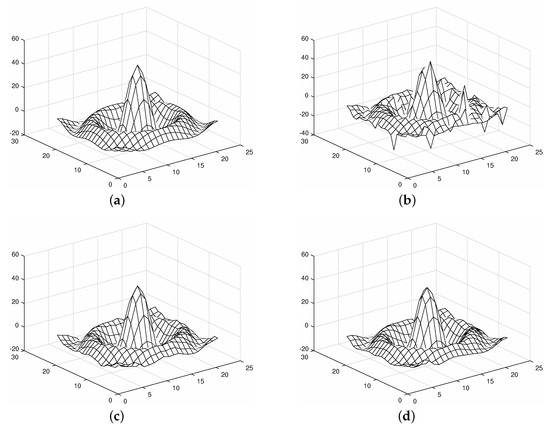

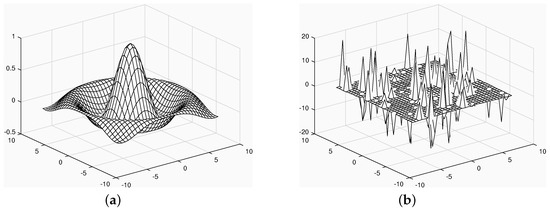

From Figure 1 and Figure 2, although the shape of the ground-truth in Example 2 is similar to that of Example 1 (see Figure 1a and Figure 2a), the degree of degradation in Example 2 is much greater than in Example 1, since the function values and the level of noise pollution differ (see Figure 1b and Figure 2b). The proposed method yields clearly restored outcomes and can effectively recover the original data (see Figure 1c), whereas the restored outcomes of the KROMP method still show obvious residual noise in Figure 1d and Figure 2d. This also shows the effectiveness of the proposed method.

Figure 1.

In Example 1, the ground-truth was degraded by 20 dB Gaussian noise and 10% outliers, and the visual results of restoration by each method were obtained. (a) Ground-truth; (b) degraded; (c) the restored outcome by the proposed method, (d) the restored outcome by the KROMP method.

Figure 2.

In Example 2, the real data was degraded by 10 dB Gaussian noise and 10% outliers, and the visual results of restoration by each method were obtained. (a) Ground-truth; (b) degraded; (c) the restored outcome by the proposed method, (d) the restored outcome by the KROMP method.

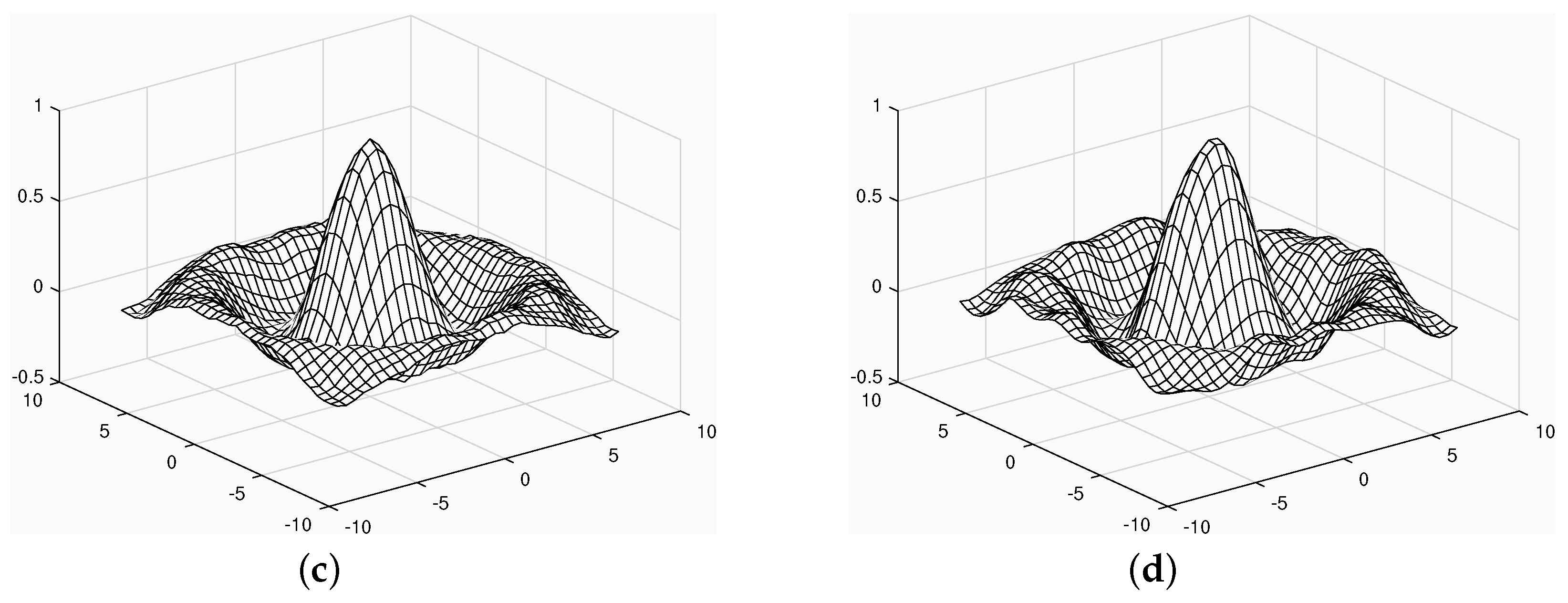

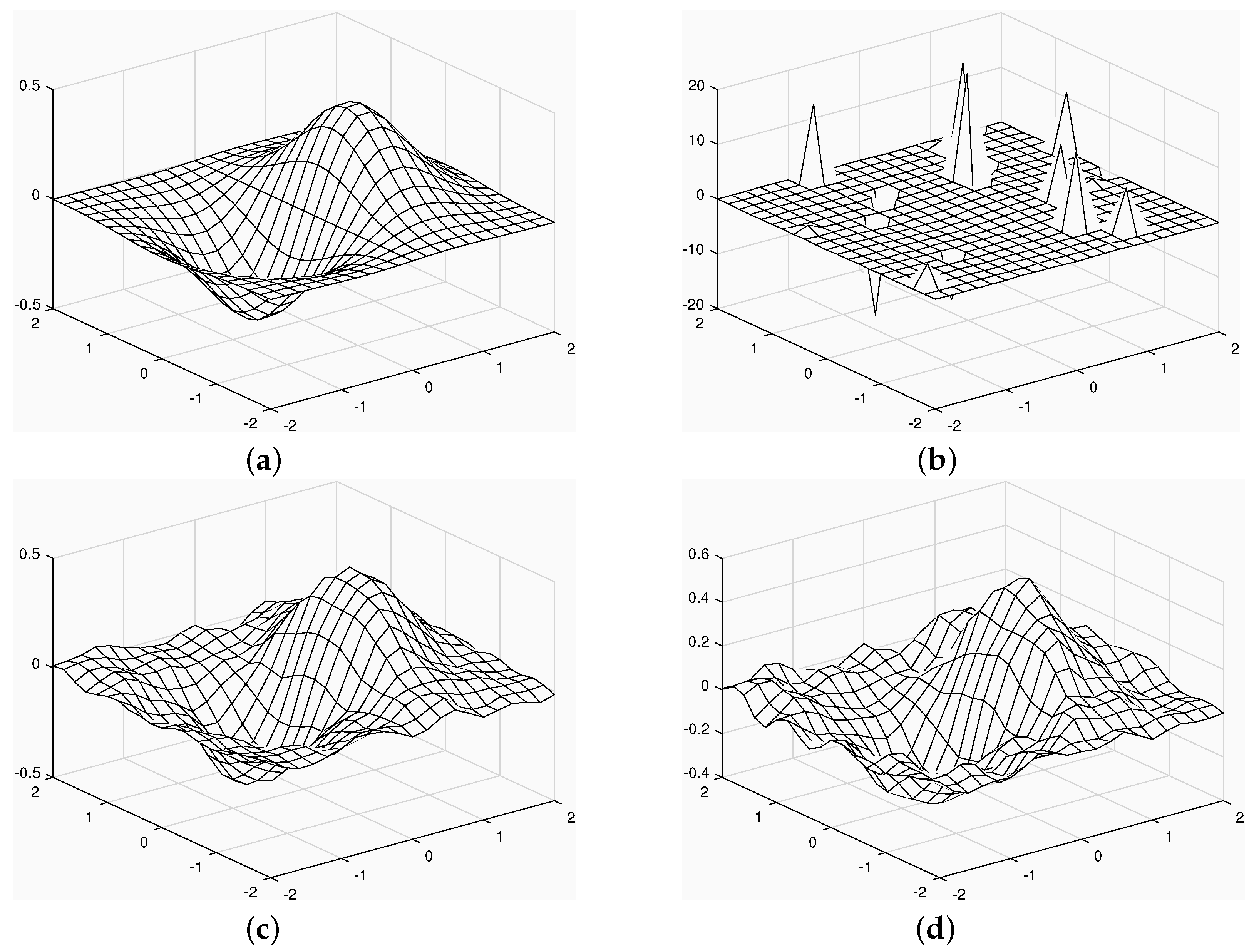

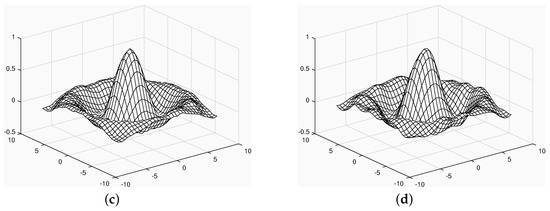

Example 3.

The bivariate continuous function f is given as follows:

where , (each of the two dimensions takes 21 discrete points). After discretization of f, 10 dB Gaussian noise and 5% outlier noise were added to obtain the final degraded data.

It can be seen that even in the case of extremely large external pollution, such as Example 3 in Figure 3b, the proposed method can obtain more accurate recovery data (Figure 3c) than the KROMP method (Figure 3d). In addition, the relative error (ReErr) results from Example 1 to Example 3 are shown in Table 1, and the better results have been bolded. It is obvious that the proposed method has smaller relative errors compared with the KROMP method, which verifies the effectiveness of the proposed method.

Figure 3.

In Example 3, the real data was degraded by 10 dB Gaussian noise and 5% outliers, and the visual results of restoration by each method were obtained. (a) Ground-truth; (b) degraded; (c) the restored outcome by the proposed method, (d) the restored outcome by the KROMP method.

Table 1.

The ReErr results from Example 1 to Example 3 (Bold: the best).

5. Conclusions

In this paper, we proposed a new nonconvex model to deal with a challenging symmetry ill-posed problem. An efficient algorithm for solving the given nonconvex problem is designed using MPEC and proximal-based regularization. Theoretically, the bounded sequence generated by the designed algorithm is guaranteed to converge to a local optimal solution of the nonconvex problem. Furthermore, the convergence of the designed algorithm can be guaranteed for any initial value. The numerical experiments show that the proposed method achieves better restoration results. For example, our method obtains a smaller relative error than the benchmark KROMP approach, and it can also interpolate more mesh points and significantly reduce noise.

Author Contributions

Conceptualization, software and writing—original draft preparation, H.-X.D. and L.-J.D.; methodology and validation, formal analysis and writing—review and editing, H.-X.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research is supported by the Scientific Research Startup Fund of Xihua University (RZ2000002862).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data in this study were generated by the authors.

Acknowledgments

The authors thank the reviewers for their comments, which have improved the content of this manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Tanaka, K. Generation of point sets by convex optimization for interpolation in reproducing kernel Hilbert spaces. Numer. Algorithms 2020, 84, 1049–1079.

2. Mo, Y.; Qian, T.; Mai, W.X.; Chen, Q.H. The AFD methods to compute Hilbert transform. Appl. Math. Lett. 2015, 45, 18–24.

3. Karvonen, T.; Särkkä, S.; Tanaka, K. Kernel-based interpolation at approximate Fekete points. Numer. Algorithms 2020, 84, 1049–1079.

4. Silalahi, D.D.; Midi, H.; Arasan, J.; Mustafa, M.S.; Caliman, J.P. Kernel Partial Least Square Regression with High Resistance to Multiple Outliers and Bad Leverage Points on Near-Infrared Spectral Data Analysis. Symmetry 2021, 13, 547.

5. Deng, L.J.; Guo, W.H.; Huang, T.Z. Single-image super-resolution via an iterative reproducing kernel Hilbert space method. IEEE Trans. Circuits Syst. Video Technol. 2015, 26, 2001–2014.

6. Li, X.Y.; Wu, B.Y. A new reproducing kernel collocation method for nonlocal fractional boundary value problems with non-smooth solutions. Appl. Math. Lett. 2018, 86, 194–199.

7. Wu, Q.; Li, Y.; Xue, W. A Kernel Recursive Maximum Versoria-Like Criterion Algorithm for Nonlinear Channel Equalization. Symmetry 2019, 11, 1067.

8. Papageorgiou, G.; Bouboulis, P.; Theodoridis, S. Robust kernel-based regression using orthogonal matching pursuit. In Proceedings of the IEEE International Workshop on Machine Learning for Signal Processing, Southampton, UK, 22–25 September 2013; pp. 1–6.

9. Chen, S.S.; Donoho, D.L.; Saunders, M.A. Atomic decomposition by basis pursuit. SIAM Rev. 2001, 43, 129–159.

10. Donoho, D.L. For most large underdetermined systems of linear equations the minimal ℓ1-norm solution is also the sparsest solution. Commun. Pure Appl. Math. 2006, 59, 797–829.

11. Dong, B.; Zhang, Y. An efficient algorithm for ℓ0 minimization in wavelet frame based image restoration. J. Sci. Comput. 2013, 54, 350–368.

12. Zuo, W.M.; Meng, D.Y.; Zhang, L.; Feng, X.C.; Zhang, D. A generalized iterated shrinkage algorithm for non-convex sparse coding. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 217–224.

13. Ye, J.J.; Zhu, D. New necessary optimality conditions for bilevel programs by combining the MPEC and value function approaches. SIAM J. Optim. 2010, 20, 1885–1905.

14. Yuan, G.Z.; Ghanem, B. ℓ0 TV: A sparse optimization method for impulse noise image restoration. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 41, 352–364.

15. Yuan, G.Z.; Ghanem, B. An exact penalty method for binary optimization based on MPEC formulation. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

16. Attouch, H.; Bolte, J.; Redont, P.; Soubeyran, A. Proximal alternating minimization and projection methods for nonconvex problems: An approach based on the Kurdyka–Łojasiewicz inequality. Math. Oper. Res. 2010, 35, 435–457.

17. Wang, Y.J.; Dong, M.M.; Xu, Y. A sparse rank-1 approximation algorithm for high-order tensors. Appl. Math. Lett. 2020, 102, 106–140.

18. Bolte, J.; Sabach, S.; Teboulle, M. Proximal alternating linearized minimization for nonconvex and nonsmooth problems. Math. Program. 2014, 146, 459–494.

19. Sun, T.; Barrio, R.; Jiang, H.; Cheng, L.Z. Convergence rates of accelerated proximal gradient algorithms under independent noise. Numer. Algorithms 2019, 81, 631–654.

20. Hu, W.; Zheng, W.; Yu, G. A Unified Proximity Algorithm with Adaptive Penalty for Nuclear Norm Minimization. Symmetry 2019, 11, 1277.

21. An, Y.; Zhang, Y.; Guo, H.; Wang, J. Compressive Sensing Based Three-Dimensional Imaging Method with Electro-Optic Modulation for Nonscanning Laser Radar. Symmetry 2020, 12, 748.

22. Ma, F. Convergence study on the proximal alternating direction method with larger step size. Numer. Algorithms 2020, 85, 399–425.

23. Pham, Q.M.; Lachmund, D.; Hào, D.N. Convergence of proximal algorithms with stepsize controls for non-linear inverse problems and application to sparse non-negative matrix factorization. Numer. Algorithms 2020, 85, 1255–1279.

24. Tiddeman, B.; Ghahremani, M. Principal Component Wavelet Networks for Solving Linear Inverse Problems. Symmetry 2021, 13, 1083.

25. Xu, Y.Y.; Yin, W.T. A block coordinate descent method for regularized multiconvex optimization with applications to nonnegative tensor factorization and completion. SIAM J. Imaging Sci. 2013, 6, 1758–1789.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).