1. Introduction

Android operates on 85% of mobile phones with over 2 billion active devices per month worldwide [

1]. The Google Play Store is the official market for Android applications (apps) and distributes over 3 million Android apps in 30 categories, including entertainment, customization, education, and financial apps [

2]. A previous study [

3] indicated that a mobile device has, on average, between 60 and 90 apps installed. In addition, an Android user spends, on average, 2 h and 15 min on apps every day. Therefore, checking the reliability of apps is a significant task. Recent research [

2] showed that the number of Android apps downloaded is increasing drastically every year. Unfortunately, 17% of Android apps were still considered to be low-quality apps in 2019 [

4]. Another study found that 53% of users would avoid using an app if the app crashed [

5]. A mobile app crash not only offers a poor user experience but also negatively impacts the app’s overall rating [

6,

7]. The inferior quality of Android apps can be attributed to insufficient testing caused by rapid development practices. Android apps are ubiquitous, operate in complex environments, and evolve under market pressure. Android developers neglect appropriate testing practices because they consider testing time-consuming, expensive, and full of repetitive tasks. Mobile app crashes can be avoided through intensive and extensive testing of mobile apps [

6]. Through a graphical user interface (GUI), mobile app testing verifies the functionality and accuracy of mobile apps before these apps are released to the market [

8,

9,

10]. Automated mobile app testing starts by generating test cases that include event sequences of the GUI components. In the mobile app environment, the test input (or test data) is based on user interaction and system interaction (e.g., app notifications). Developing GUI test cases usually takes a lot of time and effort because of their non-trivial structures and the highly interactive nature of GUIs. Android apps [

11,

12] usually possess many states and transitions, which can lead to an arduous testing process and poor testing performance for large apps. Over the past decade, Android test generation tools have been developed to automate user interaction and system interaction as inputs [

13,

14,

15,

16,

17,

18]. The purpose of these tools is to generate test cases and explore the app’s functions by employing different techniques: random-based, model-based, systematic-based, and reinforcement-learning-based. However, existing tools suffer from low code coverage [

11,

19,

20], due to the inability to explore app functions extensively because some of the app functions can only be explored through a specific sequence of events [

21]. Such tools must not only choose which GUI component to interact with but also which type of input to perform. Each type of input for each GUI component is likely to improve coverage. Coverage is an important metric to measure the efficiency of testing [

22]. Combining different granularities from instruction, method, and activity coverage is beneficial for better results in testing Android apps. The reason is that activities and methods are vital to app development, so the numeric values of activity and method coverage are intuitive and informative [

23]. Activity is the primary interface for user interaction and an activity comprises several methods and underlying code logic. Each method in every activity comprises a different number of lines of code. Instruction coverage provides information about the amount of code that has been executed. Hence, improving instruction and method coverage ensures that more of the app’s functionalities associated with each activity are explored and tested [

23,

24,

25]. Similarly, activity coverage is a necessary condition to detect crashes that can occur when interacting with the app’s UI. The more coverage the tool explores, the more likely it would discover potential crashes [

26].

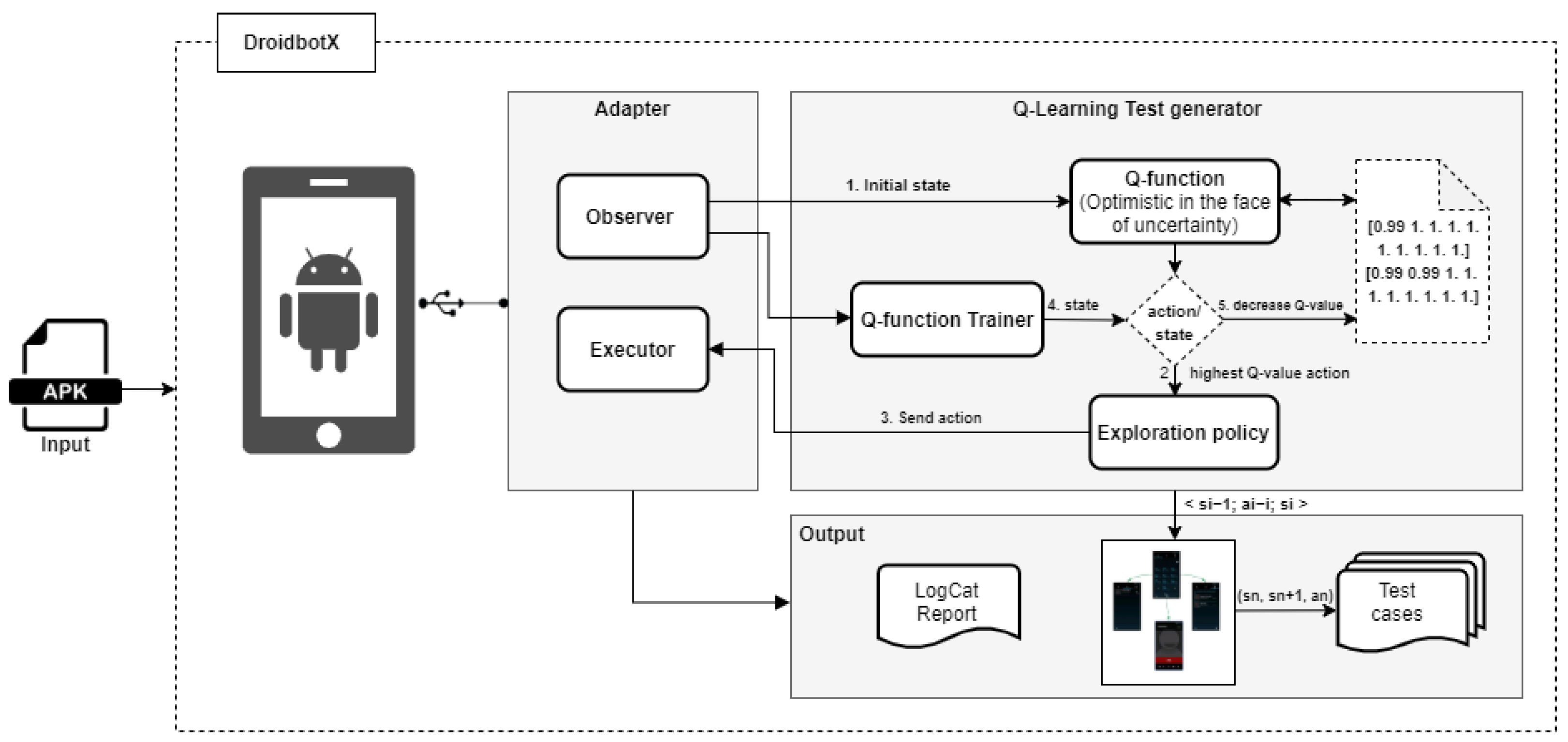

This research proposes an approach that generates a GUI test case based on the Q-Learning technique. This approach systematically selects events and guides the exploration to expose the functionalities of an application under test (AUT) to maximize instruction, method, and activity coverage by minimizing redundant execution of events.

This approach was implemented in a test tool named DroidbotX (https://github.com/husam88/DroidbotX, accessed on 9 February 2021), which is publicly available. A problem-based learning approach to teaching the public with DroidbotX is also available on the ALIEN (Active Learning in Engineering) virtual platform (https://virtual-campus.eu/alien/problems/droidbotx-gui-testing-tool/, accessed on 9 February 2021). The tool was used to evaluate the practical usefulness and applicability of our approach. DroidbotX constructs a state-transition model of an app and generates test cases. These test cases follow the sequences of events that are the most likely to explore the app’s functionalities. The proposed approach was evaluated against state-of-the-art test generation tools. DroidbotX was compared with Android Monkey [

27], Sapienz [

16], Stoat [

15], Droidbot [

28], Humanoid [

29] on 30 Android apps from the F-Droid repository [

30].

In this study, instruction coverage, method coverage, activity coverage, and crash detection were analyzed to assess the performance of the approach. DroidbotX achieved higher instruction, method, and activity coverage and detected more crashes than the other tools on the 30 subject apps. Specifically, DroidbotX achieved, on average, 51.5% instruction coverage, 57% method coverage, and 86.5% activity coverage, and triggered 18 crashes, outperforming the five other tools.

The rest of this paper is organized as follows. Section 2 describes test case generation for Android apps. Section 3 discusses reinforcement learning, focusing on Q-Learning. Section 4 presents the background of Android apps, and Section 5 discusses the related GUI testing tools. Section 6 presents the proposed approach, while Section 7 presents an empirical evaluation. Section 8 analyzes and discusses the findings. Section 9 describes threats to validity, and Section 10 concludes the paper.

3. Q-Learning

Q-learning is a type of model-free technique of reinforcement learning (RL) [

36]. RL is a branch of machine learning. Unlike other branches such as supervised and unsupervised learning, its algorithms are trained through reward and punishment while interacting with the environment. It is based on a concept from behavioral psychology, learning through direct interaction with an environment, which plays a key role in artificial intelligence. In RL techniques, a reward is observed if the agent reaches an objective. RL techniques include Actor-critic, Deep Q Network (DQN), State-Action-Reward-State-Action (SARSA), and Q-Learning. The major components of RL are the agent and the environment. The agent is an independent entity that performs unconstrained actions within the environment in order to achieve a specific goal. The agent acts on the environment and uses trial-and-error interactions to gain information about it. Along with the agent and the environment, there are four other basic concepts in an RL system: (i) policy, (ii) reward, (iii) action, and (iv) state. The state describes the present situation of the environment. Some RL systems also use a model, which mimics the behavior of the environment: for example, given the current state and action, the model might predict the resultant next state and the next reward. Models are used to plan and decide on a course of action by considering possible future situations before they are experienced. The reward is an abstract concept used to evaluate actions; it refers to the immediate feedback received after performing an action. The policy defines how the agent selects an action from a given state. It is the core of the RL agent and is sufficient to determine behavior. In general, policies may be stochastic. An action is a possible move in a particular state.

Q-Learning is used to find an optimal action-selection policy for the given AUT, where the policy sets out the rule that the agent must follow when choosing a particular action from a set of actions [37]. Each chosen action is executed immediately, which moves the agent from the current state to a new state. The agent receives a reward $r$ upon executing the action $a$. The value of the reward is measured using the reward function $R$. For the agent, the main aim of Q-Learning is to learn how to act in an optimal way that maximizes the cumulative reward. Thus, the reward is accumulated over an entire sequence of actions.

Q-Learning uses its Q-values to resolve RL problems. For each policy $\pi$, the action-value function or quality function (Q-function) $Q^{\pi}$ should be properly defined. The value $Q^{\pi}(s_t, a_t)$ is the expected cumulative reward that can be achieved by executing a sequence of actions that starts with action $a_t$ from state $s_t$ and then follows the policy $\pi$. The optimal Q-function $Q^{*}$ is the maximum expected cumulative reward achievable for a given (state, action) pair over all possible policies.

Intuitively, if $Q^{*}$ is known, the optimal strategy at each step is to take the action that maximizes the sum $r + \gamma Q^{*}(s_{t+1}, a_{t+1})$, where $r$ is the immediate reward of the current step, $t$ stands for the current time step, and hence $t+1$ denotes the next one. The discount value ($\gamma$) is introduced to control the relevance of long-term rewards relative to the immediate one.
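The role of the immediate reward and the discount value can be illustrated with the standard tabular Q-Learning update. The following is a minimal sketch rather than the update used by DroidbotX: the learning rate `ALPHA`, the value chosen for `GAMMA`, and the state and event names are assumptions introduced only for illustration.

```python
from collections import defaultdict

GAMMA = 0.9  # discount value (assumed; the text gives no concrete number)
ALPHA = 0.5  # learning rate (assumed; not defined in the text)


def q_update(q, state, action, reward, next_state, next_actions):
    """Move Q(state, action) toward reward + GAMMA * best Q-value of next_state."""
    # If the next state offers no actions, its future value is taken as 0.
    best_next = max((q[(next_state, a)] for a in next_actions), default=0.0)
    target = reward + GAMMA * best_next
    q[(state, action)] += ALPHA * (target - q[(state, action)])
    return q[(state, action)]


def greedy_action(q, state, actions):
    """Pick the action with the highest current Q-value in this state."""
    return max(actions, key=lambda a: q[(state, a)])


# Hypothetical GUI states and events; unseen pairs start at Q = 0.
q = defaultdict(float)
q_update(q, "s0", "click_ok", 1.0, "s1", ["back", "scroll"])
```

A discount value close to 0 makes the agent favor immediate rewards, while a value close to 1 gives more weight to rewards reachable only through longer event sequences.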

Figure 1 presents the RL mechanism in the context of Android app testing. In automated GUI testing, the AUT is the environment; the state is the set of actions available on the AUT screen. The GUI actions are the set of actions available in the current state of the environment, and the testing tool is the agent. Initially, the testing tool has no knowledge of the AUT. As the tool generates and executes test input events through trial-and-error interaction, its knowledge of the AUT is updated to find a policy that facilitates systematic exploration and efficient future action selection. This exploration generates event sequences that can be used as test cases.

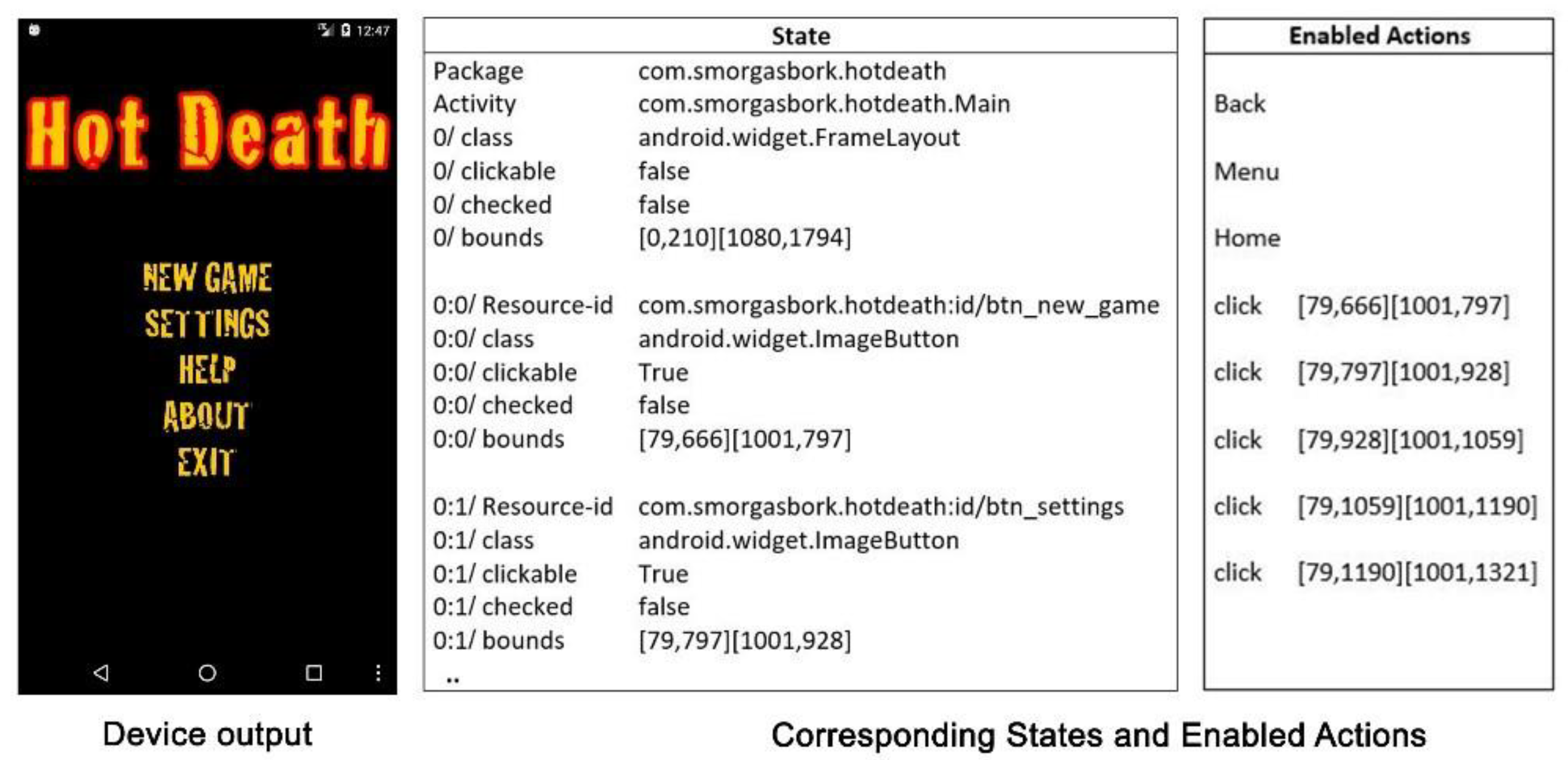

4. Android Apps Execution

There are four key components of an Android app: (i) activities, (ii) services, (iii) broadcast receivers, and (iv) content providers. Each component represents a point through which the user or the system communicates with the app. These components must be declared in the corresponding XML (eXtensible Markup Language) file. The Android app manifest is an essential XML file stored in the root directory of the app’s source as AndroidManifest.xml. When the app is compiled, the manifest file is converted into a binary format. This file provides the necessary details about the app to the Android system, such as the package name and App ID, the minimum level of API (application programming interface) required, the list of mandatory permissions, and the hardware specifications.
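As an illustration of how the declared components can be read from a manifest, the sketch below parses a plain-text (source) AndroidManifest.xml with Python's standard library. The manifest content, package name, and component names are hypothetical, and the sketch assumes the uncompiled XML form rather than the binary format found inside an APK.

```python
import xml.etree.ElementTree as ET

# Namespace used by android:* attributes in manifest files.
ANDROID_NS = "http://schemas.android.com/apk/res/android"

# Hypothetical manifest, for illustration only.
MANIFEST = """<manifest xmlns:android="http://schemas.android.com/apk/res/android"
    package="com.example.demo">
    <uses-sdk android:minSdkVersion="19" />
    <uses-permission android:name="android.permission.INTERNET" />
    <application>
        <activity android:name=".MainActivity" />
        <activity android:name=".SettingsActivity" />
        <service android:name=".SyncService" />
    </application>
</manifest>"""


def declared_components(manifest_xml):
    """Return the package name and the declared components, grouped by kind."""
    root = ET.fromstring(manifest_xml)
    name_attr = f"{{{ANDROID_NS}}}name"
    app = root.find("application")
    result = {"package": root.get("package")}
    for tag in ("activity", "service", "receiver", "provider"):
        elements = app.findall(tag) if app is not None else []
        result[tag] = [el.get(name_attr) for el in elements]
    return result


info = declared_components(MANIFEST)
print(info["activity"])
```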

Activity is the interface layer of the application that the user interacts with. Each activity represents a group of layouts such as the linear layout, which organizes the screen items horizontally or vertically. The interface includes GUI elements, known as widgets or controls, such as buttons, text boxes, search bars, switches, and number pickers. These elements allow users to interact with the apps. Activities are managed as task stacks within the system. When an app is launched in the Android system, a new activity starts by default. It is positioned at the top of the current stack and automatically becomes the running activity. The previous activity remains in the stack just below it and does not come back to the foreground until the new activity exits. The activity at the top of the stack is the one shown on the screen. Activity is the primary target of testing tools for Android apps, as the user navigates through the screens. The complete lifecycle of an activity is described by the following states: created, paused, resumed, and destroyed. The corresponding lifecycle methods are invoked whenever the activity changes state. The activity lifecycle is tightly coupled with the Android framework, which is managed by an essential service called the Activity manager [

38].
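The stack behavior described above can be pictured with a toy model. This is only a sketch of the last-in, first-out ordering; the class and activity names are hypothetical and it does not use or reproduce the Android API.

```python
class BackStack:
    """Toy model of a task's activity stack (not the Android API)."""

    def __init__(self):
        self._stack = []

    def start(self, activity):
        """A newly started activity goes on top and becomes the running one."""
        self._stack.append(activity)

    def finish(self):
        """The top activity exits; the one beneath returns to the foreground."""
        return self._stack.pop()

    @property
    def running(self):
        """The activity currently in the foreground, or None if the stack is empty."""
        return self._stack[-1] if self._stack else None


stack = BackStack()
stack.start("MainActivity")
stack.start("SettingsActivity")  # MainActivity stays in the stack, just below
```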

The activity comprises a set of views and fragments that present information to the user while interacting with the application. A fragment is a class that contains a portion of the user interface or behavior of the app, which can be placed as part of an activity. Fragments support more dynamic and flexible user interface (UI) designs on large screens such as tablets. They were introduced in Android at API level 11. A fragment must always be embedded in an activity, and the fragment’s lifecycle is directly affected by the lifecycle of the host activity. Fragments inside an activity are stopped if the activity is stopped and destroyed if the activity is destroyed.

8. Results

In this section, the research questions are answered by measuring and comparing four aspects: (i) instruction coverage, (ii) method coverage, (iii) activity coverage, and (iv) the number of detected crashes achieved by each testing tool on the selected apps in our experiments.

Table 3 shows the results obtained from the six testing tools. The gray background cells in Table 3 indicate the maximum value achieved during the test. The percentage value is the rounded-up value obtained from the average of the five iterations of the tests performed on each AUT.

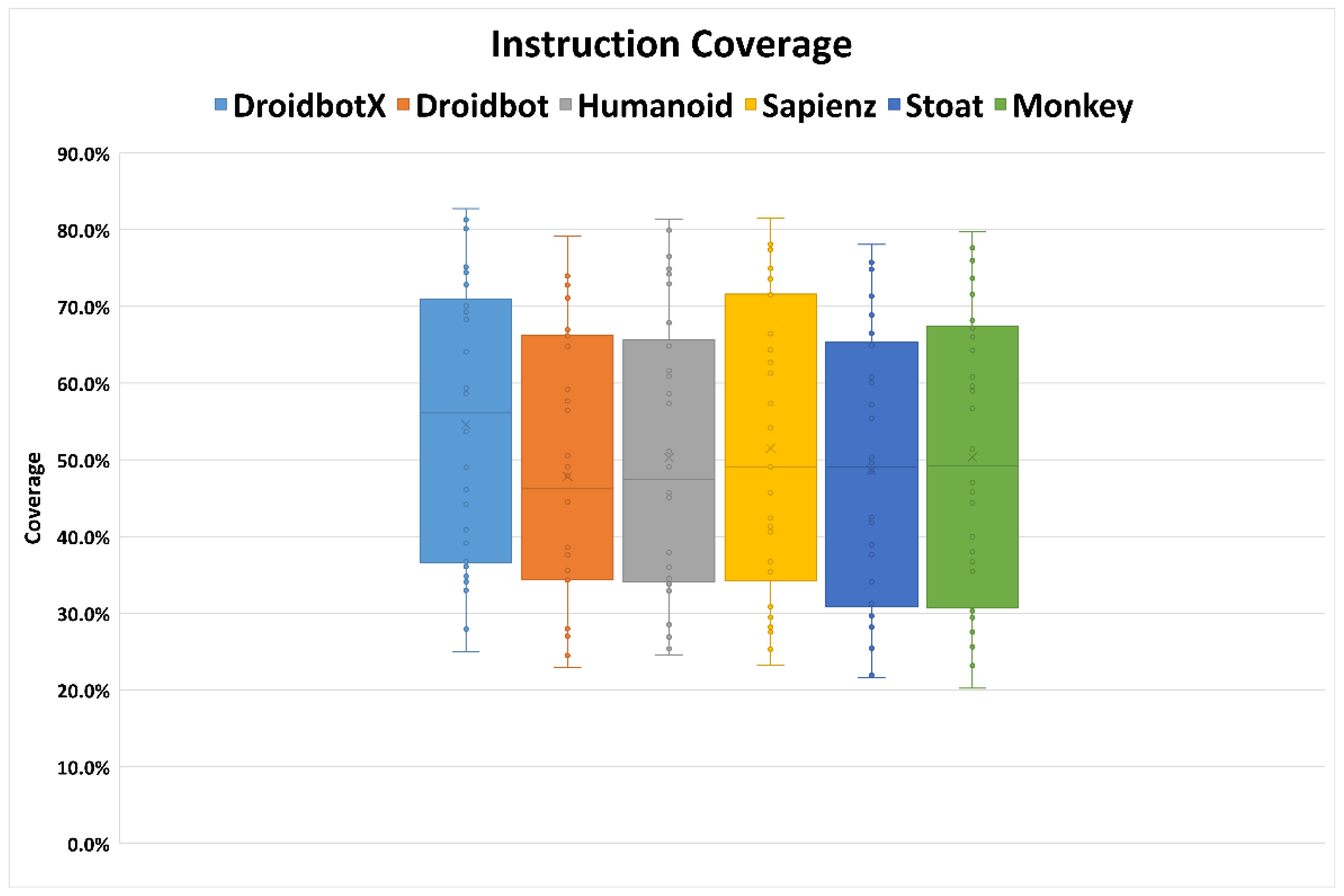

RQ.1: How does the coverage achieved by DroidbotX compare to the state-of-the-art tools?

- (1) Instruction coverage: The overall comparison of instruction coverage achieved by testing tools on selected Android apps is shown in Table 3. On average, DroidbotX achieved 51.5% instruction coverage, which is the highest across the compared tools. It achieved the highest value on 9 of 30 apps (including four ties, i.e., where DroidbotX covered the same number of instructions as another tool) compared to other tools. Sapienz achieved 48.1%, followed by Android Monkey (46.8%), Humanoid (45.8%), Stoat (45%), and Droidbot (45%).

Figure 5 presents the boxplots, where x indicates the mean of the final instruction coverage results across target apps. The boxes provide the minimum, mean, and maximum coverage achieved by the tools. The better results of DroidbotX can be explained by its ability to accurately identify which parts of the app are inadequately explored. DroidbotX explores the UI components by checking all actions available in each state and avoiding already explored actions to maximize coverage. In comparison, Humanoid achieved a 45.8% average value and had the highest coverage on 4 out of 30 apps due to its ability to prioritize critical UI components. In each state, Humanoid chooses from the 10 available actions that human users are most likely to perform.

Android Monkey’s coverage was close to Sapienz’s coverage during a one-hour test. Sapienz uses Android Monkey to generate events and uses an optimized evolutionary algorithm to increase coverage. Stoat and Droidbot achieved lower coverage than the other four tools. First, Droidbot explores UIs in depth-first order. Although this greedy strategy can reach deep UI pages at the beginning, it may get stuck because the order of event execution is fixed at runtime. Second, Droidbot does not explicitly revisit previously explored states, so it may fail to reach new code that can only be reached by different event sequences.

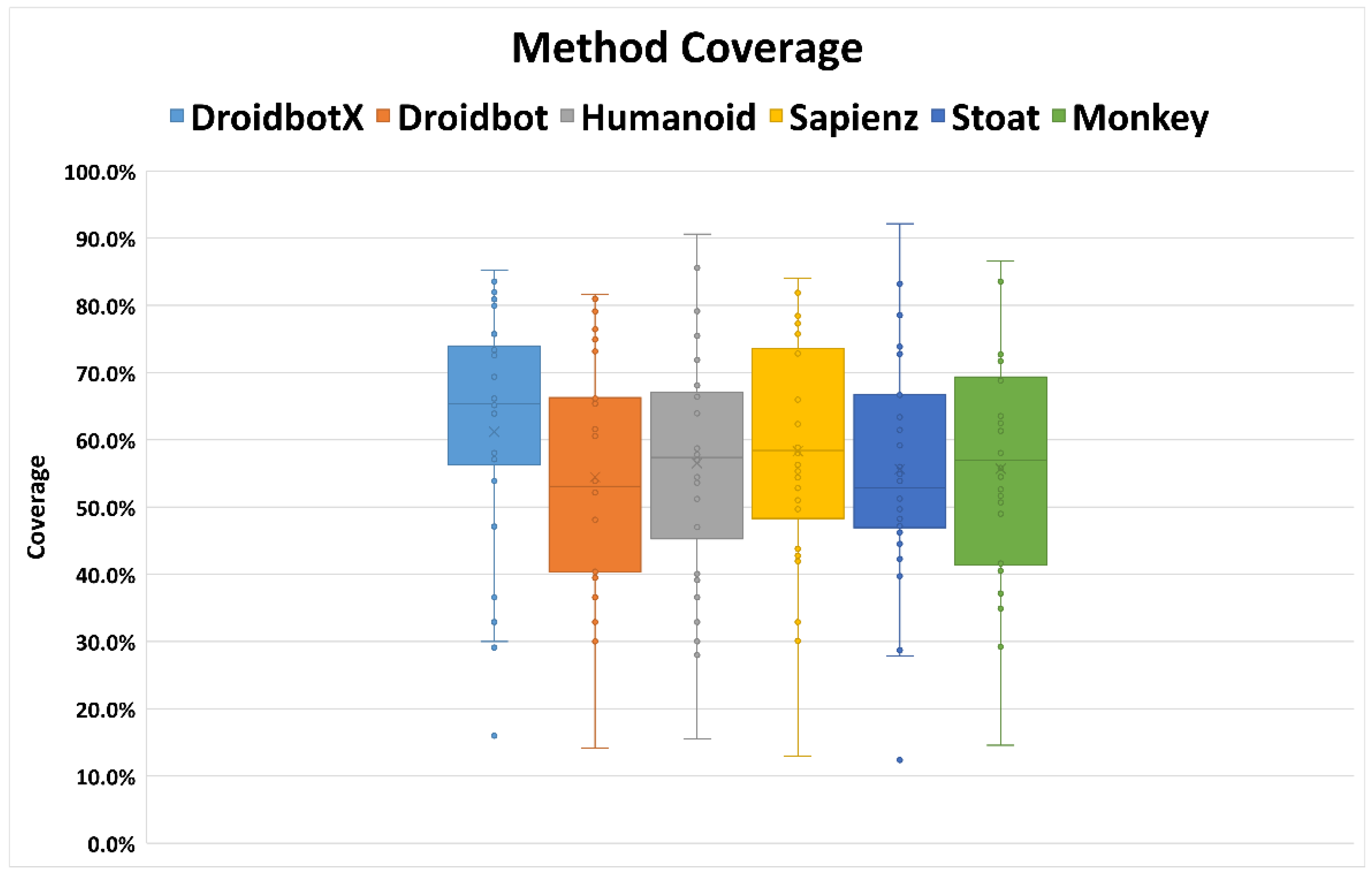

- (2) Method coverage: DroidbotX significantly outperformed state-of-the-art tools in method coverage with an average value of 57%. The highest value was achieved on 9 out of 30 apps (including three ties where the tool covered the same method coverage as another tool).

Table 3 shows that the instruction coverage obtained by the tools is lower than the method coverage. This indicates that covering a method does not imply executing all the statements in that method. On average, Sapienz, Android Monkey, Humanoid, Stoat, and Droidbot achieved 53.7%, 52.1%, 51.2%, 50.9%, and 50.6% method coverage, respectively. Stoat and Droidbot did not reach 50% method coverage on 10 of 30 apps after five rounds of testing. In contrast, DroidbotX achieved at least 50% method coverage on 24 of the apps tested. In comparison, Android Monkey obtained less than 50% method coverage on eight apps. This study concluded that the AUT functionalities can be explored using the observe-select-execute strategy and tested on standard equipment. Sapienz displayed the best method coverage on 5 out of 30 apps (including four ties where the tool achieved the same method coverage as another tool). Sapienz’s coverage was significantly higher for some apps such as “WLAN Scanner”, “HotDeath”, “ListMyApps”, “SensorReadout”, and “Terminal emulator”. These apps have functionality that requires complex interactions with validated text input fields. Sapienz uses the Android Monkey input generation, which continuously generates events without waiting for the effect of the previous event. Moreover, Sapienz and Android Monkey can generate several events, broadcasts, and text inputs that are not supported by other tools. DroidbotX obtained the best results for several other apps, especially “A2DPVolume”, “Blockinger”, “Ethersynth”, “Resdicegame”, “Weather Notification”, and “World Clock”. The DroidbotX approach assigns Q-values to encourage the execution of actions that lead to new or partially explored states. This enables the approach to repeatedly execute high-value action sequences and revisit the subset of GUI states that provides access to most of the AUT’s functionality.

Figure 6 presents the boxplots, where x indicates the mean of the final method coverage results across target apps. DroidbotX had the best performance compared to state-of-the-art tools. Android Monkey is used as a reference for evaluation by most Android testing tools; it can be considered a baseline because it comes with the Android SDK and is popular among developers. Android Monkey obtained lower coverage than DroidbotX because of its redundant and random exploratory approach.

- (3)

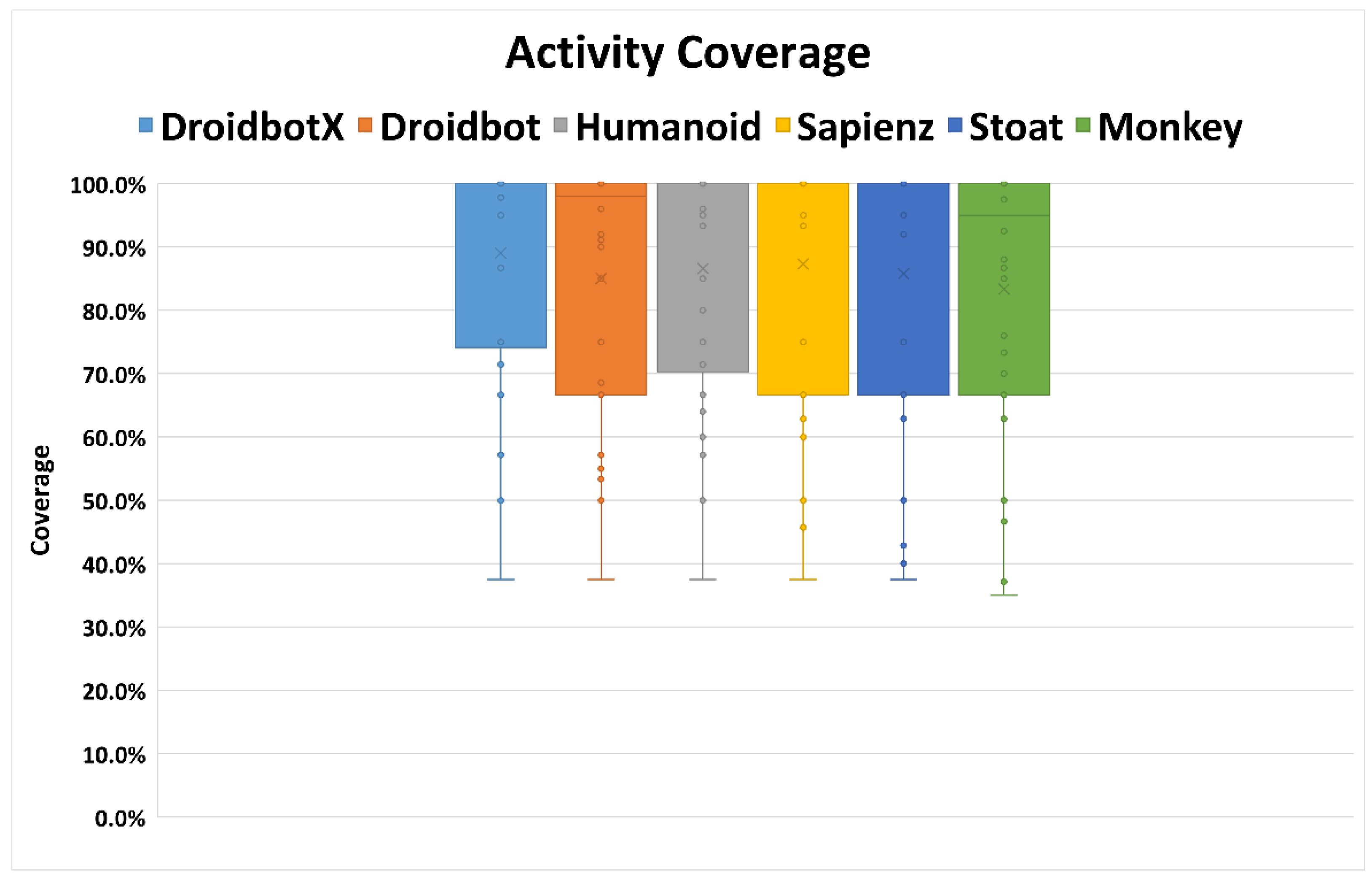

Activity coverage: the activity coverage is measured by intermittent observation of the activity stack on the AUT and recording all activities listed down in the android manifest file. The activity coverage metric was chosen because, once DroidbotX has reached an activity, it can explore most of the activity’s actions. The results determine activity coverage differences between DroidbotX and other state-of-the-art tools. The resulting average value of the tools revealed that the activity coverage performed better than instruction and method coverage, as shown in

Table 3.

As with instruction and method coverage, DroidbotX outperformed the other tools in activity coverage. DroidbotX has an average coverage of 86.5%, which was best achieved by the “Alarm Clock” app (including 28 ties, i.e., where DroidbotX covered the same number of activities as another tool). DroidbotX outperformed other tools because its reward function keeps it from explicitly revisiting previously explored states. It was followed by Sapienz and Humanoid, with average activity coverage of 84% and 83.3%, respectively. Stoat outperformed Android Monkey with an average activity coverage of 83%, owing to an intrusive null intent fuzzing that can start an activity with empty intents. All tools under study achieved more than 50% activity coverage on 25 apps, and four testing tools reached 100% activity coverage on 15 apps. Android Monkey, however, achieved less than 50% activity coverage on about three apps. Android Monkey achieved the lowest activity coverage, with an average of 80%.

Figure 7 shows the variance of the mean activity coverage over 5 runs across all 30 apps for each tool. The horizontal axis shows the tools used for the comparison. The vertical axis shows the percentage of activity coverage. Activity coverage was higher than the instruction and method coverage. DroidbotX, Droidbot, Humanoid, Sapienz, Stoat, and Android Monkey each reached a maximum of 100% coverage, with mean coverage of 89%, 85%, 86.6%, 87.3%, 85.8%, and 83.4%, respectively. All tools were able to cover above 50% of the activities. Although Android Monkey implemented more types of events than other tools, it achieved the least activity coverage. Android Monkey generates random events at random positions in the app activities. Therefore, its activity coverage can differ significantly from app to app and may be affected by the number of event sequences generated. To sum up, the high coverage of DroidbotX was mainly due to its ability to perform meaningful sequences of actions that could drive the app into new activities.
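The activity coverage metric used above, the share of manifest-declared activities that were observed on the activity stack during a run, can be sketched as follows. The function and activity names are hypothetical, and the sketch assumes that observations outside the declared set (for example, system activities) are ignored.

```python
def activity_coverage(declared, observed):
    """Percentage of declared activities seen at least once during a run."""
    declared_set = set(declared)
    if not declared_set:
        return 0.0
    # Repeated observations and activities not declared by the app do not count.
    visited = declared_set & set(observed)
    return 100.0 * len(visited) / len(declared_set)


# Hypothetical run: four declared activities, two of them reached.
declared = ["Main", "Settings", "About", "Help"]
observed = ["Main", "Settings", "Main", "SystemDialog"]
print(activity_coverage(declared, observed))
```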

RQ.2: How effective is DroidbotX in detecting unique app crashes compared to the state-of-the-art tools?

A crash is uniquely identified by the error message and the crashing activity. LogCat [

71] is used to repeatedly check the crashes encountered during the AUT execution. LogCat is a tool that uses the command-line interface to dump logs of all the system-level messages. Log reports were manually analyzed to identify unique crashes from the error stack following the Su et al. [

15] protocol. First, crashes unrelated to the app’s execution were filtered out by retaining only exceptions containing the app’s package name and discarding crashes of the tool itself and initialization errors of the apps in the Android emulator. Second, a hash was computed over the sanitized stack trace of each crash to identify unique crashes. Different crashes should have a different stack trace and thus a different hash. Each unique crash exception is recorded per tool, and the execution process is repeated five times to reduce the effect of randomness on the results. The number of unique app crashes is used as a measure of each tool’s crash detection performance. Crashes detected by tools on different versions of Android were not compared via normalized stack traces because different versions of Android have different framework code. In particular, Android 6.0 uses the ART runtime while Android 4.4 uses the Dalvik VM, and different runtime environments have different thread entry methods. Based on

Figure 8, each of the tools compared complements the others in crash detection and has its advantages. DroidbotX triggered an average of 18 unique crashes in 14 apps, followed by Sapienz (16), Stoat (14), Droidbot (12), Humanoid (12), and Android Monkey (11).
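The two filtering steps of this protocol can be sketched as below. The sanitization rules (keeping only the exception line and the frames from the app package, and stripping source line numbers), the use of SHA-1, and the package and class names are assumptions made for illustration; the protocol followed in the study may differ in these details.

```python
import hashlib
import re


def sanitize(stack_trace, package):
    """Keep the exception line and app-package frames; strip source line numbers."""
    lines = [ln.strip() for ln in stack_trace.strip().splitlines()]
    kept = [lines[0]] + [ln for ln in lines[1:] if package in ln]
    return "\n".join(re.sub(r":\d+\)", ")", ln) for ln in kept)


def unique_crashes(traces, package):
    """Map the hash of each sanitized trace to the first raw trace that produced it."""
    seen = {}
    for trace in traces:
        if package not in trace:
            continue  # step 1: drop crashes unrelated to the app under test
        digest = hashlib.sha1(sanitize(trace, package).encode("utf-8")).hexdigest()
        seen.setdefault(digest, trace)  # step 2: one entry per distinct hash
    return seen


# Hypothetical traces: the first two differ only in a framework frame.
TRACES = [
    "java.lang.NullPointerException\n"
    "  at com.example.app.Main.onCreate(Main.java:42)\n"
    "  at android.app.Activity.performCreate(Activity.java:6000)",
    "java.lang.NullPointerException\n"
    "  at com.example.app.Main.onCreate(Main.java:42)\n"
    "  at android.app.Activity.performCreate(Activity.java:6100)",
    "java.lang.IllegalStateException\n"
    "  at com.other.tool.Runner.run(Runner.java:1)",
    "java.lang.IllegalArgumentException\n"
    "  at com.example.app.Clock.onPause(Clock.java:10)",
]
crashes = unique_crashes(TRACES, "com.example.app")
```

Dropping framework frames in this sketch is one way to keep the hash stable when only framework code differs; whether that is appropriate depends on how much of the trace is considered part of a crash's identity.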

As with activity coverage, Android Monkey performed worst: it has the least capacity to detect crashes because its exploratory approach generates many ineffective and redundant events.

Figure 8 summarizes the distribution of crashes by the six testing tools. Most of the bugs are caused by accessing null references. Common reasons are that developers forget to initialize references, access references that have been cleaned up, skip checks of null references, and fail to verify certain assumptions about the environments [

57]. DroidbotX is the only tool to detect an IllegalArgumentException in the “World Clock” app because it manages the exploration of states and systematically sends back-button events that may change the activity lifecycle. This bug is caused by an incorrect redefinition of the onPause method of an activity. Android apps may behave incorrectly due to mismanagement of the activity’s lifecycle. Sapienz uses Android Monkey to generate an initial population of event sequences (including both user and system events) prior to genetic optimization. This allows Sapienz to trigger other types of exceptions, including ArrayIndexOutOfBoundsException and ClassCastException. For the “Alarm Clock” app, DroidbotX and Droidbot detected a crash on an activity that was not discovered by other tools in the five runs. We manually inspected several randomly selected crashes to confirm that they also appear in the original APK, and found no discrepancy between the behaviors of the original and the instrumented APK.

RQ.3: How does DroidbotX compare to the state-of-the-art tools in terms of test sequence length?

The effect of event sequence length on test coverage and crash detection was investigated. The event sequence length generally indicates the number of steps required by a test input generation tool to detect a crash. It is important because of its significant effect on time, testing effort, and computational cost.

Figure 9 depicts the progressive coverage of each tool over the time budget used (i.e., 60 min). The progressive average coverage for all 30 apps was calculated every 10 min for each of the test generation tools in the study, and a direct comparison of the final coverage was reported. In the first 10 min, the coverage of all testing tools increased rapidly, as the apps had just started. At 30 min, DroidbotX achieved the highest coverage value compared to other tools. The reason is that the UCB exploration strategy implemented in DroidbotX selects events based on their reward and Q-value, which eventually leads it to select and execute previously unexecuted or less executed events, thus aiming for high coverage.
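The tendency of a UCB strategy to favor unexecuted or rarely executed events can be seen in a small sketch. It uses the textbook UCB1 bonus added to the Q-value; the exact scoring formula used by DroidbotX is not given in this section, so the bonus term, the constant `c`, and the event names are assumptions.

```python
import math


def ucb_select(q_values, counts, c=1.0):
    """Pick an event for the current state using Q-value plus a UCB1-style bonus.

    q_values: dict mapping event -> current Q-value in this state
    counts:   dict mapping event -> number of times it has been executed here
    """
    # Events never executed in this state are tried first.
    untried = [e for e in q_values if counts.get(e, 0) == 0]
    if untried:
        return untried[0]
    total = sum(counts[e] for e in q_values)

    def score(event):
        # The bonus shrinks as an event is executed more often.
        return q_values[event] + c * math.sqrt(math.log(total) / counts[event])

    return max(q_values, key=score)


# Hypothetical state with two events: "menu" has been executed far more often.
choice = ucb_select({"menu": 1.0, "search": 0.9}, {"menu": 50, "search": 1})
```

With `c = 0` the selection reduces to a purely greedy choice on Q-values; larger values of `c` push the tool toward less executed events.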

Sapienz’s coverage increased rapidly at first, when the apps had just started and all UI states were new, but it could not exceed the peak it reached after 40 min. Sapienz has a high tendency to explore visited states, which could generate more event sequences. Stoat, Droidbot, and Humanoid had almost the same result and had better activity coverage than Android Monkey. Android Monkey could not exceed the peak it reached after 50 min. The reason is that a random approach generates the same set of redundant events, which reduces its ability to explore activities. It is essential to highlight that these redundant events produced insignificant coverage improvement as the time budget increased.

Table 4 shows that the Q-Learning approach implemented in DroidbotX achieved 51.5% instruction coverage, 57% method coverage, 86.5% activity coverage, and triggered 18 crashes within the shortest event sequence length compared to other tools.

The results show that adapting Q-Learning with the UCB strategy can significantly improve the effectiveness of the generated test cases. DroidbotX generated a sequence length of 50 events per AUT state with an average of 623 events per run across all apps (which is smaller than the default maximum sequence length of Sapienz). DroidbotX completed exploration before reaching the maximum number of events (set to 1000) within the time limit. Sapienz produced 6000 events and optimized event sequence lengths through the generation of 500 events per AUT state. It thus created the largest number of events after Android Monkey. However, its coverage improvement was close to that of Humanoid and Droidbot, which generated a smaller number of events. Both Humanoid and Droidbot generated 1000 events per hour. Sapienz relies on Android Monkey, which requires many events, including many redundant ones, to achieve high coverage. Hence, the coverage gained by Android Monkey increases only slightly as the number of events increases. Thus, a long event sequence length had only a minor positive effect on coverage and crash detection.

Table 5 shows the statistics of models built by Droidbot, Humanoid, and DroidbotX. These tools use the UI transition graph to record state transitions. The graph model enables DroidbotX to manage the exploration of states systematically and avoid being trapped in a certain state, which also helps to minimize unnecessary transitions. DroidbotX generates an average of 623 events to construct the graph model, while Droidbot and Humanoid generate averages of 969 and 926 events, respectively. Droidbot cannot exhaustively explore app functions due to its simple exploration strategies. The depth-first systematic strategy used in Droidbot is surprisingly much less effective than the random strategy since it visits UIs in a fixed order and spends much time restarting the app when no new UI components are found. Stoat requires more time for test execution because its model construction in the initial phase is time-consuming. Model-free tools such as Android Monkey and Sapienz can easily be misled during exploration because they lack connectivity information between GUIs [

54]. The model constructed by DroidbotX is still not complete since it cannot capture all possible behaviors during exploration, which is still an important research goal on GUI testing [

15]. Events such as system events and events coming from motion sensors (e.g., accelerometer, gyroscope, and magnetometer) would introduce non-deterministic behavior if they were not properly modeled. Motion sensors are used for gesture recognition, which refers to recognizing meaningful body motions, including the movement of the fingers, hands, arms, head, face, or body, performed with the intent to convey meaningful information or to interact with the environment [

72]. DroidbotX will be extended in the future to include more system events.
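The UI transition graph discussed in this section can be pictured as a mapping from each GUI state to the events executed there and the states they led to. The sketch below is a simplified illustration with hypothetical class, state, and event names; it is not the data structure used by Droidbot, Humanoid, or DroidbotX.

```python
from collections import defaultdict


class TransitionGraph:
    """Minimal UI transition graph: state -> {event: next_state}."""

    def __init__(self):
        self.edges = defaultdict(dict)  # transitions observed so far
        self.events = defaultdict(set)  # events seen on each state's screen

    def add_state(self, state, available_events):
        """Record the events available on a newly observed state."""
        self.events[state].update(available_events)

    def add_transition(self, state, event, next_state):
        """Record that executing event in state led to next_state."""
        self.events[state].add(event)
        self.edges[state][event] = next_state

    def unexplored(self, state):
        """Events seen on this state that have not been executed yet."""
        return sorted(self.events[state] - set(self.edges[state]))


graph = TransitionGraph()
graph.add_state("home", ["open_menu", "search"])
graph.add_transition("home", "open_menu", "menu")
```

Keeping the set of unexplored events per state is what lets a model-based tool steer toward untested parts of the app instead of repeating transitions it has already recorded.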