2.3. Construction of SU(2): The Geometrical Meaning of Spinors

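The idea behind the meaning of a spinor of SU(2) is that we will no longer rotate vectors, but that we will “rotate” rotations. To explain what we mean by this, we start from the following diagram for a group $G$:

$$
\begin{array}{c|ccccc}
\circ & g_1 & g_2 & \cdots & g_k & \cdots \\ \hline
g_1 & g_1\circ g_1 & g_1\circ g_2 & \cdots & g_1\circ g_k & \cdots \\
\vdots & \vdots & \vdots & & \vdots & \\
\rightarrow\; g_j & g_j\circ g_1 & g_j\circ g_2 & \cdots & g_j\circ g_k & \cdots \\
\vdots & \vdots & \vdots & & \vdots &
\end{array}
\qquad (2)
$$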

This diagram tries to illustrate a table for group multiplication. Admittedly, we cannot write down such a table for an infinite group; we use it only to make the ideas more vivid. Such a table tells us everything we need to know about the group: one can check on it that the group axioms are satisfied, and one can do all the necessary calculations. For the rotation group, we do not need to know how the rotations work on vectors. We might need to know this to construct the table, but once this task has been completed, we can forget about the vectors. The infinite table in Equation (2) defines the whole group structure. When we look at one line of the table (the one flagged by the arrow), we see that we can conceive a group element $g_j$ in a hand-waving way as a “function” $g_j: G \to G$ that works on the other group elements $g_k$ according to $g_j: g_k \mapsto g_j \circ g_k$. Thus, we can identify $g_j$ with a function. More rigorously, we can say that we represent the group element $g_j$ by a group automorphism $T_{g_j} \in F(G,G)$, $T_{g_j}: g_k \mapsto g_j \circ g_k$, where $F(G,G)$ is the set of functions from $G$ to $G$. (Strictly speaking, $T_{g_j}$ is a left translation, i.e., a bijection of $G$ onto itself as in Cayley's theorem, rather than a structure-preserving automorphism; we keep the term “automorphism” in this loose sense.) A rotation operates in this representation not on a vector, but on other rotations. We “turn rotations” instead of vectors. This is a construction that always works: the automorphisms $T_{g_j}$ of a group $G$ are themselves a group that is isomorphic to $G$, such that they can be used to represent $G$.
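The construction can be tried out on a small finite group, for which the table can be written down completely. The following minimal sketch uses the six permutations of three objects as a hypothetical stand-in for $G$ (this choice and all function names are ours, for illustration only). It reads each row of the table as a function on $G$ and checks that these functions compose exactly like the group elements that flag them.

```python
from itertools import permutations

# Hypothetical stand-in for G: the six permutations of (0, 1, 2).
G = list(permutations(range(3)))

def compose(g, h):
    """Return the product g*h, i.e. apply h first and then g."""
    return tuple(g[h[i]] for i in range(3))

def table_row(g):
    """The row of the multiplication table flagged by g, read as a map G -> G."""
    return {h: compose(g, h) for h in G}

def compose_rows(T1, T2):
    """Compose two rows as functions: apply T2 first, then T1."""
    return {h: T1[T2[h]] for h in G}

rows = {g: table_row(g) for g in G}

# Each row merely reshuffles the group elements (it is a bijection of G).
rows_are_bijections = all(sorted(T.values()) == sorted(G) for T in rows.values())

# Composing the rows of g1 and g2 gives the row of the product g1*g2.
rows_multiply_like_G = all(
    compose_rows(rows[g1], rows[g2]) == rows[compose(g1, g2)]
    for g1 in G for g2 in G
)

# Different group elements flag different rows, so no information is lost.
rows_are_distinct = len({tuple(sorted(T.items())) for T in rows.values()}) == len(G)
```

No vector on which the permutations would act is needed anywhere in this check: the rows act on group elements only.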

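It can be easily seen that this idea regarding the meaning of a spinor is true. As we will show below in Equation (8), the general form of a rotation matrix $\mathbf{R}$ in SU(2) is:

$$\mathbf{R} = \begin{pmatrix} a & -b^{*} \\ b & a^{*} \end{pmatrix}, \quad \text{where } aa^{*} + bb^{*} = 1. \qquad (3)$$

A $2\times1$ spinor $\boldsymbol{\xi}$ can then be seen to be just a stenographic notation for a $2\times2$ SU(2) rotation matrix $\mathbf{R}$ by taking its first column $\hat{c}_1(\mathbf{R})$:

$$\mathbf{R} = \begin{pmatrix} a & -b^{*} \\ b & a^{*} \end{pmatrix} \;\longrightarrow\; \boldsymbol{\xi} = \hat{c}_1(\mathbf{R}) = \begin{pmatrix} a \\ b \end{pmatrix}. \qquad (4)$$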

This is based on the fact that the first column of $\mathbf{R}$ already contains the whole information about $\mathbf{R}$ and that $\hat{c}_1(\mathbf{R}_2\mathbf{R}_1) = \mathbf{R}_2\,\hat{c}_1(\mathbf{R}_1)$. Instead of $\mathbf{R} = \mathbf{R}_2\mathbf{R}_1$, we can then write $\boldsymbol{\xi} = \mathbf{R}_2\boldsymbol{\xi}_1$ without any loss of information. Additionally, we can alternatively use the second column $\hat{c}_2(\mathbf{R})$ as a shorthand and as a (so-called) conjugated spinor. (In [23] it is explained that the conjugated spinor corresponds to a reversal. But in this paper we will hardly pay attention to the conjugated spinors. We will, almost all the time, focus our attention on the first column as the representation of a rotation.) We have in this way discovered the well-defined geometrical meaning of a spinor. As already stated, it is just a group element. This is all that spinors in SU(2) are about. Spinors code group elements. Within SU(2), $2\times2$ rotation matrices operate on $2\times1$ spinor matrices. These spinor matrices themselves represent the rotations that are “rotated”. Explaining that a spinor in SU(2) is a rotation is in our opinion far more illuminating than describing it as the square root of an isotropic vector according to the textbook doctrine. It is this insight that breaks the deadlock of our incomprehension. We will explain the textbook relationship between spinors and square roots of isotropic vectors in Section 2.7.
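The statement that a column loses no information can be checked numerically. The sketch below assumes the matrix form $\begin{pmatrix} a & -b^{*} \\ b & a^{*}\end{pmatrix}$ with $aa^{*}+bb^{*}=1$; the helper names and the two sample matrices are our own illustrative choices. It verifies that a matrix acting on the first column of another yields the first column of their product, and that the full product can be rebuilt from that column alone.

```python
import math

def su2(a, b):
    """SU(2) matrix [[a, -b*], [b, a*]] rebuilt from its first column (a, b)."""
    return [[a, -b.conjugate()], [b, a.conjugate()]]

def normalized(a, b):
    """Rescale (a, b) so that |a|^2 + |b|^2 = 1."""
    norm = math.sqrt(abs(a) ** 2 + abs(b) ** 2)
    return a / norm, b / norm

def matmul(X, Y):
    """Product of two 2x2 matrices stored as nested lists."""
    return [[sum(X[i][k] * Y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def first_column(R):
    return (R[0][0], R[1][0])

def act_on_column(R, xi):
    """2x2 matrix R acting on the 2x1 column xi."""
    return (R[0][0] * xi[0] + R[0][1] * xi[1],
            R[1][0] * xi[0] + R[1][1] * xi[1])

# Two arbitrary illustrative rotation matrices.
R1 = su2(*normalized(0.3 + 0.4j, -0.5 + 0.2j))
R2 = su2(*normalized(1.0 - 2.0j, 0.7 + 0.1j))

xi1 = first_column(R1)            # spinor as shorthand for R1
product = matmul(R2, R1)          # "rotating the rotation" R1 by R2
xi = act_on_column(R2, xi1)       # the same operation on the shorthand

column_matches = all(abs(u - v) < 1e-12
                     for u, v in zip(xi, first_column(product)))

rebuilt = su2(*xi)                # recover the whole matrix from the column
matrix_recovered = all(abs(rebuilt[i][j] - product[i][j]) < 1e-12
                       for i in range(2) for j in range(2))
```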

Stating that a spinor in SU(2) is a rotation is actually an abus de langage. A spinor is, just like an SO(3) rotation matrix, an unambiguous representation of a rotation within the group theory. But due to the isomorphism we can merge the concepts and call the matrix or the spinor a rotation, in complete analogy with what we proposed in Section 1.4. For didactical reasons, we can consider a spinor as conceptually equivalent to a system of “generalized coordinates” for a rotation.

We should not be surprised by the removal of the vectors from the formalism in favour of the group elements themselves, as described above. Group theory is all about this kind of abstraction. We try to obtain general results from just a few abstract axioms for the group elements, without bothering about their intuitive meaning in the more specific context of a practical realization. Additionally, as far as representations are concerned, we do not have to get back to a specific context: we always have a representation at hand in the form of group automorphisms. This is a well-known fact, but in its general formulation it looks very abstract indeed. Here, we can see what this abstract representation in terms of automorphisms intuitively means in the specific example of the rotation group. The idea is then no longer abstract: we can identify the $2\times2$ matrices $\mathbf{R}_j$ of SU(2) with the group automorphisms $T_{g_j}$, and the $2\times1$ rotation matrices $\boldsymbol{\xi}_k$ with the group elements $g_k$, such that $T_{g_j}: g_k \mapsto g_j \circ g_k$ is algebraically represented by $\boldsymbol{\xi}_k \mapsto \mathbf{R}_j\boldsymbol{\xi}_k$.

Remark 4. From this, it must already be obvious that spinors in SU(2) do not build a vector space, as we stressed in Section 2.2. The three-dimensional rotation group is not a vector space, but a curved manifold (because the group is non-abelian). We cannot try to find a meaning for a linear combination $\sum_j c_j\mathbf{R}_j$ of SU(2) matrices $\mathbf{R}_j$ in analogy to what we can do with $3\times3$ matrices in SO(3), where we can fall back on the fact that $3\times1$ matrices of the image space correspond to elements of a vector space $\mathbb{R}^3$ or $\mathbb{C}^3$. The reason for this is that the spinors do not build a vector space, such that we cannot define $\sum_j c_j\mathbf{R}_j$ by falling back on some definition for $\sum_j c_j\boldsymbol{\xi}_j$ in the image space. Additionally, the very reason why we cannot define $\sum_j c_j\boldsymbol{\xi}_j$ is that we cannot define $\sum_j c_j\mathbf{R}_j$. In trying to define linear combinations of SU(2) matrices or spinors, we thus hit a vicious circle from which we cannot escape. Furthermore, the relation between spinors and vectors of $\mathbb{R}^3$ is not linear, as may have already transpired from Atiyah’s statement cited above and as we will explain below (see Section 2.7). This frustrates all attempts to find a meaning for a linear combination of spinors in SU(2) based on the meaning of the linear combination with the same coefficients in SO(3). Therefore, trying to make sense of linear combinations of spinors is an impasse.

Remark 5. We can extrapolate [17] the idea that the representation theory “rotates rotations rather than vectors” to SO($n$), such that we will then obtain a good geometrical intuition for the group theory. If we could also extrapolate to SO($n$) the idea that spinors are group elements, we would then obtain a very good intuition for spinors that is generally valid. We could then, e.g., also understand why spinors constitute an ideal. The ideal would then just be the group, and the group is closed with respect to the composition of rotations.

Remark 6. Unfortunately, things are not that simple and we will not be able to realize this dream.
The idea that spinors are just rotations gives us a very nice intuition for them in SU(2). However, the interpretation in SU(2) of a single column matrix as a shorthand for the whole information needed to define a group element unambiguously is not correct in general. A first example of a case where the column matrices cannot be identified with group elements is the representation SL(2,$\mathbb{C}$) of the homogeneous Lorentz group. In fact, defining an element of the homogeneous Lorentz group requires specifying six independent real parameters. That information cannot possibly be present in a single column of the representation matrix. A second example is the representation that is given by the Clifford algebra of SO($n$). Characterizing an element of the rotation group SO($n$) of $\mathbb{R}^n$ requires specifying $n(n-1)/2$ independent real parameters (see the discussion about the Vielbein in Section 2.7.1). The complete information regarding these independent real parameters cannot always be crammed into the complex column matrices used in the representations, because there is a small set of values of $n$ for which the number of parameters exceeds what such a column can hold. The information about a group element contained in a column matrix is in these cases thus necessarily partial. These examples show that the identification between group elements and the column matrices that we call spinors, anticipated here, is not true in general. Thus, the general meaning of a spinor cannot be that it is a group element. What the general meaning could be then becomes less clear, such that one has to consider, like Cartan, isotropic vectors representing oriented planes, as discussed in [17]. However, due to the fact that we are forced to introduce a superposition of two states in order to derive the Dirac equation (see Section 4.1), the column matrices used in the Dirac theory will again contain all of the information regarding the group elements. For the applications in QM, we can therefore maintain the idea that a spinor is a group element! Furthermore, what we stated in the previous remark does not imply that we do not understand the algebra of the representation. In fact, the representation matrices of the group SO($n$) do represent the group elements. For the application in QM, this means that we will really completely understand the formalism. In the approach to the general case SO($n$), the main idea will thus be to consider the formalism just as a formalism of rotation matrices, and the column matrices as auxiliary sub-quantities which encase only a subset of the complete information about group elements.

Remark 7. We must point out that we do not know with certainty to what extent Atiyah wanted to be general when he talked about “the square root of geometry”. We think that what Atiyah had in mind was based on Equations (29) and (57), rather than making a general statement for SO($n$).

We can see from Equations (29) and (57) that the terminology “square root” used by Atiyah is only a loose metaphor, and in the generalization of the approach to groups of rotations in $\mathbb{R}^n$, with $n > 3$, the metaphor will become even looser [17]. For SO($n$), the ideas can be based on the developments in Section 2.6, where we point out a quadratic relationship between vectors and spinors, which is generally valid. However, for the moment, we want to explore the idea of a single-column spinor that contains the complete information about a rotation in SU(2), where the intuitively attractive idea that a column spinor represents a group element is viable. It remains to explain in which form the information regarding the rotation is wrapped up inside this column matrix. This is done in several steps.

2.4. Generating the Group From Reflections

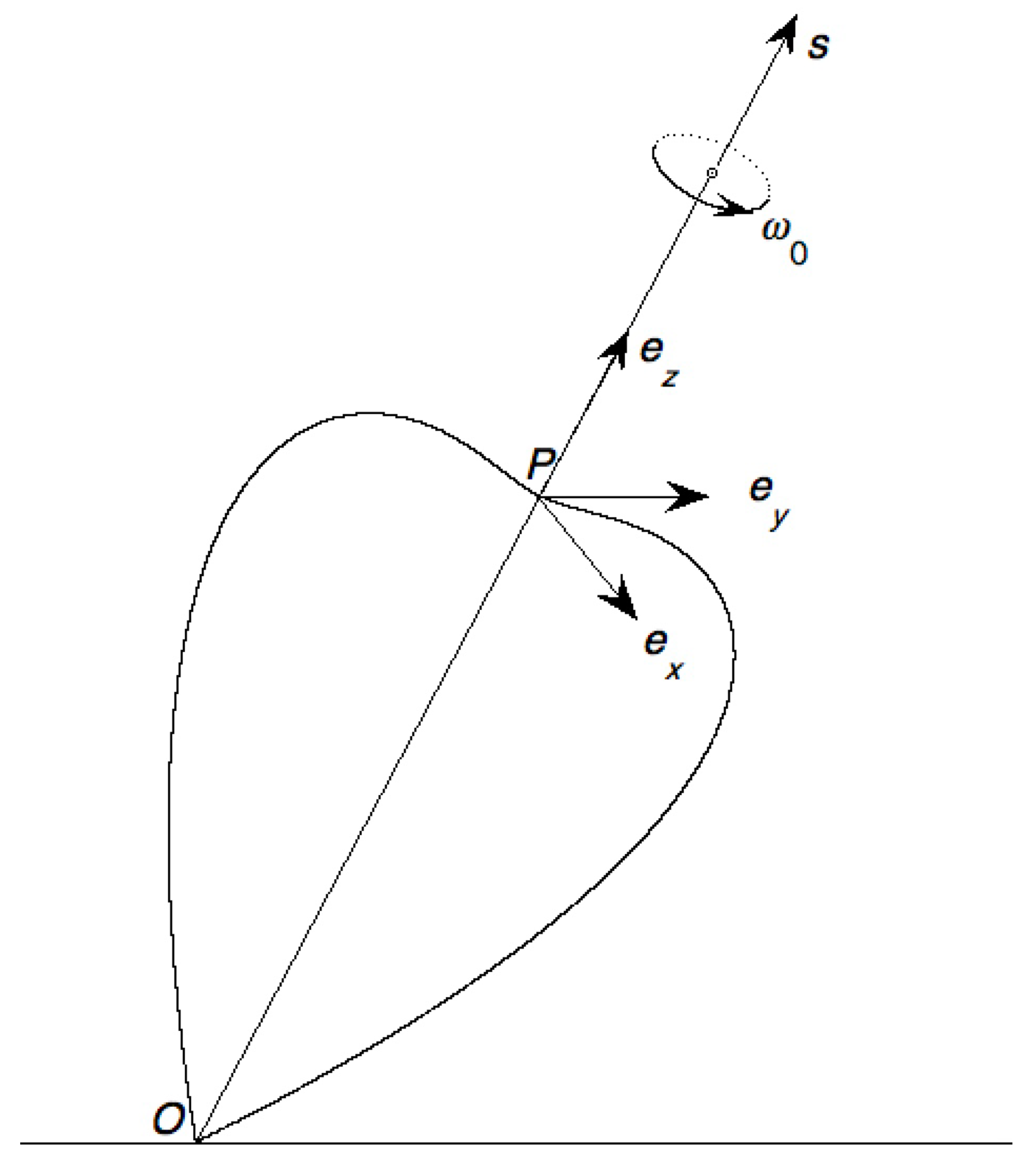

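The first step is deciding that we will generate the whole group of rotations and reversals from reflections, based on the idea that a rotation of SO(3) is the product of two reflections, as explained in Figure 1. Therefore, we need to cast a reflection into the form of a $2\times2$ matrix. The coordinates of the unit vector $\mathbf{a} = (a_x, a_y, a_z)$, which is the normal to the reflection plane that defines the reflection A, should be present as parameters within the reflection matrix $\mathbf{A}$, but we do not know how. Therefore, we heuristically decompose the matrix $\mathbf{A}$ that codes the reflection A defined by $\mathbf{a}$ linearly as $a_x\sigma_x + a_y\sigma_y + a_z\sigma_z$, where $\sigma_x, \sigma_y, \sigma_z$ are unknown matrices, as summarized in the following diagram:

$$\mathbf{a} = (a_x, a_y, a_z) \;\longrightarrow\; \mathbf{A} = a_x\sigma_x + a_y\sigma_y + a_z\sigma_z \equiv [\,\mathbf{a}\cdot\boldsymbol{\sigma}\,], \qquad \boldsymbol{\sigma} = (\sigma_x, \sigma_y, \sigma_z). \qquad (5)$$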

If we know the matrix $\sigma_x$, this will tell us where and with which coefficients $a_x$ pops up in $\mathbf{A}$. The same applies mutatis mutandis for $\sigma_y$ and $\sigma_z$. The matrices $\sigma_j$ that we use to code reflection matrices in this way can be found by expressing isomorphically, through $\mathbf{A}^2 = \mathbb{1}$, what defines a reflection, viz. that the reflection operator A is an involution: applying A twice yields the identity. We find out that this can be done, provided the three matrices simultaneously satisfy the six conditions $\sigma_j\sigma_k + \sigma_k\sigma_j = 2\delta_{jk}\mathbb{1}$, i.e., provided we take, e.g., the Pauli matrices for $\sigma_x, \sigma_y, \sigma_z$. Here, $\mathbb{1}$ is the unit matrix.
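The six conditions and their consequence can be checked directly. The following sketch assumes the standard Pauli matrices and an arbitrarily chosen unit vector (both are illustrative assumptions, as are the helper names). It verifies the six anticommutation conditions and that the resulting reflection matrix squares to the unit matrix.

```python
# Standard Pauli matrices, stored as nested lists (assumed convention).
SX = [[0, 1], [1, 0]]
SY = [[0, -1j], [1j, 0]]
SZ = [[1, 0], [0, -1]]
PAULI = [SX, SY, SZ]
ONE = [[1, 0], [0, 1]]

def matmul(X, Y):
    """Product of two 2x2 matrices."""
    return [[sum(X[i][k] * Y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def add(X, Y):
    return [[X[i][j] + Y[i][j] for j in range(2)] for i in range(2)]

def scale(c, X):
    return [[c * X[i][j] for j in range(2)] for i in range(2)]

def anticommutator(X, Y):
    return add(matmul(X, Y), matmul(Y, X))

# The six conditions (j <= k): the anticommutator equals 2 * delta_jk * ONE.
six_conditions_hold = all(
    anticommutator(PAULI[j], PAULI[k]) == scale(2 if j == k else 0, ONE)
    for j in range(3) for k in range(j, 3)
)

def reflection_matrix(a):
    """Linear decomposition a_x*SX + a_y*SY + a_z*SZ for a unit vector a."""
    ax, ay, az = a
    return add(add(scale(ax, SX), scale(ay, SY)), scale(az, SZ))

a = (2 / 7, 3 / 7, 6 / 7)          # illustrative unit vector (4 + 9 + 36 = 49)
A = reflection_matrix(a)
A_squared = matmul(A, A)

# A reflection applied twice is the identity.
squares_to_identity = all(abs(A_squared[i][j] - ONE[i][j]) < 1e-12
                          for i in range(2) for j in range(2))
```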

Remark 8. Physicists among the readers will recognize that this construction is algebraically completely analogous to the one that introduces the gamma matrices in the Dirac equation. However, geometrically, it is entirely different. Dirac’s approach aims at taking the “square root of the Klein-Gordon equation”. Thus, it searches for a way to write vectors, e.g., the four-vector $(E, c\mathbf{p})$, as a linear expression that can be interpreted as the square root of a quadratic form, e.g., $E^2 - c^2p^2$, as explained, e.g., by Deheuvels [28]. Therefore, Dirac’s derivation takes place within the context of the algebra of vectors and multi-vectors. Our approach consists in finding the expression for reflections. Our derivation thus takes place within the algebra of group elements. The two approaches do not define the same geometrical objects, nor the same algebras.

Remark 9. We may note that, to an extent, the fact that our heuristics work is a kind of fluke, because the fact that the reflection matrix is linear in $a_x$, $a_y$, $a_z$ within SU(2) is special and not general. It is typical of the spinor-based representations that we present in this paper. A counter-example is the expression for a $3\times3$ reflection matrix in SO(3), which is quadratic in the parameters $a_x$, $a_y$, and $a_z$:

$$\mathbb{1} - 2\,\mathbf{a}\mathbf{a}^{\top} = \begin{pmatrix} -a_x^2 + a_y^2 + a_z^2 & -2a_xa_y & -2a_xa_z \\ -2a_xa_y & a_x^2 - a_y^2 + a_z^2 & -2a_ya_z \\ -2a_xa_z & -2a_ya_z & a_x^2 + a_y^2 - a_z^2 \end{pmatrix}. \qquad (6)$$

Writing it as $\mathbb{1} - 2\,\mathbf{a}\mathbf{a}^{\top}$ permits verifying immediately algebraically that it corresponds to $\mathbf{v} \to \mathbf{v} - 2(\mathbf{v}\cdot\mathbf{a})\,\mathbf{a}$. Writing it as in Equation (6) shows that the expression is purely quadratic. This is due to the fact that vectors in SO(3) are rank-2 tensor products of the spinors of SU(2), as we will discuss in this paper. We may also note that we have defined the reflection matrices without defining a “vector space” on which they would be working. They are defined en bloc, and it is this aspect that saddles us with the problem of the meaning of the column matrices, called spinors, which occur in the formalism. We are used to qualifying such column matrices as column vectors, but, as we pointed out, spinors are not vectors. Thus, it is no longer natural to break down the square matrices into columns. The complete information resides in the block of the square matrix. When we break up that block into columns, the information contained in a column may be partial, and perhaps the question of what a column then means might be ill-conceived (see [17], pp. 22–23). This complies with the idea that is expressed in Remark 6 in Section 2.3.
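The counter-example of Remark 9 can be made concrete with a few lines of arithmetic. The sketch below takes the $3\times3$ reflection matrix to be $\mathbb{1} - 2\,\mathbf{a}\mathbf{a}^{\top}$ for a unit normal $\mathbf{a}$; the sample vectors and function names are illustrative assumptions. It checks that this matrix acts as $\mathbf{v} \to \mathbf{v} - 2(\mathbf{v}\cdot\mathbf{a})\,\mathbf{a}$ and that its diagonal entries are purely quadratic in the components of $\mathbf{a}$.

```python
def reflection_3x3(a):
    """The 3x3 matrix 1 - 2 a a^T for a unit normal vector a."""
    return [[(1.0 if i == j else 0.0) - 2.0 * a[i] * a[j] for j in range(3)]
            for i in range(3)]

def apply(M, v):
    """3x3 matrix M acting on the vector v."""
    return [sum(M[i][j] * v[j] for j in range(3)) for i in range(3)]

def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

def reflect(v, a):
    """Geometric definition of the reflection: v - 2 (v.a) a."""
    return [v[i] - 2.0 * dot(v, a) * a[i] for i in range(3)]

a = (2 / 7, 3 / 7, 6 / 7)          # illustrative unit normal
v = (1.0, -2.0, 0.5)               # illustrative vector
M = reflection_3x3(a)

matrix_matches_geometry = all(abs(x - y) < 1e-12
                              for x, y in zip(apply(M, v), reflect(v, a)))

# With a.a = 1 the diagonal entries become purely quadratic in (ax, ay, az).
ax, ay, az = a
diagonal_is_quadratic = (
    abs(M[0][0] - (-ax**2 + ay**2 + az**2)) < 1e-12
    and abs(M[1][1] - (ax**2 - ay**2 + az**2)) < 1e-12
    and abs(M[2][2] - (ax**2 + ay**2 - az**2)) < 1e-12
)

# The normal itself is sent to its opposite.
normal_is_reversed = all(abs(x + y) < 1e-12 for x, y in zip(apply(M, a), a))
```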

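We discuss, in Section 2.9 of [17], that the solution $(\sigma_x, \sigma_y, \sigma_z)$ is not unique and that there are many other possible choices. However, we follow here the tradition of adopting the Pauli matrices. The reflection matrix $\mathbf{A}$ is thus given by:

$$\mathbf{A} = [\,\mathbf{a}\cdot\boldsymbol{\sigma}\,] = \begin{pmatrix} a_z & a_x - i a_y \\ a_x + i a_y & -a_z \end{pmatrix}. \qquad (7)$$

The symbol $[\;]$ serves here to warn that the notation $[\,\mathbf{a}\cdot\boldsymbol{\sigma}\,]$ is a purely conventional shorthand for $a_x\sigma_x + a_y\sigma_y + a_z\sigma_z$. It does not express a true scalar product involving $\mathbf{a}$, but just exploits the mimicry with the expression for a scalar product to introduce the shorthand.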

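By expressing a rotation as the product of two reflections, one can then derive the well-known Rodrigues formula:

$$\mathbf{R}(\mathbf{n}, \varphi) = \cos(\varphi/2)\,\mathbb{1} - i \sin(\varphi/2)\,[\,\mathbf{n}\cdot\boldsymbol{\sigma}\,] \qquad (8)$$

for a rotation by an angle $\varphi$ around an axis that is defined by the unit vector $\mathbf{n}$. To derive this result, it suffices to consider two reflections A (with matrix $[\,\mathbf{a}\cdot\boldsymbol{\sigma}\,]$) and B (with matrix $[\,\mathbf{b}\cdot\boldsymbol{\sigma}\,]$), whose planes contain $\mathbf{n}$, and that have an angle $\varphi/2$ between them. Using the algebraic identity $[\,\mathbf{b}\cdot\boldsymbol{\sigma}\,][\,\mathbf{a}\cdot\boldsymbol{\sigma}\,] = (\mathbf{b}\cdot\mathbf{a})\,\mathbb{1} + i\,[\,(\mathbf{b}\wedge\mathbf{a})\cdot\boldsymbol{\sigma}\,]$ then yields the desired result. There is an infinite set of such pairs of planes, and which precise pair one chooses from this set does not matter.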
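The derivation can be reproduced numerically. In the sketch below we assume the Pauli-matrix form of the reflection matrices, pick an illustrative axis $\mathbf{n}$ and a unit normal $\mathbf{a}$ perpendicular to it, and obtain $\mathbf{b}$ by turning $\mathbf{a}$ over $\varphi/2$ around $\mathbf{n}$ (all numerical values and function names are our own choices). The product of the two reflection matrices is then compared with the Rodrigues expression.

```python
import math

def sigma_dot(v):
    """The matrix [v.sigma] = v_x*sigma_x + v_y*sigma_y + v_z*sigma_z."""
    x, y, z = v
    return [[z, x - 1j * y], [x + 1j * y, -z]]

def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def cross(u, v):
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])

def rodrigues(n, phi):
    """cos(phi/2) 1 - i sin(phi/2) [n.sigma] for a unit axis n."""
    c, s = math.cos(phi / 2), math.sin(phi / 2)
    N = sigma_dot(n)
    return [[c * (1 if i == j else 0) - 1j * s * N[i][j] for j in range(2)]
            for i in range(2)]

n = (2 / 7, 3 / 7, 6 / 7)                             # rotation axis
a = (3 / math.sqrt(13), -2 / math.sqrt(13), 0.0)      # normal of plane A, a.n = 0
phi = 1.1                                             # rotation angle

# Normal of plane B: a turned over phi/2 around n (both planes contain n).
n_cross_a = cross(n, a)
b = tuple(math.cos(phi / 2) * a[i] + math.sin(phi / 2) * n_cross_a[i]
          for i in range(3))

BA = matmul(sigma_dot(b), sigma_dot(a))               # reflection A first, then B
R = rodrigues(n, phi)

max_difference = max(abs(BA[i][j] - R[i][j])
                     for i in range(2) for j in range(2))
```

Replacing $\mathbf{a}$ by any other unit vector perpendicular to $\mathbf{n}$ leaves the product unchanged, in line with the remark that the choice of the pair of planes does not matter.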

Starting from Equation (8), it is easy to check that each rotation matrix has the form that is given by Equation (3) and, therefore, belongs to SU(2). Conversely, each element of SU(2) is a rotation matrix. We can now also appreciate why SU(2) is a double covering of SO(3). Consider the matrix product:

$$\mathbf{R} = \mathbf{B}\mathbf{A} = [\,\mathbf{b}\cdot\boldsymbol{\sigma}\,][\,\mathbf{a}\cdot\boldsymbol{\sigma}\,] \qquad (9)$$

in the derivation of the Rodrigues equation in Equation (8). Imagine that we keep A fixed and increase the angle $\varphi/2$ between the reflection planes $\pi_A$ and $\pi_B$ of A and B from $0$ onwards. Of course, $\varphi$ is the angle of the rotation $R = BA$. This means that the reflection plane $\pi_B$ with normal vector $\mathbf{b}$ that defines B is rotating. In the matrix product that occurs in Equation (9), the numbers in the matrix $\mathbf{A}$ would remain fixed, while the numbers in the matrix $\mathbf{B}$ would be continuously changing, like the digits that display hundredths of seconds on a wrist watch. When the starting value of the angle $\varphi/2$ between the reflection planes $\pi_A$ and $\pi_B$ is zero, the reflection planes are parallel, $\pi_B \parallel \pi_A$, and the starting value of $\mathbf{B}$ is $\mathbf{A}$. When $\varphi/2$ reaches the value $\pi$, the rotating reflection plane $\pi_B$ will have come back to its original position parallel to the fixed reflection plane $\pi_A$, and the resulting rotation $BA$ will correspond to a rotation over an angle $\varphi = 2\pi$.

As far as group elements are concerned, we have thus made a full turn both of the reflection B and of the rotation $R = BA$ when $\mathbf{b}$ has made a turn of $\pi$ in $\mathbb{R}^3$. This is because we only need to rotate a plane in $\mathbb{R}^3$ over $\pi$ to bring it back to its original position. The consequence of this is that we can always define any plane $\pi_U$ (or reflection U) equivalently by two normal unit vectors $\mathbf{u}$ and $-\mathbf{u}$. These full turns of B and $R$ within the group must be parameterized with a “group angle” $\varphi = 2\pi$ if we want to express the periodicity within the rotation group in terms of trigonometric functions. However, for the normal vector $\mathbf{b}$, which we have used to define B and that belongs to $\mathbb{R}^3$, this is different. For $\varphi = 0$, its starting value is $\mathbf{b} = \mathbf{a}$. For $\varphi = 2\pi$, its value has become $\mathbf{b} = -\mathbf{a}$, such that we obtain $\mathbf{B}\mathbf{A} = -\mathbb{1}$ in Equation (9). There is nothing wrong with that, because both the normal vectors $\mathbf{b}$ and $-\mathbf{b}$ define the same plane $\pi_B$. Thus, each group element g is represented by two matrices $\mathbf{G}$ and $-\mathbf{G}$. As the group elements B and $R$ have recovered their initial values, the matrices $-\mathbb{1}$ and $\mathbb{1}$ represent the same group element. In general, we have $\mathbf{R}(\mathbf{n}, \varphi + 2\pi) = -\mathbf{R}(\mathbf{n}, \varphi)$. Only after a rotation over a “group angle” $\varphi = 4\pi$, which corresponds to a rotation of $\mathbf{b}$ over an angle $2\pi$, will we obtain the values $\mathbf{b} = \mathbf{a}$ and $\mathbf{B}\mathbf{A} = \mathbb{1}$.
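The sign bookkeeping of the double covering can be followed numerically. The sketch below assumes the Rodrigues form $\cos(\varphi/2)\,\mathbb{1} - i\sin(\varphi/2)\,[\,\mathbf{n}\cdot\boldsymbol{\sigma}\,]$ and takes the $z$-axis as an illustrative rotation axis (function and variable names are ours). It evaluates the rotation matrix after turns of $2\pi$ and $4\pi$, and follows the normal vector $\mathbf{b}$ of the rotating plane.

```python
import math

def sigma_dot(v):
    """The matrix [v.sigma] built with the Pauli matrices."""
    x, y, z = v
    return [[z, x - 1j * y], [x + 1j * y, -z]]

def rodrigues(n, phi):
    """cos(phi/2) 1 - i sin(phi/2) [n.sigma] for a unit axis n."""
    c, s = math.cos(phi / 2), math.sin(phi / 2)
    N = sigma_dot(n)
    return [[c * (1 if i == j else 0) - 1j * s * N[i][j] for j in range(2)]
            for i in range(2)]

def max_abs_diff(X, Y):
    return max(abs(X[i][j] - Y[i][j]) for i in range(2) for j in range(2))

def negate(X):
    return [[-X[i][j] for j in range(2)] for i in range(2)]

ONE = [[1, 0], [0, 1]]
n = (0.0, 0.0, 1.0)                 # illustrative axis
a = (1.0, 0.0, 0.0)                 # fixed normal of plane A

def rotating_normal(phi):
    """Normal b of plane B: a turned over phi/2 around the z-axis."""
    return (math.cos(phi / 2), math.sin(phi / 2), 0.0)

# After phi = 2*pi the plane B is back, but b = -a and the matrix is -1.
minus_one_at_2pi = max_abs_diff(rodrigues(n, 2 * math.pi), negate(ONE)) < 1e-12
b_is_minus_a_at_2pi = all(abs(x + y) < 1e-12
                          for x, y in zip(rotating_normal(2 * math.pi), a))

# Only after phi = 4*pi do both b and the matrix return to their start values.
plus_one_at_4pi = max_abs_diff(rodrigues(n, 4 * math.pi), ONE) < 1e-12
b_is_a_at_4pi = all(abs(x - y) < 1e-12
                    for x, y in zip(rotating_normal(4 * math.pi), a))

# In general a shift of 2*pi flips the sign of the matrix.
phi = 0.7
sign_flips = max_abs_diff(rodrigues(n, phi + 2 * math.pi),
                          negate(rodrigues(n, phi))) < 1e-12
```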

Remark 10. It is often presented as a mystery of QM that one must turn the wave function over $4\pi$ before we obtain the starting configuration again. There is even a beautiful neutron experiment that has been performed to provide physical proof for the truth of this fact to physicists [29]. We can see, from a proper understanding of the group theory, that this is quite trivial and that it is a mathematical rather than a physical truth. Most textbooks mystify this subject matter by invoking topological arguments. We explain this link with topology in [16], Subsection 3.11.2, and Figure 3.5, where we compare a full turn on the group with a full turn on a Moebius ring. This link is thus conceptually very clear and simple. However, in the illustration of this topological argument by Feynman [30], Dirac [31], or Misner et al. [8], the connection between the topological argument and the physical model is hard to see. It is, e.g., very difficult to follow how disentangling the threads in the work of Misner et al. would make the point.

Remark 11. Representing a rotation as the product of two reflections is convenient for calculating the product of two rotations. Consider two rotations $R_1$ and $R_2$ with axes $\mathbf{n}_1$ and $\mathbf{n}_2$. Call π the plane that is defined by $\mathbf{n}_1$ and $\mathbf{n}_2$, and B the reflection with respect to π. Call $\pi_A$ the plane of the reflection A that defines $R_1$ as $R_1 = BA$, and $\pi_C$ the plane of the reflection C that defines $R_2$ as $R_2 = CB$. It then follows that $R_2R_1 = CBBA = CA$.

2.6. A Parallel Formalism For Vectors

By construction, the representation SU(2) contains for the moment (deliberately, as we explained) only group elements. Of course, it would be convenient if we were also able to calculate the action of the group elements on vectors. This is our next step. We can figure out how to do this based on the fact that we have already used a unit vector $\mathbf{a}$ to define a reflection A and its corresponding reflection matrix $\mathbf{A} = [\,\mathbf{a}\cdot\boldsymbol{\sigma}\,]$. Conversely, the reflection A also defines $\mathbf{a}$ up to a sign, such that there exists a one-to-one correspondence between reflections A and the two-member sets of unit vectors $\{\mathbf{a}, -\mathbf{a}\}$ (and the corresponding two-member sets of reflection matrices $\{\mathbf{A}, -\mathbf{A}\}$). This one-to-one correspondence between two-member sets of vectors and reflections will actually impose the formalism for vectors upon us. We can consider that a reflection A and its parameter set $\{\mathbf{a}, -\mathbf{a}\}$ are conceptually the same thing.

When a reflection travels around the group, the two-member set of vectors will travel together with it. Let us explain what we mean by the informal term “traveling” here. In SO(3), a vector $\mathbf{v}$ has a $3\times1$ representation matrix $\mathbf{V}$. It is transformed by a group element g with $3\times3$ representation matrix $\mathbf{G}$ into another vector $\mathbf{v}'$: we just calculate the representation matrix of $\mathbf{v}'$ as $\mathbf{V}' = \mathbf{G}\mathbf{V}$. The vector $\mathbf{v}$ travels this way under a group action to another vector $\mathbf{v}'$. The point we want to make is that in SU(2), things are not as simple. Under the action of a group element g with matrix representation $\mathbf{G}$, a reflection A will in general not travel to another reflection.

Let G be the group that is generated by the reflections. The subgroup of pure rotations is the subset that is obtained from an even number of reflections. The subset obtained from an odd number of reflections is not a subgroup. It contains the reflections and the reversals. Reflections are of course geometrical objects of a different type than reversals and pure rotations. This also transpires from the fact that a reflection is defined by a unit vector $\mathbf{a} \in S^2$, where $S^2$ is the unit sphere in $\mathbb{R}^3$. Thus, it is defined by two independent real parameters, while rotations and reversals are defined by three independent real parameters. Group elements $g_1$ and $g_2$ are of the same geometrical type if they are related by a similarity transformation: $g_2 = g \circ g_1 \circ g^{-1}$. They then have the same group character.

In general, a new group element $g \circ A$ obtained by operating with an arbitrary group element $g$ on the reflection A will no longer be a reflection that can be associated with a unit vector, as was the case for A, because, in general, $g \circ A$ can be of a different geometrical type than A. Group elements that transform a reflection A into another reflection, B, are the identity element and rotations R that can be written as $R = BA$. For this to be possible, the rotation axis of R must belong to the reflection plane of A. In other words, the reflections do not travel according to the general rule $\mathbf{A} \to \mathbf{G}\mathbf{A}$.

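In order to always transform a reflection A into another reflection, we must use a similarity transformation: $A \to g \circ A \circ g^{-1}$. Hence, if B and A are reflections, defined by the unit vectors $\mathbf{b}$ and $\mathbf{a}$, then there exists a group element $g$ such that $\mathbf{b} = g(\mathbf{a})$ and $B = g \circ A \circ g^{-1}$. Hence, if A is a reflection operating on $\mathbb{R}^3$, then the similar reflection B that operates on the copy of $\mathbb{R}^3$ transformed by $g$ will be represented by $\mathbf{G}\mathbf{A}\mathbf{G}^{-1}$. The reflection plane $\pi_B$ and normal $\mathbf{b}$ of this reflection B will have the same angles with respect to the transformed frame as $\pi_A$ and $\mathbf{a}$ have with respect to the original frame. Thus, we can in this way move the reflection A around to group elements B, and, of course, the parameter set $\{\mathbf{a}, -\mathbf{a}\}$ will travel with it to a parameter set $\{\mathbf{b}, -\mathbf{b}\}$. The ambiguity between $\mathbf{b}$ and $-\mathbf{b}$ is also carried along. For the representation matrices of reflections we have thus:

$$\mathbf{A} = [\,\mathbf{a}\cdot\boldsymbol{\sigma}\,] \;\to\; \mathbf{G}\mathbf{A}\mathbf{G}^{-1} = \pm[\,g(\mathbf{a})\cdot\boldsymbol{\sigma}\,], \qquad (24)$$

whereby we allow for the ambiguity in the sign of $g(\mathbf{a})$, because Equation (24) is not a transformation law for vectors, but for reflections and their associated two-member sets of vectors.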

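Of course, the idea would be that $\mathbf{a} \to g(\mathbf{a})$ and $-\mathbf{a} \to -g(\mathbf{a})$, but the combined presence of $\mathbf{G}$ and $\mathbf{G}^{-1}$ does not permit reproducing the change of sign in the formalism, because it has been designed for group elements, not for vectors. This is very clear for $g = A$: geometrically $A(\mathbf{a}) = -\mathbf{a}$, while in the formalism $\mathbf{A}\mathbf{A}\mathbf{A}^{-1} = \mathbf{A}$, which is the correct calculation for $A \circ A \circ A^{-1} = A$. On the other hand, a vector $\mathbf{b}$ that is perpendicular to $\mathbf{a}$ is characterized by $\mathbf{A}\mathbf{B} = -\mathbf{B}\mathbf{A}$, where $\mathbf{B} = [\,\mathbf{b}\cdot\boldsymbol{\sigma}\,]$.

To see this, consider the rotation R that transforms $\mathbf{e}_x$ into $\mathbf{a}$ and $\mathbf{e}_y$ into $\mathbf{b}$. For the reflections with matrices $\sigma_x$ and $\sigma_y$, we have $\sigma_x\sigma_y = -\sigma_y\sigma_x$. The similarity transformation based on $\mathbf{R}$ will transform $\sigma_x$ into the reflection A with matrix representation $\mathbf{A}$ and $\sigma_y$ into the reflection B with matrix representation $\mathbf{B}$. Applying the similarity transformation to $\sigma_x\sigma_y = -\sigma_y\sigma_x$ then proves the identity. Therefore, $\mathbf{A}\mathbf{B}\mathbf{A}^{-1} = -\mathbf{B}$, while the vector $\mathbf{b}$ belongs to the reflection plane of A and should not change sign under the reflection A.

Thus, we see that, in all cases, we get the sign of the reflected vector wrong. We can therefore lift the ambiguity and treat the vectors correctly by introducing the sign by brute force:

$$[\,\mathbf{v}\cdot\boldsymbol{\sigma}\,] \;\to\; -\mathbf{A}\,[\,\mathbf{v}\cdot\boldsymbol{\sigma}\,]\,\mathbf{A}^{-1}. \qquad (25)$$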

In doing so, we quit the formalism for group elements and enter a new formalism for vectors. The transition is enacted by conceiving and elaborating the idea that we can use the matrix $\mathbf{A} = [\,\mathbf{a}\cdot\boldsymbol{\sigma}\,]$ also as the representation of the unit vector $\mathbf{a}$, since the matrix $\mathbf{A}$ contains the components of the vector $\mathbf{a}$ and the reflection A defines $\mathbf{a}$ up to a sign. To get rid of the ambiguity about the signs of the vectors that exists within the definition of the reflection matrices, it suffices to use $[\,\mathbf{a}\cdot\boldsymbol{\sigma}\,]$ as a representation for a unit vector $\mathbf{a}$, and to introduce the rule that a vector matrix $\mathbf{V} = [\,\mathbf{v}\cdot\boldsymbol{\sigma}\,]$ is transformed under the reflection A according to:

$$\mathbf{V} \;\to\; -\mathbf{A}\mathbf{V}\mathbf{A}. \qquad (26)$$

This will be further justified below. The transformation under other group elements $g$ is then obtained by using the decomposition of $g$ into reflections. This way, we have developed a parallel formalism for the matrices $[\,\mathbf{a}\cdot\boldsymbol{\sigma}\,]$, wherein $[\,\mathbf{a}\cdot\boldsymbol{\sigma}\,]$ now takes a different meaning, viz. that of a representation of a unit vector $\mathbf{a}$, and obeys a different kind of transformation algebra, which is no longer linear but quadratic in the transformation matrices. This idea can be generalized to a vector $\mathbf{v}$ of arbitrary length $v$, which is then represented by $\mathbf{V} = [\,\mathbf{v}\cdot\boldsymbol{\sigma}\,]$. In fact, the scalar $v$ is a group invariant, because the rotation group is defined as the group that leaves $v$ invariant. We have then $\mathbf{V}^2 = v^2\,\mathbb{1}$.

This idea that, within SU(2), a vector $\mathbf{v} = (x, y, z) \in \mathbb{R}^3$ is represented by a matrix $\mathbf{V} = [\,\mathbf{v}\cdot\boldsymbol{\sigma}\,]$ according to the isomorphism:

$$\mathbf{v} = x\,\mathbf{e}_x + y\,\mathbf{e}_y + z\,\mathbf{e}_z \;\longleftrightarrow\; \mathbf{V} = x\,\sigma_x + y\,\sigma_y + z\,\sigma_z = \begin{pmatrix} z & x - iy \\ x + iy & -z \end{pmatrix} \qquad (27)$$

was introduced by Cartan [4]. It is a definition that makes it possible to do calculations on vectors. In reading Cartan, one could get the impression that we are free to introduce this definition at will. In reality, it is not a matter of mere definition. While introducing the idea as a definition would not lead to errors in the formalism, it would nevertheless be a false presentation of the state of affairs, because it is no longer at our discretion to define things at will. As we can see from the reasoning above, the definition is entirely forced upon us by the one-to-one correspondence between sets of unit vectors $\{\mathbf{a}, -\mathbf{a}\}$ and reflections A.

We cannot stress enough that, even if reflections $A \in L(\mathbb{R}^3, \mathbb{R}^3)$ and unit vectors $\mathbf{a} \in \mathbb{R}^3$ are both represented by the same matrix $[\,\mathbf{a}\cdot\boldsymbol{\sigma}\,]$, they are obviously completely different quantities, belonging to completely different spaces, $L(\mathbb{R}^3, \mathbb{R}^3)$ and $\mathbb{R}^3$, and completely different algebras.

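Applying the rule $\mathbf{V}^2 = v^2\,\mathbb{1}$ to the vector $\mathbf{a} + \mathbf{b}$ and using $(\mathbf{a} + \mathbf{b})^2 = a^2 + b^2 + 2\,\mathbf{a}\cdot\mathbf{b}$, one can derive that $\mathbf{A}\mathbf{B} + \mathbf{B}\mathbf{A} = 2\,(\mathbf{a}\cdot\mathbf{b})\,\mathbb{1}$, which can be seen as an alternative definition of the parallel formalism for vectors. As anticipated above, we can use this result to check the correctness of the rule of Equation (26) geometrically. It suffices in this respect to observe that the reflection A, defined by the unit vector $\mathbf{a}$, transforms $\mathbf{v}$ into $\mathbf{v} - 2(\mathbf{v}\cdot\mathbf{a})\,\mathbf{a}$. Expressed in the matrices this yields: $\mathbf{V} \to \mathbf{V} - 2(\mathbf{v}\cdot\mathbf{a})\,\mathbf{A} = \mathbf{V} - (\mathbf{V}\mathbf{A} + \mathbf{A}\mathbf{V})\,\mathbf{A} = -\mathbf{A}\mathbf{V}\mathbf{A}$.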
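This geometrical check can also be done with numbers. The sketch below assumes the Pauli-matrix coding $\mathbf{V} = x\sigma_x + y\sigma_y + z\sigma_z$ of a vector and arbitrarily chosen sample vectors (names and values are illustrative). It compares $-\mathbf{A}\mathbf{V}\mathbf{A}$ with the coding of the reflected vector $\mathbf{v} - 2(\mathbf{v}\cdot\mathbf{a})\,\mathbf{a}$, and shows the wrong sign obtained without the minus sign.

```python
def sigma_dot(v):
    """Code the vector v as the matrix x*sigma_x + y*sigma_y + z*sigma_z."""
    x, y, z = v
    return [[z, x - 1j * y], [x + 1j * y, -z]]

def vector_of(M):
    """Read the vector (x, y, z) back from a matrix of the form [v.sigma]."""
    return (M[1][0].real, M[1][0].imag, M[0][0].real)

def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def negate(X):
    return [[-X[i][j] for j in range(2)] for i in range(2)]

def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

def reflect(v, a):
    """Geometric reflection: v - 2 (v.a) a for a unit normal a."""
    return tuple(v[i] - 2.0 * dot(v, a) * a[i] for i in range(3))

a = (2 / 7, 3 / 7, 6 / 7)          # unit normal of the reflection plane
v = (1.0, -2.0, 0.5)               # vector to be reflected

A, V = sigma_dot(a), sigma_dot(v)
AVA = matmul(matmul(A, V), A)

# Rule for vectors: V -> -A V A reproduces the geometric reflection.
rule_matches_geometry = all(
    abs(x - y) < 1e-12
    for x, y in zip(vector_of(negate(AVA)), reflect(v, a))
)

# Without the minus sign (pure similarity transformation) the sign is wrong.
similarity_has_wrong_sign = all(
    abs(x + y) < 1e-12
    for x, y in zip(vector_of(AVA), reflect(v, a))
)
```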

We see that the transformation law for vectors $\mathbf{v}$ is quadratic in $\mathbf{A}$, in contrast with the transformation law for group elements g, which is linear: $\mathbf{G} \to \mathbf{A}\mathbf{G}$. Vectors thus transform quadratically, as rank-2 tensor products of spinors, whereas spinors transform linearly. This gives us a full understanding of the relationship between vectors and spinors. It is much easier to understand this relationship in the terms that are used here (vectors are quadratic expressions in terms of spinors) than in the equivalent terms used by Atiyah (spinors are square roots of vectors).

Remark 12. This solution is analogous to the solution proposed by Gauss, Wessel, and Argand to solve the problem of the meaning of $\sqrt{-1}$. As described on p. 118 of reference [24], one first defines $\mathbb{C}$ as $\mathbb{R}^2$, with two operations $+$ and $\times$ defined by $(a, b) + (c, d) = (a + c, b + d)$ and $(a, b) \times (c, d) = (ac - bd, ad + bc)$. One then shows that the subset of pairs $(a, 0)$ is isomorphic to $\mathbb{R}$. This permits identifying $(a, 0) \equiv a$ and justifies introducing the notations $1 = (1, 0)$, $i = (0, 1)$ and $(a, b) = a + ib$. One can then prove that $i^2 = -1$.

The fact that this solution for the riddle of what the meaning of a spinor is has escaped attention is due to the fact that spinors are in general introduced based on the construction proposed in Equation (29) below. This construction emphasizes the fact that a spinor is a kind of square root of a vector, to the detriment of the notion developed here that a vector is a rank-2 expression in terms of spinors. However, these relations between spinors and vectors are a property that constitutes only a secondary notion, which is not really instrumental in clarifying the concept of a spinor. The essential and clarifying notion in SU(2) is that a spinor corresponds to a rotation.
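The construction of complex numbers as pairs mentioned in Remark 12 can be written out in a few lines. The sketch below uses the textbook pair operations; the function names are our own. It shows that the pair $(0, 1)$ squares to $(-1, 0)$ without any appeal to a pre-existing “square root of $-1$”.

```python
def add(p, q):
    """(a, b) + (c, d) = (a + c, b + d)."""
    return (p[0] + q[0], p[1] + q[1])

def mul(p, q):
    """(a, b) x (c, d) = (a*c - b*d, a*d + b*c)."""
    return (p[0] * q[0] - p[1] * q[1], p[0] * q[1] + p[1] * q[0])

ONE = (1, 0)
I = (0, 1)

# The pair I plays the role of the imaginary unit.
i_squared = mul(I, I)

# Pairs of the form (a, 0) add and multiply like ordinary real numbers.
embedding_respects_sum = add((2, 0), (5, 0)) == (7, 0)
embedding_respects_product = mul((2, 0), (5, 0)) == (10, 0)

# Every pair decomposes as (a, b) = a + i b.
a, b = 3, 4
decomposition_holds = add((a, 0), mul(I, (b, 0))) == (a, b)
```

The meaning of the new object is fixed entirely by the pair and its rules of calculation, in the same way as the meaning of a spinor is fixed by the rotation matrix for which it is a shorthand.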

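The reader will notice that the definition $\mathbf{V} = [\,\mathbf{v}\cdot\boldsymbol{\sigma}\,]$ with $\mathbf{V}^2 = v^2\,\mathbb{1}$ is analogous to Dirac’s way of introducing the gamma matrices: the energy-momentum four-vector is written as a linear expression in the gamma matrices, and one postulates that the square of this expression reproduces the corresponding quadratic form. In other words, it is the metric that defines the whole formalism, because we are considering groups of metric-conserving transformations (as in the definition of a geometry in the philosophy of Felix Klein’s Erlangen program).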

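For more information regarding the calculus on the rotation and reversal matrices, we refer the reader to reference [16]. Let us just mention that, as a reflection A works on a vector $\mathbf{v}$ according to $\mathbf{V} \to -\mathbf{A}\mathbf{V}\mathbf{A} = -\mathbf{A}\mathbf{V}\mathbf{A}^{-1}$, a rotation $R = BA$ will work on it according to $\mathbf{V} \to \mathbf{B}\mathbf{A}\mathbf{V}\mathbf{A}\mathbf{B} = \mathbf{R}\mathbf{V}\mathbf{R}^{-1} = \mathbf{R}\mathbf{V}\mathbf{R}^{\dagger}$. The identity $\mathbf{R}^{\dagger} = \mathbf{R}^{-1}$ explains, in an alternative way, why the representation that we end up with is SU(2).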
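The action of a rotation on a vector can be checked against the familiar vector form of the Rodrigues rotation formula. The sketch below assumes the SU(2) matrix $\cos(\varphi/2)\,\mathbb{1} - i\sin(\varphi/2)\,[\,\mathbf{n}\cdot\boldsymbol{\sigma}\,]$ and the Pauli-matrix coding of vectors; the axis, angle, vector and function names are illustrative choices. It verifies that $\mathbf{R}\mathbf{V}\mathbf{R}^{\dagger}$ codes the rotated vector and that $\mathbf{R}\mathbf{R}^{\dagger}$ is the unit matrix.

```python
import math

def sigma_dot(v):
    """Code the vector v as x*sigma_x + y*sigma_y + z*sigma_z."""
    x, y, z = v
    return [[z, x - 1j * y], [x + 1j * y, -z]]

def vector_of(M):
    """Read (x, y, z) back from a matrix of the form [v.sigma]."""
    return (M[1][0].real, M[1][0].imag, M[0][0].real)

def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def dagger(X):
    """Hermitian conjugate of a 2x2 matrix."""
    return [[X[j][i].conjugate() for j in range(2)] for i in range(2)]

def rotation_matrix(n, phi):
    """SU(2) matrix cos(phi/2) 1 - i sin(phi/2) [n.sigma] for a unit axis n."""
    c, s = math.cos(phi / 2), math.sin(phi / 2)
    N = sigma_dot(n)
    return [[c * (1 if i == j else 0) - 1j * s * N[i][j] for j in range(2)]
            for i in range(2)]

def rotate(v, n, phi):
    """Vector form: v cos(phi) + (n x v) sin(phi) + n (n.v) (1 - cos(phi))."""
    n_dot_v = sum(ni * vi for ni, vi in zip(n, v))
    n_cross_v = (n[1] * v[2] - n[2] * v[1],
                 n[2] * v[0] - n[0] * v[2],
                 n[0] * v[1] - n[1] * v[0])
    c, s = math.cos(phi), math.sin(phi)
    return tuple(v[i] * c + n_cross_v[i] * s + n[i] * n_dot_v * (1 - c)
                 for i in range(3))

n = (2 / 7, 3 / 7, 6 / 7)          # rotation axis (unit vector)
phi = 0.9                          # rotation angle
v = (1.0, -2.0, 0.5)               # vector to be rotated

R = rotation_matrix(n, phi)
rotated_matrix = matmul(matmul(R, sigma_dot(v)), dagger(R))

rotation_matches = all(abs(x - y) < 1e-12
                       for x, y in zip(vector_of(rotated_matrix), rotate(v, n, phi)))

# R is unitary: R R^dagger is the unit matrix.
RRd = matmul(R, dagger(R))
is_unitary = all(abs(RRd[i][j] - (1 if i == j else 0)) < 1e-12
                 for i in range(2) for j in range(2))
```

Note that the matrices $\mathbf{R}$ and $-\mathbf{R}$ give the same rotated vector, because $\mathbf{R}$ enters the transformation law twice.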

In summary, there are two parallel formalisms in SU(2), one for the vectors and one for the group elements. In both formalisms, a matrix $\mathbf{V} = [\,\mathbf{v}\cdot\boldsymbol{\sigma}\,]$ can occur, but with different meanings. In a formalism for group elements, $\mathbf{v}$ fulfils the rôle of the unit vector $\mathbf{a}$ that defines the reflection A, such that we must have $|\mathbf{v}| = 1$, and then the reflection matrix $\mathbf{A}$ transforms according to $\mathbf{A} \to \mathbf{G}\mathbf{A}$ under a group element g with matrix representation $\mathbf{G}$. The new group element that is represented by $\mathbf{G}\mathbf{A}$ will then, in general, no longer be a reflection that can be associated with a unit vector, as was the case for $\mathbf{A}$. In a formalism of vectors, $|\mathbf{v}|$ can be different from 1, and the matrix $\mathbf{V}$ (which now represents a vector) transforms according to $\mathbf{V} \to \mathbf{G}\mathbf{V}\mathbf{G}^{-1} = \mathbf{G}\mathbf{V}\mathbf{G}^{\dagger}$ for a rotation g. Here $\mathbf{G}\mathbf{V}\mathbf{G}^{\dagger}$ can be associated again with a vector.

We cannot emphasize enough that the vector formalism is a parallel formalism that is different from the one for reflections, because the reflections that are defined by $\mathbf{a}$ and $-\mathbf{a}$ are equivalent, while the vectors $\mathbf{a}$ and $-\mathbf{a}$ are not. Here, we have two concepts that are algebraically identical but geometrically different, and this is the source of a lot of confusion. The folklore that one must rotate a wave function by $4\pi$ to obtain the same wave function again is part of that confusion. The reflection operator A is a thing that is entirely different from the unit vector $\mathbf{a}$, even if their expressions are algebraically identical. By rotating a reflection plane over an angle $\pi$, we obtain the same reflection, while it takes rotating over an angle $2\pi$ to obtain the same vector $\mathbf{a}$.

Remark 13. Both in the representation matrices $[\,\mathbf{a}\cdot\boldsymbol{\sigma}\,]$ for reflections A and $[\,\mathbf{v}\cdot\boldsymbol{\sigma}\,]$ for vectors $\mathbf{v}$, the quantities $\sigma_x$, $\sigma_y$, $\sigma_z$ are the three Pauli matrices. In the representation defined by Equation (27), the Pauli matrices are just the images, i.e., the coding of the three basis vectors $\mathbf{e}_x, \mathbf{e}_y, \mathbf{e}_z$. As clearly indicated in the diagram of Equation (5), $\boldsymbol{\sigma}$ is a shorthand for the triple $(\sigma_x, \sigma_y, \sigma_z)$. The use of the symbol $[\;]$ serves to draw attention to the fact that the notation $[\,\mathbf{v}\cdot\boldsymbol{\sigma}\,]$ is a purely conventional shorthand for $v_x\sigma_x + v_y\sigma_y + v_z\sigma_z$, which codes the vector $\mathbf{v}$ within the formalism. Thus, it is analogous to writing $\mathbf{v} = v_x\mathbf{e}_x + v_y\mathbf{e}_y + v_z\mathbf{e}_z$ pedantically as $\mathbf{v} = (v_x, v_y, v_z)\cdot(\mathbf{e}_x, \mathbf{e}_y, \mathbf{e}_z)$. The danger of using the convenient shorthand is that it conjures up the image of a scalar product, while there is no scalar product whatsoever. The fact that $[\,\mathbf{v}\cdot\boldsymbol{\sigma}\,]$ represents the vector $\mathbf{v}$, and that the Pauli matrices just represent the basis vectors $\mathbf{e}_x, \mathbf{e}_y, \mathbf{e}_z$, was clearly stated by Cartan, but physicists have nevertheless read into (hineininterpretiert) the vector $[\,\mathbf{B}\cdot\boldsymbol{\sigma}\,]$ a scalar product $\mathbf{B}\cdot\boldsymbol{\mu}$ in the theory of the anomalous g-factor for the electron. Here, $\boldsymbol{\mu}$ would be the magnetic dipole of the electron and $-\boldsymbol{\mu}\cdot\mathbf{B}$ its potential energy with the magnetic field $\mathbf{B}$. In reality, $[\,\mathbf{B}\cdot\boldsymbol{\sigma}\,]$ just expresses the magnetic-field pseudo-vector $\mathbf{B}$. The quantity $\boldsymbol{\sigma}$ can never represent the spin, because it is already defined in Euclidean geometry before we apply this geometry to the physics where we want to consider spin. This reveals that physicists do not only use spinors like vectors: they also use vectors like scalars. We have fully discussed and tidied up this problem in [23], where we have proposed a better interpretation of the Stern–Gerlach experiment.

Remark 14. A similar confusion arises in the definition of the helicity of the neutrino [19], pp. 105–106, Equation (5.30), [32]. It is defined, up to a constant factor, through $[\,\mathbf{u}\cdot\boldsymbol{\sigma}\,]$, with $\mathbf{u}$ the unit vector along the momentum, and claimed to be “the projection” of the “spin” on the unit vector $\mathbf{u}$. This is again a confusion between the shorthand notation for the representation of the vector $\mathbf{u}$ and a true scalar product. As just mentioned, in reality, $[\,\mathbf{u}\cdot\boldsymbol{\sigma}\,]$ just represents the unit vector $\mathbf{u}$. The constant factor has been added only due to the confusion and the belief that the corresponding multiple of $\boldsymbol{\sigma}$ would then be the spin operator, which it is not. There is absolutely no reference to spin whatsoever in the operator $[\,\mathbf{u}\cdot\boldsymbol{\sigma}\,]$. The definition leads to a confusing discussion about the difference between helicity and chirality in textbooks. This example shows that physicists cannot deny that they have considered $[\,\mathbf{B}\cdot\boldsymbol{\sigma}\,]$ and $[\,\mathbf{u}\cdot\boldsymbol{\sigma}\,]$ as true scalar products.

2.8. Justifying the Introduction of a Clifford Algebra

The author worked out the entire content of the present paper from scratch, because he found the textbook presentations impenetrable. The author has also not studied books on Clifford algebra [34] in depth, so some works may well provide the motivation we try to give here, which we were unable to find in textbooks. Our criticism is based on the observation that, very often, mathematical objects that algebraically look identical are, in reality, entirely different geometrical objects. We have seen that we can introduce representations for vectors into the formalism by extrapolating the meaning of the algebra of the representations of reflection operators L. We have seen how confusing L and through the algebraic identity of their representation matrices can trap us into a conceptual impasse of trying to give geometrical meaning to mindless algebra. This is not the end of the story. Whereas it is meaningful in group theory to consider the product of two reflections B and A and the corresponding representation matrix , it is a priori not defined what the purely formal product of two vectors and defined by is supposed to mean. Here, again, entirely different geometrical objects are represented by identical algebraic expressions. We have learned definitions for and for , but not for . However, inspection of the algebra reveals that:

an algebraic identity that we used in deriving Equation (8). Here, we recognize the familiar quantities and . Whereas this kind of algebra is meaningful for reflection matrices, it is a priori not meaningful for vectors. It can be given a meaning a posteriori in terms of vectors, at the risk of introducing confusion by ignoring the fact that the vector formalism is a parallel formalism, as we clearly outlined from the outset. Based on this confusion, one can then obtain a formalism in which one sums quantities that are not of the same type, by writing expressions of the type:

as a shorthand for Equation (46). What Clifford algebra does is to define manu militari that such expressions are meaningful as an algebra on multi-vectors. In general, such a definition is introduced out of the blue. By focusing on the purely algebraic part of the formalism, it is possible to confuse the vectors and the reflection matrices . This has several drawbacks. First of all, it is puzzling for the reader to understand where this idea comes from, because the algebra adds quantities of different symmetries and dimensions. All at once, the reader is taught that, from now on, one can add kiwis and bananas, after having been told his whole life that this cannot be done. Moreover, this is done tacitly, as though it were not a problem at all. Nothing is done to ease the bewilderment of a critical reader. He is only laconically taught how to get used to it without asking further questions. The algebra is just rolled out, such that the reader can learn to imitate it mindlessly. As this is rather easy, the reader will quickly become acquainted with it, such that the justified initial questions will be silenced. However, this procedure takes an algebraic shortcut that bypasses the full geometrical explanation by exploiting algebraic coincidences.

The second problem is that, after the introduction of the definition of the Clifford algebra with its cuisine of adding kiwis and bananas, all the geometry of the rotations seems to follow effortlessly from this definition in an extremely elegant way. This gives the impression that everything is derived by magic from thin air, which leaves one wondering. In fact, the only vital ingredient needed to obtain this powerful and elegant formalism seems to be the impenetrable sleight of hand of adding kiwis and bananas.

Admittedly, our presentation looks somewhat more cumbersome and less elegant than the approach that takes off from the definition of the Clifford algebra in grand style. However, that elegant grand style is only a shortcut that bypasses the detailed explanation, and it is obtained by sweeping some of the more tedious parts under the carpet. The strong point of our approach is that it provides the detailed geometrical motivation for the complete Clifford algebra. An interesting feature that also exists in our approach is that we can consider all kinds of products:

The worked-out algebra contains expressions that correspond to hyper-parallelepipeds and other quantities of various dimensions (that can be symmetrical or anti-symmetrical). The symmetry is signalled by the presence or absence of a factor ı. These quantities transform under a rotation to:

Thus, the ability to rotate all of these quantities within a single formalism is not an asset exclusive to Clifford algebra: it also exists in our exploratory approach. We see that, by formalizing the algebra for the sake of elegance, we can obtain a very abstract formulation whereby we completely lose sight of the clear geometrical ideas. Mathematicians would argue that this does not matter. However, the problem is that now confusion reigns. And when the cat is away, the mice will play. The abstraction makes it easy to extrapolate the algebra in a meaningless way beyond the limits defined by its geometrical meaning, e.g., by introducing linear combinations of spinors. From that point on, the framework may contain some well-hidden logical nonsense, as taking linear combinations of spinors is not a procedure that can be taken for granted. The structure that results from this transgression is the very elegant Hilbert space formalism of QM. This is now highly abstract, and any obvious link with the original geometrical meaning has been completely flushed away. This favours an attitude where calculating becomes much more important than thinking. As a matter of fact, in QM, the leitmotif has become to “shut up and calculate”. And after the whole geometrical meaning of the formalism has been hidden away in this way, a physicist may enter the room and say: “I have a beautiful formalism that grinds out theoretical predictions which agree with the experimental data to unprecedented precision, but I just cannot figure out what it means.”