1. Introduction

Tomato is grown all over the world and is rich in nutritional value [1]. Most studies have shown that the intake of tomatoes or tomato products has an inhibitory effect on some cancers (such as lung cancer and gastric cancer) and is beneficial for multiple health outcomes in humans [2,3]. However, during cultivation, tomatoes are affected by climate, environment, pests, and other factors and can become infected with diseases of different kinds and degrees. These diseases are highly infectious, spread widely, hinder the growth of tomatoes, and reduce the yield and quality of the fruit. It is therefore of great significance to identify tomato diseases accurately so that early prevention and timely treatment can be carried out. With the development of deep learning, convolutional neural networks (CNNs) have made major breakthroughs in the field of disease recognition. Many researchers [4,5,6,7,8] have improved the architecture of neural network models and applied them to the recognition of plant diseases with good results. However, a neural network with good performance usually has a large number of parameters, and a large amount of data is required to train these parameters properly. Obtaining accurate disease data requires manual collection and labeling, which are time-consuming, labor-intensive, and error-prone. Therefore, in the field of tomato disease recognition, it is often impossible to obtain enough data to train the neural network, which is also the main factor limiting further improvement of tomato disease recognition accuracy.

In the field of computer vision, data augmentation is a typical and effective way to deal with small data sets, and it includes supervised and unsupervised methods. The unsupervised data augmentation method expands samples by geometric transformation [9,10,11,12] (such as flipping, cropping, rotation, and scaling). It is simple and easy to apply, so it is widely used in the data processing of diseased leaves. Liu et al. [13] used conventional image rotation, brightness adjustment, and principal component analysis to expand the image set, which addressed the shortage of apple disease images and achieved good recognition results. Abas et al. [14] used rotation, translation, scaling, and other methods to enhance plant image data and used an improved VGG16 network to classify plants, so as to reduce the overfitting caused by too few plant samples. This kind of method can generate a large amount of data and solves the problem of insufficient data to a certain extent, but it still has the following shortcomings: images expanded by geometric transformation contain little new information and are temporary, and some expanded images are not conducive to the training of the model, are not applicable to some tasks, and may even reduce the performance of the model. At the same time, because the generated data are highly random and have no consistent target, a large number of redundant samples are produced. In order to generate images that are more beneficial to specific tasks, learning-based unsupervised image generation methods have been proposed [15,16,17,18]. These methods apply different sequences of geometric transformations to images, use reinforcement learning to find the combination that most benefits the classification results, and adjust it adaptively according to the current training iteration, finally producing a customized, high-performance data augmentation plan. However, such methods are time-consuming and resource-intensive to train, so they have limitations on some tasks.
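The geometric transformations described above can be sketched in a few lines. The following is only a minimal illustration using plain nested lists as grayscale images; the function names, the 50% flip probability, and the choice of 90-degree rotation steps are assumptions made for this example and are not taken from the cited works.

```python
import random


def flip_horizontal(img):
    """Mirror each row of the image left to right."""
    return [row[::-1] for row in img]


def rotate_90(img):
    """Rotate the image 90 degrees clockwise."""
    return [list(col) for col in zip(*img[::-1])]


def random_crop(img, height, width, rng):
    """Cut a height x width window at a random position."""
    top = rng.randrange(len(img) - height + 1)
    left = rng.randrange(len(img[0]) - width + 1)
    return [row[left:left + width] for row in img[top:top + height]]


def augment(img, n_samples, crop_size, seed=0):
    """Produce n_samples randomly flipped, rotated, and cropped copies.

    Assumes crop_size is no larger than the shorter side of img.
    """
    rng = random.Random(seed)
    samples = []
    for _ in range(n_samples):
        sample = img
        if rng.random() < 0.5:  # illustrative flip probability
            sample = flip_horizontal(sample)
        for _ in range(rng.randrange(4)):  # 0 to 3 quarter turns
            sample = rotate_90(sample)
        samples.append(random_crop(sample, crop_size, crop_size, rng))
    return samples
```

Because every output is a rearrangement of pixels already present in the input, the sketch also makes the limitation noted above concrete: the transformed copies add little information that the original image did not contain.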

The generative adversarial network (GAN) [19] is another unsupervised data augmentation method. It has been proven able to generate images with a distribution similar to that of the original data, showing groundbreaking performance in image generation [20,21,22,23]. Clément et al. [24] used a GAN-based image augmentation method to enhance the training data and segmented apple disease on tree crowns with a smaller data set. Compared with the results without image generation, the F1 value increased by 17%. The deep convolutional generative adversarial network (DCGAN) [25] used a convolutional neural network (CNN) to replace the multilayer perceptron in GAN to improve the quality of the generated images. Purbaya et al. [26] used a regularization method to improve DCGAN and generated plant leaf data to prevent the model from overfitting. Although DCGAN improves the quality of the generated images, it cannot control the classes of the generated images, which limits the generation of multi-class images. To this end, the generative adversarial network with an auxiliary classifier (ACGAN) [27] was proposed. It adds class information constraints to the generator and an auxiliary classifier to the discriminator, so that the discriminator can classify the output image and guide the generator to generate images of different classes. In addition, in order to generate higher-quality data, many new GAN-based architectures have been proposed and applied to the field of plant science [25,28,29,30,31,32]. To address insufficient data for apple diseases, Tian et al. [33] used CycleGAN [34] to generate images, which enriched the diversity of the training data. Zhu et al. [35] proposed a data augmentation method based on cGAN, adding condition information to the input of the generator to control the class of the generated image. In addition, dense connections were added to the generator and discriminator to enhance the information input of each layer and significantly improve the classification performance. Liu et al. [36] added dense connections to GAN and improved the loss function to generate grape leaves, achieving better recognition results with the same recognition network. However, the goal of GAN is to generate images that are as realistic as possible. It learns the overall information of the image while ignoring the key local disease information, and so cannot generate images with clear disease spots. At the same time, due to the lack of detailed texture, most of the generated images are of poor quality.
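As a point of reference for the ACGAN design mentioned above, its objective is commonly written as a source term and a class term, following the formulation in [27]. The symbols used here (S for the real/fake source, C for the class, z for the noise vector, G for the generator) are introduced for illustration and are not notation from this section.

```latex
\begin{aligned}
L_S &= \mathbb{E}\left[\log P(S=\text{real}\mid X_{\text{real}})\right]
     + \mathbb{E}\left[\log P(S=\text{fake}\mid X_{\text{fake}})\right],\\
L_C &= \mathbb{E}\left[\log P(C=c\mid X_{\text{real}})\right]
     + \mathbb{E}\left[\log P(C=c\mid X_{\text{fake}})\right],\\
X_{\text{fake}} &= G(c, z).
\end{aligned}
```

In this formulation the discriminator is trained to maximize $L_S + L_C$ and the generator to maximize $L_C - L_S$, which is how the auxiliary classifier steers the generator toward the requested class.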

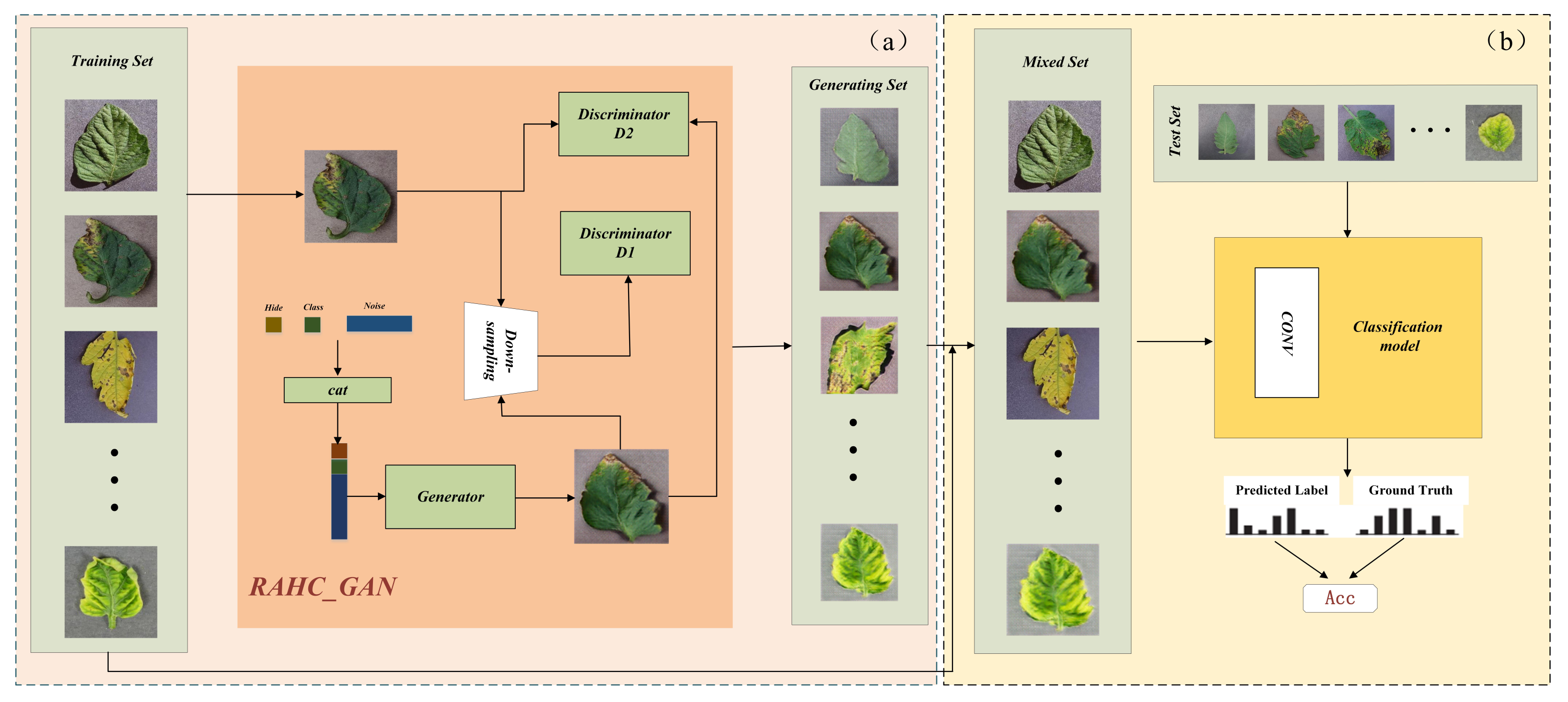

To address both the insufficient data for tomato disease identification and the insufficient disease features in expanded data, this paper proposes RAHC_GAN, an improved ACGAN method for data augmentation (Figure 1a), and uses it to build an expanded data set consisting of original data and generated data (Figure 1b), so as to supply the data required for neural network training and improve the performance of the classifier in tomato leaf disease recognition. The innovations and contributions are as follows:

Adding a set of continuous hidden variables to the input of the generative adversarial network to improve the diversity of the generated images. Because the intra-class differences of the same tomato disease are small, a traditional generative adversarial network has difficulty learning them and tends to generate similar images, resulting in mode collapse [37]. To avoid this, we use the hidden variable together with the class label to generate tomato leaves with different diseases. The class label specifies the disease class to generate, and the hidden variable models the potential variation within the same class. For each class of disease, we capture the potential differences within the class, such as the area size and severity of the disease, by changing the value of the hidden variable, so as to supplement the information within the class and enrich the diversity of the generated images.

Fusing residual attention blocks with a multi-scale discriminator to enrich the disease information of generated images. We add residual attention blocks to the generator. The residual connections allow a deeper network while avoiding network degradation. The attention mechanism makes the generator pay more attention to the disease information in the leaves along both the channel and spatial dimensions and guides the generation of tomato diseased leaves with obvious disease features. In addition, we introduce a multi-scale discriminator, which captures different levels of information in the image, enriches the texture and edges of the generated leaves, and makes the generated leaves more complete, richer in detail, and clearer in texture.

Using RAHC_GAN to expand the training set to meet the large amount of data needed for neural network training. We use the expanded data set as the training set, train four kinds of recognition networks (AlexNet, VGGNet, GoogLeNet, and ResNet) through transfer learning, and evaluate them on the test set. The experimental results show that, across these recognition networks, training on the expanded data set gives better performance than training on the original training set.
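The first contribution, feeding the generator a class label together with continuous hidden variables, can be illustrated with a small sketch. This is not the RAHC_GAN implementation: the function names, the Gaussian noise, the uniform range of the hidden variables, and the simple concatenation of the three parts are all assumptions made for illustration.

```python
import random


def one_hot(label, n_classes):
    """Encode a disease class index as a one-hot vector."""
    return [1.0 if i == label else 0.0 for i in range(n_classes)]


def sample_inputs(label, n_classes, noise_dim, code_dim, rng):
    """Draw the three parts of a generator input.

    noise: Gaussian noise (assumed distribution)
    label_vec: one-hot class label, selects the disease class
    code: continuous hidden variables, assumed uniform in [-1, 1]
    """
    noise = [rng.gauss(0.0, 1.0) for _ in range(noise_dim)]
    label_vec = one_hot(label, n_classes)
    code = [rng.uniform(-1.0, 1.0) for _ in range(code_dim)]
    return noise, label_vec, code


def generator_input(noise, label_vec, code):
    """Concatenate the parts into one input vector (assumed layout)."""
    return noise + label_vec + code


def sweep_code(noise, label_vec, code, index, values):
    """Hold noise and class fixed and vary one hidden variable."""
    return [
        generator_input(noise, label_vec, code[:index] + [v] + code[index + 1:])
        for v in values
    ]
```

Sweeping a single hidden variable while the noise and class label stay fixed mirrors the idea described above: the class is unchanged, and only an intra-class factor such as lesion area or severity would be expected to vary in the generated leaf.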

Figure 1. Schematic of the proposed strategy. This strategy consists of two parts: (a) using the proposed method, RAHC_GAN, to generate tomato diseased leaves; (b) the generated data are extended to the training set to train the classifier, so as to improve the disease recognition performance of the classifier.

The rest of this paper is organized as follows: Section 2 introduces related work and the model we proposed. Section 3 shows the experimental results and analyses. Section 4 shows the related discussions. Section 5 contains the conclusions and future prospects.

4. Discussion

Deep learning training requires a large amount of data. When the data are insufficient, a variety of methods can be used for data augmentation, such as flipping and cropping. Unlike these methods, GAN does not require the manual selection of transformation operations; instead, through the confrontation between generator and discriminator, it simulates the distribution of the original data and generates a new group of data. Experimental results show that the extended data generated by GAN can improve the recognition performance of plant diseases. However, because the variability of the images generated by GAN is limited, they cannot capture all the possible changes in the image, so the performance improvement is limited. The images generated by GAN also have limitations: although adding them can improve the recognition performance, adding too many to the training set may distort the original data set and cause performance degradation. Therefore, in future work, we will continue to explore more effective data augmentation methods to generate higher-quality images while maintaining better recognition performance.
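One simple way to act on the observation that too much generated data can hurt is to cap the number of generated samples per class relative to the original ones. The sketch below is only an illustration of that idea; the function name `expand_training_set` and the `max_ratio` parameter are hypothetical and do not describe the procedure used in the experiments.

```python
import random
from collections import defaultdict


def expand_training_set(original, generated, max_ratio=1.0, seed=0):
    """Merge original and generated (sample, label) pairs.

    For each class, at most max_ratio * (number of original samples)
    generated samples are added, so generated data cannot swamp the
    original data. max_ratio is a hypothetical tuning knob.
    """
    rng = random.Random(seed)

    # Group generated samples by class label.
    pool_by_class = defaultdict(list)
    for sample, label in generated:
        pool_by_class[label].append((sample, label))

    # Count original samples per class.
    n_original = defaultdict(int)
    for _, label in original:
        n_original[label] += 1

    expanded = list(original)
    for label, count in n_original.items():
        pool = pool_by_class.get(label, [])
        k = min(len(pool), int(max_ratio * count))
        expanded.extend(rng.sample(pool, k))

    rng.shuffle(expanded)
    return expanded
```

With `max_ratio=0.5`, for example, a class with 100 original images would receive at most 50 generated ones, and the ratio can then be tuned on a validation set.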

In addition, because GAN is itself a neural network, its training also depends on data. When the amount of data in the training set is too small, images of the specified class cannot be generated accurately from noise. Therefore, we will also try image-to-image translation instead of noise-to-image generation for data augmentation.

5. Conclusions

In the training of a recognition network, there is a strong symmetric relationship between sufficient and insufficient training samples. In order to solve the problem of insufficient data, we proposed a generative adversarial network model for tomato disease leaf generation, called RAHC_GAN, and evaluated it on four disease identification networks: AlexNet, VGGNet, GoogLeNet, and ResNet. The experimental results show that the expanded data set can supply the large amount of data required for neural network training and that the data set expanded by RAHC_GAN yields higher recognition accuracy under different classification models. At the same time, we found that under the same experimental settings, VGGNet, the classification network with the most parameters, has the lowest recognition performance among the four networks without data augmentation and shows the most obvious improvement after RAHC_GAN is used for data augmentation. This indicates that for neural networks with a large number of parameters, sufficient data can significantly improve recognition performance.

In comparison with other data augmentation methods, the data set expanded by RAHC_GAN has better performance on the four classification networks, indicating that RAHC_GAN can generate tomato diseased leaf images with obvious disease features from random noise and can effectively solve the problem of insufficient training data. The results of ablation experiments show that in the generation of leaves with the same disease, the newly added hidden variable can learn the potential intra-class change information, so as to enrich the class information of the same disease. The addition of residual attention blocks can make the generator pay attention to the disease area in the image and guide it to generate leaves with obvious disease features. The introduction of a multi-scale discriminator can enrich the detailed texture of the generated images.
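To make the role of the multi-scale discriminator more concrete, the sketch below builds an image pyramid by repeated 2x2 average pooling and scores each level with its own discriminator. The section does not specify how the scales are obtained, so the pooling scheme, the function names, and the averaging of the per-scale scores are assumptions for illustration only.

```python
def downsample_2x(img):
    """Halve the resolution with 2x2 average pooling.

    Odd trailing rows or columns are dropped.
    """
    height = len(img) // 2 * 2
    width = len(img[0]) // 2 * 2
    return [
        [
            (img[r][c] + img[r][c + 1] + img[r + 1][c] + img[r + 1][c + 1]) / 4.0
            for c in range(0, width, 2)
        ]
        for r in range(0, height, 2)
    ]


def image_pyramid(img, n_scales):
    """Return the image at n_scales resolutions, finest first."""
    pyramid = [img]
    for _ in range(n_scales - 1):
        pyramid.append(downsample_2x(pyramid[-1]))
    return pyramid


def multi_scale_score(img, discriminators):
    """Average the scores of one discriminator per pyramid level.

    Each discriminator is any callable mapping an image to a number;
    here they stand in for trained networks.
    """
    pyramid = image_pyramid(img, len(discriminators))
    scores = [d(level) for d, level in zip(discriminators, pyramid)]
    return sum(scores) / len(scores)
```

In such a setup, the discriminator at the finest scale sees texture and edge detail, while the coarser ones judge the overall leaf structure, which matches the intent described above.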

The application of RAHC_GAN on apple, grape, and corn data from the PlantVillage data set shows that the proposed method has good generalization ability. In addition to solving the problem of insufficient data for tomato leaf identification, it can also be used for other plant research tasks lacking diseased leaf data.