Reinforced Neighbour Feature Fusion Object Detection with Deep Learning

Abstract

1. Introduction

2. Related Work

2.1. One-Stage Detection

2.2. Two-Stage Detection

3. Reinforced Neighbour Feature Fusion (RNFF)

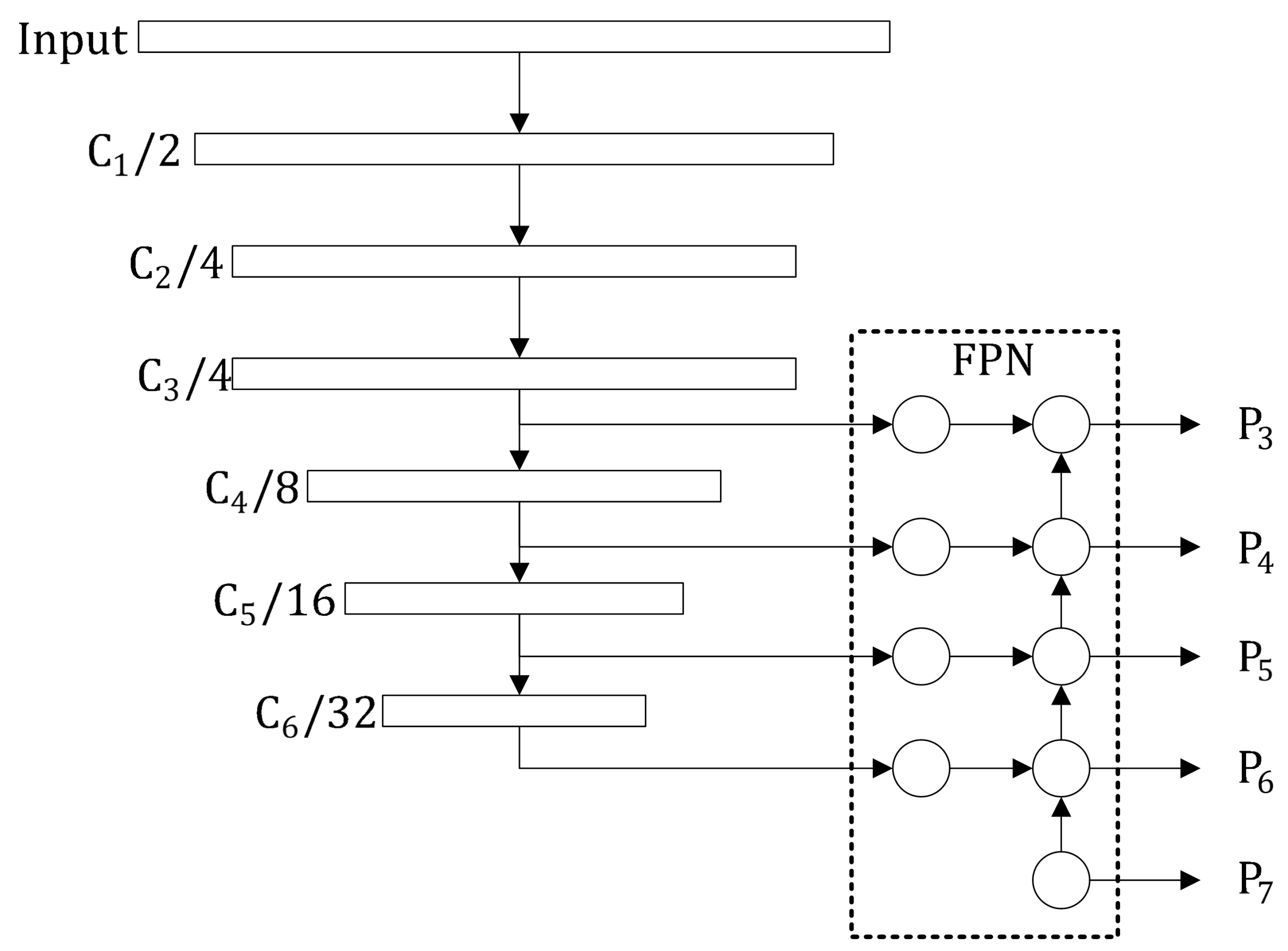

3.1. Neighbour Feature Pyramid Network (NFPN)

3.2. ResNet Region of Interest Feature Extraction (ResRoIE)

3.3. Recursive Feature Pyramid (RFP)

4. Experiments and Results

4.1. Dataset

4.2. Implementation Details

4.3. Module-Wise Ablation Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Method | mAP | AP50 | AP75 | APS | APM | APL |
|---|---|---|---|---|---|---|
| Baseline | 65.2 | 76.1 | 74.8 | 66.8 | 71.0 | 70.5 |
| BFP | 63.9 | 75.9 | 74.0 | 63.4 | 71.2 | 77.9 |
| BiFPN | 58.4 | 70.9 | 69.8 | 55.4 | 65.1 | 83.5 |
| BiFPN × 2 | 49.2 | 59.9 | 58.4 | 49.1 | 56.6 | 78.5 |
| NFPN (ours) | 66.0 | 77.4 | 75.7 | 65.8 | 72.1 | 75.5 |
| ResRoIE + NFPN (ours) | 67.2 | 78.7 | 77.3 | 68.0 | 74.1 | 76.0 |
| NFPN × 2 + ResRoIE (ours) | 69.8 | 78.0 | 76.2 | 66.1 | 72.3 | 80.5 |
| NFPN + RFP + ResRoIE (ours) | 67.5 | 78.8 | 77.5 | 68.3 | 73.8 | 81.0 |

| Method | mAP | AP50 | AP75 | APS | APM | APL |
|---|---|---|---|---|---|---|
| Baseline | 37.4 | 58.1 | 40.4 | 21.2 | 41.0 | 48.1 |
| PANet | 37.5 | 58.6 | 40.8 | 21.5 | 41.0 | 48.6 |
| NFPN (ours) | 37.9 | 58.2 | 41.1 | 21.1 | 41.3 | 49.5 |
| NFPN + ResRoIE (ours) | 39.0 | 59.8 | 42.5 | 23.1 | 42.6 | 50.5 |
| RNFF (ours) | 39.3 | 60.0 | 42.4 | 22.9 | 42.2 | 50.6 |
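
The rows of the table above can be read as a cumulative ablation, with each configuration adding a module to the previous one. The following is a minimal sketch of that reading, using only the mAP column. It assumes that the RNFF row corresponds to NFPN + ResRoIE + RFP, which is inferred from the section structure rather than stated in the table. The names `ABLATION_MAP` and `incremental_gains` are hypothetical helpers introduced here for illustration.

```python
# mAP per configuration, copied from the table above, in the order modules are added.
# Assumption: "RNFF" is NFPN + ResRoIE + RFP, so the last step isolates RFP.
ABLATION_MAP = [
    ("Baseline", 37.4),
    ("NFPN", 37.9),
    ("NFPN + ResRoIE", 39.0),
    ("RNFF", 39.3),
]


def incremental_gains(rows):
    """Return (configuration, mAP gain over the previous row), rounded to one decimal."""
    return [
        (name, round(score - prev_score, 1))
        for (_, prev_score), (name, score) in zip(rows, rows[1:])
    ]


steps = incremental_gains(ABLATION_MAP)
total_gain = round(ABLATION_MAP[-1][1] - ABLATION_MAP[0][1], 1)

for name, gain in steps:
    print(f"{name}: {gain:+.1f} mAP over the previous row")
print(f"Total over baseline: {total_gain:+.1f} mAP")
```

Under this reading, the largest single step in mAP comes from adding ResRoIE on top of NFPN, while the final step to the full RNFF configuration is the smallest.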

| Method | mAP | AP50 | AP75 | APS | APM | APL |
|---|---|---|---|---|---|---|
| Baseline | 37.4 | 58.1 | 40.4 | 21.2 | 41.0 | 48.1 |
| Libra R-CNN | 38.7 | 59.9 | 42.0 | 22.5 | 41.1 | 48.7 |
| Grid R-CNN | 40.4 | 58.5 | 43.6 | 22.7 | 43.9 | 53.0 |
| Guided anchoring | 39.6 | 58.7 | 43.4 | 21.2 | 43.0 | 52.7 |
| GRoIE | 37.5 | 59.2 | 40.6 | 22.3 | 41.5 | 47.8 |
| Libra R-CNN + RNFF (ours) | 39.3 | 59.2 | 42.8 | 22.6 | 42.7 | 51.2 |
| Grid R-CNN + RNFF (ours) | 40.6 | 59.5 | 43.7 | 24.1 | 44.6 | 52.7 |
| Guided anchoring + RNFF (ours) | 40.5 | 59.3 | 44.1 | 23.1 | 44.2 | 54.0 |
| GRoIE + RNFF (ours) | 40.1 | 59.7 | 43.5 | 23.1 | 44.5 | 52.2 |
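
To make the comparison above easier to read, the mAP gain from adding RNFF to each detector can be computed directly from the table. The sketch below is plain arithmetic on the reported mAP values. The names `MAP_SCORES` and `map_gain` are hypothetical and are not part of the original work.

```python
# mAP values copied from the table above: (detector alone, detector + RNFF).
MAP_SCORES = {
    "Libra R-CNN": (38.7, 39.3),
    "Grid R-CNN": (40.4, 40.6),
    "Guided anchoring": (39.6, 40.5),
    "GRoIE": (37.5, 40.1),
}


def map_gain(without_rnff: float, with_rnff: float) -> float:
    """Absolute mAP gain in points, rounded to one decimal to match the table precision."""
    return round(with_rnff - without_rnff, 1)


gains = {name: map_gain(*scores) for name, scores in MAP_SCORES.items()}

# Print detectors from largest to smallest gain.
for name, gain in sorted(gains.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: {gain:+.1f} mAP with RNFF")
```

On these numbers, every listed detector improves in mAP when RNFF is added, by between 0.2 points (Grid R-CNN) and 2.6 points (GRoIE).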

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, N.; Li, Y.; Liu, H. Reinforced Neighbour Feature Fusion Object Detection with Deep Learning. Symmetry 2021, 13, 1623. https://doi.org/10.3390/sym13091623
