Construction of Virtual Interaction Location Prediction Model Based on Distance Cognition

Abstract

1. Introduction

2. Related Work

2.1. Quantitative Analysis of Egocentric Distance Cognition

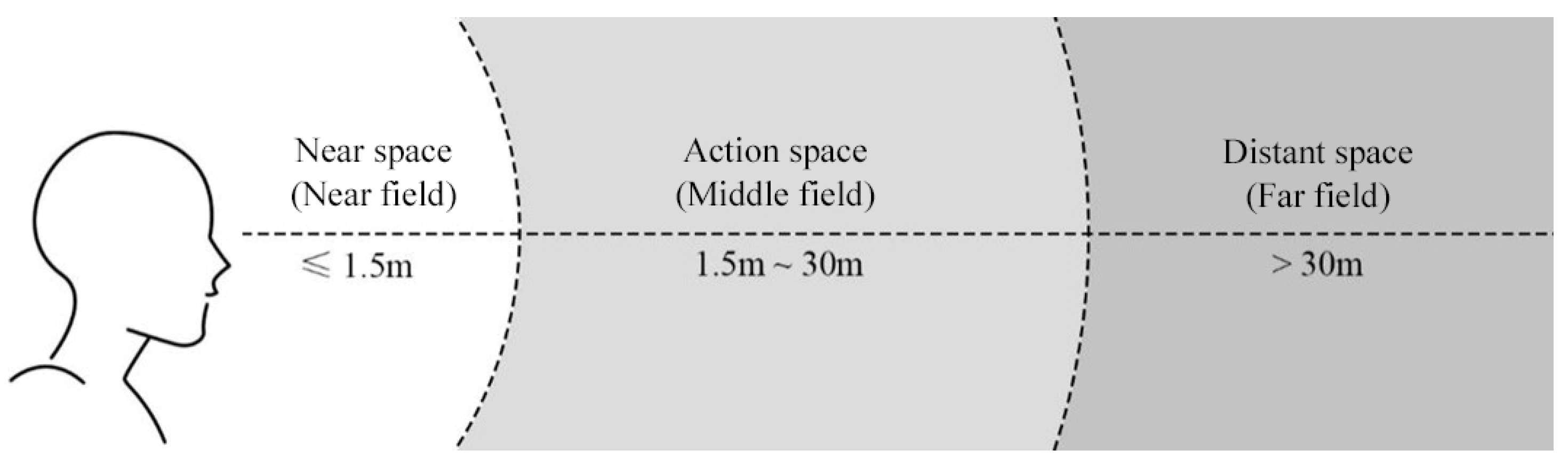

2.1.1. Egocentric Extrapersonal Distance

2.1.2. Egocentric Peripersonal Distance

2.2. Research on Egocentric Distance Cognition

2.3. Factors Influencing Egocentric Distance Cognition

2.3.1. Report Method

2.3.2. Quality of Computer Graphics

2.3.3. Technology of Stereoscopic Display

2.3.4. VR Experience of Participants

2.3.5. Other Factors

3. Virtual Interactive Space Distance Cognition Experiment

3.1. Experimental Equipment and Related Tools

3.2. VR System and Experiment Participants

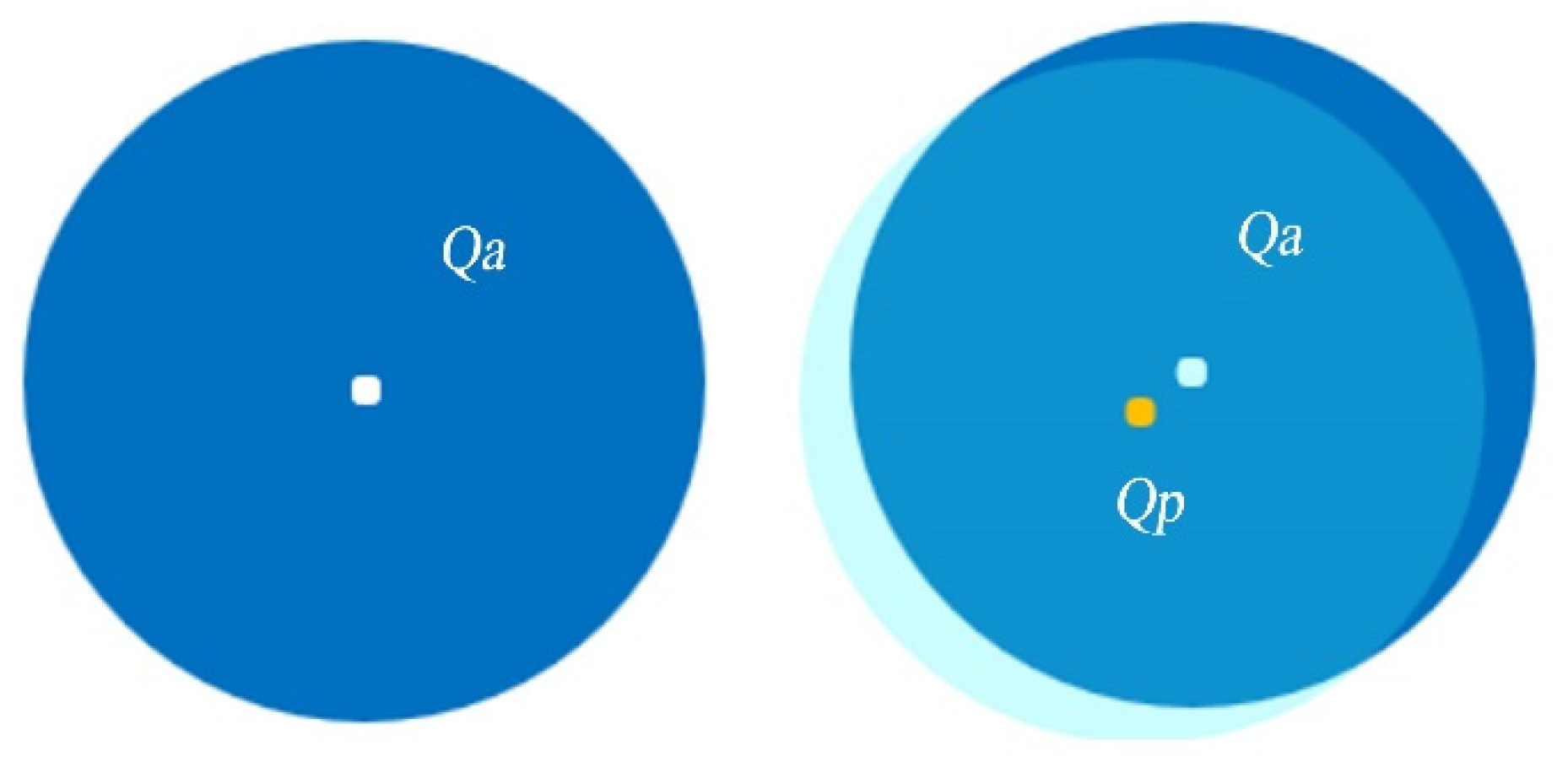

3.3. Experimental Scheme Design

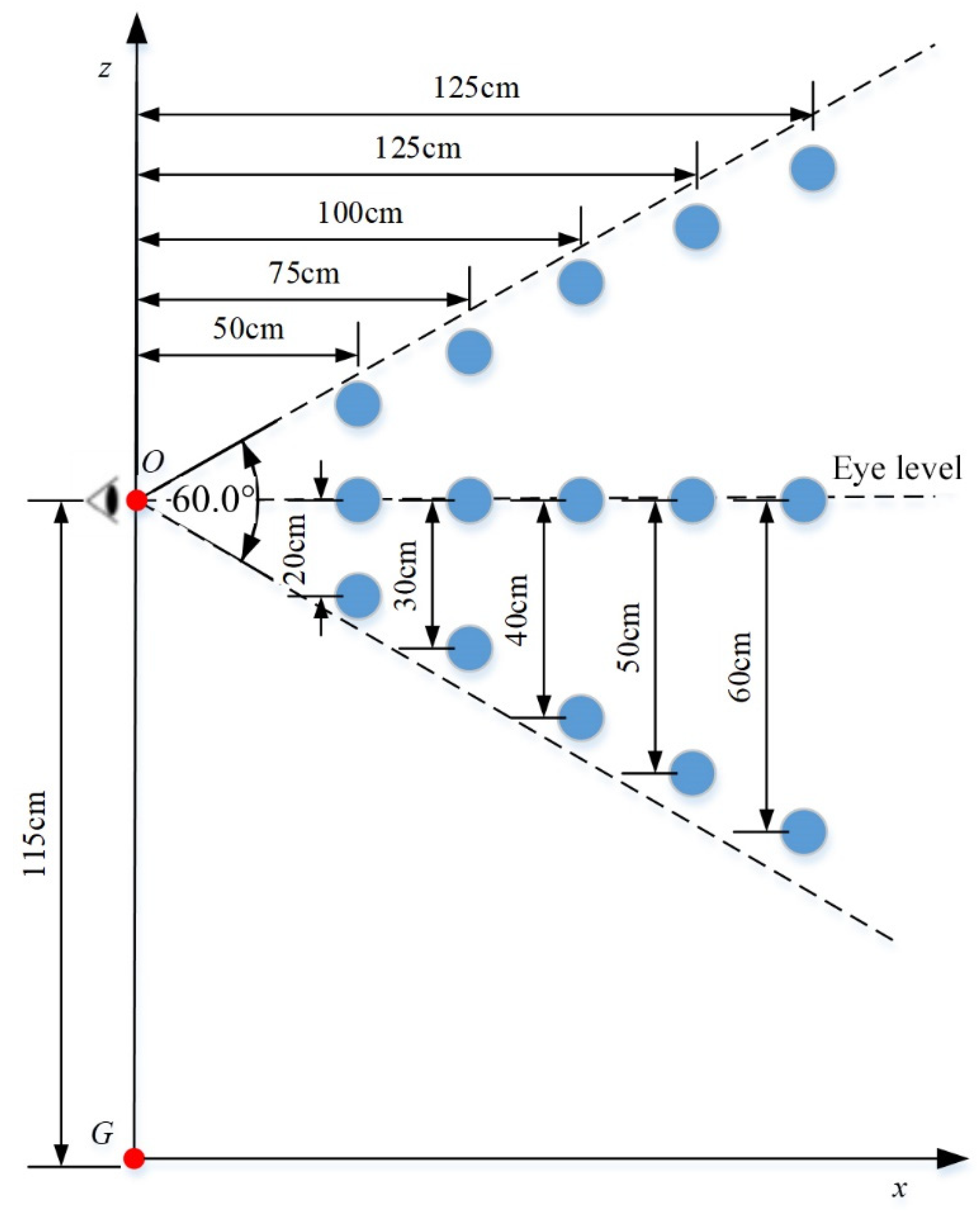

3.4. Experimental Environment Layout

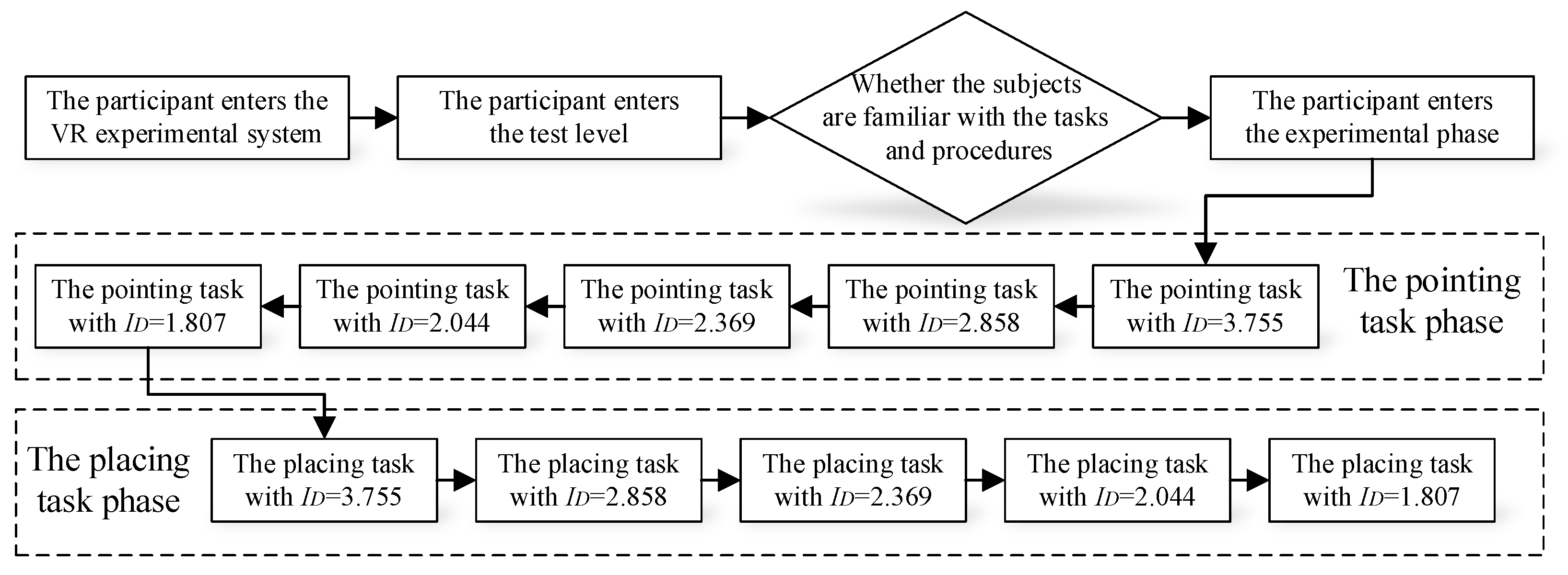

3.5. Experimental Process

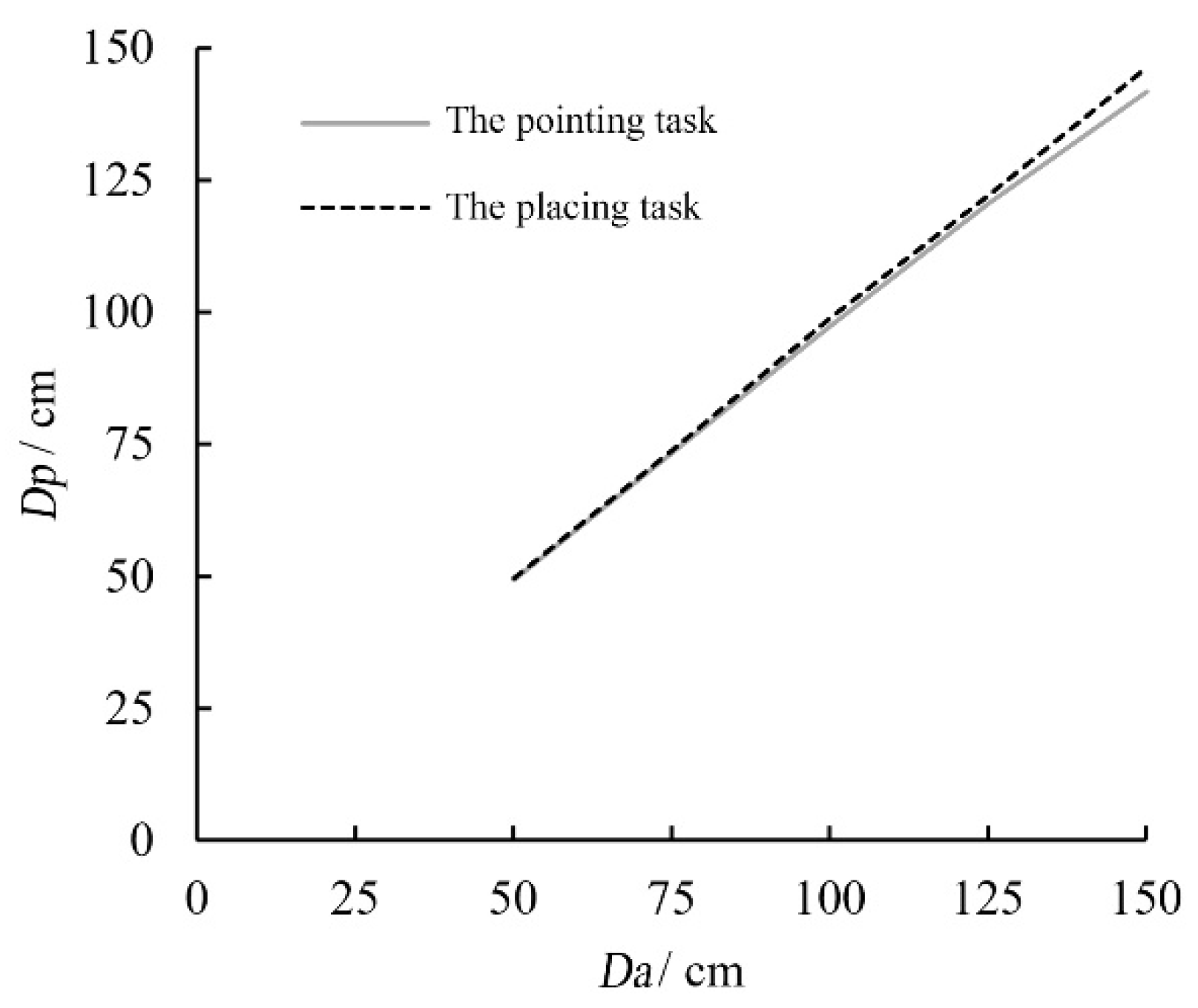

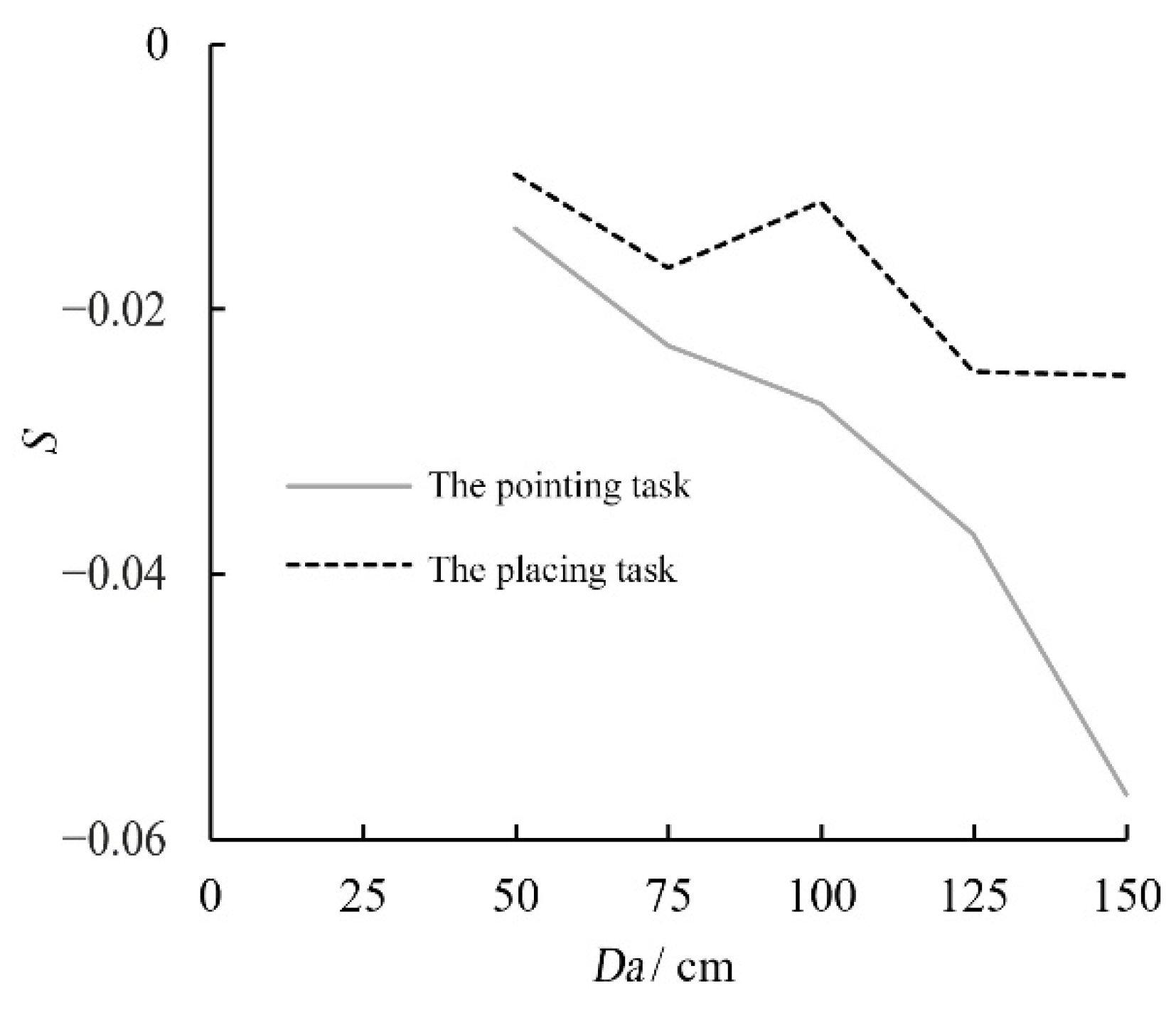

3.6. Analysis of the Experimental Results

3.6.1. The Independent Variable

3.6.2. The Dependent Variable

3.6.3. Cognitive Characteristics of Egocentric Peripersonal Distance

4. Virtual Interaction Location Prediction Model Based on Linear Regression Analysis

4.1. Data Processing

4.2. Data Analysis

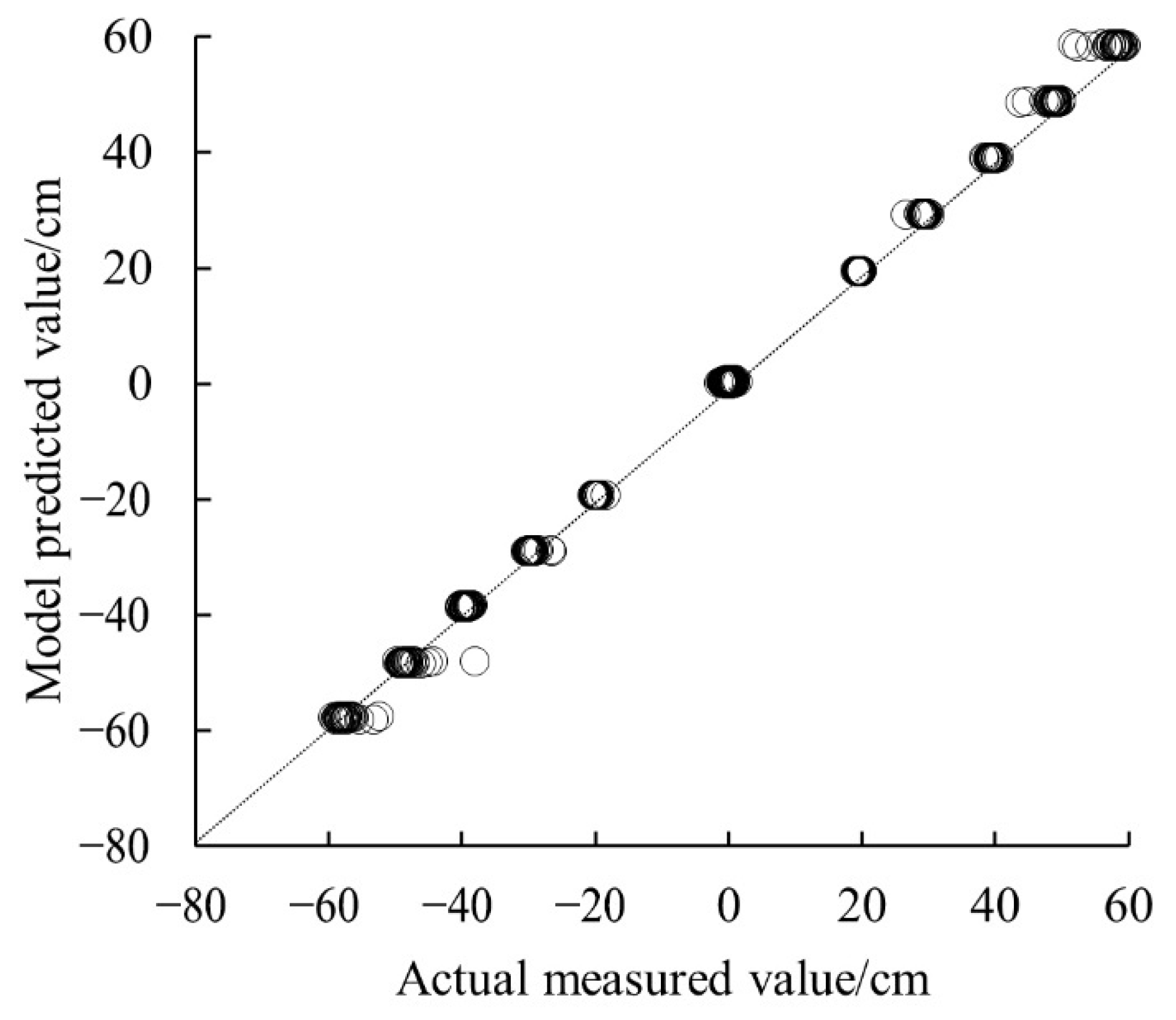

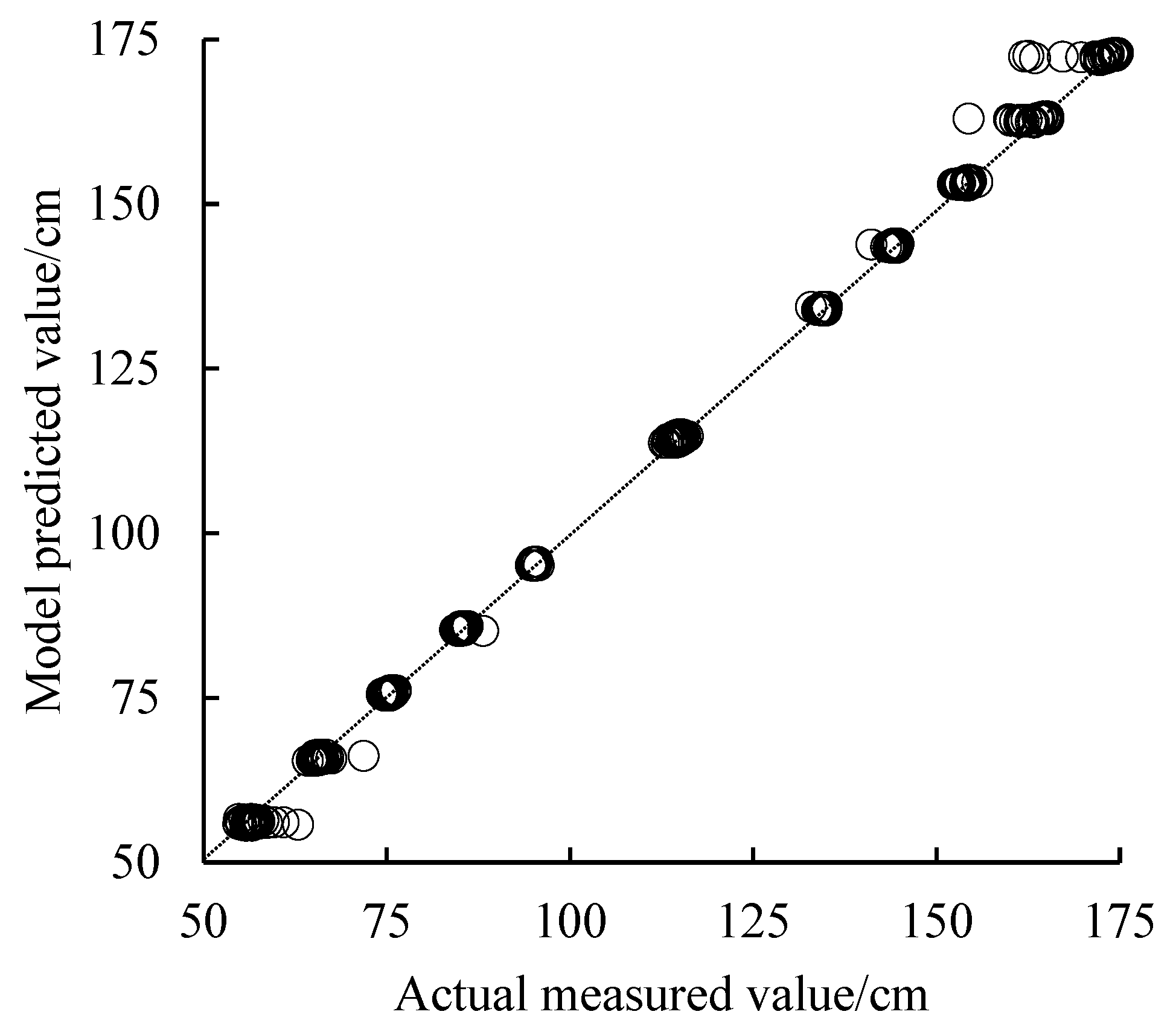

4.3. Construction of Virtual Interaction Location Prediction Regression Model

4.3.1. Sample Characteristics

4.3.2. Test for Collinearity of Independent Variables

4.3.3. Construction of Multiple Regression Model

4.3.4. Construction of Stepwise Regression Model

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Zhao, Z.Y.; Lv, J.; Pan, W.J.; Hou, Y.K.; Fu, Q.W. Research on VR spatial cognition based on virtual reproduction behavior. Chin. J. Eng. Des. 2020, 27, 340–348.

- Paes, D.; Irizarry, J.; Pujoni, D. An evidence of cognitive benefits from immersive design review: Comparing three-dimensional perception and presence between immersive and non-immersive virtual environments. Autom. Constr. 2021, 130, 103849.

- Faure, C.; Limballe, A.; Bideau, B.; Kulpa, R. Virtual reality to assess and train team ball sports performance: A scoping review. J. Sport. Sci. 2020, 38, 192–205.

- Woldegiorgis, B.H.; Lin, C.J.; Liang, W.Z. Impact of parallax and interpupillary distance on size judgment performances of virtual objects in stereoscopic displays. Ergonomics 2019, 62, 76–87.

- Ping, J.; Thomas, B.H.; Baumeister, J.; Guo, J.; Weng, D.; Liu, Y. Effects of shading model and opacity on depth perception in optical see-through augmented reality. J. Soc. Inf. Disp. 2020, 28, 892–904.

- Paes, D.; Arantes, E.; Irizarry, J. Immersive environment for improving the understanding of architectural 3D models: Comparing user spatial perception between immersive and traditional virtual reality systems. Autom. Constr. 2017, 84, 292–303.

- Sahu, C.K.; Young, C.; Rai, R. Artificial intelligence (AI) in augmented reality (AR)-assisted manufacturing applications: A review. Int. J. Prod. Res. 2021, 59, 4903–4959.

- Lin, C.J.; Woldegiorgis, B.H. Interaction and visual performance in stereoscopic displays: A review. J. Soc. Inf. Disp. 2015, 23, 319–332.

- Buck, L.E.; Young, M.K.; Bodenheimer, B. A comparison of distance estimation in HMD-based virtual environments with different HMD-based conditions. ACM Trans. Appl. Percept. (TAP) 2018, 15, 1–15.

- Willemsen, P.; Gooch, A.A.; Thompson, W.B.; Creem-Regehr, S.H. Effects of Stereo Viewing Conditions on Distance Perception in Virtual Environments. Presence (Camb. Mass.) 2008, 17, 91–101.

- Naceri, A.; Chellali, R.; Hoinville, T. Depth perception within peripersonal space using headmounted display. Presence Teleoperators Virtual Environ. 2011, 20, 254–272.

- Armbrüster, C.; Wolter, M.; Kuhlen, T.; Spijkers, W.; Fimm, B. Depth Perception in Virtual Reality: Distance Estimations in Peri- and Extrapersonal Space. Cyberpsychology Behav. 2008, 11, 9–15.

- Nikolić, D.; Whyte, J. Visualizing a new sustainable world: Toward the next generation of virtual reality in the built environment. Buildings 2021, 11, 546.

- Joe Lin, C.; Abreham, B.T.; Caesaron, D.; Woldegiorgis, B.H. Exocentric distance judgment and accuracy of head-mounted and stereoscopic widescreen displays in frontal planes. Appl. Sci. 2020, 10, 1427.

- Gralak, R. A method of navigational information display using augmented virtuality. J. Mar. Sci. Eng. 2020, 8, 237.

- Li, H.; Mavros, P.; Krukar, J.; Hölscher, C. The effect of navigation method and visual display on distance perception in a large-scale virtual building. Cogn. Process. 2021, 22, 239–259.

- Lin, C.J.; Woldegiorgis, B.H.; Caesaron, D. Distance estimation of near-field visual objects in stereoscopic displays. J. Soc. Inf. Disp. 2014, 22, 370–379.

- Lin, C.J.; Woldegiorgis, B.H.; Caesaron, D.; Cheng, L. Distance estimation with mixed real and virtual targets in stereoscopic displays. Displays 2015, 36, 41–48.

- Malbos, E.; Burgess, G.H.; Lançon, C. Virtual reality and fear of shark attack: A case study for the treatment of squalophobia. Clin. Case Stud. 2020, 19, 339–354.

- Makaremi, M.; N’Kaoua, B. Estimation of Distances in 3D by Orthodontists Using Digital Models. Appl. Sci. 2021, 11, 8285.

- Willemsen, P.; Colton, M.B.; Creem-Regehr, S.H.; Thompson, W.B. The effects of head-mounted display mechanical properties and field of view on distance judgments in virtual environments. ACM Trans. Appl. Percept. (TAP) 2009, 6, 1–14.

- El Jamiy, F.; Marsh, R. Survey on depth perception in head mounted displays: Distance estimation in virtual reality, augmented reality, and mixed reality. IET Image Process. 2019, 13, 707–712.

- Cardoso, J.C.S.; Perrotta, A. A survey of real locomotion techniques for immersive virtual reality applications on head-mounted displays. Comput. Graph. 2019, 85, 55–73.

- Yu, M.; Zhou, R.; Wang, H.; Zhao, W. An evaluation for VR glasses system user experience: The influence factors of interactive operation and motion sickness. Appl. Ergon. 2019, 74, 206–213.

- Bremers, A.W.D.; Yöntem, A.Ö.; Li, K.; Chu, D.; Meijering, V.; Janssen, C.P. Perception of perspective in augmented reality head-up displays. Int. J. Hum. Comput. Stud. 2021, 155, 102693.

- Hong, J.Y.; Lam, B.; Ong, Z.T.; Ooi, K.; Gan, W.S.; Kang, J.; Tan, S.T. Quality assessment of acoustic environment reproduction methods for cinematic virtual reality in soundscape applications. Build. Environ. 2019, 149, 1–14.

- Grabowski, A. Practical skills training in enclosure fires: An experimental study with cadets and firefighters using CAVE and HMD-based virtual training simulators. Fire Saf. J. 2021, 125, 103440.

- Harris, D.J.; Buckingham, G.; Wilson, M.R.; Vine, S.J. Virtually the same? How impaired sensory information in virtual reality may disrupt vision for action. Exp. Brain Res. 2019, 237, 2761–2766.

- Hecht, H.; Welsch, R.; Viehoff, J.; Longo, M.R. The shape of personal space. Acta Psychol. 2019, 193, 113–122.

- Fitts, P.M. The information capacity of the human motor system in controlling the amplitude of movement. J. Exp. Psychol. Gen. 1992, 121, 262–269.

- Li, W.; Niu, Z.; Chen, H.; Li, D.; Wu, M.; Zhao, W. Remote estimation of canopy height and aboveground biomass of maize using high-resolution stereo images from a low-cost unmanned aerial vehicle system. Ecol. Indic. 2016, 67, 637–648.

| Distance Cognition Accuracy/% | Real Space | VR Space |
|---|---|---|
| <60 | —— | Willemsen, Thompson, et al. |
| 60~70 | —— | Kelly, et al. |
| 70~75 | —— | Piryankova, Plumert, et al. |
| 75~80 | Plumert, Ziemer, et al. | Jones, Ziemer, Steinicke, Sahm, Messing, Durgin, et al. |
| 80~85 | —— | Iosa, Nguyen, Jones, et al. |
| 85~90 | —— | Mohler, et al. |
| 90~95 | Thompson, Willemsen, et al. | Ahmed, Kunz, et al. |
| 95~100 | Wu, Ahmed, Steinicke, Sahm, Sinai, Jones, Messing, Durgin, et al. | Takahashi, et al. |
| 100~110 | Fukusima, Piryankova, et al. | Lin, et al. |
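The table above groups published results by distance cognition accuracy. Distance-perception studies commonly express this accuracy as the judged distance divided by the actual distance, times 100. The sketch below assumes that definition, because the surviving text does not state the paper's formula, and maps a value onto the table's bands. The function names are hypothetical, as is the edge convention (lower bound inclusive, upper bound exclusive).

```python
# Sketch only. ASSUMPTION: accuracy (%) = judged distance / actual distance * 100,
# a common convention in distance-perception studies; the paper's own formula
# is not in the surviving text. Function names are hypothetical.

# (exclusive upper bound in %, band label as written in the table)
BANDS = [
    (60.0, "<60"),
    (70.0, "60~70"),
    (75.0, "70~75"),
    (80.0, "75~80"),
    (85.0, "80~85"),
    (90.0, "85~90"),
    (95.0, "90~95"),
    (100.0, "95~100"),
    (110.0, "100~110"),
]


def accuracy_percent(judged_cm, actual_cm):
    """Judged distance expressed as a percentage of the actual distance."""
    if actual_cm <= 0:
        raise ValueError("actual distance must be positive")
    return judged_cm / actual_cm * 100.0


def accuracy_band(percent):
    """Return the table band containing `percent` (lower edge inclusive)."""
    for upper, label in BANDS:
        if percent < upper:
            return label
    return ">=110"  # beyond the range covered by the table


if __name__ == "__main__":
    # Hypothetical trial: a target 200 cm away judged to be at 170 cm.
    p = accuracy_percent(170.0, 200.0)
    print(f"{p:.1f} % falls in band {accuracy_band(p)}")
```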

| ID | A/cm | W/cm |
|---|---|---|
| 3.755 | 25 | 2 |
| 3.755 | 50 | 4 |
| 3.755 | 75 | 6 |
| 3.755 | 100 | 8 |
| 3.755 | 125 | 10 |
| 2.858 | 25 | 4 |
| 2.858 | 50 | 8 |
| 2.858 | 75 | 12 |
| 2.858 | 100 | 16 |
| 2.858 | 125 | 20 |
| 2.369 | 25 | 6 |
| 2.369 | 50 | 12 |
| 2.369 | 75 | 18 |
| 2.369 | 100 | 24 |
| 2.369 | 125 | 30 |
| 2.044 | 25 | 8 |
| 2.044 | 50 | 16 |
| 2.044 | 75 | 24 |
| 2.044 | 100 | 32 |
| 2.044 | 125 | 40 |
| 1.807 | 25 | 10 |
| 1.807 | 50 | 20 |
| 1.807 | 75 | 30 |
| 1.807 | 100 | 40 |
| 1.807 | 125 | 50 |
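The Shannon formulation of Fitts' index of difficulty, ID = log2(A/W + 1), reproduces every ID value in the table. Fitts' original log2(2A/W) does not. For example, A = 25 cm and W = 2 cm give log2(13.5) ≈ 3.755 bits. The short check below recomputes each row. It verifies the arithmetic only and makes no claim about how the authors generated the table.

```python
import math


def index_of_difficulty(a_cm, w_cm):
    """Shannon formulation of Fitts' index of difficulty, in bits."""
    return math.log2(a_cm / w_cm + 1.0)


# (A, W) pairs in cm from the table, grouped by the reported ID.
TABLE = {
    3.755: [(25, 2), (50, 4), (75, 6), (100, 8), (125, 10)],
    2.858: [(25, 4), (50, 8), (75, 12), (100, 16), (125, 20)],
    2.369: [(25, 6), (50, 12), (75, 18), (100, 24), (125, 30)],
    2.044: [(25, 8), (50, 16), (75, 24), (100, 32), (125, 40)],
    1.807: [(25, 10), (50, 20), (75, 30), (100, 40), (125, 50)],
}

if __name__ == "__main__":
    for reported, pairs in TABLE.items():
        for a, w in pairs:
            computed = index_of_difficulty(a, w)
            # Each row keeps A/W constant, so all five rows share one ID.
            assert round(computed, 3) == reported, (a, w, computed)
        a0, w0 = pairs[0]
        print(f"ID {reported}: A/W = {a0 / w0:g}")
```

Each ID level fixes the ratio A/W (12.5, 6.25, about 4.17, 3.125 and 2.5), so scaling A and W together changes target size and amplitude without changing difficulty.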

Qa/cm

| x (50 cm) | y (50 cm) | z (50 cm) | x (75 cm) | y (75 cm) | z (75 cm) | x (100 cm) | y (100 cm) | z (100 cm) | x (125 cm) | y (125 cm) | z (125 cm) | x (150 cm) | y (150 cm) | z (150 cm) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 50 | −20 | 135 | 75 | −30 | 145 | 100 | −40 | 155 | 125 | −50 | 165 | 150 | −60 | 175 |
| 50 | 0 | 135 | 75 | 0 | 145 | 100 | 0 | 155 | 125 | 0 | 165 | 150 | 0 | 175 |
| 50 | 20 | 135 | 75 | 30 | 145 | 100 | 40 | 155 | 125 | 50 | 165 | 150 | 60 | 175 |
| 50 | 20 | 115 | 75 | 30 | 115 | 100 | 40 | 115 | 125 | 50 | 115 | 150 | 60 | 115 |
| 50 | 0 | 115 | 75 | 0 | 115 | 100 | 0 | 115 | 125 | 0 | 115 | 150 | 0 | 115 |
| 50 | −20 | 115 | 75 | −30 | 115 | 100 | −40 | 115 | 125 | −50 | 115 | 150 | −60 | 115 |
| 50 | −20 | 95 | 75 | −30 | 85 | 100 | −40 | 75 | 125 | −50 | 65 | 150 | −60 | 55 |
| 50 | 0 | 95 | 75 | 0 | 85 | 100 | 0 | 75 | 125 | 0 | 65 | 150 | 0 | 55 |
| 50 | 20 | 95 | 75 | 30 | 85 | 100 | 40 | 75 | 125 | 50 | 65 | 150 | 60 | 55 |
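The 45 target positions follow a regular pattern visible in the table. At depth x = d, the nine targets lie on a 3 × 3 grid with y ∈ {−0.4d, 0, 0.4d} and z ∈ {115 − 0.4d, 115, 115 + 0.4d} cm, listed in serpentine row order. This is an observation from the coordinates, not a rule stated in the surviving text. The sketch below regenerates the grid for comparison with the table. The constant and function names are hypothetical.

```python
CENTRE_Z_CM = 115  # centre height of every 3 x 3 grid in the table
DEPTHS_CM = [50, 75, 100, 125, 150]


def target_grid(depth_cm):
    """Nine (x, y, z) targets at one depth, in the table's row order.

    Pattern inferred from the table values: y and z offsets of 0.4 * depth
    around (y = 0, z = 115 cm). y ascends along the top row, descends along
    the middle row and ascends again along the bottom row.
    """
    d = 2 * depth_cm / 5  # 0.4 * depth, written to stay exact for these inputs
    top, mid, bottom = CENTRE_Z_CM + d, CENTRE_Z_CM, CENTRE_Z_CM - d
    yz = [(-d, top), (0, top), (d, top),
          (d, mid), (0, mid), (-d, mid),
          (-d, bottom), (0, bottom), (d, bottom)]
    return [(depth_cm, y, z) for y, z in yz]


def all_targets():
    """All 45 targets, grouped by depth."""
    return [p for depth in DEPTHS_CM for p in target_grid(depth)]


if __name__ == "__main__":
    for depth in DEPTHS_CM:
        print(depth, target_grid(depth))
```

Because the offsets grow linearly with depth, every grid subtends roughly the same visual angle from the origin. That may be the design intent, but the text that would confirm it is missing.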

| Source | Type III Sum of Squares | Degrees of Freedom | Mean Square | F | Significance |
|---|---|---|---|---|---|
| interaction task | 0.022 | 1 | 0.022 | 48.868 | <0.001 |
| difficulty coefficient | 0.007 | 4 | 0.002 | 3.695 | 0.006 |
| target location | 0.011 | 8 | 0.001 | 3.070 | 0.002 |
| interaction task & difficulty coefficient | 0.015 | 4 | 0.004 | 8.601 | <0.001 |
| interaction task & target location | 0.005 | 8 | 0.001 | 1.367 | 0.209 |
| difficulty coefficient & target location | 0.010 | 32 | 0.000 | 0.704 | 0.886 |
| interaction task & difficulty coefficient & target location | 0.012 | 32 | 0.000 | 0.825 | 0.740 |

| Source | Type III Sum of Squares | Degrees of Freedom | Mean Square | F | Significance |
|---|---|---|---|---|---|
| interaction task | 0.021 | 1 | 0.021 | 47.269 | <0.001 |
| difficulty coefficient | 0.007 | 4 | 0.002 | 3.803 | 0.005 |
| target location | 0.011 | 8 | 0.001 | 3.112 | 0.002 |
| interaction task & difficulty coefficient | 0.016 | 4 | 0.004 | 8.722 | <0.001 |
| interaction task & target location | 0.005 | 8 | 0.001 | 1.330 | 0.227 |
| difficulty coefficient & target location | 0.011 | 32 | 0.000 | 0.734 | 0.855 |
| interaction task & difficulty coefficient & target location | 0.012 | 32 | 0.000 | 0.806 | 0.767 |
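In both ANOVA tables the mean square is the sum of squares divided by its degrees of freedom. F is that mean square divided by the error mean square. The error term is not listed, but any row lets you back it out. The sketch below does this for the first four rows of the first table. The implied values are consistent, within the three-decimal rounding of the sums of squares, with a single error mean square of roughly 4.5 × 10⁻⁴. The helper names are hypothetical.

```python
# First four rows of the first ANOVA table: (source, Type III SS, df, F).
ROWS = [
    ("interaction task", 0.022, 1, 48.868),
    ("difficulty coefficient", 0.007, 4, 3.695),
    ("target location", 0.011, 8, 3.070),
    ("interaction task & difficulty coefficient", 0.015, 4, 8.601),
]


def mean_square(ss, df):
    """Mean square = sum of squares / degrees of freedom."""
    return ss / df


def implied_error_ms(ss, df, f):
    """F = MS_effect / MS_error, so MS_error = MS_effect / F."""
    return mean_square(ss, df) / f


if __name__ == "__main__":
    for source, ss, df, f in ROWS:
        ms = mean_square(ss, df)
        print(f"{source}: MS = {ms:.4f}, implied MS_error = {implied_error_ms(ss, df, f):.2e}")
```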

| Sample Type | Sample Size | Parameter | Min | Max | Mean | SD |
|---|---|---|---|---|---|---|
| Independent variables | 450 | J | 0 | 1 | 0.5 | 0.50 |
| | | W/cm | 2 | 50 | 18 | 12.66 |
| | | /cm | 50 | 150 | 100 | 35.40 |
| | | /cm | −60 | 60 | 0 | 34.68 |
| | | /cm | 55 | 175 | 115 | 34.68 |
| Dependent variables | 450 | /cm | 47.02 | 149.53 | 97.20 | 33.61 |
| | | /cm | −59.21 | 59.72 | 0.14 | 33.62 |
| | | /cm | 54.68 | 174.73 | 114.57 | 33.63 |
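The design rows of this table can be recomputed under an assumption of a balanced design. In that case each interaction task (J) appears in 225 of the 450 trials, each of the 25 W values from the A/W table in 18 trials, and each of the 45 target positions in 10 trials. The target-coordinate symbols were lost in extraction, so `x`, `y` and `z` below are placeholder names. Under this assumption, the recomputed minima, maxima, means and sample SDs match the reported independent-variable rows to within 0.01.

```python
import statistics

N_TRIALS = 450  # sample size reported in the table

# W values (cm) from the A/W design table, five per ID level.
W_LEVELS = [2, 4, 6, 8, 10, 4, 8, 12, 16, 20, 6, 12, 18, 24, 30,
            8, 16, 24, 32, 40, 10, 20, 30, 40, 50]
DEPTHS = [50, 75, 100, 125, 150]


def replicate(levels, n=N_TRIALS):
    """ASSUMPTION: every level is used equally often across the n trials."""
    reps, rest = divmod(n, len(levels))
    if rest:
        raise ValueError("levels do not divide the sample evenly")
    return [v for v in levels for _ in range(reps)]


def target_coordinates():
    """The 45 target positions (x, y, z) from the coordinate table."""
    return [(d, s * 2 * d / 5, 115 + t * 2 * d / 5)
            for d in DEPTHS for s in (-1, 0, 1) for t in (-1, 0, 1)]


def summary(values):
    """Min, max, mean and sample standard deviation."""
    return (min(values), max(values),
            statistics.mean(values), statistics.stdev(values))


if __name__ == "__main__":
    targets = replicate(target_coordinates())
    columns = {
        "J": replicate([0, 1]),
        "W": replicate(W_LEVELS),
        "x": [p[0] for p in targets],
        "y": [p[1] for p in targets],
        "z": [p[2] for p in targets],
    }
    for name, values in columns.items():
        lo, hi, mean, sd = summary(values)
        print(f"{name}: min={lo:g} max={hi:g} mean={mean:.2f} sd={sd:.2f}")
```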

| Parameter | | J | W | | | |
|---|---|---|---|---|---|---|
| Correlation coefficient | J | 1 | 0 | 0 | 0 | 0 |
| | W | 0 | 1 | −0.671 | 0 | 0 |
| | | 0 | −0.671 | 1 | 0 | 0 |
| | | 0 | 0 | 0 | 1 | 0 |
| | | 0 | 0 | 0 | 0 | 1 |
| VIF | J | — | 1.000 | 1.000 | 1.000 | 1.000 |
| | W | 1.000 | — | 1.818 | 1.000 | 1.000 |
| | | 1.000 | 1.818 | — | 1.000 | 1.000 |
| | | 1.000 | 1.000 | 1.000 | — | 1.000 |
| | | 1.000 | 1.000 | 1.000 | 1.000 | — |
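The VIF block of the table is consistent with pairwise values VIF = 1/(1 − r²) for each pair of parameters. Uncorrelated pairs give exactly 1.000. The correlated pair involving W, with r = −0.671, gives about 1.819. The reported 1.818 corresponds to |r| ≈ 0.6708, so the difference is within the rounding of r. With only one correlated pair, the usual definition of VIF (the diagonal of the inverse correlation matrix) gives the same 1/(1 − r²) for both members. The helper names below are illustrative.

```python
def pairwise_vif(r):
    """VIF for a pair of predictors with correlation r: 1 / (1 - r**2)."""
    if abs(r) >= 1:
        raise ValueError("|r| = 1 means perfect collinearity (unbounded VIF)")
    return 1.0 / (1.0 - r * r)


def vif_matrix(corr):
    """Pairwise VIFs; None on the diagonal, which the table leaves blank."""
    n = len(corr)
    return [[None if i == j else pairwise_vif(corr[i][j]) for j in range(n)]
            for i in range(n)]


# Correlation matrix from the table: J, W, then the three parameters whose
# symbols were lost in extraction.
CORR = [
    [1, 0, 0, 0, 0],
    [0, 1, -0.671, 0, 0],
    [0, -0.671, 1, 0, 0],
    [0, 0, 0, 1, 0],
    [0, 0, 0, 0, 1],
]

if __name__ == "__main__":
    for row in vif_matrix(CORR):
        print(["-" if v is None else f"{v:.3f}" for v in row])
```

A common rule of thumb treats VIF values above about 5 to 10 as problematic. On that basis every value here indicates negligible collinearity.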

| Model No. | Regression Model | R² | RMSE/cm | rRMSE/% |
|---|---|---|---|---|
| 1-1 | | 0.993 | 2.751 | 28.30 |
| 1-2 | | 0.994 | 2.621 | 26.97 |
| 1-3 | | 0.993 | 2.716 | 27.94 |
| 1-4 | | 0.993 | 2.747 | 28.26 |
| 1-5 | | 0.993 | 2.750 | 28.29 |
| 1-6 | | 0.994 | 2.583 | 26.57 |
| 1-7 | | 0.994 | 2.616 | 26.91 |
| 1-8 | | 0.994 | 2.620 | 26.96 |
| 1-9 | | 0.994 | 2.711 | 27.89 |
| 1-10 | | 0.994 | 2.715 | 27.93 |
| 1-11 | | 0.993 | 2.746 | 28.25 |
| 1-12 | | 0.994 | 2.578 | 26.52 |
| 1-13 | | 0.994 | 2.582 | 26.56 |
| 1-14 | | 0.994 | 2.615 | 26.90 |
| 1-15 | | 0.994 | 2.711 | 27.89 |
| 1-16 | | 0.994 | 2.577 | 26.51 |
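The text describing how these regression models were fitted did not survive extraction. What follows is therefore only a generic sketch of ordinary least-squares multiple regression and the two fit statistics listed alongside each model, R² and RMSE. It runs on made-up, noise-free data with three hypothetical predictors standing in for J, W and target depth. It is not the authors' model, coefficients or data. The function names are hypothetical. The normal equations are used for brevity; for real data a QR-based solver such as `numpy.linalg.lstsq` is numerically safer.

```python
import math


def solve(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        if abs(m[col][col]) < 1e-12:
            raise ValueError("normal equations are singular (collinear predictors?)")
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def fit_ols(rows, y):
    """Coefficients [b0, b1, ...] of y = b0 + b1*x1 + ... via the normal equations."""
    X = [[1.0] + list(r) for r in rows]  # prepend the intercept column
    p = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(p)] for i in range(p)]
    xty = [sum(row[i] * yk for row, yk in zip(X, y)) for i in range(p)]
    return solve(xtx, xty)


def predict(beta, row):
    """Linear prediction b0 + sum(b_i * x_i)."""
    return beta[0] + sum(b * v for b, v in zip(beta[1:], row))


def r2_and_rmse(beta, rows, y):
    """Coefficient of determination and root-mean-square error of a fit."""
    residuals = [yk - predict(beta, r) for r, yk in zip(rows, y)]
    ss_res = sum(e * e for e in residuals)
    mean_y = sum(y) / len(y)
    ss_tot = sum((yk - mean_y) ** 2 for yk in y)
    return 1.0 - ss_res / ss_tot, math.sqrt(ss_res / len(y))


TRUE_BETA = [1.0, 0.5, -0.02, 0.97]  # made-up coefficients for the demo


def make_example_data():
    """Hypothetical noise-free data: predictors (J, W, target depth)."""
    rows, y = [], []
    for j in (0, 1):
        for w in (2, 10, 20, 50):
            for depth in (50, 100, 150):
                rows.append((j, w, depth))
                y.append(predict(TRUE_BETA, (j, w, depth)))
    return rows, y


if __name__ == "__main__":
    rows, y = make_example_data()
    beta = fit_ols(rows, y)
    r2, err = r2_and_rmse(beta, rows, y)
    print("beta =", [round(b, 4) for b in beta], f"R2 = {r2:.4f}, RMSE = {err:.2e}")
```

Because the demo data contain no noise, the fit recovers the made-up coefficients exactly (R² = 1, RMSE ≈ 0). With real responses, R² and RMSE summarise how much of the variation the predictors leave unexplained.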

| Model No. | Regression Model | R² | RMSE/cm | rRMSE/% |
|---|---|---|---|---|
| 2-1 | | 0.999 | 1.0913 | 12.76 |
| 2-2 | | 0.999 | 1.0908 | 12.75 |
| 2-3 | | 0.999 | 1.0907 | 12.75 |
| 2-4 | | 0.999 | 1.0865 | 12.70 |
| 2-5 | | 0.999 | 1.0822 | 12.65 |
| 2-6 | | 0.999 | 1.0903 | 12.75 |
| 2-7 | | 0.999 | 1.0861 | 12.70 |
| 2-8 | | 0.999 | 1.0818 | 12.64 |
| 2-9 | | 0.999 | 1.0876 | 12.72 |
| 2-10 | | 0.999 | 1.0815 | 12.65 |
| 2-11 | | 0.999 | 1.0774 | 12.60 |
| 2-12 | | 0.999 | 1.0872 | 12.71 |
| 2-13 | | 0.999 | 1.0811 | 12.64 |
| 2-14 | | 0.999 | 1.0769 | 12.59 |
| 2-15 | | 0.999 | 1.0784 | 12.61 |
| 2-16 | | 0.999 | 1.0780 | 12.60 |

| Model No. | Regression Model | R² | RMSE/cm | rRMSE/% |
|---|---|---|---|---|
| 3-1 | | 0.998 | 1.3499 | 1.18 |
| 3-2 | | 0.998 | 1.3276 | 1.16 |
| 3-3 | | 0.998 | 1.3514 | 1.18 |
| 3-4 | | 0.998 | 1.3426 | 1.17 |
| 3-5 | | 0.998 | 1.3480 | 1.18 |
| 3-6 | | 0.998 | 1.3291 | 1.16 |
| 3-7 | | 0.998 | 1.3201 | 1.15 |
| 3-8 | | 0.998 | 1.3256 | 1.16 |
| 3-9 | | 0.998 | 1.3382 | 1.17 |
| 3-10 | | 0.998 | 1.3495 | 1.18 |
| 3-11 | | 0.998 | 1.3407 | 1.17 |
| 3-12 | | 0.998 | 1.3155 | 1.15 |
| 3-13 | | 0.998 | 1.3270 | 1.16 |
| 3-14 | | 0.998 | 1.3181 | 1.15 |
| 3-15 | | 0.998 | 1.3362 | 1.17 |
| 3-16 | | 0.998 | 1.3134 | 1.14 |
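Relative RMSE is commonly defined as RMSE divided by the mean of the observed values. Model 3-1's RMSE of 1.3499 cm divided by the mean of the third dependent parameter in the sample table (114.57 cm) gives about 1.18 %, the value listed for 3-1. That suggests, but the surviving text does not confirm, that this definition applies to the third model set. The sketch also ranks the listed models by RMSE, purely as an example of comparing them; the paper's actual selection criterion is not in the surviving text. The function names are hypothetical.

```python
import math

# Reported RMSE (cm) of the third model set, copied from the table.
SET3_RMSE = {
    "3-1": 1.3499, "3-2": 1.3276, "3-3": 1.3514, "3-4": 1.3426,
    "3-5": 1.3480, "3-6": 1.3291, "3-7": 1.3201, "3-8": 1.3256,
    "3-9": 1.3382, "3-10": 1.3495, "3-11": 1.3407, "3-12": 1.3155,
    "3-13": 1.3270, "3-14": 1.3181, "3-15": 1.3362, "3-16": 1.3134,
}


def rmse(observed, predicted):
    """Root-mean-square error between paired observations and predictions."""
    n = len(observed)
    return math.sqrt(sum((o - p) ** 2 for o, p in zip(observed, predicted)) / n)


def rrmse_percent(rmse_value, observed_mean):
    """ASSUMED definition: RMSE relative to the mean observed value, in %."""
    return rmse_value / observed_mean * 100.0


def best_model(rmse_by_model):
    """Model label with the smallest RMSE."""
    return min(rmse_by_model, key=rmse_by_model.get)


if __name__ == "__main__":
    # 114.57 cm is the mean of the third dependent parameter in the sample table.
    print(f"3-1 rRMSE = {rrmse_percent(SET3_RMSE['3-1'], 114.57):.2f} %")
    print("lowest RMSE:", best_model(SET3_RMSE))
```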

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, Z.; Zhao, H.; Lv, J.; Chen, Q.; Xiong, Q. Construction of Virtual Interaction Location Prediction Model Based on Distance Cognition. Symmetry 2022, 14, 2178. https://doi.org/10.3390/sym14102178


Chicago/Turabian Style: Liu, Zhenghong, Huiliang Zhao, Jian Lv, Qipeng Chen, and Qiaoqiao Xiong. 2022. "Construction of Virtual Interaction Location Prediction Model Based on Distance Cognition" Symmetry 14, no. 10: 2178. https://doi.org/10.3390/sym14102178
