U-Net is a CNN architecture built on a symmetrical encoder–decoder backbone with skip connections. It is widely used for automated image segmentation and has demonstrated remarkable performance [38,39].

Its core idea is to first down-sample the image to reduce its spatial size, extracting feature information through stacked convolution and pooling layers, and then to recover the feature-map size with transposed convolutions in the up-sampling stage, where the number of channels is halved at each step. The down-sampling path captures contextual features, while the up-sampling path enables accurate localization. Skip connections fuse the feature map of each down-sampling level with the feature map of the corresponding up-sampling level. Each fused feature map passes through two convolution layers with activation functions, and this is repeated until the output feature map has the same size as the input, which yields the segmented image.

The feature fusion of U-Net differs from that of Fully Convolutional Networks for Semantic Segmentation (FCN). FCN simply adds features of different scales element-wise, whereas U-Net combines the low-level, high-resolution information from the down-sampling path with the high-level information recovered in the up-sampling path by concatenating the feature maps along the channel dimension. This effectively connects the encoding and decoding paths, supplies low-level detail, and improves accuracy, so U-Net produces more refined results than FCN. Because U-Net also performs well on small data sets, it is widely used in image processing.

However, because U-Net must classify every pixel of every image, its many repeated feature-extraction steps introduce considerable redundancy and a large amount of computation, which ultimately lowers the training efficiency of the whole network. Furthermore, when the data samples are large, the many pooling operations reduce the dimensions of each feature map and cause much valuable information to be lost.
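To make the encoder–decoder description concrete, the sketch below traces feature-map shapes through a U-Net-style network and contrasts the two skip-fusion modes discussed above: channel concatenation (U-Net) versus element-wise addition (FCN). It is illustrative only. The 64 base channels and four down-sampling steps follow the original U-Net, but the "same" padding is an assumption (the original U-Net used unpadded convolutions and cropped the skip features), and `fuse` and `trace_unet` are hypothetical helpers that compute shapes without running any convolutions.

```python
from typing import List, Tuple

Shape = Tuple[int, int, int]  # (channels, height, width)


def fuse(enc: Shape, dec: Shape, mode: str) -> Shape:
    """Output shape of a skip-connection fusion: 'concat' (U-Net) or 'add' (FCN-style)."""
    if enc[1:] != dec[1:]:
        raise ValueError("feature maps must have the same spatial size")
    if mode == "concat":
        return (enc[0] + dec[0], dec[1], dec[2])  # stack along the channel axis
    if mode == "add":
        if enc[0] != dec[0]:
            raise ValueError("element-wise addition needs equal channel counts")
        return dec  # element-wise sum keeps the shape unchanged
    raise ValueError(f"unknown fusion mode {mode!r}")


def trace_unet(in_hw: Tuple[int, int], base: int, depth: int) -> List[Tuple[str, Shape]]:
    """Trace feature-map shapes through a U-Net-style encoder-decoder."""
    c, h, w = base, in_hw[0], in_hw[1]
    stages: List[Tuple[str, Shape]] = []
    skips: List[Shape] = []
    for level in range(depth):
        skips.append((c, h, w))                    # encoder map kept for the skip connection
        stages.append((f"enc{level}", (c, h, w)))
        c, h, w = c * 2, h // 2, w // 2            # down-sample: halve H and W, double channels
    stages.append(("bottleneck", (c, h, w)))
    for level in reversed(range(depth)):
        c, h, w = c // 2, h * 2, w * 2             # transposed conv: double H and W, halve channels
        stages.append((f"dec{level}-fused", fuse(skips[level], (c, h, w), "concat")))
        stages.append((f"dec{level}", (c, h, w)))  # two 3x3 convs map the fused channels back to c
    return stages


for name, shape in trace_unet((256, 256), base=64, depth=4):
    print(f"{name:>12} {shape}")
```

The final stage has the same spatial size as the input, and each fused map has twice the decoder's channel count because concatenation stacks channels instead of summing them. Passing `depth=3` gives a shallower, three-level variant of the same layout.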
During encoding and decoding in U-Net, the successive down- and up-sampling operations lose valuable detail, which limits segmentation accuracy. Moreover, U-Net does not clearly separate targets from the background; in particular, it is weak at identifying small and blurry targets.

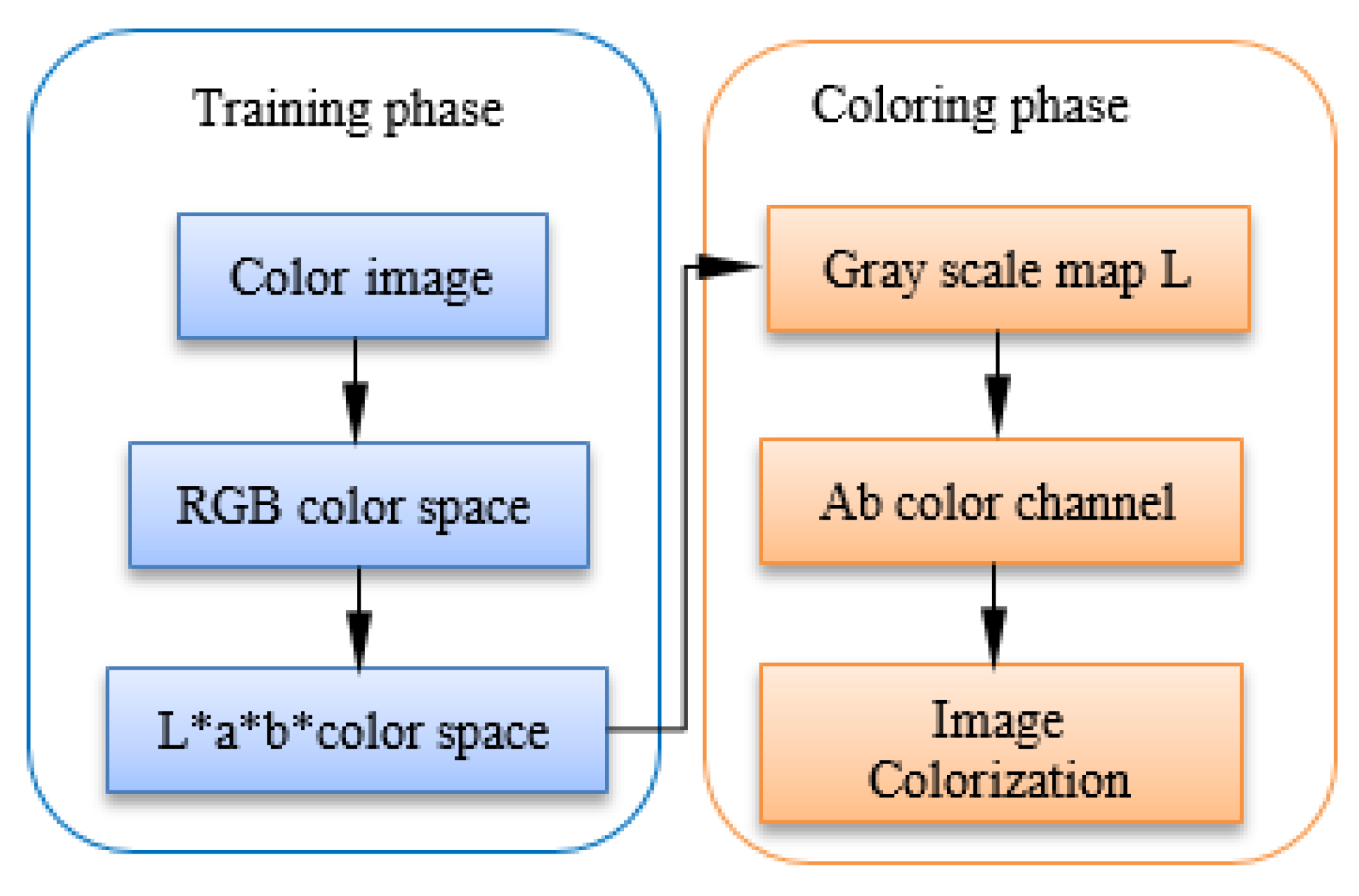

To obtain feature layers with richer global semantic information and larger receptive fields, we reduce the number of model parameters by decreasing the depth of the network, introduce dilated convolution in place of max pooling, and add symmetrical skip connections between corresponding convolution and transposed-convolution layers. These changes let the network capture the more important information in each feature layer and shorten training time without losing segmentation accuracy. They also enable the new model, which we call CU-net, to achieve better colorization results on black-and-white photos of people. CU-net is an improved convolutional neural network that uses U-Net as its main framework. U-Net has local perception, suits small data sets, and has a simple structure, so it achieves good segmentation within a short training time. Our improvements to U-Net are as follows: to reduce the depth of the network, we use only three down-sampling and three up-sampling stages, and in the down-sampling path we remove the max-pooling operations and introduce dilated convolution.
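Dilated convolution enlarges the receptive field without pooling. The receptive field of a stack of layers can be computed with the standard recurrence below, in which a $k$-tap kernel with dilation $d$ spans $k + (k-1)(d-1)$ input positions. This section does not give CU-net's dilation rates or say how down-sampling is performed once max pooling is removed, so the rates 1, 2 and 4 here are hypothetical and the sketch only compares receptive fields along one axis.

```python
from typing import Iterable, Tuple


def effective_kernel(k: int, d: int) -> int:
    """Number of input positions spanned by a k-tap kernel with dilation d."""
    return k + (k - 1) * (d - 1)


def receptive_field(layers: Iterable[Tuple[int, int, int]]) -> int:
    """Receptive field along one axis of a stack of (kernel, stride, dilation) layers."""
    rf, jump = 1, 1  # start: one input pixel; adjacent outputs are one input pixel apart
    for k, s, d in layers:
        rf += (effective_kernel(k, d) - 1) * jump  # widen by the kernel span, in input pixels
        jump *= s                                   # striding spreads later layers' taps apart
    return rf


plain = [(3, 1, 1)] * 3                      # three ordinary 3x3 convolutions
pooled = [(3, 1, 1), (2, 2, 1), (3, 1, 1)]   # conv, 2x2 max pooling (stride 2), conv
dilated = [(3, 1, 1), (3, 1, 2), (3, 1, 4)]  # hypothetical dilation rates 1, 2, 4

for name, stack in [("plain", plain), ("pooled", pooled), ("dilated", dilated)]:
    print(name, receptive_field(stack))
```

Under these assumptions the dilated stack reaches a receptive field of 15 pixels at full resolution, compared with 7 for the plain stack and 8 for the pooled stack, which also halves the resolution. A dilated 3×3 kernel still has only 9 weights, so the larger receptive field costs no extra parameters.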

3.2.5. Activation Function and Batch Normalization

For image segmentation, it is particularly important to fuse low-level and high-level features and to propagate them effectively back to all layers, since they determine the final coloring of the image. Feature maps in different fusion layers differ in channel count and resolution: the shallower the layer, the larger the spatial scale. Therefore, before low-level and high-level features of different sizes are fused, the output features of each layer need to be batch-normalized. Normalization subtracts the mean of the data from each input value and then divides by the standard deviation of the data. Because image processing extracts features layer by layer, the output of each layer can be viewed as the data after feature extraction, so here normalization is performed at every layer of the network. Normalizing over the whole data set at every layer, however, would be computationally expensive. Therefore, as in mini-batch gradient descent, the “batch” in batch normalization refers to sampling a small batch of data and normalizing that batch's outputs at each layer of the network [41]. This addresses the uneven distribution of each layer's outputs and the “gradient dispersion” (vanishing-gradient) problem, and it accelerates network training.
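The per-layer, per-batch normalization just described can be sketched in plain Python as follows. Each column of `outputs` holds one neuron's outputs over a mini-batch. The helper name `batch_normalize`, the small constant `eps` (assumed to be 1e-5) and the example values are illustrative assumptions, and the learnable scale and shift introduced later in this section are omitted.

```python
import math
from typing import List


def batch_normalize(outputs: List[List[float]], eps: float = 1e-5) -> List[List[float]]:
    """Normalize each neuron's outputs over a mini-batch (rows = samples, columns = neurons).

    Only the mean/standard-deviation step; no learnable scale or shift.
    """
    m, n = len(outputs), len(outputs[0])
    normalized = [[0.0] * n for _ in range(m)]
    for i in range(n):                                # each neuron is normalized independently
        column = [outputs[k][i] for k in range(m)]
        mu = sum(column) / m                          # mini-batch mean
        var = sum((x - mu) ** 2 for x in column) / m  # mini-batch variance (divides by m)
        for k in range(m):
            normalized[k][i] = (column[k] - mu) / math.sqrt(var + eps)
    return normalized


batch = [[1.0, 200.0], [2.0, 400.0], [3.0, 600.0], [4.0, 800.0]]  # 4 samples, 2 neurons
for row in batch_normalize(batch):
    print([round(v, 3) for v in row])
```

Although the two neurons' raw outputs differ by a factor of 200, both columns come out on the same scale, with zero mean and (up to `eps`) unit variance.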

Assume that a mini-batch of $m$ samples is used in one training step. Let $x_{j,i}^{(k)}$ be the output value of the $i$-th neuron in layer $j$ when the $k$-th sample is processed, let $\mu_{j,i}$ be the mean output of this batch at the $i$-th neuron of layer $j$, and let $\sigma_{j,i}$ be the standard deviation of those outputs. The output value after batch normalization is

$$\hat{x}_{j,i}^{(k)} = \frac{x_{j,i}^{(k)} - \mu_{j,i}}{\sqrt{\sigma_{j,i}^{2} + \epsilon}}$$

The mean neuron output $\mu_{j,i}$ is computed as

$$\mu_{j,i} = \frac{1}{m}\sum_{k=1}^{m} x_{j,i}^{(k)}$$

and the standard deviation of the neuron output $\sigma_{j,i}$ as

$$\sigma_{j,i} = \sqrt{\frac{1}{m}\sum_{k=1}^{m}\left(x_{j,i}^{(k)} - \mu_{j,i}\right)^{2}}$$

Here $\epsilon$ is a very small constant that prevents the denominator of the normalized output from approaching 0. The purpose of batch normalization is simple: it adjusts the input data of each layer of the neural network to a distribution with zero mean and unit variance. ReLU activation is commonly used to address the gradient saturation problem, and BN is generally applied to deep neural networks trained on large data sets. For the comparatively shallow network in this paper, which has only three down-sampling and three up-sampling stages, and for a small data set, the sigmoid activation is more suitable. The sigmoid function has the exponential form

$$s(x) = \frac{1}{1 + e^{-x}}$$

The main reason for using the sigmoid function is that its output lies between 0 and 1 and it is easy to differentiate, since $s'(x) = s(x)\,(1 - s(x))$. It is therefore suitable for models that must output a probability: because probabilities lie between 0 and 1, sigmoid is a natural choice. However, when $|x|$ is large, the sigmoid function enters its saturation region and its derivative becomes almost zero, so even when we know that a neuron needs to be corrected, the gradient is too small for training to make progress. On the interval $[-2, 2]$, the sigmoid is approximately linear. Therefore, this paper places the batch normalization transform before the activation function, which is equivalent to adding a preprocessing step at the input of each layer before the data enter the next layer of the network, as shown in Figure 9.

The BN transform keeps the input of the sigmoid, as far as possible, within the narrow near-linear region $[-2, 2]$. Outside this region the gradient vanishes: as $|x|$ increases, $s'(x)$ tends to zero.
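The following sketch illustrates the saturation argument numerically under assumed values. It compares the average sigmoid derivative $s'(x) = s(x)(1 - s(x))$ over a hypothetical mini-batch of pre-activations far from zero with the average after those pre-activations are standardized. The helper names and input values are illustrative and are not taken from the paper's experiments.

```python
import math
from typing import List


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x: float) -> float:
    """Derivative of the sigmoid, s'(x) = s(x) * (1 - s(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)


def standardize(batch: List[float], eps: float = 1e-5) -> List[float]:
    """Subtract the mini-batch mean and divide by the mini-batch standard deviation."""
    m = len(batch)
    mean = sum(batch) / m
    var = sum((v - mean) ** 2 for v in batch) / m
    return [(v - mean) / math.sqrt(var + eps) for v in batch]


raw = [6.0, 7.5, 8.0, 9.0, 10.5]  # hypothetical pre-activations of one neuron, far from 0
normed = standardize(raw)

mean_grad_raw = sum(sigmoid_grad(v) for v in raw) / len(raw)
mean_grad_bn = sum(sigmoid_grad(v) for v in normed) / len(normed)
print(f"mean s'(x) raw: {mean_grad_raw:.5f}, after standardization: {mean_grad_bn:.5f}")
```

With these values the standardized inputs all fall inside $[-2, 2]$, and the average gradient is a few hundred times larger than for the raw inputs.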

Since $x$ depends on $W$, $b$ and the parameters of all the layers below, changes to those parameters during training are likely to move many dimensions of $x$ into the saturated regime of the nonlinearity and slow down convergence. The change in the distribution of internal nodes of a deep network during training is called internal covariate shift, defined as the change in the distribution of network activations caused by the change in network parameters during training. To improve training, we seek to reduce internal covariate shift: fixing the distribution of the layer inputs during training is expected to increase training speed.

Rather than normalizing all dimensions jointly, we normalize each scalar feature independently so that it has zero mean and unit variance. For a layer with $d$-dimensional input $x = (x^{(1)}, \dots, x^{(d)})$, each dimension is normalized as

$$\hat{x}^{(c)} = \frac{x^{(c)} - \mathrm{E}\left[x^{(c)}\right]}{\sqrt{\mathrm{Var}\left[x^{(c)}\right]}}$$

where the expectation and variance are computed over the training data set. In other words, an input $x$ of dimension $d$ is standardized dimension by dimension. For example, if the input $x$ is an RGB three-channel color image, then $d$ is the number of channels of the input image, that is, $d = 3$, and standardization processes the R, G and B channels separately.
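For image data, the per-dimension rule above becomes per-channel standardization. A minimal sketch, assuming a batch of images given as lists of (R, G, B) pixels: as in batch normalization for convolutional layers, each channel's statistics are pooled over all pixels of all images in the mini-batch rather than over the whole training set. `normalize_rgb_batch` and the pixel values are hypothetical.

```python
import math
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # (R, G, B)


def normalize_rgb_batch(images: List[List[Pixel]], eps: float = 1e-5) -> List[List[Tuple[float, ...]]]:
    """Standardize R, G and B separately, pooling statistics over every pixel in the batch."""
    d = 3  # number of channels, i.e. the dimension d of each input
    stats = []
    for c in range(d):
        values = [p[c] for img in images for p in img]  # channel c across all images and pixels
        mu = sum(values) / len(values)
        var = sum((v - mu) ** 2 for v in values) / len(values)
        stats.append((mu, math.sqrt(var + eps)))
    return [
        [tuple((p[c] - stats[c][0]) / stats[c][1] for c in range(d)) for p in img]
        for img in images
    ]


images = [
    [(255.0, 0.0, 10.0), (200.0, 50.0, 20.0)],  # hypothetical 2-pixel images
    [(100.0, 100.0, 30.0), (45.0, 150.0, 40.0)],
]
out = normalize_rgb_batch(images)
for c, name in enumerate("RGB"):
    vals = [p[c] for img in out for p in img]
    print(f"{name}: mean {sum(vals) / len(vals):.3f}, variance {sum(v * v for v in vals) / len(vals):.3f}")
```

Each channel ends up with mean close to 0 and variance close to 1, independently of the very different raw ranges of R, G and B.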

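Here $\mu_{\mathcal{B}}$ is the mean of the neuron activations $x_i$ over each training mini-batch, and $\sigma_{\mathcal{B}}$ is the standard deviation of those activations. Neglecting the small stabilizing constant, the normalized values $\hat{x}_i = (x_i - \mu_{\mathcal{B}})/\sigma_{\mathcal{B}}$ have an expected value of 0 and a variance of 1, which can be verified directly:

$$\mathrm{E}[\hat{x}] = 0, \qquad \mathrm{Var}[\hat{x}] = 1$$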

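The proof that $\hat{x}$ has zero mean and unit variance is given below. For a mini-batch of $m$ samples, let the sample mean be $\mu_{\mathcal{B}}$:

$$\mu_{\mathcal{B}} = \frac{1}{m}\sum_{i=1}^{m} x_i$$

Let $\sigma_{\mathcal{B}}$ be the original standard deviation and $\sigma_{\mathcal{B}}^{2}$ the variance, so that

$$\sigma_{\mathcal{B}}^{2} = \frac{1}{m}\sum_{i=1}^{m}\left(x_i - \mu_{\mathcal{B}}\right)^{2}$$

Let $\hat{x}_i$ denote the transformed $x_i$:

$$\hat{x}_i = \frac{x_i - \mu_{\mathcal{B}}}{\sigma_{\mathcal{B}}}$$

Substituting the sample mean and the variance into the transformed variable gives a zero mean, and because the mean is zero, the variance equals the mean of $\hat{x}_i^{2}$:

$$\frac{1}{m}\sum_{i=1}^{m}\hat{x}_i = \frac{1}{\sigma_{\mathcal{B}}}\left(\frac{1}{m}\sum_{i=1}^{m} x_i - \mu_{\mathcal{B}}\right) = 0, \qquad \frac{1}{m}\sum_{i=1}^{m}\hat{x}_i^{2} = \frac{1}{\sigma_{\mathcal{B}}^{2}}\cdot\frac{1}{m}\sum_{i=1}^{m}\left(x_i - \mu_{\mathcal{B}}\right)^{2} = 1$$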

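Simply normalizing the input of each layer may change what the layer can represent. For example, normalizing the inputs of a sigmoid would constrain them to the linear regime of the nonlinearity. To address this, a pair of parameters $\gamma^{(c)}$ and $\beta^{(c)}$ is introduced for each activation $x^{(c)}$. They are learnable parameters, similar to weights and biases, and are generally updated by gradient descent. They scale and shift the normalized value $\hat{x}^{(c)}$:

$$y^{(c)} = \gamma^{(c)}\hat{x}^{(c)} + \beta^{(c)}$$

These parameters are learned together with the original model parameters and restore the representation ability of the network. In particular, setting $\gamma^{(c)} = \sqrt{\mathrm{Var}\left[x^{(c)}\right]}$ and $\beta^{(c)} = \mathrm{E}\left[x^{(c)}\right]$ recovers the original activations.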
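The scale-and-shift step can be checked with a short sketch. `bn_transform` is a hypothetical helper for a single activation over a mini-batch. Because it adds a small constant `eps` to the variance, the identity-recovering choice of `gamma` in this sketch is the square root of the mini-batch variance plus `eps`, rather than exactly the standard deviation.

```python
import math
from typing import List


def bn_transform(x: List[float], gamma: float, beta: float, eps: float = 1e-5) -> List[float]:
    """Batch-normalizing transform for one activation: normalize, then scale by gamma and shift by beta."""
    m = len(x)
    mu = sum(x) / m
    var = sum((v - mu) ** 2 for v in x) / m
    x_hat = [(v - mu) / math.sqrt(var + eps) for v in x]  # zero mean, (almost) unit variance
    return [gamma * v + beta for v in x_hat]              # learnable scale and shift


x = [0.5, 2.0, 3.5, 6.0]  # hypothetical activations of one unit over a mini-batch
mu = sum(x) / len(x)
var = sum((v - mu) ** 2 for v in x) / len(x)

# gamma = sqrt(var + eps) and beta = mu undo the normalization
restored = bn_transform(x, gamma=math.sqrt(var + 1e-5), beta=mu)
print([round(v, 6) for v in restored])  # prints [0.5, 2.0, 3.5, 6.0]
```

With `gamma=1.0` and `beta=0.0` the same function returns the plain normalized values, so the network can move anywhere between "fully normalized" and "unchanged" by learning these two parameters.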

The computation proceeds as follows. First, the mean of each dimension of the feature space is computed from the input, and this mean vector is subtracted from every training sample. Next, the variance of each dimension is computed in the lower branch of the computation, which gives the full denominator of the normalization equation; its reciprocal is then multiplied by the difference between the input and the mean. The last two operations scale the result by $\gamma$ and then add $\beta$ to obtain the batch-normalized output. Without BN, the transformation of the activation layer is

$$z = g(Wu + b)$$

where $u$ is the layer input, the weight matrix $W$ and the bias vector $b$ are learned parameters of the model, and $g(\cdot)$ is the nonlinear sigmoid function. At both ends of the sigmoid curve, the change in $g(x)$ is small relative to the change in $x$, which makes the function particularly prone to gradient attenuation. Normalizing before the sigmoid can therefore alleviate this problem, so the BN transform is added immediately before the nonlinearity, that is, it is applied to $Wu + b$. The layer input $u$ could also be normalized, but because $u$ may be the output of another nonlinearity, the shape of its distribution is likely to change during training, and constraining its first and second moments would not eliminate the covariate shift. In contrast, $Wu + b$ is more likely to have a symmetric, non-sparse distribution, and normalizing it is likely to produce activations with a stable distribution. When $Wu + b$ is normalized, the bias $b$ can be ignored, because its effect is canceled by the subsequent mean subtraction (its role is taken over by $\beta$). Therefore,

$$z = g(Wu + b)$$

is replaced by

$$z = g(\mathrm{BN}(Wu))$$
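The claim that the bias $b$ can be dropped when $Wu + b$ is normalized, i.e. that $\mathrm{BN}(Wu + b) = \mathrm{BN}(Wu)$, can be checked numerically. In the sketch below the weight matrix, bias and mini-batch are hypothetical values, the helpers are illustrative rather than the paper's implementation, and $\gamma$, $\beta$ are fixed at 1 and 0 instead of being learned.

```python
import math
from typing import List


def matvec(W: List[List[float]], u: List[float]) -> List[float]:
    """Compute W u for a weight matrix W and an input vector u."""
    return [sum(w * x for w, x in zip(row, u)) for row in W]


def bn_per_unit(pre: List[List[float]], eps: float = 1e-5) -> List[List[float]]:
    """Normalize each output unit over the mini-batch (rows = samples, columns = units)."""
    m, n = len(pre), len(pre[0])
    out = [[0.0] * n for _ in range(m)]
    for i in range(n):
        col = [pre[k][i] for k in range(m)]
        mu = sum(col) / m
        var = sum((v - mu) ** 2 for v in col) / m
        for k in range(m):
            out[k][i] = (col[k] - mu) / math.sqrt(var + eps)
    return out


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


W = [[0.2, -0.5, 1.0], [1.5, 0.3, -0.7]]  # hypothetical 2x3 weight matrix
b = [4.0, -3.0]                            # hypothetical bias vector
U = [[1.0, 2.0, 0.5], [0.0, 1.0, 3.0], [2.0, -1.0, 1.0], [0.5, 0.5, 0.5]]  # mini-batch of inputs u

with_bias = bn_per_unit([[a + c for a, c in zip(matvec(W, u), b)] for u in U])
without_bias = bn_per_unit([matvec(W, u) for u in U])

# The bias shifts every sample of a unit by the same amount, so mean subtraction removes it
max_diff = max(abs(p - q) for r1, r2 in zip(with_bias, without_bias) for p, q in zip(r1, r2))
print(f"max difference: {max_diff:.2e}")

# z = g(BN(Wu)), with gamma = 1 and beta = 0 standing in for the learned parameters
gamma, beta = 1.0, 0.0
Z = [[sigmoid(gamma * v + beta) for v in row] for row in without_bias]
```

The difference is at the level of floating-point rounding, which is why a separate bias adds nothing once $\beta$ is present.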

The methods in this paper are compared with other methods, and the results are presented in Table 9.

Comparing the original and batch-normalized variants in terms of the number of training steps needed to reach the original maximum accuracy (72.2%) and the maximum accuracy each network achieves shows that the BN-sigmoid method in this experiment obtains better results.

In summary, to reduce internal covariate shift, a normalization “layer” is added before each activation. Each dimension (input neuron) is normalized separately rather than jointly with all other dimensions. This allows optimization with a higher learning rate without having to accommodate small-scale dimensions as before. Normalization before the sigmoid activation alleviates gradient attenuation and achieves better accuracy; it also makes more of the decision boundaries defined by the weights pass through the data, which reduces the risk of overfitting.