3D Copyright Protection Based on Binarized Computational Ghost Imaging Encryption and Cellular Automata Transform

Abstract

1. Introduction

- Ghost imaging optical encryption generates the ciphertext from a sequence of light-intensity measurements. Controlling the initial phase reduces the key storage space and improves the security of the system.

- Ghost imaging generates a key from these light intensities, and the resulting speckle is embedded into the original image as a watermark by means of cellular automata. Embedding the speckle key performs better than traditional watermark embedding.

- Ghost imaging encryption embeds the speckle key into the original image. The extracted speckle still has an irregular light-intensity distribution, so the correct watermark cannot be restored without the key, which strengthens the security of the system.

- The key is embedded into the original image through cellular automata, which gives the algorithm strong confidentiality. To extract the watermark, the same sorting used during embedding must be applied; otherwise the extraction fails. The scheme is therefore protected twice: by the ghost imaging key and by the embedding order.
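The encryption idea in the points above can be illustrated with a small simulation. This is only a minimal sketch under stated assumptions, not the authors' optical setup: an integer seed (`key`) stands in for the initial phase that generates the speckle patterns, the patterns are binary, the bucket values are binarized against their mean, and decryption uses a plain correlation. The function names and the 4 × 4 test watermark are hypothetical.

```python
import random


def speckle_patterns(key, count, num_pixels):
    """Generate `count` binary speckle patterns from an integer key (assumed seed)."""
    rng = random.Random(key)
    return [[rng.randint(0, 1) for _ in range(num_pixels)] for _ in range(count)]


def bcgi_encrypt(image, key, count):
    """Return one ciphertext bit per pattern: 1 if the bucket value exceeds the mean."""
    patterns = speckle_patterns(key, count, len(image))
    # Bucket value: total intensity passing through the image for each pattern.
    buckets = [sum(p * t for p, t in zip(pattern, image)) for pattern in patterns]
    mean_bucket = sum(buckets) / count
    return [1 if b > mean_bucket else 0 for b in buckets]


def bcgi_decrypt(bits, key, num_pixels):
    """Correlate the ciphertext bits with the regenerated patterns, then threshold."""
    count = len(bits)
    patterns = speckle_patterns(key, count, num_pixels)
    mean_bit = sum(bits) / count
    corr = [
        sum((b - mean_bit) * pattern[i] for b, pattern in zip(bits, patterns)) / count
        for i in range(num_pixels)
    ]
    # Split the correlation values halfway between their extremes.
    threshold = (min(corr) + max(corr)) / 2
    return [1 if c > threshold else 0 for c in corr]


# A 4x4 binary watermark, flattened row by row (hypothetical test pattern).
watermark = [0, 1, 1, 0,
             1, 0, 0, 1,
             1, 0, 0, 1,
             0, 1, 1, 0]
cipher = bcgi_encrypt(watermark, key=2022, count=4000)
recovered = bcgi_decrypt(cipher, key=2022, num_pixels=16)
wrong = bcgi_decrypt(cipher, key=1234, num_pixels=16)
```

With the correct seed, `recovered` should reproduce `watermark`, because the regenerated patterns correlate with the ciphertext bits only at the bright pixels. With a different seed the patterns are statistically independent of the ciphertext, so `wrong` is expected to carry no information about the image.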

2. Theoretical Analysis

2.1. Image Encryption Based on Binarized Computational Ghost Imaging (BCGI)

2.2. Watermark Embedding and Extraction Based on Cellular Automata Transform (CAT)

2.2.1. Cellular Automata

2.2.2. Cellular Automata Transform

2.2.3. Logistic Chaotic Mapping

2.2.4. Watermark Embedding and Extraction

3. Experimental Results and Discussion

3.1. Experimental Setup

3.2. Performance Analysis

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Acknowledgments

Conflicts of Interest

References

- Lacy, J.; Quackenbush, S.R.; Reibman, A.R.; Snyder, J.H. Intellectual property protection systems and digital watermarking. Opt. Express 1998, 3, 478–484.

- Kim, J.; Jung, J.-H.; Jeong, Y.; Hong, K.; Lee, B. Real-time integral imaging system for light field microscopy. Opt. Express 2014, 22, 10210–10220.

- Lippmann, G. Epreuves reversibles donnant la sensation du relief. J. Phys. 1908, 7, 821–825.

- Llavador, A.; Sánchez-Ortiga, E.; Saavedra, G.; Javidi, B.; Martínez-Corral, M. Free-depths reconstruction with synthetic impulse response in integral imaging. Opt. Express 2015, 23, 30127–30135.

- Burckhardt, C.B. Optimum Parameters and Resolution Limitation of Integral Photography. J. Opt. Soc. Am. 1968, 58, 71–74.

- Nikolaidis, N.; Pitas, I. Robust image watermarking in the spatial domain. Signal Process. 1998, 66, 385–403.

- Chen, W.; Chen, X.; Stern, A.; Javidi, B. Phase-Modulated Optical System with Sparse Representation for Information Encoding and Authentication. IEEE Photonics J. 2013, 5, 6900113.

- Li, Z.; Xia, F.; Zheng, G.; Zhang, J. Copyright protection in digital museum based on digital holography and discrete wavelet transform. Chin. Opt. Lett. 2008, 6, 251–254.

- Ishikawa, Y.; Uehira, K.; Yanaka, K. Practical Evaluation of Illumination Watermarking Technique Using Orthogonal Transforms. J. Disp. Technol. 2010, 6, 351–358.

- Li, X.; Lee, I.-K. Robust copyright protection using multiple ownership watermarks. Opt. Express 2015, 23, 3035–3046.

- Li, X.; Wang, Y.; Wang, Q.-H.; Kim, S.-T.; Zhou, X. Copyright Protection for Holographic Video Using Spatiotemporal Consistent Embedding Strategy. IEEE Trans. Ind. Inform. 2019, 15, 6187–6197.

- Valandar, M.Y.; Barani, M.J.; Ayubi, P. A blind and robust color images watermarking method based on block transform and secured by modified 3-dimensional Hénon map. Soft Comput. 2019, 24, 771–794.

- Li, X.; Ren, Z.; Wang, T.; Deng, H. Ownership protection for light-field 3D images: HDCT watermarking. Opt. Express 2021, 29, 43256–43269.

- Hamidi, M.; El Haziti, M.; Cherifi, H.; El Hassouni, M. A Hybrid Robust Image Watermarking Method Based on DWT-DCT and SIFT for Copyright Protection. J. Imaging 2021, 7, 218.

- Wu, X.; Li, J.; Tu, R.; Cheng, J.; Bhatti, U.A.; Ma, J. Contourlet-DCT based multiple robust watermarkings for medical images. Multimed. Tools Appl. 2018, 78, 8463–8480.

- Hurrah, N.N.; Loan, N.A.; Parah, S.A.; Sheikh, J.A.; Muhammad, K.; de Macedo, A.R.L.; de Albuquerque, V.H.C. INDFORG: Industrial Forgery Detection Using Automatic Rotation Angle Detection and Correction. IEEE Trans. Ind. Inform. 2020, 17, 3630–3639.

- Kamili, A.; Hurrah, N.N.; Parah, S.A.; Bhat, G.M.; Muhammad, K. DWFCAT: Dual watermarking framework for industrial image authentication and tamper localization. IEEE Trans. Ind. Inform. 2020, 17, 5108–5117.

- Wang, Y.; Ren, Z.; Zhang, L.; Li, D.; Li, X. 3D image hiding using deep demosaicking and computational integral imaging. Opt. Lasers Eng. 2021, 148, 106772.

- Kishk, S.; Javidi, B. Information hiding technique with double phase encoding. Appl. Opt. 2002, 41, 5462–5470.

- Wang, X.; Chen, W.; Chen, X. Fractional Fourier domain optical image hiding using phase retrieval algorithm based on iterative nonlinear double random phase encoding. Opt. Express 2014, 22, 22981–22995.

- Rajanbabu, D.T.; Raj, C. Multi level encryption and decryption tool for secure administrator login over the network. Indian J. Sci. Technol. 2014, 7, 8.

- Réfrégier, P.; Javidi, B. Optical image encryption based on input plane and Fourier plane random encoding. Opt. Lett. 1995, 20, 767–769.

- Clemente, P.; Durán, V.; Torres-Company, V.; Tajahuerce, E.; Lancis, J. Optical encryption based on computational ghost imaging. Opt. Lett. 2010, 35, 2391–2393.

- Duran, V.; Clemente, P.; Torres-Company, V.; Tajahuerce, E.; Lancis, J.; Andrés, P. Optical encryption with compressive ghost imaging. In Proceedings of the 2011 Conference on Lasers and Electro-Optics Europe and 12th European Quantum Electronics Conference (CLEO EUROPE/EQEC), Munich, Germany, 22–26 May 2011; p. 1.

- Zhang, L.; Pan, Z.; Liang, D.; Ma, X.; Zhang, D. Study on the key technology of optical encryption based on compressive ghost imaging with double random-phase encoding. Opt. Eng. 2015, 54, 125104.

- Zhang, X.; Meng, X.; Yin, Y.; Yang, X.; Wang, Y.; Li, X.; Peng, X.; He, W.; Dong, G.; Chen, H. Two-level image authentication by two-step phase-shifting interferometry and compressive sensing. Opt. Lasers Eng. 2018, 100, 118–123.

- Zheng, P.; Tan, Q.; Liu, H.-C. Inverse computational ghost imaging for image encryption. Opt. Express 2021, 29, 21290–21299.

- Zhang, L.; Wang, Y.; Zhang, D. Research on multiple-image encryption mechanism based on Radon transform and ghost imaging. Opt. Commun. 2021, 504, 127494.

- Bromberg, Y.; Katz, O.; Silberberg, Y. Ghost imaging with a single detector. Phys. Rev. A 2009, 79, 053840.

- Yang, Y.G.; Wang, B.P.; Pei, S.K.; Zhou, Y.H.; Shi, W.M.; Liao, X. Using M-ary decomposition and virtual bits for visually meaningful image encryption. Inf. Sci. 2021, 580, 174–201.

- Erkan, U.; Toktas, A.; Toktas, F.; Alenezi, F. 2D eπ-map for image encryption. Inf. Sci. 2022, 589, 770–789.

- Dong, Y.; Zhao, G.; Ma, Y.; Pan, Z.; Wu, R. A novel image encryption scheme based on pseudo-random coupled map lattices with hybrid elementary cellular automata. Inf. Sci. 2022, 593, 121–154.

- Jiang, D.; Liu, L.; Zhu, L.; Wang, X.; Rong, X.; Chai, H. Adaptive embedding: A novel meaningful image encryption scheme based on parallel compressive sensing and slant transform. Signal Process. 2021, 188, 108220.

- Huang, W.; Jiang, D.; An, Y.; Liu, L.; Wang, X. A novel double-image encryption algorithm based on rossler hyperchaotic system and compressive sensing. IEEE Access 2021, 9, 41704–41716.

- Toktas, A.; Erkan, U. 2D fully chaotic map for image encryption constructed through a quadruple-objective optimization via artificial bee colony algorithm. Neural Comput. Appl. 2021, 34, 4295–4319.

- Agarwal, N.; Singh, P.K. Discrete cosine transforms and genetic algorithm based watermarking method for robustness and imperceptibility of color images for intelligent multimedia applications. Multimed. Tools Appl. 2022, 1–27.

- Vaidya, S.P. Fingerprint-based robust medical image watermarking in hybrid transform. Vis. Comput. 2022, 1–16.

- Hussain, M.; Riaz, Q.; Saleem, S.; Ghafoor, A.; Jung, K.H. Enhanced adaptive data hiding method using LSB and pixel value differencing. Multimed. Tools Appl. 2021, 80, 20381–20401.

| Method | PSNR (dB) |
|---|---|
| Our method | 40.12 |
| Method [36] | 35.63 |
| Method [37] | 36.09 |
| Method [38] | 35.04 |
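For readers who want to reproduce a PSNR figure like those in the table above, the standard definition can be sketched as follows. This assumes 8-bit images (peak value 255) supplied as flat pixel sequences; the function name and the sample pixel values are illustrative and are not taken from the paper.

```python
import math


def psnr(original, watermarked, peak=255.0):
    """PSNR in dB between two equally sized images given as flat pixel sequences."""
    if len(original) != len(watermarked):
        raise ValueError("images must have the same number of pixels")
    # Mean squared error between host image and watermarked image.
    mse = sum((a - b) ** 2 for a, b in zip(original, watermarked)) / len(original)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)


# Hypothetical pixels: every value differs by exactly 1, so the MSE is 1.
host = [120, 130, 140, 150]
marked = [121, 129, 141, 149]
value = psnr(host, marked)  # 10 * log10(255**2), about 48.13 dB
```

A higher PSNR means the watermarked image is closer to the host image, which is why it is used here as a measure of imperceptibility.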

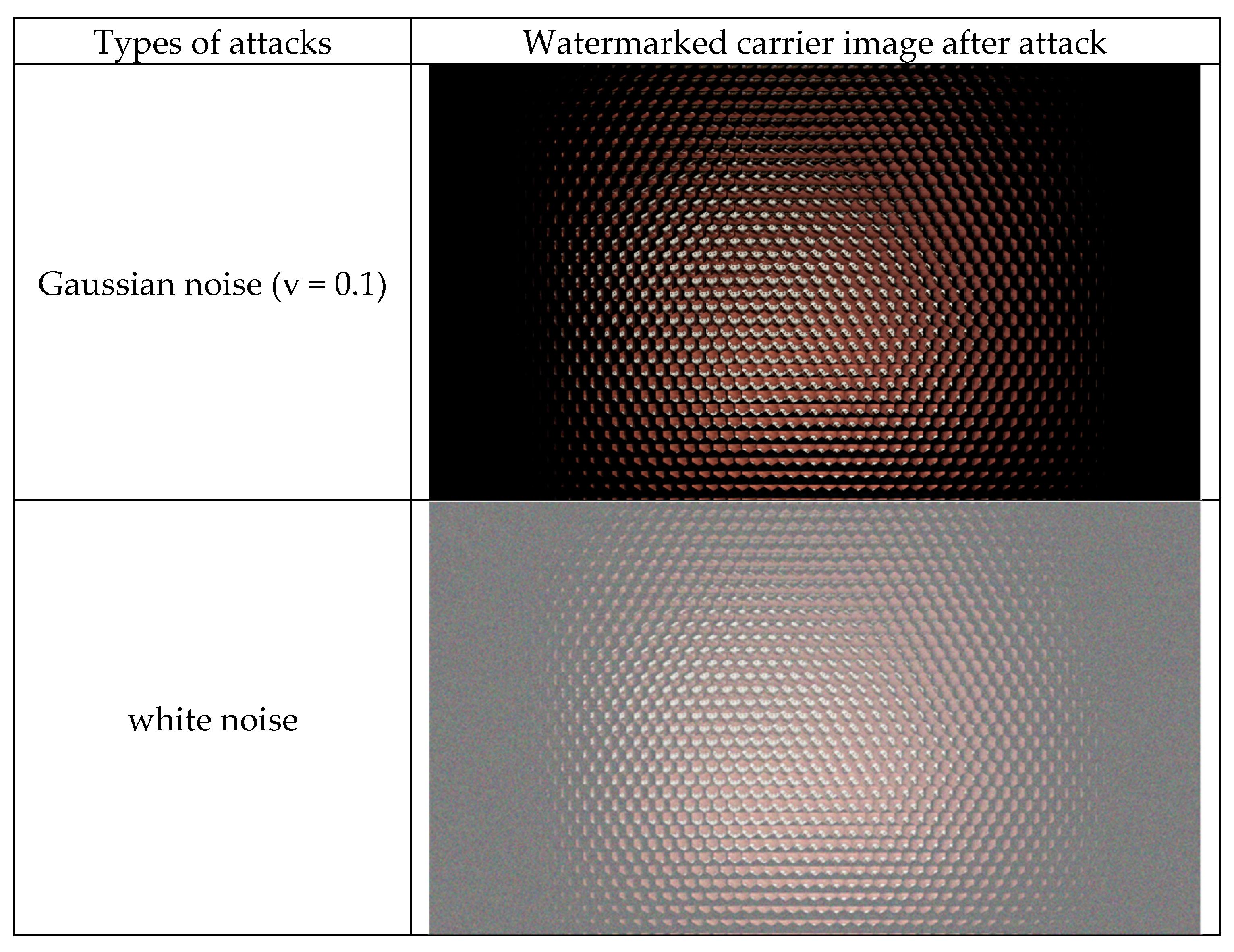

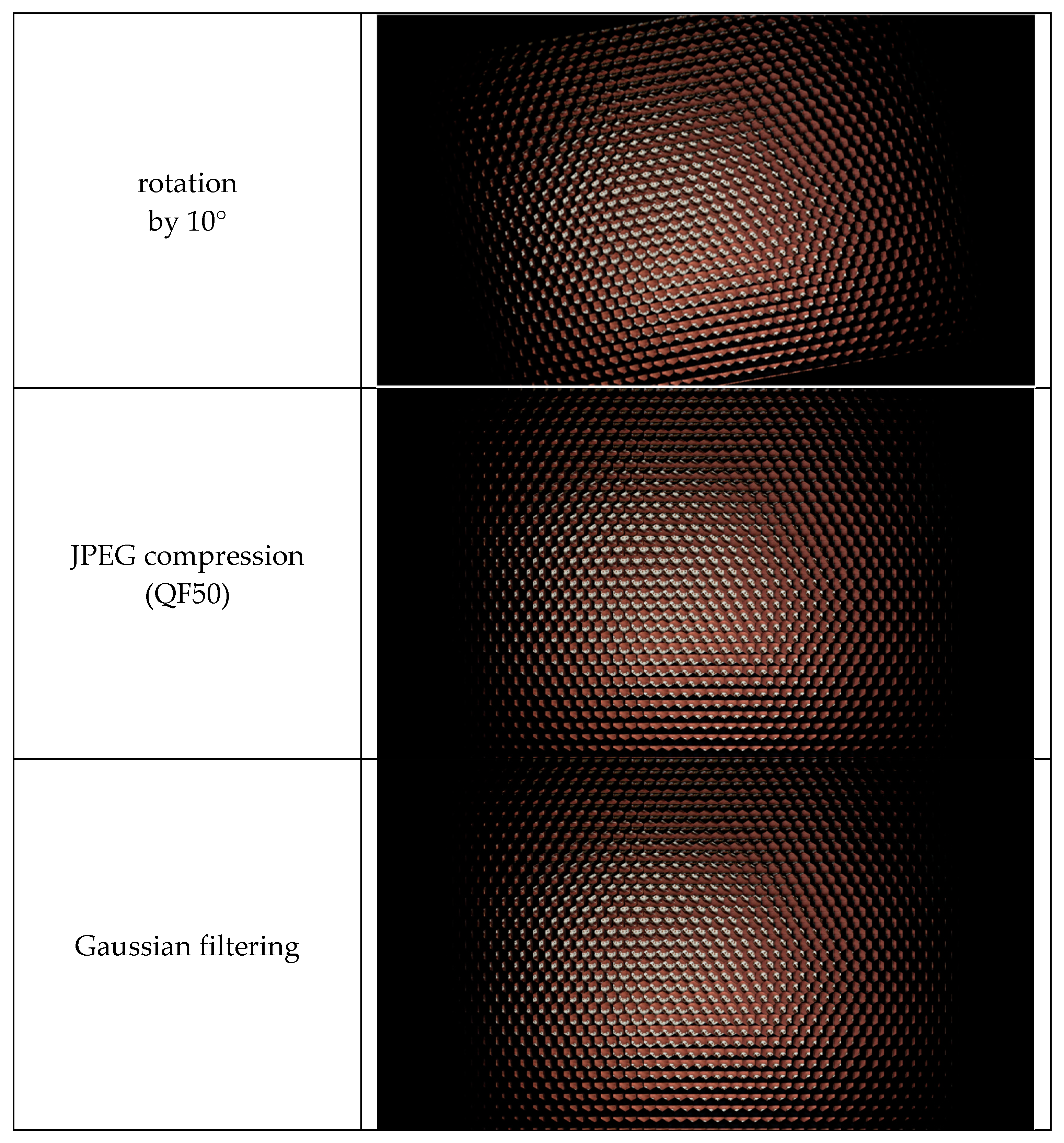

| Attacks | No Attacks | Gaussian Noise (v = 0.1) | White Noise | Rotation by 10° | JPEG Compression | Gaussian Filtering |
|---|---|---|---|---|---|---|
| Extracted ‘SCU’ watermark |  |  |  |  |  |  |
| Extracted ‘ZY’ watermark |  |  |  |  |  |  |
| Extracted ‘TL’ watermark |  |  |  |  |  |  |

| Attack | Our Method NC | Our Method BER | Method [36] NC | Method [36] BER | Method [37] NC | Method [37] BER |
|---|---|---|---|---|---|---|
| Gaussian noise (v = 0.1) | 0.9610 | 0.0162 | 0.9513 | 0.0185 | 0.9605 | 0.0184 |
| White noise | 0.9602 | 0.0184 | 0.9510 | 0.0195 | 0.9593 | 0.0189 |
| JPEG compression (QF50) | 0.9510 | 0.0194 | 0.9502 | 0.0204 | 0.9418 | 0.0264 |
| Gaussian filtering | 0.9692 | 0.0153 | 0.9508 | 0.0197 | 0.9684 | 0.0178 |
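The NC (normalized correlation) and BER (bit error rate) columns compare the extracted watermark with the original one. The sketch below uses commonly cited definitions of both metrics for binary watermarks; the exact formulas used in the paper are not given in this excerpt, so these definitions, the function names, and the sample bit sequences are assumptions.

```python
import math


def normalized_correlation(original, extracted):
    """NC = sum(w * w') / (sqrt(sum(w^2)) * sqrt(sum(w'^2))); 1.0 means identical."""
    numerator = sum(a * b for a, b in zip(original, extracted))
    denominator = math.sqrt(sum(a * a for a in original)) * math.sqrt(
        sum(b * b for b in extracted)
    )
    return numerator / denominator if denominator else 0.0


def bit_error_rate(original, extracted):
    """Fraction of watermark bits that differ after extraction; 0.0 means no errors."""
    errors = sum(1 for a, b in zip(original, extracted) if a != b)
    return errors / len(original)


# Hypothetical 4-bit watermark with one flipped bit after an attack.
w_original = [1, 0, 1, 1]
w_extracted = [1, 0, 0, 1]
nc = normalized_correlation(w_original, w_extracted)  # 2 / sqrt(6), about 0.8165
ber = bit_error_rate(w_original, w_extracted)         # 1 error in 4 bits = 0.25
```

Under these definitions, robustness improves as NC approaches 1 and BER approaches 0, which is the direction of comparison used in the table.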

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, M.; Chen, M.; Li, J.; Yu, C. 3D Copyright Protection Based on Binarized Computational Ghost Imaging Encryption and Cellular Automata Transform. Symmetry 2022, 14, 595. https://doi.org/10.3390/sym14030595