Asymmetric Free-Hand Interaction on a Large Display and Inspirations for Designing Natural User Interfaces

Abstract

1. Introduction

2. Literature Review

2.1. Fundamental Task in Free-Hand Interaction and Implementing Techniques

2.2. Ergonomic Concerns in Free-Hand Interaction and User Interfaces

2.3. Arm Movement Kinematic Features and Influences on Free-Hand Interaction

3. Research Objectives and Hypotheses

3.1. Objectives

3.2. Hypotheses Development

4. Methods

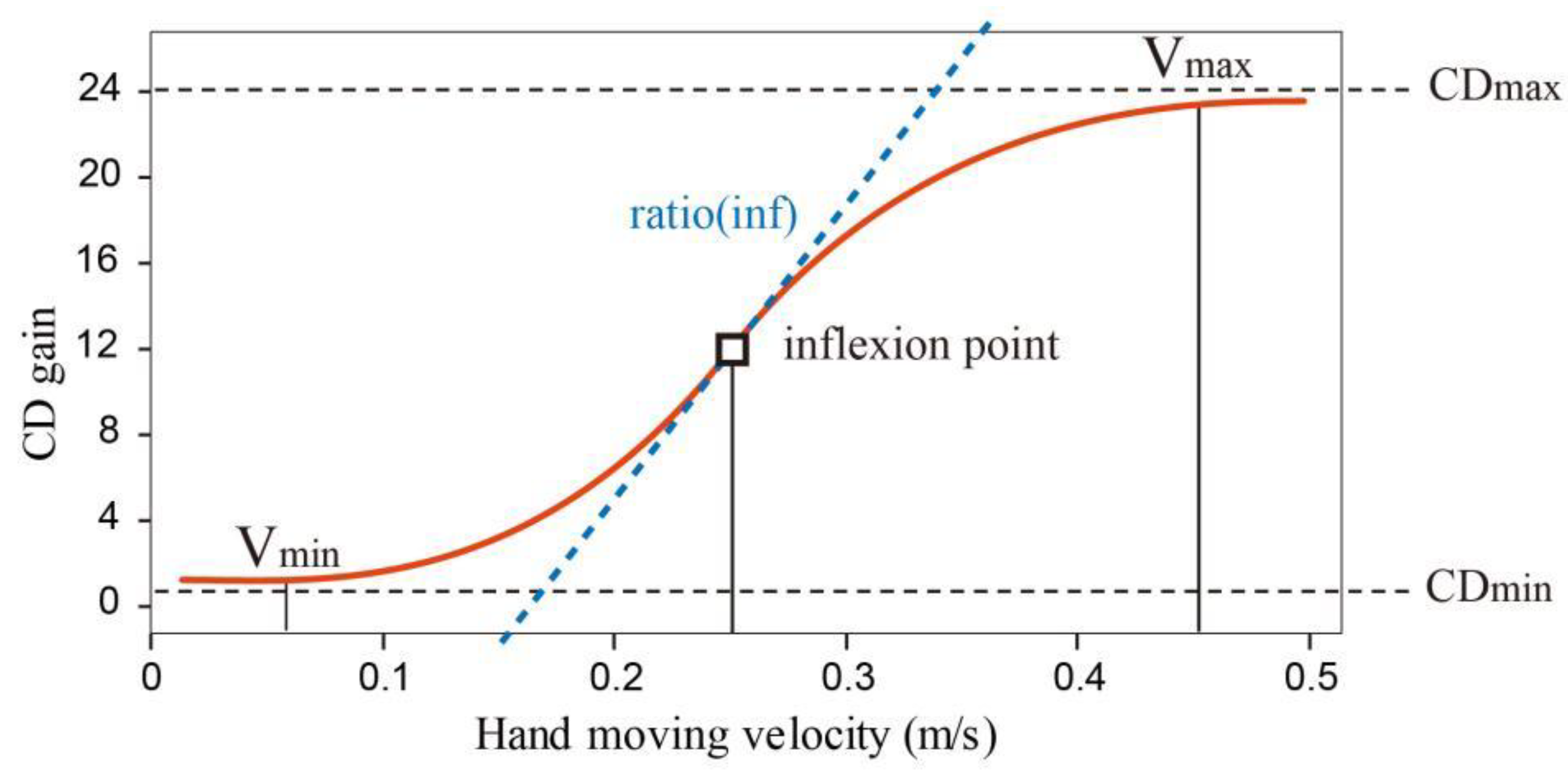

4.1. Free-Hand Target Acquisition Technique

4.2. Participants

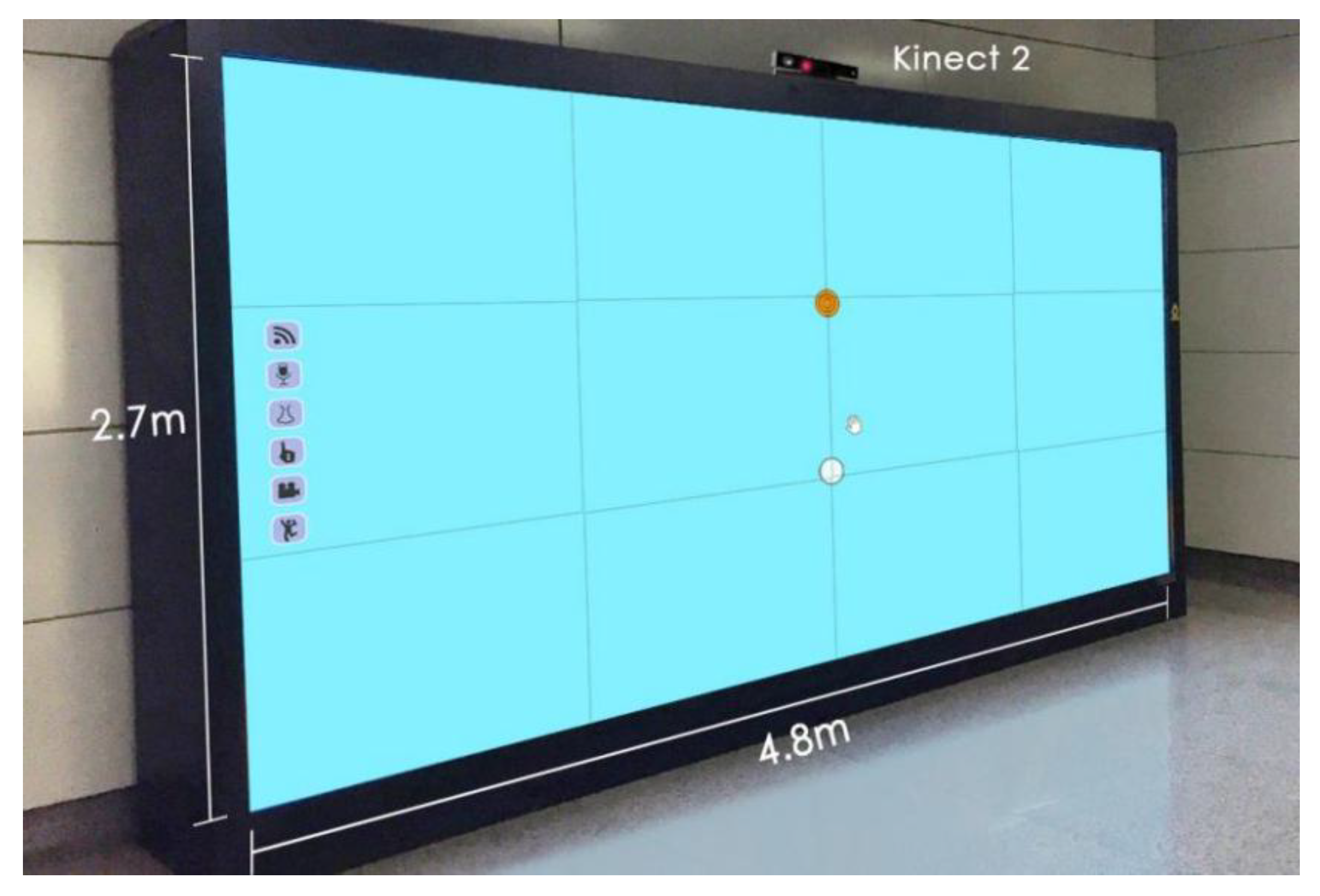

4.3. Apparatus

4.4. Independent Variables

4.5. Procedure

4.6. Design

5. Analyses and Results

5.1. Movement Time

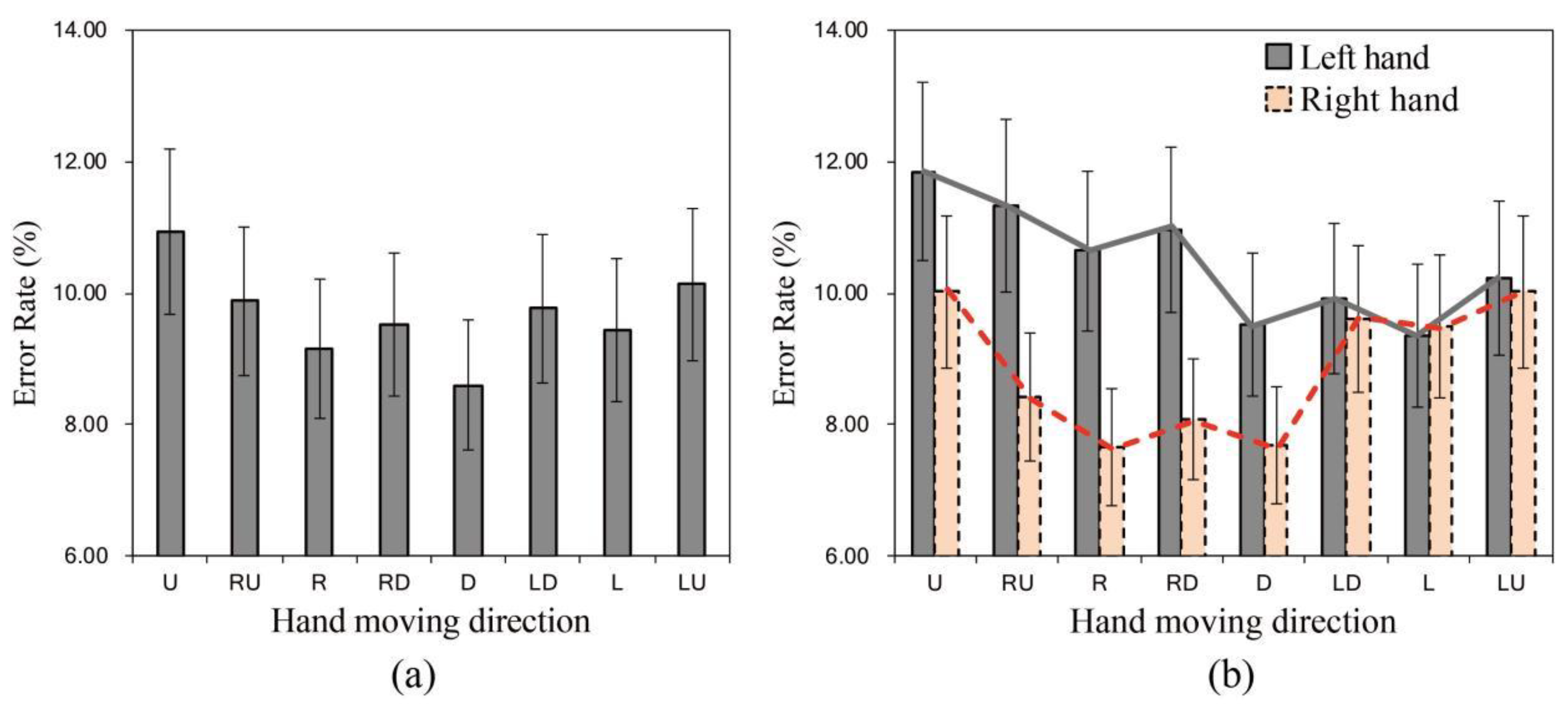

5.2. Error Rate

6. Findings Summary

- (1)

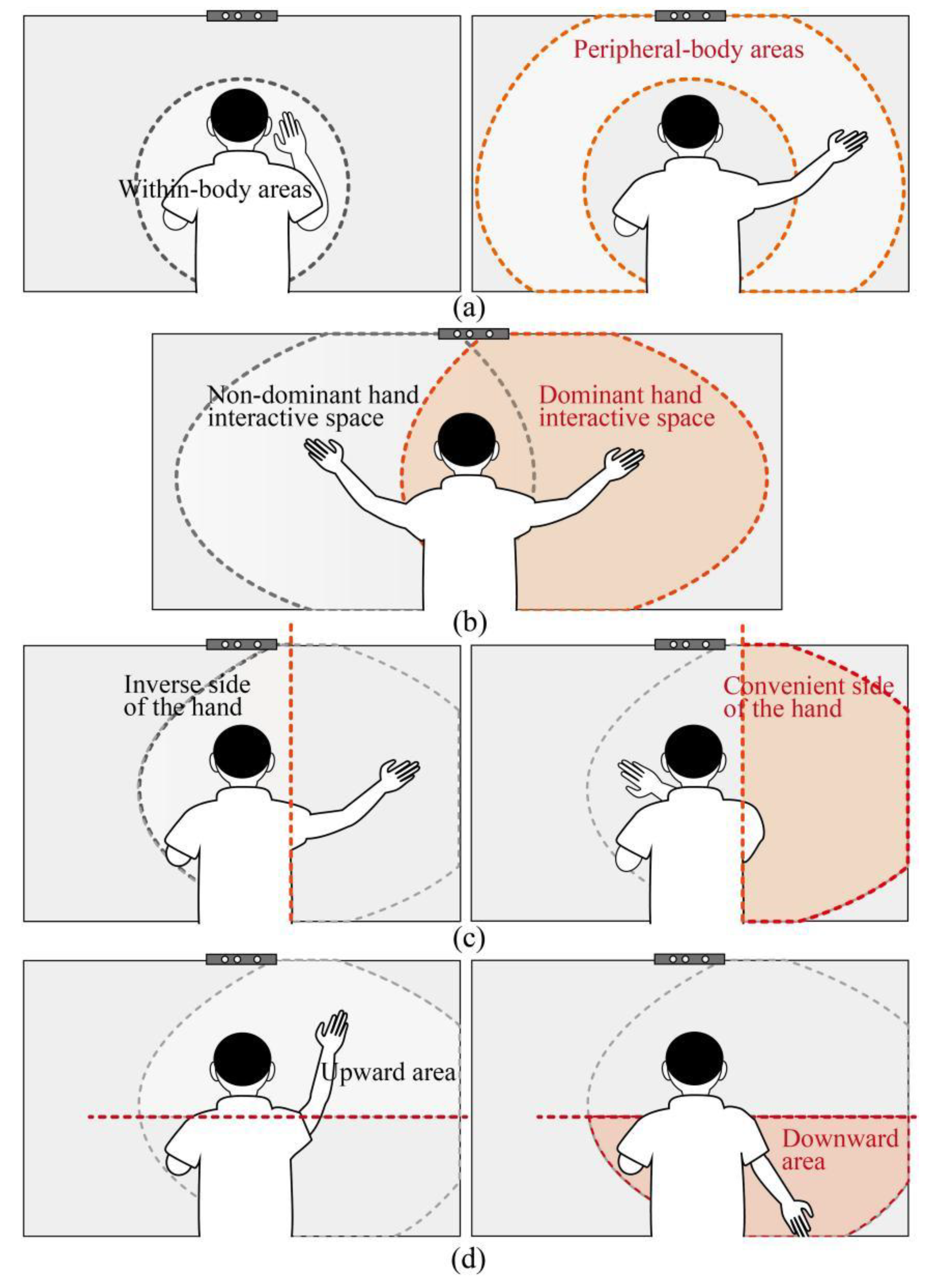

- Free-hand acquisition of targets at a closer distance (closer to the display center) generated a higher accuracy than that at a farther distance. This can be further interpreted as an ergonomic feature that hand movement and interaction in the within-body space through a bent arm posture was more accurate than that in the peripheral-body space through a stretched arm posture, as illustrated in Figure 8a.

- (2)

- All the participants engaged in the experiment were right-handed. The experimental result proved that the right-hand interaction not only had a higher target acquisition efficiency but also generated fewer errors, indicating an ‘asymmetry’ pattern in the dominant hand interaction and the other hand interaction, as illustrated in Figure 8b.

- (3)

- In either hand interaction, target acquisition on the display area at the hand’s convenient side was more efficient and accurate than that at the hand’s inverse side, as illustrated in Figure 8c.

- (4)

- Apart from the asymmetric interaction performance between the user’s left-sided and the right-sided areas, there was another ‘asymmetry’ pattern between upward and downward areas, as illustrated in Figure 8d. It was found that downward arm movement not only had a higher target acquisition efficiency but also generated a more satisfying accuracy than upward arm movement.
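To turn findings (3) and (4) into layout decisions, a designer first needs to know which of the experiment's eight movement directions a target falls into relative to the hand's starting point. The sketch below is hypothetical: the 45-degree sector boundaries, the y-up screen convention, and the function names `movement_direction`, `lateral_side`, and `vertical_component` are assumptions for illustration, not part of the authors' method.

```python
import math

# The eight movement directions from the experiment, ordered counter-clockwise
# starting at rightward (0 degrees). Assumes y grows upward on the display.
SECTORS = ("R", "RU", "U", "LU", "L", "LD", "D", "RD")


def movement_direction(dx: float, dy: float) -> str:
    """Snap a hand-to-target displacement to the nearest 45-degree sector (assumed binning)."""
    if dx == 0 and dy == 0:
        raise ValueError("a zero displacement has no direction")
    angle = math.degrees(math.atan2(dy, dx)) % 360.0
    return SECTORS[int((angle + 22.5) // 45) % 8]


def lateral_side(direction: str, hand: str) -> str:
    """Classify a direction relative to the moving hand (finding 3)."""
    if direction in ("U", "D"):
        return "neutral"  # purely vertical moves favour neither side
    own = "R" if hand == "right" else "L"
    return "convenient" if direction.startswith(own) else "inverse"


def vertical_component(direction: str) -> str:
    """Classify a direction as upward, downward, or horizontal (finding 4)."""
    if direction.endswith("U"):  # U, LU, RU
        return "upward"
    if direction.endswith("D"):  # D, LD, RD
        return "downward"
    return "horizontal"  # L, R


if __name__ == "__main__":
    d = movement_direction(300.0, -200.0)  # target right of and below the hand
    print(d, lateral_side(d, "right"), vertical_component(d))
```

For example, a target below and to the right of a right-handed user's hand classifies as RD, which lies on both the convenient side and the downward side, the regions that findings (3) and (4) associate with better performance.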

7. Discussion and Future Work

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Ardito, C.; Buono, P.; Costabile, M.F.; Desolda, G. Interaction with Large Displays: A Survey. ACM Comput. Surv. 2015, 47, 1–38.

- Muñoz, G.F.; Cardenas, R.A.M.; Pla, F. A Kinect-Based Interactive System for Home-Assisted Active Aging. Sensors 2021, 21, 417.

- Zhang, Z. Microsoft Kinect Sensor and Its Effect. IEEE MultiMedia 2012, 19, 4–10.

- Thakur, N.; Han, C.Y. An Ambient Intelligence-Based Human Behavior Monitoring Framework for Ubiquitous Environments. Information 2021, 12, 81.

- Czerwinski, M.; Robertson, G.; Meyers, B.; Smith, G.; Robbins, D.; Tan, D. Large Display Research Overview. In Proceedings of the Extended Abstracts at CHI 2006 Conference on Human Factors in Computing Systems, Montréal, QC, Canada, 22–27 April 2006; pp. 69–74.

- Stojko, L. Intercultural Usability of Large Public Displays. In Proceedings of the 2020 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Virtual Event, Mexico, 12–17 September 2020; pp. 218–222.

- Tan, D.S.; Gergle, D.; Scupelli, P.; Pausch, R. Physically large displays improve performance on spatial tasks. ACM Trans. Comput. Hum. Interact. 2006, 13, 71–99.

- Han, J.; Shao, L.; Xu, D.; Shotton, J. Enhanced Computer Vision with Microsoft Kinect Sensor: A Review. IEEE Trans. Cybern. 2013, 43, 1318–1334.

- Cavallo, M.; Rotini, R.; Cutti, A.G.; Parel, I. Functional Anatomy and Biomechanic Models of the Elbow. In The Elbow: Principles of Surgical Treatment and Rehabilitation, 1st ed.; Porcellini, G., Rotini, R., Stignani Kantar, S., Di Giacomo, S., Eds.; Springer: Berlin/Heidelberg, Germany, 2017; pp. 29–40.

- Keefe, D.F.; Gupta, A.; Feldman, D.; Carlis, J.V.; Keefe, S.K.; Griffin, T.J. Scaling up multi-touch selection and querying: Interfaces and applications for combining mobile multi-touch input with large-scale visualization displays. Int. J. Hum. Comput. Stud. 2012, 70, 703–713.

- Malik, S.; Ranjan, A.; Balakrishnan, R. Interacting with Large Displays from a Distance with Vision-Tracked Multi-Finger Gestural Input. In Proceedings of the 18th Annual ACM Symposium on User Interface Software and Technology, Seattle, WA, USA, 23–26 October 2005; pp. 43–52.

- Shoemaker, G.; Tang, A.; Booth, K.S. Shadow Reaching: A New Perspective on Interaction for Large Displays. In Proceedings of the 20th Annual ACM Symposium on User Interface Software and Technology, Newport, RI, USA, 7–10 October 2007; pp. 53–56.

- Banerjee, A.; Burstyn, J.; Girouard, A.; Vertegaal, R. Pointable: An In-Air Pointing Technique to Manipulate Out-of-Reach Targets on Tabletops. In Proceedings of the ACM International Conference on Interactive Tabletops and Surfaces, Kobe, Japan, 13–16 November 2011; pp. 11–20.

- Jude, A.; Poor, G.M.; Guinness, D. An Evaluation of Touchless Hand Gestural Interaction for Pointing Tasks with Preferred and Non-Preferred Hands. In Proceedings of the 8th Nordic Conference on Human-Computer Interaction: Fun, Fast, Foundational, Helsinki, Finland, 26–30 October 2014; pp. 668–676.

- Hespanhol, L.; Tomitsch, M.; Grace, K.; Collins, A.; Kay, J. Investigating Intuitiveness and Effectiveness of Gestures for Free Spatial Interaction with Large Displays. In Proceedings of the 2012 International Symposium on Pervasive Displays, Porto, Portugal, 4–5 June 2012; pp. 1–6.

- Vogel, D.; Balakrishnan, R. Distant Freehand Pointing and Clicking on Very Large, High Resolution Displays. In Proceedings of the 18th Annual ACM Symposium on User Interface Software and Technology, Seattle, WA, USA, 23–26 October 2005; pp. 33–42.

- Haque, F.; Nancel, M.; Vogel, D. Myopoint: Pointing and Clicking Using Forearm Mounted Electromyography and Inertial Motion Sensors. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, Seoul, Korea, 18–23 April 2015; pp. 3653–3656.

- Toledo-Perez, D.C.; Rodriguez-Resendiz, J.; Gomez-Loenzo, R.A. A study of computing zero crossing methods and an improved proposal for EMG signals. IEEE Access 2020, 8, 8783–8790.

- Toledo-Pérez, D.C.; Martínez-Prado, M.A.; Gómez-Loenzo, R.A.; Paredes-García, W.J.; Rodríguez-Resendiz, J. A study of movement classification of the lower limb based on up to 4-EMG channels. Electronics 2019, 8, 259.

- Patil, A.K.; Balasubramanyam, A.; Ryu, J.Y.; B N, P.K.; Chakravarthi, B.; Chai, Y.H. Fusion of Multiple Lidars and Inertial Sensors for the Real-Time Pose Tracking of Human Motion. Sensors 2020, 20, 5342.

- Clark, A.; Dünser, A.; Billinghurst, M.; Piumsomboon, T.; Altimira, D. Seamless Interaction in Space. In Proceedings of the 23rd Australian Computer-Human Interaction Conference, Canberra, Australia, 28 November–2 December 2011; pp. 88–97.

- Schwaller, M.; Brunner, S.; Lalanne, D. Two Handed Mid-Air Gestural HCI: Point + Command. In Proceedings of the 15th International Conference on Human-Computer Interaction, Las Vegas, NV, USA, 21–26 July 2013; pp. 388–397.

- Bi, X.; Shi, Y.; Chen, X.; Xiang, P. Facilitating Interaction with Large Displays in Smart Spaces. In Proceedings of the 2005 Joint Conference on Smart Objects and Ambient Intelligence: Innovative Context-Aware Services: Usages and Technologies, Grenoble, France, 12–14 October 2005; pp. 105–110.

- Mäkelä, V.; Heimonen, T.; Turunen, M. Magnetic Cursor: Improving Target Selection in Freehand Pointing Interfaces. In Proceedings of the International Symposium on Pervasive Displays, Copenhagen, Denmark, 5–6 June 2014; pp. 112–117.

- Bateman, S.; Mandryk, R.L.; Gutwin, C.; Xiao, R. Analysis and comparison of target assistance techniques for relative ray-cast pointing. Int. J. Hum. Comput. Stud. 2013, 71, 511–532.

- Ren, G.; O’Neill, E. 3D selection with freehand gesture. Comput. Graph. 2013, 37, 101–120.

- Zanini, A.; Patané, I.; Blini, E.; Salemme, R.; Brozzoli, C. Peripersonal and reaching space differ: Evidence from their spatial extent and multisensory facilitation pattern. Psychon. Bull. Rev. 2021, 28, 1894–1905.

- Ball, R.; North, C.; Bowman, D.A. Move to Improve: Promoting Physical Navigation to Increase User Performance with Large Displays. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, San Jose, CA, USA, 28 April–3 May 2007; pp. 191–200.

- Shoemaker, G.; Tsukitani, T.; Kitamura, Y.; Booth, K.S. Body-Centric Interaction Techniques for Very Large Wall Displays. In Proceedings of the Nordic Conference on Human-Computer Interaction, Reykjavik, Iceland, 16–20 October 2010; pp. 463–472.

- Feng, J.; Spence, I. Left or Right? Spatial Arrangement for Information Presentation on Large Displays. In Proceedings of the 2010 Conference of the Center for Advanced Studies on Collaborative Research, Toronto, ON, Canada, 1–4 November 2010; pp. 154–159.

- Previc, F.H. Functional specialization in the lower and upper visual fields in humans: Its ecological origins and neurophysiological implications. Behav. Brain Sci. 1990, 13, 519–542.

- Fan, X.; Liu, Z.; Zhou, Q.; Xie, F. Spatial Effect of Target Display on Visual Search. In Proceedings of the International Conference on Human-Computer Interaction, Los Angeles, CA, USA, 2–7 August 2015; pp. 98–103.

- Po, B.A.; Fisher, B.D.; Booth, K.S. Mouse and Touchscreen Selection in the Upper and Lower Visual Fields. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Vienna, Austria, 24–29 April 2004; pp. 359–366.

- Fikkert, W.; Vet, P.V.D.; Nijholt, A. User-Evaluated Gestures for Touchless Interactions from a Distance. In Proceedings of the 2010 IEEE International Symposium on Multimedia, Taichung, Taiwan, 13–15 December 2010; pp. 153–160.

- Banerjee, A.; Burstyn, J.; Girouard, A.; Vertegaal, R. MultiPoint: Comparing laser and manual pointing as remote input in large display interactions. Int. J. Hum. Comput. Stud. 2012, 70, 690–702.

- Nancel, M.; Wagner, J.; Pietriga, E.; Chapuis, O.; Mackay, W. Mid-Air Pan-and-Zoom on Wall-Sized Displays. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Vancouver, BC, Canada, 7–12 May 2011; pp. 177–186.

- Jota, R.; Pereira, J.M.; Jorge, J.A. A Comparative Study of Interaction Metaphors for Large-Scale Displays. In Proceedings of the Extended Abstracts at CHI 2009 Conference on Human Factors in Computing Systems, Boston, MA, USA, 4–9 April 2009; pp. 4135–4140.

- Nancel, M.; Pietriga, E.; Chapuis, O.; Beaudouin-Lafon, M. Mid-Air Pointing on Ultra-Walls. ACM Trans. Comput. Hum. Interact. 2015, 22, 1–62.

- Büsch, D.; Hagemann, N.; Bender, N. The dimensionality of the Edinburgh Handedness Inventory: An analysis with models of the item response theory. Later. Asymmetries Body Brain Cogn. 2010, 15, 610–628.

- Natapov, D.; Castellucci, S.J.; MacKenzie, I.S. ISO 9241-9 Evaluation of Video Game Controllers. In Proceedings of the Graphics Interface 2009, Kelowna, BC, Canada, 25–27 May 2009; pp. 223–230.

- Fitts, P.M. The information capacity of the human motor system in controlling the amplitude of movement. J. Exp. Psychol. 1954, 47, 381–391.

- Soukoreff, R.W.; MacKenzie, I.S. Towards a standard for pointing device evaluation, perspectives on 27 years of Fitts’ law research in HCI. Int. J. Hum. Comput. Stud. 2004, 61, 751–789.

| Independent Variables | Values |

|---|---|

| Moving amplitude (A) | (1) 800 mm; (2) 1600 mm; (3) 2400 mm; |

| Target width (W) | (1) 32 mm; (2) 64 mm; (3) 128 mm; |

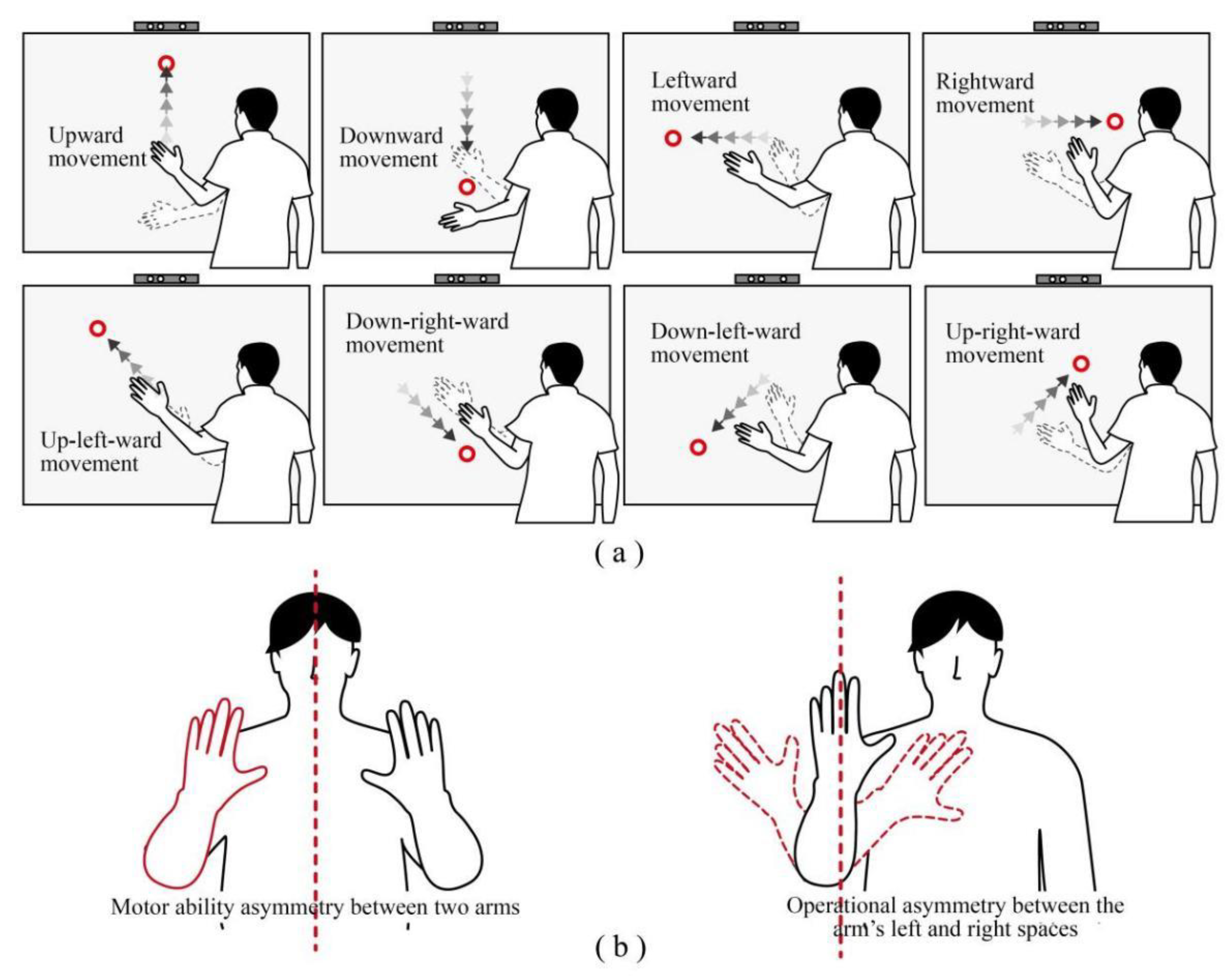

| Moving direction | (1) Upward (U); (2) Downward (D); (3) Leftward (L); (4) Rightward (R); (5) Left-up-ward (LU); (6) Right-up-ward (RU); (7) Left-down-ward (LD); (8) Right-down-ward (RD); |

| Hand use choice | (1) Left hand; (2) Right hand; |
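The amplitude and width levels above determine how difficult each pointing task is. The reference list cites Fitts (1954) and Soukoreff and MacKenzie (2004), but this section does not state which formulation of the index of difficulty (ID) the authors used. The sketch below assumes the Shannon formulation, ID = log2(A/W + 1), and a fully crossed design of all four independent variables; both are assumptions, and the helper names are illustrative.

```python
import itertools
import math

# Levels from the independent-variables table (millimetres for A and W).
AMPLITUDES_MM = (800, 1600, 2400)
WIDTHS_MM = (32, 64, 128)
DIRECTIONS = ("U", "D", "L", "R", "LU", "RU", "LD", "RD")
HANDS = ("left", "right")


def index_of_difficulty(amplitude_mm: float, width_mm: float) -> float:
    """Fitts' index of difficulty in bits, Shannon formulation (assumed)."""
    return math.log2(amplitude_mm / width_mm + 1)


def id_table() -> dict[tuple[int, int], float]:
    """ID for every amplitude x width pairing."""
    return {(a, w): index_of_difficulty(a, w) for a in AMPLITUDES_MM for w in WIDTHS_MM}


def all_conditions() -> list[tuple[int, int, str, str]]:
    """Every combination of the four variables, assuming a fully crossed design."""
    return list(itertools.product(AMPLITUDES_MM, WIDTHS_MM, DIRECTIONS, HANDS))


if __name__ == "__main__":
    for (a, w), bits in sorted(id_table().items()):
        print(f"A={a:>4} mm  W={w:>3} mm  ID={bits:.2f} bits")
    print(len(all_conditions()), "conditions")
```

Under these assumptions the nine A × W pairings span roughly 2.86 to 6.25 bits. Two pairs of pairings share the same ID (800/32 with 1600/64, and 800/64 with 1600/128), because the index depends only on the ratio A/W, so only seven distinct difficulty levels result.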

| Hand Moving Direction | Left Hand: Mean MT (ms) ± SD | Right Hand: Mean MT (ms) ± SD |
|---|---|---|
| Upward (U) | 1553.08 ± 182.51 | 1558.86 ± 183.27 |
| Right-upward (RU) | 1593.50 ± 176.70 | 1389.29 ± 175.77 |
| Rightward (R) | 1414.21 ± 172.99 | 1270.84 ± 172.16 |
| Right-downward (RD) | 1490.38 ± 168.26 | 1344.44 ± 168.92 |
| Downward (D) | 1280.38 ± 183.54 | 1274.42 ± 182.68 |
| Left-downward (LD) | 1331.33 ± 169.77 | 1471.64 ± 169.94 |
| Leftward (L) | 1282.83 ± 173.42 | 1428.34 ± 173.90 |
| Left-upward (LU) | 1393.19 ± 178.69 | 1588.52 ± 175.48 |
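Findings (3) and (4) can be cross-checked descriptively against the cell means in the movement-time table. The sketch below assumes that a hand's convenient side consists of the three directions pointing toward that hand's own side (L, LU, LD for the left hand; R, RU, RD for the right hand). It averages the published cell means without weighting, so it is only a descriptive summary, not a substitute for the authors' inferential analysis, which is not reproduced in this section.

```python
from statistics import mean

# Cell means (ms) transcribed from the movement-time table:
# direction -> (left hand, right hand).
MEAN_MT_MS = {
    "U": (1553.08, 1558.86),
    "RU": (1593.50, 1389.29),
    "R": (1414.21, 1270.84),
    "RD": (1490.38, 1344.44),
    "D": (1280.38, 1274.42),
    "LD": (1331.33, 1471.64),
    "L": (1282.83, 1428.34),
    "LU": (1393.19, 1588.52),
}

HAND_INDEX = {"left": 0, "right": 1}
# Assumed grouping: the directions that point toward each hand's own side.
OWN_SIDE = {"left": ("L", "LU", "LD"), "right": ("R", "RU", "RD")}


def convenient_vs_inverse(table: dict[str, tuple[float, float]], hand: str) -> tuple[float, float]:
    """Unweighted mean over the hand's own-side directions vs. the opposite side."""
    i = HAND_INDEX[hand]
    other = "right" if hand == "left" else "left"
    convenient = mean(table[d][i] for d in OWN_SIDE[hand])
    inverse = mean(table[d][i] for d in OWN_SIDE[other])
    return convenient, inverse


if __name__ == "__main__":
    for hand, i in HAND_INDEX.items():
        conv, inv = convenient_vs_inverse(MEAN_MT_MS, hand)
        up, down = MEAN_MT_MS["U"][i], MEAN_MT_MS["D"][i]
        print(f"{hand:>5} hand: convenient {conv:.1f} ms vs inverse {inv:.1f} ms; "
              f"upward {up:.1f} ms vs downward {down:.1f} ms")
```

For both hands, the convenient-side average is roughly 160 ms shorter than the inverse-side average, and purely downward movement is about 270 to 285 ms faster than purely upward movement, which is consistent in direction with findings (3) and (4).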

| Hand Moving Direction | Left Hand: Mean Error Rate (%) ± SD | Right Hand: Mean Error Rate (%) ± SD |
|---|---|---|
| Upward (U) | 11.85 ± 1.23 | 10.02 ± 1.15 |
| Right-upward (RU) | 11.34 ± 1.36 | 8.42 ± 1.07 |
| Rightward (R) | 10.64 ± 1.29 | 7.67 ± 0.90 |
| Right-downward (RD) | 10.96 ± 1.24 | 8.08 ± 0.84 |
| Downward (D) | 9.52 ± 0.98 | 7.68 ± 0.83 |
| Left-downward (LD) | 9.92 ± 1.05 | 9.61 ± 1.09 |
| Leftward (L) | 9.35 ± 1.17 | 9.50 ± 1.03 |
| Left-upward (LU) | 10.24 ± 1.09 | 10.03 ± 1.02 |
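The same descriptive check applies to the error-rate table, here for finding (2) (dominant vs. non-dominant hand) and finding (4) (upward vs. downward). Grouping each diagonal direction with its vertical component (U, LU, RU as upward; D, LD, RD as downward) is an assumption made for this sketch, and all figures are unweighted averages of the published cell means rather than the authors' statistics.

```python
from statistics import mean

# Cell means (%) transcribed from the error-rate table:
# direction -> (left hand, right hand).
MEAN_ERROR_PCT = {
    "U": (11.85, 10.02),
    "RU": (11.34, 8.42),
    "R": (10.64, 7.67),
    "RD": (10.96, 8.08),
    "D": (9.52, 7.68),
    "LD": (9.92, 9.61),
    "L": (9.35, 9.50),
    "LU": (10.24, 10.03),
}

# Assumed grouping of diagonal directions by their vertical component.
UPWARD = ("U", "LU", "RU")
DOWNWARD = ("D", "LD", "RD")


def hand_mean(table: dict[str, tuple[float, float]], hand_index: int) -> float:
    """Unweighted mean error rate of one hand over all eight directions."""
    return mean(cells[hand_index] for cells in table.values())


def vertical_means(table: dict[str, tuple[float, float]], hand_index: int) -> tuple[float, float]:
    """Unweighted mean error rate over the upward group vs. the downward group."""
    up = mean(table[d][hand_index] for d in UPWARD)
    down = mean(table[d][hand_index] for d in DOWNWARD)
    return up, down


if __name__ == "__main__":
    for name, i in (("left", 0), ("right", 1)):
        up, down = vertical_means(MEAN_ERROR_PCT, i)
        print(f"{name:>5} hand: overall {hand_mean(MEAN_ERROR_PCT, i):.2f}%; "
              f"upward {up:.2f}% vs downward {down:.2f}%")
```

Averaged this way, the right hand's error rate (about 8.9%) is lower than the left hand's (about 10.5%), and the downward group shows a lower error rate than the upward group for both hands.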

| Research | Interaction Difference: Within-Body Space vs. Peripheral Space | Interaction Difference: Dominant Hand vs. Non-Dominant Hand | Interaction Difference: Hand’s Convenient Side vs. Inverse Side | Interaction Difference: Upward Space vs. Downward Space |
|---|---|---|---|---|
| Lou et al. (2022) | Yes | Yes | Yes | Yes |
| Ball et al. [26] | Yes | No | No | No |
| Shoemaker et al. [27] | Yes | No | No | No |
| Previc [29] | No | No | No | Yes |
| Po et al. [31] | No | No | No | Yes |
| Malik et al. [11]; Jude et al. [14] | No | Yes | No | No |
| Ren & O’Neill [24] | No | No | Yes | Yes |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lou, X.; Chen, Z.; Hansen, P.; Peng, R. Asymmetric Free-Hand Interaction on a Large Display and Inspirations for Designing Natural User Interfaces. Symmetry 2022, 14, 928. https://doi.org/10.3390/sym14050928