Learning by Autonomous Manifold Deformation with an Intrinsic Deforming Field

Abstract

1. Introduction

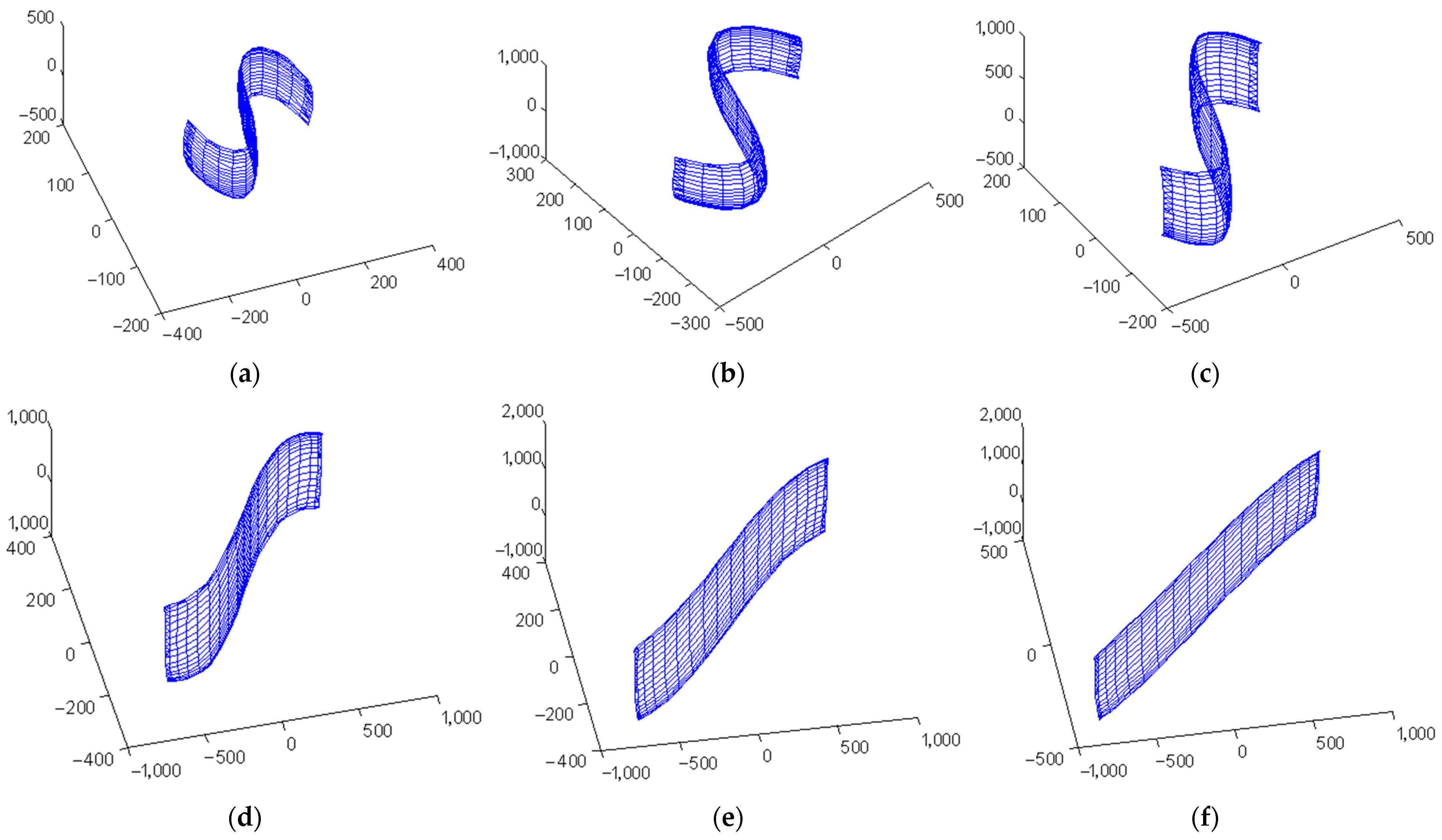

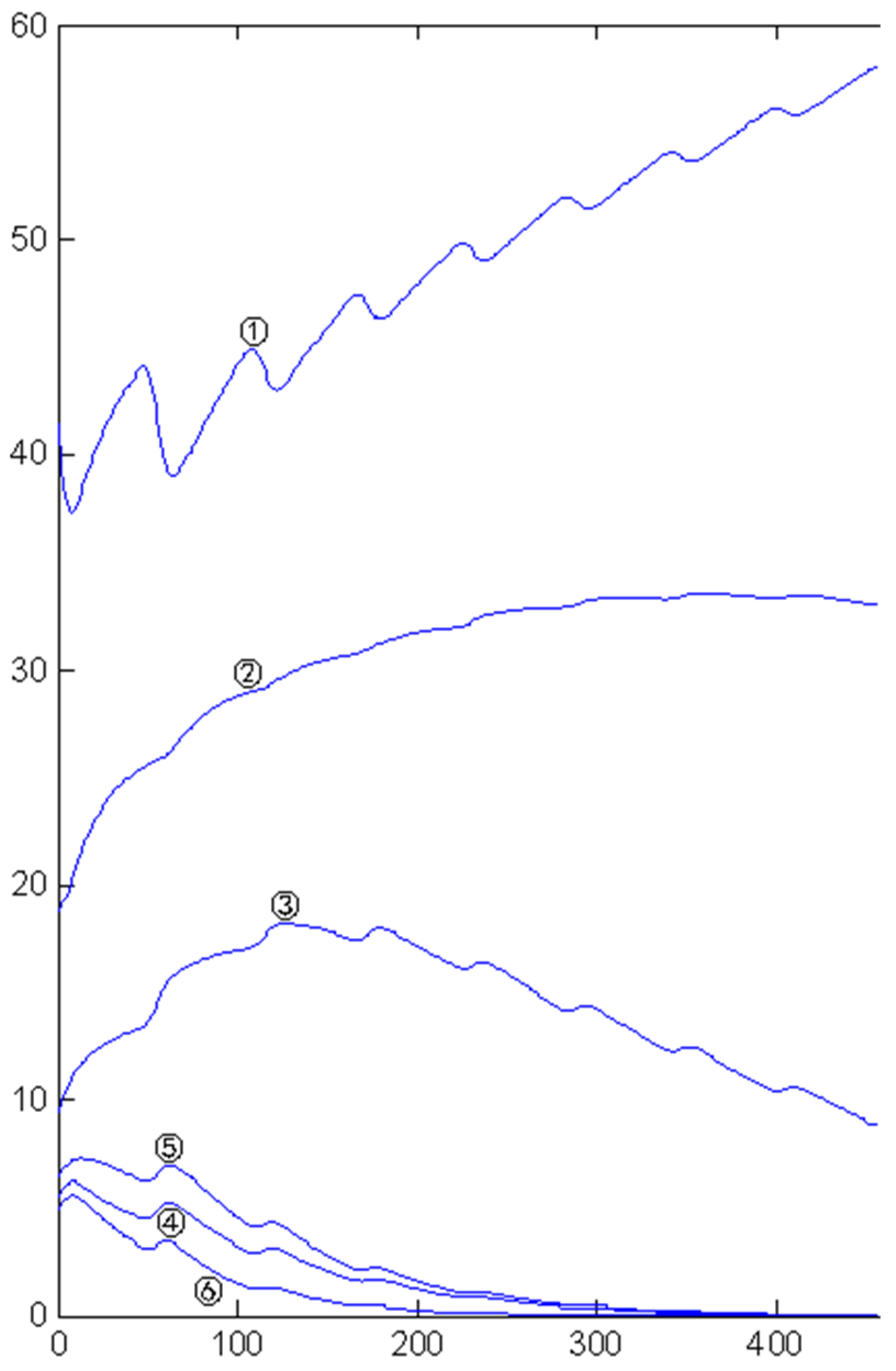

2. Dimension Reduction by Autonomous Deformation of Data Manifolds

2.1. The Soft Neighborhood of Data Points

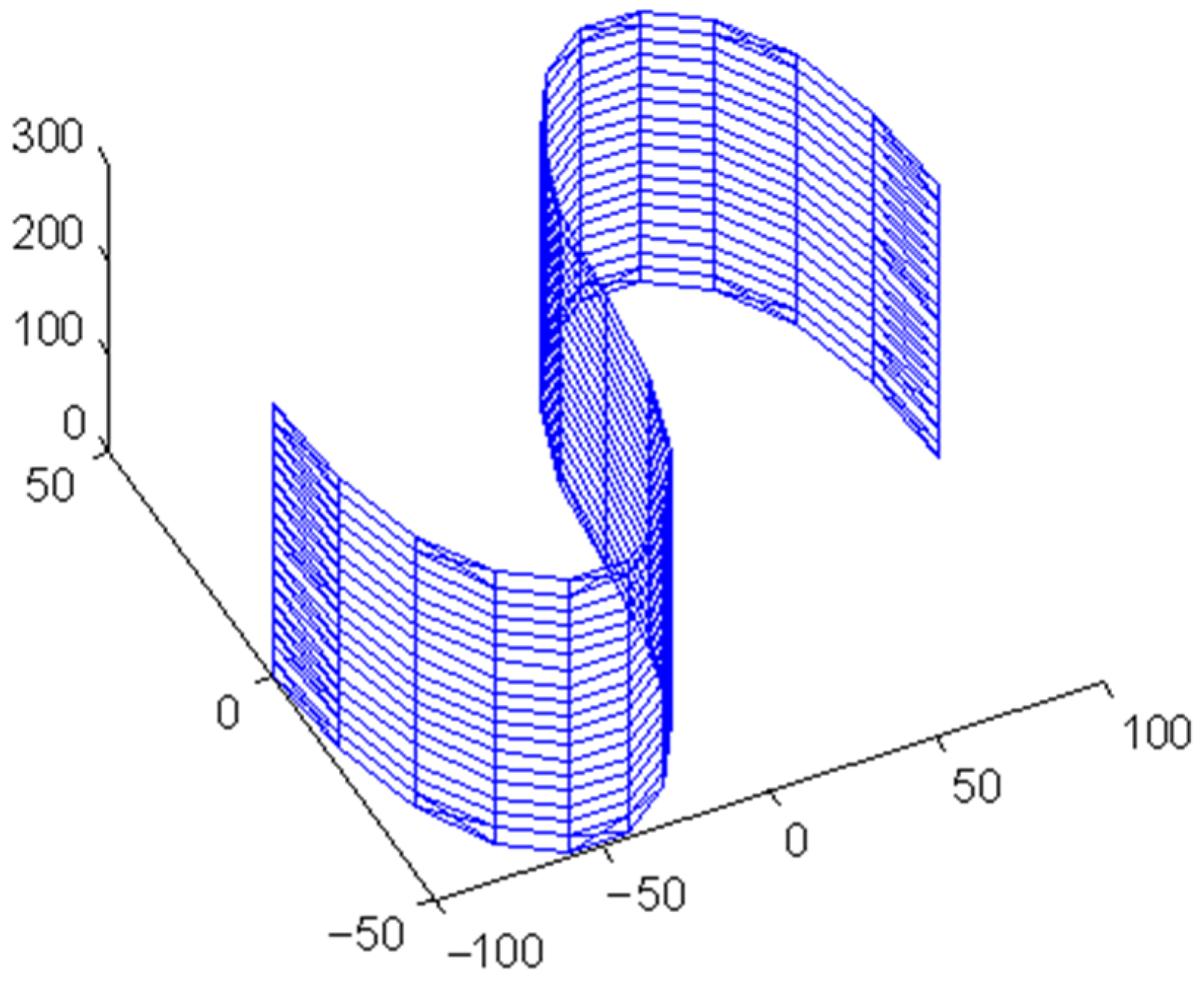

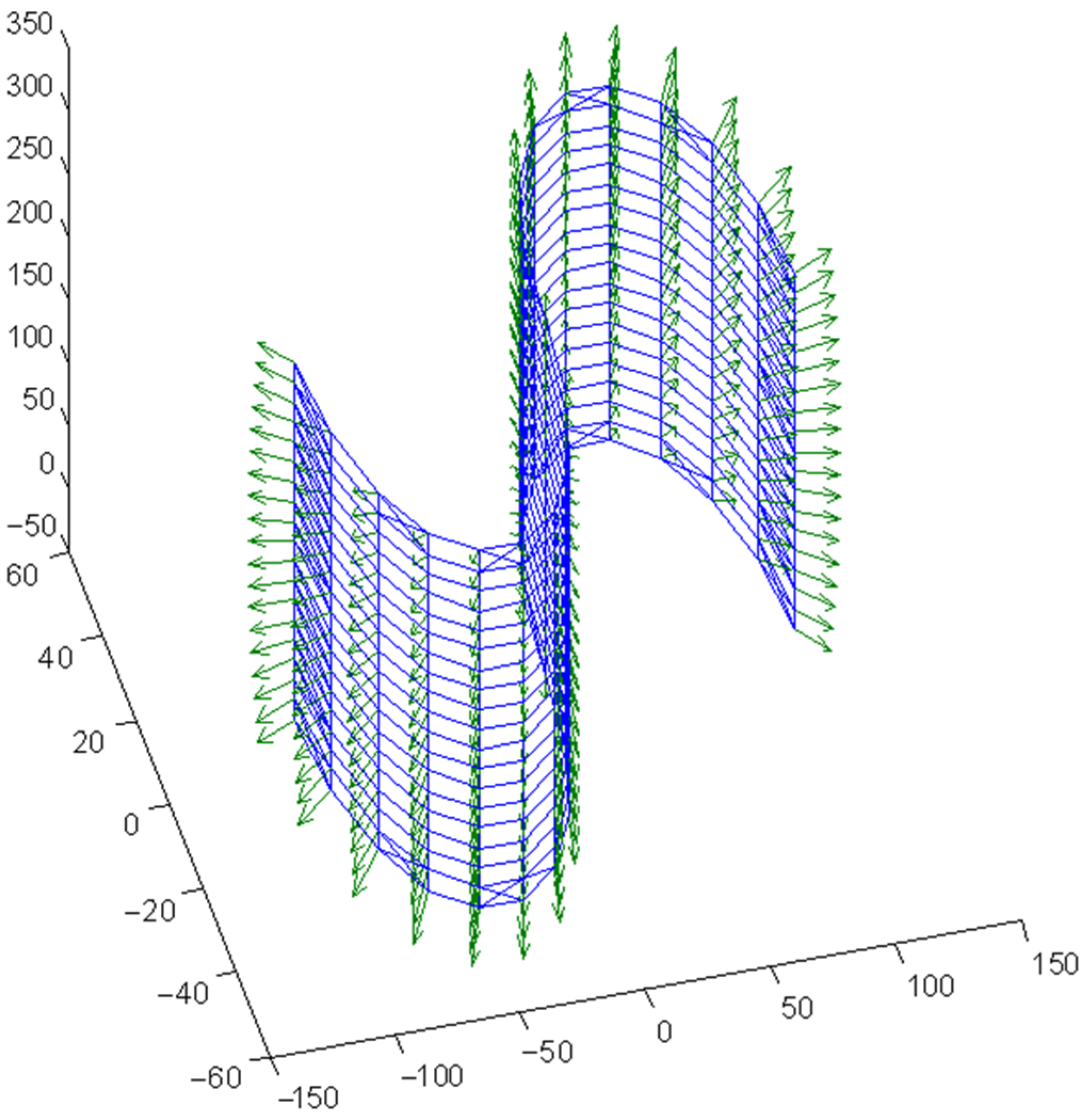

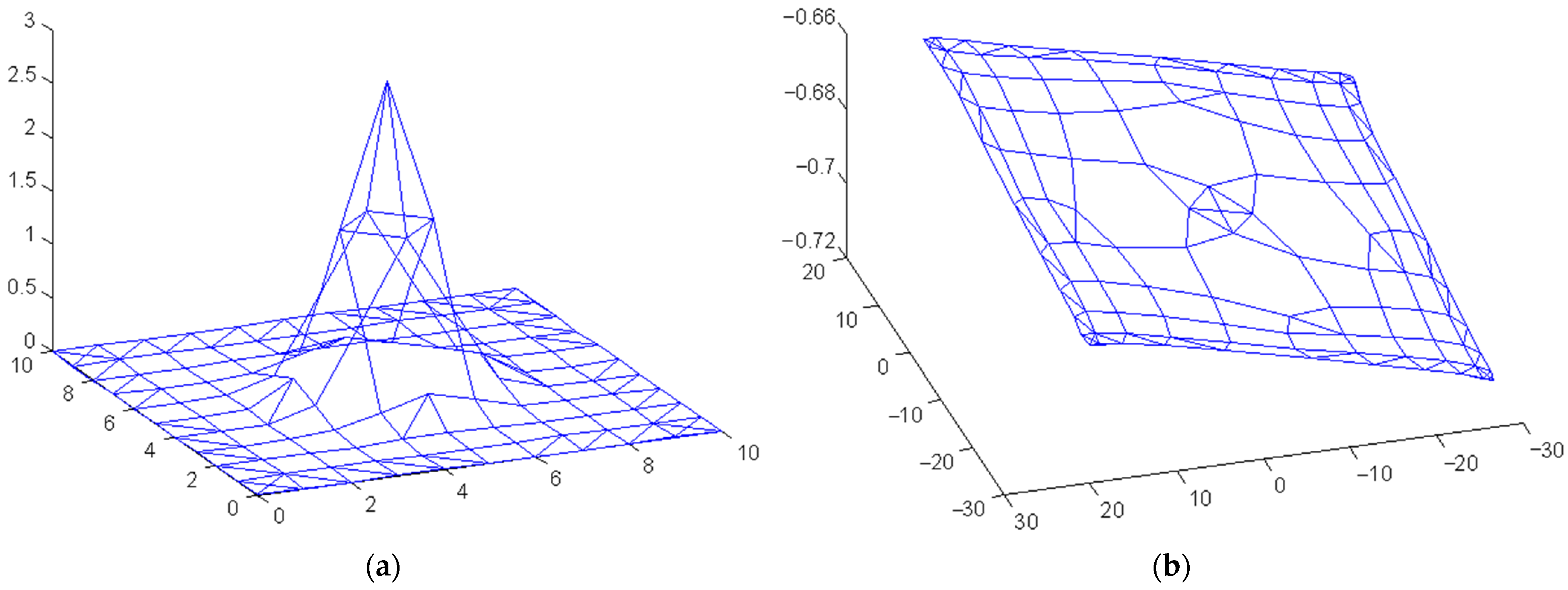

2.2. Intrinsic Deforming Vector Field with Flattening Effect

2.3. The Manifold Deformation Learning Algorithm

- Step 1: Compute the Euclidean distance dij between each pair of data points in the original data set.

- Step 2: For each data point pi, find its k nearest neighbor points; these are the members of its soft neighborhood point set.

- Step 3: For each data point pi, compute the neighbor degree NDij of each point in its soft neighborhood set.

- Step 4: Initialize the deforming-step counter C to zero.

- Step 5: For each data point pi, compute the displacement vector according to Equation (5) (the displacement vector is determined by pi's current position and the current manifold shape in Rn).

- Step 6: For each data point pi, update its position according to its displacement vector.

- Step 7: Increase C by 1.

- Step 8: Check the termination condition. If the sum of the displacement magnitudes over all the points is smaller than a threshold ε, or C reaches a given value Cmax, go to Step 9. Otherwise, return to Step 5.

- Step 9: Carry out principal component analysis (PCA) on the deformed manifold to obtain the final dimension reduction result. The number of principal components is taken as the estimated intrinsic dimension of the manifold, and the low-dimensional coordinates of each data point pi are computed by projection onto the principal component vectors.
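The control flow of Steps 1 to 9 can be sketched in Python with NumPy. This is only an illustrative skeleton under stated assumptions, not the authors' implementation: the neighbor degree NDij and the displacement of Equation (5) are not reproduced in this section, so `placeholder_neighbor_degree` and `toy_displacement` are hypothetical stand-ins (a Gaussian of distance and a pull toward the weighted neighbor mean, respectively). The additive position update, the summed-norm stopping test, the variance threshold `var_keep` used to pick the number of principal components, and all function and parameter names are likewise assumptions made for the example.

```python
import numpy as np

def pairwise_distances(X):
    """Step 1: Euclidean distance d_ij between every pair of points."""
    diff = X[:, None, :] - X[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))

def k_nearest_neighbors(D, k):
    """Step 2: indices of the k nearest neighbors of each point (self excluded)."""
    D_no_self = D.copy()
    np.fill_diagonal(D_no_self, np.inf)
    return np.argsort(D_no_self, axis=1)[:, :k]

def placeholder_neighbor_degree(D, nbrs):
    """Step 3 stand-in. ASSUMPTION: a Gaussian of distance, not the paper's ND_ij."""
    d = np.take_along_axis(D, nbrs, axis=1)
    sigma = d.mean() + 1e-12
    return np.exp(-(d / sigma) ** 2)

def toy_displacement(P, nbrs, nd, step=0.1):
    """Step 5 stand-in. ASSUMPTION: a pull toward the ND-weighted neighbor mean,
    NOT the paper's Equation (5)."""
    w = nd / nd.sum(axis=1, keepdims=True)
    target = (w[:, :, None] * P[nbrs]).sum(axis=1)
    return step * (target - P)

def deform_and_reduce(X, k, displacement_fn, eps=1e-6, c_max=100, var_keep=0.95):
    P = np.asarray(X, dtype=float).copy()
    D = pairwise_distances(P)                    # Step 1
    nbrs = k_nearest_neighbors(D, k)             # Step 2
    nd = placeholder_neighbor_degree(D, nbrs)    # Step 3 (placeholder)
    c = 0                                        # Step 4
    while True:
        disp = displacement_fn(P, nbrs, nd)      # Step 5 (caller-supplied field)
        P = P + disp                             # Step 6 (assumed additive update)
        c += 1                                   # Step 7
        # Step 8: stop when the total displacement is small or C reaches Cmax.
        if np.linalg.norm(disp, axis=1).sum() < eps or c >= c_max:
            break
    # Step 9: PCA via SVD of the centered, deformed point set.
    centered = P - P.mean(axis=0)
    _, S, Vt = np.linalg.svd(centered, full_matrices=False)
    var = S ** 2
    ratio = np.cumsum(var) / max(var.sum(), 1e-300)
    # ASSUMPTION: keep the fewest components explaining var_keep of the variance.
    dim = min(int(np.searchsorted(ratio, var_keep)) + 1, len(S))
    return centered @ Vt[:dim].T, dim, c

# Usage on synthetic data: a flat 2-D sheet embedded in R^3.
rng = np.random.default_rng(0)
uv = rng.uniform(-1.0, 1.0, size=(60, 2))
X = np.column_stack([uv, np.zeros(60)])
Y, dim, steps = deform_and_reduce(X, k=6, displacement_fn=toy_displacement, c_max=20)
```

Passing the displacement field as a callable keeps the loop of Steps 4 to 8 separate from the field itself, so an implementation of Equation (5) could be substituted for the toy stand-in without changing the rest of the sketch. On this synthetic sheet the third coordinate never moves, so the estimated dimension should be at most two.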

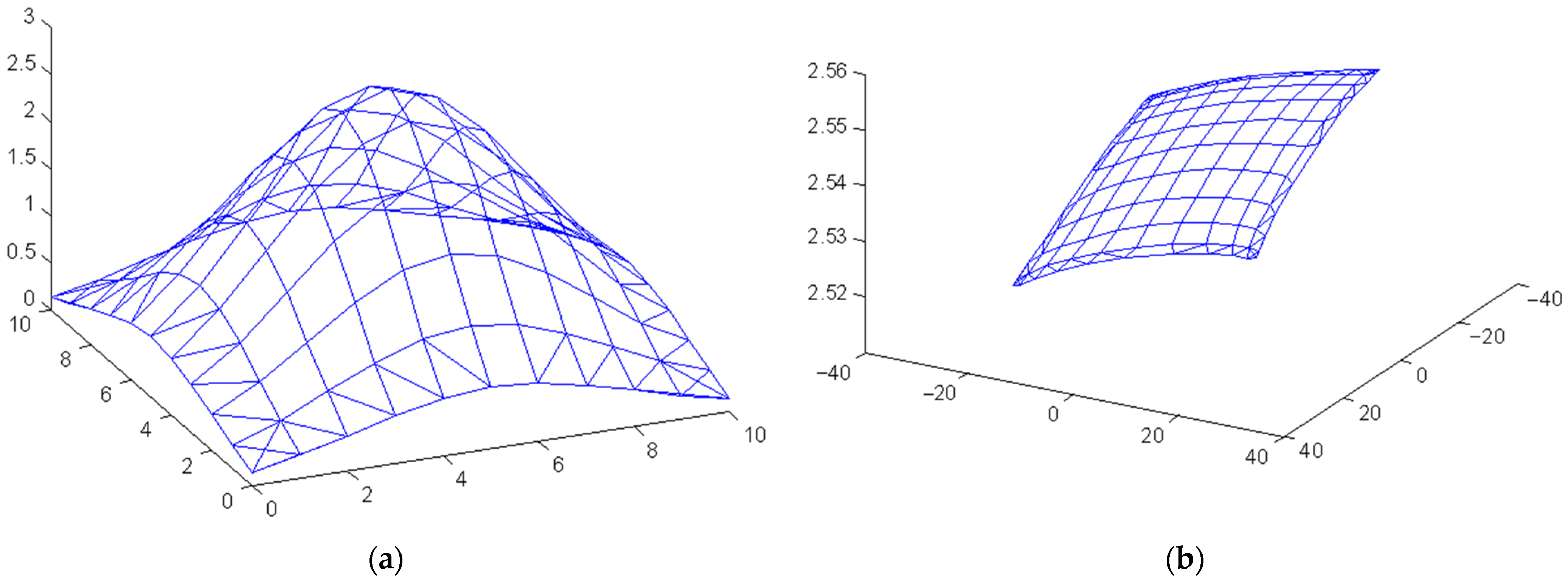

3. Simulation Study on Data Sets

3.1. Simulation Study on Test Data Sets

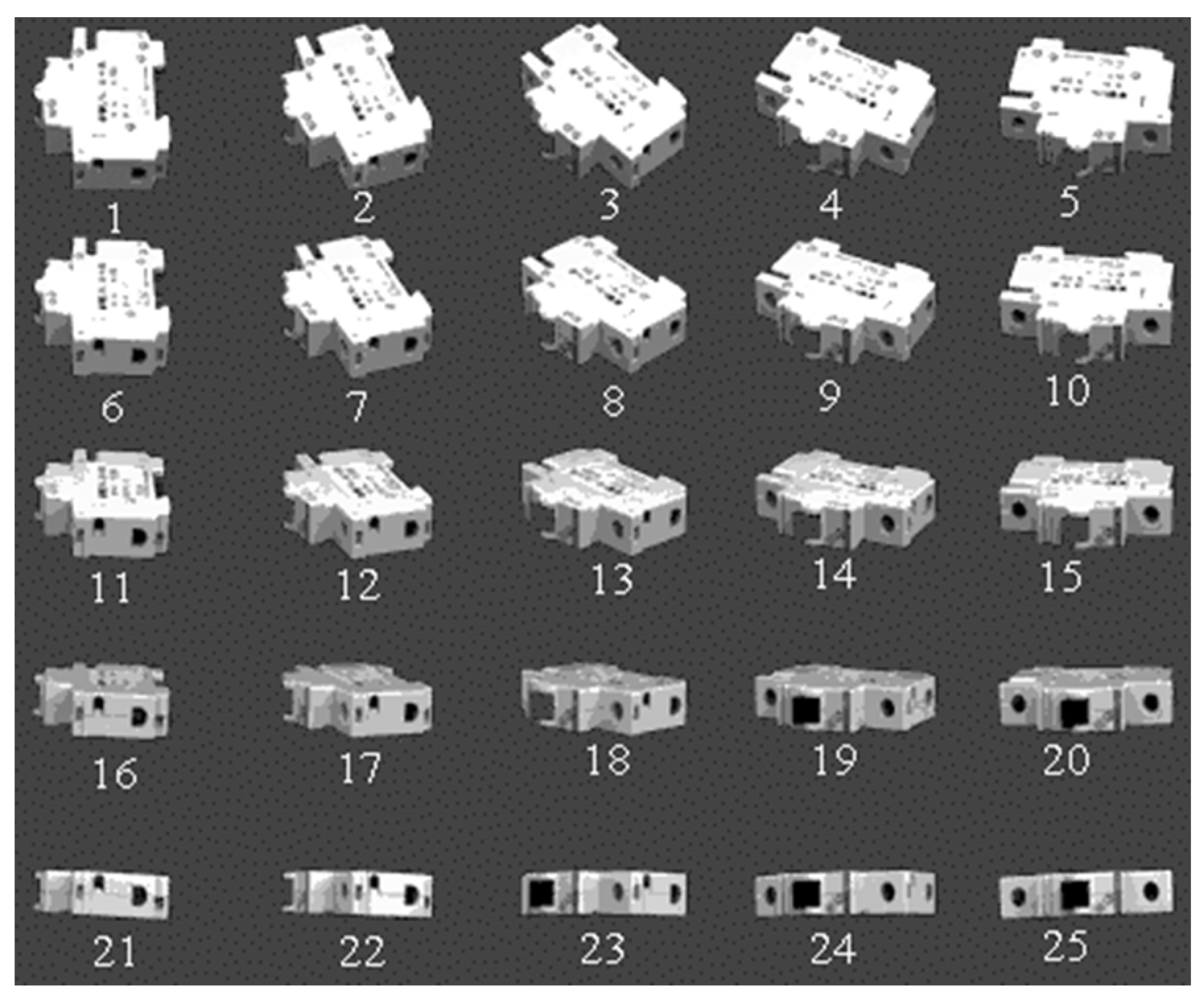

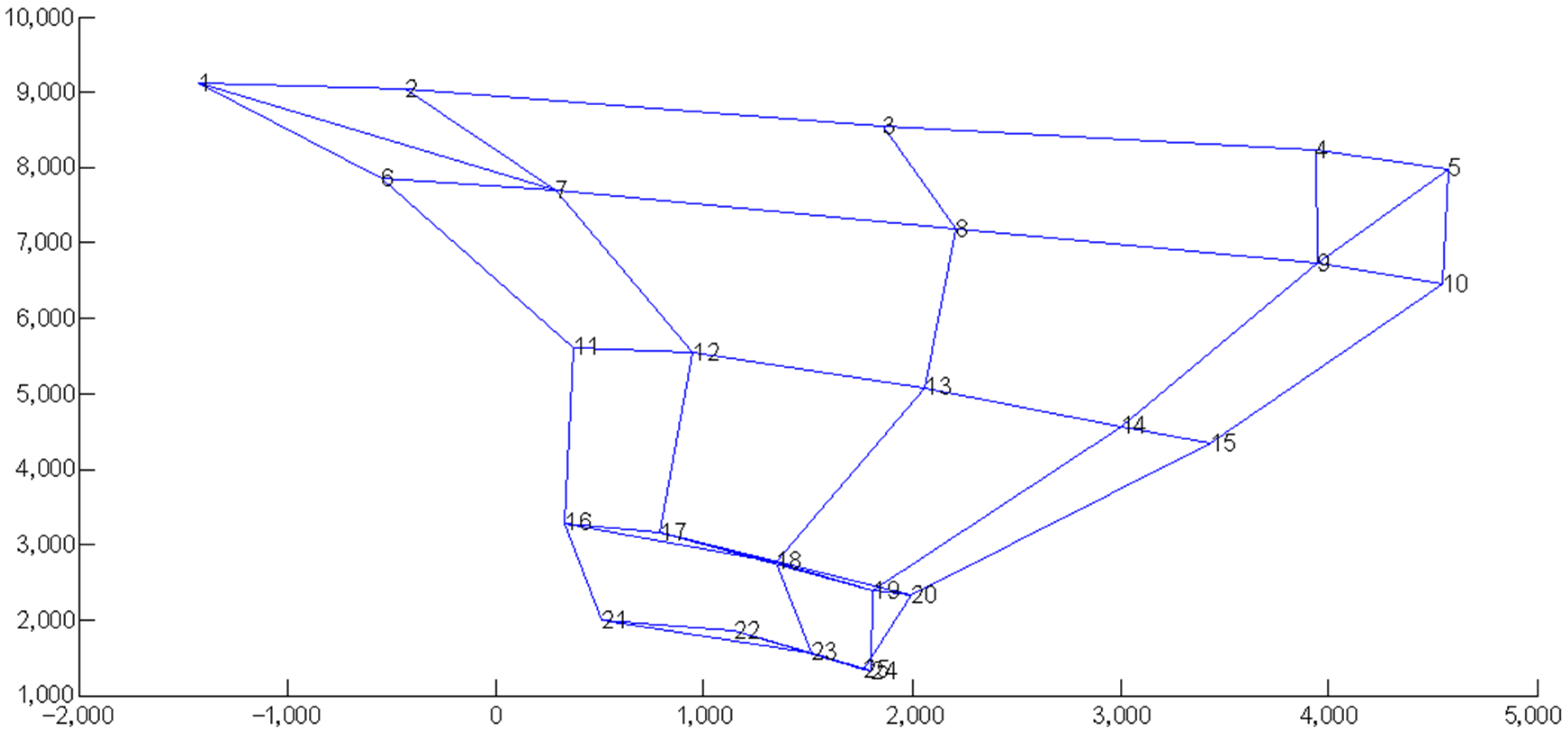

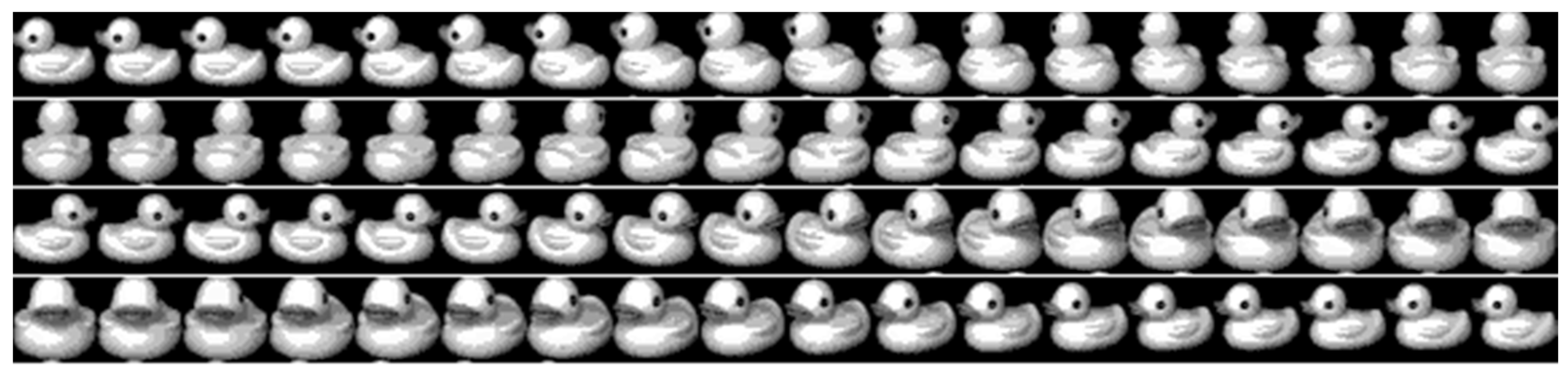

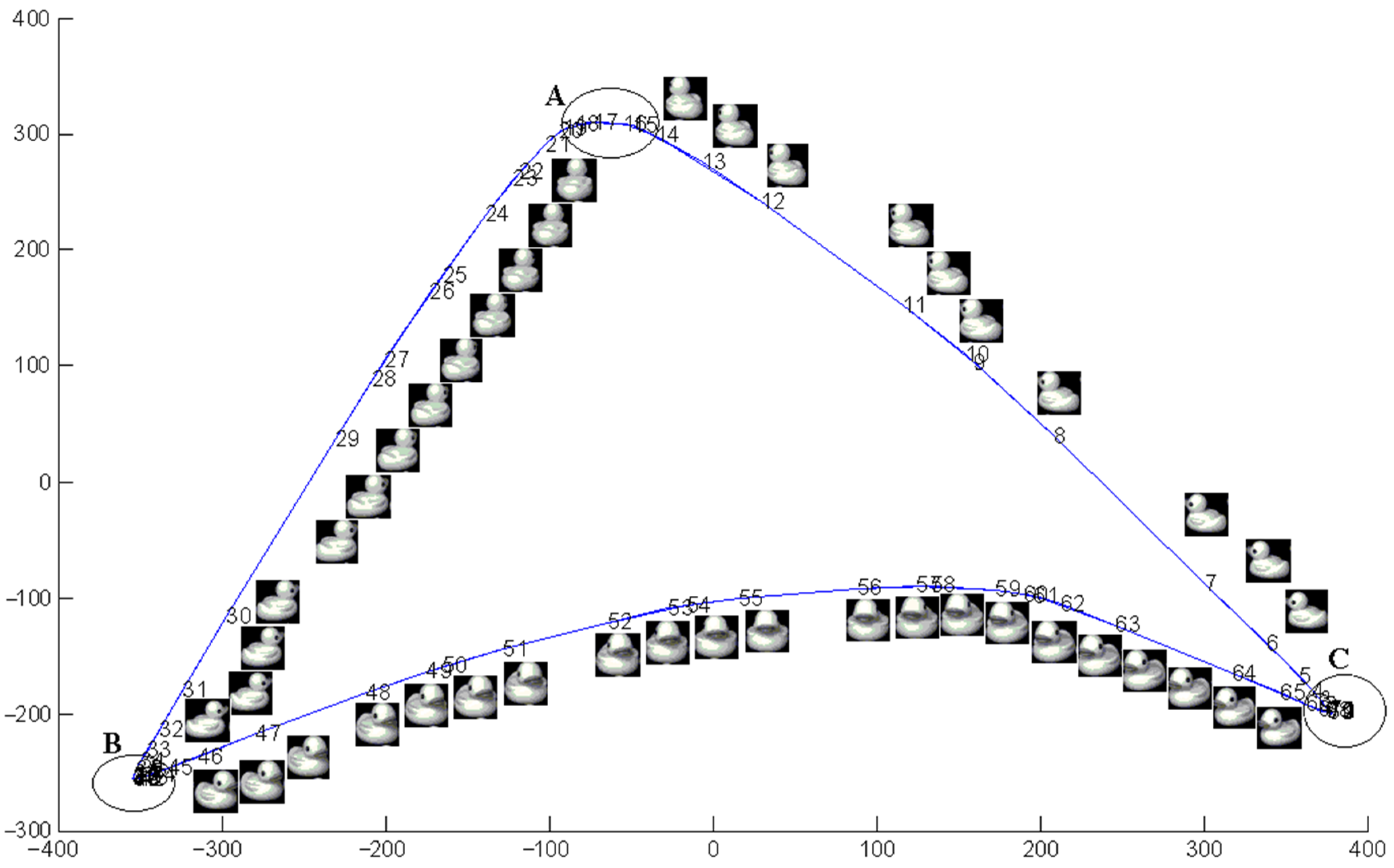

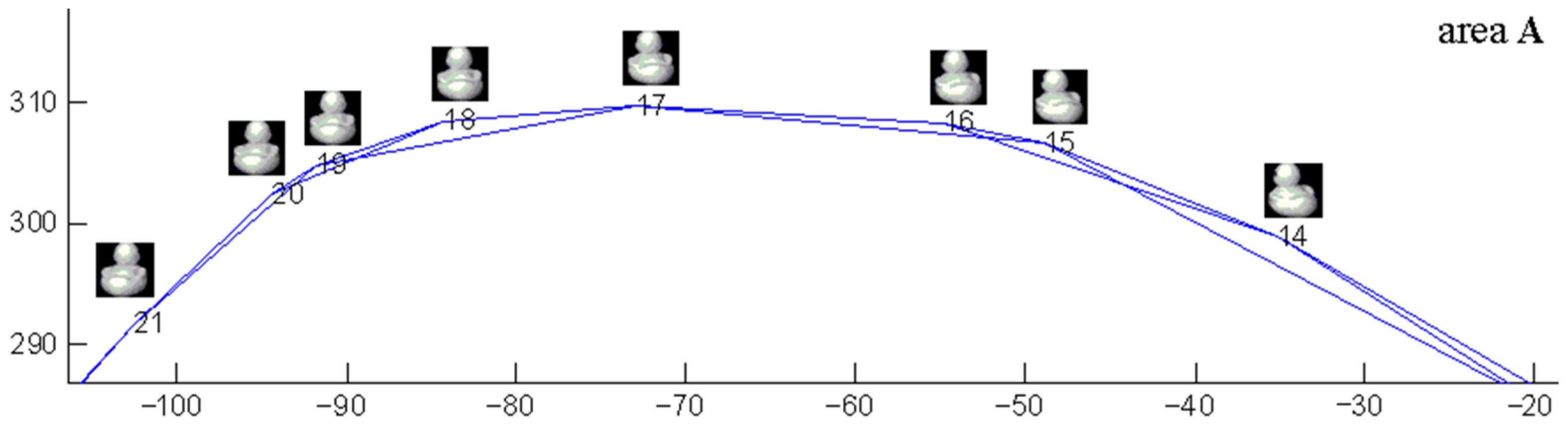

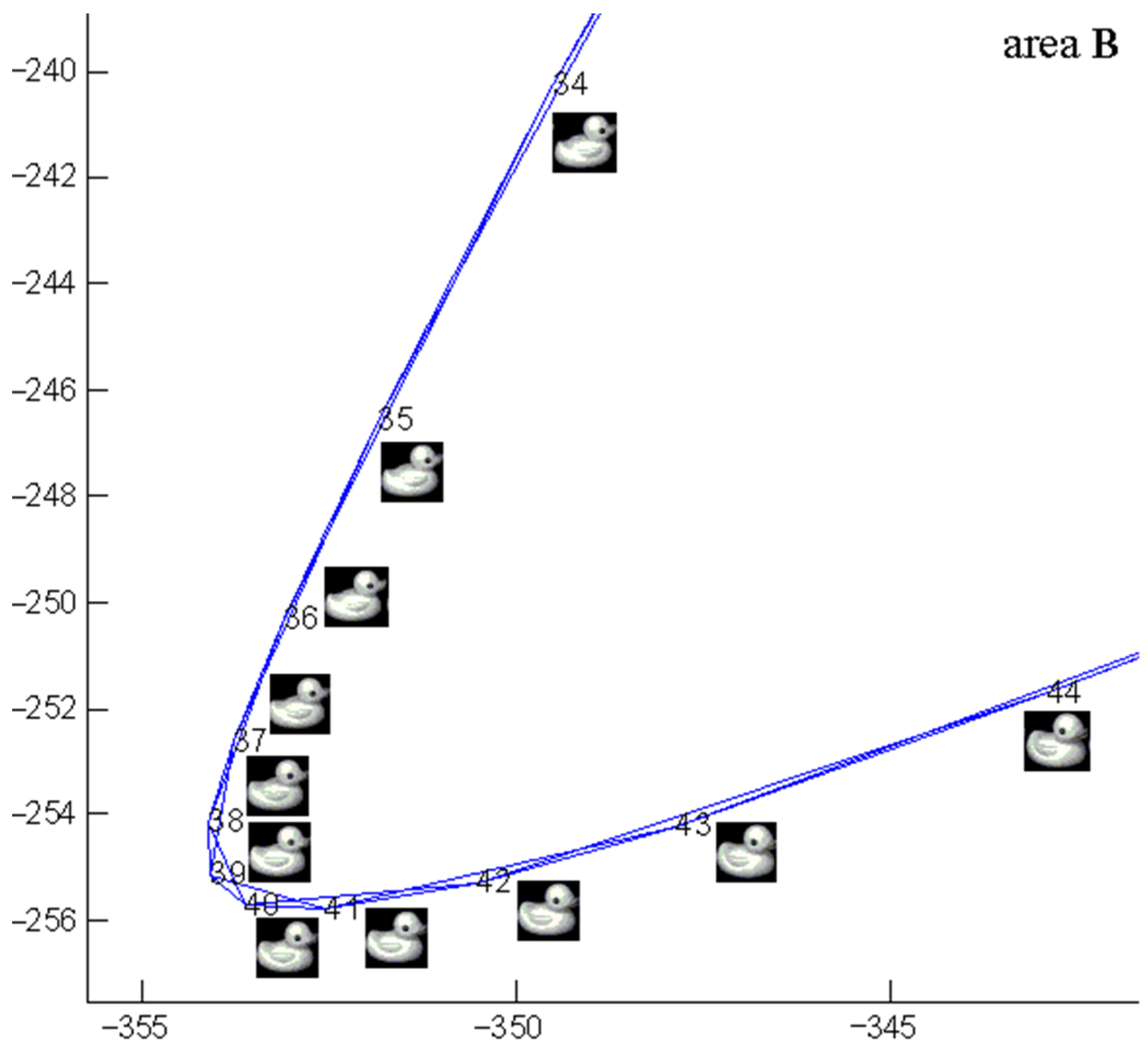

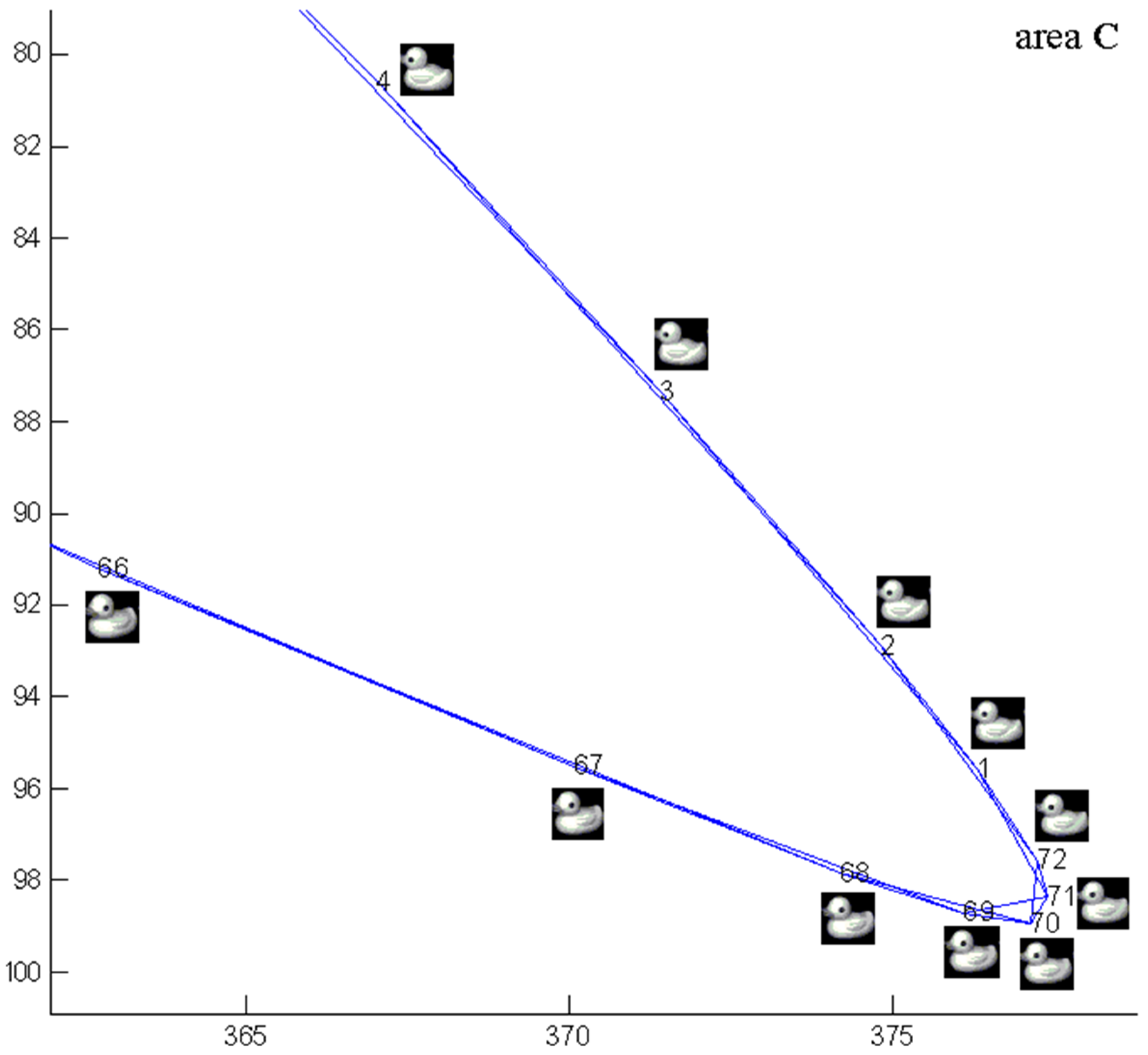

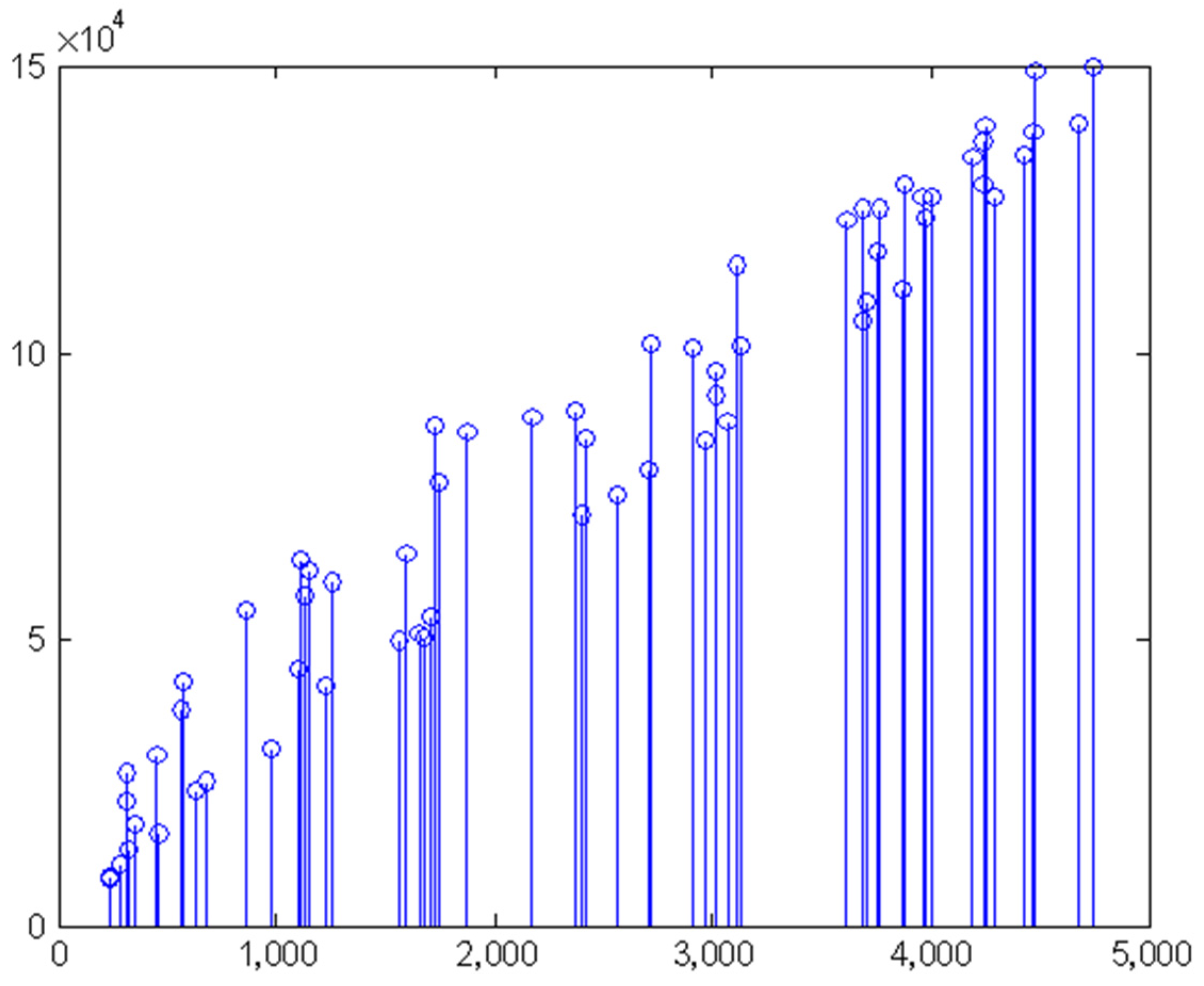

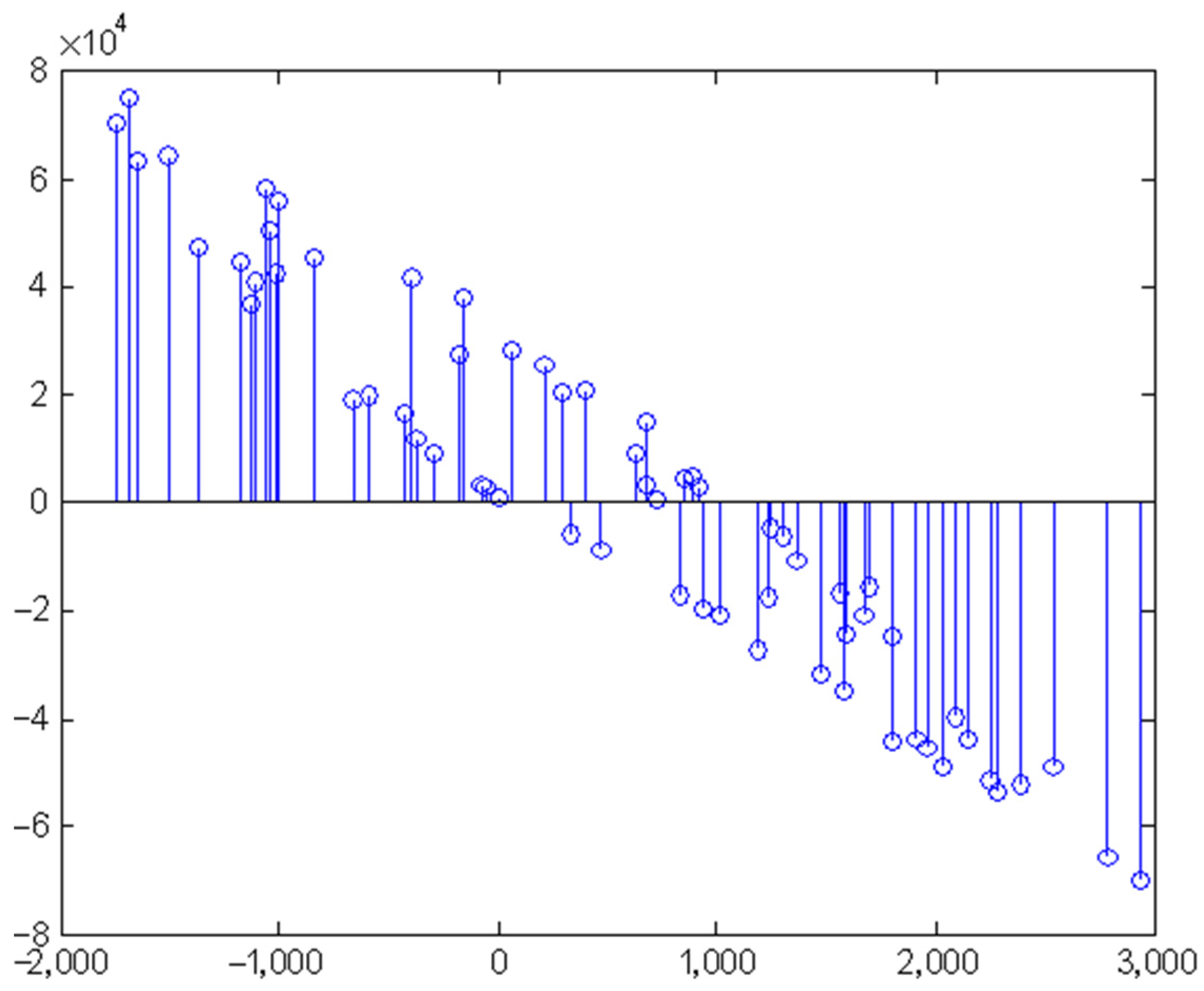

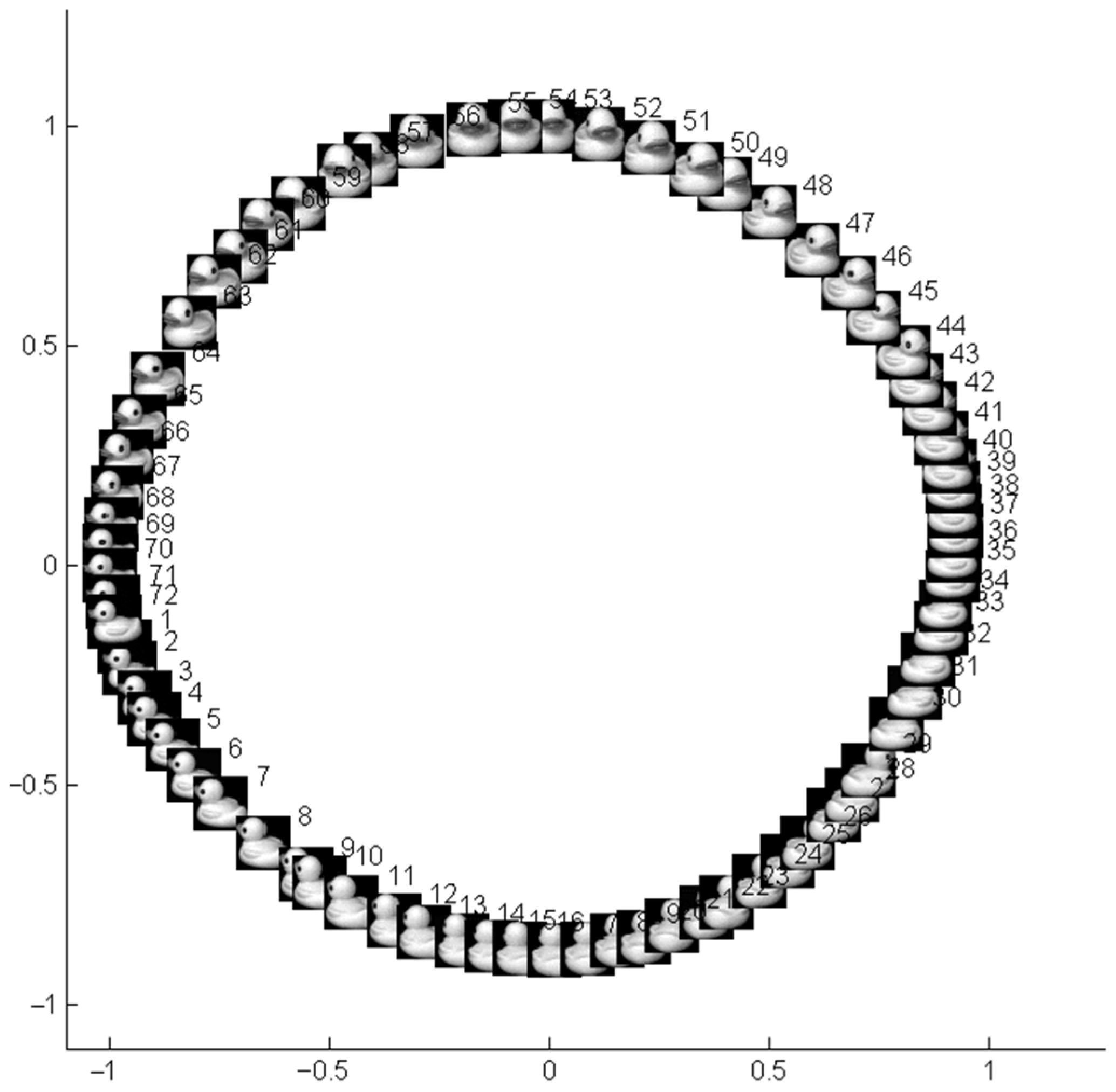

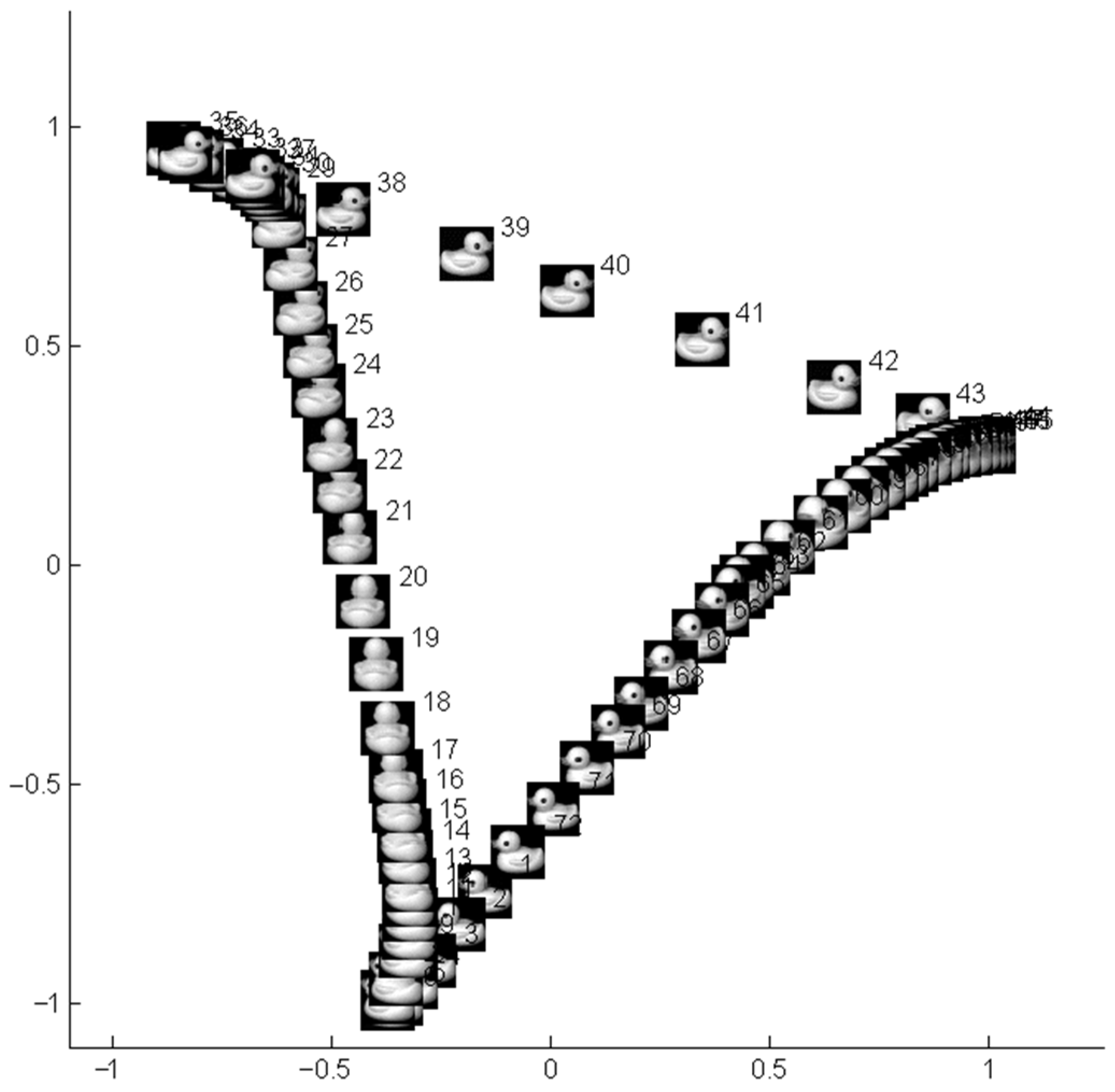

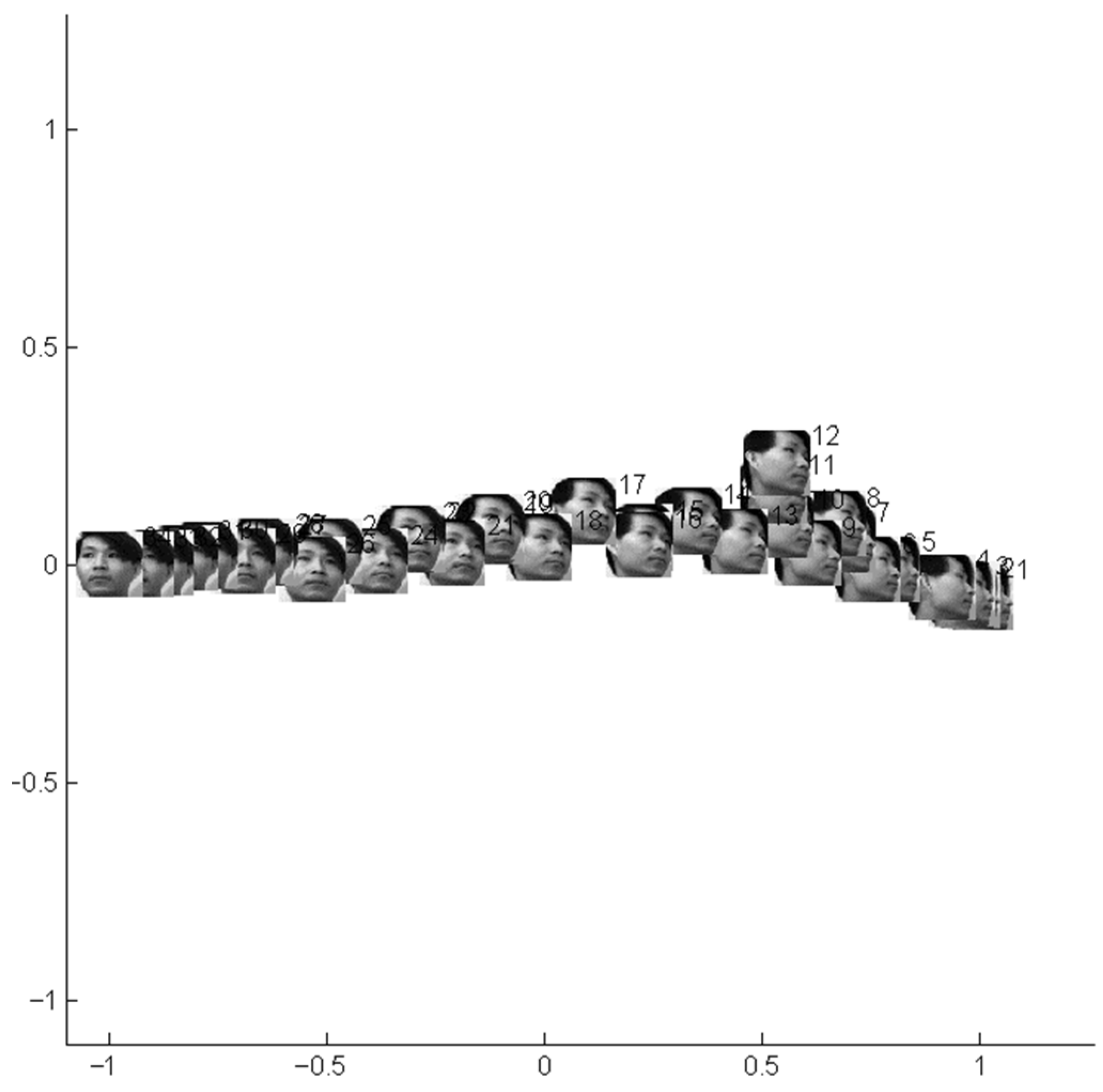

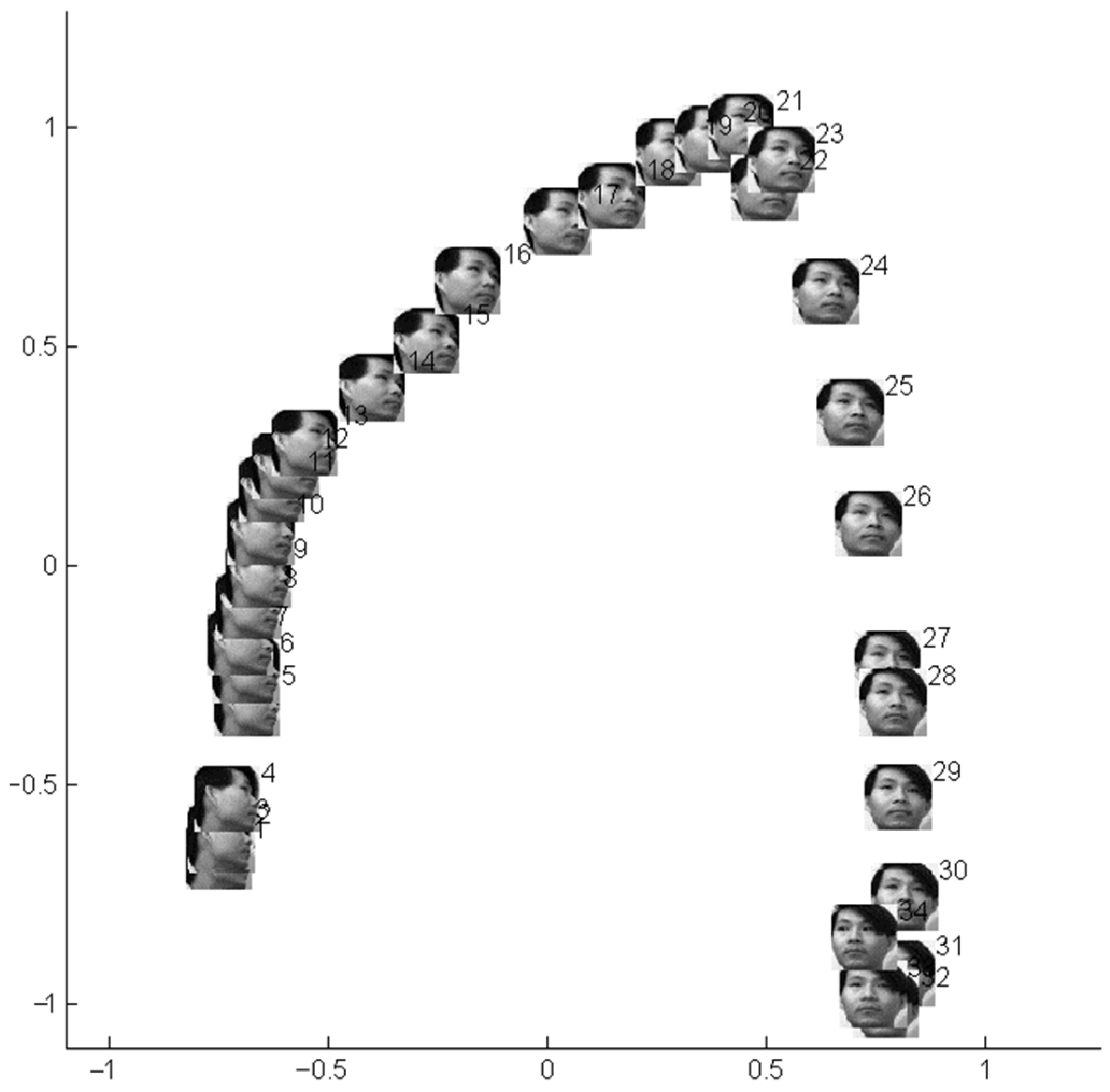

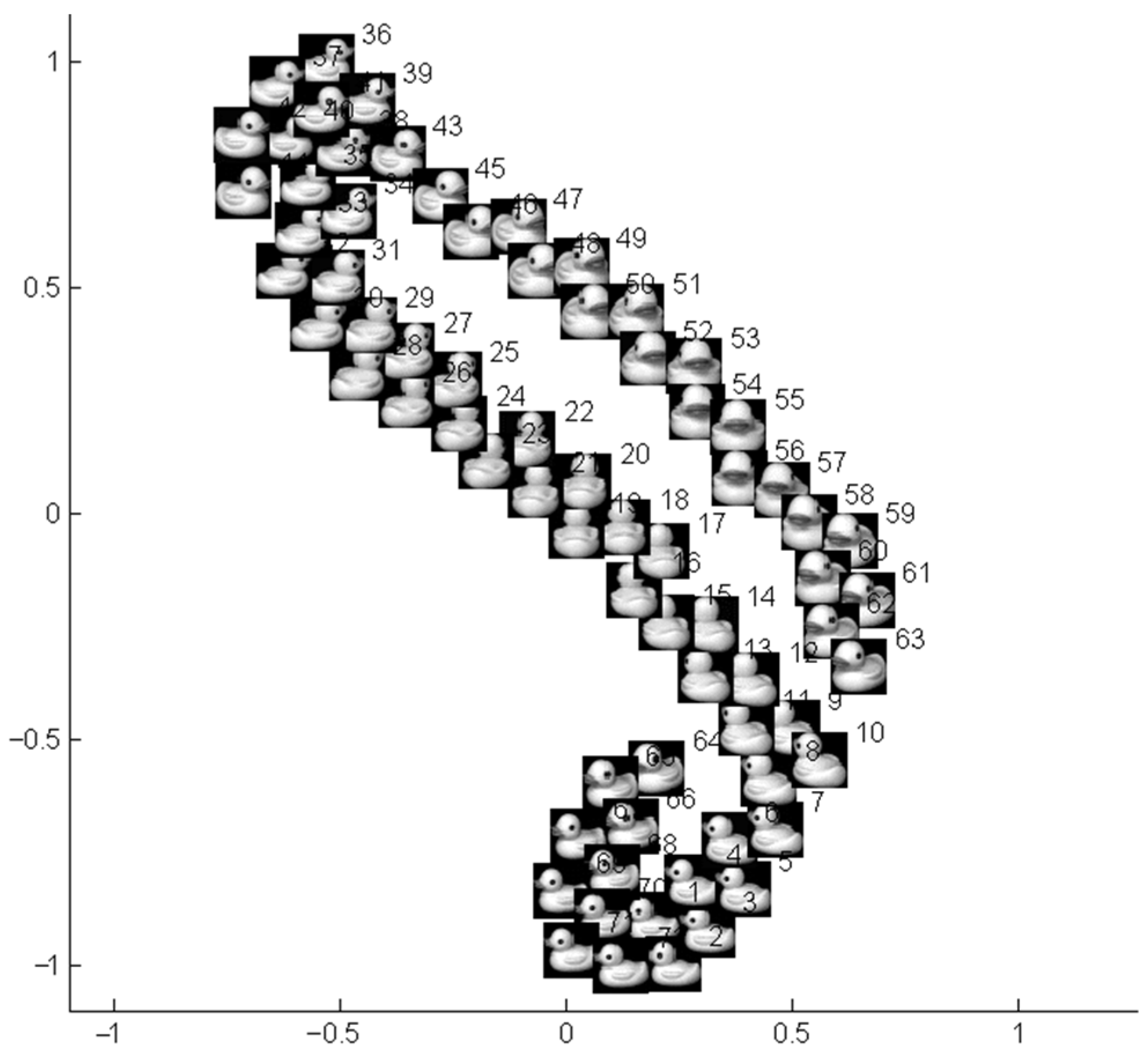

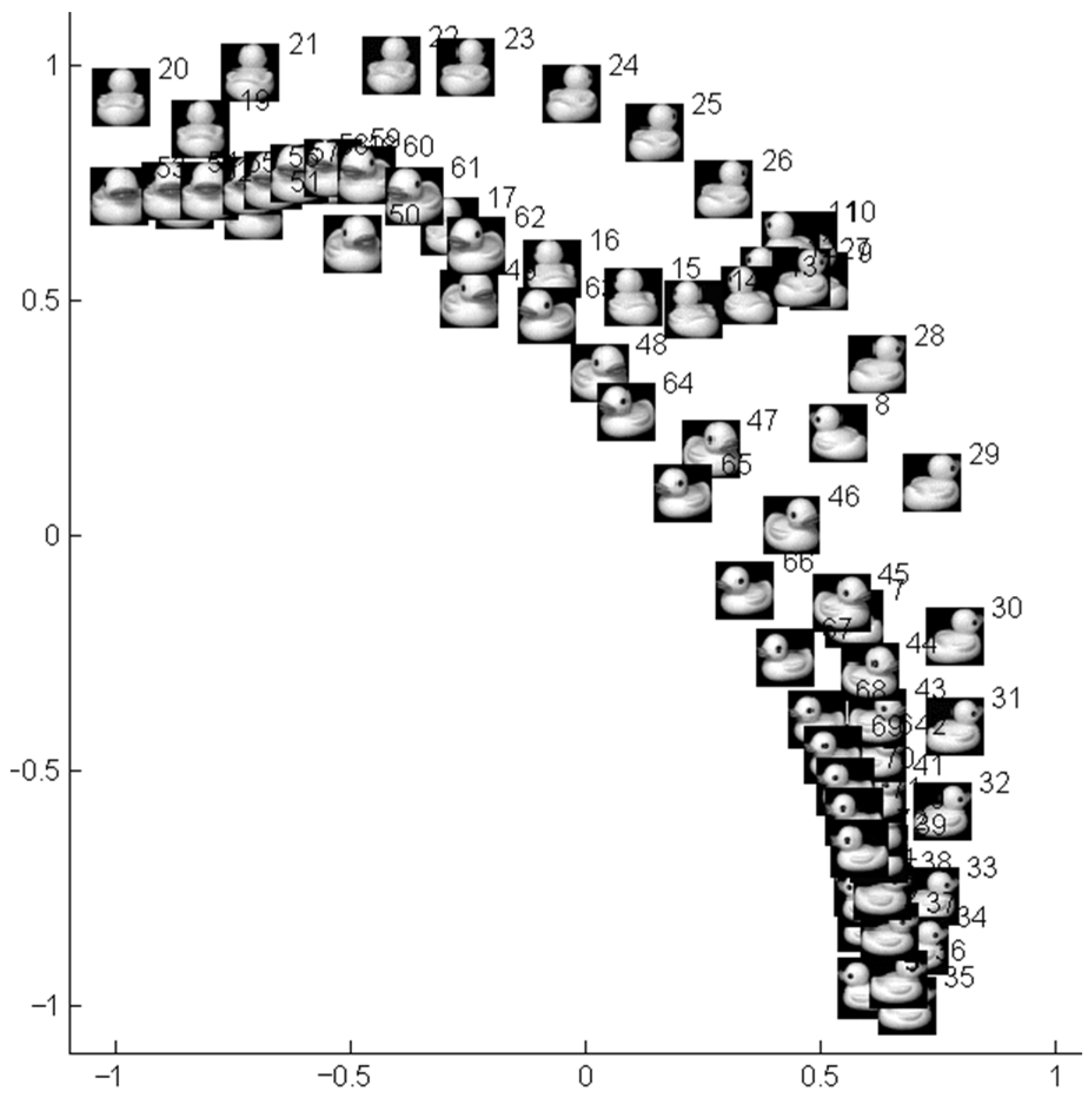

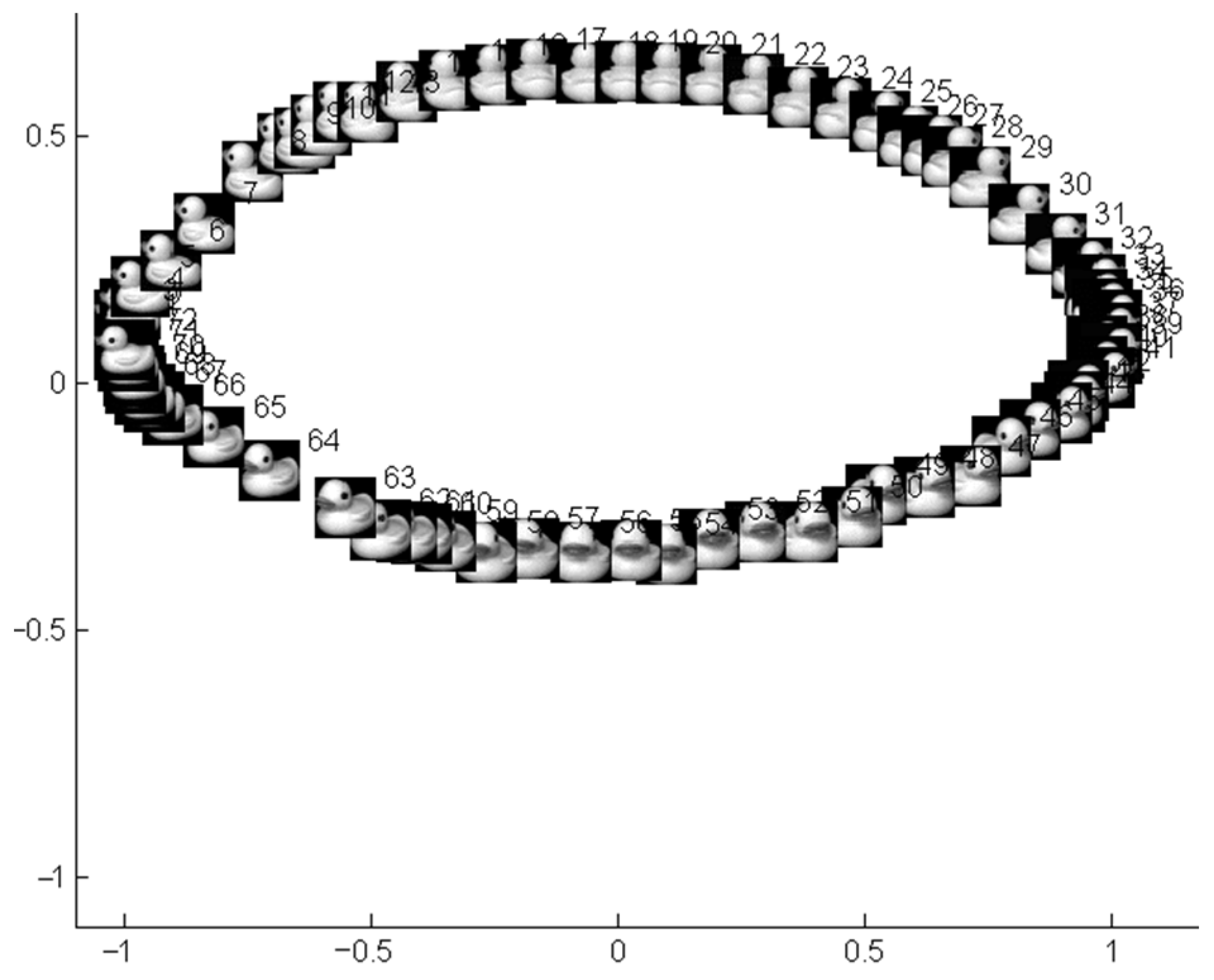

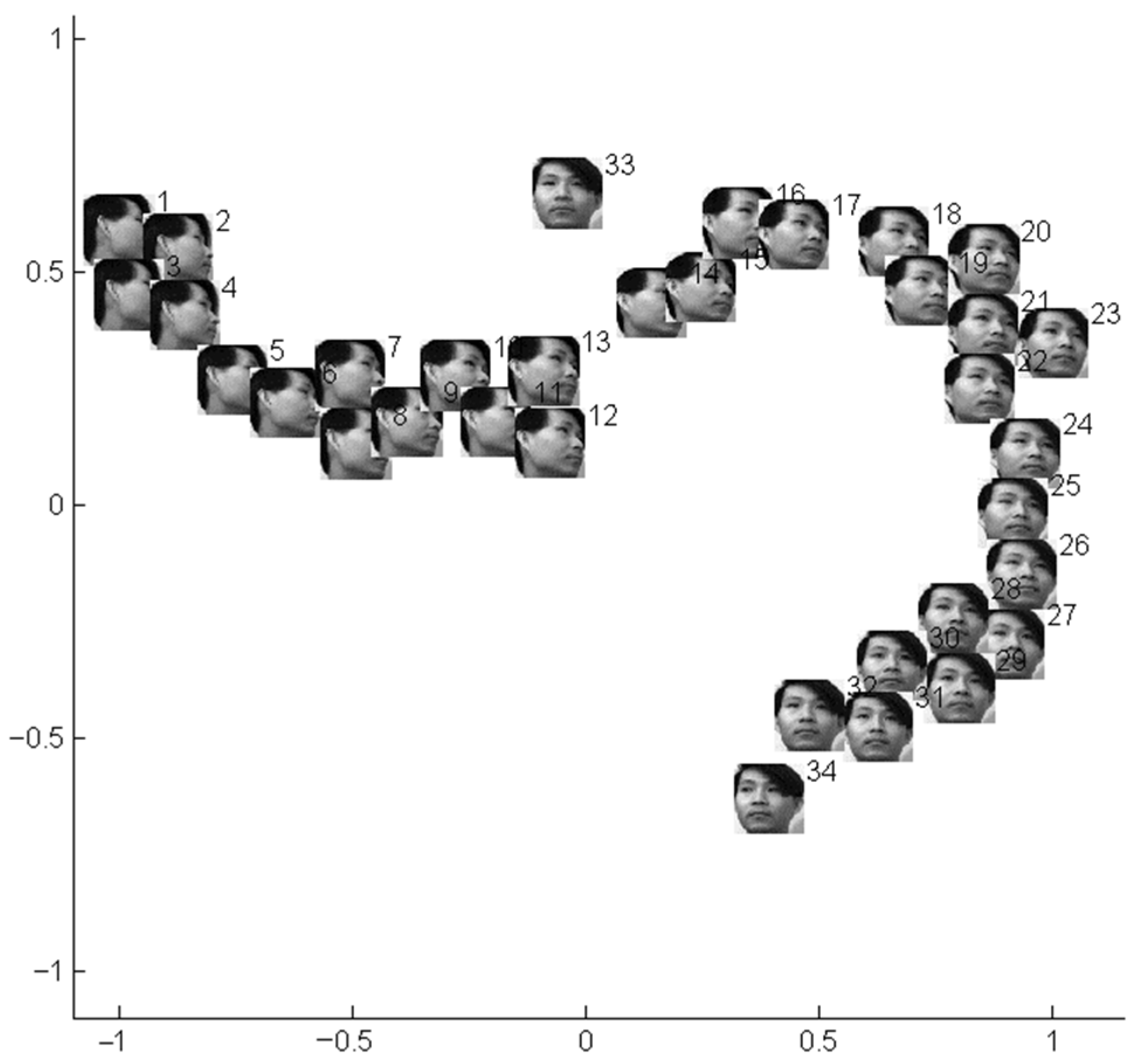

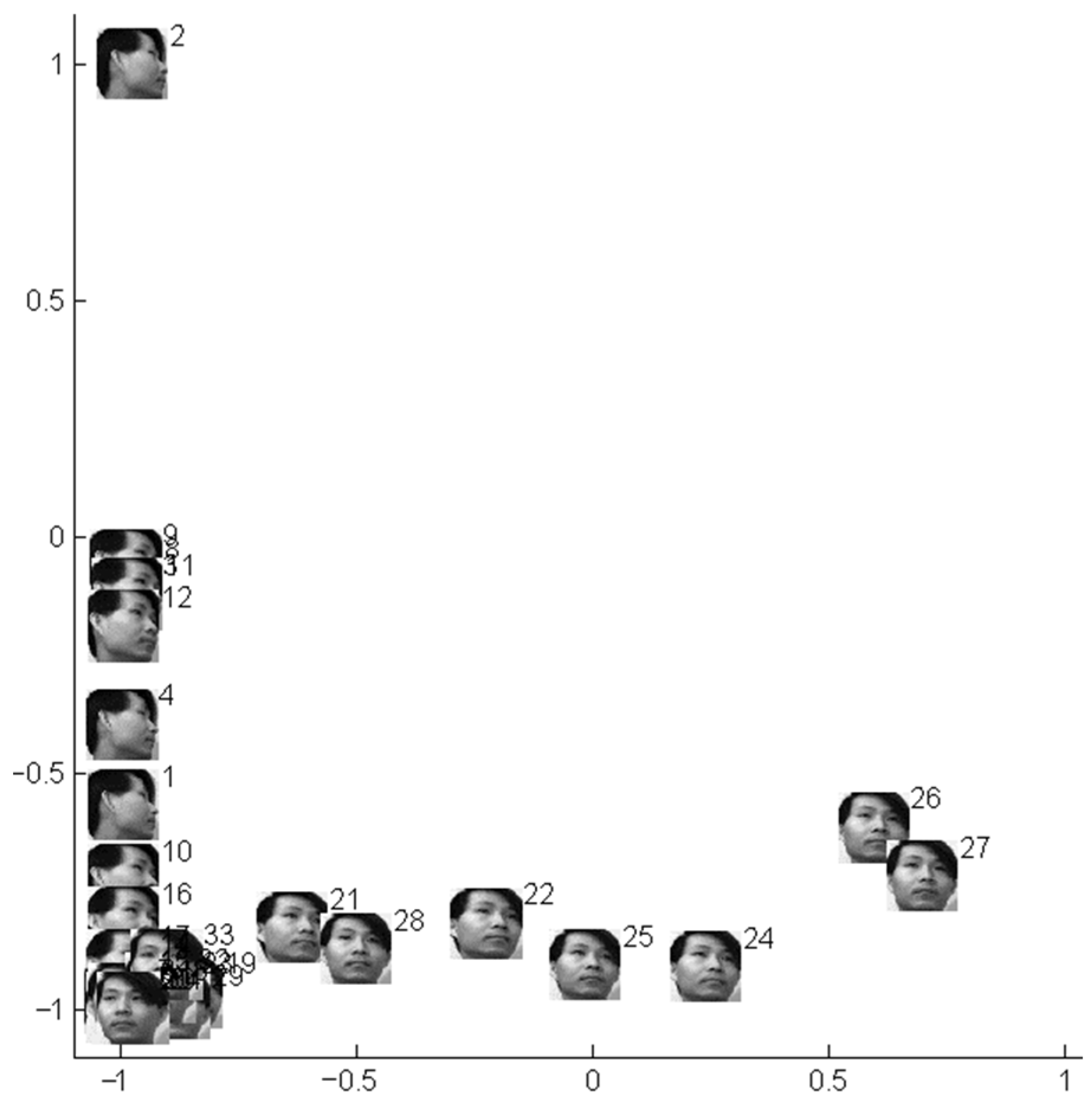

3.2. Simulation Study on Practical Data Sets

3.3. Comparison with Typical Manifold Learning Methods

4. Conclusions and Discussion

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest



© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhuang, X.; Mastorakis, N. Learning by Autonomous Manifold Deformation with an Intrinsic Deforming Field. Symmetry 2023, 15, 1995. https://doi.org/10.3390/sym15111995
