3.1. Handling Missing Data

Based on Table 1, the variables with less than 10% missing values, namely PM2.5, NOx, O3, CO, and SO2, were imputed using the median value of the predetermined AQI category. For example, suppose there is a missing value in the PM2.5 attribute and the AQI Bucket category is Good. Based on Table 2, the corresponding range of values is 0 to 30, so the imputation value obtained with the median technique is (0 + 30)/2 = 15. The other missing values are handled in the same way, using the median value of the corresponding category of each variable. The variables with more than 10% missing values, namely PM10 and NH3, were imputed using the KNN regressor method. Because NH3 has fewer missing values than PM10, NH3 is imputed with the KNN regressor first, ignoring PM10. Before training on NH3, the value of k (the number of neighbors) is determined by finding the lowest root mean square error (RMSE). The RMSE was measured for k values ranging from 1 to 25 for NH3, as shown in Figure 3.
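The category-median rule can be sketched in a few lines of Python. This sketch assumes, as in the PM2.5 example, that the median of a category is the midpoint of its Table 2 range. The lookup table `CATEGORY_RANGES` and the function names are hypothetical. Only the PM2.5/Good range of 0 to 30 is taken from the text, and the other entries would have to come from Table 2.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical lookup: (pollutant, AQI bucket) -> (low, high) range.
# Only the PM2.5 / "Good" range (0-30) is taken from the text; the remaining
# entries would have to be filled in from Table 2.
CATEGORY_RANGES: Dict[Tuple[str, str], Tuple[float, float]] = {
    ("PM2.5", "Good"): (0.0, 30.0),
}


def range_median(low: float, high: float) -> float:
    """Median of a category range, taken here as its midpoint."""
    return (low + high) / 2.0


def impute_by_category(
    values: List[Optional[float]],
    buckets: List[str],
    pollutant: str,
    ranges: Dict[Tuple[str, str], Tuple[float, float]] = CATEGORY_RANGES,
) -> List[float]:
    """Replace each None with the median of its AQI-bucket range for `pollutant`."""
    filled = []
    for value, bucket in zip(values, buckets):
        if value is None:
            low, high = ranges[(pollutant, bucket)]
            filled.append(range_median(low, high))
        else:
            filled.append(value)
    return filled


print(impute_by_category([12.0, None, 25.5], ["Good", "Good", "Good"], "PM2.5"))
```

With the Good range of 0 to 30, the missing PM2.5 value is filled with 15.0, matching the worked example above.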

The highest RMSE, approximately 30%, occurs at the low end of the k range, and the RMSE then decreases toward about 22%. The k value with the lowest RMSE was selected to train the KNN regressor on the NH3 attribute. After NH3 is filled, PM10 is imputed with the KNN regressor, and its k value is determined in the same way. The RMSE results for the k values on PM10 are shown in Figure 4. The highest RMSE, approximately 42%, occurs at the low end of the k range, and the RMSE then decreases toward about 36%. The k value with the lowest RMSE was selected to train the KNN regressor on PM10. The values predicted by the KNN regressor are then compared with the actual values, as shown in Figure 5.
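The sequential KNN-regressor imputation can be sketched in plain Python. This is an assumed implementation for illustration, not the authors' code. The following are all choices made here: Euclidean distance, the unweighted mean of the k neighbors, the 20% hold-out split used to compute RMSE, and every function name. NH3 is imputed first from the already-complete predictors. PM10 is imputed afterwards, with the filled NH3 column added as a feature.

```python
import math
from typing import List, Optional, Sequence, Tuple

Row = Tuple[float, ...]


def knn_predict(train_x: List[Row], train_y: List[float], query: Row, k: int) -> float:
    """Mean target of the k training rows nearest to `query` (Euclidean distance)."""
    ranked = sorted(zip(train_x, train_y), key=lambda xy: math.dist(xy[0], query))
    return sum(y for _, y in ranked[:k]) / min(k, len(ranked))


def rmse(actual: Sequence[float], predicted: Sequence[float]) -> float:
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def select_k(train_x, train_y, val_x, val_y, k_values):
    """Return (k, rmse) for the k with the lowest validation RMSE; smallest k wins ties."""
    best_k, best_err = None, math.inf
    for k in k_values:
        err = rmse(val_y, [knn_predict(train_x, train_y, q, k) for q in val_x])
        if err < best_err:
            best_k, best_err = k, err
    return best_k, best_err


def knn_impute_column(features: List[Row], target: List[Optional[float]],
                      k_values=range(1, 26), val_fraction: float = 0.2):
    """Fill the None entries of `target` using a KNN regressor on complete `features`."""
    known = [i for i, t in enumerate(target) if t is not None]
    missing = [i for i, t in enumerate(target) if t is None]
    n_val = max(1, int(len(known) * val_fraction))
    val_idx, fit_idx = known[:n_val], known[n_val:]  # simple hold-out split (assumption)
    candidates = [k for k in k_values if k <= len(fit_idx)]
    if not candidates:
        raise ValueError("not enough complete rows to tune k")
    best_k, _ = select_k([features[i] for i in fit_idx], [target[i] for i in fit_idx],
                         [features[i] for i in val_idx], [target[i] for i in val_idx],
                         candidates)
    # Refit on all complete rows with the chosen k, then predict the gaps.
    all_x = [features[i] for i in known]
    all_y = [target[i] for i in known]
    filled = list(target)
    for i in missing:
        filled[i] = knn_predict(all_x, all_y, features[i], best_k)
    return filled, best_k


# Toy data: NH3 first (from complete predictors), then PM10 with NH3 added.
predictors = [(float(i), float(i % 3)) for i in range(20)]
nh3 = [2.0 * x + y if i % 5 else None for i, (x, y) in enumerate(predictors)]
nh3_filled, k_nh3 = knn_impute_column(predictors, nh3)
pm10 = [3.0 * x + 1.0 if i % 4 else None for i, (x, _) in enumerate(predictors)]
pm10_filled, k_pm10 = knn_impute_column(
    [p + (v,) for p, v in zip(predictors, nh3_filled)], pm10)
print(k_nh3, k_pm10)
```

Imputing NH3 before PM10 mirrors the order described in the text. Once NH3 is filled, it can serve as an extra predictor for the column with more missing values.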

In Figure 5, the initial predicted values lie on the straight diagonal line, which indicates agreement between the predicted and actual values. Toward the end of the range of predicted values, however, some predictions deviate slightly from the actual values. To evaluate how well the KNN regressor fills in the missing values of the PM10 and NH3 variables, the accuracy was measured, yielding a value of 0.8412.

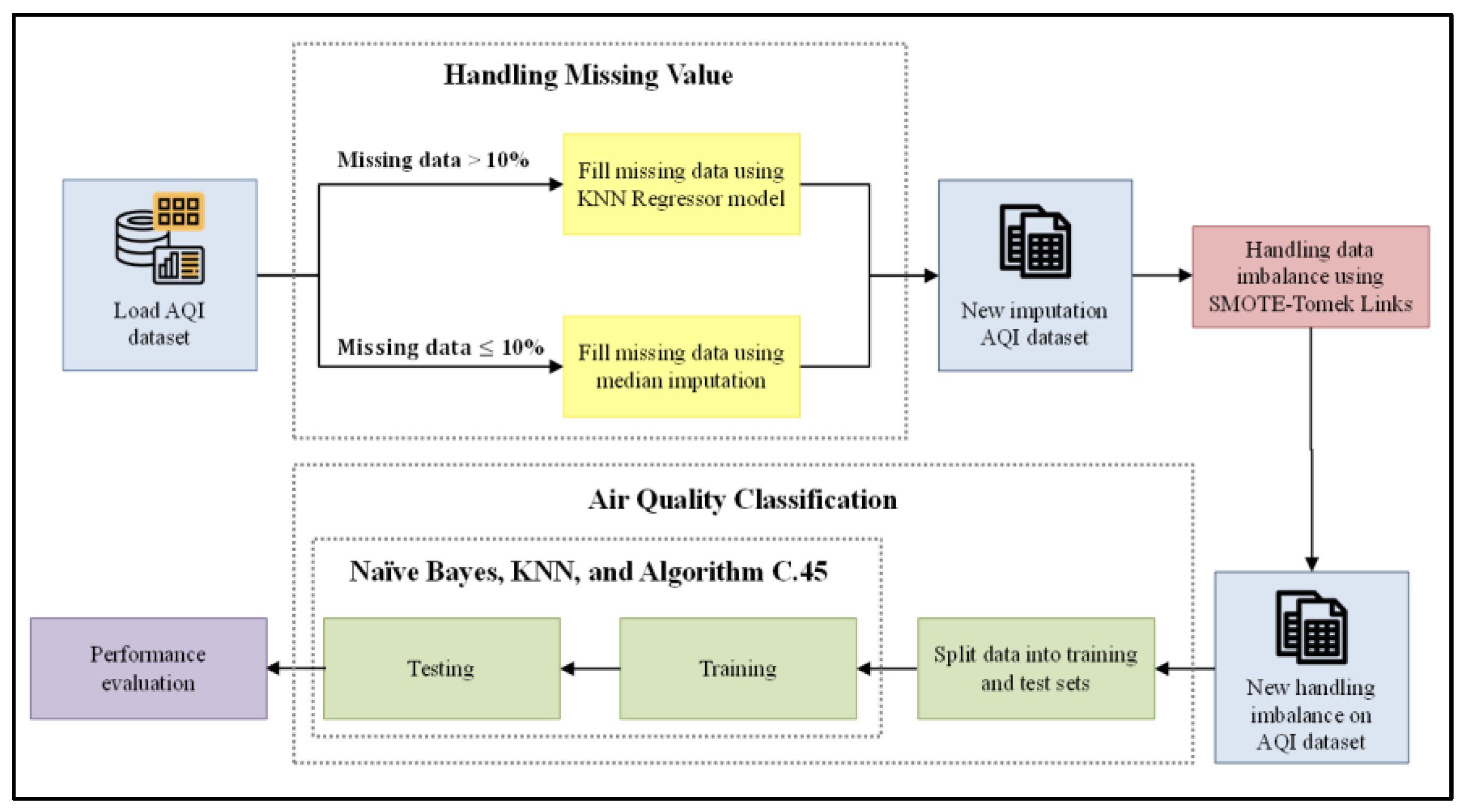

3.3. Performance of Prediction Model

Median-KNN-SMOTE-Tomek Links, used to handle missing data and class imbalance, is applied to predict air quality. The prediction methods used in this study are Naive Bayes (NB), KNN, and C4.5. The performance metrics used to evaluate Median-KNN-SMOTE-Tomek Links are accuracy, precision, recall, and F1-score. A comparison of the prediction results of these methods is given in Table 3. Data imputed with Median-KNN and balanced with SMOTE-Tomek Links (Median-KNN-SMOTE-Tomek Links) yield significantly higher accuracy than data that are only imputed with Median-KNN and not balanced (Median-KNN). From highest to lowest, the accuracy increases were 29.16% (C4.5), 19.75% (KNN), and 5.16% (NB). Consistent with this, C4.5 achieves the highest accuracy of the three methods, at 100%.
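To make the resampling step concrete, here is a small from-scratch sketch of SMOTE followed by Tomek-link removal. It is an illustrative assumption rather than the implementation used in the study. Each minority class is oversampled to the majority count by interpolating toward one of its k nearest same-class neighbors, and both members of every Tomek link are then removed. In practice a library implementation, such as imbalanced-learn's `SMOTETomek`, would normally be used instead.

```python
import math
import random
from collections import Counter
from typing import List, Tuple

Point = Tuple[float, ...]


def smote(X: List[Point], y: List[str], k: int = 5, seed: int = 0):
    """Oversample each minority class up to the majority-class count."""
    rng = random.Random(seed)
    counts = Counter(y)
    target = max(counts.values())
    X_out, y_out = list(X), list(y)
    for label, n in counts.items():
        idx = [i for i, lab in enumerate(y) if lab == label]
        if n == target or len(idx) < 2:
            continue  # majority class, or too few points to interpolate
        for _ in range(target - n):
            i = rng.choice(idx)
            # k nearest same-class neighbours of X[i], excluding X[i] itself
            neigh = sorted((j for j in idx if j != i),
                           key=lambda j: math.dist(X[i], X[j]))[:k]
            j = rng.choice(neigh)
            gap = rng.random()  # position along the segment X[i] -> X[j]
            X_out.append(tuple(a + gap * (b - a) for a, b in zip(X[i], X[j])))
            y_out.append(label)
    return X_out, y_out


def tomek_links(X: List[Point], y: List[str]) -> List[Tuple[int, int]]:
    """Pairs of opposite-class points that are each other's nearest neighbour."""
    nearest = [min((j for j in range(len(X)) if j != i),
                   key=lambda j: math.dist(X[i], X[j]))
               for i in range(len(X))]
    return [(i, j) for i, j in enumerate(nearest)
            if i < j and nearest[j] == i and y[i] != y[j]]


def smote_tomek(X, y, k=5, seed=0):
    """SMOTE oversampling, then drop both members of every Tomek link."""
    Xs, ys = smote(X, y, k=k, seed=seed)
    drop = {i for pair in tomek_links(Xs, ys) for i in pair}
    keep = [i for i in range(len(Xs)) if i not in drop]
    return [Xs[i] for i in keep], [ys[i] for i in keep]


# Hypothetical toy data with a 5:2 class imbalance.
X = [(0.0, 0.0), (0.1, 0.2), (0.2, 0.1), (0.3, 0.3), (0.15, 0.15), (1.0, 1.0), (1.1, 0.9)]
y = ["Good"] * 5 + ["Poor"] * 2
X_bal, y_bal = smote_tomek(X, y, k=1)
print(Counter(y_bal))
```

SMOTE adds synthetic minority points. The Tomek-link step then cleans the class boundary by removing opposite-class pairs that are each other's nearest neighbor.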

The other performance metrics measured for each class, namely precision, recall, and F1-score, are shown for each prediction method in Figure 7, Figure 8, and Figure 9.

Figure 7, Figure 8, and Figure 9 show that predictions made on data after SMOTE-Tomek Links (Median-KNN-SMOTE-Tomek Links) achieve higher precision, recall, and F1-score for all three methods than predictions made on data that were only imputed using Median-KNN. With Median-KNN imputation alone, the average precision ranges from 48% to 89%, the average recall from 25% to 87%, and the average F1-score from 37% to 80%. After SMOTE-Tomek Links, the average precision ranges from 67% to 100%, the average recall from 58% to 100%, and the average F1-score from 64% to 100%.
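The per-class metrics and their averages can be computed one-vs-rest, as sketched below. This sketch assumes the averages reported above are unweighted (macro) means over the AQI classes, which the text does not state explicitly. It also assigns 0 whenever a ratio is undefined. The function names and the toy labels are hypothetical.

```python
from typing import Dict, List, Tuple


def per_class_metrics(y_true: List[str], y_pred: List[str]) -> Dict[str, Tuple[float, float, float]]:
    """Return {class: (precision, recall, f1)} computed one-vs-rest."""
    metrics = {}
    for c in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        metrics[c] = (precision, recall, f1)
    return metrics


def macro_average(metrics: Dict[str, Tuple[float, float, float]]) -> Tuple[float, ...]:
    """Unweighted mean of precision, recall and F1 over all classes."""
    n = len(metrics)
    return tuple(sum(m[i] for m in metrics.values()) / n for i in range(3))


y_true = ["Good", "Good", "Poor", "Poor", "Severe"]
y_pred = ["Good", "Poor", "Poor", "Poor", "Good"]
m = per_class_metrics(y_true, y_pred)
print(m)
print(macro_average(m))
```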

Furthermore, we used five treatments to show the effect of Median-KNN regressor imputation and SMOTE-Tomek Links resampling in dealing with missing data and class imbalance, respectively (Table 4). In the first treatment, all missing values are discarded and class imbalance is ignored. In the second treatment, all missing values are discarded and class imbalance is handled with SMOTE. In the third treatment, all missing values are discarded and class imbalance is handled with SMOTE-Tomek Links. In the fourth treatment, missing values are handled with the Median-KNN regressor and class imbalance is handled with SMOTE. In the fifth treatment, missing values are handled with the Median-KNN regressor and class imbalance is handled with SMOTE-Tomek Links.
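The five-treatment design can be summarized as a small configuration, written here in Python for illustration. The `Treatment` record and its field names are hypothetical. They only encode the combinations of missing-data handling and resampling listed for Table 4.

```python
from typing import NamedTuple, Optional


class Treatment(NamedTuple):
    number: int
    missing_data: str          # how missing values are handled
    resampling: Optional[str]  # how class imbalance is handled (None = ignored)


TREATMENTS = [
    Treatment(1, "discard", None),
    Treatment(2, "discard", "SMOTE"),
    Treatment(3, "discard", "SMOTE-Tomek Links"),
    Treatment(4, "Median-KNN regressor", "SMOTE"),
    Treatment(5, "Median-KNN regressor", "SMOTE-Tomek Links"),
]

for t in TREATMENTS:
    print(f"Treatment {t.number}: missing={t.missing_data}, imbalance={t.resampling or 'ignored'}")
```

Laid out this way, each factor is varied while the other is held fixed. Treatments 2 and 3 differ only in the resampling method, and so do treatments 4 and 5.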

We show that the increase in the predictive performance of Naive Bayes from treatment 1 to treatment 3 is due to the weakening of the correlations among most of the predictor variables (Table 5, Table 6, and Table 7). Across these three datasets, the weaker the correlations between variables, the higher the predictive performance of Naive Bayes. In other words, the more closely the predictor variables satisfy the naive independence assumption, the better the predictive performance.
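One way to summarize how strongly the predictors in a dataset are correlated is the mean absolute pairwise Pearson correlation, sketched below. Using this single statistic is an illustrative assumption, since the study reports full correlation tables (Table 5 to Table 9) instead. Because Naive Bayes assumes conditional independence given the class, pairwise correlation is only a rough proxy for how well that assumption holds. The column names in the toy example are hypothetical.

```python
import math
from itertools import combinations
from typing import Dict, List


def pearson(a: List[float], b: List[float]) -> float:
    """Pearson correlation coefficient of two equal-length columns."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    sa = math.sqrt(sum((x - ma) ** 2 for x in a))
    sb = math.sqrt(sum((y - mb) ** 2 for y in b))
    return cov / (sa * sb)


def mean_abs_correlation(columns: Dict[str, List[float]]) -> float:
    """Average |r| over all predictor pairs (lower = closer to independence)."""
    pairs = list(combinations(columns, 2))
    return sum(abs(pearson(columns[a], columns[b])) for a, b in pairs) / len(pairs)


cols = {"PM2.5": [1.0, 2.0, 3.0, 4.0], "NO2": [2.0, 4.0, 6.0, 8.0], "CO": [4.0, 3.0, 2.0, 1.0]}
print(mean_abs_correlation(cols))
```

Comparing this value across the treatment datasets gives a compact view of whether resampling weakened or strengthened the dependence between predictors.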

The same pattern holds for the fourth and fifth treatments, where the missing data were imputed with the Median-KNN regressor and the imbalanced classes were then resampled using SMOTE and SMOTE-Tomek Links, respectively. The correlation between predictor variables increased from the fourth treatment to the fifth, causing the prediction performance of the NB method to decrease (Table 8 and Table 9).

In implementing the KNN method for the five treatments, we explored values of k in the range 1–100 to determine which produces the highest accuracy. The k values giving the highest accuracy on the training dataset for the five treatments were 30, 93, 61, 55, and 2, respectively (Figure 10). For the fifth treatment in particular, the accuracy remained stable at 100% for k = 1 to 100.
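The search over k can be sketched as a simple loop. This sketch makes three assumptions not stated in the text. It evaluates accuracy on a separate validation split, whereas the text reports the best k on the training dataset. It uses an unweighted majority vote with Euclidean distance. It keeps the smallest k when several values tie. The function names and toy data are hypothetical.

```python
import math
from collections import Counter


def knn_classify(train_x, train_y, query, k):
    """Majority vote among the k nearest training points.

    On a tie, Counter.most_common returns the label encountered first,
    i.e. the one held by the nearer neighbour.
    """
    nearest = sorted(range(len(train_x)), key=lambda i: math.dist(train_x[i], query))[:k]
    return Counter(train_y[i] for i in nearest).most_common(1)[0][0]


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def best_k(train_x, train_y, val_x, val_y, k_max=100):
    """Try k = 1..k_max (capped at the training size); return (k, accuracy, all scores)."""
    best, scores = (None, -1.0), {}
    for k in range(1, min(k_max, len(train_x)) + 1):
        acc = accuracy(val_y, [knn_classify(train_x, train_y, q, k) for q in val_x])
        scores[k] = acc
        if acc > best[1]:  # strict '>' keeps the smallest k on ties
            best = (k, acc)
    return best[0], best[1], scores


train_x = [(0.0,), (0.1,), (0.2,), (1.0,), (1.1,), (1.2,)]
train_y = ["a", "a", "a", "b", "b", "b"]
k, acc, scores = best_k(train_x, train_y, [(0.05,), (1.05,)], ["a", "b"])
print(k, acc)
```

Keeping the full `scores` dictionary makes it possible to plot accuracy against k, similar to Figure 10, and to spot plateaus such as the stable 100% reported for the fifth treatment.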

The use of Median-KNN regressor imputation and SMOTE-Tomek Links resampling, proposed in this work to improve air quality prediction performance, yielded significant improvements with the KNN and C4.5 methods. With C4.5, the model performance even reached 100% on all metrics. As non-parametric methods, KNN and C4.5 do not take the correlation between predictor variables into account, so adding observations to balance the classes positively affects their predictive performance.

Furthermore, the changes in evaluation results before and after SMOTE-Tomek Links for the three methods are compared with those reported in other studies. Table 10 shows that not all studies that applied SMOTE-Tomek Links to imbalanced classes achieved improved performance on all metrics. An increase in accuracy, precision, recall, or F1-score is marked with a positive value, and a decrease with a negative value. In credit card fraud detection [20], with a class ratio of 0.17:99.83, handling the class imbalance with SMOTE-Tomek Links raised recall from 74.83% to 94.94%. This 20.11% increase in recall was not accompanied by an increase in accuracy, which decreased from 99.17% to 98.32%, a decline of less than 1%.
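The signed values in Table 10 are differences between the scores after and before resampling, in percentage points. The arithmetic is sketched below using the accuracy and recall figures quoted above for [20]. The function name is hypothetical.

```python
from typing import Dict


def metric_changes(before: Dict[str, float], after: Dict[str, float]) -> Dict[str, float]:
    """After-minus-before change per metric; positive = increase, negative = decrease."""
    return {name: round(after[name] - before[name], 2) for name in before if name in after}


# Scores reported for credit card fraud detection [20], in %.
before = {"accuracy": 99.17, "recall": 74.83}
after = {"accuracy": 98.32, "recall": 94.94}
print(metric_changes(before, after))
```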

High accuracy does not always mean that an algorithm performs better in every situation. Accuracy can be misleading, for example on imbalanced datasets, so it is not always a reliable measure. In credit card fraud detection [20], a model that simply predicts every transaction as genuine and never as fraudulent would cause serious problems for credit card companies. It is therefore preferable to accept more genuine transactions being predicted as fraud (false positives) than fraudulent transactions being predicted as genuine (false negatives). Thus, a large increase in recall matters more than a large increase in accuracy.
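This pitfall can be illustrated with a trivial classifier that labels every transaction as genuine. The sketch assumes a hypothetical sample of 10,000 transactions with the 0.17:99.83 class ratio reported for [20]. Such a classifier reaches 99.83% accuracy while detecting no fraud at all.

```python
def always_genuine_scores(n_fraud: int, n_genuine: int) -> tuple:
    """Accuracy and recall of a classifier that labels every transaction as genuine."""
    tp = 0          # no fraud case is ever predicted as fraud
    fn = n_fraud    # every fraud case is missed
    tn = n_genuine  # every genuine case is predicted correctly
    accuracy = (tp + tn) / (n_fraud + n_genuine)
    recall = tp / (tp + fn)
    return accuracy, recall


# Hypothetical sample of 10,000 transactions with the 0.17:99.83 ratio from [20].
acc, rec = always_genuine_scores(n_fraud=17, n_genuine=9983)
print(f"accuracy={acc:.4f}, recall={rec:.2f}")  # prints accuracy=0.9983, recall=0.00
```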

In DNA methylation classification [25], only NB improved on all three metrics (precision, recall, and F1-score). With random forest (RF) and logistic regression (LR), data balanced with SMOTE-Tomek Links improved only in recall and F1-score, or only in recall. The largest increase was in recall, achieved with Naive Bayes. Overall, the increases were below 25% and the decreases were below 15%.

In most high-risk disease detection settings, such as cancer detection, recall is a more important evaluation measure than precision. Recall is the percentage of all actual cancer cases that the model correctly predicts, whereas precision is the percentage of the model's cancer predictions in which cancer is truly present. As in credit card fraud detection, DNA methylation classification therefore calls for a larger increase in recall than in the other evaluation measures.
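These two measures, and the F1-score, follow the standard confusion-matrix definitions. Take cancer as the positive class. Then TP counts cancer cases predicted as cancer, FN counts cancer cases predicted as non-cancer, and FP counts non-cancer cases predicted as cancer:

```latex
\text{Recall} = \frac{TP}{TP + FN}, \qquad
\text{Precision} = \frac{TP}{TP + FP}, \qquad
F_1 = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}
```

Missed cancer cases enter only the recall denominator, which is why recall is the measure to prioritize when false negatives are costly.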

The largest performance increases (38%–60%) were obtained on an electrical rotating machine monitoring dataset [19] with class ratios of 37.5:62.5 and 16.7:83.3. For the first ratio, the metric with the highest increase is precision, while for the second ratio it is the F1-score. All of these experiments predict cases using KNN. The increases of up to 60% in [19] may also be due to the small number of samples, and further exploration with large samples is needed.

In our proposed method, the largest increase in prediction performance was achieved by C4.5, with metric increases ranging from 29.16% to 33.5%. In general, SMOTE-Tomek Links resampling has a positive effect on the prediction performance of the KNN and C4.5 methods. This holds in particular for India air quality prediction, where variables with less than 10% missing data are handled using the median and those with 10% or more are handled using the KNN regressor.

Furthermore, Table 11 compares the performance metrics obtained in this study, using the SMOTE-Tomek Links technique and the three methods, with those of previous research on the same air quality dataset. Sethi and Mittal [32], who calculated only accuracy and precision, obtained the lowest accuracy. The lowest precision, recall, and F1-score were obtained in this study using Median-KNN-SMOTE-Tomek Links with the NB method. Combining Median-KNN and SMOTE-Tomek Links to handle missing data and class imbalance, and then predicting air quality with C4.5, gives very satisfactory performance, with all performance metrics reaching 100%. Sufficient experimentation is required to obtain satisfactory predictive model performance.