A Chaotic-Based Interactive Autodidactic School Algorithm for Data Clustering Problems and Its Application on COVID-19 Disease Detection

Abstract

1. Introduction

- Improving the discovery of the optimal solution by using chaotic maps to balance exploration and exploitation in the proposed model.

- Providing an improved version of IAS for the data clustering problem based on chaotic maps.

- Evaluating the proposed model on 20 UCI datasets.

- Assessing the proposed model in terms of fitness function and convergence rate.

- Developing BIAS, the binary version of IAS, using a V-shaped transfer function to find valuable features in the COVID-19 data.

- Comparing the proposed model with ABC, BA, CSA, and AEFA.

- Applying BIAS in a case study to detect COVID-19.

2. Related Works

3. Material and Method

3.1. IAS Algorithm

| Algorithm 1 The method of student generation and assessment of student eligibility |

| Algorithm 2 Individual Training Session |


| Algorithm 3 Collective Training Session |


| Algorithm 4 New student challenge |


3.2. Chaotic Maps

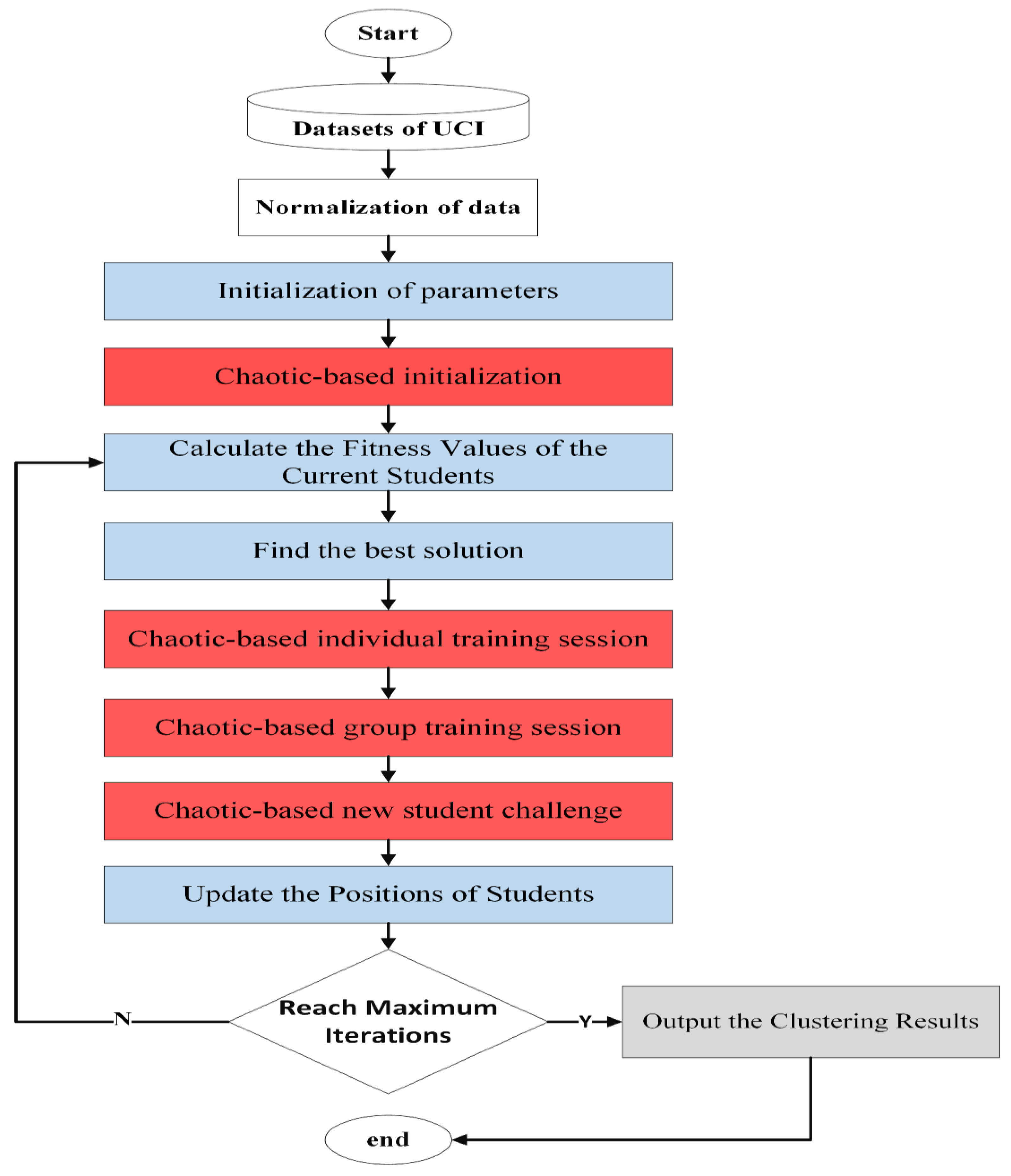

4. Proposed Model

4.1. Pre-Processing

4.2. Chaotic-Based Population Generation

4.3. Chaotic-Based Individual Training Session

| Algorithm 5 Chaotic-Based Individual Training |


4.4. Chaotic-Based Group Training Session

| Algorithm 6 Chaotic-Based Group Training |


4.5. Chaotic-Based New Student Challenge

| Algorithm 7 Chaotic-Based New Student |


4.6. Formation of Clusters

4.7. Fitness Function of Clustering
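The equations for cluster formation and for the clustering fitness are not reproduced in this text. The sketch below uses a common choice in metaheuristic clustering, assumed here rather than taken from the paper: each sample joins its nearest centroid, and the fitness to be minimized is the sum of Euclidean distances from every sample to that centroid. The function names `assign_clusters` and `clustering_fitness` are hypothetical.

```python
import math


def assign_clusters(samples, centroids):
    """Return, for each sample, the index of its nearest centroid (Euclidean)."""
    return [
        min(range(len(centroids)), key=lambda k: math.dist(x, centroids[k]))
        for x in samples
    ]


def clustering_fitness(samples, centroids):
    """Sum of distances from each sample to its nearest centroid (lower is better).

    Assumed objective: the paper's exact fitness function is not shown here.
    """
    return sum(min(math.dist(x, c) for c in centroids) for x in samples)


if __name__ == "__main__":
    samples = [(0.0, 0.0), (0.0, 2.0), (10.0, 0.0)]
    centroids = [(0.0, 1.0), (10.0, 0.0)]  # one candidate solution ("student")
    print(assign_clusters(samples, centroids))
    print(clustering_fitness(samples, centroids))
```

In this reading, a candidate solution encodes all centroid coordinates, so the optimizer only ever sees a single scalar fitness per candidate.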

5. Results and Discussion

5.1. Dataset

5.2. Simulation Environment and Parameters Determination

5.3. Comparison of the Proposed Model with Other Metaheuristics

6. Real Application: Binary CIAS on COVID-19 Dataset

6.1. Fitness Function

6.2. Transfer Function
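The binary variant relies on a V-shaped transfer function, but the specific function is not reproduced in this text. The sketch below assumes one common member of the V-shaped family, |tanh(x)|, together with the usual V-shaped update rule in which a bit is flipped with probability T(x) and otherwise kept. The names `v_transfer` and `binarize` are hypothetical.

```python
import math
import random


def v_transfer(x):
    """V-shaped transfer function |tanh(x)|, mapping any real x into [0, 1].

    Assumption: the paper's exact V-shaped function is not shown here.
    """
    return abs(math.tanh(x))


def binarize(position, current_bits, rng):
    """Flip each feature bit with probability v_transfer(x); otherwise keep it."""
    new_bits = []
    for x, bit in zip(position, current_bits):
        if rng.random() < v_transfer(x):
            new_bits.append(1 - bit)  # large |x| means a likely flip
        else:
            new_bits.append(bit)      # small |x| means the bit is likely kept
    return new_bits


if __name__ == "__main__":
    rng = random.Random(42)
    continuous = [0.1, -2.5, 0.0, 3.0]  # continuous position of one candidate
    mask = [1, 0, 1, 1]                 # current feature-selection mask
    print(binarize(continuous, mask, rng))
```

A bit set to 1 would mark a selected feature, so the resulting mask can be passed directly to whatever classifier evaluates the feature subset.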

6.3. Evaluation Criteria

7. Conclusions and Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Sorkhabi, L.B.; Gharehchopogh, F.S.; Shahamfar, J. A systematic approach for pre-processing electronic health records for mining: Case study of heart disease. Int. J. Data Min. Bioinform. 2020, 24, 97–120.

- Arasteh, B.; Abdi, M.; Bouyer, A. Program source code comprehension by module clustering using combination of discretized gray wolf and genetic algorithms. Adv. Eng. Softw. 2022, 173, 103252.

- Nadimi-Shahraki, M.H.; Taghian, S.; Mirjalili, S.; Ewees, A.A.; Abualigah, L.; Elaziz, M.A. MTV-MFO: Multi-Trial Vector-Based Moth-Flame Optimization Algorithm. Symmetry 2021, 13, 2388.

- Izci, D. A novel improved atom search optimization algorithm for designing power system stabilizer. Evol. Intell. 2022, 15, 2089–2103.

- Ekinci, S.; Izci, D.; Al Nasar, M.R.; Abu Zitar, R.; Abualigah, L. Logarithmic spiral search based arithmetic optimization algorithm with selective mechanism and its application to functional electrical stimulation system control. Soft Comput. 2022, 26, 12257–12269.

- Arasteh, B.; Sadegi, R.; Arasteh, K. Bölen: Software module clustering method using the combination of shuffled frog leaping and genetic algorithm. Data Technol. Appl. 2021, 55, 251–279.

- Arasteh, B.; Sadegi, R.; Arasteh, K. ARAZ: A software modules clustering method using the combination of particle swarm optimization and genetic algorithms. Intell. Decis. Technol. 2020, 14, 449–462.

- Gharehchopogh, F.S.; Gholizadeh, H. A comprehensive survey: Whale Optimization Algorithm and its applications. Swarm Evol. Comput. 2019, 48, 1–24.

- Jahangiri, M.; Hadianfard, M.A.; Najafgholipour, M.A.; Jahangiri, M.; Gerami, M.R. Interactive autodidactic school: A new metaheuristic optimization algorithm for solving mathematical and structural design optimization problems. Comput. Struct. 2020, 235, 106268.

- Karaboga, D. An Idea Based on Honey Bee Swarm for Numerical Optimization; Technical Report-tr06; Erciyes University: Ercis, Turkey, 2005.

- Yang, X.-S. A new metaheuristic bat-inspired algorithm. In Nature Inspired Cooperative Strategies for Optimization (NICSO 2010); Springer: Berlin/Heidelberg, Germany, 2010; pp. 65–74.

- Askarzadeh, A. A novel metaheuristic method for solving constrained engineering optimization problems: Crow search algorithm. Comput. Struct. 2016, 169, 1–12.

- Yadav, A.; Kumar, N. Artificial electric field algorithm for engineering optimization problems. Expert Syst. Appl. 2020, 149, 113308.

- Ahmadi, R.; Ekbatanifard, G.; Bayat, P. A Modified Grey Wolf Optimizer Based Data Clustering Algorithm. Appl. Artif. Intell. 2021, 35, 63–79.

- Ashish, T.; Kapil, S.; Manju, B. Parallel bat algorithm-based clustering using mapreduce. In Networking Communication and Data Knowledge Engineering; Springer: Berlin/Heidelberg, Germany, 2018; pp. 73–82.

- Eesa, A.S.; Orman, Z. A new clustering method based on the bio-inspired cuttlefish optimization algorithm. Expert Syst. 2020, 37, e12478.

- Olszewski, D. Asymmetric k-means algorithm. In Adaptive and Natural Computing Algorithms; Lecture Notes in Computer Science; Dobnikar, A., Lotric, U., Ster, B., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; Volume 6594, pp. 1–10.

- Aggarwal, S.; Singh, P. Cuckoo and krill herd-based k-means++ hybrid algorithms for clustering. Expert Syst. 2019, 36, e12353.

- Zhang, G.; Zhang, C.; Zhang, H. Improved K-means algorithm based on density Canopy. Knowl. Based Syst. 2018, 145, 289–297.

- Kumar, A.; Kumar, D.; Jarial, S. A novel hybrid K-means and artificial bee colony algorithm approach for data clustering. Decis. Sci. Lett. 2018, 7, 65–76.

- Nasiri, J.; Khiyabani, F.M. A whale optimization algorithm (WOA) approach for clustering. Cogent Math. Stat. 2018, 5, 1483565.

- Qaddoura, R.; Faris, H.; Aljarah, I. An efficient evolutionary algorithm with a nearest neighbor search technique for clustering analysis. J. Ambient. Intell. Humaniz. Comput. 2021, 12, 8387–8412.

- Zhou, Y.; Wu, H.; Luo, Q.; Abdel-Baset, M. Automatic data clustering using nature-inspired symbiotic organism search algorithm. Knowl. Based Syst. 2019, 163, 546–557.

- Ewees, A.A.; Elaziz, M.A. Performance analysis of Chaotic Multi-Verse Harris Hawks Optimization: A case study on solving engineering problems. Eng. Appl. Artif. Intell. 2020, 88, 103370.

- Chen, K.; Zhou, F.; Liu, A. Chaotic dynamic weight particle swarm optimization for numerical function optimization. Knowl. Based Syst. 2018, 139, 23–40.

- Zhang, X.; Xu, Y.; Yu, C.; Heidari, A.A.; Li, S.; Chen, H.; Li, C. Gaussian mutational chaotic fruit fly-built optimization and feature selection. Expert Syst. Appl. 2019, 141, 112976.

- Gharehchopogh, F.S.; Nadimi-Shahraki, M.H.; Barshandeh, S.; Abdollahzadeh, B.; Zamani, H. CQFFA: A Chaotic Quasi-oppositional Farmland Fertility Algorithm for Solving Engineering Optimization Problems. J. Bionic Eng. 2022, 20, 158–183.

- Nadimi-Shahraki, M.H.; Zamani, H.; Mirjalili, S. Enhanced whale optimization algorithm for medical feature selection: A COVID-19 case study. Comput. Biol. Med. 2022, 148, 105858.

- Ahmed, S.; Sheikh, K.H.; Mirjalili, S.; Sarkar, R. Binary Simulated Normal Distribution Optimizer for feature selection: Theory and application in COVID-19 datasets. Expert Syst. Appl. 2022, 200, 116834.

- Piri, J.; Mohapatra, P.; Acharya, B.; Gharehchopogh, F.S.; Gerogiannis, V.C.; Kanavos, A.; Manika, S. Feature Selection Using Artificial Gorilla Troop Optimization for Biomedical Data: A Case Analysis with COVID-19 Data. Mathematics 2022, 10, 2742.

- Nadimi-Shahraki, M.H.; Fatahi, A.; Zamani, H.; Mirjalili, S. Binary Approaches of Quantum-Based Avian Navigation Optimizer to Select Effective Features from High-Dimensional Medical Data. Mathematics 2022, 10, 2770.

- Nadimi-Shahraki, M.H.; Fatahi, A.; Zamani, H.; Mirjalili, S.; Oliva, D. Hybridizing of Whale and Moth-Flame Optimization Algorithms to Solve Diverse Scales of Optimal Power Flow Problem. Electronics 2022, 11, 831.

- Nadimi-Shahraki, M.H.; Moeini, E.; Taghian, S.; Mirjalili, S. DMFO-CD: A Discrete Moth-Flame Optimization Algorithm for Community Detection. Algorithms 2021, 14, 314.

| Methods | Chaotic Map | Mathematical Model | Range |
|---|---|---|---|
| CIAS-1 | Chebyshev | $p_{q+1} = \cos(q \cos^{-1}(p_q))$ | (−1, 1) |
| CIAS-2 | Circle | | (0, 1) |
| CIAS-3 | Gauss/mouse | | (0, 1) |
| CIAS-4 | Iterative | | (−1, 1) |
| CIAS-5 | Logistic | $c = 4$ | (0, 1) |
| CIAS-6 | Piecewise | | (0, 1) |
| CIAS-7 | Sine | | (0, 1) |
| CIAS-8 | Singer | | (0, 1) |
| CIAS-9 | Sinusoidal | $c = 2.3$ | (0, 1) |
| CIAS-10 | Tent | | (0, 1) |
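As a rough illustration of how such maps can replace uniform random numbers, the sketch below iterates three of the maps in the table. Only the Chebyshev recurrence and the constants c = 4 and c = 2.3 appear in the table; the logistic and sinusoidal recurrences used here are the standard textbook forms and are an assumption, as are the function names and the seed value 0.7.

```python
import math


def chebyshev_step(p, q):
    """Chebyshev map from the table: p_{q+1} = cos(q * arccos(p_q))."""
    p = max(-1.0, min(1.0, p))  # guard against rounding outside acos's domain
    return math.cos(q * math.acos(p))


def logistic_step(p, q, c=4.0):
    """Logistic map, standard form (assumed): p_{q+1} = c * p_q * (1 - p_q)."""
    return c * p * (1.0 - p)


def sinusoidal_step(p, q, c=2.3):
    """Sinusoidal map, standard form (assumed): p_{q+1} = c * p_q^2 * sin(pi * p_q)."""
    return c * p * p * math.sin(math.pi * p)


def chaotic_sequence(step, p0, n):
    """Iterate a map n times from seed p0 and return the generated values."""
    values = []
    p = p0
    for q in range(1, n + 1):
        p = step(p, q)
        values.append(p)
    return values


if __name__ == "__main__":
    for name, step in [("chebyshev", chebyshev_step),
                       ("logistic", logistic_step),
                       ("sinusoidal", sinusoidal_step)]:
        print(name, [round(v, 4) for v in chaotic_sequence(step, 0.7, 5)])
```

A chaotic variant of an update rule would draw its coefficients from such a sequence instead of a pseudo-random generator; how exactly the paper wires this into each training session is not shown in this text.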

| No. | Datasets | Number of Features | Number of Samples |
|---|---|---|---|
| 1 | Balance Scale | 4 | 625 |
| 2 | Blood | 4 | 748 |
| 3 | Breast | 30 | 569 |
| 4 | CMC | 9 | 1473 |
| 5 | Dermatology | 34 | 366 |
| 6 | Glass | 9 | 214 |
| 7 | Haberman’s Survival | 3 | 306 |
| 8 | Hepatitis | 19 | 155 |
| 9 | Iris | 4 | 150 |
| 10 | Libras | 90 | 360 |
| 11 | Lung Cancer | 32 | 56 |
| 12 | Madelon | 500 | 2600 |
| 13 | ORL | 1024 | 400 |
| 14 | Seeds | 7 | 210 |
| 15 | Speech | 310 | 125 |
| 16 | Statlog (Heart) | 13 | 270 |
| 17 | Steel | 33 | 1941 |
| 18 | Vowel | 3 | 871 |
| 19 | Wine | 13 | 178 |
| 20 | Wisconsin | 9 | 699 |

| Algorithms | Parameters | Values |
|---|---|---|
| ABC [10] | Limit | 5D |
| | Population size | 20 |
| | Number of onlookers | 20 |
| | Iterations | 100 |
| BA [11] | R | 0.5 |
| | A | 0.8 |
| | Population size | 20 |
| | Iterations | 100 |
| CSA [12] | Ap | 0.8 |
| | Population size | 20 |
| | Iterations | 100 |
| AEFA [13] | FCheck | 1 |
| | Population size | 20 |
| | Iterations | 100 |
| Proposed Model | Population size | 20 |
| | Iterations | 100 |

| Dataset | Results | IAS-1 | IAS-2 | IAS-3 | IAS-4 | IAS-5 | IAS-6 | IAS-7 | IAS-8 | IAS-9 | IAS-10 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Blood | Worst | 4.21E+05 | 8.46E+05 | 8.46E+05 | 4.20E+05 | 8.47E+05 | 8.46E+05 | 8.47E+05 | 8.46E+05 | 8.46E+05 | 8.46E+05 |
| | Best | 4.10E+05 | 4.10E+05 | 4.12E+05 | 4.10E+05 | 4.18E+05 | 4.13E+05 | 4.12E+05 | 4.15E+05 | 4.20E+05 | 4.13E+05 |
| | Avg | 4.14E+05 | 4.93E+05 | 4.93E+05 | 4.15E+05 | 5.46E+05 | 5.20E+05 | 5.22E+05 | 6.30E+05 | 6.55E+05 | 5.20E+05 |
| Cancer | Worst | 4.25E+03 | 4.45E+03 | 3.59E+03 | 3.63E+03 | 4.36E+03 | 3.94E+03 | 4.71E+03 | 4.24E+03 | 4.25E+03 | 5.36E+03 |
| | Best | 3.28E+03 | 3.93E+03 | 3.30E+03 | 3.26E+03 | 3.68E+03 | 3.42E+03 | 3.94E+03 | 3.77E+03 | 3.50E+03 | 3.72E+03 |
| | Avg | 3.82E+03 | 4.20E+03 | 3.44E+03 | 3.48E+03 | 4.08E+03 | 3.62E+03 | 4.40E+03 | 4.03E+03 | 3.83E+03 | 5.05E+03 |
| CMC | Worst | 9.70E+03 | 9.91E+03 | 1.38E+04 | 1.01E+04 | 1.32E+04 | 1.26E+04 | 1.31E+04 | 1.38E+04 | 1.38E+04 | 1.31E+04 |
| | Best | 8.08E+03 | 7.79E+03 | 7.40E+03 | 7.33E+03 | 7.80E+03 | 7.60E+03 | 7.31E+03 | 6.93E+03 | 7.66E+03 | 7.15E+03 |
| | Avg | 9.11E+03 | 8.67E+03 | 1.16E+04 | 8.85E+03 | 1.10E+04 | 1.09E+04 | 1.01E+04 | 1.18E+04 | 1.21E+04 | 1.05E+04 |
| Dermatology | Worst | 4.95E+03 | 3.54E+03 | 1.21E+04 | 4.76E+03 | 4.65E+03 | 4.00E+03 | 7.71E+03 | 4.94E+03 | 5.05E+03 | 4.37E+03 |
| | Best | 3.05E+03 | 2.75E+03 | 2.83E+03 | 2.87E+03 | 3.31E+03 | 3.03E+03 | 2.96E+03 | 3.08E+03 | 2.93E+03 | 2.90E+03 |
| | Avg | 3.75E+03 | 3.27E+03 | 1.15E+04 | 3.69E+03 | 3.80E+03 | 3.41E+03 | 3.99E+03 | 3.79E+03 | 3.71E+03 | 3.51E+03 |
| Iris | Worst | 2.29E+02 | 3.03E+02 | 2.97E+02 | 2.38E+02 | 2.75E+02 | 2.88E+02 | 2.86E+02 | 3.03E+02 | 3.03E+02 | 2.84E+02 |
| | Best | 1.67E+02 | 1.71E+02 | 1.75E+02 | 1.55E+02 | 2.00E+02 | 1.47E+02 | 2.05E+02 | 1.85E+02 | 1.97E+02 | 1.71E+02 |
| | Avg | 1.97E+02 | 2.55E+02 | 2.51E+02 | 1.96E+02 | 2.43E+02 | 2.39E+02 | 2.57E+02 | 2.66E+02 | 2.57E+02 | 2.29E+02 |
| ORL | Worst | 9.55E+05 | 7.65E+05 | 5.28E+05 | 5.59E+05 | 5.69E+05 | 7.77E+05 | 5.72E+05 | 7.32E+05 | 6.38E+05 | 7.67E+05 |
| | Best | 8.44E+05 | 7.35E+05 | 5.23E+05 | 5.36E+05 | 5.55E+05 | 7.44E+05 | 5.54E+05 | 6.70E+05 | 5.73E+05 | 7.51E+05 |
| | Avg | 9.37E+05 | 7.62E+05 | 5.27E+05 | 5.45E+05 | 5.64E+05 | 7.71E+05 | 5.65E+05 | 7.01E+05 | 6.28E+05 | 7.60E+05 |
| Steel | Worst | 3.8E+09 | 4.64E+09 | 4.18E+09 | 3.8E+09 | 4.64E+09 | 4.26E+09 | 4.64E+09 | 4.64E+09 | 4.64E+09 | 4.64E+09 |
| | Best | 2.40E+06 | 2.42E+06 | 2.48E+06 | 2.36E+06 | 2.55E+06 | 2.48E+06 | 2.51E+06 | 2.55E+06 | 2.37E+06 | 2.44E+06 |
| | Avg | 3.29E+09 | 3.97E+09 | 3.85E+09 | 3.05E+09 | 3.92E+09 | 3.72E+09 | 3.85E+09 | 3.96E+09 | 3.98E+09 | 3.71E+09 |
| Wine | Worst | 2.26E+04 | 2.90E+04 | 3.51E+04 | 2.27E+04 | 8.38E+04 | 3.83E+04 | 4.28E+04 | 8.38E+04 | 8.38E+04 | 8.38E+04 |
| | Best | 1.74E+04 | 1.82E+04 | 1.77E+04 | 1.75E+04 | 1.75E+04 | 1.78E+04 | 1.79E+04 | 1.79E+04 | 1.91E+04 | 1.82E+04 |
| | Avg | 2.01E+04 | 2.18E+04 | 3.40E+04 | 2.00E+04 | 3.31E+04 | 2.58E+04 | 2.52E+04 | 3.64E+04 | 3.83E+04 | 3.35E+04 |
| Balance Scale | Worst | 1.49E+03 | 1.51E+03 | 2.33E+03 | 1.63E+03 | 1.54E+03 | 1.52E+03 | 1.51E+03 | 1.53E+03 | 1.79E+03 | 1.51E+03 |
| | Best | 1.45E+03 | 1.45E+03 | 1.52E+03 | 1.47E+03 | 1.46E+03 | 1.44E+03 | 1.45E+03 | 1.45E+03 | 1.48E+03 | 1.45E+03 |
| | Avg | 1.47E+03 | 1.49E+03 | 2.15E+03 | 1.55E+03 | 1.49E+03 | 1.48E+03 | 1.48E+03 | 1.50E+03 | 1.61E+03 | 1.48E+03 |
| Breast | Worst | 2.37E+03 | 2.99E+03 | 2.69E+03 | 2.44E+03 | 3.22E+03 | 2.79E+03 | 3.13E+03 | 2.55E+03 | 2.50E+03 | 2.79E+03 |
| | Best | 2.22E+03 | 2.69E+03 | 2.58E+03 | 2.29E+03 | 2.84E+03 | 2.53E+03 | 2.68E+03 | 2.43E+03 | 2.43E+03 | 2.47E+03 |
| | Avg | 2.30E+03 | 2.89E+03 | 2.63E+03 | 2.37E+03 | 3.10E+03 | 2.73E+03 | 2.98E+03 | 2.51E+03 | 2.47E+03 | 2.63E+03 |
| Glass | Worst | 8.46E+02 | 8.86E+02 | 9.65E+02 | 8.76E+02 | 1.19E+03 | 1.15E+03 | 1.19E+03 | 1.19E+03 | 1.20E+03 | 1.20E+03 |
| | Best | 5.52E+02 | 5.87E+02 | 5.89E+02 | 5.15E+02 | 5.31E+02 | 5.73E+02 | 6.19E+02 | 5.65E+02 | 5.93E+02 | 6.10E+02 |
| | Avg | 8.13E+02 | 7.72E+02 | 9.35E+02 | 7.44E+02 | 9.74E+02 | 8.87E+02 | 9.86E+02 | 1.02E+03 | 1.09E+03 | 8.79E+02 |
| Haberman | Worst | 3.61E+03 | 4.47E+03 | 4.46E+03 | 3.64E+03 | 4.14E+03 | 4.46E+03 | 5.64E+03 | 4.16E+03 | 4.28E+03 | 4.52E+03 |
| | Best | 2.70E+03 | 2.84E+03 | 3.07E+03 | 2.73E+03 | 3.00E+03 | 3.14E+03 | 3.21E+03 | 2.78E+03 | 3.01E+03 | 2.79E+03 |
| | Avg | 3.14E+03 | 3.85E+03 | 3.43E+03 | 3.29E+03 | 3.65E+03 | 3.78E+03 | 3.77E+03 | 3.70E+03 | 3.78E+03 | 3.91E+03 |
| Heart | Worst | 1.97E+04 | 2.72E+04 | 3.33E+04 | 1.97E+04 | 4.22E+04 | 3.46E+04 | 4.17E+04 | 4.22E+04 | 4.15E+04 | 3.62E+04 |
| | Best | 2.40E+06 | 2.42E+06 | 2.48E+06 | 2.36E+06 | 1.46E+04 | 1.43E+04 | 1.42E+04 | 1.37E+04 | 1.42E+04 | 1.31E+04 |
| | Avg | 3.29E+09 | 3.97E+09 | 3.85E+09 | 3.05E+09 | 2.43E+04 | 2.18E+04 | 2.75E+04 | 2.71E+04 | 3.03E+04 | 1.99E+04 |
| Hepatitis | Worst | 2.26E+04 | 2.90E+04 | 3.51E+04 | 2.27E+04 | 2.22E+04 | 2.25E+04 | 1.96E+04 | 2.25E+04 | 2.24E+04 | 2.27E+04 |
| | Best | 1.74E+04 | 1.82E+04 | 1.77E+04 | 1.75E+04 | 1.35E+04 | 1.31E+04 | 1.34E+04 | 1.36E+04 | 1.32E+04 | 1.34E+04 |
| | Avg | 2.01E+04 | 2.18E+04 | 3.40E+04 | 2.00E+04 | 1.83E+04 | 1.78E+04 | 1.72E+04 | 1.90E+04 | 1.79E+04 | 1.75E+04 |
| Libras | Worst | 1.49E+03 | 1.51E+03 | 2.33E+03 | 1.63E+03 | 1.05E+03 | 9.16E+02 | 9.21E+02 | 6.11E+02 | 7.15E+02 | 7.38E+02 |
| | Best | 1.45E+03 | 1.45E+03 | 1.52E+03 | 1.47E+03 | 6.89E+02 | 8.72E+02 | 6.69E+02 | 5.78E+02 | 6.88E+02 | 5.97E+02 |
| | Avg | 1.47E+03 | 1.49E+03 | 2.15E+03 | 1.55E+03 | 8.82E+02 | 8.94E+02 | 8.62E+02 | 5.87E+02 | 7.07E+02 | 6.60E+02 |
| Lung Cancer | Worst | 2.37E+03 | 2.99E+03 | 2.69E+03 | 2.44E+03 | 1.98E+02 | 1.87E+02 | 2.03E+02 | 2.19E+02 | 1.97E+02 | 2.07E+02 |
| | Best | 2.22E+03 | 2.69E+03 | 2.58E+03 | 2.29E+03 | 1.69E+02 | 1.70E+02 | 1.80E+02 | 1.79E+02 | 1.66E+02 | 1.76E+02 |
| | Avg | 2.30E+03 | 2.89E+03 | 2.63E+03 | 2.37E+03 | 1.88E+02 | 1.80E+02 | 1.95E+02 | 1.97E+02 | 1.83E+02 | 1.93E+02 |
| Madelon | Worst | 8.46E+02 | 8.86E+02 | 9.65E+02 | 8.76E+02 | 1.95E+06 | 1.83E+06 | 1.86E+06 | 1.82E+06 | 1.84E+06 | 1.82E+06 |
| | Best | 5.52E+02 | 5.87E+02 | 5.89E+02 | 5.15E+02 | 1.94E+06 | 1.83E+06 | 1.84E+06 | 1.82E+06 | 1.82E+06 | 1.82E+06 |
| | Avg | 8.13E+02 | 7.72E+02 | 9.35E+02 | 7.44E+02 | 1.95E+06 | 1.83E+06 | 1.85E+06 | 1.82E+06 | 1.83E+06 | 1.82E+06 |
| Seeds | Worst | 3.61E+03 | 4.47E+03 | 4.46E+03 | 3.64E+03 | 7.75E+02 | 8.30E+02 | 8.41E+02 | 7.97E+02 | 8.58E+02 | 7.19E+02 |
| | Best | 2.70E+03 | 2.84E+03 | 3.07E+03 | 2.73E+03 | 5.29E+02 | 5.15E+02 | 4.93E+02 | 5.29E+02 | 5.28E+02 | 5.33E+02 |
| | Avg | 3.14E+03 | 3.85E+03 | 3.43E+03 | 3.29E+03 | 6.63E+02 | 6.42E+02 | 6.66E+02 | 6.96E+02 | 7.47E+02 | 6.29E+02 |
| Speech | Worst | 1.97E+04 | 2.72E+04 | 3.33E+04 | 1.97E+04 | 6.58E+12 | 6.58E+12 | 6.52E+12 | 6.58E+12 | 6.58E+12 | 6.55E+12 |
| | Best | 2.40E+06 | 2.42E+06 | 2.48E+06 | 2.36E+06 | 3.68E+12 | 2.54E+12 | 3.02E+12 | 3.31E+12 | 3.45E+12 | 3.46E+12 |
| | Avg | 3.29E+09 | 3.97E+09 | 3.85E+09 | 3.05E+09 | 5.40E+12 | 5.06E+12 | 5.09E+12 | 5.03E+12 | 4.42E+12 | 5.07E+12 |
| Vowel | Worst | 2.26E+04 | 2.90E+04 | 3.51E+04 | 2.27E+04 | 5.83E+05 | 4.49E+05 | 5.72E+05 | 5.76E+05 | 6.92E+05 | 4.43E+05 |
| | Best | 1.74E+04 | 1.82E+04 | 1.77E+04 | 1.75E+04 | 2.45E+05 | 2.50E+05 | 2.60E+05 | 2.32E+05 | 2.37E+05 | 2.23E+05 |
| | Avg | 2.01E+04 | 2.18E+04 | 3.40E+04 | 2.00E+04 | 4.04E+05 | 3.46E+05 | 3.87E+05 | 4.38E+05 | 4.46E+05 | 3.31E+05 |

| Dataset | Results | CSA | ABC | BA | AEFA | CIAS |
|---|---|---|---|---|---|---|
| Blood | Worst | 4.10E+05 | 3.90E+06 | 6.01E+05 | 4.88E+06 | 4.41E+05 |
| | Best | 4.08E+05 | 4.11E+05 | 6.01E+05 | 4.85E+05 | 4.41E+05 |
| | Avg | 4.09E+05 | 1.13E+06 | 6.01E+05 | 1.99E+06 | 4.41E+05 |
| Cancer | Worst | 4.43E+03 | 9.45E+03 | 5.85E+03 | 3.57E+03 | 2.96E+03 |
| | Best | 4.09E+03 | 3.57E+03 | 5.81E+03 | 3.56E+03 | 2.96E+03 |
| | Avg | 4.30E+03 | 5.78E+03 | 5.82E+03 | 3.56E+03 | 2.96E+03 |
| CMC | Worst | 6.47E+03 | 1.05E+04 | 7.72E+03 | 6.74E+03 | 5.53E+03 |
| | Best | 6.30E+03 | 5.95E+03 | 7.69E+03 | 6.74E+03 | 5.53E+03 |
| | Avg | 6.35E+03 | 7.75E+03 | 7.70E+03 | 6.74E+03 | 5.53E+03 |
| Dermatology | Worst | 2.97E+03 | 3.51E+03 | 3.08E+03 | 3.14E+03 | 2.25E+03 |
| | Best | 2.97E+03 | 3.16E+03 | 3.07E+03 | 3.13E+03 | 2.24E+03 |
| | Avg | 2.97E+03 | 3.35E+03 | 3.07E+03 | 3.14E+03 | 2.25E+03 |
| Iris | Worst | 1.06E+02 | 3.60E+02 | 1.50E+02 | 1.07E+02 | 9.67E+01 |
| | Best | 1.03E+02 | 1.22E+02 | 1.46E+02 | 1.05E+02 | 9.67E+01 |
| | Avg | 1.04E+02 | 2.21E+02 | 1.48E+02 | 1.07E+02 | 9.67E+01 |
| ORL | Worst | 5.01E+05 | 7.77E+05 | 6.36E+05 | 7.33E+05 | 5.03E+05 |
| | Best | 5.00E+05 | 7.68E+05 | 6.36E+05 | 7.26E+05 | 5.03E+05 |
| | Avg | 5.00E+05 | 7.74E+05 | 6.36E+05 | 7.30E+05 | 5.03E+05 |
| Steel | Worst | 2.99E+09 | 9.93E+09 | 6.82E+09 | 2.98E+10 | 5.81E+09 |
| | Best | 2.95E+09 | 2.15E+09 | 6.82E+09 | 6.30E+09 | 5.81E+09 |
| | Avg | 2.97E+09 | 3.40E+09 | 6.82E+09 | 1.85E+10 | 5.81E+09 |
| Wine | Worst | 1.72E+04 | 1.83E+04 | 1.71E+04 | 5.35E+04 | 1.63E+04 |
| | Best | 1.71E+04 | 1.65E+04 | 1.71E+04 | 1.90E+04 | 1.63E+04 |
| | Avg | 1.72E+04 | 1.72E+04 | 1.71E+04 | 5.02E+04 | 1.63E+04 |
| Balance Scale | Worst | 1.43E+03 | 1.72E+03 | 1.45E+03 | 1.43E+03 | 1.43E+03 |
| | Best | 1.43E+03 | 1.44E+03 | 1.44E+03 | 1.43E+03 | 1.43E+03 |
| | Avg | 1.43E+03 | 1.52E+03 | 1.44E+03 | 1.43E+03 | 1.43E+03 |
| Breast | Worst | 3.43E+03 | 6.08E+03 | 3.05E+03 | 2.36E+03 | 2.02E+03 |
| | Best | 3.39E+03 | 2.32E+03 | 3.03E+03 | 2.36E+03 | 2.02E+03 |
| | Avg | 3.41E+03 | 4.34E+03 | 3.04E+03 | 2.36E+03 | 2.02E+03 |
| Glass | Worst | 4.37E+02 | 6.30E+02 | 3.69E+02 | 4.11E+02 | 2.53E+02 |
| | Best | 3.91E+02 | 3.07E+02 | 3.65E+02 | 4.10E+02 | 2.53E+02 |
| | Avg | 4.10E+02 | 5.03E+02 | 3.67E+02 | 4.11E+02 | 2.53E+02 |
| Haberman | Worst | 2.62E+03 | 1.11E+04 | 2.94E+03 | 2.59E+03 | 2.57E+03 |
| | Best | 2.59E+03 | 2.62E+03 | 2.93E+03 | 2.59E+03 | 2.57E+03 |
| | Avg | 2.61E+03 | 3.90E+03 | 2.93E+03 | 2.59E+03 | 2.57E+03 |
| Heart | Worst | 1.10E+04 | 3.01E+04 | 1.45E+04 | 1.19E+04 | 1.06E+04 |
| | Best | 1.08E+04 | 1.07E+04 | 1.45E+04 | 1.13E+04 | 1.06E+04 |
| | Avg | 1.09E+04 | 1.37E+04 | 1.45E+04 | 1.18E+04 | 1.06E+04 |
| Hepatitis | Worst | 1.24E+04 | 1.25E+04 | 1.48E+04 | 1.93E+04 | 1.18E+04 |
| | Best | 1.20E+04 | 1.18E+04 | 1.48E+04 | 1.48E+04 | 1.18E+04 |
| | Avg | 1.22E+04 | 1.21E+04 | 1.48E+04 | 1.93E+04 | 1.18E+04 |
| Libras | Worst | 5.87E+02 | 9.16E+02 | 7.34E+02 | 7.78E+02 | 5.41E+02 |
| | Best | 5.85E+02 | 8.71E+02 | 7.23E+02 | 7.78E+02 | 5.40E+02 |
| | Avg | 5.86E+02 | 8.92E+02 | 7.26E+02 | 7.78E+02 | 5.41E+02 |
| Lung Cancer | Worst | 1.59E+02 | 1.71E+02 | 1.64E+02 | 1.65E+02 | 1.38E+02 |
| | Best | 1.58E+02 | 1.60E+02 | 1.63E+02 | 1.65E+02 | 1.38E+02 |
| | Avg | 1.59E+02 | 1.66E+02 | 1.63E+02 | 1.65E+02 | 1.38E+02 |
| Madelon | Worst | 1.86E+06 | 3.91E+06 | 2.85E+06 | 2.67E+06 | 1.91E+06 |
| | Best | 1.86E+06 | 3.64E+06 | 2.85E+06 | 2.52E+06 | 1.90E+06 |
| | Avg | 1.86E+06 | 3.77E+06 | 2.85E+06 | 2.59E+06 | 1.90E+06 |
| Seeds | Worst | 3.77E+02 | 1.04E+03 | 3.69E+02 | 3.68E+02 | 3.12E+02 |
| | Best | 3.67E+02 | 3.72E+02 | 3.63E+02 | 3.65E+02 | 3.12E+02 |
| | Avg | 3.71E+02 | 5.29E+02 | 3.64E+02 | 3.66E+02 | 3.12E+02 |
| Speech | Worst | 4.65E+12 | 2.41E+12 | 6.92E+12 | 3.63E+13 | 3.00E+12 |
| | Best | 3.71E+12 | 2.16E+12 | 6.92E+12 | 7.07E+12 | 3.00E+12 |
| | Avg | 4.18E+12 | 2.26E+12 | 6.92E+12 | 1.68E+13 | 3.00E+12 |
| Vowel | Worst | 1.71E+05 | 3.73E+05 | 2.55E+05 | 4.16E+05 | 1.62E+05 |
| | Best | 1.69E+05 | 1.92E+05 | 2.55E+05 | 2.09E+05 | 1.62E+05 |
| | Avg | 1.70E+05 | 2.58E+05 | 2.55E+05 | 3.27E+05 | 1.62E+05 |

| No. | Feature Name | Description |
|---|---|---|
| 1 | Location | The location the patient belongs to |
| 2 | Country | The country the patient belongs to |
| 3 | Gender | The gender of the patient |
| 4 | Age | The age of the patient |
| 5 | vis_wuhan (1: Yes, 0: No) | Whether the patient visited Wuhan, China |
| 6 | from_wuhan (1: Yes, 0: No) | Whether the patient is from Wuhan, China |
| 7 | symptom 1 | Fever |
| 8 | symptom 2 | Cough |
| 9 | symptom 3 | Cold |
| 10 | symptom 4 | Fatigue |
| 11 | symptom 5 | Body pain |
| 12 | symptom 6 | Malaise |
| 13 | diff_sym_hos | The number of days between the symptoms being noticed and admission to the hospital |
| 14 | Class | The class of the patient: death or recovery |

| Models | Iterations | Precision | Recall | F-Measure | Accuracy |
|---|---|---|---|---|---|
| BABC | 100 | 91.15 | 91.24 | 91.19 | 91.21 |
| | 200 | 91.48 | 91.62 | 91.55 | 91.95 |
| BBA | 100 | 92.29 | 92.38 | 92.33 | 92.15 |
| | 200 | 92.37 | 92.51 | 92.44 | 92.48 |
| BCSA | 100 | 94.14 | 94.26 | 94.20 | 94.71 |
| | 200 | 95.25 | 95.38 | 95.31 | 95.32 |
| BAEFA | 100 | 94.06 | 94.19 | 94.09 | 94.36 |
| | 200 | 94.52 | 94.63 | 94.57 | 94.79 |
| Proposed Model | 100 | 95.53 | 95.76 | 95.64 | 95.84 |
| | 200 | 96.04 | 96.35 | 96.19 | 96.25 |
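The four criteria reported above can be computed from confusion-matrix counts. The sketch below uses the standard definitions, assumed here because the paper's own equations are not reproduced in this text; the function names and the example counts are hypothetical. As a plausibility check, the harmonic mean of the BABC precision and recall at 100 iterations (91.15 and 91.24) rounds to the tabulated F-measure of 91.19.

```python
def f_measure(precision, recall):
    """Harmonic mean of precision and recall (standard F1 definition)."""
    return 2.0 * precision * recall / (precision + recall)


def classification_metrics(tp, fp, fn, tn):
    """Precision, recall, F-measure, and accuracy from confusion-matrix counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return {
        "precision": precision,
        "recall": recall,
        "f_measure": f_measure(precision, recall),
        "accuracy": accuracy,
    }


if __name__ == "__main__":
    # Hypothetical counts, used only to exercise the formulas.
    print(classification_metrics(tp=8, fp=2, fn=2, tn=8))
    # Check against the BABC row at 100 iterations in the table above.
    print(round(f_measure(91.15, 91.24), 2))
```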

| Features | Precision | Recall | F-Measure | Accuracy |
|---|---|---|---|---|
| 5 | 98.32 | 98.43 | 98.37 | 98.68 |
| 5 | 98.41 | 98.47 | 98.44 | 98.41 |
| 6 | 98.26 | 98.35 | 98.30 | 98.23 |
| 6 | 98.14 | 98.46 | 98.30 | 98.06 |
| 7 | 98.45 | 98.55 | 98.50 | 98.31 |
| 7 | 97.35 | 97.49 | 97.42 | 97.76 |
| 8 | 97.50 | 97.58 | 97.54 | 97.65 |
| 8 | 97.14 | 97.30 | 97.22 | 97.52 |
| 9 | 97.32 | 97.58 | 97.45 | 97.41 |
| 10 | 97.29 | 97.48 | 97.38 | 97.52 |
| 10 | 97.06 | 97.19 | 97.12 | 97.13 |
| 11 | 96.58 | 96.67 | 96.62 | 96.84 |
| 11 | 96.61 | 96.75 | 96.68 | 96.92 |
| 12 | 96.35 | 96.42 | 96.38 | 96.56 |
| 12 | 96.42 | 96.56 | 96.49 | 96.42 |
| 13 | 96.11 | 96.20 | 96.15 | 96.25 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gharehchopogh, F.S.; Khargoush, A.A. A Chaotic-Based Interactive Autodidactic School Algorithm for Data Clustering Problems and Its Application on COVID-19 Disease Detection. Symmetry 2023, 15, 894. https://doi.org/10.3390/sym15040894
