1. Introduction

The support vector machine (SVM) [1,2,3,4,5] is designed for the binary classification problem. Owing to its strong performance, for example in text classification tasks, it has become the basis for the development of many other classifiers in machine learning. The SVM is widely used in data classification and regression analysis in areas such as artificial intelligence, speech recognition, remote image analysis, and financial management. For instance, scene recognition plays a crucial role in supporting the cognitive communications of unmanned aerial vehicles (UAVs), and an air-to-ground (A2G) scenario identification model based on the SVM has been proposed to leverage scenario-dependent channel characteristics [6]. The SVM is based on the principle of structural risk minimization, which gives it excellent generalization performance; its basic idea is to find the separating hyperplane that maximizes the margin between the two classes of data. Although the SVM is highly applicable to classification problems, it still faces some challenges in its learning tasks. To avoid over-fitting, the SVM was extended to the soft-margin SVM (C-SVM) [7] by introducing slack variables. The C-SVM uses the hinge loss, which is sensitive to noise points in the samples. Moreover, training an SVM requires solving a quadratic programming problem (QPP) with a complexity of $O(m^3)$, where $m$ is the number of training samples [7]. Therefore, many new schemes have been proposed to speed up SVM training, for example, decomposing a large QPP into multiple small QPPs or building new variants of the SVM. Among the most widely accepted are the generalized eigenvalue proximal support vector machine (GEPSVM) [8] and the twin support vector machine (TSVM) [3,9,10,11,12]. The GEPSVM seeks two nonparallel hyperplanes by solving two related generalized eigenvalue problems. Following the idea of the GEPSVM, the TSVM also seeks two nonparallel hyperplanes, but it divides one large QPP into two small QPPs, so that the positive (negative) samples are as close as possible to the positive-class (negative-class) hyperplane and as far as possible from the other hyperplane. Therefore, in theory, the TSVM runs much faster than the SVM.
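A back-of-the-envelope estimate makes this speed-up concrete. Assume, as a simplification, that solving a QPP costs on the order of the cube of its number of constraints, and that each class contains $m/2$ samples; each TSVM subproblem then carries only the $m/2$ constraints of the opposite class:

$$
T_{\mathrm{SVM}} \propto m^3, \qquad
T_{\mathrm{TSVM}} \propto 2\left(\frac{m}{2}\right)^3 = \frac{m^3}{4}, \qquad
\frac{T_{\mathrm{SVM}}}{T_{\mathrm{TSVM}}} \approx 4.
$$

Under these assumptions the TSVM is roughly four times faster; with unbalanced classes or a different QPP solver the ratio changes.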

While studying the TSVM, some scholars found that it still needs to solve two QPPs, which is not feasible for large-scale classification. The least squares approach offers a remedy: the least squares support vector machine (LSSVM) was first proposed by Suykens et al. [2,13,14]. The LSSVM is one of the important achievements in machine learning in recent years; its training follows the principle of risk minimization, and it replaces the inequality constraints with equality constraints so that training reduces to solving linear equations. Applying the same idea to the TSVM, in order to reduce its computational cost while keeping its advantages, gives the least squares twin support vector machine (LSTSVM), which solves two sets of linear equations. Based on this, Tomar et al. extended the LSTSVM to multi-class classification and proposed the multi-class least squares twin support vector machine (MLSTSVM) [7]. For multiple classes, the MLSTSVM generates multiple hyperplanes, one per class, where the ith class is as close as possible to the ith hyperplane while being as far as possible from the other hyperplanes. Xie et al. [15] proposed a novel Laplacian Lp-norm least squares twin support vector machine (Lap-LpLSTSVM); experimental results on both synthetic and real-world datasets show that the Lap-LpLSTSVM outperforms other state-of-the-art methods and can also deal with noisy datasets. Ye et al. proposed the information-weighted TWSVM classification method (WLTSVM) [16], which uses graphs to characterize the compactness of intra-class samples and the separation of inter-class samples. In addition, Shao et al. [8] proposed the twin bounded support vector machine (TBSVM) [9]. It adds a regularization term based on the 2-norm, thereby implementing the structural risk minimization principle and improving classification performance. The TSVM is faster than the SVM, but the squared $L_2$-norm distance it uses amplifies the effect of outliers, which distorts the construction of the hyperplanes in the presence of noise. To enhance the classification performance of TSVMs on imbalanced datasets, the reduced universum twin support vector machine for class imbalance learning (RUTSVM) was proposed based on the universum TSVM framework. However, RUTSVM has a key drawback: solving its dual problem and finding the classifiers require computing matrix inverses. To address this issue, Moosae [17] proposed an improved version of RUTSVM, called IRUTSVM. To reduce the influence of outliers, Wang et al. proposed, on the basis of the TSVM, the capped $L_1$-norm twin support vector machine (CTSVM) [18,19], which eliminates the effect of some outliers and increases robustness. This shows that the $L_1$-norm improves robustness more than the squared $L_2$-norm. However, capped $L_1$-norm distance measures also have drawbacks: they are neither convex nor smooth, which makes them difficult to optimize. To address this problem of the capped $L_1$-norm, a robust twin support vector machine formulation (pTSVM) [20,21,22] was proposed recently, which suppresses the effect of outliers better than the $L_1$-norm and the squared $L_2$-norm.

To further suppress the adverse effects of outliers, we introduce the bounded, smooth, and non-convex Welsch loss [23,24,25]. The Welsch loss is the loss function of the Welsch estimator, one of the methods of robust estimation. When the errors are normally distributed, it performs comparably to the mean squared error loss, but it is more robust when the errors are not normally distributed or are caused by outliers. To improve the robustness and generalization performance of the TBSVM, this paper adds a Welsch loss term to a TBSVM built on the capped $L_{2,p}$-norm, yielding the capped $L_{2,p}$-norm metric-based robust twin bounded support vector machine with Welsch loss (WCTBSVM). The WCTBSVM is more robust than the TBSVM, reduces the effect of outliers, and improves classification performance. Beyond this, a fast WCTBSVM (FWCTBSVM) is proposed based on the least squares idea: replacing the inequality constraints with equality constraints accelerates the algorithm.

The main contributions of this paper are as follows:

(1) By analyzing the characteristics of both the Welsch loss function and the capped $L_{2,p}$-norm distance metric, a new robust learning algorithm based on the TBSVM, the WCTBSVM, is proposed. Without sacrificing accuracy, a least squares version of the WCTBSVM, named FWCTBSVM, is constructed.

(2) The iterative algorithms of WCTBSVM and FWCTBSVM models are given, and their convergence is analyzed according to the characteristics of the models.

(3) Through experiments on UCI datasets and artificial datasets, we found that the WCTBSVM and FWCTBSVM have advantages over several other methods in terms of robustness and feasibility.

(4) To show the advantages of the WCTBSVM and FWCTBSVM more intuitively, we conducted a statistical test analysis, which further verifies that the classification performance of the WCTBSVM and FWCTBSVM is stronger than that of the TSVM, TBSVM, LSTSVM, and CTSVM.

In Section 2, we introduce some related models, namely the TSVM, TBSVM, CTSVM, and LSTSVM, as well as the capped $L_{2,p}$-norm and the Welsch loss. In Section 3, we integrate the Welsch loss into the model and then solve the model. Extensive experiments are conducted in Section 4 to test the performance of the models. Section 5 summarizes the paper.

2. Related Work

In this paper, we propose two models based on the following related work. We first briefly introduce four classification models, namely the twin support vector machine (TSVM), the twin bounded support vector machine (TBSVM), the capped $L_1$-norm twin support vector machine (CTSVM), and the least squares twin support vector machine (LSTSVM), as well as the capped $L_{2,p}$-norm and the Welsch loss function that are introduced into the model of this paper [25].

2.1. TSVM

The TSVM was first proposed by Jayadeva et al. Its basic idea is to produce two nonparallel classification hyperplanes such that each class is close to its corresponding hyperplane and far away from the other one. The TSVM differs from the SVM in that it divides one convex quadratic programming problem into two smaller convex quadratic programming problems, thus speeding up training.

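Consider a training dataset $T = \{(x_1, y_1), (x_2, y_2), \ldots, (x_m, y_m)\}$, where $x_i \in \mathbb{R}^n$ and $y_i \in \{1, -1\}$ denotes the class to which the $i$th point belongs. Class 1 and class $-1$ represent the positive and negative samples, respectively. The number of positive samples in $T$ is denoted by $m_1$ and the number of negative samples by $m_2$, where $m_1 + m_2 = m$. Let $A \in \mathbb{R}^{m_1 \times n}$ represent all positive samples and $B \in \mathbb{R}^{m_2 \times n}$ represent all negative samples. We then seek two nonparallel hyperplanes:

$$ x^\top w_1 + b_1 = 0 \tag{1} $$

and

$$ x^\top w_2 + b_2 = 0, \tag{2} $$

where $w_1, w_2 \in \mathbb{R}^n$ are the normal vectors of the hyperplanes and $b_1, b_2 \in \mathbb{R}$ are their offsets.

The TSVM model is then:

$$ \min_{w_1, b_1, \xi_2} \ \frac{1}{2}\|A w_1 + e_1 b_1\|^2 + c_1 e_2^\top \xi_2 \quad \text{s.t.} \quad -(B w_1 + e_2 b_1) + \xi_2 \ge e_2,\ \xi_2 \ge 0, \tag{3} $$

$$ \min_{w_2, b_2, \xi_1} \ \frac{1}{2}\|B w_2 + e_2 b_2\|^2 + c_2 e_1^\top \xi_1 \quad \text{s.t.} \quad (A w_2 + e_1 b_2) + \xi_1 \ge e_1,\ \xi_1 \ge 0, \tag{4} $$

where $c_1, c_2 > 0$ are regularization parameters, $e_1$ and $e_2$ are vectors of ones of appropriate dimensions, and $\xi_1$ and $\xi_2$ are the slack vectors.

Introducing the Lagrange multipliers $\alpha$ and $\gamma$ and letting $H = [A \ e_1]$ and $G = [B \ e_2]$, we obtain the dual problems:

$$ \max_{\alpha} \ e_2^\top \alpha - \frac{1}{2}\alpha^\top G \left(H^\top H\right)^{-1} G^\top \alpha \quad \text{s.t.} \quad 0 \le \alpha \le c_1 e_2, \tag{5} $$

$$ \max_{\gamma} \ e_1^\top \gamma - \frac{1}{2}\gamma^\top H \left(G^\top G\right)^{-1} H^\top \gamma \quad \text{s.t.} \quad 0 \le \gamma \le c_2 e_1. \tag{6} $$

Finally, the two nonparallel hyperplanes are obtained from the solutions of the QPPs (5) and (6):

$$ \begin{bmatrix} w_1 \\ b_1 \end{bmatrix} = -\left(H^\top H\right)^{-1} G^\top \alpha, \qquad \begin{bmatrix} w_2 \\ b_2 \end{bmatrix} = \left(G^\top G\right)^{-1} H^\top \gamma. $$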
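A minimal NumPy sketch of the linear TSVM described above, under stated assumptions: the box-constrained duals are solved by plain projected-gradient ascent (a simple stand-in for a proper QPP solver), and a small ridge term `delta` is added to $H^\top H$ and $G^\top G$ so that the inverses exist. The function names, the toy data, and `delta` are illustrative choices, not the authors' implementation. A point is assigned to the class of the nearer hyperplane.

```python
import numpy as np


def box_qp_dual(M, upper, iters=3000):
    """Maximize 1^T a - 0.5 a^T M a subject to 0 <= a <= upper (projected gradient ascent)."""
    a = np.zeros(M.shape[0])
    lipschitz = max(np.linalg.eigvalsh(M).max(), 1e-12)
    step = 1.0 / lipschitz                          # safe step size for a concave quadratic
    for _ in range(iters):
        grad = 1.0 - M @ a                          # gradient of the dual objective
        a = np.clip(a + step * grad, 0.0, upper)    # project back onto the box
    return a


def tsvm_fit(A, B, c1=1.0, c2=1.0, delta=1e-4):
    """Linear TSVM: solve both duals, then recover (w1, b1) and (w2, b2)."""
    H = np.hstack([A, np.ones((A.shape[0], 1))])    # H = [A e1]
    G = np.hstack([B, np.ones((B.shape[0], 1))])    # G = [B e2]
    ridge = delta * np.eye(H.shape[1])              # assumed ridge term for invertibility
    HtH_inv = np.linalg.inv(H.T @ H + ridge)
    GtG_inv = np.linalg.inv(G.T @ G + ridge)
    alpha = box_qp_dual(G @ HtH_inv @ G.T, c1)      # dual for the positive-class plane
    gamma = box_qp_dual(H @ GtG_inv @ H.T, c2)      # dual for the negative-class plane
    u1 = -HtH_inv @ G.T @ alpha                     # [w1; b1]
    u2 = GtG_inv @ H.T @ gamma                      # [w2; b2]
    return (u1[:-1], u1[-1]), (u2[:-1], u2[-1])


def tsvm_predict(X, plane1, plane2):
    """Label +1 if a row of X is nearer hyperplane 1, otherwise -1."""
    (w1, b1), (w2, b2) = plane1, plane2
    d1 = np.abs(X @ w1 + b1) / np.linalg.norm(w1)
    d2 = np.abs(X @ w2 + b2) / np.linalg.norm(w2)
    return np.where(d1 <= d2, 1, -1)


# Toy, well-separated data (illustrative only, not from the paper).
rng = np.random.default_rng(0)
A = rng.normal(2.0, 0.3, size=(20, 2))     # positive samples
B = rng.normal(-2.0, 0.3, size=(20, 2))    # negative samples
plane1, plane2 = tsvm_fit(A, B)
X = np.vstack([A, B])
y = np.concatenate([np.ones(20), -np.ones(20)])
accuracy = np.mean(tsvm_predict(X, plane1, plane2) == y)
```

Projected gradient is chosen only because it keeps the sketch dependency-free; a dedicated QP solver would normally be used in practice.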

2.2. TBSVM

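To enhance the classification performance of the TSVM, Shao et al. [8] proposed a new TSVM model called the TBSVM. Like the TSVM, the TBSVM obtains two nonparallel hyperplanes for classification by solving two smaller QPPs. The TBSVM can be written as:

$$ \min_{w_1, b_1, \xi_2} \ \frac{c_3}{2}\left(\|w_1\|^2 + b_1^2\right) + \frac{1}{2}\|A w_1 + e_1 b_1\|^2 + c_1 e_2^\top \xi_2 \quad \text{s.t.} \quad -(B w_1 + e_2 b_1) + \xi_2 \ge e_2,\ \xi_2 \ge 0 \tag{8} $$

and

$$ \min_{w_2, b_2, \xi_1} \ \frac{c_4}{2}\left(\|w_2\|^2 + b_2^2\right) + \frac{1}{2}\|B w_2 + e_2 b_2\|^2 + c_2 e_1^\top \xi_1 \quad \text{s.t.} \quad (A w_2 + e_1 b_2) + \xi_1 \ge e_1,\ \xi_1 \ge 0, \tag{9} $$

where $\xi_1$ and $\xi_2$ are the slack vectors, $0$ is a zero vector, $c_1, c_2, c_3, c_4 > 0$ are the regularization parameters, $e_1$ and $e_2$ are vectors of ones, and $A$ and $B$ are the same as in (3). Based on optimization and duality theory, let $H = [A \ e_1]$ and $G = [B \ e_2]$. The dual problems of (8) and (9) are then:

$$ \max_{\alpha} \ e_2^\top \alpha - \frac{1}{2}\alpha^\top G \left(H^\top H + c_3 I\right)^{-1} G^\top \alpha \quad \text{s.t.} \quad 0 \le \alpha \le c_1 e_2 \tag{10} $$

and

$$ \max_{\gamma} \ e_1^\top \gamma - \frac{1}{2}\gamma^\top H \left(G^\top G + c_4 I\right)^{-1} H^\top \gamma \quad \text{s.t.} \quad 0 \le \gamma \le c_2 e_1, \tag{11} $$

where $\alpha$ and $\gamma$ are Lagrange multipliers. Further, from the solutions of (10) and (11) we obtain:

$$ \begin{bmatrix} w_1 \\ b_1 \end{bmatrix} = -\left(H^\top H + c_3 I\right)^{-1} G^\top \alpha, \qquad \begin{bmatrix} w_2 \\ b_2 \end{bmatrix} = \left(G^\top G + c_4 I\right)^{-1} H^\top \gamma. $$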

2.3. CTSVM

Using the

-norm in the TSVM increases the effect of the noises without reaching the structure of the optimized classification hyperplanes. Therefore, the capped

-norm twin support vector machine (CTSVM) is introduced to increase the robustness to the noise, which helps reduce noise during the model training. The capped

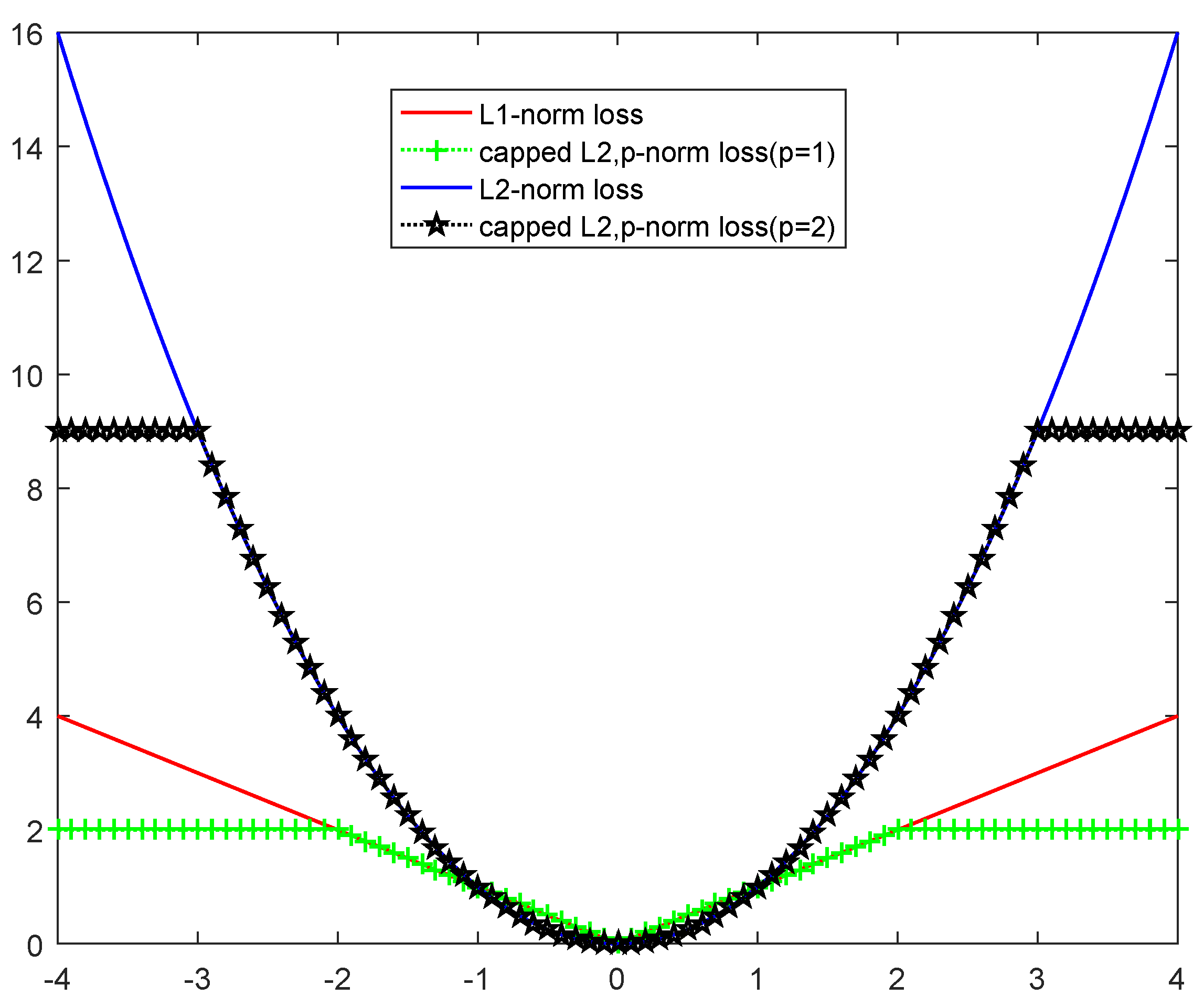

-norm is shown in

Figure 1.

The CTSVM classifier is obtained by solving the following problems:

and

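where $c_1, c_2 > 0$ are regularization parameters, $e_1$ and $e_2$ are vectors of ones, $\varepsilon_1, \varepsilon_2 > 0$ are the thresholding parameters, and $\xi_1$ and $\xi_2$ are the slack vectors. Here, the capped $L_1$-norm is used to reduce the influence of outliers: under the capped $L_1$-norm loss, the loss of a misclassified data point whose residual exceeds the threshold is capped at the threshold value.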

The problems can be reformulated as the following approximate problems:

and

where $F$, $Q$, $K$, and $U$ are four diagonal matrices with diagonal elements:

Based on optimization and duality theory, let $H = [A \ e_1]$ and $G = [B \ e_2]$. The dual problems of (16) and (17) are then:

and

where $\alpha$ and $\gamma$ are Lagrange multipliers.
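Capped terms of the form $\min(|r_i|, \varepsilon)$ are usually handled by iterative reweighting, which is the role the diagonal matrices $F$, $Q$, $K$, and $U$ play in the approximate problems above. Their exact diagonal entries are not recoverable from this text, so the rule below is an assumption borrowed from common iteratively reweighted schemes: a residual inside the cap receives weight $1/(2|r_i|)$, and a residual beyond the cap receives weight 0, so that point is ignored in the next QPP. The function names are hypothetical.

```python
import numpy as np


def capped_l1(r, eps):
    """Elementwise capped L1 loss min(|r_i|, eps)."""
    return np.minimum(np.abs(r), eps)


def capped_l1_weights(r, eps, tiny=1e-8):
    """Assumed reweighting rule: 1/(2|r_i|) inside the cap, 0 beyond it."""
    a = np.abs(r)
    return np.where(a <= eps, 1.0 / (2.0 * np.maximum(a, tiny)), 0.0)


# Residuals |A_i w1 + b1| of three points; the third behaves like an outlier.
r = np.array([0.5, -0.2, 3.0])
loss = capped_l1(r, eps=1.0)                    # [0.5, 0.2, 1.0]: the outlier's loss is capped
F = np.diag(capped_l1_weights(r, eps=1.0))      # diagonal weight matrix, e.g. F
```

With these weights, $w_i r_i^2 = |r_i|/2$ for points inside the cap, so the weighted quadratic tracks the $L_1$ term locally, while the outlier contributes nothing to the next iterate.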

2.4. LSTSVM

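The TSVM has great advantages over the SVM in classification, but it also amplifies the influence of noise, and solving its QPPs becomes computationally demanding when processing large amounts of data. The least squares twin support vector machine (LSTSVM) changes the inequality constraints into equality constraints, which avoids these difficulties of the TSVM on large datasets. The LSTSVM is formulated as

$$ \min_{w_1, b_1, \xi_2} \ \frac{1}{2}\|A w_1 + e_1 b_1\|^2 + \frac{c_1}{2}\xi_2^\top \xi_2 \quad \text{s.t.} \quad -(B w_1 + e_2 b_1) + \xi_2 = e_2 \tag{22} $$

and

$$ \min_{w_2, b_2, \xi_1} \ \frac{1}{2}\|B w_2 + e_2 b_2\|^2 + \frac{c_2}{2}\xi_1^\top \xi_1 \quad \text{s.t.} \quad (A w_2 + e_1 b_2) + \xi_1 = e_1, \tag{23} $$

where $c_1, c_2 > 0$ are regularization parameters, and $\xi_1$ and $\xi_2$ are slack vectors.

Furthermore, by substituting the equality constraints into the objectives, the optimization problems (22) and (23) can be rewritten as:

$$ \min_{w_1, b_1} \ \frac{1}{2}\|A w_1 + e_1 b_1\|^2 + \frac{c_1}{2}\|B w_1 + e_2 b_1 + e_2\|^2 \tag{24} $$

and

$$ \min_{w_2, b_2} \ \frac{1}{2}\|B w_2 + e_2 b_2\|^2 + \frac{c_2}{2}\|A w_2 + e_1 b_2 - e_1\|^2. \tag{25} $$

Let $H = [A \ e_1]$ and $G = [B \ e_2]$. Setting the partial derivatives of (24) with respect to $w_1$ and $b_1$, and of (25) with respect to $w_2$ and $b_2$, to 0, we obtain:

$$ \begin{bmatrix} w_1 \\ b_1 \end{bmatrix} = -\left(G^\top G + \frac{1}{c_1} H^\top H\right)^{-1} G^\top e_2 $$

and

$$ \begin{bmatrix} w_2 \\ b_2 \end{bmatrix} = \left(H^\top H + \frac{1}{c_2} G^\top G\right)^{-1} H^\top e_1. $$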
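Since the LSTSVM needs only two small linear systems instead of two QPPs, it is easy to sketch. The code below is a minimal NumPy illustration, assuming the standard closed forms $[w_1; b_1] = -(G^\top G + \frac{1}{c_1} H^\top H)^{-1} G^\top e_2$ and $[w_2; b_2] = (H^\top H + \frac{1}{c_2} G^\top G)^{-1} H^\top e_1$ with $H = [A \ e_1]$ and $G = [B \ e_2]$. It also checks numerically that the gradient of the first least squares objective vanishes at the returned solution. Function names and data are illustrative.

```python
import numpy as np


def lstsvm_fit(A, B, c1=1.0, c2=1.0):
    """Linear LSTSVM via two linear systems (no QPP needed)."""
    H = np.hstack([A, np.ones((A.shape[0], 1))])   # H = [A e1]
    G = np.hstack([B, np.ones((B.shape[0], 1))])   # G = [B e2]
    e1, e2 = np.ones(A.shape[0]), np.ones(B.shape[0])
    u1 = -np.linalg.solve(G.T @ G + (H.T @ H) / c1, G.T @ e2)   # [w1; b1]
    u2 = np.linalg.solve(H.T @ H + (G.T @ G) / c2, H.T @ e1)    # [w2; b2]
    return u1, u2, H, G


# Toy data (illustrative only).
rng = np.random.default_rng(2)
A = rng.normal(2.0, 0.3, size=(20, 2))
B = rng.normal(-2.0, 0.3, size=(20, 2))
c1 = 1.0
u1, u2, H, G = lstsvm_fit(A, B, c1=c1)

# Gradient of 0.5*||H u||^2 + (c1/2)*||G u + e2||^2; it should be ~0 at u1.
grad1 = H.T @ H @ u1 + c1 * G.T @ (G @ u1 + np.ones(G.shape[0]))

# Nearest-hyperplane rule on the training points.
X = np.vstack([A, B])
y = np.concatenate([np.ones(20), -np.ones(20)])
d1 = np.abs(X @ u1[:-1] + u1[-1]) / np.linalg.norm(u1[:-1])
d2 = np.abs(X @ u2[:-1] + u2[-1]) / np.linalg.norm(u2[:-1])
accuracy = np.mean(np.where(d1 <= d2, 1, -1) == y)
```

Using `np.linalg.solve` rather than forming an explicit inverse is the usual choice for numerical stability; the system size is only $(n+1) \times (n+1)$.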

2.5. Capped $L_{2,p}$-norm

It is well known that the squared $L_2$-norm distance metric is used in most TSVM-related classifiers, but it is sensitive to outliers. Although the squared $L_2$-norm is differentiable, squaring amplifies the negative effect of outliers, which degrades the classification performance of the model. In contrast, the $L_{2,p}$-norm suppresses the negative effect of outliers better than the $L_1$-norm and the squared $L_2$-norm, and it includes other norms as special cases for particular values of $p$. By setting an appropriate $p$, the capped $L_{2,p}$-norm becomes more robust than the capped $L_1$-norm, and previous algorithms have shown that the capped $L_{2,p}$-norm is robust to Gaussian noise.

For any vector $x$, the $L_{2,p}$-norm and the capped $L_{2,p}$-norm are defined as:

where $\varepsilon > 0$ is the thresholding parameter.

From Figure 2, we can see that the capped $L_{2,p}$-norm is more robust than the other two norms, which improves the classification performance of the model.

Because the capped $L_{2,p}$-norm is highly robust, we introduce it into the model proposed in this paper to further improve the generalization and robustness of the TBSVM.
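To see numerically why a capped $L_{2,p}$ distance limits the pull of outliers, the sketch below compares it with the squared $L_2$ distance on residuals that include one outlier. It assumes that each sample's residual $r_i$ contributes $\min(\|r_i\|_2^p, \varepsilon)$; the values of $p$ and $\varepsilon$ and the function names are illustrative choices, not taken from the paper.

```python
import numpy as np


def capped_l2p(R, p=0.5, eps=1.0):
    """Sum over rows of min(||R_i||_2^p, eps); a 1-D input means one scalar residual per sample."""
    R = np.asarray(R, dtype=float)
    if R.ndim == 1:
        R = R[:, None]                           # one scalar residual per sample
    norms = np.linalg.norm(R, axis=1)
    return np.minimum(norms ** p, eps).sum()


def squared_l2(R):
    """Sum of squared L2 norms of the rows (the usual TSVM distance)."""
    R = np.asarray(R, dtype=float)
    if R.ndim == 1:
        R = R[:, None]
    return np.sum(np.linalg.norm(R, axis=1) ** 2)


residuals = np.array([0.1, 0.2, 50.0])           # the last sample is an outlier
sq = squared_l2(residuals)                       # 2500.05: dominated by the outlier
capped = capped_l2p(residuals, p=0.5, eps=1.0)   # about 1.76: the outlier adds at most eps
```

With $p = 1$ and no cap ($\varepsilon = \infty$), the same function reduces to the plain sum of absolute residuals, which is one way to see the capped $L_{2,p}$ distance as a family containing simpler norms.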

2.6. Welsch Regularization

In this paper, we focus on the Welsch loss function. It is a bounded, smooth, and non-convex loss that is highly robust to noise. It is defined as:

where $\sigma$ is a penalty parameter.

Figure 3 shows the Welsch loss function under different values of $\sigma$, ranging from 1 to 3 [23,26,27,28,29].

From Figure 3, we find that as $\sigma$ gradually increases, the upper bound of the Welsch loss function increases and the loss approaches this bound more slowly. Because the loss is bounded, the impact of noise on the model during training is limited. Consequently, the Welsch loss can further enhance the robustness of the model.
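A minimal sketch of the Welsch loss and of the weight it induces in a half-quadratic (HQ) scheme, the optimization approach mentioned in the conclusions. It assumes the common form $\ell_\sigma(x) = \frac{\sigma^2}{2}\left(1 - \exp\left(-\frac{x^2}{2\sigma^2}\right)\right)$, whose upper bound $\sigma^2/2$ grows with $\sigma$, consistent with the behavior described for Figure 3; the exact scaling used in the paper may differ. The weight $\exp(-x^2/(2\sigma^2))$ is proportional to $\ell_\sigma'(x)/x$, so large residuals are down-weighted toward zero in each reweighted step. The function names are hypothetical.

```python
import numpy as np


def welsch_loss(x, sigma):
    """Assumed Welsch loss: (sigma^2 / 2) * (1 - exp(-x^2 / (2 sigma^2)))."""
    x = np.asarray(x, dtype=float)
    return 0.5 * sigma**2 * (1.0 - np.exp(-x**2 / (2.0 * sigma**2)))


def hq_weight(x, sigma):
    """Half-quadratic weight, proportional to welsch_loss'(x) / x; equals 1 at x = 0."""
    x = np.asarray(x, dtype=float)
    return np.exp(-x**2 / (2.0 * sigma**2))


residuals = np.array([0.0, 0.5, 2.0, 100.0])    # the last residual acts as an outlier
# sigma from 1 to 3, as in Figure 3; the loss of any residual stays below sigma^2 / 2.
losses = {sigma: welsch_loss(residuals, sigma) for sigma in (1.0, 2.0, 3.0)}
weights = {sigma: hq_weight(residuals, sigma) for sigma in (1.0, 2.0, 3.0)}
```

In an HQ iteration, these weights would multiply the squared residuals of the next weighted least squares or QPP subproblem, which is how the bounded loss limits the influence of outliers.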

5. Conclusions and Future Directions

Focusing on the binary classification problem, this paper proposes a new TBSVM model, the WCTBSVM, which introduces a bounded, smooth, and non-convex Welsch loss term into the TBSVM model and uses the half-quadratic (HQ) optimization algorithm to iteratively optimize the relevant model variables and handle the Welsch loss term. Meanwhile, the capped $L_{2,p}$-norm distance is introduced into the TBSVM, resulting in the WCTBSVM model, which generalizes better and is more robust than the TBSVM, thus improving classification performance. To reduce the time and space complexity of the WCTBSVM, we obtained the FWCTBSVM using least squares, which improves the efficiency of the model while maintaining the performance advantages of the WCTBSVM.

Building on this theoretical foundation, we conducted accuracy experiments on UCI datasets and artificial datasets and found that the classification performance of the WCTBSVM model is indeed better than that of the TSVM, TBSVM, LSTSVM, and CTSVM. To further confirm the reliability of the WCTBSVM, we also performed a statistical test analysis, whose results again show that the WCTBSVM has superior classification performance. In future work, the model could be applied to semi-supervised learning and other classification tasks to further study its performance, and extending our method to multi-view learning and multi-instance learning is also worthy of further study. Developing fast algorithms for our method is likewise worth studying.