FSVM- and DAG-SVM-Based Fast CU-Partitioning Algorithm for VVC Intra-Coding

Abstract

1. Introduction

2. Related Works

2.1. Based on Traditional Algorithms

2.2. Machine Learning Based

3. Proposed Algorithm

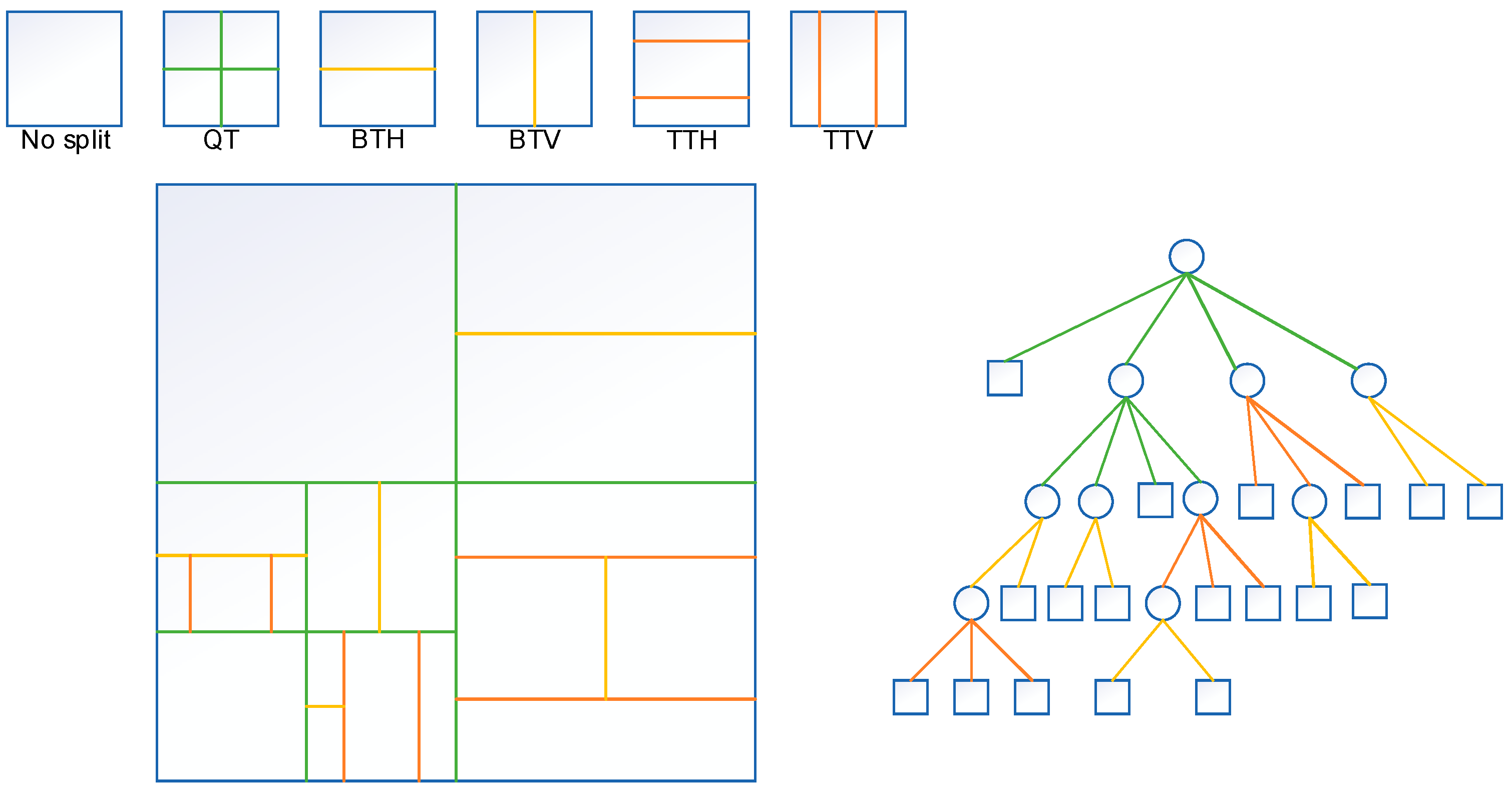

3.1. Statistical Analysis of QTMT Division Structure

3.2. Early Termination Algorithm Based on FSVMs

3.3. Fast CU Partitioning Based on DAG-SVMs

3.4. Overall Algorithm

4. Experimental Results

4.1. Analysis of Experimental Results

4.2. Comparison with Other Algorithms

4.3. Supplementary Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| VVC | Versatile Video Coding |
| HEVC | High-Efficiency Video Coding |
| CU | Coding Unit |
| FSVM | Fuzzy Support Vector Machine |
| DAG-SVM | Directed Acyclic Graph Support Vector Machine |
| CTU | Coding Tree Unit |
| MTT | Multi-Type Tree |
| QTMT | Quad-tree with Nested Multi-type Tree |
| RDO | Rate Distortion Optimization |
| QT | Quad Tree |
| BTH | Horizontal Binary Tree |
| BTV | Vertical Binary Tree |
| TTH | Horizontal Trinomial Tree |
| TTV | Vertical Trinomial Tree |
| QP | Quantization Parameter |
| TS | Time-Saving |
| BDBR | Bjøntegaard Delta Bit Rate |
| VTM | VVC Test Model |

References

1. Sullivan, G.J.; Ohm, J.R.; Han, W.J.; Wiegand, T. Overview of the High Efficiency Video Coding (HEVC) Standard. IEEE Trans. Circuits Syst. Video Technol. 2012, 22, 1649–1668.

2. Saldanha, M.; Sanchez, G.; Marcon, C.; Agostini, L. Complexity Analysis of VVC Intra Coding. In Proceedings of the 2020 IEEE International Conference on Image Processing (ICIP), Abu Dhabi, United Arab Emirates, 25–28 October 2020; pp. 3119–3123.

3. Werda, I.; Maraoui, A.; Sayadi, F.E.; Masmoudi, N. Fast CU Partition and Intra Mode Prediction Method for HEVC. In Proceedings of the 2022 IEEE 9th International Conference on Sciences of Electronics, Technologies of Information and Telecommunications (SETIT), Hammamet, Tunisia, 28–30 May 2022; pp. 562–566.

4. Huang, Y.; Xu, J.; Zhang, L.; Zhao, Y.; Song, L. Intra Encoding Complexity Control with a Time-Cost Model for Versatile Video Coding. In Proceedings of the 2022 IEEE International Symposium on Circuits and Systems (ISCAS), Austin, TX, USA, 27 May–1 June 2022; pp. 2027–2031.

5. Liu, X.; Li, Y.; Liu, D.; Wang, P.; Yang, L.T. An Adaptive CU Size Decision Algorithm for HEVC Intra Prediction Based on Complexity Classification Using Machine Learning. IEEE Trans. Circuits Syst. Video Technol. 2019, 29, 144–155.

6. Huang, Y.W.; Hsu, C.W.; Chen, C.Y.; Chuang, T.D.; Hsiang, S.T.; Chen, C.C.; Chiang, M.S.; Lai, C.Y.; Tsai, C.M.; Su, Y.C.; et al. A VVC Proposal with Quaternary Tree Plus Binary-Ternary Tree Coding Block Structure and Advanced Coding Techniques. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 1311–1325.

7. Islam, M.Z.; Ahmed, B. A Machine Learning Approach for Fast CU Partitioning and Time Complexity Reduction in Video Coding. In Proceedings of the 2021 5th International Conference on Electrical Information and Communication Technology (EICT), Khulna, Bangladesh, 17–19 December 2021; pp. 1–5.

8. Zhu, L.; Zhang, Y.; Kwong, S.; Wang, X.; Zhao, T. Fuzzy SVM-Based Coding Unit Decision in HEVC. IEEE Trans. Broadcast. 2018, 64, 681–694.

9. Fan, Y.; Sun, H.; Katto, J.; Ming’E, J. A Fast QTMT Partition Decision Strategy for VVC Intra Prediction. IEEE Access 2020, 8, 107900–107911.

10. Akbulut, O.; Konyar, M.Z. Improved Intra-Subpartition Coding Mode for Versatile Video Coding. Signal Image Video Process. 2022, 16, 1363–1368.

11. Liu, H.; Zhu, S.; Xiong, R.; Liu, G.; Zeng, B. Cross-Block Difference Guided Fast CU Partition for VVC Intra Coding. In Proceedings of the 2021 International Conference on Visual Communications and Image Processing (VCIP), Munich, Germany, 5–8 December 2021; pp. 1–5.

12. Li, Y.; Yang, G.; Song, Y.; Zhang, H.; Ding, X.; Zhang, D. Early Intra CU Size Decision for Versatile Video Coding Based on a Tunable Decision Model. IEEE Trans. Broadcast. 2021, 67, 710–720.

13. Zhang, Q.; Zhao, Y.; Jiang, B.; Huang, L.; Wei, T. Fast CU Partition Decision Method Based on Texture Characteristics for H.266/VVC. IEEE Access 2020, 8, 203516–203524.

14. Chen, J.; Sun, H.; Katto, J.; Zeng, X.; Fan, Y. Fast QTMT Partition Decision Algorithm in VVC Intra Coding Based on Variance and Gradient. In Proceedings of the 2019 IEEE Visual Communications and Image Processing (VCIP), Sydney, Australia, 1–4 December 2019; pp. 1–4.

15. Qian, X.; Zeng, Y.; Wang, W.; Zhang, Q. Co-Saliency Detection Guided by Group Weakly Supervised Learning. IEEE Trans. Multimed. 2022, 1.

16. Zhang, Q.; Guo, R.; Jiang, B.; Su, R. Fast CU Decision-Making Algorithm Based on DenseNet Network for VVC. IEEE Access 2021, 9, 119289–119297.

17. Zhang, Q.; Wang, Y.; Huang, L.; Jiang, B. Fast CU Partition and Intra Mode Decision Method for H.266/VVC. IEEE Access 2020, 8, 117539–117550.

18. Wu, G.; Huang, Y.; Zhu, C.; Song, L.; Zhang, W. SVM Based Fast CU Partitioning Algorithm for VVC Intra Coding. In Proceedings of the 2021 IEEE International Symposium on Circuits and Systems (ISCAS), Daegu, Korea, 22–28 May 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–5.

19. Quan, H.; Wu, W.; Luo, L.; Zhu, C.; Guo, H. Random Forest Based Fast CU Partition for VVC Intra Coding. In Proceedings of the 2021 IEEE International Symposium on Broadband Multimedia Systems and Broadcasting (BMSB), Chengdu, China, 22–28 May 2021; pp. 1–5.

20. Park, S.H.; Kang, J.W. Fast Multi-Type Tree Partitioning for Versatile Video Coding Using a Lightweight Neural Network. IEEE Trans. Multimed. 2021, 23, 4388–4399.

21. Shu, C.; Yang, C.; An, P. An Online SVM Based VVC Intra Fast Partition Algorithm With Pre-Scene-Cut Detection. In Proceedings of the 2022 IEEE International Symposium on Circuits and Systems (ISCAS), Austin, TX, USA, 27 May–1 June 2022; pp. 3033–3037.

22. Chen, M.J.; Lee, C.A.; Tsai, Y.H.; Yang, C.M.; Yeh, C.H.; Kau, L.J.; Chang, C.Y. Efficient Partition Decision Based on Visual Perception and Machine Learning for H.266/Versatile Video Coding. IEEE Access 2022, 10, 42141–42150.

23. Abdallah, B.; Belghith, F.; Ben Ayed, M.A.; Masmoudi, N. Low-Complexity QTMT Partition Based on Deep Neural Network for Versatile Video Coding. Signal Image Video Process. 2021, 15, 1153–1160.

24. Lin, T.L.; Jiang, H.Y.; Huang, J.Y.; Chang, P.C. Fast Intra Coding Unit Partition Decision in H.266/FVC Based on Spatial Features. J. Real-Time Image Process. 2020, 17, 493–510.

25. Bouaafia, S.; Khemiri, R.; Messaoud, S.; Ben Ahmed, O.; Sayadi, F.E. Deep Learning-Based Video Quality Enhancement for the New Versatile Video Coding. Neural Comput. Appl. 2022, 34, 14135–14149.

26. He, S.; Deng, Z.; Shi, C. Fast Decision Algorithm of CU Size for HEVC Intra-Prediction Based on a Kernel Fuzzy SVM Classifier. Electronics 2022, 11, 2791.

27. HoangVan, X.; NguyenQuang, S.; DinhBao, M.; DoNgoc, M.; Duong, D.T. Fast QTMT for H.266/VVC Intra Prediction Using Early-Terminated Hierarchical CNN Model. In Proceedings of the 2021 International Conference on Advanced Technologies for Communications (ATC), Ho Chi Minh City, Vietnam, 14–16 October 2021; pp. 195–200.

28. Li, T.; Xu, M.; Tang, R.; Chen, Y.; Xing, Q. DeepQTMT: A Deep Learning Approach for Fast QTMT-Based CU Partition of Intra-Mode VVC. IEEE Trans. Image Process. 2021, 30, 5377–5390.

29. Zhang, Q.; Wang, Y.; Huang, L.; Jiang, B.; Wang, X. Fast CU Partition Decision for H.266/VVC Based on the Improved DAG-SVM Classifier Model. Multimed. Syst. 2021, 27, 1–14.

30. Menon, V.V.; Amirpour, H.; Timmerer, C.; Ghanbari, M. INCEPT: Intra CU Depth Prediction for HEVC. In Proceedings of the 2021 IEEE 23rd International Workshop on Multimedia Signal Processing (MMSP), Tampere, Finland, 6–8 October 2021; pp. 1–6.

31. Tang, G.; Jing, M.; Zeng, X.; Fan, Y. Adaptive CU Split Decision with Pooling-Variable CNN for VVC Intra Encoding. In Proceedings of the 2019 IEEE Visual Communications and Image Processing (VCIP), Sydney, Australia, 1–4 December 2019; pp. 1–4.

32. Zhao, J.; Li, P.; Zhang, Q. A Fast Decision Algorithm for VVC Intra-Coding Based on Texture Feature and Machine Learning. Comput. Intel. Neurosc. 2022, 2022, 7675749.

33. Wang, Z.; Wang, S.; Zhang, J.; Wang, S.; Ma, S. Probabilistic Decision Based Block Partitioning for Future Video Coding. IEEE Trans. Image Process. 2018, 27, 1475–1486.

34. Yao, Y.; Wang, J.; Du, C.; Zhu, J.; Xu, X. A Support Vector Machine Based Fast Planar Prediction Mode Decision Algorithm for Versatile Video Coding. Multimed. Tools Appl. 2022, 81, 17205–17222.

35. Zhao, J.; Wu, A.; Zhang, Q. SVM-Based Fast CU Partition Decision Algorithm for VVC Intra Coding. Electronics 2022, 11, 2147.

36. Huang, Y.H.; Chen, J.J.; Tsai, Y.H. Speed up H.266/QTMT Intra-Coding Based on Predictions of ResNet and Random Forest Classifier. In Proceedings of the 2021 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 10–12 January 2021; pp. 1–6.

37. Ni, C.T.; Lin, S.H.; Chen, P.Y.; Chu, Y.T. High Efficiency Intra CU Partition and Mode Decision Method for VVC. IEEE Access 2022, 10, 77759–77771.

| Item | Specification |
|---|---|
| Hardware | |
| CPU | Intel(R) Core(TM) i7-11800H |
| RAM | 16 GB |
| OS | Microsoft Windows 10, 64-bit |
| Software | |
| Reference software | VTM Encoder Version 10.0 |
| Configuration | All Intra |
| QP | 22, 27, 32, 37 |

| Class | Test Sequence | Zhao [35] BDBR (%) | Zhao [35] TS (%) | Huang [36] BDBR (%) | Huang [36] TS (%) | Ni [37] BDBR (%) | Ni [37] TS (%) | Proposed BDBR (%) | Proposed TS (%) |
|---|---|---|---|---|---|---|---|---|---|
| Class A1 (4K) | Campfire | 1.37 | 56.37 | / | / | 0.53 | 52.57 | 1.25 | 57.16 |
| | Drums | / | / | 0.51 | 53.77 | / | / | 1.37 | 58.04 |
| | FoodMarket4 | 1.24 | 47.32 | / | / | 0.44 | 58.89 | 1.14 | 54.85 |
| Class A2 (4K) | DaylightRoad | / | / | 0.75 | 45.58 | / | / | 1.23 | 52.29 |
| | ParkRunning3 | 1.07 | 55.93 | / | / | 0.21 | 46.07 | 1.31 | 55.45 |
| | TrafficFlow | / | / | 1.21 | 53.42 | / | / | 0.92 | 54.39 |
| Class B (1920 × 1080) | BQTerrace | 1.23 | 55.37 | 0.56 | 33.13 | 0.46 | 48.03 | 1.03 | 54.98 |
| | Cactus | 2.07 | 51.37 | 0.63 | 37.53 | 0.55 | 46.12 | 1.28 | 56.81 |
| | Kimono | 1.31 | 54.68 | 0.41 | 53.01 | 0.40 | 55.41 | 1.36 | 57.47 |
| | MarketPlace | / | / | / | / | 0.30 | 46.15 | 0.91 | 51.89 |
| Class C (832 × 480) | BasketballDrill | 1.60 | 53.92 | 1.05 | 29.89 | 0.96 | 46.37 | 0.76 | 49.38 |
| | BQMall | 1.87 | 53.39 | 0.58 | 30.77 | 0.69 | 50.00 | 1.04 | 50.66 |
| | PartyScene | 0.87 | 52.74 | 0.13 | 28.03 | 0.42 | 46.01 | 0.85 | 55.92 |
| | RaceHorsesC | 1.25 | 51.07 | 0.63 | 32.28 | 0.47 | 48.66 | 1.09 | 56.24 |
| Class D (416 × 240) | BlowingBubbles | 1.57 | 51.33 | 0.13 | 22.08 | 0.51 | 45.16 | 0.93 | 53.61 |
| | BasketballPass | 1.53 | 53.96 | 0.52 | 27.03 | 0.70 | 50.50 | 0.94 | 52.43 |
| | BQSquare | 0.93 | 54.78 | 0.21 | 19.71 | / | / | 0.86 | 53.28 |
| | RaceHorses | 1.14 | 55.79 | 0.09 | 23.59 | 0.47 | 48.66 | 1.07 | 57.39 |
| Class E (1280 × 720) | FourPeople | 2.19 | 55.21 | 0.86 | 27.99 | 0.82 | 49.96 | 1.12 | 55.86 |
| | Johnny | 2.37 | 56.43 | 1.06 | 41.05 | 0.76 | 52.42 | 1.18 | 56.34 |
| | KristenAndSara | 1.88 | 55.46 | 0.86 | 35.71 | 0.77 | 48.40 | 0.79 | 49.81 |
| Average | | 1.50 | 53.83 | 0.60 | 34.97 | 0.56 | 49.38 | 1.07 | 54.49 |
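As a quick check on the Average row, the sketch below recomputes the means of the Proposed columns from the per-sequence values listed in the table. The `time_saving` helper uses the definition of TS that is common in fast-encoding papers (relative reduction in encoding time against the anchor encoder); this section does not state the formula, so that definition and all function and variable names are assumptions made for illustration.

```python
from statistics import mean


def time_saving(t_anchor: float, t_fast: float) -> float:
    """Assumed TS definition: relative encoding-time reduction in percent."""
    return (t_anchor - t_fast) / t_anchor * 100.0


# Per-sequence values of the Proposed columns, copied from the table
# in row order (Campfire ... KristenAndSara).
proposed_bdbr = [
    1.25, 1.37, 1.14, 1.23, 1.31, 0.92, 1.03, 1.28, 1.36, 0.91, 0.76,
    1.04, 0.85, 1.09, 0.93, 0.94, 0.86, 1.07, 1.12, 1.18, 0.79,
]
proposed_ts = [
    57.16, 58.04, 54.85, 52.29, 55.45, 54.39, 54.98, 56.81, 57.47, 51.89,
    49.38, 50.66, 55.92, 56.24, 53.61, 52.43, 53.28, 57.39, 55.86, 56.34,
    49.81,
]

# Arithmetic means rounded to two decimals, as in the Average row.
avg_bdbr = round(mean(proposed_bdbr), 2)
avg_ts = round(mean(proposed_ts), 2)

print(f"Proposed average BDBR: {avg_bdbr:.2f} %")
print(f"Proposed average TS:   {avg_ts:.2f} %")
```

Both recomputed means agree with the Average row (1.07 and 54.49). The columns for the other methods contain unreported entries ("/"), so their averages cover fewer sequences and are not directly comparable on a per-sequence basis.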

| Comparison | Method | Contribution |
|---|---|---|
| Comparison 1 | Zhao [35] | Two conventional SVM models decide whether the current CU is split and which partition type is used. The algorithm predicts the partition type for only six CU sizes, so its complexity reduction is limited, and a conventional SVM handles only binary classification. |
| | Proposed | FSVMs and DAG-SVMs decide, in two classification stages, whether the CU is split and which partition type is used, and they apply to CUs of various sizes. The FSVM introduces an information-entropy measure, which mitigates the poor noise tolerance and lower classification accuracy of conventional SVMs. Adding the class distance from cluster analysis to the DAG-SVM model addresses the multi-class problem of predicting the CU partition type and makes the classification more accurate. |
| Comparison 2 | Huang [36] | ResNet and a Random Forest classifier predict the depth and partition type of CUs. The method applies only to 32 × 32 CUs; the Random Forest is computationally expensive and the ResNet model is relatively complex, so the complexity reduction is limited. |
| | Proposed | The FSVM determines whether the CU is split, and the DAG-SVM determines the partition type. The method applies to CUs of various sizes, and the two classifiers are more flexible, giving more accurate classification results and a larger reduction in intra-coding complexity. |
| Comparison 3 | Ni [37] | A texture-analysis-based method decides between the TT and BT partitions only; the QT partition and the non-split case are not covered, so fewer partition types are handled. |
| | Proposed | The FSVM classifier first determines whether the CU needs to be split; the DAG-SVM classifier then determines the partition type for CUs that need to be split, covering all partition types. |
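The two-stage decision described in the table (a binary split/no-split classifier followed by a DAG of pairwise classifiers over the partition types) can be sketched as follows. This is a minimal illustration of the generic DAG-SVM elimination scheme, not the authors' implementation: the fuzzy membership weights, the information-entropy measure, and the class-distance ordering are not modeled, and the stand-in classifiers, feature vectors, prototypes, and all names (`dag_decide`, `decide_partition`, `make_toy_pairwise`) are hypothetical.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Features = Sequence[float]
# A binary classifier returns a signed score. For a pair (a, b),
# a positive score votes for class a, otherwise for class b.
Scorer = Callable[[Features], float]

PARTITION_TYPES: List[str] = ["QT", "BTH", "BTV", "TTH", "TTV"]


def dag_decide(x: Features, classes: Sequence[str],
               pairwise: Dict[Tuple[str, str], Scorer]) -> str:
    """Walk the decision DAG: each node compares the first and last
    remaining class and eliminates the loser (K - 1 evaluations)."""
    candidates = list(classes)
    while len(candidates) > 1:
        first, last = candidates[0], candidates[-1]
        if (first, last) in pairwise:
            score = pairwise[(first, last)](x)
        else:
            score = -pairwise[(last, first)](x)
        if score > 0:
            candidates.pop()      # 'last' is eliminated
        else:
            candidates.pop(0)     # 'first' is eliminated
    return candidates[0]


def decide_partition(x: Features, split_classifier: Scorer,
                     pairwise: Dict[Tuple[str, str], Scorer]) -> str:
    """Stage 1: split or not. Stage 2: partition type via the DAG."""
    if split_classifier(x) <= 0:
        return "NO_SPLIT"
    return dag_decide(x, PARTITION_TYPES, pairwise)


def make_toy_pairwise(
        prototypes: Dict[str, Features]) -> Dict[Tuple[str, str], Scorer]:
    """Hypothetical stand-in for trained SVMs: the pair (a, b) votes
    for whichever class prototype is closer to the feature vector."""
    def build(a: str, b: str) -> Scorer:
        def score(x: Features) -> float:
            da = sum((xi - pi) ** 2 for xi, pi in zip(x, prototypes[a]))
            db = sum((xi - pi) ** 2 for xi, pi in zip(x, prototypes[b]))
            return db - da
        return score
    names = list(prototypes)
    return {(a, b): build(a, b)
            for i, a in enumerate(names) for b in names[i + 1:]}


# Toy 2-D prototypes and threshold, chosen only to make the demo run.
toy_prototypes = {"QT": (1.0, 1.0), "BTH": (1.0, 0.0), "BTV": (0.0, 1.0),
                  "TTH": (2.0, 0.0), "TTV": (0.0, 2.0)}
toy_pairwise = make_toy_pairwise(toy_prototypes)


def toy_split(x: Features) -> float:
    return sum(x) - 0.5


print(decide_partition((0.9, 0.1), toy_split, toy_pairwise))
print(decide_partition((0.1, 0.1), toy_split, toy_pairwise))
```

With five partition types the DAG needs ten pairwise classifiers but evaluates only four of them per CU, which is why this structure is attractive when the classifier itself must stay cheap relative to the RDO search it replaces.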

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, F.; Wang, Z.; Zhang, Q. FSVM- and DAG-SVM-Based Fast CU-Partitioning Algorithm for VVC Intra-Coding. Symmetry 2023, 15, 1078. https://doi.org/10.3390/sym15051078
