Image Denoising Method Relying on Iterative Adaptive Weight-Mean Filtering

Abstract

1. Introduction

2. A Summary of Some Well-Known Fractional Operators

- The Liouville–Caputo derivative [53]:

- The Caputo–Fabrizio derivative [54]:

- The Atangana–Baleanu fractional derivative in the Caputo sense [55]: where $E_{\alpha}$ stands for the well-known Mittag–Leffler function given by $E_{\alpha}(z) = \sum_{k=0}^{\infty} \frac{z^{k}}{\Gamma(\alpha k + 1)}$.

- The Atangana–Baleanu fractional integral in the Caputo sense [55]: where $B(\alpha)$ is a normalization function defined by $B(\alpha) = 1 - \alpha + \frac{\alpha}{\Gamma(\alpha)}$.
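For reference, the four operators listed above are commonly written in the literature as shown below. This is a sketch in one standard notation, which is an assumption here: lower terminal $a$, order $0 < \alpha < 1$, normalization functions $M(\alpha)$ with $M(0) = M(1) = 1$ and $B(\alpha)$, and $E_{\alpha}$ for the Mittag–Leffler function. The symbols and equation numbers in the original displays may differ.

```latex
\begin{align*}
{}^{C}_{a}D_{t}^{\alpha} f(t) &= \frac{1}{\Gamma(1-\alpha)} \int_{a}^{t} \frac{f'(\tau)}{(t-\tau)^{\alpha}} \, d\tau, \\
{}^{CF}_{a}D_{t}^{\alpha} f(t) &= \frac{M(\alpha)}{1-\alpha} \int_{a}^{t} f'(\tau) \exp\!\left( -\frac{\alpha (t-\tau)}{1-\alpha} \right) d\tau, \\
{}^{ABC}_{a}D_{t}^{\alpha} f(t) &= \frac{B(\alpha)}{1-\alpha} \int_{a}^{t} f'(\tau) \, E_{\alpha}\!\left( -\frac{\alpha (t-\tau)^{\alpha}}{1-\alpha} \right) d\tau, \\
{}^{AB}_{a}I_{t}^{\alpha} f(t) &= \frac{1-\alpha}{B(\alpha)} f(t) + \frac{\alpha}{B(\alpha)\,\Gamma(\alpha)} \int_{a}^{t} f(\tau) (t-\tau)^{\alpha-1} \, d\tau.
\end{align*}
```

The last line shows why the AB integral suits mask construction: it is a weighted sum of the function itself and a Riemann–Liouville-type integral, so any discretization of that integral yields a set of weights on neighbouring samples.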

3. An Overview of the Atangana–Baleanu Fractional Masks

- For an fractional integral mask, we introduce the following symmetric window mask

- For an fractional integral mask, we construct the following symmetric integral mask

- In addition, for an fractional mask, the following symmetric structure is considered

- For an fractional mask, the following symmetric window mask is proposed

- Moreover, an fractional mask can be constructed similarly in a symmetric form as
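To make the idea of a symmetric window mask concrete, the following Python sketch builds a square mask of odd size from a short list of coefficients. The ring layout (every cell at the same Chebyshev distance from the centre shares one coefficient), the normalization to unit sum, the function name `symmetric_mask`, and the sample coefficients are all illustrative assumptions; the exact placement of coefficients in the masks of this section may differ.

```python
import numpy as np

def symmetric_mask(coeffs):
    """Build a (2n+1) x (2n+1) mask from ring coefficients c_0..c_n.

    Cell (i, j) receives coeffs[k], where k = max(|i - n|, |j - n|) is its
    Chebyshev distance to the centre. This layout is an assumption made for
    illustration only.
    """
    coeffs = np.asarray(coeffs, dtype=float)
    n = len(coeffs) - 1
    offsets = np.abs(np.arange(-n, n + 1))
    # ring[i, j] = distance of cell (i, j) from the centre cell
    ring = np.maximum(offsets[:, None], offsets[None, :])
    mask = coeffs[ring]
    return mask / mask.sum()  # normalize so the weights sum to 1

# Hypothetical coefficients giving a 5 x 5 mask
mask = symmetric_mask([4.0, 2.0, 1.0])
```

A mask built this way is unchanged by transposition and by horizontal or vertical flips, which is the symmetry property the masks above rely on.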

4. Some Discretizations in Determining the Approximation of the AB Integral Operator

4.1. Fractional Mask Based on the Grünwald–Letnikov Idea (AB1)

- Using Equation (7) with , the corresponding integral definition of Grünwald–Letnikov is obtained as

- Using coefficients in Equation (13), the so-called fractional AB1 masks of different sizes including , can be characterized.
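One way such coefficients can arise is sketched below in Python. It assumes the standard form of the AB integral, $\frac{1-\alpha}{B(\alpha)} f(t) + \frac{\alpha}{B(\alpha)} I^{\alpha} f(t)$ with $B(\alpha) = 1 - \alpha + \alpha/\Gamma(\alpha)$, and approximates the Riemann–Liouville part $I^{\alpha}$ by the classical Grünwald–Letnikov sum $h^{\alpha} \sum_{k} w_k f(t - kh)$ with $w_0 = 1$ and $w_k = w_{k-1}(k - 1 + \alpha)/k$. The function name `ab1_coefficients` and the step size `h` are hypothetical, and the result is not guaranteed to coincide with Equation (13).

```python
import math

def ab1_coefficients(alpha, h, n):
    """Return c_0..c_n such that I^alpha f(t) ~ sum_k c_k * f(t - k*h).

    Sketch under stated assumptions: AB integral in its standard form,
    B(alpha) = 1 - alpha + alpha / Gamma(alpha), and Grünwald–Letnikov
    weights for the Riemann–Liouville integral of order alpha (0 < alpha <= 1).
    """
    B = 1.0 - alpha + alpha / math.gamma(alpha)
    # Grünwald–Letnikov weights: w_k = Gamma(k + alpha) / (Gamma(alpha) * k!)
    w = [1.0]
    for k in range(1, n + 1):
        w.append(w[-1] * (k - 1 + alpha) / k)
    c = [alpha * h**alpha * wk / B for wk in w]
    c[0] += (1.0 - alpha) / B  # local term (1 - alpha) / B(alpha) * f(t)
    return c

coeffs = ab1_coefficients(alpha=0.5, h=1.0, n=3)
```

For `alpha = 1` every weight equals 1 and `B` equals 1, so the coefficients reduce to the rectangle rule `[h, h, ..., h]`, which is a quick sanity check on the construction.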

4.2. Fractional Mask Based on the Toufik–Atangana Idea (AB2)

4.3. Fractional Mask Based on Euler’s Method Idea (AB3)

4.4. Fractional Mask Based on the Middle Point Idea (AB4)

5. The Main Algorithm of the Paper

- Considering the above symbols and definitions, the main denoising algorithm in this paper (Algorithm 1) is presented as follows

Algorithm 1. The algorithm of the Atangana–Baleanu iterative adaptive mean filter.

Input: a noisy image C.

Output: a denoised image D.

- Step 1. Obtain a noisy image matrix where .
- Step 2. Change the format of matrix C from uint8 to double if needed.
- Repeat
  - Step 3. Set D := C.
  - Step 4. For p from 5 to 1
    - Construct the binary matrix of C.
    - Construct and .
    - For
      - For
        - If
          - For r from 1 to p
            - If
              - Construct .
              - Construct .
              - Break
            - End If
          - End For
        - End If
      - End For
    - End For
- Until .
- Step 5. D is the denoised image matrix.
- Step 6. Change the format of matrix D from double to uint8.
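The control flow of Algorithm 1 can be illustrated with a minimal Python sketch. It assumes salt-and-pepper noise (corrupted pixels equal to 0 or 255) on a 2-D grayscale image, and it swaps the Atangana–Baleanu weighted mean for a plain mean of the noise-free neighbours, so it is a simplified relative of the algorithm rather than the authors' implementation. The function names and the parameters `max_radius`, `tol` and `max_iter` are hypothetical.

```python
import numpy as np

def adaptive_mean_pass(img, max_radius=5):
    """Replace each noisy pixel by the mean of the noise-free pixels found in
    the smallest window (radius 1..max_radius) that contains at least one."""
    clean = (img != 0) & (img != 255)  # binary matrix: True = presumed noise-free
    out = img.copy()
    rows, cols = img.shape
    for i, j in zip(*np.nonzero(~clean)):
        for r in range(1, max_radius + 1):
            i0, i1 = max(i - r, 0), min(i + r + 1, rows)
            j0, j1 = max(j - r, 0), min(j + r + 1, cols)
            good = clean[i0:i1, j0:j1]
            if good.any():
                # Plain mean here; a mask-weighted mean could be used instead.
                out[i, j] = img[i0:i1, j0:j1][good].mean()
                break
    return out

def iterative_adaptive_mean_filter(noisy, max_radius=5, tol=1e-3, max_iter=50):
    """Repeat the adaptive pass until the image stops changing."""
    c = noisy.astype(np.float64)  # uint8 -> double
    for _ in range(max_iter):
        d = c
        c = adaptive_mean_pass(d, max_radius)
        if np.abs(c - d).max() <= tol:
            break
    return np.clip(np.rint(c), 0, 255).astype(np.uint8)  # double -> uint8

# Usage on a synthetic flat image with artificial salt-and-pepper corruption
image = np.full((16, 16), 100, dtype=np.uint8)
noisy = image.copy()
noisy[::3, ::2] = 0
noisy[1::4, 1::3] = 255
restored = iterative_adaptive_mean_filter(noisy)
```

Pixels repaired in one pass count as noise-free in the next, so regions too heavily corrupted for the largest window are filled in progressively from their borders. This is the motivation for wrapping the pass in a repeat-until loop.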

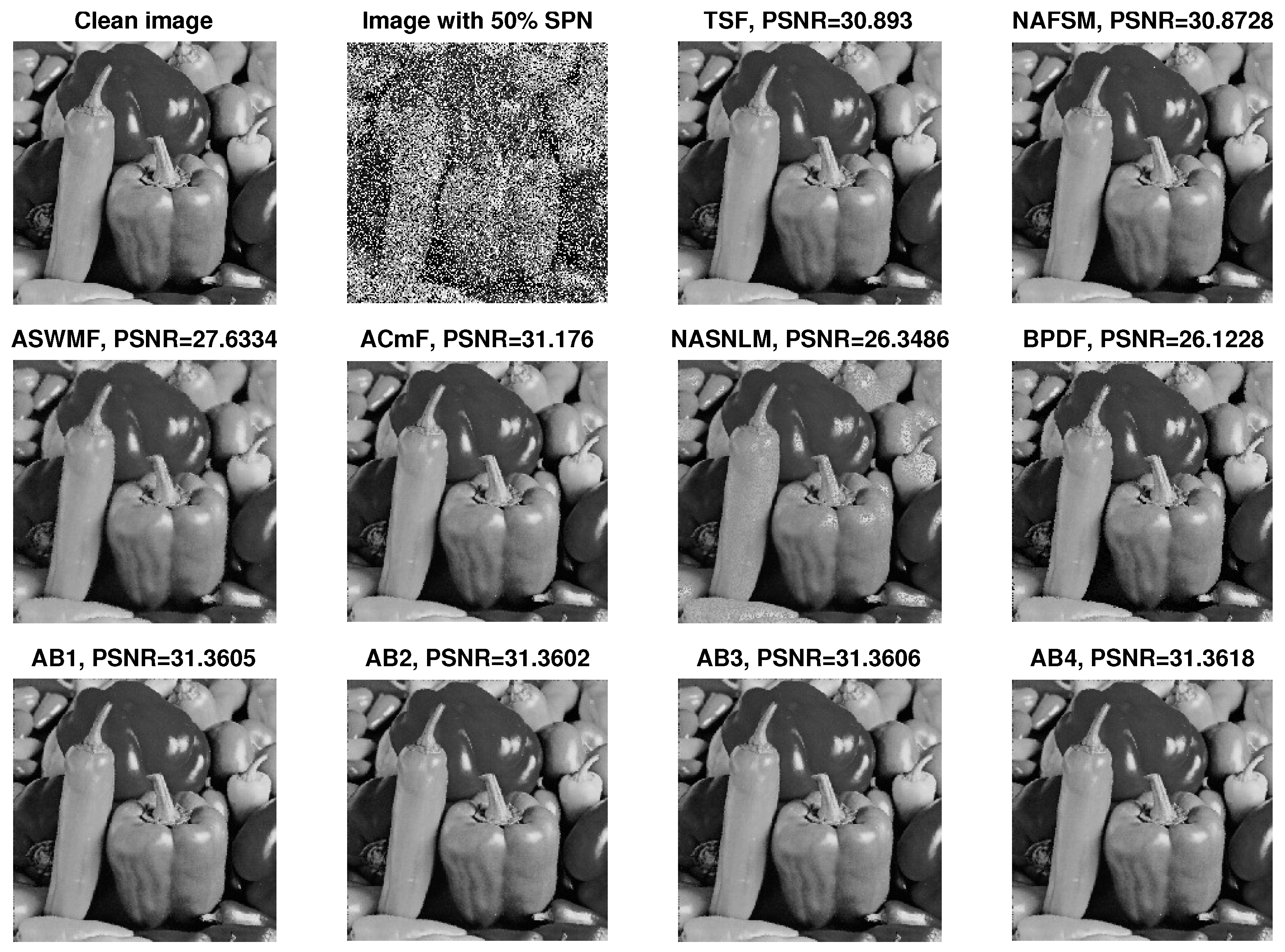

6. Discussion

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Song, F.; Liu, Y.; Shen, D.; Li, L.; Tan, J. Learning Control for Motion Coordination in Wafer Scanners: Toward Gain Adaptation. IEEE Trans. Ind. Electron. 2022, 69, 13428–13438.

2. Meng, Q.; Lai, X.; Yan, Z.; Su, C.Y.; Wu, M. Motion planning and adaptive neural tracking control of an uncertain two-link rigid–flexible manipulator with vibration amplitude constraint. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 3814–3828.

3. Wang, H.; Zhang, X.; Jiang, S. A Laboratory and Field Universal Estimation Method for Tire–Pavement Interaction Noise (TPIN) Based on 3D Image Technology. Sustainability 2022, 14, 12066.

4. Zhao, C.; Cheung, C.F.; Xu, P. High-efficiency sub-microscale uncertainty measurement method using pattern recognition. ISA Trans. 2020, 101, 503–514.

5. Ghanbari, B.; Baleanu, D. Applications of two novel techniques in finding optical soliton solutions of modified nonlinear Schrödinger equations. Results Phys. 2022, 44, 106171.

6. Zeng, Q.; Bie, B.; Guo, Q.; Yuan, Y.; Han, Q.; Han, X.; Chen, M.; Zhang, X.; Yang, Y.; Liu, M.; et al. Hyperpolarized Xe NMR signal advancement by metal-organic framework entrapment in aqueous solution. Proc. Natl. Acad. Sci. USA 2020, 117, 17558–17563.

7. Lin, X.; Wen, Y.; Yu, R.; Yu, J.; Wen, H. Improved Weak Grids Synchronization Unit for Passivity Enhancement of Grid-Connected Inverter. IEEE J. Emerg. Sel. Top. Power Electron. 2022, 10, 7084–7097.

8. Wang, F.; Wang, H.; Zhou, X.; Fu, R. A Driving Fatigue Feature Detection Method Based on Multifractal Theory. IEEE Sens. J. 2022, 22, 19046–19059.

9. Cao, K.; Wang, B.; Ding, H.; Lv, L.; Dong, R.; Cheng, T.; Gong, F. Improving physical layer security of uplink NOMA via energy harvesting jammers. IEEE Trans. Inf. Forensics Secur. 2020, 16, 786–799.

10. Zhuo, Z.; Du, L.; Lu, X.; Chen, J.; Cao, Z. Smoothed Lv distribution based three-dimensional imaging for spinning space debris. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–3.

11. Sun, L.; Hou, J.; Xing, C.; Fang, Z. A robust Hammerstein-Wiener model identification method for highly nonlinear systems. Processes 2022, 10, 2664.

12. Hu, J.; Wu, Y.; Li, T.; Ghosh, B.K. Consensus control of general linear multiagent systems with antagonistic interactions and communication noises. IEEE Trans. Autom. Control 2018, 64, 2122–2127.

13. Zhong, T.; Wang, W.; Lu, S.; Dong, X.; Yang, B. RMCHN: A Residual Modular Cascaded Heterogeneous Network for Noise Suppression in DAS-VSP Records. IEEE Geosci. Remote Sens. Lett. 2023, 20, 7500205.

14. Zhou, G.; Yang, F.; Xiao, J. Study on pixel entanglement theory for imagery classification. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5409518.

15. Huppert, A.; Katriel, G. Mathematical modelling and prediction in infectious disease epidemiology. Clin. Microbiol. Infect. 2022, 19, 999–1005.

16. Djilali, S.; Ghanbari, B. Dynamical behavior of two predators–one prey model with generalized functional response and time-fractional derivative. Adv. Differ. Equ. 2021, 2021, 235.

17. Fan, X.; Wei, G.; Lin, X.; Wang, X.; Si, Z.; Zhang, X.; Shao, Q.; Mangin, S.; Fullerton, E.; Jiang, L.; et al. Reversible switching of interlayer exchange coupling through atomically thin VO2 via electronic state modulation. Matter 2020, 2, 1582–1593.

18. Baleanu, D.; Jajarmi, A.; Mohammadi, H.; Rezapour, S. A new study on the mathematical modelling of human liver with Caputo–Fabrizio fractional derivative. Chaos Solitons Fractals 2020, 134, 109705.

19. Wang, G.; Zhao, B.; Wu, B.; Wang, M.; Liu, W.; Zhou, H.; Zhang, C.; Wang, Y.; Han, Y.; Xu, X. Research on the macro-mesoscopic response mechanism of multisphere approximated heteromorphic tailing particles. Lithosphere 2022, 2022, 1977890.

20. Defterli, O.; Baleanu, D.; Jajarmi, A.; Sajjadi, S.S.; Alshaikh, N.; Asad, J.H. Fractional treatment: An accelerated mass-spring system. Rom. Rep. Phys. 2022, 74, 122.

21. Xu, S.; Dai, H.; Feng, L.; Chen, H.; Chai, Y.; Zheng, W.X. Fault Estimation for Switched Interconnected Nonlinear Systems with External Disturbances via Variable Weighted Iterative Learning. IEEE Trans. Circuits Syst. II Express Briefs 2023, 70, 2011–2015.

22. Bai, X.; Shi, H.; Zhang, K.; Zhang, X.; Wu, Y. Effect of the fit clearance between ceramic outer ring and steel pedestal on the sound radiation of full ceramic ball bearing system. J. Sound Vib. 2022, 529, 116967.

23. Huang, N.; Chen, Q.; Cai, G.; Xu, D.; Zhang, L.; Zhao, W. Fault diagnosis of bearing in wind turbine gearbox under actual operating conditions driven by limited data with noise labels. IEEE Trans. Instrum. Meas. 2021, 70, 3502510.

24. Ghanbari, B.; Atangana, A. A new application of fractional Atangana–Baleanu derivatives: Designing ABC-fractional masks in image processing. Phys. A Stat. Mech. Appl. 2020, 542, 123516.

25. Zhang, Z.; Wang, L.; Zheng, W.; Yin, L.; Hu, R.; Yang, B. Endoscope image mosaic based on pyramid ORB. Biomed. Signal Process. Control 2022, 71, 103261.

26. Liu, Y.; Tian, J.; Hu, R.; Yang, B.; Liu, S.; Yin, L.; Zheng, W. Improved feature point pair purification algorithm based on SIFT during endoscope image stitching. Front. Neurorobotics 2022, 16, 840594.

27. Ghanbari, B.; Atangana, A. Some new edge detecting techniques based on fractional derivatives with non-local and non-singular kernels. Adv. Differ. Equ. 2020, 2020, 435.

28. Liu, S.; Yang, B.; Wang, Y.; Tian, J.; Yin, L.; Zheng, W. 2D/3D multimode medical image registration based on normalized cross-correlation. Appl. Sci. 2022, 12, 2828.

29. Cao, Z.; Wang, Y.; Zheng, W.; Yin, L.; Tang, Y.; Miao, W.; Liu, S.; Yang, B. The algorithm of stereo vision and shape from shading based on endoscope imaging. Biomed. Signal Process. Control 2022, 76, 103658.

30. Ban, Y.; Liu, M.; Wu, P.; Yang, B.; Liu, S.; Yin, L.; Zheng, W. Depth estimation method for monocular camera defocus images in microscopic scenes. Electronics 2022, 11, 2012.

31. Wang, S.; Sheng, H.; Zhang, Y.; Yang, D.; Shen, J.; Chen, R. Blockchain-Empowered Distributed Multi-Camera Multi-Target Tracking in Edge Computing. IEEE Trans. Ind. Inform. 2023.

32. Zhou, W.; Lv, Y.; Lei, J.; Yu, L. Global and local-contrast guides content-aware fusion for RGB-D saliency prediction. IEEE Trans. Syst. Man Cybern. Syst. 2019, 51, 3641–3649.

33. Zhou, W.; Yu, L.; Zhou, Y.; Qiu, W.; Wu, M.W.; Luo, T. Local and global feature learning for blind quality evaluation of screen content and natural scene images. IEEE Trans. Image Process. 2018, 27, 2086–2095.

34. Yang, M.; Wang, H.; Hu, K.; Yin, G.; Wei, Z. IA-Net: An Inception–Attention-Module-Based Network for Classifying Underwater Images from Others. IEEE J. Ocean. Eng. 2022, 47, 704–717.

35. Zhou, G.; Bao, X.; Ye, S.; Wang, H.; Yan, H. Selection of optimal building facade texture images from UAV-based multiple oblique image flows. IEEE Trans. Geosci. Remote Sens. 2020, 59, 1534–1552.

36. Liu, R.; Wang, X.; Lu, H.; Wu, Z.; Fan, Q.; Li, S.; Jin, X. SCCGAN: Style and characters inpainting based on CGAN. Mob. Netw. Appl. 2021, 26, 3–12.

37. Buades, A.; Coll, B.; Morel, J.M. A review of image denoising algorithms, with a new one. Multiscale Model. Simul. 2005, 4, 490–530.

38. Huang, J.; Zhao, Z.; Ren, C.; Teng, Q.; He, X. A prior-guided deep network for real image denoising and its applications. Knowl. Based Syst. 2022, 255, 109776.

39. Liang, H.; Li, N.; Zhao, S. Salt and Pepper Noise Removal Method Based on a Detail-Aware Filter. Symmetry 2021, 13, 515.

40. Li, M.; Cai, G.; Bi, S.; Zhang, X. Improved TV Image Denoising over Inverse Gradient. Symmetry 2023, 15, 678.

41. Al-Shamasneh, A.R.; Ibrahim, R.W. Image Denoising Based on Quantum Calculus of Local Fractional Entropy. Symmetry 2023, 15, 396.

42. Zhou, G.; Song, B.; Liang, P.; Xu, J.; Yue, T. Voids filling of DEM with multiattention generative adversarial network model. Remote Sens. 2022, 14, 1206.

43. Tian, C.; Fei, L.; Zheng, W.; Xu, Y.; Zuo, W.; Lin, C.W. Deep learning on image denoising: An overview. Neural Netw. 2020, 131, 251–275.

44. Ilesanmi, A.E.; Ilesanmi, T.O. Methods for image denoising using convolutional neural network: A review. Complex Intell. Syst. 2021, 7, 2179–2198.

45. Zhong, Y.; Liu, L.; Zhao, D.; Li, H. A generative adversarial network for image denoising. Multimed. Tools Appl. 2020, 79, 16517–16529.

46. Rahman, S.M.; Hasan, M.K. Wavelet-domain iterative center weighted median filter for image denoising. Signal Process. 2003, 83, 1001–1012.

47. Thanh, D.N.; Enginoğlu, S. An iterative mean filter for image denoising. IEEE Access 2019, 7, 167847–167859.

48. Feng, Y.; Zhang, B.; Liu, Y.; Niu, Z.; Fan, Y.; Chen, X. A D-band manifold triplexer with high isolation utilizing novel waveguide dual-mode filters. IEEE Trans. Terahertz Sci. Technol. 2022, 12, 678–688.

49. Xu, K.D.; Guo, Y.J.; Liu, Y.; Deng, X.; Chen, Q.; Ma, Z. 60-GHz compact dual-mode on-chip bandpass filter using GaAs technology. IEEE Electron Device Lett. 2021, 42, 1120–1123.

50. Xu, B.; Guo, Y. A novel DVL calibration method based on robust invariant extended Kalman filter. IEEE Trans. Veh. Technol. 2022, 71, 9422–9434.

51. Xu, B.; Wang, X.; Zhang, J.; Guo, Y.; Razzaqi, A.A. A novel adaptive filtering for cooperative localization under compass failure and non-Gaussian noise. IEEE Trans. Veh. Technol. 2022, 71, 3737–3749.

52. Chen, Z.; Zhou, Z.; Adnan, S. Joint low-rank prior and difference of Gaussian filter for magnetic resonance image denoising. Med. Biol. Eng. Comput. 2021, 59, 607–620.

53. Samko, G.; Kilbas, A.A.; Marichev, O.I. Fractional Integrals and Derivatives: Theory and Applications; Gordon and Breach: Yverdon, Switzerland, 1993.

54. Caputo, M.; Fabrizio, M. A new definition of fractional derivative without singular kernel. Prog. Fract. Differ. Appl. 2015, 1, 73–85.

55. Atangana, A.; Baleanu, D. New fractional derivatives with non-local and non-singular kernel: Theory and application to heat transfer model. Therm. Sci. 2016, 20, 763–769.

56. Huading, J.; Pu, Y. Fractional calculus method for enhancing digital image of bank slip. Proc. Congr. Image Signal Process. 2008, 3, 326–330.

57. Toufik, M.; Atangana, A. New numerical approximation of fractional derivative with non-local and non-singular kernel: Application to chaotic models. Eur. Phys. J. Plus 2017, 132, 444.

58. Li, C.; Zeng, F. The finite difference methods for fractional ordinary differential equations. Num. Funct. Anal. Opt. 2013, 34, 149–179.

59. Pu, Y.F.; Zhou, J.L.; Yuan, X. Fractional differential mask: A fractional differential-based approach for multiscale texture enhancement. IEEE Trans. Image Process. 2010, 19, 491–511.

60. Pu, Y.F.; Zhou, J.L. Adaptive Cesáro mean filter for salt-and-pepper noise removal. El-Cezeri 2020, 7, 304–314.

| Noise | Noisy | TSF | NAFSM | ASWMF | ACmF | NASNLM | BPDF | AB1 | AB2 | AB3 | AB4 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 10% | 1847 | 5.499 | 5.520 | 8.874 | 5.760 | 101.134 | 7.715 | 5.302 | 5.302 | 5.301 | 5.303 |
| 30% | 5521 | 18.480 | 18.506 | 27.718 | 18.409 | 251.650 | 30.209 | 16.946 | 16.946 | 16.948 | 16.948 |
| 50% | 9235 | 36.470 | 36.897 | 55.834 | 35.772 | 261.184 | 88.470 | 33.260 | 33.260 | 33.260 | 33.256 |
| 70% | 12,867 | 64.135 | 67.713 | 112.755 | 63.441 | 123.526 | 292.682 | 61.302 | 61.298 | 61.305 | 61.298 |
| 90% | 16,595 | 126.05 | 231.62 | 380.34 | 129.49 | 109.80 | 5203.18 | 127.87 | 127.87 | 127.87 | 127.88 |

| Noise | Noisy | TSF | NAFSM | ASWMF | ACmF | NASNLM | BPDF | AB1 | AB2 | AB3 | AB4 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 10% | 0.171 | 0.975 | 0.975 | 0.972 | 0.974 7 | 0.806 | 0.969 | 0.976 | 0.976 | 0.976 | 0.976 |
| 30% | 0.047 | 0.919 | 0.919 | 0.912 | 0.918 | 0.726 | 0.899 | 0.924 | 0.924 | 0.924 | 0.9248 |
| 50% | 0.022 | 0.848 | 0.848 | 0.837 | 0.847 | 0.684 | 0.804 | 0.857 | 0.857 | 0.857 | 0.857 |
| 70% | 0.011 | 0.757 | 0.753 | 0.735 | 0.756 | 0.699 | 0.641 | 0.763 | 0.763 | 0.763 | 0.763 |
| 90% | 0.005 | 0.640 | 0.580 | 0.558 | 0.630 | 0.692 | 0.243 | 0.632 | 0.632 | 0.632 | 0.632 |

| Noise | Noisy | TSF | NAFSM | ASWMF | ACmF | NASNLM | BPDF | AB1 | AB2 | AB3 | AB4 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 10% | 1999 | 4.522 | 4.610 | 14.335 | 5.518 | 47.783 | 9.694 | 5.518 | 5.516 | 5.519 | 5.519 |
| 30% | 6011 | 17.312 | 17.442 | 45.082 | 17.942 | 106.736 | 42.410 | 17.328 | 17.329 | 17.325 | 17.325 |
| 50% | 10,102 | 43.700 | 43.642 | 89.646 | 40.793 | 121.435 | 124.066 | 39.049 | 39.052 | 39.046 | 39.053 |
| 70% | 14,039 | 87.155 | 90.318 | 179.179 | 82.002 | 95.531 | 433.330 | 80.039 | 80.039 | 80.038 | 80.036 |
| 90% | 18,066 | 201.78 | 327.27 | 605.09 | 200.25 | 190.14 | 8084.16 | 197.85 | 197.85 | 197.84 | 197.84 |

| Noise | Noisy | TSF | NAFSM | ASWMF | ACmF | NASNLM | BPDF | AB1 | AB2 | AB3 | AB4 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 10% | 0.173 | 0.987 | 0.986 | 0.977 | 0.987 | 0.882 | 0.981 | 0.987 | 0.987 | 0.987 | 0.987 |
| 30% | 0.058 | 0.942 | 0.941 | 0.899 | 0.945 | 0.831 | 0.909 | 0.945 | 0.945 | 0.945 | 0.945 |
| 50% | 0.028 | 0.886 | 0.886 | 0.816 | 0.893 | 0.772 | 0.806 | 0.895 | 0.895 | 0.895 | 0.895 |
| 70% | 0.012 | 0.856 | 0.852 | 0.764 | 0.858 | 0.827 | 0.687 | 0.861 | 0.861 | 0.861 | 0.861 |
| 90% | 0.005 | 0.751 | 0.682 | 0.572 | 0.746 | 0.784 | 0.190 | 0.748 | 0.748 | 0.748 | 0.748 |

| Noise | Noisy | TSF | NAFSM | ASWMF | ACmF | NASNLM | BPDF | AB1 | AB2 | AB3 | AB4 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 10% | 1865 | 6.176 | 6.206 | 14.002 | 5.953 | 68.955 | 10.217 | 6.391 | 6.389 | 6.391 | 6.390 |
| 30% | 5711 | 24.211 | 24.248 | 45.786 | 21.818 | 190.735 | 42.992 | 22.209 | 22.213 | 22.209 | 22.211 |
| 50% | 9421 | 48.769 | 48.918 | 83.942 | 43.474 | 221.964 | 107.896 | 42.887 | 42.887 | 42.890 | 42.895 |
| 70% | 13,222 | 88.111 | 91.346 | 145.301 | 82.937 | 135.603 | 301.688 | 81.513 | 81.507 | 81.515 | 81.507 |
| 90% | 17,026 | 187.60 | 310.07 | 377.12 | 190.02 | 182.89 | 2695.97 | 188.13 | 188.13 | 188.14 | 188.12 |

| Noise | Noisy | TSF | NAFSM | ASWMF | ACmF | NASNLM | BPDF | AB1 | AB2 | AB3 | AB4 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 10% | 0.207 | 0.984 | 0.983 | 0.969 | 0.984 | 0.895 | 0.976 | 0.984 | 0.984 | 0.984 | 0.984 |
| 30% | 0.028 | 0.886 | 0.886 | 0.816 | 0.893 | 0.772 | 0.806 | 0.895 | 0.895 | 0.895 | 0.895 |
| 50% | 0.014 | 0.806 | 0.802 | 0.702 | 0.812 | 0.727 | 0.619 | 0.814 | 0.814 | 0.814 | 0.814 |
| 70% | 0.014 | 0.806 | 0.802 | 0.702 | 0.812 | 0.727 | 0.619 | 0.814 | 0.814 | 0.814 | 0.814 |
| 90% | 0.006 | 0.652 | 0.599 | 0.508 | 0.648 | 0.658 | 0.313 | 0.651 | 0.651 | 0.651 | 0.651 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, M.; Wang, S.; Ju, X.; Wang, Y. Image Denoising Method Relying on Iterative Adaptive Weight-Mean Filtering. Symmetry 2023, 15, 1181. https://doi.org/10.3390/sym15061181
