Abstract

With the rapid development of computer vision technology, digital image generation has gained many new possibilities. However, traditional digital image generation methods place high operational demands on designers, because data sets are difficult to collect and environmental scenes are difficult to simulate. This leads to poor quality, a lack of diversity, and slow generation of images, making it difficult to meet current needs. To address these problems, this paper introduces virtual interaction design under blockchain technology into image generation, builds a generative model based on it, optimizes the image generation process, and verifies the approach experimentally. The results show that the proposed method generates 641 digital images, more than the compared methods, which indicates better diversity and better image quality. Compared with other digital image generation methods, this method better meets the need for high-quality and diverse images, adapts better to different image generation tasks, and is of significance for the further development of image generation.

1. Introduction

With the continuous improvements in computer technology and the development of image processing technology, digital image generation methods have undergone tremendous changes, and new image generation methods are emerging one after another. From traditional graphics to automatic encoding technology and then to deep learning technology, image generation methods are developing towards diversification, flexibility, and efficiency. Although using existing traditional digital image technology can achieve good results in image generation, the use of professional software to create and edit the effects of virtual environments in the face of image generation tasks is directly related to the operational ability of designers. Especially for the creation of environmental scenes, in order to simulate every aspect clearly, it is necessary to accurately simulate objective conditions such as the shape, material, and light of the object. Compared with the traditional digital image generation methods, this paper intends to use the virtual interaction design method under blockchain technology to establish a universal generation model, and train it and adjust it appropriately, so as to achieve effective extraction of different types of data and self-adaptation to various application scenarios. In the case of the same generation of images, the use of virtual interaction design under blockchain technology for digital image generation can improve the generation quality and diversity of generated images. Therefore, this paper focuses on the analysis of virtual interaction design under blockchain technology, hoping that this method can improve the existing image generation methods, make up for their shortcomings, and improve the quality and diversity of generated images.

With the development of computer vision technology, image generation has become a research field for many scholars. Although their research directions vary, their common focus is on improving the quality of generated images. Jiang Yuming proposed a text-driven controllable framework, Text2Human, to improve the quality and diversity of image generation, and experiments show that the framework generates more diverse and realistic images [1]. Ho Jonathan argued that a cascaded diffusion model can generate high-fidelity images without any auxiliary image classifier, and showed experimentally that it generates images at higher resolution [2]. Niu Shuanlong proposed an image generation method to address the scarcity of industrial images, and demonstrated that it can generate an image data set that balances diversity and authenticity [3]. Choi Hongyoon used a deep generative network for nuclear magnetic resonance image generation and stated that the generated images exhibit signal patterns visually similar to real nuclear magnetic resonance images. He believes that the generation method can be used for multi-modal image generation of various organs and for further quantitative analysis [4]. This research enriches the theory of image generation quality and provides useful references for optimizing it, but it also has shortcomings. Because these studies focus on the quality of image generation and do not analyze the efficiency of digital image generation, they are not comprehensive enough to meet the current needs of digital image generation and cannot be readily applied.

With the wider adoption of virtual reality technology, some scholars have also explored image generation and quality evaluation based on virtual reality, and analyzed its effectiveness in image generation. Kim Hak Gu proposed a new deep-learning-based virtual reality image generation and quality evaluation method. This method utilized encoded positional and visual features to improve image generation quality, while also automatically predicting the visual quality of omnidirectional images [5]. Madhusudana Pavan Chennagiri proposed applying virtual reality to the quality assessment of panoramic image generation and image stitching, and reported that images can be reconstructed through quality models to improve the quality of images affected by ghosting artifacts. He also pointed out that the quality model correlates well with the subjective scores in the stitched image quality assessment database [6]. These discussions of virtual reality in image generation have theoretical value. However, because this research is not combined with virtual interaction design under blockchain technology, it is not comprehensive enough with respect to digital image generation and needs to be improved. The above studies show that there is currently little research on the use of virtual interaction design under blockchain technology in digital image generation and analysis. The theory is largely undeveloped, which makes it difficult to support promotion and application, and further research is needed.

In order to better improve the quality and diversity of digital image generation, this article proposed a new method to improve the effectiveness of digital image generation based on theoretical analysis of digital image generation and previous scholars’ discussions on virtual reality and image generation. Through empirical research, it was found that the new method proposed in this article generated better image quality, shorter image generation time, and better image diversity. Compared with traditional image generation methods, the innovation of this method is that it pays attention to the importance of virtual interaction design under blockchain technology and uses it in image generation, which helps to improve the speed of image generation and ultimately better promote the development of computer image processing technology.

2. Overview of Digital Image Generation Theory

2.1. Image Generation

For a long time, with the development of other disciplines, image processing technology has penetrated into various aspects of people’s daily lives. With the increasing application scope of digital image processing technology, such as mobile shooting devices, film special effects production, and electronic games, people’s demand for digital images is also constantly expanding, which directly drives the development of image generation technology. The essence of digital image generation is to transform one possible representation of an image into another without changing its feature distribution. When faced with image generation tasks such as scene construction or style transformation, traditional digital image processing techniques would use different artificially designed algorithms to process them based on different environments and compositions. Image generation is accumulated through various subdivision steps, gradually achieving the goal of image generation, and ultimately generating a satisfactory image [7].

In the field of digital image processing, the speed of image generation depends, to a large extent, on the software architecture that encapsulates the image processing technology, as well as various algorithms, acceleration optimization techniques used in the image generation process, and the proficiency of manual operation. As digital images have the characteristic of matrix storage, they particularly rely on the image computing capabilities of hardware devices in image processing technology [8]. The utilization efficiency of traditional image generation methods is also related to the realism of image application scenarios, graphic generation, and the complexity of establishing virtual scenes that meet the requirements [9]. Although this type of drawing software has been highly encapsulated, the various functions inside are actually based on traditional graphic processing algorithms.

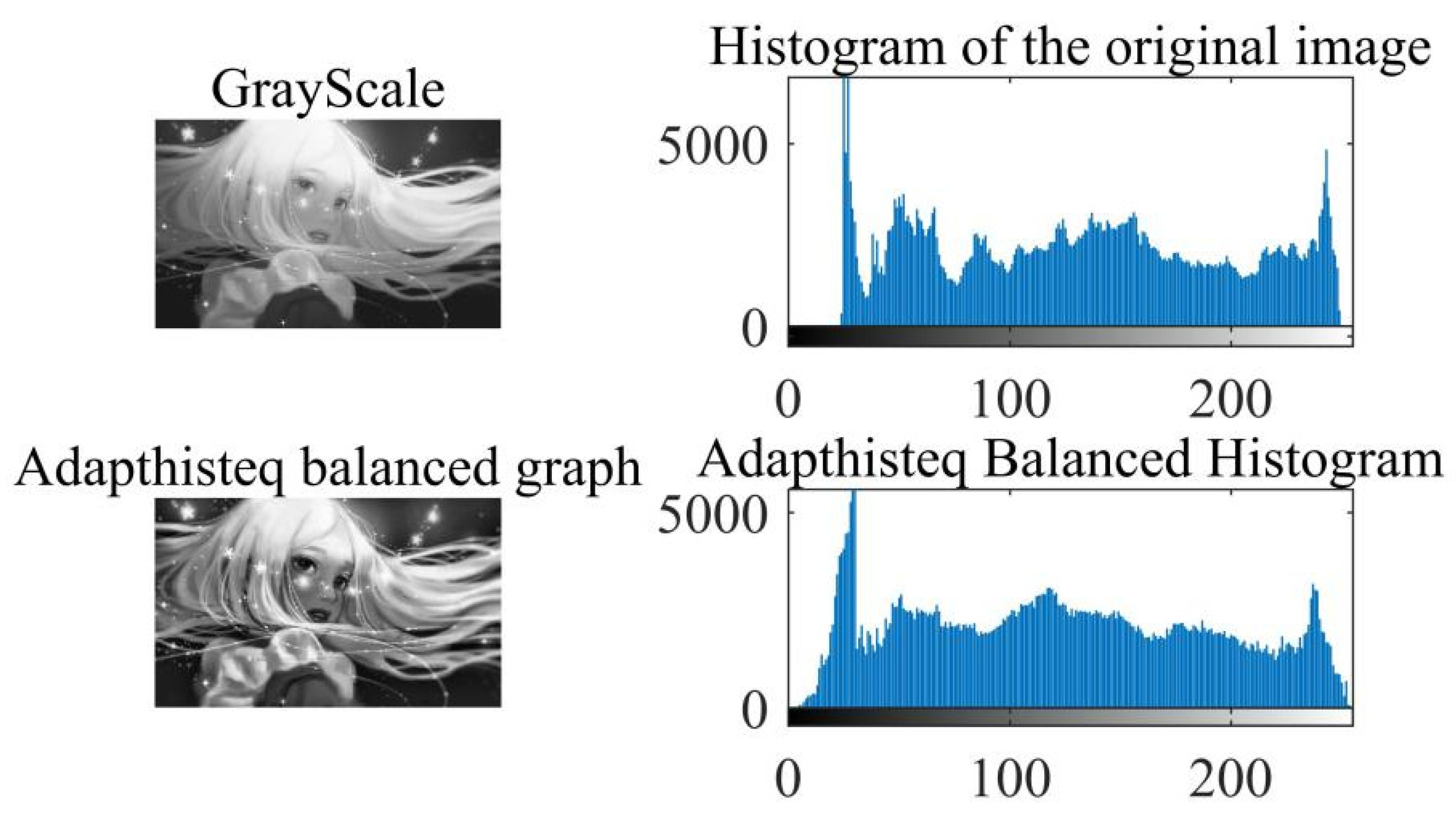

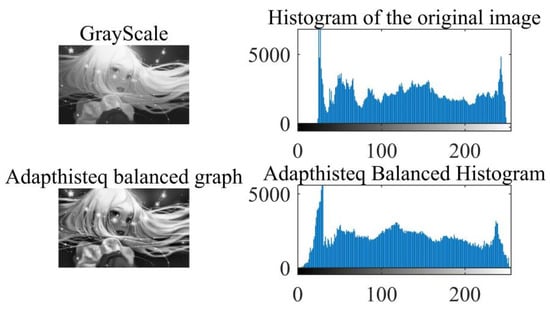

The grayscale histogram of a digital image is a statistical representation of the number of pixels within each grayscale level in the image. It reflects the grayscale changes in the digital image and plays a fundamental role in strong compression, description, and other aspects of digital image generation and processing. The grayscale histogram of digital images is shown in Figure 1:

Figure 1.

Digital image grayscale histogram.
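As a minimal sketch of the statistic described above, the following Python snippet counts the pixels at each gray level of an 8-bit image. The function name `grayscale_histogram` and the small test image are illustrative assumptions, not part of the original method.

```python
import numpy as np

def grayscale_histogram(image: np.ndarray, levels: int = 256) -> np.ndarray:
    """Count how many pixels fall on each gray level 0..levels-1."""
    if image.ndim != 2:
        raise ValueError("expected a 2-D grayscale image")
    # np.bincount tallies the occurrences of each non-negative integer value.
    return np.bincount(image.ravel().astype(np.int64), minlength=levels)[:levels]

# Hypothetical 4x4 image containing three gray levels (0, 128, 255).
demo = np.array([[0,   0,   128, 128],
                 [0,   255, 255, 128],
                 [0,   0,   128, 128],
                 [255, 255, 255, 255]], dtype=np.uint8)
hist = grayscale_histogram(demo)
```

The entries of `hist` sum to the number of pixels, so dividing by `demo.size` gives the normalized histogram.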

Currently, most image generation is driven by parameter model networks, which do not fully utilize the image content in the data set, but instead use the data set as an initial reference material to reproduce the appearance of the object. The generation of semi-parametric images is mainly focused on early image generation work. Semi-parametric image generation uses a manually segmented external image repository, which can provide clear details and unify styles, and can also fill in the edge content between blocks. The application flexibility of semi-parametric image generation is poor, and the proportion of manual participation is high. From the output results, semi-parametric image generation is closer to image stitching.

Currently, there is no universal algorithm that can ensure the quality and diversity of generated images while also adapting to various image generation tasks. Many methods are widely used in digital image processing, such as edge extraction, shape transformation, style transformation, and region segmentation, but their central idea is to apply multiple operations to specific objects, which is complex and inefficient. Therefore, this paper applies virtual interaction design under blockchain technology to digital image generation, hoping to better optimize image generation methods.

2.2. Related Technologies

2.2.1. Blockchain Technology

As the most basic technical support for Bitcoin, blockchain essentially provides reliable support for database security through decentralization and trustless verification [10]. Based on the decentralized, trustless, and immutable characteristics of blockchain, it can effectively solve the fairness issues in traditional data authentication, thereby achieving fairness and openness in data authentication [11]. The blockchain ledger is a decentralized database that stores detailed information related to each transaction. Transactions are recorded in chronological order in the ledger and stored as a series of blocks, each of which references the previous block, forming an interconnected chain. The ledger is distributed across the nodes in the blockchain system, and each node retains and maintains a complete copy. The blockchain automatically synchronizes and verifies the transactions of all nodes. The ledger is transparent to all participating members and can be verified without relying on a central or external verification service [12].
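The chained structure of the ledger can be illustrated with a short Python sketch. It is a toy model under stated assumptions: the block fields (`index`, `prev_hash`, `transactions`, `hash`) and the helper names are hypothetical, SHA-256 is chosen only for illustration, and consensus, networking, and signatures are omitted.

```python
import hashlib
import json

GENESIS_PREV = "0" * 64  # placeholder "previous hash" for the first block (assumption)

def block_hash(index, prev_hash, transactions):
    """Hash the block contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "prev_hash": prev_hash, "transactions": transactions},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def append_block(chain, transactions):
    """Append a block that references the hash of the current last block."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_PREV
    index = len(chain)
    block = {
        "index": index,
        "prev_hash": prev_hash,
        "transactions": transactions,
        "hash": block_hash(index, prev_hash, transactions),
    }
    chain.append(block)
    return block

def is_valid(chain):
    """Check every link and every stored hash; any edit to an old block fails."""
    for i, block in enumerate(chain):
        expected_prev = chain[i - 1]["hash"] if i > 0 else GENESIS_PREV
        if block["prev_hash"] != expected_prev:
            return False
        if block["hash"] != block_hash(block["index"], block["prev_hash"], block["transactions"]):
            return False
    return True

ledger = []
append_block(ledger, ["tx-a", "tx-b"])
append_block(ledger, ["tx-c"])
```

Because each hash covers the previous block's hash, changing a transaction in an early block invalidates the chain from that block onward, which is the tamper-evidence property the text relies on.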

Blockchain is the underlying technology of Bitcoin [13]. It serves as a distributed ledger that publicly records all Bitcoin transaction information. Each block contains a number of transactions, and the blocks are connected to form a blockchain [14]. Because it is decentralized, trustless, and tamper-proof, blockchain has strong security and privacy protection capabilities, and is widely used in fields such as finance, justice, and media [15]. As blockchain technology continues to improve, Turing-complete programmable blockchains are being developed.

2.2.2. Blockchain Technology and Image Generation

The openness, transparency, immutability, and chain storage characteristics of blockchain make it well suited to the traceability requirements of image information in image generation. Media organizations and creators in the alliance chain can submit information related to image generation through blockchain technology and submit image upload applications to the alliance chain. The blockchain system searches its own records by comparing hash values. If no image identical or similar to the image to be chained is found, the image can be added to a block and stored. The blockchain system then combines image information such as the organization to which the image belongs, image hash value features, and image amount into a transaction, and writes the transaction into the block. If an identical or similar image is found, the image cannot be added to the chain. In that case, the blockchain system traces all transaction records of the matching image back to its origin, that is, the copyright owner information.
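The check-then-register flow above can be sketched as follows. The text does not say which hash is used, so this sketch assumes a simple perceptual "average hash" compared by Hamming distance, so that similar images also match. The names `average_hash` and `try_register`, the threshold `max_distance`, and the in-memory `registry` list standing in for the chain are all hypothetical.

```python
import numpy as np

def average_hash(image: np.ndarray, size: int = 8) -> np.ndarray:
    """Perceptual hash: block-average to size x size, then threshold at the mean."""
    h, w = image.shape
    h2, w2 = h - h % size, w - w % size  # crop so both sides divide evenly
    blocks = image[:h2, :w2].astype(np.float64).reshape(size, h2 // size, size, w2 // size)
    small = blocks.mean(axis=(1, 3))
    return (small > small.mean()).ravel()

def try_register(registry, owner, image, max_distance=5):
    """Register the image unless an identical or similar hash already exists.

    Returns (accepted, owner_on_record).
    """
    candidate = average_hash(image)
    for record in registry:
        distance = int(np.count_nonzero(record["hash"] != candidate))
        if distance <= max_distance:
            return False, record["owner"]  # trace back to the recorded owner
    registry.append({"owner": owner, "hash": candidate})
    return True, owner

# Hypothetical images: a horizontal gradient, a slightly brighter copy, and its transpose.
original = np.tile(np.arange(32) * 8, (32, 1))
registry = []
ok_first, _ = try_register(registry, "org-a", original)
ok_copy, owner_of_copy = try_register(registry, "org-b", original + 1)
ok_other, _ = try_register(registry, "org-c", original.T)
```

A cryptographic hash would only detect exact duplicates. The perceptual hash is used here because the text also requires rejecting similar images.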

2.2.3. Virtual Interaction Design

With the continuous development and improvement of sensing technology and virtual reality devices, nowadays, except for the inability to reproduce senses such as smell and taste, virtual reality technology has been able to simulate and reproduce the vast majority of human senses. Compared with traditional interaction methods, virtual interaction design has the following characteristics: first, the interaction design of virtual reality scenes has a stronger sense of immersion. Immersion refers to the degree of reality of the surrounding environment that users feel in the virtual reality scene. Research on interaction design of image generation requires designers to have in-depth communication and interaction with images, and the immersion brought by virtual reality technology can well meet the needs of virtual interaction design. Second, virtual reality is more interactive. Compared with traditional interaction design, users in virtual reality scenes are highly free and can freely use related devices for mobile communication without any impact. The highly free interactive way of virtual interaction design can have a certain impact on the thoughts and feelings of designers. Third, virtual interaction design can stimulate designers’ creativity. Virtual scene is a simulation of the real world, which is an idealized real world. Interaction design in virtual scenes can bring inspiration to users, expand their cognitive and perceptual thinking, and bring more possibilities to the interaction design of image generation.

2.2.4. Virtual Interaction Design and Image Generation

With the advancement of modern technology, the image design techniques used by people in their daily lives are also undergoing changes. Virtual reality technology with intuitive simulation and sensory experience has become the preferred technology for engineering design and image generation. It is an emerging practical technology that combines computer, electronic information, and simulation technology. It also presents planar images that cannot be felt by humans in the form of three-dimensional models [16,17]. Image generation in virtual reality scenes is actually the process of direct interaction between users and images. During this process, users can easily compare human–machine dimensions and preview interactive actions with the generated images, which allows them to better grasp the generated image specifications. This method uses virtual interaction design to reconstruct the image, thus, improving the quality of the image and realizing the optimization of the image generation effect.

2.3. Realization of Digital Image Generation under Virtual Interaction Design

Through virtual interaction design, the shape, posture, interior, exterior, and other parameters of the space target are analyzed, and a generative model is constructed. Through mathematical simulation, a simulation image that can represent the space target is formed. In simulation experiments, the following steps are required to generate a simulation image. It is necessary to set a scene coordinate and construct a scene environment. Then, 3D modeling is performed on the moving object, and the moving object is placed in the corresponding space according to its motion trajectory. The external and internal parameters of the camera are set in real-time based on the tracking status parameters of the photoelectric theodolite. A generative model is established from the above data, and projection conversion is performed based on the set parameter information.

Given a set of data $\{A_1, A_2, \ldots, A_n\}$, collectively denoted by $X$, the purpose is to estimate the distribution $P(A)$ of $A$ from $X$, and then to obtain all possible $A$ from $P(A)$, including data other than $X$. However, this is only an ideal generative model. In practical applications, learning the latent distribution of the data is a difficult problem. To achieve this, the latent variable $S$ can be introduced and the data can be generated through Formula (1):
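The formula can be written as follows, assuming the standard latent-variable formulation, which matches the definitions in the surrounding text. The symbols $P(A)$, $P(S)$, and $P(A \mid S)$ are notation assumed here, with $P(S)$ denoting the prior over the latent variable.

```latex
P(A) = \sum_{S} P(A \mid S)\, P(S) \tag{1}
```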

Here, $P(A \mid S)$ is the model that generates $A$ from $S$.

When S is in a high-dimensional continuous space, there exists:
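Under the same assumed notation ($P(S)$ for the prior over the latent variable and $P(A \mid S)$ for the model that generates $A$ from $S$), the sum over $S$ becomes an integral, which is generally intractable in high dimensions:

```latex
P(A) = \int P(A \mid S)\, P(S)\, \mathrm{d}S \tag{2}
```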

The generation process then becomes: for any $S$, $P(A \mid S)$ is used to calculate the probability. If the result matches, the process can end; if it does not, it returns to the previous step. In this case, the generation process is uncontrollable. It is therefore necessary to find a variational function $Q(S \mid A)$ to replace $P(S \mid A)$, which is represented by the formula:
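A common way to write this step, assuming the usual variational autoencoder derivation, is the identity below. The symbol $Q(S \mid A)$ for the variational function and $D_{\mathrm{KL}}$ for the Kullback-Leibler divergence are notation assumed here. Because the divergence on the left is non-negative, the right-hand side is a lower bound on $\log P(A)$.

```latex
\log P(A) - D_{\mathrm{KL}}\big(Q(S \mid A)\,\|\,P(S \mid A)\big)
  = \mathbb{E}_{S \sim Q(S \mid A)}\big[\log P(A \mid S)\big]
  - D_{\mathrm{KL}}\big(Q(S \mid A)\,\|\,P(S)\big) \tag{3}
```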

The purpose of the variational autoencoder is to maximize the lower bound of the log-likelihood of the data, which is equivalent to minimizing its negative. According to Formula (3), a new generative model is constructed, whose network parameters can be optimized by gradually reducing the loss function. The formula is:
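Assuming the loss is the negative of that lower bound, as in the standard variational autoencoder, it would take the form below. The first term is a reconstruction error and the second keeps the variational function $Q(S \mid A)$ close to the prior $P(S)$. Both symbols, and the name $\mathcal{L}_{\mathrm{VAE}}$, are assumed notation.

```latex
\mathcal{L}_{\mathrm{VAE}}
  = -\,\mathbb{E}_{S \sim Q(S \mid A)}\big[\log P(A \mid S)\big]
  + D_{\mathrm{KL}}\big(Q(S \mid A)\,\|\,P(S)\big) \tag{4}
```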

A variational autoencoder encodes the input images to obtain the distribution of their encodings, and thereby the noise distribution corresponding to each image. It can also generate a desired image by selecting a particular noise vector, but the images generated this way have low clarity. This is because the method directly computes the mean square error between the generated image and the original image, without adversarial learning.

The purpose of generative adversarial networks is to achieve a balance between generators and discriminators [18]. That is to say, the optimization of network parameters is realized by the continuous reduction in the loss function in the following Formula (5):
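In the standard formulation of generative adversarial networks, this balance is expressed as a minimax game over a value function. The sketch below uses assumed notation: $G$ is the generator, $D$ the discriminator, $P_{\mathrm{data}}$ the distribution of real images, and $P(S)$ the noise prior.

```latex
\min_{G} \max_{D} V(D, G)
  = \mathbb{E}_{A \sim P_{\mathrm{data}}}\big[\log D(A)\big]
  + \mathbb{E}_{S \sim P(S)}\big[\log\big(1 - D(G(S))\big)\big] \tag{5}
```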

In image generation, generative adversarial networks use adversarial learning to improve image defect recognition, which makes the obtained image clearer [3]. However, although the discriminator learns to distinguish between "true" and "false" images, this only makes the generated images appear more realistic and cannot ensure that the image content corresponds to real objects. In other words, a generative adversarial network may produce a background pattern that looks realistic but contains no real object.

The virtual interaction design under blockchain technology divides the imaging process of the generative model into two parts: target image simulation and background image simulation. It is assumed that the theodolite is always in the ideal tracking state, that is, the target is always imaged at the center of the field of view. On this basis, the corresponding background images are pre-generated from the flight path and attitude of the simulated target and the position of the theodolite station, and saved as an image sequence. In the simulation of the target image, the background must be turned off so that the background grayscale value of the target image is zero. In the background simulation, the simulated target model should be excluded, so that the simulated image only contains background changes. When generating digital images, the real-time data output by the encoder is used to calculate the miss distance and rotation angle of the target image, and then the rotated and translated target image is superimposed on the background image to make the result as close as possible to the actual image.
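The final compositing step can be sketched in Python as follows. This is a simplified illustration under stated assumptions: the miss distance is treated as a pixel shift `shift_rc`, rotation uses nearest-neighbor sampling about the image center, and zero-valued target pixels are treated as empty, following the zero-background convention in the text. The function names are hypothetical.

```python
import numpy as np

def rotate_translate(target: np.ndarray, angle_deg: float, shift_rc) -> np.ndarray:
    """Rotate about the image center, then shift, via nearest-neighbor inverse mapping."""
    h, w = target.shape
    theta = np.deg2rad(angle_deg)
    cr, cc = (h - 1) / 2.0, (w - 1) / 2.0
    rows, cols = np.indices((h, w))
    # For each output pixel, undo the shift and then the rotation to find its source.
    r = rows - shift_rc[0] - cr
    c = cols - shift_rc[1] - cc
    src_r = np.rint(np.cos(theta) * r + np.sin(theta) * c + cr).astype(int)
    src_c = np.rint(-np.sin(theta) * r + np.cos(theta) * c + cc).astype(int)
    inside = (src_r >= 0) & (src_r < h) & (src_c >= 0) & (src_c < w)
    out = np.zeros_like(target)  # pixels with no source stay at background value 0
    out[inside] = target[src_r[inside], src_c[inside]]
    return out

def composite(background: np.ndarray, target: np.ndarray) -> np.ndarray:
    """Overlay the target on the background; zero target pixels are transparent."""
    return np.where(target > 0, target, background)

# Hypothetical 5x5 frame: uniform background, single bright target pixel at the center.
background = np.full((5, 5), 50, dtype=np.uint8)
target = np.zeros((5, 5), dtype=np.uint8)
target[2, 2] = 200
moved = rotate_translate(target, angle_deg=0.0, shift_rc=(1, 2))
frame = composite(background, moved)
```

Keeping the target and background as separate layers lets the background sequence be precomputed once and reused for any target pose.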

The discriminator network distinguishes between the actual image and the generated image. The added randomness effectively improves the robustness of the discriminator network, which avoids large differences in discrimination results between the early and late stages, achieves better discrimination, and also pushes the generator to produce more realistic images. The generative model formed by virtual interaction design based on blockchain technology retains both the encoding method of the variational autoencoder and the global structure of the generative adversarial network. It replaces the random noise input of the generative adversarial network with the encoding produced by the variational autoencoder, thereby solving the problem that the original generative adversarial network cannot determine which random noise generates the desired image, and improving the situation where the original generative adversarial network produces blurry images.

The generator minimization loss function is:

The maximum loss function of discriminator is:

The discriminator is updated by maximizing the following formula:

The generator is updated by minimizing the following formula:

The image features extracted by the autoencoding method contain less redundant information. Compared with feeding the whole image as input to the generative adversarial network, this can accelerate the convergence of the loss function.
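For orientation, the sketch below shows one common way to combine autoencoder and adversarial terms in such a hybrid model. It is not the paper's exact formulation, which is not reproduced here: the binary cross-entropy adversarial terms, the mean-square reconstruction term, the Gaussian KL term, and the weights `recon_weight` and `kl_weight` are all assumptions. The discriminator objective is written as a loss to minimize, which is the negative of the quantity it would maximize.

```python
import numpy as np

def bce(pred: np.ndarray, label: np.ndarray) -> float:
    """Binary cross-entropy averaged over the batch."""
    eps = 1e-12
    pred = np.clip(pred, eps, 1.0 - eps)
    return float(-np.mean(label * np.log(pred) + (1.0 - label) * np.log(1.0 - pred)))

def discriminator_loss(d_real: np.ndarray, d_fake: np.ndarray) -> float:
    """Real images should score 1, generated images should score 0."""
    return bce(d_real, np.ones_like(d_real)) + bce(d_fake, np.zeros_like(d_fake))

def generator_loss(d_fake, x, x_rec, mu, log_var, recon_weight=1.0, kl_weight=1.0) -> float:
    """Adversarial term + reconstruction error + KL term of a Gaussian encoder."""
    adversarial = bce(d_fake, np.ones_like(d_fake))  # generator wants fakes scored as real
    recon = float(np.mean((x - x_rec) ** 2))
    kl = float(-0.5 * np.mean(np.sum(1.0 + log_var - mu ** 2 - np.exp(log_var), axis=1)))
    return adversarial + recon_weight * recon + kl_weight * kl

# Hypothetical batch of 4 samples with an 8-dimensional encoding.
d_real = np.full(4, 0.9)
d_fake = np.full(4, 0.1)
x = np.zeros((4, 16))
mu = np.zeros((4, 8))
log_var = np.zeros((4, 8))
loss_d = discriminator_loss(d_real, d_fake)
loss_g = generator_loss(d_fake, x, x, mu, log_var)
```

With a perfect reconstruction and a standard-normal encoding, the reconstruction and KL terms vanish and only the adversarial term remains in `loss_g`.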

3. Empirical Evaluation on Digital Image Generation

After studying the virtual interaction design based on blockchain technology, this paper proposed to use it in digital image generation. In order to verify the feasibility of this idea, this paper also needed to conduct empirical research on it.

3.1. Experimental Design and Data Sources

The data set selected for this study is the CIFAR-10 data set, which includes 50,000 training images, 10,000 test images, and 10 categories. Each category contains 6000 images, with an image size of 32 × 32 × 3. A total of 7000 images were used as the training set, and 1000 images were used as the image testing set. The number of images, the time required for image generation, the generation loss, and discriminating losses after digital image generation for the method in this paper, [1] (that is, text-driven controlled frame Text2Human image generation method), and [2] (that is, cascaded diffusion model-based image generation method) were compared to draw relevant conclusions. The experimental environment is shown in Table 1:

Table 1.

Experimental environment.

3.2. Evaluation of Experimental Results

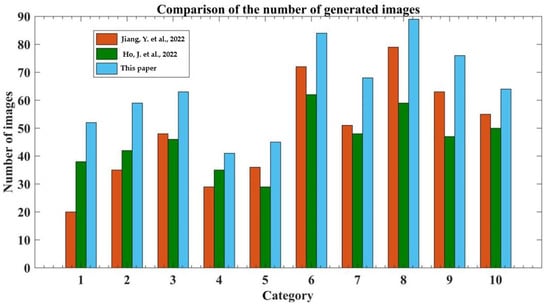

3.2.1. Comparison of the Number of Images Generated after Digital Image Generation

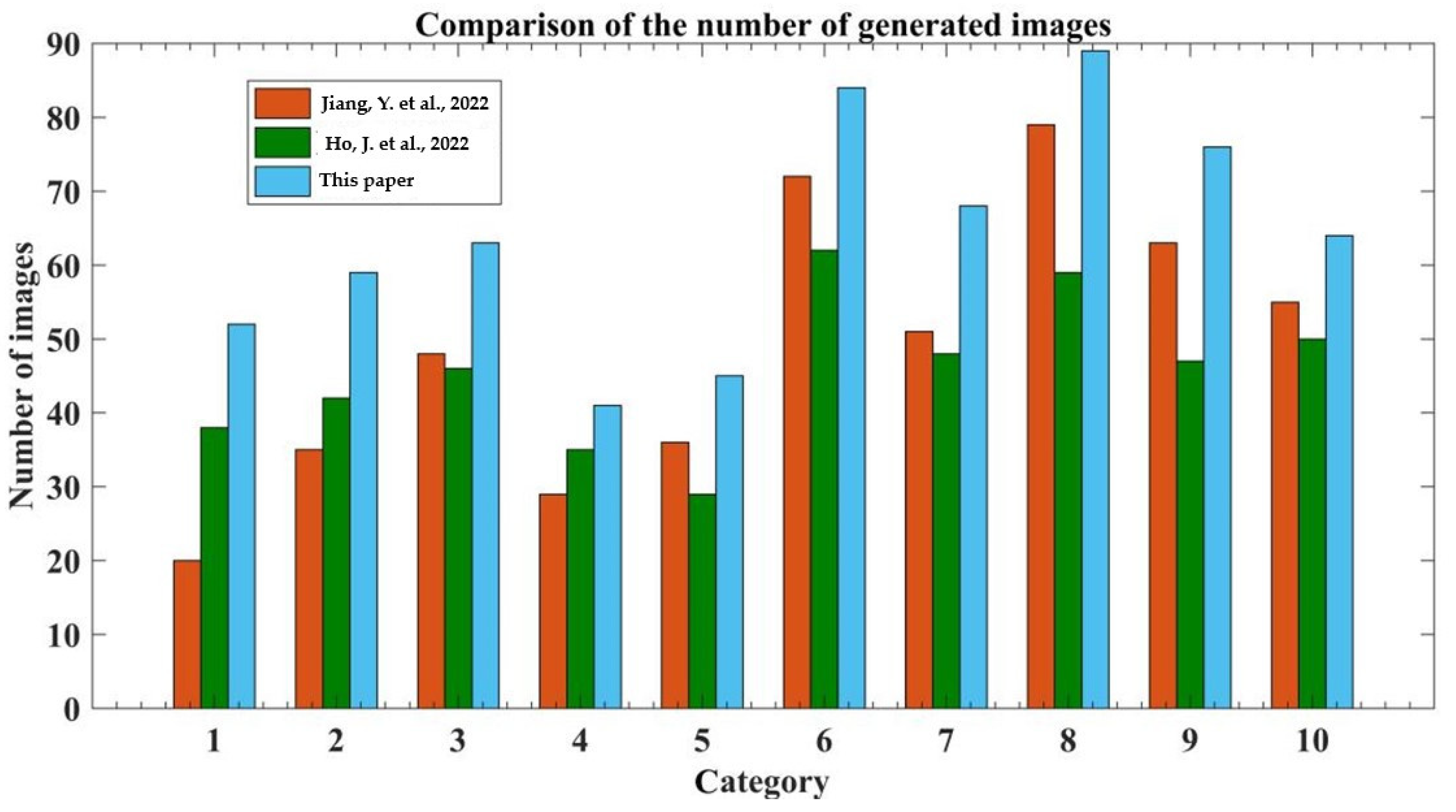

The diversity of digital image generation is an important criterion for measuring image generation methods. A good image generation method generates more images and a richer variety of image types; the more diverse the method, the more images it can generate within the same category. This article compares the number of images generated per category by the three methods, and the specific results are shown in Figure 2 (horizontal coordinates 1 to 10 represent the image category, and vertical coordinates 0 to 90 represent the number of generated images):

Figure 2.

Comparison of the number of digital images of different categories generated by different methods [1,2].

From Figure 2, it can be seen that the total number of digital images generated by the method in [1] is 488, among which category 6 and category 8 have the highest number of digital images generated, with 72 and 79 images, respectively. The number of digital images generated by the methods in this article for categories 6 and 8 are 84 and 89, respectively, with category 6 generating 12 more digital images than the method in [1], and category 8 generating 10 more digital images than the method in [1]. The total number of digital images generated by the method in [2] is 456, with category 6 having the highest number of generated digital images at 62. However, compared with the methods in this paper and [1] under the same type, the method in [2] has the lowest number of generated digital images in category 6. The method used in this article generates the largest number of digital images, totaling 641, which is 153 more than the method used in [1] and 185 more than the method used in [2]. From this, it can be seen that the performance of this method is the best, with better richness in digital image generation. The number of digital images that can be generated in the same type is the highest. Compared to the other two methods, this method has superior performance, can better improve the number of digital image generation, and enhances the richness of digital image generation. This is because this method combines the virtual interaction design under the blockchain technology, providing more possibilities for the generation of digital images.

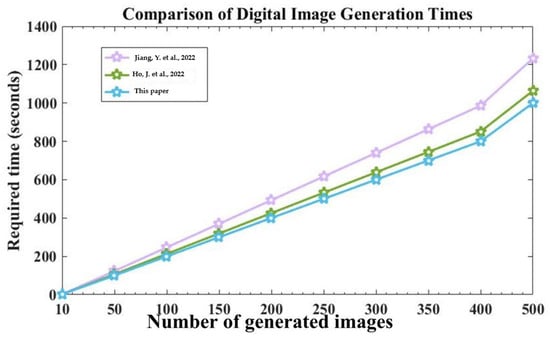

3.2.2. Time Required for Image Generation

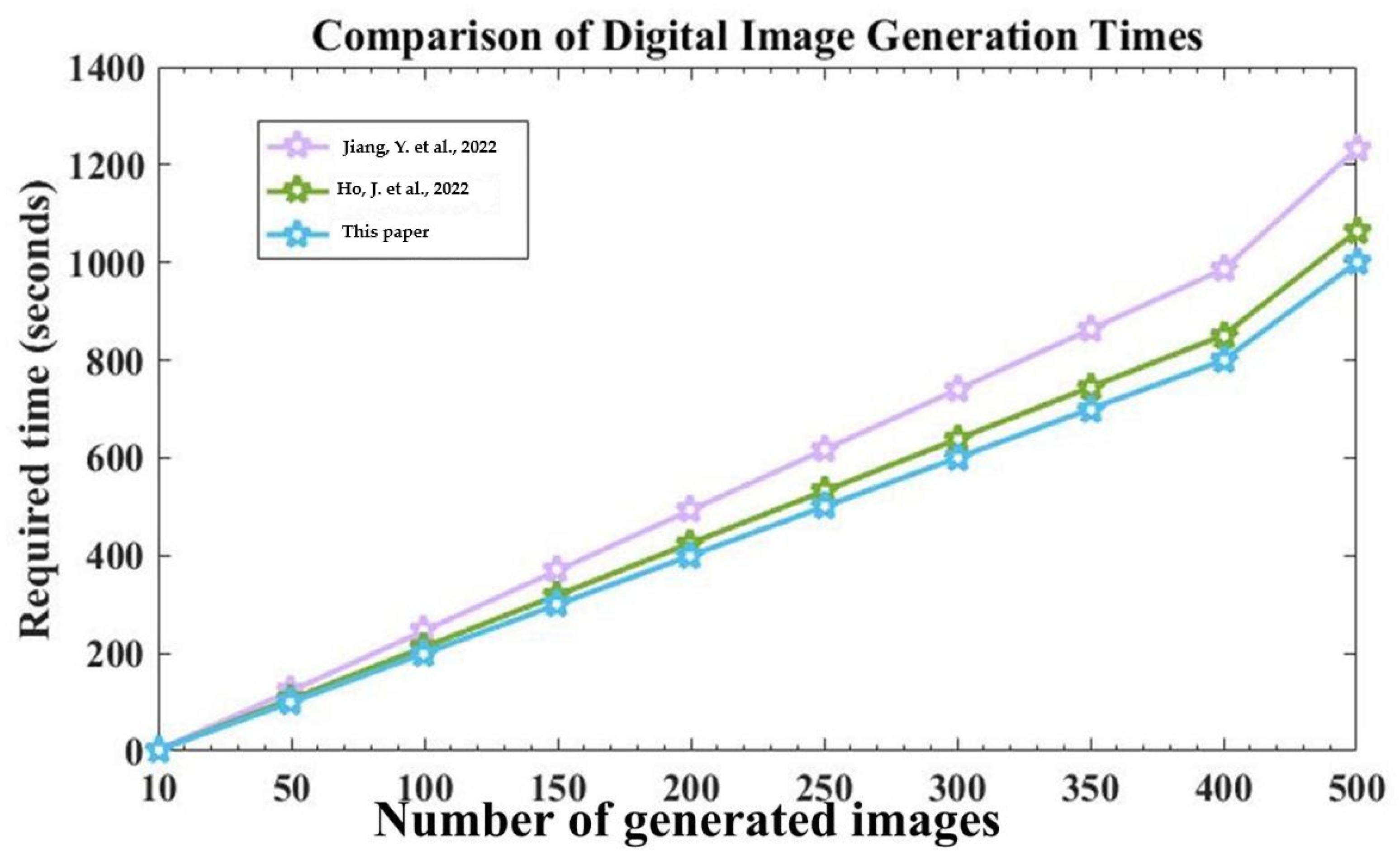

Image generation time is the most intuitive manifestation of the performance of image generation methods. The shorter the time required for image generation, the better the performance of the image generation method and the faster its generation speed. This article compares the time required for generating digital images using three methods, and the results are shown in Figure 3 (the horizontal axis 10 to 500 represents the number of images required to be generated, and the vertical axis 0 to 1400 represents the time required for digital image generation):

Figure 3.

Comparison of the time required for digital image generation using different methods [1,2].

From Figure 3, it can be seen that when the number of digital images is 10, the time required for the method in [1] to generate images is 2.47 s; the time required for the method in [2] to generate images is 2.13 s; the time required for the method in this paper to generate images is 2 s. When the number of digital images is 100, the time required for the method in [1] to generate images is 246.53 s; the time required for the method in [2] to generate images is 212.77 s; the time required for the method in this paper to generate images is 200 s. When the number of digital images is 500, the time required for the method in [1] to generate images is 1232.67 s; the time required for the method in [2] to generate images is 1063.83 s; the time required for the method in this paper to generate images is 1000 s. When the number of digital images is 10, the method proposed in this paper reduces the image generation time by 0.47 s compared to the method proposed in [1] and 0.13 s compared to the method proposed in [2]. When the number of digital images is 100, the method proposed in this paper is 46.53 s quicker in generating images than the method proposed in [1], and 12.77 s quicker than the method proposed in [2]. When the number of digital images is 500, the method proposed in this paper reduces the image generation time by 232.67 s compared to the method proposed in [1] and 63.83 s compared to the method proposed in [2]. From this, it can be seen that compared to the methods in [1,2], the method proposed in this paper requires the least time for digital image generation, indicating that the advantage of the method proposed in this paper in terms of digital image generation speed is more obvious and can better meet the current digital image generation needs.
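The differences quoted above can be checked, and the implied per-image time derived, with a few lines of Python. The dictionary keys `ref1`, `ref2`, and `proposed` are labels introduced here for the methods in [1], [2], and this paper; the values are the ones reported in the text.

```python
# Reported generation times in seconds, keyed by the number of images.
reported = {
    10:  {"ref1": 2.47,    "ref2": 2.13,    "proposed": 2.00},
    100: {"ref1": 246.53,  "ref2": 212.77,  "proposed": 200.00},
    500: {"ref1": 1232.67, "ref2": 1063.83, "proposed": 1000.00},
}

# Average time per image implied by each data point.
per_image = {
    n: {method: t / n for method, t in row.items()}
    for n, row in reported.items()
}

# Time saved by the proposed method relative to each reference method.
savings = {
    n: {method: round(row[method] - row["proposed"], 2) for method in ("ref1", "ref2")}
    for n, row in reported.items()
}
```

The savings reproduce the differences stated in the text. The implied per-image time is about 2 s at 100 and 500 images for the proposed method but about 0.2 s at 10 images, so the first data point may be on a different scale than the other two; the text does not say which reading is intended.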

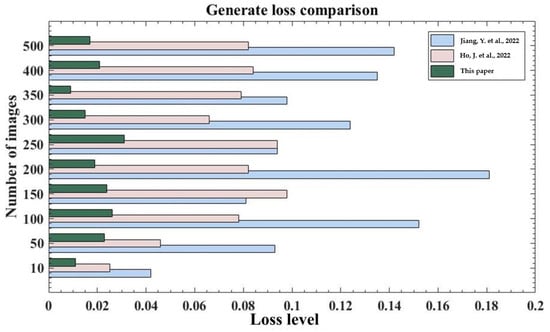

3.2.3. Generating Loss Comparison

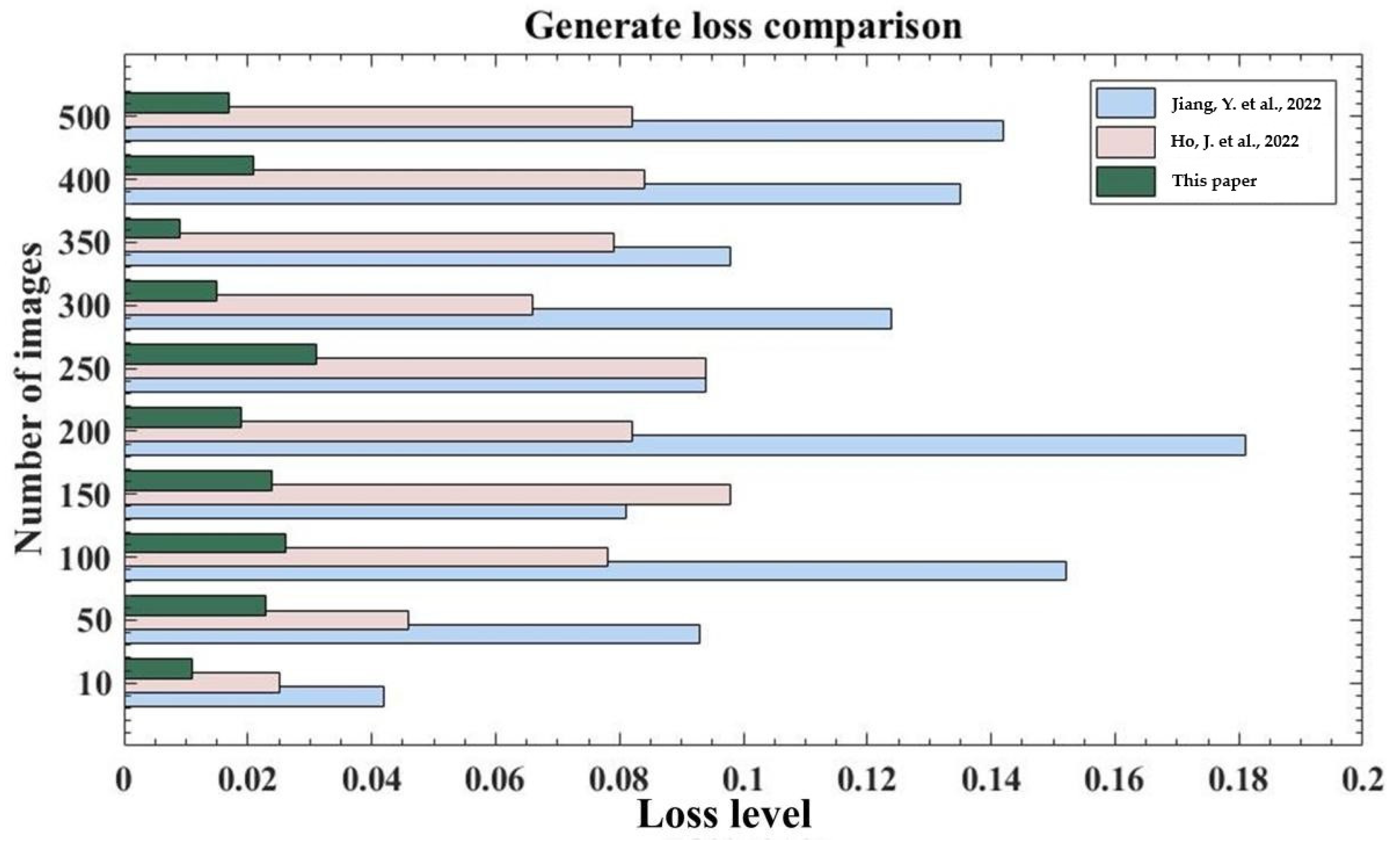

Image generation loss is an important component of measuring the quality of image generation. The smaller the generation loss, the more visually superior the generated digital image is, and its image is also closer to a natural image. To compare the quality of digital images generated by different methods, this article tests the generation loss of different images, and the results are shown in Figure 4 (vertical coordinates 10 to 500 represent the number of images, and horizontal coordinates 0 to 0.2 represent the loss in digital image generation):

Figure 4.

Comparison of digital image generation losses using different methods [1,2].

From Figure 4, it can be seen that when the number of digital images is 10, the digital image generation loss of the method in [1] is at its minimum of 0.042, while the generation loss of the method in [2] is 0.025. The digital image generation loss of the method in this paper is 0.011, which is 0.031 less than the method in [1] and 0.014 less than the method in [2]. When the number of digital images is 500, the digital image generation loss of the method in [1] is 0.142; the generation loss of the method in [2] is 0.082; the digital image generation loss of the method in this paper is 0.017. The generation loss of the method in this paper is 0.125 less than that of the method in [1], and 0.065 less than that of the method in [2]. From this, it can be seen that the method proposed in this article has the smallest generation loss and a good, relatively stable image generation effect. This is because the generative model in this paper has undergone virtual interaction design, which superimposes the rotated and translated target image on the background image, making the generated image closer to the reference image.

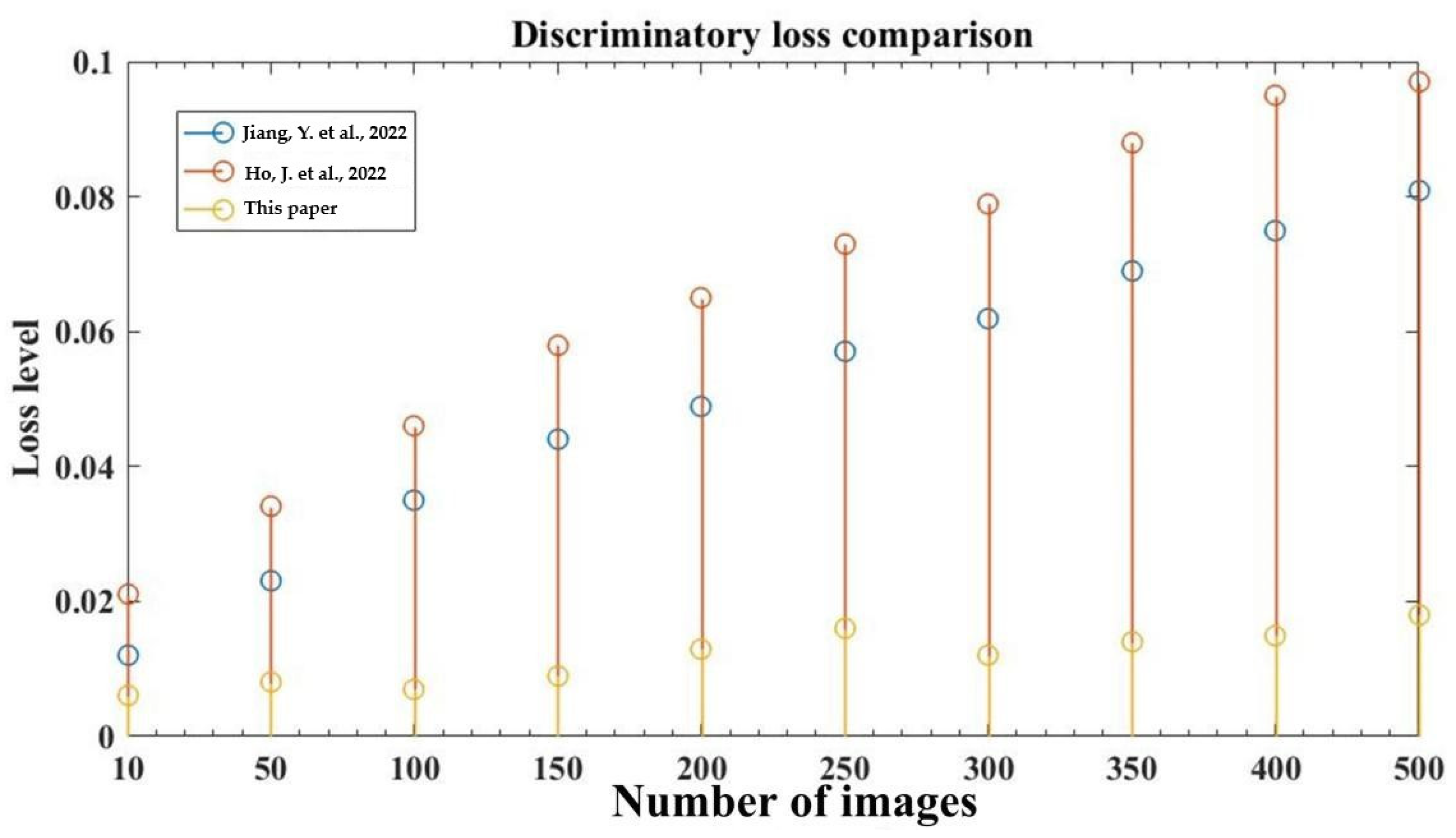

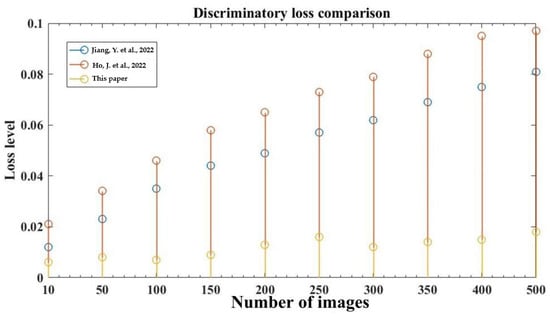

3.2.4. Comparison of Discriminatory Losses

After testing the generation loss of the image, it is also necessary to test its discrimination loss. Generally speaking, the smaller the discrimination loss, the better the fitting degree of the model and the better the image quality. This article compares the discriminating losses of three models, and the results are shown in Figure 5 (the horizontal axis 10 to 500 represents the number of images, and the vertical axis 0 to 0.1 represents the loss in digital image discrimination):

Figure 5.

Comparison of digital image discrimination losses using different methods [1,2].

From Figure 5, it can be seen that when the number of images is 10, the digital image discrimination loss in the method in [1] is 0.012; the digital image discrimination loss in the method in [2] is 0.021; the digital image discrimination loss in the method in this paper is 0.006. When the number of images is 100, the digital image discrimination loss in the method in [1] is 0.035; the digital image discrimination loss in the method in [2] is 0.046; the digital image discrimination loss in the method in this paper is 0.007. When the number of images is 500, the digital image discrimination loss in the method in [1] is 0.081; the digital image discrimination loss in the method in [2] is 0.097; the digital image discrimination loss in the method in this paper is 0.018. When the number of images is 10, the discrimination loss in the method in this paper is 0.006 lower than that of the method in [1], and 0.015 lower than that of the method in [2]. It can be seen that the discriminant loss in this method is lower than that of [1,2], and the quality of the generated images is much higher than that of [1,2].

3.2.5. Image Generation Similarity Comparison

This paper uses structural similarity (SSIM) as an evaluation indicator to study the comprehensive quality of digital image generation under the three methods. SSIM is not simply a pixel-level similarity calculation between the generated character images and the annotated images. It is a comprehensive quality evaluation indicator for the generated images. The higher the similarity value, the better the quality of the generated digital image. The image structure similarity of the three methods is shown in Table 2.
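For readers unfamiliar with SSIM, the sketch below computes the standard index over a whole image pair as a single window. The paper does not state which SSIM variant, window size, or constants it used, so the function name `global_ssim`, the single-window simplification, the population variance, and the commonly used constants K1 = 0.01 and K2 = 0.03 are assumptions of this example.

```python
import numpy as np

def global_ssim(x, y, data_range=255.0, k1=0.01, k2=0.03):
    """Single-window SSIM over two equally sized images.

    Simplification (assumption): statistics are taken over the whole image
    rather than over sliding Gaussian windows as in common implementations.
    """
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    if x.shape != y.shape:
        raise ValueError("images must have the same shape")
    c1 = (k1 * data_range) ** 2   # stabilizes the luminance term
    c2 = (k2 * data_range) ** 2   # stabilizes the contrast/structure term
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    luminance = (2 * mu_x * mu_y + c1) / (mu_x ** 2 + mu_y ** 2 + c1)
    contrast_structure = (2 * cov_xy + c2) / (var_x + var_y + c2)
    return luminance * contrast_structure

# Toy comparison: an image against itself and against a noisy copy.
rng = np.random.default_rng(0)
reference = rng.integers(0, 256, size=(32, 32)).astype(np.float64)
noisy = np.clip(reference + rng.normal(0, 20, size=reference.shape), 0, 255)
score_same = global_ssim(reference, reference)
score_noisy = global_ssim(reference, noisy)
```

An image compared with itself scores 1, and the score falls as the two images diverge in mean brightness, contrast, or structure, which is why a higher value is read as better generation quality.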

Table 2 shows that as more images are generated, the image structure similarity value gradually decreases. Overall, however, the image structure similarity value of the method proposed in this paper is much higher than that of the methods in [1,2]. When 10 images are generated, the image structure similarity value is 0.649 for the method in [1], 0.687 for the method in [2], and 0.895 for the method in this paper. When 500 images are generated, the values are 0.601, 0.629, and 0.847, respectively. Among the three methods, the method proposed in this paper has the highest image structure similarity value, the method in [2] the second highest, and the method in [1] the lowest. This indicates that the method in this paper delivers the best image generation quality and the best pixel resolution of the generated digital images, which is more in line with current needs.

Table 2.

Comparison of image structure similarity generated by three methods.

4. Conclusions

The rapid progress of technology is driving the rapid development of various industries and posing different challenges to different fields. In digital image generation, how to improve the effectiveness of generation has become a hot topic for many scholars. The theme of this paper is the analysis of digital image generation based on virtual interaction design under blockchain technology. The paper first introduced the relevant background of this research, then summarized and analyzed the advantages and disadvantages of previous research on image generation, and then described the underlying image generation theory. On this basis, it constructed a new generative model that incorporates virtual interaction design under current blockchain technology, and showed through empirical research that the method is feasible. The experiments show that applying virtual interaction design under blockchain technology to digital image generation can not only increase the diversity of the generated images, but also improve their quality, yielding lower generation loss and discrimination loss than traditional digital image generation methods. In addition, its image generation time is shorter than that of the other methods, which improves generation speed and gives the method good prospects for market promotion and application. However, this paper also has some shortcomings. Due to practical limitations, it only explores the diversity, speed, and quality of image generation, and has not studied the stability of image generation, so some mode collapse may occur. At the same time, the research is limited to image generation for a single target, and further exploration is needed for multi-objective image generation in the future.

Funding

The author received no financial support for the research, authorship, and/or publication of this article.

Data Availability Statement

Data sharing not applicable.

Conflicts of Interest

The author declares no conflict of interest.

References

1. Jiang, Y.; Yang, S.; Qiu, H.; Wu, W.; Loy, C.C.; Liu, Z. Text2human: Text-driven controllable human image generation. ACM Trans. Graph. (TOG) 2022, 41, 1–11.

2. Ho, J.; Saharia, C.; Chan, W.; Fleet, D.J.; Norouzi, M.; Salimans, T. Cascaded Diffusion Models for High Fidelity Image Generation. J. Mach. Learn. Res. 2022, 23, 2249–2281.

3. Niu, S.; Li, B.; Wang, X.; Lin, H. Defect Image Sample Generation with GAN for Improving Defect Recognition. IEEE Trans. Autom. Sci. Eng. 2020, 17, 1611–1622.

4. Choi, H.; Lee, D.S. Generation of Structural MR Images from Amyloid PET: Application to MR-Less Quantification. J. Nucl. Med. 2018, 59, 1111–1117.

5. Kim, H.G.; Lim, H.-T.; Ro, Y.M. Deep Virtual Reality Image Quality Assessment with Human Perception Guider for Omnidirectional Image. IEEE Trans. Circuits Syst. Video Technol. 2019, 30, 917–928.

6. Madhusudana, P.C.; Soundararajan, R. Subjective and Objective Quality Assessment of Stitched Images for Virtual Reality. IEEE Trans. Image Process. 2019, 28, 5620–5635.

7. Saad, A.H.S.; Mohamed, M.S.; Hafez, E.H. Coverless Image Steganography Based on Jigsaw Puzzle Image Generation. Comput. Mater. Contin. 2021, 67, 2077–2091.

8. Amhmed, B. Distributed System Design Based on Image Processing Technology and Resource State Synchronization Method. Distrib. Process. Syst. 2021, 2, 28–35.

9. Kavita, U. Visual Intelligent Recognition System based on Visual Thinking. Kinet. Mech. Eng. 2021, 2, 46–54.

10. Zheng, Z.; Xie, S.; Dai, H.-N.; Chen, X.; Wang, H. Blockchain challenges and opportunities: A survey. Int. J. Web Grid Serv. 2018, 14, 352–375.

11. Kim, J. Blockchain Supply Information Sharing Management System Based on Embedded System. Distrib. Process. Syst. 2022, 3, 60–77.

12. Xu, M.; Chen, X.; Kou, G. A systematic review of blockchain. Financ. Innov. 2019, 5, 27.

13. Liao, J.Y. Self-employment in the Construction Machinery Industry with Blockchain Technology in Mind. Kinet. Mech. Eng. 2022, 3, 20–28.

14. Gorkhali, A.; Li, L.; Shrestha, A. Blockchain: A literature review. J. Manag. Anal. 2020, 7, 321–343.

15. Zhang, R.; Xue, R.; Liu, L. Security and Privacy on Blockchain. ACM Comput. Surv. 2019, 52, 1–34.

16. Kumar, A.; Abhishek, K.; Nerurkar, P.; Ghalib, M.R.; Shankar, A.; Cheng, X. Secure smart contracts for cloud-based manufacturing using Ethereum blockchain. Trans. Emerg. Telecommun. Technol. 2020, 33, e4129.

17. Bashabsheh, A.K.; Alzoubi, H.H.; Ali, M.Z. The application of virtual reality technology in architectural pedagogy for building constructions. Alex. Eng. J. 2019, 58, 713–723.

18. Huang, G.; Jafari, A.H. Enhanced balancing GAN: Minority-class image generation. Neural Comput. Appl. 2021, 35, 5145–5154.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).