A Transfer Learning-Based Pairwise Information Extraction Framework Using BERT and Korean-Language Modification Relationships

Abstract

:1. Introduction

2. Materials and Methods

2.1. Data Collection and Tagging

2.1.1. Data Collection

2.1.2. Data Tagging

2.2. Transfer Learning-Based Pairwise Information Extraction Framework

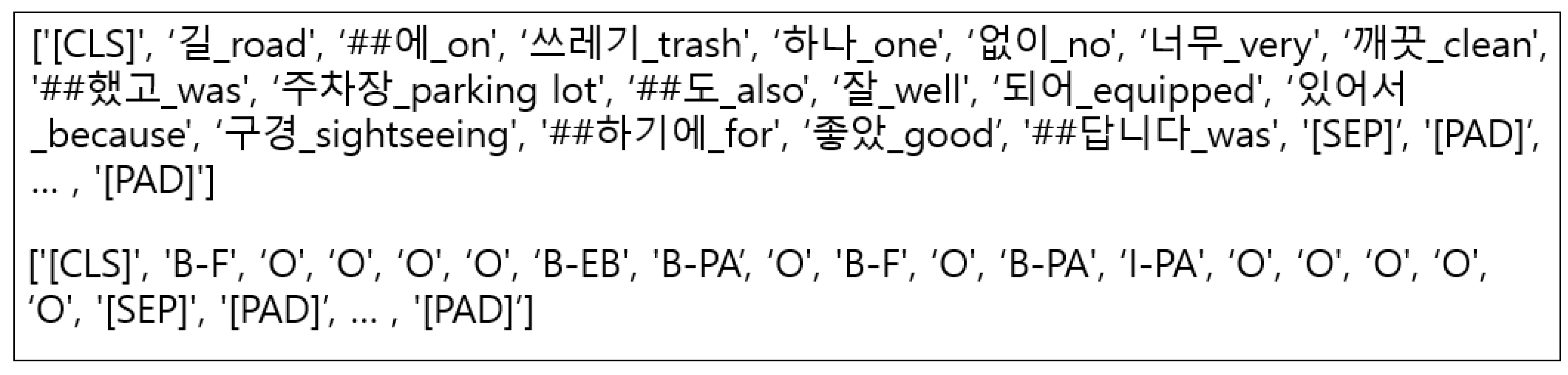

2.2.1. BERT-Based Transfer Learning Model for the Named Entity Recognition Algorithm of Modification Relationships

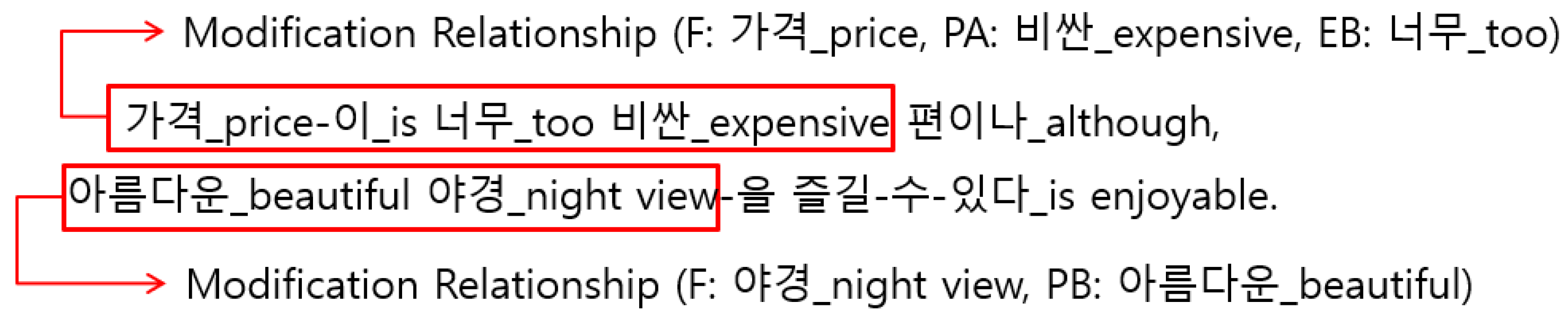

2.2.2. Modification-Relationships Extraction Algorithm

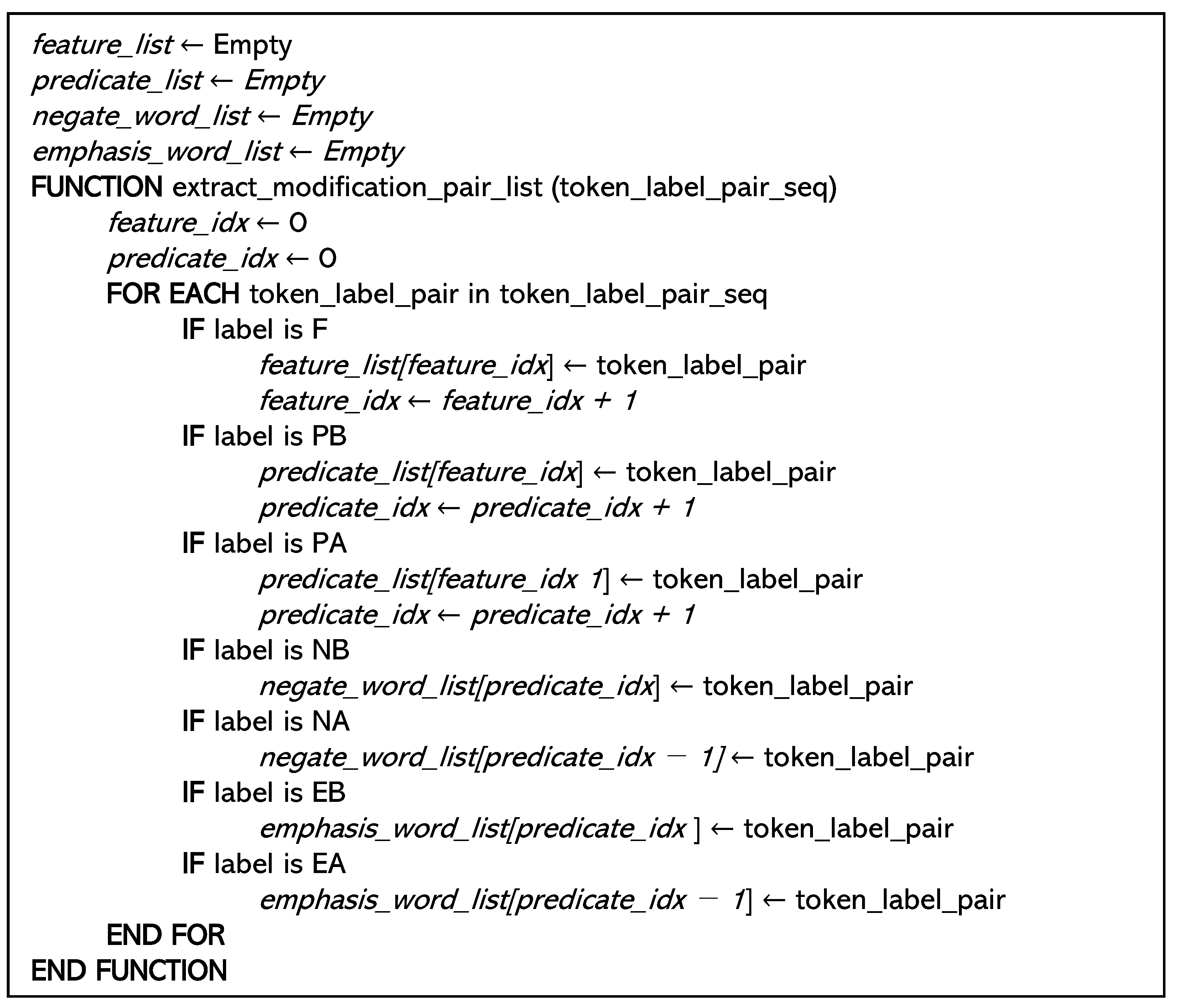

- If a new feature is found while running the for-loop for each vocabulary token and named-entity token pair list in a sentence, the found feature is added to the feature list first, and the index of the feature list is increased by one for the next pair of modification relationships;

- If a PB tag, which represents the prepositional predicate, is found, the corresponding vocabulary and named-entity tokens are added to the predicate list with the current feature index;

- In the case of a PA tag, which represents the postpositional predicate, the index of the predicate list is set to −1 at the current feature index since it modifies the previously located feature;

- Negation words and emphasis words that modify the predicate are similarly stored in the negation word list and the emphasis word list using the current predicate index in the case of prepositional tags and the previous predicate index in the case of postpositional tags;

- The algorithm finally returns the predicate list and its modifying feature list with identical indices if they are in the modifying relationships. Additionally, it returns negation word and emphasis word lists with identical indices to their modifying predicate in the predicate list.

3. Experiments and Results

3.1. Experimental Environments and Metrics

3.2. Experimental Results and Discussion

4. Conclusions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Tikayat Ray, A.; Fischer, O.J.; Mavris, D.N.; White, R.T.; Cole, B.F. aeroBERT-NER: Named-Entity Recognition for Aerospace Requirements Engineering using BERT. In Proceedings of the AIAA SCITECH 2023 Forum, National Harbor, MD, USA, 23–27 January 2023; p. 2583. [Google Scholar]

- Zhang, Y.; Zhang, H. FinBERT–MRC: Financial Named Entity Recognition Using BERT Under the Machine Reading Comprehension Paradigm. Neural Process. Lett. 2023, 55, 7393–7413. [Google Scholar] [CrossRef]

- Lv, X.; Xie, Z.; Xu, D.; Jin, X.; Ma, K.; Tao, L.; Qiu, Q.; Pan, Y. Chinese named entity recognition in the geoscience domain based on bert. Earth Space Sci. 2022, 9, e2021EA002166. [Google Scholar] [CrossRef]

- Akhtyamova, L. Named entity recognition in Spanish biomedical literature: Short review and BERT model. In Proceedings of the 2020 26th Conference of Open Innovations Association (FRUCT), Yaroslavl, Russia, 20–24 April 2020; pp. 1–7. [Google Scholar]

- Kim, Y.M.; Lee, T.H. Korean clinical entity recognition from diagnosis text using BERT. BMC Med. Inform. Decis. Mak. 2020, 20, 242. [Google Scholar] [CrossRef] [PubMed]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Red Hook, NY, USA, 4–9 December 2017; p. 30. [Google Scholar]

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805. [Google Scholar]

- Syed, M.H.; Chung, S.T. MenuNER: Domain-adapted BERT based NER approach for a domain with limited dataset and its application to food menu domain. Appl. Sci. 2021, 11, 6007. [Google Scholar] [CrossRef]

- Yang, R.; Gan, Y.; Zhang, C. Chinese Named Entity Recognition Based on BERT and Lightweight Feature Extraction Model. Information 2022, 13, 515. [Google Scholar] [CrossRef]

- Agrawal, A.; Tripathi, S.; Vardhan, M.; Sihag, V.; Choudhary, G.; Dragoni, N. BERT-based transfer-learning approach for nested named-entity recognition using joint labeling. Appl. Sci. 2022, 12, 976. [Google Scholar] [CrossRef]

- Li, W.; Du, Y.; Li, X.; Chen, X.; Xie, C.; Li, H.; Li, X. UD_BBC: Named entity recognition in social network combined BERT-BiLSTM-CRF with active learning. Eng. Appl. Artif. Intell. 2022, 116, 105460. [Google Scholar] [CrossRef]

- Zhang, Z.; Wu, S.; Jiang, D.W.; Chen, G. BERT-JAM: Maximizing the utilization of BERT for neural machine translation. Neurocomputing 2021, 460, 84–94. [Google Scholar] [CrossRef]

- Wu, X.; Xia, Y.; Zhu, J.; Wu, L.; Xie, S.; Qin, T. A study of BERT for context-aware neural machine translation. Mach. Learn. 2022, 111, 917–935. [Google Scholar] [CrossRef]

- Yan, R.; Li, J.; Su, X.; Wang, X.; Gao, G. Boosting the Transformer with the BERT Supervision in Low-Resource Machine Translation. Appl. Sci. 2022, 12, 7195. [Google Scholar] [CrossRef]

- Zhang, Z.; Han, X.; Liu, Z.; Jiang, X.; Sun, M.; Liu, Q. ERNIE: Enhanced language representation with informative entities. arXiv 2019, arXiv:1905.07129. [Google Scholar]

- Yamada, I.; Asai, A.; Shindo, H.; Takeda, H.; Matsumoto, Y. Luke: Deep contextualized entity representations with entity-aware self-attention. arXiv 2020, arXiv:2010.01057. [Google Scholar]

- Jeong, H.; Kwak, J.; Kim, J.; Jang, J.; Lee, H. A Study on Methods of Automatic Extraction of Korean-Language Modification Relationships for Sentiment analysis. In Proceedings of the 2020 International Conference on Artificial Intelligence in Information and Communication (ICAIIC), Fukuoka, Japan, 19–21 February 2020; pp. 544–546. [Google Scholar]

- Wu, Y.; Schuster, M. Google’s neural machine translation system: Bridging the gap between human and machine translation. arXiv 2016, arXiv:1609.08144. [Google Scholar]

- Graves, A. Generating sequences with recurrent neural networks. arXiv 2013, arXiv:1308.0850. [Google Scholar]

- Wolf, T.; Debut, L.; Sanh, V.; Chaumond, J.; Delangue, C.; Moi, A.; Cistac, P.; Rault, T.; Louf, R.; Funtowicz, M.; et al. Huggingface’s transformers: State-of-the-art natural language processing. arXiv 2019, arXiv:1910.03771. [Google Scholar]

- Huggingface Tokenizers: Fast State-of-the-Art Tokenizers optimized for Research and Production. Available online: https://github.com/huggingface/tokenizers (accessed on 26 December 2023).

- Lee, J. Kcbert: Korean comments bert. In Proceedings of the Annual Conference on Human and Language Technology, Lisboa, Portugal, 3–5 November 2020; pp. 437–440. [Google Scholar]

- Huggingface Model Hub. Available online: https://huggingface.co/models (accessed on 26 December 2023).

- Naver News. Available online: https://news.naver.com/ (accessed on 26 December 2023).

| Modification Relationship | Type of Word Order | Example Phrase or Sentence |

|---|---|---|

| Prepositional modification | (Predicate, Noun) | 아름다운_beautiful 야경_night view |

| Prepositional modification and Prepositional negation | (Negation, Predicate, Noun) | 안_not 예쁜_beautiful 야경_night view |

| Prepositional modification and Postpositional negation | (Predicate, Negation, Noun) | 아름답지_beautiful 않은_not 야경_night view |

| Postpositional modification | (Noun, Predicate) | 야경_night view-이_is 아름답습니다_beautiful |

| Postpositional modification and Prepositional negation | (Noun, Negation, Predicate) | 야경_night view-이_is 안_not 예쁩니다_beautiful |

| Postpositional modification and Postpositional negation | (Noun, Predicate, Negation) | 야경_night view-이_is 아름답지_beautiful 않습니다_not |

| Tag | Description |

|---|---|

| F | Noun word/s representing the name of feature |

| PB | Predicate word/s modifying feature comes Before F |

| PA | Predicate word/s modifying feature comes After F |

| NB | Negation word/s comes Before PB or PA |

| NA | Negation word/s comes After PB or PA |

| EB | Emphasis words comes Before PB or PA |

| EA | Emphasis words comes After PB or PA |

| Model | Precision | Recall | F1-Score |

|---|---|---|---|

| Domain-specific Model | 82.1 | 79.5 | 80.8 |

| koBERT based | |||

| Fine-tuning Model | 79.2 | 81.2 | 80.2 |

| Our proposed Model | 83.1 | 80.7 | 81.9 |

| kcbert-large based | |||

| Fine-tuning Model | 81.2 | 81.8 | 81.5 |

| Our proposed Model | 85.3 | 81.1 | 83.1 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jeong, H. A Transfer Learning-Based Pairwise Information Extraction Framework Using BERT and Korean-Language Modification Relationships. Symmetry 2024, 16, 136. https://doi.org/10.3390/sym16020136

Jeong H. A Transfer Learning-Based Pairwise Information Extraction Framework Using BERT and Korean-Language Modification Relationships. Symmetry. 2024; 16(2):136. https://doi.org/10.3390/sym16020136

Chicago/Turabian StyleJeong, Hanjo. 2024. "A Transfer Learning-Based Pairwise Information Extraction Framework Using BERT and Korean-Language Modification Relationships" Symmetry 16, no. 2: 136. https://doi.org/10.3390/sym16020136

APA StyleJeong, H. (2024). A Transfer Learning-Based Pairwise Information Extraction Framework Using BERT and Korean-Language Modification Relationships. Symmetry, 16(2), 136. https://doi.org/10.3390/sym16020136