An Extensive Investigation into the Use of Machine Learning Tools and Deep Neural Networks for the Recognition of Skin Cancer: Challenges, Future Directions, and a Comprehensive Review

Abstract

1. Introduction

- A detailed survey covers almost all current ML and DL algorithms, with a brief description of each, its strengths and drawbacks, and its application in skin cancer detection;

- A tabular overview of research on DL and ML methods for detecting and diagnosing skin cancer is presented, including the important contributions of each study and its limitations;

- The article also outlines several current open research issues and potential future research paths for advancements in the diagnosis of skin cancer;

- This study thoroughly explains the supervised and unsupervised learning algorithms involved in cancer detection.

2. Research Methodology and ML Algorithms for the Diagnosis of Skin Cancer

2.1. Random Forest

2.2. K Nearest Neighbors (KNN)

2.3. Support Vector Machine (SVM)

2.4. Naïve Bayes Classifier (NBC)

2.5. Linear Regression (LR)

2.6. K-Means Clustering (KMC)

2.7. Ensemble Learning (EL)

2.8. Long Short-Term Memory (LSTM)

2.9. Decision Tree (DT)

2.10. Auto-Regressive Integrated Moving Average (ARIMA)

3. Artificial Neural Network in Skin Lesion Detection

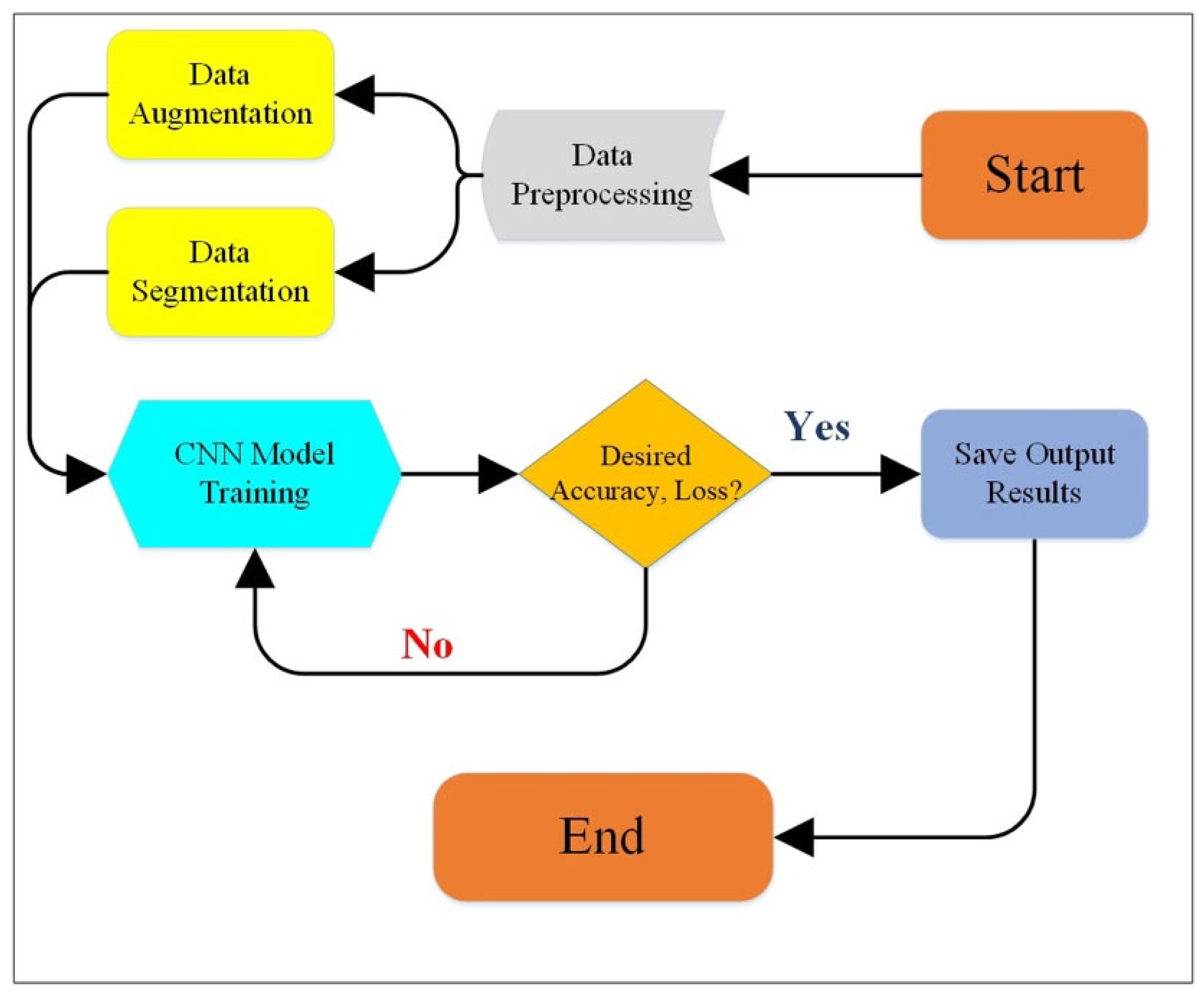

4. CNN in the Detection of Skin Cancer

Validation Metrics in ML

5. Challenges and Future Scope

- Non-public databases and images collected from the World Wide Web are used for research when public datasets are not available. This makes the results harder to replicate, because the underlying dataset cannot be obtained.

- Additionally, most studies have found that lesion size is significant: when a lesion is smaller than 6 mm, melanoma becomes very difficult to diagnose, which considerably reduces diagnostic efficacy.

- Most of the methods concentrate on fundamental deep-learning techniques. Fusion methods, on the other hand, are reported to be more accurate. Despite this, fusion methods are not as frequently documented in the literature.

- Deep learning techniques have been found to classify images properly when 70% of the images are used for training and 30% for testing. Results also indicate that a high training ratio is required to achieve satisfactory results; deep learning techniques perform effectively only when this balance is right. Developing hybrid techniques that perform better with lower training ratios remains a difficult task.

- An annual melanoma diagnosis competition has been organized by the International Skin Imaging Collaboration (ISIC) since 2016, yet one of the limitations of ISIC is the availability of only light-skinned data. For the images to be featured in the databases, they must have dark hair.

- To diagnose skin cancer more accurately and to extract the features of an image, an artificial neural network needs a lot of processing capacity and a strong GPU. Because the available processing power is often limited, it is challenging to develop deep learning algorithms for skin cancer detection.

- The inefficiency of employing neural networks for skin cancer diagnosis is one of the most significant issues. Before the system can effectively analyze and interpret the characteristics of image data, it must go through a rigorous training process that takes a long time and exceptionally powerful hardware.
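The training-ratio point in the list above can be made concrete with a small sketch. The following Python snippet is a minimal illustration, not the method of any surveyed study: it performs a stratified 70/30 split so that a rare class keeps the same share in the training and testing partitions. The function name `stratified_split`, the toy labels, and the seed are assumptions introduced purely for illustration.

```python
import random
from collections import defaultdict


def stratified_split(labels, train_ratio=0.7, seed=0):
    """Return (train_idx, test_idx), preserving per-class proportions."""
    rng = random.Random(seed)

    # Group sample indices by class label.
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    train_idx, test_idx = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        # Number of samples of this class that go to the training set.
        cut = round(len(indices) * train_ratio)
        train_idx.extend(indices[:cut])
        test_idx.extend(indices[cut:])
    return sorted(train_idx), sorted(test_idx)


# Hypothetical toy dataset: 80 benign and 20 melanoma labels.
labels = ["benign"] * 80 + ["melanoma"] * 20
train_idx, test_idx = stratified_split(labels, train_ratio=0.7, seed=42)

print(len(train_idx), len(test_idx))
print(sum(labels[i] == "melanoma" for i in test_idx))
```

Lowering `train_ratio` in this sketch is one simple way to probe how a model behaves with less training data, which is the setting the list above describes as difficult.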

6. Results, Discussion, and Conclusions

| Ref. | Algorithms | Limitations and Novel Contributions | Results |
|---|---|---|---|
| [3] | SVM | CNN models were also implemented in this work, but SVM outperformed all the CNN algorithms, showing the best results in classifying the types of skin cancer. | ACC.: 99%, PREC.: 0.99, RECALL: 0.99, F1: 0.99 |
| [3] | ResNet 50 | CNN models were implemented for the detection and classification of skin cancer using the HAM10000 dataset, with Adam as the optimizer. | ACC.: 83%, PREC.: 0.81, RECALL: 0.83, F1: 0.78 |
| [3] | MobileNet | CNN models were implemented for the detection and classification of skin cancer using the HAM10000 dataset, with Adam as the optimizer. | ACC.: 72%, PREC.: 0.86, RECALL: 0.72, F1: 0.77 |
| [102] | SVM + CNN | DL techniques are used for lesion categorization and segmentation. As previously indicated, variations in skin color can degrade performance. Transfer learning is encouraged because the sample size is small. | ACC.: 92% |
| [106] | ANN | Pre-processing and the smooth bootstrap technique are employed before data augmentation. Features are extracted from the pre-processed image. | ACC.: 85.93%, SPEC.: 85.89%, SENS.: 88.78% |
| [107] | DL + K-Means Clustering | An automated approach that uses preprocessing to reduce noise and improve visual information, then segments skin melanoma at an early stage using Faster RCNN and FKM clustering. Tested on clinical images, the technique helps dermatologists identify the potentially fatal illness early on. | ACC.: 95.40%, SPEC.: 97.10%, SENS.: 90.00% |
| [108] | RCNN | Utilizing RCNN enhances segmentation efficacy by computing deep features. The method is not scalable and is difficult, which results in overhead costs. | ACC.: 94.78%, SPEC.: 94.18%, SENS.: 97.61% |
| [109] | GRU/IOPA | Images of skin lesions from HAM10000 are pre-processed, the lesion is segmented, and features are extracted; skin cancer is then detected with gated recurrent unit (GRU) networks and an improved orca predation algorithm (IOPA). | ACC.: 99%, SPEC.: 97%, SENS.: 95% |
| [110] | Deep Learning model | Lesion classification and segmentation were carried out on 2000 images from the ISIC dataset using a multiscale FCRN deep learning network. | ACC.: 75.11%, SPEC.: 84.44%, SENS.: 90.88% |
| [111] | ResFCN | A new automated technique for segmenting skin lesions. Using a step-by-step, probability-based technique, it integrates complementary segmentation results after identifying the distinct visual characteristics of each category (melanoma versus non-melanoma) through a deep, group-specific learning approach. Because the process is non-scalable and difficult, it results in additional costs. | ACC.: 94.29%, SPEC.: 93.05%, SENS.: 93.77% |
| [112] | SVM + ANN | The use of SVM and several ANN structures for accuracy, performance, and image categorization of human skin lesions is discussed. When multiple algorithms are compared, SVM (for example, with a Gaussian kernel) performs better than the others. | ACC.: 96.78%, SPEC.: 89.29%, SENS.: 95.44% |

| Ref. | Dataset | Algorithm | Specificity (%) | Accuracy (%) | Sensitivity (%) |
|---|---|---|---|---|---|
| [107] | ISBI 2016 | Fuzzy c-means + Deep RCNN | 95.10 | 94.31 | 94.04 |
| [115] | ISIC | Novel Regularizer + CNN | 94.26 | 97.50 | 93.59 |
| [116] | ISBI-2018 | InceptionNet V3 + ResNet Ensemble | 86.31 | 88.97 | 79.58 |
| [117] | Dermis, DermQuest | CNN Optimized | 99.37 | 92.95 | 93.87 |
| [117] | ISBI-2017 | CNN + LDA | 52.67 | 85.15 | 97.38 |
| [118] | PH2 | DCNN | 92.78 | 94.91 | 93.92 |
| [119] | MED Node | DNN + Transfer Learning | 97.20 | 97.37 | 97.52 |
| [120] | ISBI-2017 | DenseNet + IcNR | 93 | 93.43 | NA |
| [121] | ISBI-2016 | VGG16 + GoogleNet | 70.03 | 88.92 | 93.75 |
| [122] | PH2 | VGG16 + AlexNet | 99.77 | 97.51 | 96.90 |

| Ref. | Dataset | Algorithm | Specificity (%) | Accuracy (%) | Sensitivity (%) |
|---|---|---|---|---|---|
| [28] | ISIC 2019 | ResNet 50 + Sand Cat Swarm Optimization | 93.47 | 92.03 | 92.56 |
| [38] | ISIC 2018 | Ensemble Learning of ML and DL | 92.3 | 93 | 94 |
| [123] | HAM10000 | Random Forest DNN | 97.59 | 96.80 | 66.11 |
| [124] | ISIC 2020 | Contextual image feature fusion network (CIFF-Net) | 96.8 | 98.3 | 40.1 |
| [125] | ISIC 2020 | Teacher Student | 96.21 | 95.2 | 31.03 |
| [126] | ISIC 2018 | Lightweight U Architecture (Lea Net) | 96.2 | 93.5 | 89.9 |
| [127] | ISIC 2017 | ResU-Net | 94.49 | 92.38 | 87.31 |
| [128] | HAM10000 | Deep Convolutional Ensemble Net DCEN | 84.79 | 99.53 | 98.58 |
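The tables above report accuracy, specificity, sensitivity, precision, recall, and F1. As a reminder of how these relate, the sketch below computes them from binary confusion-matrix counts using the standard definitions. The function name `binary_metrics` and the counts in the example are hypothetical and are not taken from any cited study; they are chosen only to show how high accuracy and low sensitivity can coexist arithmetically when the positive class is rare, a combination that also appears in some rows above.

```python
def safe_div(numerator, denominator):
    """Divide, returning 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def binary_metrics(tp, fp, tn, fn):
    """Standard binary classification metrics from confusion-matrix counts."""
    sensitivity = safe_div(tp, tp + fn)  # also called recall
    specificity = safe_div(tn, tn + fp)
    precision = safe_div(tp, tp + fp)
    return {
        "accuracy": safe_div(tp + tn, tp + fp + tn + fn),
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": safe_div(2 * precision * sensitivity, precision + sensitivity),
    }


# Hypothetical counts: 100 malignant and 900 benign test images.
metrics = binary_metrics(tp=40, fp=20, tn=880, fn=60)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

With these illustrative counts, accuracy is 92% while sensitivity is only 40%, because the many correctly classified benign images dominate the accuracy figure. This is why sensitivity and specificity are worth reading alongside accuracy when comparing rows.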

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Shah, A.; Shah, M.; Pandya, A.; Sushra, R.; Sushra, R.; Mehta, M.; Patel, K.; Patel, K. A Comprehensive Study on Skin Cancer Detection using Artificial Neural Network (ANN) and Convolutional Neural Network (CNN). Clin. eHealth 2023, 6, 76–84.

- Narmatha, P.; Gupta, S.; Lakshmi, T.V.; Manikavelan, D. Skin cancer detection from dermoscopic images using Deep Siamese domain adaptation convolutional Neural Network optimized with Honey Badger Algorithm. Biomed. Signal Process. Control 2023, 86, 105264.

- Mampitiya, L.I.; Rathnayake, N.; De Silva, S. Efficient and low-cost skin cancer detection system implementation with a comparative study between traditional and CNN-based models. J. Comput. Cogn. Eng. 2023, 2, 226–235.

- Murugan, A.; Nair, S.A.H.; Preethi, A.A.P.; Kumar, K.S. Diagnosis of skin cancer using machine learning techniques. Microprocess. Microsyst. 2021, 81, 103727.

- Tabrizchi, H.; Parvizpour, S.; Razmara, J. An improved VGG model for skin cancer detection. Neural Process. Lett. 2023, 55, 3715–3732.

- Ali, M.S.; Miah, M.S.; Haque, J.; Rahman, M.M.; Islam, M.K. An enhanced technique of skin cancer classification using deep convolutional neural network with transfer learning models. Mach. Learn. Appl. 2021, 5, 100036.

- Ahmad, I.; Ilyas, H.; Hussain, S.I.; Raja, M.A.Z. Evolutionary Techniques for the Solution of Bio-Heat Equation Arising in Human Dermal Region Model. Arab. J. Sci. Eng. 2023, 49, 3109–3134.

- Tahir, M.; Naeem, A.; Malik, H.; Tanveer, J.; Naqvi, R.A.; Lee, S.W. DSCC_Net: Multi-Classification Deep Learning Models for Diagnosing of Skin Cancer Using Dermoscopic Images. Cancers 2023, 15, 2179.

- Arshed, M.A.; Mumtaz, S.; Ibrahim, M.; Ahmed, S.; Tahir, M.; Shafi, M. Multi-Class Skin Cancer Classification Using Vision Transformer Networks and Convolutional Neural Network-Based Pre-Trained Models. Information 2023, 14, 415.

- Veeramani, N.; Jayaraman, P.; Krishankumar, R.; Ravichandran, K.S.; Gandomi, A.H. DDCNN-F: Double decker convolutional neural network 'F' feature fusion as a medical image classification framework. Sci. Rep. 2024, 14, 676.

- Shetty, B.; Fernandes, R.; Rodrigues, A.P.; Chengoden, R.; Bhattacharya, S.; Lakshmanna, K. Skin lesion classification of dermoscopic images using machine learning and convolutional neural network. Sci. Rep. 2022, 12, 18134.

- Giansanti, D. Advancing Dermatological Care: A Comprehensive Narrative Review of Tele-Dermatology and mHealth for Bridging Gaps and Expanding Opportunities beyond the COVID-19 Pandemic. Healthcare 2023, 11, 1911.

- Lai, W.; Kuang, M.; Wang, X.; Ghafariasl, P.; Hosein Sabzalian, M.; Lee, S. Skin cancer diagnosis (SCD) using Artificial Neural Network (ANN) and Improved Gray Wolf Optimization (IGWO). Nat. Sci. Rep. 2022, 13, 19377.

- Nassir, J.; Alasabi, M.; Qaisar, S.M.; Khan, M. Epileptic Seizure Detection Using the EEG Signal Empirical Mode Decomposition and Machine Learning. In Proceedings of the 2023 International Conference on Smart Computing and Application (ICSCA), Hail, Saudi Arabia, 5–6 February 2023; pp. 1–6.

- Khan, S.I.; Qaisar, S.M.; López, A.; Nisar, H.; Ferrero, F. EEG Signal based Schizophrenia Recognition by using VMD Rose Spiral Curve Butterfly Optimization and Machine Learning. In Proceedings of the 2023 IEEE International Instrumentation and Measurement Technology Conference (I2MTC), Kuala Lumpur, Malaysia, 22–25 May 2023; pp. 1–6.

- Pietkiewicz, P.; Giedziun, P.; Idziak, J.; Todorovska, V.; Lewandowicz, M.; Lallas, A. Diagnostic accuracy of hyperpigmented microcircles in dermatoscopy of non-facial non-acral melanomas: A Pilot Retrospective Study using a Public Image Database. Dermatology 2023, 239, 976–987.

- Khristoforova, Y.; Bratchenko, I.; Bratchenko, L.; Moryatov, A.; Kozlov, S.; Kaganov, O.; Zakharov, V. Combination of Optical Biopsy with Patient Data for Improvement of Skin Tumor Identification. Diagnostics 2022, 12, 2503.

- Greenwood, J.D.; Merry, S.P.; Boswell, C.L. Skin biopsy techniques. Prim. Care Clin. Off. Pract. 2022, 49, 2503.

- Acar, D.D.; Witkowski, W.; Wejda, M.; Wei, R.; Desmet, T.; Schepens, B.; De Cae, S.; Sedeyn, K.; Eeckhaut, H.; Fijalkowska, D.; et al. Integrating artificial intelligence-based epitope prediction in a SARS-CoV-2 antibody discovery pipeline: Caution is warranted. eBioMedicine 2024, 100, 104960.

- Bhatt, H.; Shah, V.; Shah, K.; Shah, R.; Shah, M. State-of-the-art machine learning techniques for melanoma skin cancer detection and classification: A comprehensive review. Intell. Med. 2023, 3, 180–190.

- Jones, O.T.; Matin, R.N.; van der Schaar, M.; Bhayankaram, K.P.; Ranmuthu, C.K.I.; Islam, M.S.; Behiyat, D.; Boscott, R.; Calanzani, N.; Emery, J.; et al. Artificial intelligence and machine learning algorithms for early detection of skin cancer in community and primary care settings: A systematic review. Lancet Digit. Health 2022, 4, e466–e476.

- Imran, A.; Nasir, A.; Bilal, M.; Sun, G.; Alzahrani, A.; Almuhaimeed, A. Skin cancer detection using combined decision of deep learners. IEEE Access 2022, 10, 118198–118212.

- Murugan, A.; Nair, S.A.H.; Kumar, K.S. Detection of skin cancer using SVM, random forest and kNN classifiers. J. Med. Syst. 2019, 43, 269.

- Kalpana, B.; Reshmy, A.K.; Pandi, S.S.; Dhanasekaran, S. OESV-KRF: Optimal ensemble support vector kernel random forest based early detection and classification of skin diseases. Biomed. Signal Process. Control 2023, 85, 104779.

- Juan, C.K.; Su, Y.H.; Wu, C.Y.; Yang, C.S.; Hsu, C.H.; Hung, C.L.; Chen, Y.J. Deep convolutional neural network with fusion strategy for skin cancer recognition: Model development and validation. Sci. Rep. 2023, 13, 17087.

- Boadh, R.; Yadav, A.; Kumar, A.; Rajoria, Y.K. Diagnosis of Skin Cancer by Using Fuzzy-Ann Expert System with Unification of Improved Gini Index Random Forest-Based Feature. J. Pharm. Negat. Results 2023, 14, 1445–1451.

- Alshawi, S.A.; Musawi, G.F.K.A. Skin cancer image detection and classification by CNN based ensemble learning. Int. J. Adv. Comput. Sci. Appl. 2023, 14, 710–717.

- Akilandasowmya, G.; Nirmaladevi, G.; Suganthi, S.U.; Aishwariya, A. Skin cancer diagnosis: Leveraging deep hidden features and ensemble classifiers for early detection and classification. Biomed. Signal Process. Control 2023, 88, 105306.

- Zhu, A.Q.; Wang, Q.; Shi, Y.L.; Ren, W.W.; Cao, X.; Ren, T.T.; Wang, J.; Zhang, Y.Q.; Sun, Y.K.; Chen, X.W.; et al. A deep learning fusion network trained with clinical and high-frequency ultrasound images in the multi-classification of skin diseases in comparison with dermatologists: A prospective and multicenter study. eClinicalMedicine 2024, 67, 102391.

- Kumar, T.K.; Himanshu, I.N. Artificial Intelligence Based Real-Time Skin Cancer Detection. In Proceedings of the 2023 15th International Conference on Computer and Automation Engineering (ICCAE), Sydney, Australia, 3–5 March 2023; pp. 215–219.

- Balaji, P.; Hung, B.T.; Chakrabarti, P.; Chakrabarti, T.; Elngar, A.A.; Aluvalu, R. A novel artificial intelligence-based predictive analytics technique to detect skin cancer. PeerJ Comput. Sci. 2023, 9, e1387.

- Singh, S.K.; Abolghasemi, V.; Anisi, M.H. Fuzzy Logic with Deep Learning for Detection of Skin Cancer. Appl. Sci. 2023, 13, 8927.

- Melarkode, N.; Srinivasan, K.; Qaisar, S.M.; Plawiak, P. AI-Powered Diagnosis of Skin Cancer: A Contemporary Review, Open Challenges and Future Research Directions. Cancers 2023, 15, 1183.

- Nagaraj, P.; Saijagadeeshkumar, V.; Kumar, G.P.; Yerriswamyreddy, K.; Krishna, K.J. Skin Cancer Detection and Control Techniques Using Hybrid Deep Learning Techniques. In Proceedings of the 2023 3rd International Conference on Pervasive Computing and Social Networking (ICPCSN), Salem, India, 19–20 June 2023; pp. 442–446.

- Alhasani, A.T.; Alkattan, H.; Subhi, A.A.; El-Kenawy, E.S.M.; Eid, M.M. A comparative analysis of methods for detecting and diagnosing breast cancer based on data mining. Methods 2023, 7, 8–17.

- Chadaga, K.; Prabhu, S.; Sampathila, N.; Nireshwalya, S.; Katta, S.S.; Tan, R.S.; Acharya, U.R. Application of artificial intelligence techniques for monkeypox: A systematic review. Diagnostics 2023, 13, 824.

- Keerthana, D.; Venugopal, V.; Nath, M.K.; Mishra, M. Hybrid convolutional neural networks with SVM classifier for classification of skin cancer. Biomed. Eng. Adv. 2023, 5, 100069.

- Tembhurne, J.V.; Hebbar, N.; Patil, H.Y.; Diwan, T. Skin cancer detection using ensemble of machine learning and deep learning techniques. Multimed. Tools Appl. 2023, 82, 27501–27524.

- Ul Huda, N.; Amin, R.; Gillani, S.I.; Hussain, M.; Ahmed, A.; Aldabbas, H. Skin Cancer Malignancy Classification and Segmentation Using Machine Learning Algorithms. JOM 2023, 75, 3121–3135.

- Ganesh Babu, T.R. An efficient skin cancer diagnostic system using Bendlet Transform and support vector machine. An. Acad. Bras. Ciênc. 2020, 92, e20190554.

- Melbin, K.; Raj, Y.J.V. Integration of modified ABCD features and support vector machine for skin lesion types classification. Multimed. Tools Appl. 2021, 80, 8909–8929.

- Alsaeed, A.A.D. On the development of a skin cancer computer aided diagnosis system using support vector machine. Biosci. Biotechnol. Res. Commun. 2019, 12, 297–308.

- Alwan, O.F. Skin cancer images classification using naïve bayes. Emergent J. Educ. Discov. Lifelong Learn. 2022, 3, 19–29.

- Balaji, V.R.; Suganthi, S.T.; Rajadevi, R.; Kumar, V.K.; Balaji, B.S.; Pandiyan, S. Skin disease detection and segmentation using dynamic graph cut algorithm and classification through Naive Bayes classifier. Measurement 2020, 163, 107922.

- Mobiny, A.; Singh, A.; Van Nguyen, H. Risk-Aware Machine Learning Classifier for Skin Lesion Diagnosis. J. Clin. Med. 2019, 8, 1241.

- Sutradhar, R.; Barbera, L. Comparing an artificial neural network to logistic regression for predicting ED visit risk among patients with cancer: A population-based cohort study. J. Pain Symptom Manag. 2020, 60, 1–9.

- Browning, A.P.; Haridas, P.; Simpson, M.J. A Bayesian sequential learning framework to parameterise continuum models of melanoma invasion into human skin. Bull. Math. Biol. 2019, 81, 676–698.

- Xu, Z.; Sheykhahmad, F.R.; Ghadimi, N.; Razmjooy, N. Computer-aided diagnosis of skin cancer based on soft computing techniques. Open Med. 2020, 15, 860–871.

- Razmjooy, N.; Ashourian, M.; Karimifard, M.; Estrela, V.V.; Loschi, H.J.; Do Nascimento, D.; França, R.P.; Vishnevski, M. Computer-aided diagnosis of skin cancer: A review. Curr. Med. Imaging 2020, 16, 781–793.

- Alquran, H.; Qasmieh, I.A.; Alqudah, A.M.; Alhammouri, S.; Alawneh, E.; Abughazaleh, A.; Hasayen, F. The melanoma skin cancer detection and classification using support vector machine. In Proceedings of the 2017 IEEE Jordan Conference on Applied Electrical Engineering and Computing Technologies (AEECT), Amman, Jordan, 11–13 October 2017; pp. 1–5.

- Hosny, K.M.; Kassem, M.A.; Foaud, M.M. Skin cancer classification using deep learning and transfer learning. In Proceedings of the 2018 9th Cairo International Biomedical Engineering Conference (CIBEC), Cairo, Egypt, 20–22 December 2018; pp. 90–93.

- Monika, M.K.; Vignesh, N.A.; Kumari, C.U.; Kumar, M.N.V.S.S.; Lydia, E.L. Skin cancer detection and classification using machine learning. Mater. Today Proc. 2020, 33, 4266–4270.

- Kumar, M.; Alshehri, M.; AlGhamdi, R.; Sharma, P.; Deep, V. A DE-ANN inspired skin cancer detection approach using fuzzy c-means clustering. Mob. Netw. Appl. 2020, 25, 1319–1329.

- Tang, G.; Xie, Y.; Li, K.; Liang, R.; Zhao, L. Multimodal emotion recognition from facial expression and speech based on feature fusion. Multimed. Tools Appl. 2023, 82, 16359–16373.

- Zafar, M.; Sharif, M.I.; Sharif, M.I.; Kadry, S.; Bukhari, S.A.C.; Rauf, H.T. Skin lesion analysis and cancer detection based on machine/deep learning techniques: A comprehensive survey. Life 2023, 13, 146.

- Chilamkurthy, S.; Ghosh, R.; Tanamala, S.; Biviji, M.; Campeau, N.G.; Venugopal, V.K.; Mahajan, V.; Rao, P.; Warier, P. Deep learning algorithms for detection of critical findings in head CT scans: A retrospective study. Lancet 2018, 392, 2388–2396.

- Manna, A.; Kundu, R.; Kaplun, D.; Sinitca, A.; Sarkar, R. A fuzzy rank-based ensemble of CNN models for classification of cervical cytology. Sci. Rep. 2021, 11, 14538.

- Liu, Y.; Zhang, L.; Hao, Z.; Yang, Z.; Wang, S.; Zhou, X.; Chang, Q. An xception model based on residual attention mechanism for the classification of benign and malignant gastric ulcers. Sci. Rep. 2022, 12, 15365.

- Bozkurt, A.; Gale, T.; Kose, K.; Alessi-Fox, C.; Brooks, D.H.; Rajadhyaksha, M.; Dy, J. Delineation of skin strata in reflectance confocal microscopy images with recurrent convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 25–33.

- Chen, W.; Feng, J.; Lu, J.; Zhou, J. Endo3D: Online workflow analysis for endoscopic surgeries based on 3D CNN and LSTM. In OR 2.0 Context-Aware Operating Theaters, Computer Assisted Robotic Endoscopy, Clinical Image-Based Procedures, and Skin Image Analysis: First International Workshop, OR 2.0 2018, 5th International Workshop, CARE 2018, 7th International Workshop, CLIP 2018, Third International Workshop, ISIC 2018, Held in Conjunction with MICCAI 2018, Granada, Spain, 16 September and 20 September 2018; Proceedings 5; Springer International Publishing: Berlin/Heidelberg, Germany, 2018; pp. 97–107.

- Attia, M.; Hossny, M.; Nahavandi, S.; Yazdabadi, A. Skin melanoma segmentation using recurrent and convolutional neural networks. In Proceedings of the 2017 IEEE 14th International Symposium on Biomedical Imaging (ISBI 2017), Melbourne, Australia, 18–21 April 2017; pp. 292–296.

- Alom, M.Z. Improved Deep Convolutional Neural Networks (DCNN) Approaches for Computer Vision and Bio-Medical Imaging. Ph.D. Thesis, University of Dayton, Dayton, OH, USA, 2018.

- Miotto, R.; Wang, F.; Wang, S.; Jiang, X.; Dudley, J.T. Deep learning for healthcare: Review, opportunities and challenges. Brief. Bioinform. 2018, 19, 1236–1246.

- Elashiri, M.A.; Rajesh, A.; Pandey, S.N.; Shukla, S.K.; Urooj, S. Ensemble of weighted deep concatenated features for the skin disease classification model using modified long short term memory. Biomed. Signal Process. Control 2022, 76, 103729.

- Victor, A.; Ghalib, M.R. Automatic Detection and Classification of Skin Cancer. Int. J. Intell. Eng. Syst. 2017, 10, 444–451.

- Pham, T.C.; Tran, G.S.; Nghiem, T.P.; Doucet, A.; Luong, C.M.; Hoang, V.D. A comparative study for classification of skin cancer. In Proceedings of the 2019 International Conference on System Science and Engineering (ICSSE), Dong Hoi, Vietnam, 20–21 July 2019; pp. 267–272.

- Saba, T.; Khan, M.A.; Rehman, A.; Marie-Sainte, S.L. Region extraction and classification of skin cancer: A heterogeneous framework of deep CNN features fusion and reduction. J. Med. Syst. 2019, 43, 289.

- Ghiasi, M.M.; Zendehboudi, S. Application of decision tree-based ensemble learning in the classification of breast cancer. Comput. Biol. Med. 2021, 128, 104089.

- Tanaka, T.; Voigt, M.D. Decision tree analysis to stratify risk of de novo non-melanoma skin cancer following liver transplantation. J. Cancer Res. Clin. Oncol. 2018, 144, 607–615.

- Sun, J.; Huang, Y. Computer aided intelligent medical system and nursing of breast surgery infection. Microprocess. Microsyst. 2021, 81, 103769.

- Quinn, P.L.; Oliver, J.B.; Mahmoud, O.M.; Chokshi, R.J. Cost-effectiveness of sentinel lymph node biopsy for head and neck cutaneous squamous cell carcinoma. J. Surg. Res. 2019, 241, 15–23.

- Chin, C.K.; Binti Awang Mat, D.A.; Saleh, A.Y. Skin Cancer Classification using Convolutional Neural Network with Autoregressive Integrated Moving Average. In Proceedings of the 2021 4th International Conference on Robot Systems and Applications, Chengdu, China, 9–11 April 2021; pp. 18–22.

- Kumar, N.; Kumari, P.; Ranjan, P.; Vaish, A. ARIMA model based breast cancer detection and classification through image processing. In Proceedings of the 2014 Students Conference on Engineering and Systems, Allahabad, India, 29 May 2014; pp. 1–5.

- Verma, N.; Kaur, H. Traffic Analysis and Prediction System by the Use of Modified Arima Model. Int. J. Adv. Res. Comput. Sci. 2017, 8, 58–63.

- Goyal, M.; Knackstedt, T.; Yan, S.; Hassanpour, S. Artificial intelligence-based image classification methods for diagnosis of skin cancer: Challenges and opportunities. Comput. Biol. Med. 2020, 127, 104065.

- Li, H.; Pan, Y.; Zhao, J.; Zhang, L. Skin disease diagnosis with deep learning: A review. Neurocomputing 2021, 464, 364–393.

- Alphonse, A.S.; Starvin, M.S. A novel and efficient approach for the classification of skin melanoma. J. Ambient Intell. Humaniz. Comput. 2021, 12, 10435–10459.

- Kabari, L.G.; Bakpo, F.S. Diagnosing skin diseases using an artificial neural network. In Proceedings of the 2009 2nd International Conference on Adaptive Science & Technology (ICAST), Accra, Ghana, 14–16 December 2009; pp. 187–191.

- Chakraborty, S.; Mali, K.; Chatterjee, S.; Banerjee, S.; Mazumdar, K.G.; Debnath, M.; Basu, P.; Bose, S.; Roy, K. Detection of skin disease using metaheuristic supported artificial neural networks. In Proceedings of the 2017 8th Annual Industrial Automation and Electromechanical Engineering Conference (IEMECON), Bangkok, Thailand, 16–18 August 2017; pp. 224–229.

- Hameed, N.; Shabut, A.M.; Hossain, M.A. Multi-class skin diseases classification using deep convolutional neural network and support vector machine. In Proceedings of the 2018 12th International Conference on Software, Knowledge, Information Management & Applications (SKIMA), Phnom Penh, Cambodia, 3–5 December 2018; pp. 1–7.

- Maduranga, M.W.P.; Nandasena, D. Mobile-based skin disease diagnosis system using convolutional neural networks (CNN). Int. J. Image Graph. Signal Process. 2022, 3, 47–57.

- Nawaz, M.; Mehmood, Z.; Nazir, T.; Naqvi, R.A.; Rehman, A.; Iqbal, M.; Saba, T. Skin cancer detection from dermoscopic images using deep learning and fuzzy k-means clustering. Microsc. Res. Tech. 2022, 85, 339–351.

- Alani, S.; Zakaria, Z.; Ahmad, A. Miniaturized UWB elliptical patch antenna for skin cancer diagnosis imaging. Int. J. Electr. Comput. Eng. 2020, 10, 1422–1429.

- Aladhadh, S.; Alsanea, M.; Aloraini, M.; Khan, T.; Habib, S.; Islam, M. An effective skin cancer classification mechanism via medical vision transformer. Sensors 2022, 22, 4008.

- Vijayakumar, G.; Manghat, S.; Vijayakumar, R.; Simon, L.; Scaria, L.M.; Vijayakumar, A.; Sreehari, G.K.; Kutty, V.R.; Rachana, A.; Jaleel, A. Incidence of type 2 diabetes mellitus and prediabetes in Kerala, India: Results from a 10-year prospective cohort. BMC Public Health 2019, 19, 140.

- Zhang, D.; Li, A.; Wu, W.; Yu, L.; Kang, X.; Huo, X. CR-Conformer: A fusion network for clinical skin lesion classification. Med. Biol. Eng. Comput. 2024, 62, 85–94.

- Hao, J.; Tan, C.; Yang, Q.; Cheng, J.; Ji, G. Leveraging Data Correlations for Skin Lesion Classification. In Proceedings of the Chinese Conference on Pattern Recognition and Computer Vision (PRCV), Shenzhen, China, 14–17 October 2023; pp. 61–72.

- Li, Z.; Zhao, C.; Han, Z.; Hong, C. TUNet and domain adaptation based learning for joint optic disc and cup segmentation. Comput. Biol. Med. 2023, 163, 107209.

- Hasan, M.; Barman, S.D.; Islam, S.; Reza, A.W. Skin cancer detection using convolutional neural network. In Proceedings of the 2019 5th International Conference on Computing and Artificial Intelligence, Bali, Indonesia, 17–20 April 2019; pp. 254–258.

- Yang, Y. Medical multimedia big data analysis modeling based on DBN algorithm. IEEE Access 2020, 8, 16350–16361.

- Wang, S.; Hamian, M. Skin cancer detection based on extreme learning machine and a developed version of thermal exchange optimization. Comput. Intell. Neurosci. 2021, 2021, 9528664.

- Naeem, A.; Farooq, M.S.; Khelifi, A.; Abid, A. Malignant melanoma classification using deep learning: Datasets, performance measurements, challenges and opportunities. IEEE Access 2020, 8, 110575–110597.

- Haggenmüller, S.; Maron, R.C.; Hekler, A.; Utikal, J.S.; Barata, C.; Barnhill, R.L.; Beltraminelli, H.; Berking, C.; Betz-Stablein, B.; Blum, A.; et al. Skin cancer classification via convolutional neural networks: Systematic review of studies involving human experts. Eur. J. Cancer 2021, 156, 202–216.

- Mazhar, T.; Haq, I.; Ditta, A.; Mohsan, S.A.H.; Rehman, F.; Zafar, I.; Gansau, J.A.; Goh, L.P.W. The role of machine learning and deep learning approaches for the detection of skin cancer. Healthcare 2023, 11, 415.

- Chaturvedi, S.S.; Tembhurne, J.V.; Diwan, T. A multi-class skin Cancer classification using deep convolutional neural networks. Multimed. Tools Appl. 2020, 79, 28477–28498.

- Subramanian, R.R.; Achuth, D.; Kumar, P.S.; Kumar Reddy, K.N.; Amara, S.; Chowdary, A.S. Skin cancer classification using Convolutional neural networks. In Proceedings of the 2021 11th International Conference on Cloud Computing, Data Science & Engineering (Confluence), Noida, India, 28–29 January 2021; pp. 13–19.

- Zhang, N.; Cai, Y.X.; Wang, Y.Y.; Tian, Y.T.; Wang, X.L.; Badami, B. Skin cancer diagnosis based on optimized convolutional neural network. Artif. Intell. Med. 2020, 102, 101756.

- Fu’adah, Y.N.; Pratiwi, N.C.; Pramudito, M.A.; Ibrahim, N. Convolutional neural network (CNN) for automatic skin cancer classification system. In Proceedings of the IOP Conference Series: Materials Science and Engineering, Chennai, India, 16–17 September 2020; Volume 982, p. 012005.

- Waweru, N. Business ethics disclosure and corporate governance in Sub-Saharan Africa (SSA). Int. J. Account. Inf. Manag. 2020, 28, 363–387.

- Çakmak, M.; Tenekecı, M.E. Melanoma detection from dermoscopy images using Nasnet Mobile with Transfer Learning. In Proceedings of the 2021 29th Signal Processing and Communications Applications Conference (SIU), Istanbul, Turkey, 9–11 June 2021; pp. 1–4.

- Fujisawa, Y.; Funakoshi, T.; Nakamura, Y.; Ishii, M.; Asai, J.; Shimauchi, T.; Fujii, K.; Fujimoto, M.; Katoh, N.; Ihn, H. Nation-wide survey of advanced non-melanoma skin cancers treated at dermatology departments in Japan. J. Dermatol. Sci. 2018, 92, 230–236.

- Yu, X.; Zheng, H.; Chan, M.T.; Wu, W.K. Immune consequences induced by photodynamic therapy in non-melanoma skin cancers: A review. Environ. Sci. Pollut. Res. 2018, 25, 20569–20574.

- Haenssle, H.A.; Fink, C.; Schneiderbauer, R.; Toberer, F.; Buhl, T.; Blum, A.; Kalloo, A.; Hassen, A.B.H.; Thomas, L.; Enk, A.; et al. Man against machine: Diagnostic performance of a deep learning convolutional neural network for dermoscopic melanoma recognition in comparison to 58 dermatologists. Ann. Oncol. 2018, 29, 1836–1842.

- Pengpid, S.; Peltzer, K. Sun protection use behaviour among university students from 25 low, middle income and emerging economy countries. Asian Pac. J. Cancer Prev. 2015, 16, 1385–1389.

- Ragab, M.; Choudhry, H.; Al-Rabia, M.W.; Binyamin, S.S.; Aldarmahi, A.A.; Mansour, R.F. Early and accurate detection of melanoma skin cancer using hybrid level set approach. Front. Physiol. 2022, 13, 2536.

- Qureshi, A.S.; Roos, T. Transfer learning with ensembles of deep neural networks for skin cancer detection in imbalanced data sets. Neural Process. Lett. 2023, 55, 4461–4479. [Google Scholar] [CrossRef]

- Hurtado, J.; Reales, F. A machine learning approach for the recognition of melanoma skin cancer on macroscopic images. TELKOMNIKA 2021, 19, 1357–1368. [Google Scholar] [CrossRef]

- Nida, N.; Irtaza, A.; Javed, A.; Yousaf, M.H.; Mahmood, M.T. Melanoma lesion detection and segmentation using deep region based convolutional neural network and fuzzy C-means clustering. Int. J. Med. Inform. 2019, 124, 37–48. [Google Scholar] [CrossRef] [PubMed]

- Zhang, L.; Zhang, J.; Gao, W.; Bai, F.; Li, N.; Ghadimi, N. A deep learning outline aimed at prompt skin cancer detection utilizing gated recurrent unit networks and improved orca predation algorithm. Biomed. Signal Process. Control 2024, 90, 105858. [Google Scholar] [CrossRef]

- Li, Y.; Shen, L. Skin lesion analysis towards melanoma detection using deep learning network. Sensors 2018, 18, 556. [Google Scholar] [CrossRef]

- Bi, L.; Kim, J.; Ahn, E.; Kumar, A.; Feng, D.; Fulham, M. Step-wise integration of deep class-specific learning for dermoscopic image segmentation. Pattern Recognit. 2019, 85, 78–89. [Google Scholar] [CrossRef]

- Nazi, Z.A.; Abir, T.A. Automatic skin lesion segmentation and melanoma detection: Transfer learning approach with U-Net and DCNN-SVM. In Proceedings of the International Joint Conference on Computational Intelligence: IJCCI 2018, Dhaka, Bangladesh, 14–15 December 2018; pp. 371–381. [Google Scholar]

- Sivaraj, S.; Malmathanraj, R.; Palanisamy, P. Detecting anomalous growth of skin lesion using threshold-based segmentation algorithm and Fuzzy K-Nearest Neighbor classifier. J. Cancer Res. Ther. 2020, 16, 40–52. [Google Scholar] [CrossRef] [PubMed]

- Rahman, Z.; Hossain, M.S.; Islam, M.R.; Hasan, M.M.; Hridhee, R.A. An approach for multiclass skin lesion classification based on ensemble learning. Inform. Med. Unlocked 2021, 25, 100659. [Google Scholar] [CrossRef]

- Albahar, M.A. Skin lesion classification using convolutional neural network with novel regularizer. IEEE Access 2019, 7, 38306–38313. [Google Scholar] [CrossRef]

- Rodrigues, D.D.A.; Ivo, R.F.; Satapathy, S.C.; Wang, S.; Hemanth, J.; Reboucas Filho, P.P. A new approach for classification skin lesion based on transfer learning, deep learning, and IoT system. Pattern Recognit. Lett. 2020, 136, 8–15. [Google Scholar] [CrossRef]

- Hosny, K.M.; Kassem, M.A.; Foaud, M.M. Classification of skin lesions using transfer learning and augmentation with Alex-net. PLoS ONE 2019, 14, e0217293. [Google Scholar] [CrossRef] [PubMed]

- Adegun, A.A.; Viriri, S. Deep learning-based system for automatic melanoma detection. IEEE Access 2019, 8, 7160–7172. [Google Scholar] [CrossRef]

- Majtner, T.; Yildirim-Yayilgan, S.; Hardeberg, J.Y. Optimised deep learning features for improved melanoma detection. Multimed. Tools Appl. 2019, 78, 11883–11903. [Google Scholar] [CrossRef]

- Khan, M.A.; Sharif, M.; Akram, T.; Bukhari, S.A.C.; Nayak, R.S. Developed Newton-Raphson based deep features selection framework for skin lesion recognition. Pattern Recognit. Lett. 2020, 129, 293–303. [Google Scholar] [CrossRef]

- Jayapriya, K.; Jacob, I.J. Hybrid fully convolutional networks-based skin lesion segmentation and melanoma detection using deep feature. Int. J. Imaging Syst. Technol. 2020, 30, 348–357. [Google Scholar] [CrossRef]

- Ahmed, S.G.; Zeng, F.; Alrifaey, M.; Ahmadipour, M. Skin Cancer Classification Utilizing a Hybrid Model of Machine Learning Models Trained on Dermoscopic Images. In Proceedings of the 2023 3rd International Conference on Emerging Smart Technologies and Applications (eSmarTA), Taiz, Yemen, 10–11 October 2023; pp. 1–7. [Google Scholar]

- Hamida, S.; Lamrani, D.; El Gannour, O.; Saleh, S.; Cherradi, B. Toward enhanced skin disease classification using a hybrid RF-DNN system leveraging data balancing and augmentation techniques. Bull. Electr. Eng. Inform. 2024, 13, 538–547. [Google Scholar] [CrossRef]

- Rahman, M.A.; Paul, B.; Mahmud, T.; Fattah, S.A. CIFF-Net: Contextual image feature fusion for Melanoma diagnosis. Biomed. Signal Process. Control 2024, 88, 105673. [Google Scholar] [CrossRef]

- Adepu, A.K.; Sahayam, S.; Jayaraman, U.; Arramraju, R. Melanoma classification from dermatoscopy images using knowledge distillation for highly imbalanced data. Comput. Biol. Med. 2023, 154, 106571. [Google Scholar] [CrossRef]

- Hu, B.; Zhou, P.; Yu, H.; Dai, Y.; Wang, M.; Tan, S.; Sun, Y. LeaNet: Lightweight U-shaped architecture for high-performance skin cancer image segmentation. Comput. Biol. Med. 2024, 169, 107919. [Google Scholar] [CrossRef]

- Zhang, Z.; Liu, Q.; Wang, Y. Road extraction by deep residual U-Net. IEEE Geosci. Remote Sens. Lett. 2018, 15, 749–753. [Google Scholar] [CrossRef]

- Chanda, D.; Onim, M.S.H.; Nyeem, H.; Ovi, T.B.; Naba, S.S. DCENSnet: A new deep convolutional ensemble network for skin cancer classification. Biomed. Signal Process. Control 2024, 89, 105757. [Google Scholar] [CrossRef]

| Dataset year | Dataset name | Testing | Training |
|---|---|---|---|
| 2016 | ISBI | 900 | 273 |
| 2013 | PH2 | 200 | 40 |
| 2017 | ISBI | 2000 | 374 |
| 2000 | DermIS | 397 | 146 |
| 2018 | ISBI | 10,000 | 1113 |
| 2021 | MED-NODE | 170 | 100 |
| 2019 | ISBI | 25,333 | 4522 |
| 2003 | Dermofit | 1300 | 76 |
| 2016–2020 | ISIC | 23,906 | 21,659 |
| 2016 | TUPAC | 321 | 500 |
| 2018 | HAM10000 | 10,015 | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hussain, S.I.; Toscano, E. An Extensive Investigation into the Use of Machine Learning Tools and Deep Neural Networks for the Recognition of Skin Cancer: Challenges, Future Directions, and a Comprehensive Review. Symmetry 2024, 16, 366. https://doi.org/10.3390/sym16030366
